Week9

Topics

- 1. How the Kubernetes components work

- 2. Introduction to flannel networking

- 3. Separating static and dynamic content with Nginx + Tomcat + NFS

1. How the Kubernetes components work

1.1 How the master components work

1.1.1 kube-apiserver

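- The Kubernetes API Server provides HTTP REST interfaces for creating, reading, updating, deleting, and watching every kind of Kubernetes resource object (Pod, RC, Service, and so on). It is the data bus and data center of the whole system.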

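The apiserver on the master currently listens on two ports. With --insecure-port (an int) it listens on an insecure local port on 127.0.0.1 (default 8080):

- This port accepts plain HTTP requests;

- Its default value is 8080 and can be changed with the API Server startup flag --insecure-port;

- Its default IP address is localhost and can be changed with the startup flag --insecure-bind-address;

- Requests on this port reach the API Server without authentication or authorization (kube-controller-manager and kube-scheduler use it).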

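With --bind-address=192.168.7.101 it also listens on an externally reachable, secure (HTTPS) port (default 6443):

- Its default value is 6443 and can be changed with the startup flag --secure-port;

- Its default IP address is a non-localhost network interface, set with the startup flag --bind-address;

- This port accepts external HTTPS requests from clients, the dashboard, and so on;

- It is used for authentication based on a token file, client certificates, or HTTP Basic auth;

- It is used for policy-based authorization.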

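Functions and uses of the Kubernetes API Server:

- Provides the REST API for cluster management (including authentication and authorization, data validation, and cluster state changes);

- Acts as the hub for data exchange and communication between the other components (they query or modify data through the API Server; only the API Server operates on etcd directly);

- Is the entry point for resource quota control;

- Has a complete cluster security mechanism.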

# curl 127.0.0.1:8080/apis #named API groups

# curl 127.0.0.1:8080/api/v1 #core API at a specific version

# curl 127.0.0.1:8080/ #list of all API paths

# curl 127.0.0.1:8080/version #API server version information

# curl 127.0.0.1:8080/healthz/etcd #etcd health check

# curl 127.0.0.1:8080/apis/autoscaling/v1 #details of one API group/version

# curl 127.0.0.1:8080/metrics #metrics
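The curl calls above differ only in their paths. Core-group resources live under /api/v1, and named groups live under /apis/<group>/<version>. The following is a minimal sketch of that path scheme, assuming the standard Kubernetes REST layout. The helper `resource_path` is hypothetical and does not come from any client library.

```python
from typing import Optional


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build the REST path of a Kubernetes resource (hypothetical helper).

    Core-group resources ("" group) are served under /api/<version>;
    named groups (apps, autoscaling, ...) under /apis/<group>/<version>.
    """
    parts = [f"/api/{version}" if group == "" else f"/apis/{group}/{version}"]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")  # namespaced resources only
    parts.append(resource)
    if name is not None:
        parts.append(name)  # a single object instead of the whole collection
    return "/".join(parts)


# Paths to append to http://127.0.0.1:8080 in the curl examples above
print(resource_path("", "v1", "pods", namespace="default"))
print(resource_path("apps", "v1", "deployments", namespace="kube-system", name="coredns"))
print(resource_path("", "v1", "nodes"))
```

The three calls print /api/v1/namespaces/default/pods, /apis/apps/v1/namespaces/kube-system/deployments/coredns and /api/v1/nodes. On the secure port 6443 the same paths require a client certificate or token, because this apiserver runs with --anonymous-auth=false.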

- Startup unit file

root@master1:~# cat /etc/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
# --service-cluster-ip-range: Service (ClusterIP) subnet
ExecStart=/usr/bin/kube-apiserver \
--advertise-address=172.16.62.201 \
--allow-privileged=true \
--anonymous-auth=false \
--authorization-mode=Node,RBAC \
--bind-address=172.16.62.201 \
--client-ca-file=/etc/kubernetes/ssl/ca.pem \
--endpoint-reconciler-type=lease \
--etcd-cafile=/etc/kubernetes/ssl/ca.pem \
--etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \
--etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \
--etcd-servers=https://172.16.62.210:2379,https://172.16.62.211:2379,https://172.16.62.212:2379 \
--kubelet-certificate-authority=/etc/kubernetes/ssl/ca.pem \
--kubelet-client-certificate=/etc/kubernetes/ssl/admin.pem \
--kubelet-client-key=/etc/kubernetes/ssl/admin-key.pem \
--kubelet-https=true \
--service-account-key-file=/etc/kubernetes/ssl/ca.pem \
--service-cluster-ip-range=172.28.0.0/16 \
--service-node-port-range=20000-40000 \
--tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
--tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \
--requestheader-allowed-names= \
--requestheader-extra-headers-prefix=X-Remote-Extra- \
--requestheader-group-headers=X-Remote-Group \
--requestheader-username-headers=X-Remote-User \
--proxy-client-cert-file=/etc/kubernetes/ssl/aggregator-proxy.pem \
--proxy-client-key-file=/etc/kubernetes/ssl/aggregator-proxy-key.pem \
--enable-aggregator-routing=true \
--v=2
# Restart policy
Restart=always
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
root@master1:~#

1.1.2 kube-controller-manager

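- The Controller Manager is the cluster's internal management and control center (insecure default port 10252). It manages the cluster's Nodes, Pod replicas, service Endpoints, Namespaces, ServiceAccounts, and ResourceQuotas. When a Node goes down unexpectedly, the Controller Manager detects it promptly and runs an automated repair process, keeping the cluster in its expected working state.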
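The Controller Manager "detects" a dead node with a heartbeat timeout. Its node controller compares each node's last kubelet heartbeat against a grace period. Heartbeats are node status updates or, in newer releases, Lease renewals such as the /registry/leases/kube-node-lease/... keys in the etcd listing in 1.5.3. The grace period is set by --node-monitor-grace-period, which defaulted to 40s in releases of that period. The sketch below is a simplified, hypothetical model. The real controller also taints the node and waits before evicting its Pods.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed value of kube-controller-manager's --node-monitor-grace-period (seconds)
GRACE_PERIOD_S = 40.0


@dataclass
class NodeHeartbeat:
    name: str
    last_heartbeat: float  # timestamp (s) of the node's most recent heartbeat


def node_conditions(nodes: list[NodeHeartbeat], now: float,
                    grace: float = GRACE_PERIOD_S) -> dict[str, str]:
    """Report NotReady for every node whose heartbeat is older than the grace period."""
    return {
        n.name: "Ready" if now - n.last_heartbeat <= grace else "NotReady"
        for n in nodes
    }


def nodes_to_evict(nodes: list[NodeHeartbeat], now: float) -> list[str]:
    """Nodes whose Pods the controller would start moving elsewhere (simplified)."""
    return [name for name, cond in node_conditions(nodes, now).items() if cond == "NotReady"]


nodes = [NodeHeartbeat("172.16.62.207", last_heartbeat=100.0),
         NodeHeartbeat("172.16.62.208", last_heartbeat=50.0)]
print(node_conditions(nodes, now=120.0))  # {'172.16.62.207': 'Ready', '172.16.62.208': 'NotReady'}
print(nodes_to_evict(nodes, now=120.0))   # ['172.16.62.208']
```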

Startup unit file

root@master1:~# cat /etc/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
# --cluster-cidr: Pod subnet
# --node-cidr-mask-size: mask of the Pod subnet assigned to each node
# --service-cluster-ip-range: Service subnet
ExecStart=/usr/bin/kube-controller-manager \
--address=127.0.0.1 \
--allocate-node-cidrs=true \
--cluster-cidr=10.20.0.0/16 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
--leader-elect=true \
--node-cidr-mask-size=24 \
--root-ca-file=/etc/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-cluster-ip-range=172.28.0.0/16 \
--use-service-account-credentials=true \
--v=2
# Restart policy: always restart, 5 seconds after the process exits
Restart=always
RestartSec=5

[Install]
WantedBy=multi-user.target
root@master1:~#
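This unit sets --allocate-node-cidrs=true, --cluster-cidr=10.20.0.0/16 and --node-cidr-mask-size=24. With these flags the controller manager carves the Pod network into /24 blocks and gives one block to each node. The sketch below shows that arithmetic with Python's ipaddress module. The sequential, first-free allocation order is an assumption for illustration, not a claim about how the real range allocator picks blocks.

```python
from __future__ import annotations

import ipaddress


def subnet_count(cluster_cidr: str, mask_size: int) -> int:
    """How many per-node subnets of size /mask_size fit into cluster_cidr."""
    return 2 ** (mask_size - ipaddress.ip_network(cluster_cidr).prefixlen)


def node_cidrs(cluster_cidr: str, mask_size: int, nodes: list[str]) -> dict[str, str]:
    """Give each node the next unused /mask_size block (sequential order is an assumption)."""
    blocks = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=mask_size)
    allocation = {}
    for node in nodes:
        try:
            allocation[node] = str(next(blocks))
        except StopIteration:
            raise RuntimeError(f"{cluster_cidr} has no free /{mask_size} left for {node}") from None
    return allocation


print(subnet_count("10.20.0.0/16", 24))  # 256: at most 256 nodes get a Pod subnet
print(node_cidrs("10.20.0.0/16", 24, ["172.16.62.207", "172.16.62.208", "172.16.62.209"]))
```

The second call maps the three nodes to 10.20.0.0/24, 10.20.1.0/24 and 10.20.2.0/24. A larger --node-cidr-mask-size allows more nodes but fewer Pod IPs per node.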

1.1.3 kube-scheduler

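- The Scheduler is responsible for Pod scheduling and links the upstream and downstream parts of the system:

- Upstream: it receives the new Pods created by the Controller Manager and selects a suitable Node for each one;

- Downstream: the kubelet on that Node then takes over the Pod's lifecycle.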

Startup unit file

root@master1:~# cat /etc/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/bin/kube-scheduler \
--address=127.0.0.1 \
--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
--leader-elect=true \
--v=2
Restart=always
RestartSec=5

[Install]
WantedBy=multi-user.target
root@master1:~#

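- Using its scheduling algorithm, the Scheduler picks the most suitable Node from the list of available Nodes for every Pod in the pending list. The binding is then written to etcd through the API Server. The kubelet on that node watches the API Server and sees the Pod binding produced by the Scheduler. It then fetches the corresponding Pod spec, pulls the image, and starts the containers.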

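Priority (scoring) policies

1. LeastRequestedPriority

Prefers the candidate node with the lowest resource consumption (CPU + memory).

2. CalculateNodeLabelPriority

Prefers nodes that carry a specified label.

3. BalancedResourceAllocation

Prefers the candidate node whose resource utilization is the most balanced across resource types.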
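Scheduling works as a filter-then-score pipeline. Nodes that cannot fit the Pod are filtered out, the remaining nodes are scored by the priority functions, and the highest total wins. The sketch below models LeastRequestedPriority and BalancedResourceAllocation on the 0-10 range used by the older priority functions. The equal weights, the node data, and all helper names are illustrative assumptions. The real scheduler normalizes scores and runs many more filters and priorities.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_PRIORITY = 10  # older priority functions scored each node from 0 to 10


@dataclass
class Node:
    name: str
    cpu_capacity: int        # millicores
    mem_capacity: int        # MiB
    cpu_requested: int = 0   # sum of requests of Pods already on the node
    mem_requested: int = 0


def fits(node: Node, cpu: int, mem: int) -> bool:
    """Filter step: the Pod's requests must fit in the remaining capacity."""
    return (node.cpu_requested + cpu <= node.cpu_capacity
            and node.mem_requested + mem <= node.mem_capacity)


def least_requested(node: Node, cpu: int, mem: int) -> float:
    """Higher score for more free CPU and memory after placing the Pod."""
    cpu_score = (node.cpu_capacity - node.cpu_requested - cpu) * MAX_PRIORITY / node.cpu_capacity
    mem_score = (node.mem_capacity - node.mem_requested - mem) * MAX_PRIORITY / node.mem_capacity
    return (cpu_score + mem_score) / 2


def balanced_allocation(node: Node, cpu: int, mem: int) -> float:
    """Higher score when CPU and memory utilization are closer to each other."""
    cpu_frac = (node.cpu_requested + cpu) / node.cpu_capacity
    mem_frac = (node.mem_requested + mem) / node.mem_capacity
    if cpu_frac >= 1 or mem_frac >= 1:
        return 0.0
    return (1 - abs(cpu_frac - mem_frac)) * MAX_PRIORITY


def schedule(nodes: list[Node], cpu: int, mem: int) -> str | None:
    """Filter, score with equal weights, and return the best node name."""
    candidates = [n for n in nodes if fits(n, cpu, mem)]
    if not candidates:
        return None  # the Pod stays Pending
    best = max(candidates,
               key=lambda n: least_requested(n, cpu, mem) + balanced_allocation(n, cpu, mem))
    return best.name


nodes = [
    Node("node1", cpu_capacity=4000, mem_capacity=8192, cpu_requested=3000, mem_requested=2048),
    Node("node2", cpu_capacity=4000, mem_capacity=8192, cpu_requested=1000, mem_requested=2048),
    Node("node3", cpu_capacity=2000, mem_capacity=4096, cpu_requested=1900, mem_requested=1024),
]
print(schedule(nodes, cpu=500, mem=1024))  # node2
```

For a Pod requesting 500m CPU and 1024 MiB, node3 is filtered out for lack of CPU. node1 scores 3.75 + 5.0 and node2 scores 6.25 + 10.0, so node2 wins on both priorities.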

1.2 How the node components work

1.2.1 kubelet

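- In a Kubernetes cluster, every Node runs a kubelet process that handles the tasks the Master sends to that node and manages Pods and their containers. The kubelet registers the node with the API Server, periodically reports the node's resource usage to the Master, and monitors containers and node resources through cAdvisor. You can think of the kubelet as the agent in a server/agent architecture: it is the Pod caretaker on each Node.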

Startup unit file

root@node1:~# cat /etc/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStartPre=/bin/mount -o remount,rw '/sys/fs/cgroup'
ExecStartPre=/bin/mkdir -p /sys/fs/cgroup/cpuset/system.slice/kubelet.service
ExecStartPre=/bin/mkdir -p /sys/fs/cgroup/hugetlb/system.slice/kubelet.service
ExecStartPre=/bin/mkdir -p /sys/fs/cgroup/memory/system.slice/kubelet.service
ExecStartPre=/bin/mkdir -p /sys/fs/cgroup/pids/system.slice/kubelet.service
ExecStart=/usr/bin/kubelet \
--config=/var/lib/kubelet/config.yaml \
--cni-bin-dir=/usr/bin \
--cni-conf-dir=/etc/cni/net.d \
--hostname-override=172.16.62.207 \
--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
--network-plugin=cni \
--pod-infra-container-image=mirrorgooglecontainers/pause-amd64:3.1 \
--root-dir=/var/lib/kubelet \
--v=2
Restart=always
RestartSec=5

[Install]
WantedBy=multi-user.target
root@node1:~#

1.2.2 kube-proxy

- Reference: https://kubernetes.io/zh/docs/concepts/services-networking/service/

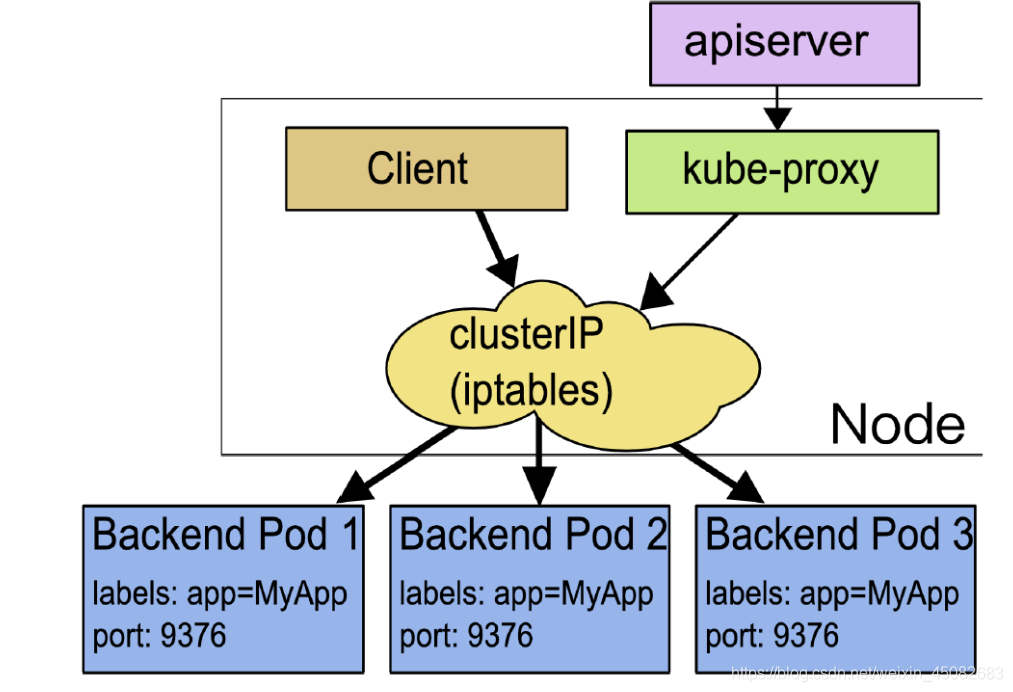

kube-proxy runs on every node. It watches the API Server for changes to Service objects and implements traffic forwarding by managing iptables (or IPVS) rules.

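Depending on the version, kube-proxy supports three proxy modes:

- userspace: phased out since Kubernetes v1.2 (no longer the default)

- iptables: the current default; supported since 1.1 and the default since 1.2

- IPVS: introduced in 1.9 and generally available in 1.11; requires the ipvsadm and ipset packages and the ip_vs kernel module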

- Startup unit file

root@node1:~# cat /etc/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
# kube-proxy uses --cluster-cidr to tell in-cluster traffic from external traffic; when --cluster-cidr or --masquerade-all is set, kube-proxy SNATs requests sent to Service IPs
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/bin/kube-proxy \
--bind-address=172.16.62.207 \
--cluster-cidr=10.20.0.0/16 \
--hostname-override=172.16.62.207 \
--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \
--logtostderr=true \
--proxy-mode=ipvs
Restart=always
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
root@node1:~#

1.3 iptables

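kube-proxy watches the Kubernetes Master for added and removed Service and Endpoint objects. For each Service, kube-proxy creates the corresponding iptables rules. These rules forward traffic sent to the Service's Cluster IP to the matching port of the backend Pods that serve it.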

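Note:

The backend Pods' service can be reached through the Service's Cluster IP and service port, but the Cluster IP itself does not answer ping.

This is because in iptables mode the Cluster IP exists only in iptables rules and is not bound to any network device.

In IPVS mode the Cluster IP can be pinged, because kube-proxy binds Service IPs to a dummy interface (kube-ipvs0) on every node.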
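In iptables mode, kube-proxy spreads new connections across a Service's endpoints with the iptables `statistic` match in random mode. For n endpoints, the rule at position i (counting from 0) matches with probability 1/(n-i). The last rule has no statistic match, which is equivalent to probability 1. The sketch below shows why that cascade gives every endpoint an equal share. The endpoint IPs are made up.

```python
from __future__ import annotations

from fractions import Fraction


def rule_probabilities(n: int) -> list[Fraction]:
    """Per-rule match probability, as in `-m statistic --mode random --probability p`."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> list[Fraction]:
    """Probability that a new connection ends up at each endpoint."""
    shares = []
    remaining = Fraction(1)  # probability that no earlier rule matched
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


endpoints = ["10.20.1.5", "10.20.2.7", "10.20.3.9"]  # hypothetical Pod IPs
for ip, p, share in zip(endpoints, rule_probabilities(len(endpoints)),
                        effective_shares(len(endpoints))):
    print(f"{ip}: --probability {float(p):.5f} -> share {share}")
```

This prints probabilities 0.33333, 0.50000 and 1.00000, and every endpoint ends up with a 1/3 share. Because the rules are evaluated in order, each new connection walks the chain, which is one reason iptables mode scales worse than IPVS's hash tables.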

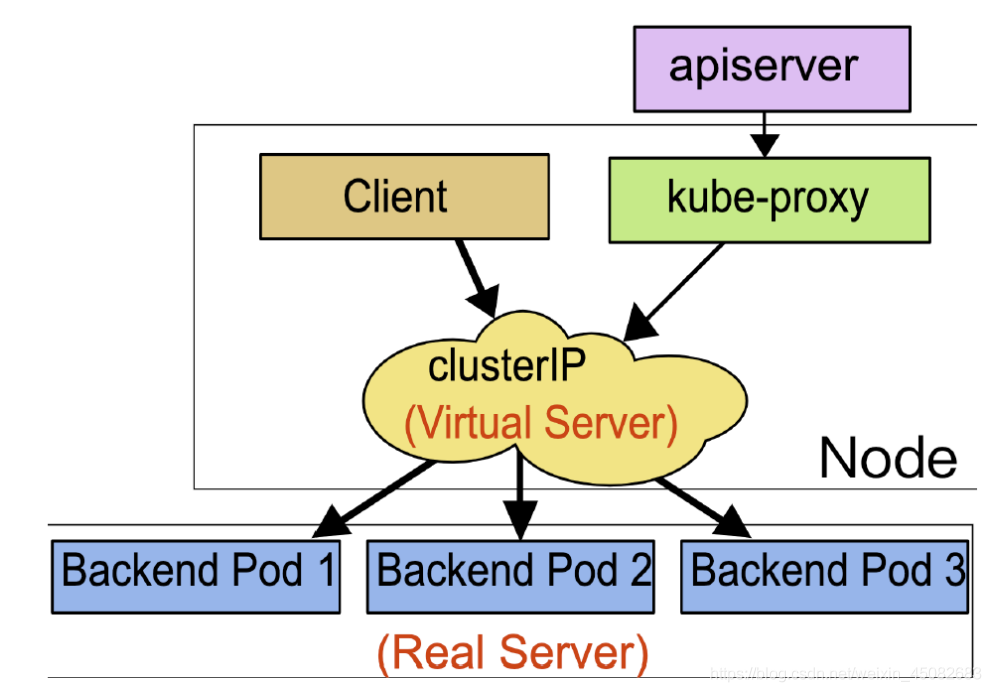

1.4 IPVS

- Kubernetes added beta support for IPVS in 1.9 (Graduate kube-proxy IPVS mode to beta, https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.9.md#ipvs) and made it officially supported in 1.11 (IPVS-based in-cluster load balancing is now GA, https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.11.md#ipvs).

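IPVS is somewhat more efficient than iptables. To use IPVS mode, install the ipvsadm and ipset packages and load the ip_vs kernel module on every node that runs kube-proxy. When kube-proxy starts in IPVS proxy mode, it checks whether the IPVS modules are available on the node. If they are not, kube-proxy falls back to iptables proxy mode.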

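In IPVS mode, kube-proxy watches Kubernetes Service and Endpoints objects. It calls the host kernel's Netlink interface to create the corresponding IPVS rules, and periodically resyncs those rules with the Service and Endpoints objects so that the IPVS state matches the desired state. When a Service is accessed, traffic is redirected to one of the backend Pods.

IPVS uses hash tables as its underlying data structure and works in kernel space. As a result, it redirects traffic faster and performs better when syncing proxy rules. IPVS also offers more load-balancing algorithms, for example rr (round robin), lc (least connections), dh (destination hashing), sh (source hashing), sed (shortest expected delay), and nq (never queue).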
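To make three of the scheduler names above concrete, here is a toy model of rr, lc and sh. It only illustrates their selection rules. The real implementations live in the kernel's ip_vs modules and use their own hash functions, and the class names here are hypothetical. With kube-proxy the algorithm is chosen with --ipvs-scheduler, and rr is used when the flag is empty.

```python
import hashlib
import itertools


class RoundRobin:
    """rr: hand out backends in a fixed rotation."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnection:
    """lc: choose the backend with the fewest active connections."""

    def __init__(self, backends):
        self.active = {b: 0 for b in backends}

    def pick(self, client_ip=None):
        backend = min(self.active, key=self.active.get)  # ties go to the first listed
        self.active[backend] += 1
        return backend

    def release(self, backend):
        self.active[backend] -= 1  # connection closed


class SourceHash:
    """sh: hash the client IP so the same client always reaches the same backend."""

    def __init__(self, backends):
        self.backends = list(backends)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()  # stand-in for the kernel's hash
        return self.backends[int.from_bytes(digest[:4], "big") % len(self.backends)]


backends = ["10.20.1.5:80", "10.20.2.7:80", "10.20.3.9:80"]  # hypothetical Pod endpoints
rr = RoundRobin(backends)
print([rr.pick() for _ in range(4)])  # wraps back to 10.20.1.5:80 on the 4th pick

lc = LeastConnection(backends)
first, second = lc.pick(), lc.pick()
lc.release(first)
print(lc.pick())  # 10.20.1.5:80: 0 active connections and first among the tied backends

sh = SourceHash(backends)
print(sh.pick("192.168.1.10") == sh.pick("192.168.1.10"))  # True: sticky per client
```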

1.5 How etcd works

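- etcd is an open-source project started by the CoreOS team in June 2013. Its goal is to build a highly available, distributed key-value database. Internally etcd uses the Raft protocol as its consensus algorithm, and it is implemented in Go.

- GitHub: https://github.com/etcd-io/etcd

- Official website: https://etcd.io/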

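etcd has the following properties:

- Fully replicated: every node in the cluster has access to the complete data store

- Highly available: etcd is designed to avoid single points of failure caused by hardware or network problems

- Consistent: every read returns the most recent write across multiple hosts

- Simple: it offers a well-defined, user-facing API (gRPC)

- Secure: it implements automatic TLS with optional client certificate authentication

- Fast: benchmarked at 10,000 writes per second

- Reliable: it uses the Raft algorithm to distribute the store properly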
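The availability claims rest on Raft's majority quorum. A write is committed once a majority of members have it, so a cluster of n members keeps working as long as a majority is alive. The quick sketch below shows that arithmetic, which is why the three-member cluster in this setup (etcd1 to etcd3) tolerates one failed member.

```python
def quorum(members: int) -> int:
    """Smallest majority of the cluster: writes commit once this many members agree."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 2, 3, 4, 5):
    print(f"{n} members: quorum {quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

An even member count adds no fault tolerance: four members tolerate the same single failure as three. This is why odd-sized etcd clusters are the usual choice.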

- Startup unit file

root@etcd1:/tmp/netplan_5juwqwqg# cat /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
# --initial-advertise-peer-urls: peer URL this member advertises to the cluster
# --listen-peer-urls: address for member-to-member (peer) traffic
# --listen-client-urls: addresses on which clients connect
# --advertise-client-urls: client URL this member advertises
# --initial-cluster-token: token used to bootstrap the cluster; identical on every member of one cluster
# --initial-cluster: all members of the cluster
# --initial-cluster-state: "new" when creating a cluster, "existing" when joining an existing one
# --data-dir: data directory
ExecStart=/usr/bin/etcd \
--name=etcd1 \
--cert-file=/etc/etcd/ssl/etcd.pem \
--key-file=/etc/etcd/ssl/etcd-key.pem \
--peer-cert-file=/etc/etcd/ssl/etcd.pem \
--peer-key-file=/etc/etcd/ssl/etcd-key.pem \
--trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
--peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
--initial-advertise-peer-urls=https://172.16.62.210:2380 \
--listen-peer-urls=https://172.16.62.210:2380 \
--listen-client-urls=https://172.16.62.210:2379,http://127.0.0.1:2379 \
--advertise-client-urls=https://172.16.62.210:2379 \
--initial-cluster-token=etcd-cluster-0 \
--initial-cluster=etcd1=https://172.16.62.210:2380,etcd2=https://172.16.62.211:2380,etcd3=https://172.16.62.212:2380 \
--initial-cluster-state=new \
--data-dir=/var/lib/etcd
Restart=always
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
root@etcd1:/tmp/netplan_5juwqwqg#

1.5.1 Viewing member information

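- etcd has several API versions, and v1 is deprecated. etcd v2 and v3 are essentially two independent applications that share the same Raft code. Their interfaces and storage differ, and their data is isolated from each other. In other words, after upgrading from etcd v2 to v3, data written through v2 can still only be accessed through the v2 API, and data created through the v3 API can only be accessed through the v3 API.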

Output of etcdctl when ETCDCTL_API is not set:

WARNING:

Environment variable ETCDCTL_API is not set; defaults to etcdctl v2. #etcdctl before v3.4 defaults to the v2 API

Set environment variable ETCDCTL_API=3 to use v3 API or ETCDCTL_API=2 to use v2 API. #select the API version

1.5.2 Checking the health of all current etcd members:

root@etcd3:~# export NODE_IPS="172.16.62.210 172.16.62.211 172.16.62.212"

root@etcd3:~# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/bin/etcdctl endpoint health --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem; done

https://172.16.62.210:2379 is healthy: successfully committed proposal: took = 30.252449ms

https://172.16.62.211:2379 is healthy: successfully committed proposal: took = 29.714374ms

https://172.16.62.212:2379 is healthy: successfully committed proposal: took = 28.290729ms

root@etcd3:~#

1.5.3 Viewing etcd data

root@etcd3:/var/lib/etcd# ETCDCTL_API=3 etcdctl get / --prefix --keys-only

/registry/apiregistration.k8s.io/apiservices/v1.

/registry/apiregistration.k8s.io/apiservices/v1.admissionregistration.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.apiextensions.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.apps

/registry/apiregistration.k8s.io/apiservices/v1.authentication.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.authorization.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.autoscaling

/registry/apiregistration.k8s.io/apiservices/v1.batch

/registry/apiregistration.k8s.io/apiservices/v1.coordination.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.networking.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.rbac.authorization.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.scheduling.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.storage.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.admissionregistration.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.apiextensions.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.authentication.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.authorization.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.batch

/registry/apiregistration.k8s.io/apiservices/v1beta1.certificates.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.coordination.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.discovery.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.events.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.extensions

/registry/apiregistration.k8s.io/apiservices/v1beta1.networking.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.node.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.policy

/registry/apiregistration.k8s.io/apiservices/v1beta1.rbac.authorization.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.scheduling.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.storage.k8s.io

/registry/apiregistration.k8s.io/apiservices/v2beta1.autoscaling

/registry/apiregistration.k8s.io/apiservices/v2beta2.autoscaling

/registry/clusterrolebindings/admin-user

/registry/clusterrolebindings/cluster-admin

/registry/clusterrolebindings/flannel

/registry/clusterrolebindings/kubernetes-dashboard

/registry/clusterrolebindings/system:basic-user

/registry/clusterrolebindings/system:controller:attachdetach-controller

/registry/clusterrolebindings/system:controller:certificate-controller

/registry/clusterrolebindings/system:controller:clusterrole-aggregation-controller

/registry/clusterrolebindings/system:controller:cronjob-controller

/registry/clusterrolebindings/system:controller:daemon-set-controller

/registry/clusterrolebindings/system:controller:deployment-controller

/registry/clusterrolebindings/system:controller:disruption-controller

/registry/clusterrolebindings/system:controller:endpoint-controller

/registry/clusterrolebindings/system:controller:expand-controller

/registry/clusterrolebindings/system:controller:generic-garbage-collector

/registry/clusterrolebindings/system:controller:horizontal-pod-autoscaler

/registry/clusterrolebindings/system:controller:job-controller

/registry/clusterrolebindings/system:controller:namespace-controller

/registry/clusterrolebindings/system:controller:node-controller

/registry/clusterrolebindings/system:controller:persistent-volume-binder

/registry/clusterrolebindings/system:controller:pod-garbage-collector

/registry/clusterrolebindings/system:controller:pv-protection-controller

/registry/clusterrolebindings/system:controller:pvc-protection-controller

/registry/clusterrolebindings/system:controller:replicaset-controller

/registry/clusterrolebindings/system:controller:replication-controller

/registry/clusterrolebindings/system:controller:resourcequota-controller

/registry/clusterrolebindings/system:controller:route-controller

/registry/clusterrolebindings/system:controller:service-account-controller

/registry/clusterrolebindings/system:controller:service-controller

/registry/clusterrolebindings/system:controller:statefulset-controller

/registry/clusterrolebindings/system:controller:ttl-controller

/registry/clusterrolebindings/system:coredns

/registry/clusterrolebindings/system:discovery

/registry/clusterrolebindings/system:kube-controller-manager

/registry/clusterrolebindings/system:kube-dns

/registry/clusterrolebindings/system:kube-scheduler

/registry/clusterrolebindings/system:node

/registry/clusterrolebindings/system:node-proxier

/registry/clusterrolebindings/system:public-info-viewer

/registry/clusterrolebindings/system:volume-scheduler

/registry/clusterroles/admin

/registry/clusterroles/cluster-admin

/registry/clusterroles/edit

/registry/clusterroles/flannel

/registry/clusterroles/kubernetes-dashboard

/registry/clusterroles/system:aggregate-to-admin

/registry/clusterroles/system:aggregate-to-edit

/registry/clusterroles/system:aggregate-to-view

/registry/clusterroles/system:auth-delegator

/registry/clusterroles/system:basic-user

/registry/clusterroles/system:certificates.k8s.io:certificatesigningrequests:nodeclient

/registry/clusterroles/system:certificates.k8s.io:certificatesigningrequests:selfnodeclient

/registry/clusterroles/system:controller:attachdetach-controller

/registry/clusterroles/system:controller:certificate-controller

/registry/clusterroles/system:controller:clusterrole-aggregation-controller

/registry/clusterroles/system:controller:cronjob-controller

/registry/clusterroles/system:controller:daemon-set-controller

/registry/clusterroles/system:controller:deployment-controller

/registry/clusterroles/system:controller:disruption-controller

/registry/clusterroles/system:controller:endpoint-controller

/registry/clusterroles/system:controller:expand-controller

/registry/clusterroles/system:controller:generic-garbage-collector

/registry/clusterroles/system:controller:horizontal-pod-autoscaler

/registry/clusterroles/system:controller:job-controller

/registry/clusterroles/system:controller:namespace-controller

/registry/clusterroles/system:controller:node-controller

/registry/clusterroles/system:controller:persistent-volume-binder

/registry/clusterroles/system:controller:pod-garbage-collector

/registry/clusterroles/system:controller:pv-protection-controller

/registry/clusterroles/system:controller:pvc-protection-controller

/registry/clusterroles/system:controller:replicaset-controller

/registry/clusterroles/system:controller:replication-controller

/registry/clusterroles/system:controller:resourcequota-controller

/registry/clusterroles/system:controller:route-controller

/registry/clusterroles/system:controller:service-account-controller

/registry/clusterroles/system:controller:service-controller

/registry/clusterroles/system:controller:statefulset-controller

/registry/clusterroles/system:controller:ttl-controller

/registry/clusterroles/system:coredns

/registry/clusterroles/system:discovery

/registry/clusterroles/system:heapster

/registry/clusterroles/system:kube-aggregator

/registry/clusterroles/system:kube-controller-manager

/registry/clusterroles/system:kube-dns

/registry/clusterroles/system:kube-scheduler

/registry/clusterroles/system:kubelet-api-admin

/registry/clusterroles/system:node

/registry/clusterroles/system:node-bootstrapper

/registry/clusterroles/system:node-problem-detector

/registry/clusterroles/system:node-proxier

/registry/clusterroles/system:persistent-volume-provisioner

/registry/clusterroles/system:public-info-viewer

/registry/clusterroles/system:volume-scheduler

/registry/clusterroles/view

/registry/configmaps/kube-system/coredns

/registry/configmaps/kube-system/extension-apiserver-authentication

/registry/configmaps/kube-system/kube-flannel-cfg

/registry/configmaps/kubernetes-dashboard/kubernetes-dashboard-settings

/registry/controllerrevisions/kube-system/kube-flannel-ds-amd64-fcb99d957

/registry/csinodes/172.16.62.201

/registry/csinodes/172.16.62.202

/registry/csinodes/172.16.62.203

/registry/csinodes/172.16.62.207

/registry/csinodes/172.16.62.208

/registry/csinodes/172.16.62.209

/registry/daemonsets/kube-system/kube-flannel-ds-amd64

/registry/deployments/default/net-test1

/registry/deployments/default/net-test2

/registry/deployments/default/net-test3

/registry/deployments/default/nginx-deployment

/registry/deployments/kube-system/coredns

/registry/deployments/kubernetes-dashboard/dashboard-metrics-scraper

/registry/deployments/kubernetes-dashboard/kubernetes-dashboard

/registry/events/default/busybox.162762ce821f3622

/registry/events/default/busybox.162762cf683a6f3e

/registry/events/default/busybox.162762cf7640b61e

/registry/events/default/busybox.162762cf9c878ced

/registry/leases/kube-node-lease/172.16.62.201

/registry/leases/kube-node-lease/172.16.62.202

/registry/leases/kube-node-lease/172.16.62.203

/registry/leases/kube-node-lease/172.16.62.207

/registry/leases/kube-node-lease/172.16.62.208

/registry/leases/kube-node-lease/172.16.62.209

/registry/leases/kube-system/kube-controller-manager

/registry/leases/kube-system/kube-scheduler

/registry/masterleases/172.16.62.201

/registry/masterleases/172.16.62.202

/registry/masterleases/172.16.62.203

/registry/minions/172.16.62.201

/registry/minions/172.16.62.202

/registry/minions/172.16.62.203

/registry/minions/172.16.62.207

/registry/minions/172.16.62.208

/registry/minions/172.16.62.209

/registry/namespaces/default

/registry/namespaces/kube-node-lease

/registry/namespaces/kube-public

/registry/namespaces/kube-system

/registry/namespaces/kubernetes-dashboard

/registry/pods/default/busybox

/registry/pods/default/net-test1-5fcc69db59-9mr5d

/registry/pods/default/net-test1-5fcc69db59-dqrf8

/registry/pods/default/net-test1-5fcc69db59-mbt9f

/registry/pods/default/net-test2-8456fd74f7-229tw

/registry/pods/default/net-test2-8456fd74f7-r8d2d

/registry/pods/default/net-test2-8456fd74f7-vxnsk

/registry/pods/default/net-test3-59c6947667-jjf4n

/registry/pods/default/net-test3-59c6947667-ll4tm

/registry/pods/default/net-test3-59c6947667-pg7x8

/registry/pods/default/nginx-deployment-795b7c6c68-zgtzj

/registry/pods/kube-system/coredns-cb9d89598-gfqw5

/registry/pods/kube-system/kube-flannel-ds-amd64-2htr5

/registry/pods/kube-system/kube-flannel-ds-amd64-72qbc

/registry/pods/kube-system/kube-flannel-ds-amd64-dqmg5

/registry/pods/kube-system/kube-flannel-ds-amd64-jsm4f

/registry/pods/kube-system/kube-flannel-ds-amd64-nh6j6

/registry/pods/kube-system/kube-flannel-ds-amd64-rnf4b

/registry/pods/kubernetes-dashboard/dashboard-metrics-scraper-7b8b58dc8b-pj9mg

/registry/pods/kubernetes-dashboard/kubernetes-dashboard-6dccc48d7-xgkhz

/registry/podsecuritypolicy/psp.flannel.unprivileged

/registry/priorityclasses/system-cluster-critical

/registry/priorityclasses/system-node-critical

/registry/ranges/serviceips

/registry/ranges/servicenodeports

/registry/replicasets/default/net-test1-5fcc69db59

/registry/replicasets/default/net-test2-8456fd74f7

/registry/replicasets/default/net-test3-59c6947667

/registry/replicasets/default/nginx-deployment-795b7c6c68

/registry/replicasets/kube-system/coredns-cb9d89598

/registry/replicasets/kubernetes-dashboard/dashboard-metrics-scraper-7b8b58dc8b

/registry/replicasets/kubernetes-dashboard/kubernetes-dashboard-5f5f847d57

/registry/replicasets/kubernetes-dashboard/kubernetes-dashboard-6dccc48d7

/registry/rolebindings/kube-public/system:controller:bootstrap-signer

/registry/rolebindings/kube-system/system::extension-apiserver-authentication-reader

/registry/rolebindings/kube-system/system::leader-locking-kube-controller-manager

/registry/rolebindings/kube-system/system::leader-locking-kube-scheduler

/registry/rolebindings/kube-system/system:controller:bootstrap-signer

/registry/rolebindings/kube-system/system:controller:cloud-provider

/registry/rolebindings/kube-system/system:controller:token-cleaner

/registry/rolebindings/kubernetes-dashboard/kubernetes-dashboard

/registry/roles/kube-public/system:controller:bootstrap-signer

/registry/roles/kube-system/extension-apiserver-authentication-reader

/registry/roles/kube-system/system::leader-locking-kube-controller-manager

/registry/roles/kube-system/system::leader-locking-kube-scheduler

/registry/roles/kube-system/system:controller:bootstrap-signer

/registry/roles/kube-system/system:controller:cloud-provider

/registry/roles/kube-system/system:controller:token-cleaner

/registry/roles/kubernetes-dashboard/kubernetes-dashboard

/registry/secrets/default/default-token-ddvdz

/registry/secrets/kube-node-lease/default-token-7kpl4

/registry/secrets/kube-public/default-token-wq894

/registry/secrets/kube-system/attachdetach-controller-token-mflx8

/registry/secrets/kube-system/certificate-controller-token-q85tw

/registry/secrets/kube-system/clusterrole-aggregation-controller-token-72qkv

/registry/secrets/kube-system/coredns-token-r6jnw

/registry/secrets/kube-system/cronjob-controller-token-tnphb

/registry/secrets/kube-system/daemon-set-controller-token-dz5qp

/registry/secrets/kube-system/default-token-65hrl

/registry/secrets/kube-system/deployment-controller-token-5klk8

/registry/secrets/kube-system/disruption-controller-token-jz2kp

/registry/secrets/kube-system/endpoint-controller-token-q27vg

/registry/secrets/kube-system/expand-controller-token-jr47v

/registry/secrets/kube-system/flannel-token-2wjp4

/registry/secrets/kube-system/generic-garbage-collector-token-96pbt

/registry/secrets/kube-system/horizontal-pod-autoscaler-token-g7rmw

/registry/secrets/kube-system/job-controller-token-9ktbt

/registry/secrets/kube-system/namespace-controller-token-42ncg

/registry/secrets/kube-system/node-controller-token-sb64t

/registry/secrets/kube-system/persistent-volume-binder-token-gwpch

/registry/secrets/kube-system/pod-garbage-collector-token-w4np7

/registry/secrets/kube-system/pv-protection-controller-token-6x5wt

/registry/secrets/kube-system/pvc-protection-controller-token-969b6

/registry/secrets/kube-system/replicaset-controller-token-bvb2d

/registry/secrets/kube-system/replication-controller-token-qgsnj

/registry/secrets/kube-system/resourcequota-controller-token-bhth8

/registry/secrets/kube-system/service-account-controller-token-4ltvx

/registry/secrets/kube-system/service-controller-token-gk5h9

/registry/secrets/kube-system/statefulset-controller-token-kmv7q

/registry/secrets/kube-system/ttl-controller-token-k4rjd

/registry/secrets/kubernetes-dashboard/admin-user-token-x4fpc

/registry/secrets/kubernetes-dashboard/default-token-xcv2x

/registry/secrets/kubernetes-dashboard/kubernetes-dashboard-certs

/registry/secrets/kubernetes-dashboard/kubernetes-dashboard-csrf

/registry/secrets/kubernetes-dashboard/kubernetes-dashboard-key-holder

/registry/secrets/kubernetes-dashboard/kubernetes-dashboard-token-bsxzt

/registry/serviceaccounts/default/default

/registry/serviceaccounts/kube-node-lease/default

/registry/serviceaccounts/kube-public/default

/registry/serviceaccounts/kube-system/attachdetach-controller

/registry/serviceaccounts/kube-system/certificate-controller

/registry/serviceaccounts/kube-system/clusterrole-aggregation-controller

/registry/serviceaccounts/kube-system/coredns

/registry/serviceaccounts/kube-system/cronjob-controller

/registry/serviceaccounts/kube-system/daemon-set-controller

/registry/serviceaccounts/kube-system/default

/registry/serviceaccounts/kube-system/deployment-controller

/registry/serviceaccounts/kube-system/disruption-controller

/registry/serviceaccounts/kube-system/endpoint-controller

/registry/serviceaccounts/kube-system/expand-controller

/registry/serviceaccounts/kube-system/flannel

/registry/serviceaccounts/kube-system/generic-garbage-collector

/registry/serviceaccounts/kube-system/horizontal-pod-autoscaler

/registry/serviceaccounts/kube-system/job-controller

/registry/serviceaccounts/kube-system/namespace-controller

/registry/serviceaccounts/kube-system/node-controller

/registry/serviceaccounts/kube-system/persistent-volume-binder

/registry/serviceaccounts/kube-system/pod-garbage-collector

/registry/serviceaccounts/kube-system/pv-protection-controller

/registry/serviceaccounts/kube-system/pvc-protection-controller

/registry/serviceaccounts/kube-system/replicaset-controller

/registry/serviceaccounts/kube-system/replication-controller

/registry/serviceaccounts/kube-system/resourcequota-controller

/registry/serviceaccounts/kube-system/service-account-controller

/registry/serviceaccounts/kube-system/service-controller

/registry/serviceaccounts/kube-system/statefulset-controller

/registry/serviceaccounts/kube-system/ttl-controller

/registry/serviceaccounts/kubernetes-dashboard/admin-user

/registry/serviceaccounts/kubernetes-dashboard/default

/registry/serviceaccounts/kubernetes-dashboard/kubernetes-dashboard

/registry/services/endpoints/default/jack-nginx-service

/registry/services/endpoints/default/kubernetes

/registry/services/endpoints/kube-system/kube-controller-manager

/registry/services/endpoints/kube-system/kube-dns

/registry/services/endpoints/kube-system/kube-scheduler

/registry/services/endpoints/kubernetes-dashboard/dashboard-metrics-scraper

/registry/services/endpoints/kubernetes-dashboard/kubernetes-dashboard

/registry/services/specs/default/jack-nginx-service

/registry/services/specs/default/kubernetes

/registry/services/specs/kube-system/kube-dns

/registry/services/specs/kubernetes-dashboard/dashboard-metrics-scraper

/registry/services/specs/kubernetes-dashboard/kubernetes-dashboard

root@etcd3:/var/lib/etcd#
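`etcdctl get / --prefix` works even though v3 has no directories. A prefix query is a range query from the prefix up to, but not including, the prefix with its last byte incremented. The sketch below mirrors that rule, which etcd's Go client exposes as `clientv3.GetPrefixRangeEnd`. The sorted Python list standing in for etcd's key index is an assumption for illustration.

```python
from __future__ import annotations

import bisect


def prefix_range_end(prefix: bytes) -> bytes:
    """Return the exclusive range end covering every key that starts with prefix."""
    end = bytearray(prefix)
    for i in range(len(end) - 1, -1, -1):
        if end[i] < 0xFF:
            end[i] += 1
            return bytes(end[: i + 1])
    return b"\x00"  # all bytes are 0xff: "\x00" means "to the end of the keyspace"


def get_prefix(sorted_keys: list[bytes], prefix: bytes) -> list[bytes]:
    """Range scan [prefix, range_end) over a sorted key list."""
    lo = bisect.bisect_left(sorted_keys, prefix)
    end = prefix_range_end(prefix)
    hi = len(sorted_keys) if end == b"\x00" else bisect.bisect_left(sorted_keys, end)
    return sorted_keys[lo:hi]


keys = sorted([
    b"/registry/namespaces/default",
    b"/registry/pods/default/busybox",
    b"/registry/pods/kube-system/coredns-cb9d89598-gfqw5",
    b"/registry/podsecuritypolicy/psp.flannel.unprivileged",
])
print(prefix_range_end(b"/registry/pods/"))  # b'/registry/pods0'
print(get_prefix(keys, b"/registry/pods/"))  # only the two Pod keys
```

The trailing slash matters. The prefix `/registry/pods` without it would also match `/registry/podsecuritypolicy/...`.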

1.5.4 etcd create, read, update, and delete

#add data

root@etcd3:/var/lib/etcd# ETCDCTL_API=3 /usr/bin/etcdctl put /testkey "test for linux"

OK

#view data

root@etcd3:/var/lib/etcd# ETCDCTL_API=3 /usr/bin/etcdctl get /testkey

/testkey

test for linux

#modify data

root@etcd3:/var/lib/etcd# ETCDCTL_API=3 /usr/bin/etcdctl put /testkey "test for linux202008"

OK

#view data: the value has changed

root@etcd3:/var/lib/etcd# ETCDCTL_API=3 /usr/bin/etcdctl get /testkey

/testkey

test for linux202008

#delete data (etcdctl prints the number of deleted keys)

root@etcd3:/var/lib/etcd# ETCDCTL_API=3 /usr/bin/etcdctl del /testkey

1

#view data: nothing is returned because the key was deleted

root@etcd3:/var/lib/etcd# ETCDCTL_API=3 /usr/bin/etcdctl get /testkey

root@etcd3:/var/lib/etcd#

1.5.5 etcd watch mechanism

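- A watch continuously monitors data and proactively notifies the client when it changes. The etcd v3 watch mechanism supports watching a single key as well as a range of keys.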

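Main changes in etcd v3 compared with etcd v2:

- The API is exposed as gRPC RPCs instead of v2's HTTP interface. Long-lived connections are much more efficient. The downside is that v3 is less convenient to use than before, especially where long-lived connections are hard to maintain.

- The old directory structure is gone and the store is now a flat key-value space; users can emulate directories with prefix matching.

- Values are no longer kept in memory, so the same amount of memory can hold more keys.

- The watch mechanism is more stable; watches can be used to keep data fully in sync.

- Batch operations and transactions are supported. Users can implement etcd v2's CAS (compare-and-swap) with a transaction request, since transactions support if-conditions.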
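The CAS point in the last item can be illustrated with a toy in-memory store. A transaction evaluates its compare conditions atomically and then runs either the success or the failure operations, like `etcdctl txn` or the Go client's `Txn(ctx).If(...).Then(...).Else(...)`. The `ToyKV` class and its `txn` method are hypothetical. They model only value comparisons under a lock, while etcd can also compare versions and revisions.

```python
import threading


class ToyKV:
    """In-memory stand-in for an etcd v3 keyspace (hypothetical, for illustration)."""

    def __init__(self):
        self._data = {}
        self._lock = threading.Lock()

    def get(self, key):
        return self._data.get(key)

    def txn(self, compares, success, failure):
        """Atomically: if every (key, expected) pair matches, apply success, else failure.

        expected=None means "key must not exist". Each op is ("put", key, value)
        or ("del", key). Returns True if the success branch ran.
        """
        with self._lock:
            ok = all(self._data.get(k) == expected for k, expected in compares)
            for op in (success if ok else failure):
                if op[0] == "put":
                    self._data[op[1]] = op[2]
                elif op[0] == "del":
                    self._data.pop(op[1], None)
            return ok


def compare_and_swap(kv, key, old, new):
    """CAS built from one transaction: write `new` only if the current value is `old`."""
    return kv.txn(compares=[(key, old)], success=[("put", key, new)], failure=[])


kv = ToyKV()
kv.txn([], [("put", "/testkey", "v1")], [])
print(compare_and_swap(kv, "/testkey", "v1", "v2"))  # True: value was v1, so it swaps
print(compare_and_swap(kv, "/testkey", "v1", "v3"))  # False: value is now v2
print(kv.get("/testkey"))                            # v2
```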

Watch test (start the watch on etcd3 first, then write on etcd2):

#add data on etcd2

root@etcd2:~#

root@etcd2:~# ETCDCTL_API=3 /usr/bin/etcdctl put /testkey "test for data"

OK

root@etcd2:~#

#watch the key on etcd3; the running watch prints the PUT event

root@etcd3:/var/lib/etcd# ETCDCTL_API=3 /usr/bin/etcdctl watch /testkey

PUT

/testkey

test for data
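The watch output above has three parts: event type, key, and value. It can be modeled with a toy store that keeps a store-wide revision and notifies watchers registered on a key or a key prefix. This is a simplified sketch. The `WatchableKV` class is hypothetical, and real etcd watches are gRPC streams that can also resume from an older revision.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    type: str             # "PUT" or "DELETE", as printed by `etcdctl watch`
    key: str
    value: str | None
    revision: int


@dataclass
class WatchableKV:
    data: dict[str, str] = field(default_factory=dict)
    revision: int = 0
    watchers: list[tuple[str, bool, Callable[[Event], None]]] = field(default_factory=list)

    def watch(self, key: str, callback: Callable[[Event], None], prefix: bool = False) -> None:
        """Register a watcher on one key, or on every key under a prefix."""
        self.watchers.append((key, prefix, callback))

    def _notify(self, event: Event) -> None:
        for key, prefix, callback in self.watchers:
            if event.key == key or (prefix and event.key.startswith(key)):
                callback(event)

    def put(self, key: str, value: str) -> None:
        self.revision += 1  # every modification bumps the store-wide revision
        self.data[key] = value
        self._notify(Event("PUT", key, value, self.revision))

    def delete(self, key: str) -> None:
        if self.data.pop(key, None) is not None:
            self.revision += 1
            self._notify(Event("DELETE", key, None, self.revision))


store = WatchableKV()
seen: list[Event] = []
store.watch("/testkey", seen.append)       # like: etcdctl watch /testkey
store.put("/testkey", "test for data")     # like: etcdctl put /testkey "test for data"
store.put("/otherkey", "ignored")          # not watched, but still bumps the revision
store.delete("/testkey")
for e in seen:
    print(e.type, e.key, e.value, e.revision)
```

This prints `PUT /testkey test for data 1` and then `DELETE /testkey None 3`. The gap in revisions comes from the unwatched write to /otherkey.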

1.5.6 etcd data backup and restore

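- WAL stands for write-ahead log: as the name suggests, a log entry is written before the actual write operation is performed.

wal: stores the write-ahead log, whose main purpose is to record the complete history of data changes. In etcd, every data modification must be written to the WAL before it is committed.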
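The write-ahead rule can be shown with a toy log. Each change is appended and flushed to the log file before it is applied to the in-memory state, so replaying the log after a crash rebuilds the same state. This sketch uses a hypothetical JSON-lines format. etcd's real WAL stores Raft entries as binary, CRC-protected records in preallocated segment files, such as the 64000000-byte `.wal` file in the restore output of 1.5.7.

```python
import json
import os
import tempfile


class ToyWAL:
    """Write-ahead log: persist each change before applying it (hypothetical format)."""

    def __init__(self, path):
        self.path = path
        self.state = {}

    def put(self, key, value):
        record = json.dumps({"op": "put", "key": key, "value": value})
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(record + "\n")
            f.flush()
            os.fsync(f.fileno())  # the entry is durable before the state changes
        self.state[key] = value   # apply only after the log write succeeded

    @classmethod
    def recover(cls, path):
        """Rebuild the in-memory state by replaying every logged change in order."""
        wal = cls(path)
        with open(path, encoding="utf-8") as f:
            for line in f:
                rec = json.loads(line)
                if rec["op"] == "put":
                    wal.state[rec["key"]] = rec["value"]
        return wal


with tempfile.TemporaryDirectory() as d:
    log_path = os.path.join(d, "toy.wal")
    wal = ToyWAL(log_path)
    wal.put("/testkey", "test for linux")
    wal.put("/testkey", "test for linux202008")
    del wal  # simulate a crash: the in-memory state is lost
    print(ToyWAL.recover(log_path).state)  # {'/testkey': 'test for linux202008'}
```

A WAL alone grows without bound. This is why etcd also takes snapshots, and why `etcdctl snapshot save` in the next section is the unit of backup.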

1.5.7 Backing up and restoring etcd v3 data

- Backup

root@etcd1:/tmp# ETCDCTL_API=3 etcdctl snapshot save snapshot-0806.db

{"level":"info","ts":1596709497.3054078,"caller":"snapshot/v3_snapshot.go:110","msg":"created temporary db file","path":"snapshot-0806.db.part"}

{"level":"warn","ts":"2020-08-06T18:24:57.307+0800","caller":"clientv3/retry_interceptor.go:116","msg":"retry stream intercept"}

{"level":"info","ts":1596709497.3073182,"caller":"snapshot/v3_snapshot.go:121","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":1596709497.3965096,"caller":"snapshot/v3_snapshot.go:134","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","took":0.090895066}

{"level":"info","ts":1596709497.396825,"caller":"snapshot/v3_snapshot.go:143","msg":"saved","path":"snapshot-0806.db"}

Snapshot saved at snapshot-0806.db

#restore into a new directory

root@etcd1:/tmp# ETCDCTL_API=3 etcdctl snapshot restore snapshot-0806.db --data-dir=/opt/test

{"level":"info","ts":1596709521.3448675,"caller":"snapshot/v3_snapshot.go:287","msg":"restoring snapshot","path":"snapshot-0806.db","wal-dir":"/opt/test/member/wal","data-dir":"/opt/test","snap-dir":"/opt/test/member/snap"}

{"level":"info","ts":1596709521.418283,"caller":"mvcc/kvstore.go:378","msg":"restored last compact revision","meta-bucket-name":"meta","meta-bucket-name-key":"finishedCompactRev","restored-compact-revision":1373577}

{"level":"info","ts":1596709521.4332154,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"cdf818194e3a8c32","local-member-id":"0","added-peer-id":"8e9e05c52164694d","added-peer-peer-urls":["http://localhost:2380"]}

{"level":"info","ts":1596709521.4604409,"caller":"snapshot/v3_snapshot.go:300","msg":"restored snapshot","path":"snapshot-0806.db","wal-dir":"/opt/test/member/wal","data-dir":"/opt/test","snap-dir":"/opt/test/member/snap"}

# Verify

root@etcd1:/tmp# cd /opt/test/member/wal/

root@etcd1:/opt/test/member/wal# ll

total 62508

drwx------ 2 root root 4096 Aug 6 18:25 ./

drwx------ 4 root root 4096 Aug 6 18:25 ../

-rw------- 1 root root 64000000 Aug 6 18:25 0000000000000000-0000000000000000.wal

root@etcd1:/opt/test/member/wal#
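The restore above produces a single-member data directory with default peer URLs (note `http://localhost:2380` in the log). To rebuild a three-member cluster from one snapshot, each member is normally restored with its own `--name`, `--initial-cluster`, `--initial-cluster-token` and `--initial-advertise-peer-urls`. The sketch below only assembles those `etcdctl snapshot restore` command lines. The IPs come from the host table; the member names, the `https` peer URLs on port 2380, the token and the data directory are assumptions that must match the real etcd unit files.

```python
import shlex

# Hypothetical member names mapped to the etcd host IPs from the environment table.
MEMBERS = {
    "etcd1": "172.16.62.210",
    "etcd2": "172.16.62.211",
    "etcd3": "172.16.62.212",
}
TOKEN = "etcd-cluster-0"      # hypothetical; must match the cluster's original token
DATA_DIR = "/var/lib/etcd"    # hypothetical; must match --data-dir in the etcd unit


def peer_url(ip: str) -> str:
    return f"https://{ip}:2380"


def restore_command(name: str, snapshot: str) -> list:
    """Build the argv of `etcdctl snapshot restore` for one member."""
    initial_cluster = ",".join(f"{n}={peer_url(ip)}" for n, ip in MEMBERS.items())
    return [
        "etcdctl", "snapshot", "restore", snapshot,
        f"--name={name}",
        f"--initial-cluster={initial_cluster}",
        f"--initial-cluster-token={TOKEN}",
        f"--initial-advertise-peer-urls={peer_url(MEMBERS[name])}",
        f"--data-dir={DATA_DIR}",
    ]


for member in MEMBERS:
    # Run each printed line on the matching host, after stopping etcd
    # and moving the old data directory aside.
    print("ETCDCTL_API=3 " + shlex.join(restore_command(member, "snapshot-0806.db")))
```

`shlex.join` needs Python 3.8 or later.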

1.5.8 Automatic backups

- Back up with a script; a scheduled task can run it every 6 hours

root@etcd1:/data# bash etcd_backup.sh

{"level":"info","ts":1596710073.3407273,"caller":"snapshot/v3_snapshot.go:110","msg":"created temporary db file","path":"/data/etcd-backup/etcdsnapshot-2020-08-06_18-34-33.db.part"}

{"level":"warn","ts":"2020-08-06T18:34:33.343+0800","caller":"clientv3/retry_interceptor.go:116","msg":"retry stream intercept"}

{"level":"info","ts":1596710073.3440814,"caller":"snapshot/v3_snapshot.go:121","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":1596710073.4372525,"caller":"snapshot/v3_snapshot.go:134","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","took":0.096120761}

{"level":"info","ts":1596710073.4379556,"caller":"snapshot/v3_snapshot.go:143","msg":"saved","path":"/data/etcd-backup/etcdsnapshot-2020-08-06_18-34-33.db"}

Snapshot saved at /data/etcd-backup/etcdsnapshot-2020-08-06_18-34-33.db

root@etcd1:/data#
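The content of `etcd_backup.sh` is not shown. A comparable job could look like the following Python sketch, which reproduces the file-name pattern seen in the log and keeps only the newest N snapshots. The retention count `KEEP` is an assumption, and the helper names are hypothetical.

```python
import os
import subprocess
from datetime import datetime

BACKUP_DIR = "/data/etcd-backup"   # directory seen in the log output above
KEEP = 28                          # assumption: 7 days x 4 backups per day


def backup_filename(now: datetime) -> str:
    # Same pattern as the log: etcdsnapshot-2020-08-06_18-34-33.db
    return now.strftime("etcdsnapshot-%Y-%m-%d_%H-%M-%S.db")


def expired(names: list, keep: int) -> list:
    """Return the snapshot files to delete so that only the `keep` newest remain.

    The timestamp format sorts lexicographically in chronological order.
    """
    snaps = sorted(n for n in names if n.startswith("etcdsnapshot-") and n.endswith(".db"))
    return snaps[:-keep] if keep > 0 else snaps


def run_backup() -> None:
    os.makedirs(BACKUP_DIR, exist_ok=True)
    target = os.path.join(BACKUP_DIR, backup_filename(datetime.now()))
    env = dict(os.environ, ETCDCTL_API="3")
    subprocess.run(["etcdctl", "snapshot", "save", target], env=env, check=True)
    for name in expired(os.listdir(BACKUP_DIR), KEEP):
        os.remove(os.path.join(BACKUP_DIR, name))
```

A script that calls `run_backup()` can be scheduled every 6 hours with a crontab entry such as `0 */6 * * *` followed by the script path.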

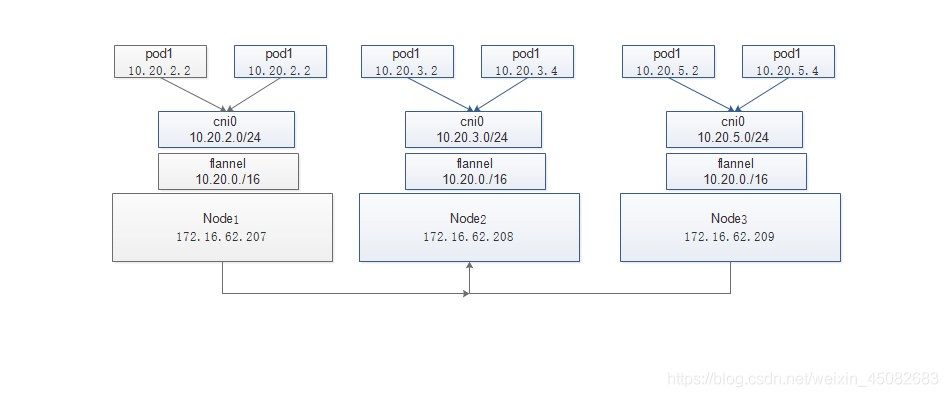

2. Introduction to the flannel network

2.1 The flannel network

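- Official site: https://coreos.com/flannel/docs/latest/

- Documentation: https://coreos.com/flannel/docs/latest/kubernetes.html

- An open-source network service from CoreOS for Kubernetes. It solves communication between pods on different hosts in a k8s cluster: it uses etcd to keep track of IP address allocation and assigns each node server a different IP address range.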

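Flannel network models (backends): Flannel currently offers three implementations, UDP, VXLAN and host-gw:

#UDP: early versions of Flannel used UDP encapsulation to forward packets across hosts; its security and performance are somewhat lacking.

#VXLAN: the Linux kernel added VXLAN support in v3.7.0 at the end of 2012, so newer versions of Flannel switched from UDP to VXLAN. VXLAN is essentially a tunnel protocol that builds a virtual layer-2 network on top of a layer-3 network; Flannel's network model is now a VXLAN-based overlay network.

#Host-gw: short for host gateway. Each node forwards packets using routing-table entries that point to the target containers' subnets, so all nodes must sit in the same LAN (layer-2 network). This makes it unsuitable for networks that change often or are large, but its performance is better.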
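The price of VXLAN encapsulation shows up as `FLANNEL_MTU=1450` in node1's subnet.env (section 2.1.2.1). Every pod packet is wrapped in extra headers that must still fit in the underlay MTU of `eth0`, assumed here to be the common 1500 bytes. A quick calculation, assuming IPv4 without options and no VLAN tag:

```python
# Bytes added around a pod's IP packet by VXLAN encapsulation.
INNER_ETHERNET = 14   # the pod's own Ethernet header travels inside the tunnel
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20       # no IP options


def vxlan_inner_mtu(underlay_mtu: int = 1500) -> int:
    """Largest pod-side IP packet that still fits into one underlay packet."""
    overhead = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4
    return underlay_mtu - overhead


print(vxlan_inner_mtu())      # 1450, matching FLANNEL_MTU and the MTU seen inside the pods
print(vxlan_inner_mtu(9000))  # 8950 on a jumbo-frame underlay
```

host-gw does no encapsulation, so it does not need this 50-byte reduction.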

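2.1.1 Flannel components

- cni0: a bridge device. Every pod gets a veth pair: one end is eth0 inside the pod, the other end is a port (interface) on the cni0 bridge. Traffic the pod sends out of eth0 arrives at that cni0 port. cni0's IP address is the first address of the subnet assigned to the node.

- flannel.1: the overlay network device that handles VXLAN packets (encapsulation and decapsulation). Pod traffic between different nodes is sent through this overlay device to the peer node as tunnelled packets.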

2.1.2 VXLAN configuration

2.1.2.1 Subnet information on node1 (FLANNEL_SUBNET=10.20.2.1/24)

root@node1:/run/flannel# cat /run/flannel/subnet.env

FLANNEL_NETWORK=10.20.0.0/16

FLANNEL_SUBNET=10.20.2.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true
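flanneld records its lease in this file, and the flannel CNI plugin reads it to build the bridge configuration. A small parser makes the relationship explicit: `FLANNEL_SUBNET=10.20.2.1/24` is the gateway address (the one `cni0` ends up with) inside the node's /24, which itself lies inside the cluster-wide `FLANNEL_NETWORK`. `parse_subnet_env` is a hypothetical helper name.

```python
import ipaddress

SUBNET_ENV = """\
FLANNEL_NETWORK=10.20.0.0/16
FLANNEL_SUBNET=10.20.2.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
"""


def parse_subnet_env(text: str) -> dict:
    """Parse the KEY=VALUE lines of flannel's subnet.env into a dict."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            env[key] = value
    return env


env = parse_subnet_env(SUBNET_ENV)
cluster_net = ipaddress.ip_network(env["FLANNEL_NETWORK"])   # 10.20.0.0/16
gateway = ipaddress.ip_interface(env["FLANNEL_SUBNET"])      # 10.20.2.1/24
node_net = gateway.network                                    # 10.20.2.0/24

assert node_net.subnet_of(cluster_net)   # the node's range lies inside the cluster range
print("node pod subnet:", node_net)
print("cni0 address:", gateway.ip)      # the first host address of the node subnet
```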

2.2 Routes on node1

- cni0 serves 10.20.2.0/24 directly (no gateway)

root@node1:/run/flannel# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.16.62.1 0.0.0.0 UG 0 0 0 eth0

10.20.0.0 10.20.0.0 255.255.255.0 UG 0 0 0 flannel.1

10.20.1.0 10.20.1.0 255.255.255.0 UG 0 0 0 flannel.1

10.20.2.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

10.20.3.0 10.20.3.0 255.255.255.0 UG 0 0 0 flannel.1

10.20.4.0 10.20.4.0 255.255.255.0 UG 0 0 0 flannel.1

10.20.5.0 10.20.5.0 255.255.255.0 UG 0 0 0 flannel.1

172.16.62.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0
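The kernel chooses among these entries by longest-prefix match. The sketch replays that rule on node1's table copied from the output above, to show which device a destination leaves through. It ignores metrics and policy routing, so it simplifies the kernel's behaviour; `lookup` is a hypothetical helper.

```python
import ipaddress

# (destination, gateway, device) from `route -n` on node1 in VXLAN mode.
# None means directly connected (0.0.0.0 in the route -n output).
ROUTES = [
    ("0.0.0.0/0", "172.16.62.1", "eth0"),
    ("10.20.0.0/24", "10.20.0.0", "flannel.1"),
    ("10.20.1.0/24", "10.20.1.0", "flannel.1"),
    ("10.20.2.0/24", None, "cni0"),
    ("10.20.3.0/24", "10.20.3.0", "flannel.1"),
    ("10.20.4.0/24", "10.20.4.0", "flannel.1"),
    ("10.20.5.0/24", "10.20.5.0", "flannel.1"),
    ("172.16.62.0/24", None, "eth0"),
    ("172.17.0.0/16", None, "docker0"),
]


def lookup(dst: str):
    """Return (gateway, device) of the most specific route containing dst."""
    addr = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(net), gw, dev)
        for net, gw, dev in ROUTES
        if addr in ipaddress.ip_network(net)
    ]
    _, gw, dev = max(matches, key=lambda m: m[0].prefixlen)
    return gw, dev


print(lookup("10.20.3.4"))   # pod on node2: next hop is node2's flannel.1 address
print(lookup("10.20.2.3"))   # local pod: bridged through cni0
print(lookup("223.6.6.6"))   # everything else: default route via eth0
```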

# Inspect the cni0 interface

root@node1:/run/flannel# ifconfig

cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.20.2.1 netmask 255.255.255.0 broadcast 0.0.0.0

ether ae:a3:87:c4:bd:84 txqueuelen 1000 (Ethernet)

RX packets 204920 bytes 17820806 (17.8 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 226477 bytes 23443847 (23.4 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

2.2.1 CNI information on node1

root@node1:/run/flannel# cat /var/lib/cni/flannel/2f649aea6ca393a45663bc9591f0d086714c0e34d445c2d4bb996e1c7aafd6d5

{"cniVersion":"0.3.1","hairpinMode":true,"ipMasq":false,"ipam":{"routes":[{"dst":"10.20.0.0/16"}],"subnet":"10.20.2.0/24","type":"host-local"},"isDefaultGateway":true,"isGateway":true,"mtu":1450,"name":"cbr0","type":"bridge"}

root@node1:/run/flannel#

2.3 Verifying cross-host pod communication

# View pod information

root@master1:/etc/ansible/roles/flannel/templates# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default busybox 1/1 Running 36 4d9h 10.20.3.4 172.16.62.208 <none> <none>

default net-test1-5fcc69db59-9mr5d 1/1 Running 4 4d8h 10.20.2.2 172.16.62.207 <none> <none>

default net-test1-5fcc69db59-dqrf8 1/1 Running 2 4d8h 10.20.3.3 172.16.62.208 <none> <none>

default net-test1-5fcc69db59-mbt9f 1/1 Running 2 4d8h 10.20.3.2 172.16.62.208 <none> <none>

default net-test2-8456fd74f7-229tw 1/1 Running 6 4d6h 10.20.5.4 172.16.62.209 <none> <none>

default net-test2-8456fd74f7-r8d2d 1/1 Running 3 4d6h 10.20.2.3 172.16.62.207 <none> <none>

default net-test2-8456fd74f7-vxnsk 1/1 Running 6 4d6h 10.20.5.2 172.16.62.209 <none> <none>

default net-test3-59c6947667-jjf4n 1/1 Running 2 4d4h 10.20.2.4 172.16.62.207 <none> <none>

default net-test3-59c6947667-ll4tm 1/1 Running 2 4d4h 10.20.5.5 172.16.62.209 <none> <none>

default net-test3-59c6947667-pg7x8 1/1 Running 2 4d4h 10.20.2.6 172.16.62.207 <none> <none>

default nginx-deployment-795b7c6c68-zgtzj 1/1 Running 1 2d23h 10.20.5.21 172.16.62.209 <none> <none>

kube-system coredns-cb9d89598-gfqw5 1/1 Running 0 4d3h 10.20.3.6 172.16.62.208 <none> <none>

# Enter a pod on node2

root@master1:/etc/ansible/roles/flannel/templates# kubectl exec -it net-test1-5fcc69db59-dqrf8 sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 06:F8:6C:28:AA:05

inet addr:10.20.3.3 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:21 errors:0 dropped:0 overruns:0 frame:0

TX packets:4 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1154 (1.1 KiB) TX bytes:224 (224.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

# Ping a pod on node1

/ # ping 10.20.2.2

PING 10.20.2.2 (10.20.2.2): 56 data bytes

64 bytes from 10.20.2.2: seq=0 ttl=62 time=1.197 ms

64 bytes from 10.20.2.2: seq=1 ttl=62 time=0.627 ms

^C

--- 10.20.2.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.627/0.912/1.197 ms

/ # traceroute 10.20.2.2

traceroute to 10.20.2.2 (10.20.2.2), 30 hops max, 46 byte packets

1 10.20.3.1 (10.20.3.1) 0.019 ms 0.011 ms 0.008 ms

2 10.20.2.0 (10.20.2.0) 0.391 ms 0.718 ms 0.267 ms

3 10.20.2.2 (10.20.2.2) 0.343 ms 0.681 ms 0.417 ms

/ # traceroute 223.6.6.6

traceroute to 223.6.6.6 (223.6.6.6), 30 hops max, 46 byte packets

1 10.20.3.1 (10.20.3.1) 0.015 ms 0.056 ms 0.008 ms

2 172.16.62.1 (172.16.62.1) 0.321 ms 0.229 ms 0.189 ms

3 * * *

2.4 vxlan+directrouting

- DirectRouting enables direct routing between nodes that share the same layer-2 network, similar to host-gw mode.

- Enable DirectRouting in the flannel settings

root@master1:/etc/ansible/roles/flannel/defaults# more main.yml

# Partial flannel settings; see docs/setup/network-plugin/flannel.md

# Flannel backend

#FLANNEL_BACKEND: "host-gw"

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: true # changed to true

#flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"

flanneld_image: "easzlab/flannel:v0.11.0-amd64"

# Offline image tarball

flannel_offline: "flannel_v0.11.0-amd64.tar"

root@master1:/etc/ansible/roles/flannel/defaults#
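In flannel itself these two variables correspond to the `Backend` section of `net-conf.json` (in the upstream kube-flannel manifest, the `net-conf.json` key of the `kube-flannel-cfg` ConfigMap). Assuming the kubeasz role template maps `FLANNEL_BACKEND` and `DIRECT_ROUTING` onto those keys, which is not shown here, the rendered result should resemble what this sketch prints. `flannel_net_conf` is a hypothetical helper, and the `Network` value comes from node1's subnet.env.

```python
import json


def flannel_net_conf(network: str, backend: str, direct_routing: bool) -> str:
    """Render flannel's net-conf.json from the role variables (mapping is an assumption)."""
    conf = {"Network": network, "Backend": {"Type": backend}}
    if backend == "vxlan" and direct_routing:
        # DirectRouting is an option of the vxlan backend only.
        conf["Backend"]["DirectRouting"] = True
    return json.dumps(conf, indent=2)


print(flannel_net_conf("10.20.0.0/16", "vxlan", True))
```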

2.5 Reinstall the network plugin

- Reboot the nodes after the installation completes

root@master1:/etc/ansible# ansible-playbook 06.network.yml

2.6 vxlan+directrouting test

# Enter the container on node2

root@master1:~# kubectl exec -it net-test1-5fcc69db59-dqrf8 sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 0A:99:BB:82:5B:2B

inet addr:10.20.3.9 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:3 errors:0 dropped:0 overruns:0 frame:0

TX packets:1 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:126 (126.0 B) TX bytes:42 (42.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

# Test the network

/ # ping 10.20.2.8

PING 10.20.2.8 (10.20.2.8): 56 data bytes

64 bytes from 10.20.2.8: seq=0 ttl=62 time=1.709 ms

64 bytes from 10.20.2.8: seq=1 ttl=62 time=0.610 ms

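# traceroute a pod on another node

- Traffic no longer passes through flannel.1; it goes directly to the node's eth0 network (hop 2 is node1's eth0 address, 172.16.62.207)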

/ # traceroute 10.20.2.8

traceroute to 10.20.2.8 (10.20.2.8), 30 hops max, 46 byte packets

1 10.20.3.1 (10.20.3.1) 0.014 ms 0.011 ms 0.008 ms

2 172.16.62.207 (172.16.62.207) 0.424 ms 0.415 ms 0.222 ms

3 10.20.2.8 (10.20.2.8) 0.242 ms 0.329 ms 0.280 ms

2.7 Comparing host routes

- Host routes before the change

root@node1:/run/flannel# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.16.62.1 0.0.0.0 UG 0 0 0 eth0

10.20.0.0 10.20.0.0 255.255.255.0 UG 0 0 0 flannel.1

10.20.1.0 10.20.1.0 255.255.255.0 UG 0 0 0 flannel.1

10.20.2.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

10.20.3.0 10.20.3.0 255.255.255.0 UG 0 0 0 flannel.1

10.20.4.0 10.20.4.0 255.255.255.0 UG 0 0 0 flannel.1

10.20.5.0 10.20.5.0 255.255.255.0 UG 0 0 0 flannel.1

172.16.62.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

- Host routes after the change

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.16.62.1 0.0.0.0 UG 0 0 0 eth0

10.20.0.0 172.16.62.202 255.255.255.0 UG 0 0 0 eth0

10.20.1.0 172.16.62.201 255.255.255.0 UG 0 0 0 eth0

10.20.2.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

10.20.3.0 172.16.62.208 255.255.255.0 UG 0 0 0 eth0

10.20.4.0 172.16.62.203 255.255.255.0 UG 0 0 0 eth0

10.20.5.0 172.16.62.209 255.255.255.0 UG 0 0 0 eth0

172.16.62.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

root@node1:~#
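The two tables differ by one rule: with DirectRouting enabled, a peer node that is directly reachable (on the same layer-2 network) gets a plain route via its host IP, and only other peers are sent through the VXLAN device. The sketch regenerates both variants for node1. The subnet-to-host mapping is read off the tables above; deciding "directly reachable" by membership in `172.16.62.0/24` is a simplifying assumption, and `flannel_route` is a hypothetical helper.

```python
import ipaddress

LOCAL_HOST_NET = ipaddress.ip_network("172.16.62.0/24")  # node1's eth0 network

# Remote pod subnet -> host IP of the node that owns it (from the "after" table).
LEASES = {
    "10.20.0.0/24": "172.16.62.202",
    "10.20.1.0/24": "172.16.62.201",
    "10.20.3.0/24": "172.16.62.208",
    "10.20.4.0/24": "172.16.62.203",
    "10.20.5.0/24": "172.16.62.209",
}


def flannel_route(subnet: str, host_ip: str, direct_routing: bool):
    """Return (destination, gateway, device) for one remote pod subnet."""
    if direct_routing and ipaddress.ip_address(host_ip) in LOCAL_HOST_NET:
        # Peer is on the same L2 segment: route to its host IP, no encapsulation.
        return subnet, host_ip, "eth0"
    # Otherwise tunnel to the peer's VXLAN endpoint (the .0 address of its pod subnet).
    vtep = str(ipaddress.ip_network(subnet).network_address)
    return subnet, vtep, "flannel.1"


for subnet, host_ip in LEASES.items():
    print(flannel_route(subnet, host_ip, direct_routing=True))
```

Nodes behind a router would keep using flannel.1 even with DirectRouting on, which is why the option only pays off when all nodes share one layer-2 segment.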

3. Separating static and dynamic content with Nginx + Tomcat + NFS

- Environment

| Role | Hostname | IP | Notes |
|---|---|---|---|
| k8s-master1 | kubeadm-master1.haostack.com | 172.16.62.201 | |
| k8s-master2 | kubeadm-master2.haostack.com | 172.16.62.202 | |
| k8s-master3 | kubeadm-master3.haostack.com | 172.16.62.203 | |
| ha1 | ha1.haostack.com | 172.16.62.204 | |
| ha2 | ha2.haostack.com | 172.16.62.205 | |
| node1 | node1.haostack.com | 172.16.62.207 | |
| node2 | node2.haostack.com | 172.16.62.208 | |
| node3 | node3.haostack.com | 172.16.62.209 | |
| etcd1 | etc1.haostack.com | 172.16.62.210 | |
| etcd2 | etc2.haostack.com | 172.16.62.211 | |
| etcd3 | etc3.haostack.com | 172.16.62.212 | |
| harbor | harbor.haostack.com | 172.16.62.26 | |
| dns | haostack.com | 172.16.62.24 | |
| NFS | haostack.com | 172.16.62.24 | |

3.1 Base image: CentOS

3.1.1 Building the CentOS base image

root@master1:/data/web/centos# cat Dockerfile

# Custom CentOS base image

FROM harbor.haostack.com/official/centos:7.8.2003

MAINTAINER Jack.liu <[email protected]>

ADD filebeat-7.6.1-x86_64.rpm /tmp

RUN yum install -y /tmp/filebeat-7.6.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && rm -rf /etc/localtime /tmp/filebeat-7.6.1-x86_64.rpm && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && useradd nginx -u 2019 && useradd www -u 2020

# Build and push the CentOS image

root@master1:/data/web/centos# cat build-command.sh

#!/bin/bash

docker build -t harbor.haostack.com/k8s/jack_k8s_base-centos:v1 .

sleep 3

docker push harbor.haostack.com/k8s/jack_k8s_base-centos:v1

root@master1:/data/web/centos#

- Files

root@master1:/data/web/centos# tree

.

├── build-command.sh

├── Dockerfile

└── filebeat-7.6.1-x86_64.rpm

0 directories, 3 files

root@master1:/data/web/centos#

3.2 Building the nginx base image

root@master1:/data/web/nginx-base# cat Dockerfile

#Nginx Base Image

FROM harbor.haostack.com/k8s/jack_k8s_base-centos:v1

MAINTAINER jack liu<[email protected]>

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

ADD nginx-1.14.2.tar.gz /usr/local/src/

RUN cd /usr/local/src/nginx-1.14.2 && ./configure && make && make install && ln -sv /usr/local/nginx/sbin/nginx /usr/sbin/nginx &&rm -rf /usr/local/src/nginx-1.14.2.tar.gz

root@master1:/data/web/nginx-base#

- Files

root@master1:/data/web/nginx-base# tree

.

├── build-command.sh

├── Dockerfile

└── nginx-1.14.2.tar.gz

0 directories, 3 files

3.3 Building the nginx application image

root@master1:/data/web/nginx-web1# cat Dockerfile

# Custom Nginx application image

FROM harbor.haostack.com/k8s/jack_k8s_base-nginx:v1

MAINTAINER Jack.liu <[email protected]>

ADD nginx.conf /usr/local/nginx/conf/nginx.conf

ADD app1.tar.gz /usr/local/nginx/html/webapp/

ADD index.html /usr/local/nginx/html/index.html

# Mount points for static assets (served from NFS)

RUN mkdir -p /usr/local/nginx/html/webapp/images /usr/local/nginx/html/webapp/static

EXPOSE 80 443

CMD ["/usr/sbin/nginx"]

root@master1:/data/web/nginx-web1#

- Files

root@master1:/data/web/nginx-web1# tree

.

├── app1.tar.gz

├── build-command.sh

├── Dockerfile

├── index.html

├── nginx.conf

├── nginx.yaml

├── ns-uat.yaml

└── webapp

1 directory, 7 files

3.3.1 Creating the nginx pod

3.3.1.1 Create the namespace

root@master1:/data/web/nginx-web1# cat ns-uat.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ns-uat

root@master1:/data/web/nginx-web1#

3.3.1.2 Create the nginx pod

- kubectl apply -f nginx.yaml

root@master1:/data/web/nginx-web1# cat nginx.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: uat-nginx-deployment-label
  name: uat-nginx-deployment
  namespace: ns-uat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: uat-nginx-selector
  template:
    metadata:
      labels:
        app: uat-nginx-selector
    spec:
      containers:
      - name: uat-nginx-container
        image: harbor.haostack.com/k8s/jack_k8s_nginx-web1:v1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "20"
        resources:
          limits:
            cpu: 2
            memory: 2Gi
          requests:
            cpu: 500m
            memory: 1Gi
        volumeMounts:
        - name: volume-nginx-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: volume-nginx-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: volume-nginx-images
        nfs:
          server: 172.16.62.24
          path: /nfsdata/k8s/images
      - name: volume-nginx-static
        nfs:
          server: 172.16.62.24
          path: /nfsdata/k8s/static
      #nodeSelector:
      #  group: magedu
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: uat-nginx-service-label
  name: uat-nginx-service
  namespace: ns-uat
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30016
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30443
  selector:
    app: uat-nginx-selector

root@master1:/data/web/nginx-web1#
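The `resources` block mixes units: `cpu: 2` means two cores and `500m` means 500 millicores (half a core), while `2Gi` and `1Gi` are binary units (2^30 bytes per Gi; `G` would be decimal). The converter below is a simplified subset of Kubernetes quantity parsing that only handles plain numbers and these common suffixes; `parse_quantity` is a hypothetical helper, not a Kubernetes library call.

```python
from fractions import Fraction

BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL = {"m": Fraction(1, 1000), "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_quantity(q) -> Fraction:
    """Convert a Kubernetes-style quantity (simplified subset) to an exact number."""
    s = str(q).strip()
    for suffix, factor in BINARY.items():      # check two-letter binary suffixes first
        if s.endswith(suffix):
            return Fraction(s[: -len(suffix)]) * factor
    for suffix, factor in DECIMAL.items():
        if s.endswith(suffix):
            return Fraction(s[: -len(suffix)]) * factor
    return Fraction(s)


nginx = {"limits": {"cpu": 2, "memory": "2Gi"}, "requests": {"cpu": "500m", "memory": "1Gi"}}
print(float(parse_quantity(nginx["requests"]["cpu"])))   # 0.5 (cores)
print(int(parse_quantity(nginx["limits"]["memory"])))    # 2147483648 (bytes)

# A request larger than its limit would be rejected by the API server.
for res in ("cpu", "memory"):
    assert parse_quantity(nginx["requests"][res]) <= parse_quantity(nginx["limits"][res])
```

Because the requests are set but lower than the limits, this pod falls into the Burstable QoS class.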

3.4 NFS server

- 172.16.62.24

[root@node24 ~]# cat /etc/exports

/nfsdata/node11 172.16.62.*(rw,sync,no_root_squash)

/nfsdata/node12 172.16.62.*(rw,sync,no_root_squash)

/nfsdata/node13 172.16.62.*(rw,sync,no_root_squash)

/nfsdata/harbor25 172.16.62.*(rw,sync,no_root_squash)

/nfsdata/harbor26 172.16.62.*(rw,sync,no_root_squash)

/nfsdata/k8s *(rw,sync,no_root_squash)

3.5 HAProxy configuration

listen uat-nginx-80

bind 172.16.62.191:80

mode tcp

balance roundrobin

server node1 172.16.62.207:30016 check inter 3s fall 3 rise 5

server node2 172.16.62.208:30016 check inter 3s fall 3 rise 5

server node3 172.16.62.209:30016 check inter 3s fall 3 rise 5

root@ha1:/etc/haproxy#
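`check inter 3s fall 3 rise 5` means HAProxy probes each NodePort every 3 seconds, marks a server down after 3 consecutive failed checks and brings it back only after 5 consecutive successful ones; `balance roundrobin` then rotates over the servers that are up. The toy model below reproduces just those two rules. It is not HAProxy's implementation: weights and slow-start are ignored, servers are assumed to start in the UP state, and `Server` and `RoundRobin` are hypothetical names.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Server:
    name: str
    fall: int = 3
    rise: int = 5
    up: bool = True      # assumption: servers start UP
    _streak: int = 0     # consecutive check results contradicting the current state

    def record_check(self, ok: bool) -> None:
        if ok == self.up:
            self._streak = 0            # result agrees with the current state
            return
        self._streak += 1
        if self.up and self._streak >= self.fall:
            self.up, self._streak = False, 0
        elif not self.up and self._streak >= self.rise:
            self.up, self._streak = True, 0


class RoundRobin:
    def __init__(self, servers):
        self.servers = servers
        self._ticks = count()

    def pick(self) -> str:
        alive = [s for s in self.servers if s.up]
        if not alive:
            raise RuntimeError("no server available")
        return alive[next(self._ticks) % len(alive)].name


nodes = [Server("node1"), Server("node2"), Server("node3")]
lb = RoundRobin(nodes)
print([lb.pick() for _ in range(3)])   # ['node1', 'node2', 'node3']

for _ in range(3):                     # node2:30016 stops answering: 3 failed checks
    nodes[1].record_check(False)
print(nodes[1].up)                     # False
print([lb.pick() for _ in range(4)])   # only node1 and node3 are used now
```

With these settings a dead node leaves the rotation after roughly 9 seconds (3 × 3 s) and returns after about 15 seconds of healthy checks.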

3.6 Testing nginx

# nginx default page

[root@node24 ~]# curl http://172.16.62.191

k8s lab nginx web v1

# nginx webapp page

[root@node24 ~]# curl http://172.16.62.191/webapp/index.html

webapp nginx v1

[root@node24 ~]#

3.7 Building the JDK base image

3.7.1 JDK Dockerfile

root@master1:/data/web/jdk-1.8.212# more Dockerfile

# JDK base image

FROM harbor.haostack.com/k8s/jack_k8s_base-centos:v1

MAINTAINER jack liu<[email protected]>

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk

ADD profile /etc/profile

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

root@master1:/data/web/jdk-1.8.212#

- Files

root@master1:/data/web/jdk-1.8.212# tree

.

├── build-command.sh

├── Dockerfile

├── jdk-8u212-linux-x64.tar.gz

└── profile

0 directories, 4 files

root@master1:/data/web/jdk-1.8.212#

3.8 Building the Tomcat base image

root@master1:/data/web/tomcat-base# cat Dockerfile

# Tomcat 8.5.43 base image

FROM harbor.haostack.com/k8s/jack_k8s_base-jdk:v8.212

MAINTAINER jack liu<[email protected]>

RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv

ADD apache-tomcat-8.5.43.tar.gz /apps

RUN useradd tomcat -u 2021 && ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat && chown -R tomcat.tomcat /apps /data -R

root@master1:/data/web/tomcat-base#

- Files

root@master1:/data/web/tomcat-base# tree

.

├── apache-tomcat-8.5.43.tar.gz

├── build-command.sh

└── Dockerfile

0 directories, 3 files

root@master1:/data/web/tomcat-base#

3.9 Building the tomcat-app1 image

3.9.1 Dockerfile

root@master1:/data/web/tomcat-app1# cat Dockerfile

#tomcat-app1

FROM harbor.haostack.com/k8s/jack_k8s_base-tomcat:v8.5.43

ADD catalina.sh /apps/tomcat/bin/catalina.sh

ADD server.xml /apps/tomcat/conf/server.xml

#ADD myapp/* /data/tomcat/webapps/myapp/

ADD app1.tar.gz /data/tomcat/webapps/myapp/

ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

ADD filebeat.yml /etc/filebeat/filebeat.yml

RUN chown -R tomcat.tomcat /data/ /apps/

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

root@master1:/data/web/tomcat-app1#
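Each image's `FROM` must already be in Harbor before the next build starts, so the images have to be built and pushed in dependency order. A topological sort over the `FROM` lines of this document makes that order explicit. The parent tags are copied from the Dockerfiles and the application tags from the Deployment YAML; the sketch needs Python 3.9+ for `graphlib`, and `build_order` is a hypothetical helper.

```python
from graphlib import TopologicalSorter

REG = "harbor.haostack.com/k8s/"

# image -> image named in its FROM line (copied from the Dockerfiles above)
PARENT = {
    REG + "jack_k8s_base-centos:v1": "harbor.haostack.com/official/centos:7.8.2003",
    REG + "jack_k8s_base-nginx:v1": REG + "jack_k8s_base-centos:v1",
    REG + "jack_k8s_nginx-web1:v1": REG + "jack_k8s_base-nginx:v1",
    REG + "jack_k8s_base-jdk:v8.212": REG + "jack_k8s_base-centos:v1",
    REG + "jack_k8s_base-tomcat:v8.5.43": REG + "jack_k8s_base-jdk:v8.212",
    REG + "jack_k8s_tomcat-app1:v1": REG + "jack_k8s_base-tomcat:v8.5.43",
}


def build_order(parent: dict) -> list:
    """Images built locally, each one listed after the image it is built FROM."""
    graph = {img: {base} for img, base in parent.items()}
    return [img for img in TopologicalSorter(graph).static_order() if img in parent]


for image in build_order(PARENT):
    print(image)
```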

3.9.2 Startup script run_tomcat.sh

root@master1:/data/web/tomcat-app1# more run_tomcat.sh

#!/bin/bash

#echo "nameserver 223.6.6.6" > /etc/resolv.conf

#echo "192.168.7.248 k8s-vip.example.com" >> /etc/hosts

/usr/share/filebeat/bin/filebeat -e -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat &

su - tomcat -c "/apps/tomcat/bin/catalina.sh start"

tail -f /etc/hosts

root@master1:/data/web/tomcat-app1#

3.9.3 server.xml

- Change the application path: the Host appBase points to /data/tomcat/webapps

root@master1:/data/web/tomcat-app1# more server.xml

<?xml version='1.0' encoding='utf-8'?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<!-- Note: A "Server" is not itself a "Container", so you may not

define subcomponents such as "Valves" at this level.

Documentation at /docs/config/server.html

-->

<Server port="8005" shutdown="SHUTDOWN">

<Listener className="org.apache.catalina.startup.VersionLoggerListener" />

<!-- Security listener. Documentation at /docs/config/listeners.html

<Listener className="org.apache.catalina.security.SecurityListener" />

-->

<!--APR library loader. Documentation at /docs/apr.html -->

<Listener className="org.apache.catalina.core.AprLifecycleListener" SSLEngine="on" />

<!-- Prevent memory leaks due to use of particular java/javax APIs-->

<Listener className="org.apache.catalina.core.JreMemoryLeakPreventionListener" />

<Listener className="org.apache.catalina.mbeans.GlobalResourcesLifecycleListener" />

<Listener className="org.apache.catalina.core.ThreadLocalLeakPreventionListener" />

<!-- Global JNDI resources

Documentation at /docs/jndi-resources-howto.html

-->

<GlobalNamingResources>

<!-- Editable user database that can also be used by

UserDatabaseRealm to authenticate users

-->

<Resource name="UserDatabase" auth="Container"

type="org.apache.catalina.UserDatabase"

description="User database that can be updated and saved"

factory="org.apache.catalina.users.MemoryUserDatabaseFactory"

pathname="conf/tomcat-users.xml" />

</GlobalNamingResources>

<!-- A "Service" is a collection of one or more "Connectors" that share

a single "Container" Note: A "Service" is not itself a "Container",

so you may not define subcomponents such as "Valves" at this level.

Documentation at /docs/config/service.html

-->

<Service name="Catalina">

<!--The connectors can use a shared executor, you can define one or more named thread pools-->

<!--

<Executor name="tomcatThreadPool" namePrefix="catalina-exec-"

maxThreads="150" minSpareThreads="4"/>

-->

<!-- A "Connector" represents an endpoint by which requests are received

and responses are returned. Documentation at :

Java HTTP Connector: /docs/config/http.html (blocking & non-blocking)

Java AJP Connector: /docs/config/ajp.html

APR (HTTP/AJP) Connector: /docs/apr.html

Define a non-SSL/TLS HTTP/1.1 Connector on port 8080

-->

<Connector port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

<!-- A "Connector" using the shared thread pool-->

<!--

<Connector executor="tomcatThreadPool"

port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

-->

<!-- Define a SSL/TLS HTTP/1.1 Connector on port 8443

This connector uses the NIO implementation that requires the JSSE

style configuration. When using the APR/native implementation, the

OpenSSL style configuration is required as described in the APR/native

documentation -->

<!--

<Connector port="8443" protocol="org.apache.coyote.http11.Http11NioProtocol"

maxThreads="150" SSLEnabled="true" scheme="https" secure="true"

clientAuth="false" sslProtocol="TLS" />

-->

<!-- Define an AJP 1.3 Connector on port 8009 -->

<Connector port="8009" protocol="AJP/1.3" redirectPort="8443" />

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

<!-- You should set jvmRoute to support load-balancing via AJP ie :

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">

-->

<Engine name="Catalina" defaultHost="localhost">

<!--For clustering, please take a look at documentation at:

/docs/cluster-howto.html (simple how to)

/docs/config/cluster.html (reference documentation) -->

<!--

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>

-->

<!-- Use the LockOutRealm to prevent attempts to guess user passwords

via a brute-force attack -->

<Realm className="org.apache.catalina.realm.LockOutRealm">

<!-- This Realm uses the UserDatabase configured in the global JNDI

resources under the key "UserDatabase". Any edits

that are performed against this UserDatabase are immediately

available for use by the Realm. -->

<Realm className="org.apache.catalina.realm.UserDatabaseRealm"

resourceName="UserDatabase"/>

</Realm>

<Host name="localhost" appBase="/data/tomcat/webapps" unpackWARs="true" autoDeploy="true">

<!-- SingleSignOn valve, share authentication between web applications

Documentation at: /docs/config/valve.html -->

<!--

<Valve className="org.apache.catalina.authenticator.SingleSignOn" />

-->

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

</Engine>

</Service>

</Server>

root@master1:/data/web/tomcat-app1#

3.9.4 YAML file

root@master1:/data/web/tomcat-app1# more tomcat-app1.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: uat-tomcat-app1-deployment-label
  name: uat-tomcat-app1-deployment
  namespace: ns-uat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: uat-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: uat-tomcat-app1-selector
    spec:
      containers:
      - name: uat-tomcat-app1-container
        image: harbor.haostack.com/k8s/jack_k8s_tomcat-app1:v1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: uat-tomcat-app1-service-label
  name: uat-tomcat-app1-service
  namespace: ns-uat
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30017
  selector:
    app: uat-tomcat-app1-selector

root@master1:/data/web/tomcat-app1#

3.10 Separating static and dynamic content with nginx + tomcat + NFS

3.10.1 In nginx.conf, use the Service name as the upstream server to forward requests

- Service name: uat-tomcat-app1-service.ns-uat.svc.haostack.com
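A Service's DNS name follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. The cluster domain here appears to be `haostack.com`, an inference from the name above (the Kubernetes default is `cluster.local`). nginx resolves the name to the Service's ClusterIP and connects to port 80, which kube-proxy forwards to `targetPort` 8080 in the Tomcat pod; the same pod is also reachable on NodePort 30017 of every node. The helper names below are hypothetical and only assemble the name and trace that port chain.

```python
from dataclasses import dataclass


@dataclass
class ServicePort:
    port: int         # port on the ClusterIP (what nginx connects to)
    target_port: int  # containerPort inside the pod
    node_port: int    # port opened on every node (type: NodePort)


def service_fqdn(name: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    return f"{name}.{namespace}.svc.{cluster_domain}"


tomcat = ServicePort(port=80, target_port=8080, node_port=30017)   # from tomcat-app1.yaml
upstream = f"{service_fqdn('uat-tomcat-app1-service', 'ns-uat', 'haostack.com')}:{tomcat.port}"
print(upstream)   # uat-tomcat-app1-service.ns-uat.svc.haostack.com:80
print(f"ClusterIP:{tomcat.port} -> pod:{tomcat.target_port}; "
      f"node:{tomcat.node_port} -> pod:{tomcat.target_port}")
```

nginx resolves names in an `upstream` block when it loads its configuration, so the Tomcat Service should exist before the nginx pod starts; the ClusterIP stays stable after that.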

root@master1:/data/web/nginx-web1# cat nginx.conf

user nginx nginx;

worker_processes auto;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

daemon off;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

upstream tomcat_webserver {

server uat-tomcat-app1-service.ns-uat.svc.haostack.com:80;

}

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

root html;

index index.html index.htm;

}

location /webapp {

root html;

index index.html index.htm;

}

location /myapp {

proxy_pass http://tomcat_webserver;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Real-IP $remote_addr;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ \.php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

# HTTPS server

#

#server {

# listen 443 ssl;

# server_name localhost;

# ssl_certificate cert.pem;

# ssl_certificate_key cert.key;

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 5m;

# ssl_ciphers HIGH:!aNULL:!MD5;

# ssl_prefer_server_ciphers on;

# location / {

# root html;

# index index.html index.htm;

# }

#}

}

root@master1:/data/web/nginx-web1#
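Which `location` handles a request decides what stays static and what goes to Tomcat. When there are no regex locations (all the regex ones above are commented out), nginx checks `=` exact matches first and otherwise uses the longest matching prefix. The sketch applies that simplified rule to this server block; `choose_location` and the handler strings are illustrative only.

```python
EXACT = {"/50x.html": "static: html/50x.html"}
PREFIX = {
    "/": "static: html/",
    "/webapp": "static: html/webapp (images/ and static/ on NFS)",
    "/myapp": "proxy_pass http://tomcat_webserver",
}


def choose_location(uri: str) -> str:
    """Simplified nginx location selection: '=' exact match, then longest prefix."""
    path = uri.split("?", 1)[0]
    if path in EXACT:
        return EXACT[path]
    best = max((p for p in PREFIX if path.startswith(p)), key=len)   # "/" always matches
    return PREFIX[best]


for uri in ["/", "/webapp/index.html", "/webapp/images/1.jpg", "/myapp/index.html", "/myapplication"]:
    print(f"{uri:24} -> {choose_location(uri)}")
```

Prefix matching is plain string matching, so `/myapplication` is also proxied to Tomcat; a trailing slash (`location /myapp/`) would narrow it to that directory.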

3.10.2 Dockerfile

3.10.3 nginx.yaml

root@master1:/data/web/nginx-web1# cat nginx.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: uat-nginx-deployment-label
  name: uat-nginx-deployment
  namespace: ns-uat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: uat-nginx-selector
  template:
    metadata:
      labels:
        app: uat-nginx-selector
    spec:
      containers:
      - name: uat-nginx-container
        image: harbor.haostack.com/k8s/jack_k8s_nginx-web1:v2
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "20"
        resources:
          limits:
            cpu: 2
            memory: 2Gi
          requests:
            cpu: 500m
            memory: 1Gi
        volumeMounts:
        - name: volume-nginx-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: volume-nginx-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: volume-nginx-images
        nfs:
          server: 172.16.62.24
          path: /nfsdata/k8s/images
      - name: volume-nginx-static
        nfs:
          server: 172.16.62.24
          path: /nfsdata/k8s/static
      #nodeSelector:
      #  group: magedu
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: uat-nginx-service-label
  name: uat-nginx-service
  namespace: ns-uat
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30016
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30443
  selector:
    app: uat-nginx-selector

root@master1:/data/web/nginx-web1#

3.11 Testing

- HAProxy listens on 172.16.62.191:80 and proxies to the nginx NodePort

- Test the nginx page

[root@node24 ~]# curl http://172.16.62.191

k8s lab nginx web v1

- Test the nginx webapp

[root@node24 ~]# curl http://172.16.62.191/webapp/

webapp nginx v1

- Test nginx forwarding to the tomcat page

[root@node24 ~]# curl http://172.16.62.191/myapp/index.html

k8s lab tomcat app1 v1

[root@node24 ~]#