泰坦尼克號——「十年生死兩茫茫」

機器學習——泰坦尼克號生死預測案例

引言:學習機器學習已經有一段時間了,在Kaggle裡看到一個針對初學者練手的一個案例——關於泰坦尼克號之災,今天我也拿它來練練手,順便記錄一下。

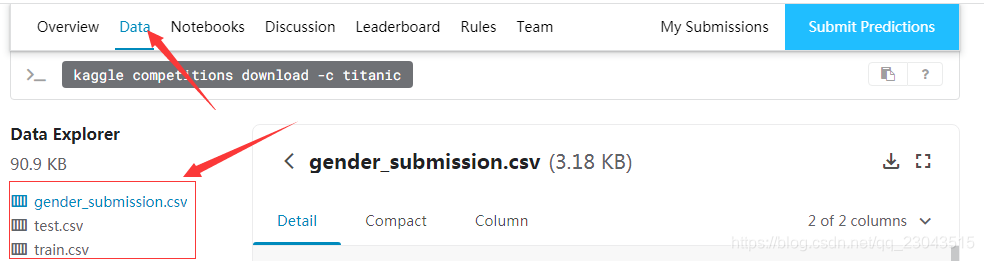

一、先從Kaggle官網上下載一些數據:

下載完,我們得到壓縮包,擠壓後得到3個檔案,一個是訓練數據集 train.csv,一個是測試數據集test.csv,還有一個是記錄乘客Id和是否存活的檔案gender_submission.csv。

這樣,我們專案數據已經準備好了。

二、特徵提取(對數據分析和清洗)

重要提示:我之前是做開發的,也有很多跟我一樣做開發的童鞋,平常拿到的配表之類的檔案,都是配好的,拿來可以直接帶入到邏輯程式碼裏面使用,但是,機器學習不一樣。做機器學習的主要流程:特徵提取、建立模型、訓練模型和評估模型,其中特徵提取,非常關鍵。我們後面的所有都是在特徵提取的基礎上來完成的,所有特徵提取就顯得尤爲關鍵。

1、建立一個專案資料夾taitan**,把三個數據檔案和**一個程式碼檔案(taitan.py)放在資料夾下,後面的程式碼我就都寫在taitan.py裏面了(小專案,不需要多個檔案)。

匯入我們要做特徵提取的一些類庫:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

2、首先,我們先分析一下train.csv裡的數據

trainData = pd.read_csv("train.csv")

trainData.info()

輸出資訊:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 PassengerId 891 non-null int64

1 Survived 891 non-null int64

2 Pclass 891 non-null int64

3 Name 891 non-null object

4 Sex 891 non-null object

5 Age 714 non-null float64

6 SibSp 891 non-null int64

7 Parch 891 non-null int64

8 Ticket 891 non-null object

9 Fare 891 non-null float64

10 Cabin 204 non-null object

11 Embarked 889 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.7+ KB

從上面輸出,我們可以得到一下資訊:

a、 除去Survived,因爲Survived就是我們要預測的值。這樣我們一共有11個特徵資訊,其他特徵重要性後面我們再看。

b、 這裏面一共有891條乘客數據,Age 缺少177條;Cabin只有204條,缺少較多;Embarked只缺少2條。

3、我們逐條看看 各個特徵 和 Survived 之間的關係

a、PassengerId:乘客的編號,這個特徵資訊應該與Survived之間的關係不大

Name(名字先不看)它有點特殊

b、Pclass:社會等級,先看看Pclass裡的數據

print(trainData['Pclass'])

0 3

1 1

2 3

3 1

4 3

..

886 2

887 1

888 3

889 1

890 3

Name: Pclass, Length: 891, dtype: int64

Pclass都是一些int型的數據,數值都是{1,2,3}。

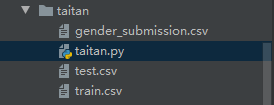

我們來看看Pclass和Survived之間的關係:

pclass = trainData['Pclass'].groupby(trainData['Survived'])

print(pclass.value_counts().unstack())

輸出數據:

Pclass 1 2 3

Survived

0 80 97 372

1 136 87 119

pclass = trainData['Pclass'].groupby(trainData['Survived'])

pclass.value_counts().unstack().plot(kind='bar')

plt.show()

Pcalss = 1時候,存活的人數 > 死亡的人數;

Pcalss = 2時候,存活的人數 和 死亡的人數 幾乎一樣;

Pcalss = 3時候,存活的人數 < 死亡的人數;

看來,Pcalss 和 Survived有關係,估計有錢的人,在船艙的位置比較好,環境裏面人員少,比較好逃生。

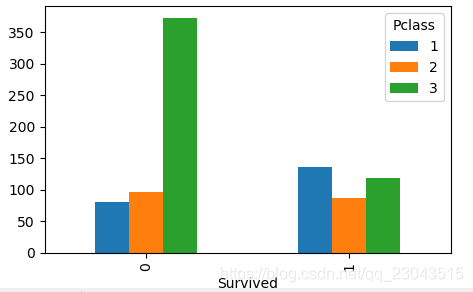

c、Sex:性別,跟Pclass一樣,直接看數據和圖

pclass = trainData['Sex'].groupby(trainData['Survived'])

print(pclass.value_counts().unstack())

輸出數據:

Sex female male

Survived

0 81 468

1 233 109

pclass = trainData['Sex'].groupby(trainData['Survived'])

pclass.value_counts().unstack().plot(kind='bar')

plt.show()

圖和數據結合發現:死亡中,女的少,男的多;存活中,女的多,男的少;而且差異非常大。說明性別對獲救的影響非常高。

這讓我想起一句話:讓婦女和兒童先走!

Sex:它不是一個數值,做機器學習,是對數據進行分析,因此,這裏把Sex用one-hot編碼處理,新建2個列(」Sex_female「,「Sex_male」)。Sex_female=1,Sex_male=0 代表女性;Sex_female=0,Sex_male=1代表男性,並加入到原來的數據集裡。

程式碼如下:

dummies_Sex = pd.get_dummies(trainData['Sex'], prefix= 'Sex').astype("int64")

trainData = pd.concat([trainData,dummies_Sex],axis=1)#整合到原來數據**trainData**裏面

trainData.info()

輸入:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 14 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 PassengerId 891 non-null int64

1 Survived 891 non-null int64

2 Pclass 891 non-null int64

3 Name 891 non-null object

4 Sex 891 non-null object

5 Age 714 non-null float64

6 SibSp 891 non-null int64

7 Parch 891 non-null int64

8 Ticket 891 non-null object

9 Fare 891 non-null float64

10 Cabin 204 non-null object

11 Embarked 889 non-null object

12 Sex_female 891 non-null int64

13 Sex_male 891 non-null int64

dtypes: float64(2), int64(7), object(5)

memory usage: 97.6+ KB

## (本來這邊要分析Age(年齡)的,但是Age有缺損,放在後面)

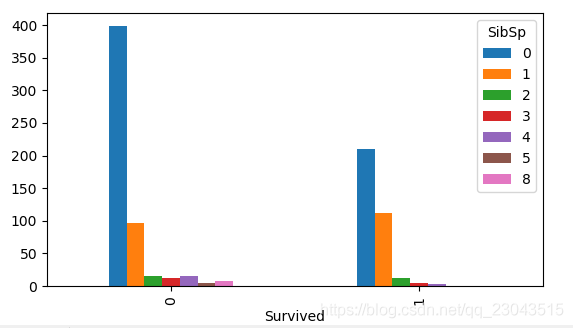

d、SibSp和Parch:這兩個分別是 直系親友 和 旁系親友

先來看看 SibSp和Survived:

pclass = trainData['SibSp'].groupby(trainData['Survived'])

print(pclass.value_counts().unstack())

輸入:

SibSp 0 1 2 3 4 5 8

Survived

0 398.0 97.0 15.0 12.0 15.0 5.0 7.0

1 210.0 112.0 13.0 4.0 3.0 NaN NaN

pclass = trainData['SibSp'].groupby(trainData['Survived'])

pclass.value_counts().unstack().plot(kind='bar')

plt.show()

直系親友越多,存活率越高。

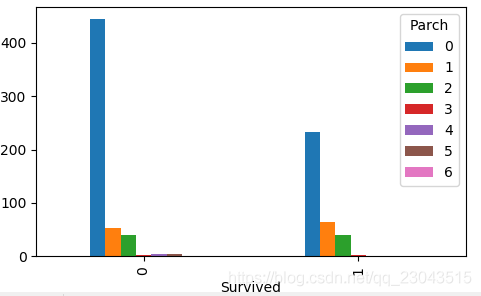

我們再看看 Parch和Survived:

pclass = trainData['Parch'].groupby(trainData['Survived'])

print(pclass.value_counts().unstack())

輸入:

Parch 0 1 2 3 4 5 6

Survived

0 445.0 53.0 40.0 2.0 4.0 4.0 1.0

1 233.0 65.0 40.0 3.0 NaN 1.0 NaN

pclass = trainData['Parch'].groupby(trainData['Survived'])

pclass.value_counts().unstack().plot(kind='bar')

plt.show()

旁系親友越多,存活率越高。

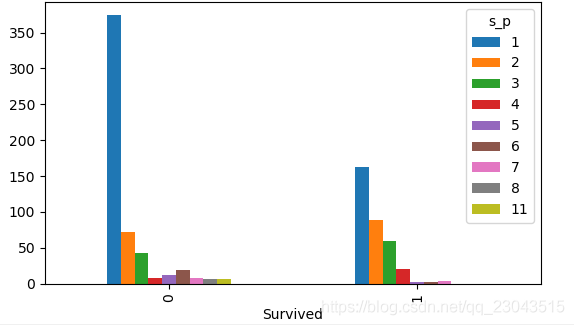

我們把這Parch和SibSp兩列的值合併,生成一個s_p特徵(當然自己也要加上),看看:

trainData["s_p"] = trainData["SibSp"] + trainData["Parch"]+1#都是沒有缺損值的int64型別數據,不需要其他處理

trainData.info()

輸出

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 15 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 PassengerId 891 non-null int64

1 Survived 891 non-null int64

2 Pclass 891 non-null int64

3 Name 891 non-null object

4 Sex 891 non-null object

5 Age 714 non-null float64

6 SibSp 891 non-null int64

7 Parch 891 non-null int64

8 Ticket 891 non-null object

9 Fare 891 non-null float64

10 Cabin 204 non-null object

11 Embarked 889 non-null object

12 Sex_female 891 non-null int64

13 Sex_male 891 non-null int64

14 s_p 891 non-null int64

dtypes: float64(2), int64(8), object(5)

memory usage: 104.5+ KB

這時候,來看看s_p和Survived的關係:

從上面對 Parch 、SibSp和s_p來分析,親友越多,存活率越高;但是當人數大於4的時候,存活率就下降了。可以想象逃生的時候有多個人一起幫忙,獲救率應該會高很多,但是人太多了,如果一個人救1、2個人還好,再繼續救人,搞不好會把自己搭上。

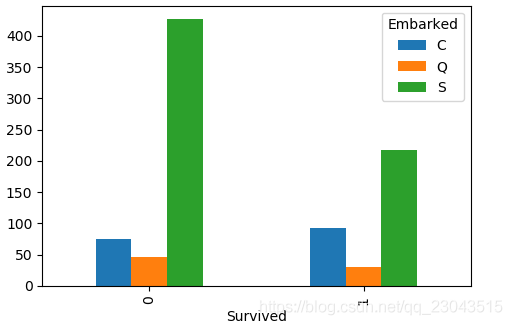

e、Embarked:上船時的港口編號:

直接看數據和圖:

pclass = trainData['Embarked'].groupby(trainData['Survived'])

print(pclass.value_counts().unstack())

輸出:

Embarked C Q S

Survived

0 75 47 427

1 93 30 217

pclass = trainData['Embarked'].groupby(trainData['Survived'])

pclass.value_counts().unstack().plot(kind='bar')

plt.show()

從圖中發現,C港口存活率是1/2多一些,Q港口存活率略小於1/2,S港口存活率1/3多一些;看來Embarked和Survived還是有關係的。

但是Embarked不是數據,我們要把Embarked轉換成數據才能 纔能訓練,和Sex一樣,但是首先要把Embarked缺少的2個值補齊。

Pclass(社會地位)、Fare(票價)應該和Embarked(上船時的港口編號),是有聯繫的。社會地位高,Embarked肯定會好些,下面 下麪我們來看看他們三者之間的關係:

print(trainData.groupby(by=["Pclass","Embarked"]).Fare.median())

Pclass Embarked

1 C 78.2667

Q 90.0000

S 52.0000

2 C 24.0000

Q 12.3500

S 13.5000

3 C 7.8958

Q 7.7500

S 8.0500

Name: Fare, dtype: float64

再看看,2個缺損Embarked乘客的資訊:

print(trainData[pd.isna(trainData["Embarked"])])

PassengerId Survived Pclass ... Embarked_C Embarked_Q Embarked_S

61 62 1 1 ... 0 0 0

829 830 1 1 ... 0 0 0

[2 rows x 18 columns]

由於2人的Pclass都是1,並且2人的Fare都是80,結合上面Pclass(社會地位)、Fare(票價)應該和Embarked(上船時的港口編號)的關係數據,我們可以把這2個人缺損的Embarked都用C來代替。

trainData['Embarked'] = trainData['Embarked'].fillna('C')

trainData.info()

輸出:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 15 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 PassengerId 891 non-null int64

1 Survived 891 non-null int64

2 Pclass 891 non-null int64

3 Name 891 non-null object

4 Sex 891 non-null object

5 Age 714 non-null float64

6 SibSp 891 non-null int64

7 Parch 891 non-null int64

8 Ticket 891 non-null object

9 Fare 891 non-null float64

10 Cabin 204 non-null object

11 Embarked 891 non-null object

12 Sex_female 891 non-null int64

13 Sex_male 891 non-null int64

14 s_p 891 non-null int64

dtypes: float64(2), int64(8), object(5)

memory usage: 104.5+ KB

接着把Embarked進行one-hot編碼處理:

dummies_Embarked = pd.get_dummies(trainData['Embarked'], prefix= 'Embarked').astype("int64")

trainData = pd.concat([trainData,dummies_Embarked],axis=1)#整合到原來數據**trainData**裏面

trainData.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 18 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 PassengerId 891 non-null int64

1 Survived 891 non-null int64

2 Pclass 891 non-null int64

3 Name 891 non-null object

4 Sex 891 non-null object

5 Age 714 non-null float64

6 SibSp 891 non-null int64

7 Parch 891 non-null int64

8 Ticket 891 non-null object

9 Fare 891 non-null float64

10 Cabin 204 non-null object

11 Embarked 891 non-null object

12 Sex_female 891 non-null int64

13 Sex_male 891 non-null int64

14 s_p 891 non-null int64

15 Embarked_C 891 non-null int64

16 Embarked_Q 891 non-null int64

17 Embarked_S 891 non-null int64

dtypes: float64(2), int64(11), object(5)

memory usage: 125.4+ KB

f、Name:名字

一般名字與大部分事物預測沒啥聯繫,但是這裏給定特徵Name不一樣,它帶有一些專屬特稱,如:Mr、Mrs、Master等,具有一定社會地位的標記。我們把這些標記做一個分類:

‘Capt’, ‘Col’, ‘Major’, ‘Dr’, ‘Rev’——>「Officer」

‘Don’, ‘Sir’, ‘the Countess’, ‘Dona’, ‘Lady’——>「Royalty」

‘Mme’, ‘Ms’, ‘Mrs’——>「Mrs」

‘Mlle’, ‘Miss’——>「Miss」

‘Master’,‘Jonkheer’——>「Master」

「Mr」——>‘Mr’

trainData['Name_flag'] = trainData['Name'].apply(lambda x:x.split(',')[1].split('.')[0].strip())

trainData['Name_flag'].replace(['Capt', 'Col', 'Major', 'Dr', 'Rev'],'Officer', inplace=True)

trainData['Name_flag'].replace(['Don', 'Sir', 'the Countess', 'Dona', 'Lady'], 'Royalty', inplace=True)

trainData['Name_flag'].replace(['Mme', 'Ms', 'Mrs'],'Mrs', inplace=True)

trainData['Name_flag'].replace(['Mlle', 'Miss'], 'Miss', inplace=True)

trainData['Name_flag'].replace(['Master','Jonkheer'],'Master', inplace=True)

trainData['Name_flag'].replace(['Mr'], 'Mr', inplace=True)

trainData.info()

輸出:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 19 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 PassengerId 891 non-null int64

1 Survived 891 non-null int64

2 Pclass 891 non-null int64

3 Name 891 non-null object

4 Sex 891 non-null object

5 Age 714 non-null float64

6 SibSp 891 non-null int64

7 Parch 891 non-null int64

8 Ticket 891 non-null object

9 Fare 891 non-null float64

10 Cabin 204 non-null object

11 Embarked 891 non-null object

12 Sex_female 891 non-null int64

13 Sex_male 891 non-null int64

14 s_p 891 non-null int64

15 Embarked_C 891 non-null int64

16 Embarked_Q 891 non-null int64

17 Embarked_S 891 non-null int64

18 Name_flag 891 non-null object

dtypes: float64(2), int64(11), object(6)

memory usage: 132.4+ KB

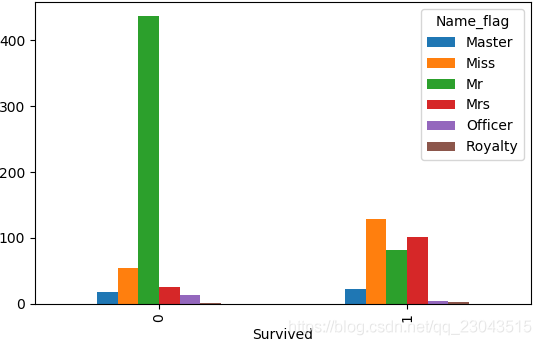

下面 下麪我們來看看Name_flag和Survived的相關性:

pclass = trainData['Name_flag'].groupby(trainData['Survived'])

print(pclass.value_counts().unstack())

Name_flag Master Miss Mr Mrs Officer Royalty

Survived

0 18 55 436 26 13 1

1 23 129 81 101 5 3

pclass = trainData['Name_flag'].groupby(trainData['Survived'])

pclass.value_counts().unstack().plot(kind='bar')

plt.show()

從上面數據我們可以看出,Miss和Mrs存活率比其他的高了很多。

下面 下麪我們把Name_flag進行one-hot編碼:

dummies_Name = pd.get_dummies(trainData['Name_flag'], prefix= 'Name_flag').astype("int64")

trainData = pd.concat([trainData,dummies_Name],axis=1)#整合到原來數據**trainData**裏面

trainData.info()

輸出:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 25 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 PassengerId 891 non-null int64

1 Survived 891 non-null int64

2 Pclass 891 non-null int64

3 Name 891 non-null object

4 Sex 891 non-null object

5 Age 714 non-null float64

6 SibSp 891 non-null int64

7 Parch 891 non-null int64

8 Ticket 891 non-null object

9 Fare 891 non-null float64

10 Cabin 204 non-null object

11 Embarked 891 non-null object

12 Sex_female 891 non-null int64

13 Sex_male 891 non-null int64

14 s_p 891 non-null int64

15 Embarked_C 891 non-null int64

16 Embarked_Q 891 non-null int64

17 Embarked_S 891 non-null int64

18 Name_flag 891 non-null object

19 Name_flag_Master 891 non-null int64

20 Name_flag_Miss 891 non-null int64

21 Name_flag_Mr 891 non-null int64

22 Name_flag_Mrs 891 non-null int64

23 Name_flag_Officer 891 non-null int64

24 Name_flag_Royalty 891 non-null int64

dtypes: float64(2), int64(17), object(6)

memory usage: 174.1+ KB

g、Age:年齡

年齡的缺失很大,用衆數或者平均數填充是不合理的,因此這裏使用隨機森林的方法來估測缺損的年齡數據。

from sklearn.ensemble import RandomForestRegressor

train_part = trainData[["Age",'Pclass', 'Sex_female','Sex_male',

'Name_flag_Master',"Name_flag_Miss","Name_flag_Mr",

"Name_flag_Mrs","Name_flag_Officer","Name_flag_Royalty"]]

train_part=pd.get_dummies(train_part)

age_has = train_part[train_part.Age.notnull()].values

age_no = train_part[train_part.Age.isnull()].values

y = age_has[:, 0]

X = age_has[:, 1:]

rfr_clf = RandomForestRegressor(random_state=60, n_estimators=100, n_jobs=-1)

rfr_clf.fit(X, y)

result = rfr_clf.predict(age_no[:, 1:])

trainData.loc[ (trainData.Age.isnull()), 'Age' ] = result

trainData.info()

輸出:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 25 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 PassengerId 891 non-null int64

1 Survived 891 non-null int64

2 Pclass 891 non-null int64

3 Name 891 non-null object

4 Sex 891 non-null object

5 Age 891 non-null float64

6 SibSp 891 non-null int64

7 Parch 891 non-null int64

8 Ticket 891 non-null object

9 Fare 891 non-null float64

10 Cabin 204 non-null object

11 Embarked 891 non-null object

12 Sex_female 891 non-null int64

13 Sex_male 891 non-null int64

14 s_p 891 non-null int64

15 Embarked_C 891 non-null int64

16 Embarked_Q 891 non-null int64

17 Embarked_S 891 non-null int64

18 Name_flag 891 non-null object

19 Name_flag_Master 891 non-null int64

20 Name_flag_Miss 891 non-null int64

21 Name_flag_Mr 891 non-null int64

22 Name_flag_Mrs 891 non-null int64

23 Name_flag_Officer 891 non-null int64

24 Name_flag_Royalty 891 non-null int64

dtypes: float64(2), int64(17), object(6)

memory usage: 174.1+ KB

這樣,缺少的Age資訊我們也補全了

h、Cabin這個數據缺損台多了,這裏我捨棄了

那麼現在,測試數據裏面的特徵以及清洗、提取了,後面我們要開始訓練我們的模型了。

三、訓練模型

import pandas as pd

import numpy as np

from sklearn.model_selection import StratifiedKFold

from sklearn.linear_model import SGDClassifier

from sklearn.base import clone

trainData = pd.read_csv("train.csv")

dummies_Sex = pd.get_dummies(trainData['Sex'], prefix= 'Sex').astype("int64")

trainData = pd.concat([trainData,dummies_Sex],axis=1)

trainData["s_p"] = trainData["SibSp"] + trainData["Parch"]+1

trainData['Embarked'] = trainData['Embarked'].fillna('C')

dummies_Embarked = pd.get_dummies(trainData['Embarked'], prefix= 'Embarked').astype("int64")

trainData = pd.concat([trainData,dummies_Embarked],axis=1)

trainData['Name_flag'] = trainData['Name'].apply(lambda x:x.split(',')[1].split('.')[0].strip())

trainData['Name_flag'].replace(['Capt', 'Col', 'Major', 'Dr', 'Rev'],'Officer', inplace=True)

trainData['Name_flag'].replace(['Don', 'Sir', 'the Countess', 'Dona', 'Lady'], 'Royalty', inplace=True)

trainData['Name_flag'].replace(['Mme', 'Ms', 'Mrs'],'Mrs', inplace=True)

trainData['Name_flag'].replace(['Mlle', 'Miss'], 'Miss', inplace=True)

trainData['Name_flag'].replace(['Master','Jonkheer'],'Master', inplace=True)

trainData['Name_flag'].replace(['Mr'], 'Mr', inplace=True)

dummies_Name = pd.get_dummies(trainData['Name_flag'], prefix= 'Name_flag').astype("int64")

trainData = pd.concat([trainData,dummies_Name],axis=1)

from sklearn.ensemble import RandomForestRegressor

train_part = trainData[["Age",'Pclass', 'Sex_female','Sex_male',

'Name_flag_Master',"Name_flag_Miss","Name_flag_Mr",

"Name_flag_Mrs","Name_flag_Officer","Name_flag_Royalty"]]

train_part=pd.get_dummies(train_part)

age_has = train_part[train_part.Age.notnull()].values

age_no = train_part[train_part.Age.isnull()].values

y = age_has[:, 0]

X = age_has[:, 1:]

rfr_clf = RandomForestRegressor(random_state=60, n_estimators=100, n_jobs=-1)

rfr_clf.fit(X, y)

result = rfr_clf.predict(age_no[:, 1:])

trainData.loc[ (trainData.Age.isnull()), 'Age' ] = result

train_Model_data = trainData[["Survived","Age",'Pclass', 'Sex_female','Sex_male',

'Name_flag_Master',"Name_flag_Miss","Name_flag_Mr",

"Name_flag_Mrs","Name_flag_Officer","Name_flag_Royalty",

"s_p","Embarked_C","Embarked_S","Embarked_Q"]].values

dataLen = len(train_Model_data)

train_x = train_Model_data[:,1:]

train_y = train_Model_data[:,0]

shuffle_index = np.random.permutation(dataLen)

train_x = train_x[shuffle_index]

train_y = train_y[shuffle_index]

skfolds = StratifiedKFold(n_splits=3, random_state=42)

sgd_clf = SGDClassifier(loss='log', random_state=42, max_iter=1000, tol=1e-4)

for train_index, test_index in skfolds.split(train_x, train_y):

clone_clf = clone(sgd_clf)

X_train_folds = train_x[train_index]

y_train_folds = train_y[train_index]

X_test_folds = train_x[test_index]

y_test_folds = train_y[test_index]

clone_clf.fit(X_train_folds, y_train_folds)

y_pred = clone_clf.predict(X_test_folds)

print(y_pred)

n_correct = sum(y_pred == y_test_folds)

print(n_correct / len(y_pred))

輸出:

[0. 0. 0. 0. 0. 1. 0. 1. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 1. 0. 0. 1. 0. 0. 0. 1. 0. 0. 0. 0. 0.

0. 1. 0. 0. 0. 0. 1. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 1. 0. 0. 0. 1. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 1. 0. 0. 1. 1. 0. 0. 0. 0. 0. 0.

0. 0. 1. 0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.

1. 0. 1. 0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 1. 0. 0. 0. 1. 0. 1. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 1. 0. 0. 0. 0.

0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 0. 0. 1. 1. 0. 0. 0.

0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 0. 0.

1. 0. 0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 1. 0. 1. 1. 0. 0. 0. 0. 0. 1. 1.

1. 0. 1. 1. 1. 1. 0. 1. 0.]

0.7676767676767676

[0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 1. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 1. 1. 0. 0. 0. 0. 1. 1. 0. 1.

1. 1. 1. 1. 0. 1. 0. 0. 1. 1. 0. 0. 0. 1. 1. 1. 0. 0. 0. 0. 1. 0. 0. 1.

1. 1. 0. 1. 1. 0. 0. 0. 1. 0. 1. 0. 0. 0. 1. 1. 1. 0. 1. 0. 0. 0. 0. 0.

0. 0. 0. 0. 1. 0. 0. 1. 1. 0. 1. 0. 1. 1. 1. 1. 0. 1. 1. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 1. 0. 1. 0. 0. 0. 1. 0. 0. 0. 0. 0. 1. 0. 1. 1. 0. 1. 0.

1. 0. 0. 0. 0. 1. 1. 0. 1. 1. 0. 0. 0. 0. 0. 1. 1. 0. 1. 1. 0. 0. 1. 0.

0. 1. 0. 0. 0. 1. 0. 0. 0. 1. 0. 1. 1. 0. 1. 0. 1. 0. 0. 1. 0. 0. 0. 1.

1. 1. 1. 1. 0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 1. 0. 0. 1. 0. 0. 1. 0. 1. 0. 1. 1. 0. 0. 0. 0. 1. 1. 0.

1. 0. 0. 0. 1. 1. 0. 0. 1. 0. 0. 0. 0. 1. 1. 0. 1. 0. 0. 0. 0. 0. 0. 1.

0. 1. 1. 0. 0. 1. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 1. 0. 0. 1. 1. 1. 1.

0. 1. 1. 1. 1. 1. 1. 1. 1.]

0.8249158249158249

[0. 0. 0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 1. 1. 0. 1.

0. 1. 0. 0. 1. 1. 1. 0. 1. 0. 0. 0. 0. 1. 1. 0. 0. 0. 0. 1. 1. 0. 0. 0.

0. 0. 0. 1. 1. 0. 0. 0. 1. 0. 0. 1. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1.

1. 0. 1. 1. 1. 0. 0. 0. 0. 1. 0. 0. 1. 0. 0. 1. 0. 1. 1. 1. 0. 0. 1. 0.

1. 1. 0. 0. 0. 0. 0. 1. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 1. 1. 0. 0.

0. 0. 1. 0. 1. 0. 0. 0. 0. 1. 1. 1. 1. 0. 0. 1. 0. 1. 0. 1. 0. 1. 0. 0.

0. 0. 1. 0. 0. 0. 0. 1. 0. 0. 1. 0. 0. 1. 1. 0. 1. 0. 0. 0. 1. 0. 1. 1.

0. 0. 0. 0. 0. 0. 1. 1. 0. 1. 0. 0. 0. 1. 0. 0. 0. 0. 0. 1. 0. 0. 1. 1.

0. 0. 1. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 0. 1. 1. 1. 1. 0. 0. 1.

0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 1. 0. 1. 0. 0. 1. 0. 0. 1. 0. 0. 1. 0. 0.

0. 1. 1. 0. 1. 1. 0. 1. 0. 0. 1. 0. 1. 0. 0. 0. 0. 0. 1. 0. 0. 0. 1. 0.

0. 0. 1. 0. 0. 0. 1. 0. 0. 0. 1. 0. 1. 1. 1. 0. 0. 1. 0. 1. 0. 0. 0. 1.

1. 0. 0. 0. 1. 0. 1. 0. 0.]

0.8282828282828283

這個模型我沒有用 test.csv裡的數據測試,僅僅用了train.csv裡的數據進行了3折的交叉驗證。

最終:我的模型得分爲 0.8282828282828283