🔥🔥 Exploring the World of AI: Building an Intelligent Q&A System, Hands-On

Introduction

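In the previous installments we took care of the groundwork: we covered the relevant concepts and installed the environment. Today we focus on the code. If you already know Java, Python's syntax shouldn't be a problem, because the differences are mostly syntactic. You'll get comfortable with the language over time. Let's get started!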

Environment setup commands

Before we start, there are a few setup commands to run. If you've read the Milvus documentation carefully, you'll already have seen them. Here they are:

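```shell
pip3 install langchain
pip3 install openai
pip3 install protobuf==3.20.0
pip3 install grpcio-tools
python3 -m pip install pymilvus==2.3.2
# Also needed by the later examples: HuggingFaceEmbeddings relies on sentence-transformers,
# and load_dotenv() comes from python-dotenv
pip3 install sentence-transformers python-dotenv
# Sanity check: exits silently if pymilvus is installed correctly
python3 -c "from pymilvus import Collection"
```

The LangChain examples below use the older import paths (`langchain.embeddings`, `langchain.vectorstores`, `langchain.chat_models`). Newer LangChain releases deprecate these in favor of the `langchain_community` package, so either pin an older `langchain` or adjust the imports.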

Quick start

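First, let's run the official example to see how much code it takes to insert and query data without LangChain. The comments at the top of the official example already outline the whole process. It goes like this:

- Connect to the database
- Create a collection (Milvus also supports partitions, which we won't go into here)
- Insert vector data (the official example simply inserts some random numbers...)
- Create an index (according to the official docs, you typically don't rebuild the index often once you have a certain amount of data)
- Query the data
- Delete data
- Clean up (the official example drops the collection at the end; otherwise you would simply disconnect from the database)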

As you go through these steps, you'll notice the workflow is very similar to working with a MySQL database.
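Here is a toy in-memory stand-in that makes the steps concrete without a running server. `ToyCollection` is hypothetical and is not the PyMilvus API. It keeps rows in a dict, searches by brute-force Euclidean distance, and filters with a Python predicate instead of an expression string. Milvus performs the same kinds of operations, but it persists the data and uses indexes to avoid scanning every vector.

```python
import math
import random


class ToyCollection:
    """Hypothetical in-memory stand-in for a vector collection (NOT the PyMilvus API).

    Mirrors the workflow: create -> insert -> search/query -> delete.
    """

    def __init__(self, dim):
        self.dim = dim
        self.rows = {}  # primary key -> (scalar "random" value, vector)

    def insert(self, pks, scalars, vectors):
        for pk, s, v in zip(pks, scalars, vectors):
            if len(v) != self.dim:
                raise ValueError(f"expected dim {self.dim}, got {len(v)}")
            self.rows[pk] = (s, list(v))
        return list(pks)  # like auto_id=False: the caller-supplied keys come back

    def search(self, query, limit):
        """Brute-force nearest neighbours by Euclidean (L2) distance, smallest first."""
        scored = [(math.dist(query, v), pk) for pk, (_, v) in self.rows.items()]
        scored.sort()
        return [(pk, d) for d, pk in scored[:limit]]

    def query(self, predicate):
        """Scalar filtering: return the PKs whose scalar value satisfies the predicate."""
        return sorted(pk for pk, (s, _) in self.rows.items() if predicate(s))

    def delete(self, pks):
        for pk in pks:
            self.rows.pop(pk, None)


rng = random.Random(19530)
dim, n = 8, 100
col = ToyCollection(dim)                                    # "create collection"
vectors = [[rng.random() for _ in range(dim)] for _ in range(n)]
ids = col.insert([str(i) for i in range(n)],                # "insert"
                 [rng.random() for _ in range(n)], vectors)
print(col.search(vectors[-1], limit=3))                     # "search"
print(len(col.query(lambda s: s > 0.5)))                    # "query"
col.delete(ids[:2])                                         # "delete by PK"
```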

```python
# hello_milvus.py demonstrates the basic operations of PyMilvus, a Python SDK of Milvus.
# 1. connect to Milvus
# 2. create collection
# 3. insert data
# 4. create index
# 5. search, query, and hybrid search on entities
# 6. delete entities by PK
# 7. drop collection
import time

import numpy as np
from pymilvus import (
    connections,
    utility,
    FieldSchema, CollectionSchema, DataType,
    Collection,
)

fmt = "\n=== {:30} ===\n"
search_latency_fmt = "search latency = {:.4f}s"
num_entities, dim = 3000, 8

#################################################################################
# 1. connect to Milvus
# Add a new connection alias `default` for Milvus server in `localhost:19530`
# Actually the "default" alias is built into PyMilvus.
# If the address of Milvus is the same as `localhost:19530`, you can omit all
# parameters and call the method as: `connections.connect()`.
#
# Note: the `using` parameter of the following methods defaults to "default".
print(fmt.format("start connecting to Milvus"))
connections.connect("default", host="localhost", port="19530")

has = utility.has_collection("hello_milvus")
print(f"Does collection hello_milvus exist in Milvus: {has}")

#################################################################################
# 2. create collection
# We're going to create a collection with 3 fields.
# +-+------------+------------+------------------+------------------------------+
# | | field name | field type | other attributes |       field description      |
# +-+------------+------------+------------------+------------------------------+
# |1|    "pk"    |   VarChar  |  is_primary=True |      "primary field"         |
# | |            |            |   auto_id=False  |                              |
# +-+------------+------------+------------------+------------------------------+
# |2|  "random"  |    Double  |                  |      "a double field"        |
# +-+------------+------------+------------------+------------------------------+
# |3|"embeddings"| FloatVector|     dim=8        |  "float vector with dim 8"   |
# +-+------------+------------+------------------+------------------------------+
fields = [
    FieldSchema(name="pk", dtype=DataType.VARCHAR, is_primary=True, auto_id=False, max_length=100),
    FieldSchema(name="random", dtype=DataType.DOUBLE),
    FieldSchema(name="embeddings", dtype=DataType.FLOAT_VECTOR, dim=dim),
]

schema = CollectionSchema(fields, "hello_milvus is the simplest demo to introduce the APIs")

print(fmt.format("Create collection `hello_milvus`"))
hello_milvus = Collection("hello_milvus", schema, consistency_level="Strong")

################################################################################
# 3. insert data
# We are going to insert 3000 rows of data into `hello_milvus`
# Data to be inserted must be organized in fields.
#
# The insert() method returns:
# - either automatically generated primary keys by Milvus if auto_id=True in the schema;
# - or the existing primary key field from the entities if auto_id=False in the schema.
print(fmt.format("Start inserting entities"))
rng = np.random.default_rng(seed=19530)
entities = [
    # provide the pk field because `auto_id` is set to False
    [str(i) for i in range(num_entities)],
    rng.random(num_entities).tolist(),  # field random, only supports list
    rng.random((num_entities, dim)),    # field embeddings, supports numpy.ndarray and list
]

insert_result = hello_milvus.insert(entities)
hello_milvus.flush()
print(f"Number of entities in Milvus: {hello_milvus.num_entities}")  # check the num_entities

################################################################################
# 4. create index
# We are going to create an IVF_FLAT index for hello_milvus collection.
# create_index() can only be applied to `FloatVector` and `BinaryVector` fields.
print(fmt.format("Start Creating index IVF_FLAT"))
index = {
    "index_type": "IVF_FLAT",
    "metric_type": "L2",
    "params": {"nlist": 128},
}

hello_milvus.create_index("embeddings", index)

################################################################################
# 5. search, query, and hybrid search
# After data were inserted into Milvus and indexed, you can perform:
# - search based on vector similarity
# - query based on scalar filtering (boolean, int, etc.)
# - hybrid search based on vector similarity and scalar filtering.
#
# Before conducting a search or a query, you need to load the data in `hello_milvus` into memory.
print(fmt.format("Start loading"))
hello_milvus.load()

# -----------------------------------------------------------------------------
# search based on vector similarity
print(fmt.format("Start searching based on vector similarity"))
vectors_to_search = entities[-1][-2:]
search_params = {
    "metric_type": "L2",
    "params": {"nprobe": 10},
}

start_time = time.time()
result = hello_milvus.search(vectors_to_search, "embeddings", search_params, limit=3, output_fields=["random"])
end_time = time.time()

for hits in result:
    for hit in hits:
        print(f"hit: {hit}, random field: {hit.entity.get('random')}")
print(search_latency_fmt.format(end_time - start_time))

# -----------------------------------------------------------------------------
# query based on scalar filtering (boolean, int, etc.)
print(fmt.format("Start querying with `random > 0.5`"))

start_time = time.time()
result = hello_milvus.query(expr="random > 0.5", output_fields=["random", "embeddings"])
end_time = time.time()

print(f"query result:\n-{result[0]}")
print(search_latency_fmt.format(end_time - start_time))

# -----------------------------------------------------------------------------
# pagination
r1 = hello_milvus.query(expr="random > 0.5", limit=4, output_fields=["random"])
r2 = hello_milvus.query(expr="random > 0.5", offset=1, limit=3, output_fields=["random"])
print(f"query pagination(limit=4):\n\t{r1}")
print(f"query pagination(offset=1, limit=3):\n\t{r2}")

# -----------------------------------------------------------------------------
# hybrid search
print(fmt.format("Start hybrid searching with `random > 0.5`"))

start_time = time.time()
result = hello_milvus.search(vectors_to_search, "embeddings", search_params, limit=3, expr="random > 0.5",
                             output_fields=["random"])
end_time = time.time()

for hits in result:
    for hit in hits:
        print(f"hit: {hit}, random field: {hit.entity.get('random')}")
print(search_latency_fmt.format(end_time - start_time))

###############################################################################
# 6. delete entities by PK
# You can delete entities by their PK values using boolean expressions.
ids = insert_result.primary_keys

expr = f'pk in ["{ids[0]}" , "{ids[1]}"]'
print(fmt.format(f"Start deleting with expr `{expr}`"))

result = hello_milvus.query(expr=expr, output_fields=["random", "embeddings"])
print(f"query before delete by expr=`{expr}` -> result: \n-{result[0]}\n-{result[1]}\n")

hello_milvus.delete(expr)

result = hello_milvus.query(expr=expr, output_fields=["random", "embeddings"])
print(f"query after delete by expr=`{expr}` -> result: {result}\n")

###############################################################################
# 7. drop collection
# Finally, drop the hello_milvus collection
print(fmt.format("Drop collection `hello_milvus`"))
utility.drop_collection("hello_milvus")
```

Upgraded version

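Now let's look at the LangChain version. Because we're using LangChain's Milvus wrapper, we need an embedding model to turn text into vectors. Here we use the `sensenova/piccolo-base-zh` model through `HuggingFaceEmbeddings`. Any other model works too, because the wrapper accepts any object that implements LangChain's `Embeddings` interface.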
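LangChain's `Embeddings` interface has two methods: `embed_documents(texts)`, which returns one vector per text, and `embed_query(text)`, which returns a single vector. To show that contract, here is a hypothetical toy model, `ToyHashEmbeddings`. It hashes each character into one of `dim` buckets, so it captures character overlap rather than meaning. It is only useful for wiring tests, not for real retrieval. In real code you would subclass LangChain's `Embeddings` base class. This sketch uses plain duck typing so that it runs without LangChain installed.

```python
import hashlib
import math
from typing import List


class ToyHashEmbeddings:
    """Hypothetical toy embedding model exposing the same two methods as
    LangChain's Embeddings interface (embed_documents / embed_query).

    Each character is hashed into one of `dim` buckets and the counts are
    L2-normalised. This captures character overlap, not semantics.
    """

    def __init__(self, dim: int = 64):
        self.dim = dim

    def _embed(self, text: str) -> List[float]:
        vec = [0.0] * self.dim
        for ch in text:
            digest = hashlib.sha256(ch.encode("utf-8")).digest()
            vec[int.from_bytes(digest[:4], "big") % self.dim] += 1.0
        norm = math.sqrt(sum(x * x for x in vec)) or 1.0  # avoid dividing by zero for ""
        return [x / norm for x in vec]

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        return [self._embed(t) for t in texts]

    def embed_query(self, text: str) -> List[float]:
        return self._embed(text)


emb = ToyHashEmbeddings(dim=16)
print(len(emb.embed_query("你好啊")))                  # one vector of length dim
print(len(emb.embed_documents(["你好啊", "我不好"])))  # one vector per input text
```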

Below is a simple example covering the database connection, inserting data, querying, and inspecting the similarity scores:

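```python
from langchain.embeddings import HuggingFaceEmbeddings
from langchain.vectorstores import Milvus

# Chinese embedding model from HuggingFace (downloaded on first use)
model_name = "sensenova/piccolo-base-zh"
embeddings = HuggingFaceEmbeddings(model_name=model_name)

print("Connecting to the database")
vector_db = Milvus(
    embeddings,
    connection_args={"host": "localhost", "port": "19530"},
    collection_name="hello_milvus",  # same name as the quick start, which dropped it at the end
)

print("Inserting a few sample texts")
# The samples stay in Chinese because piccolo-base-zh is a Chinese model.
# The third one roughly reads: "努力的小雨 is a well-known blogger who runs the WeChat
# official account 【靈墨AI探索室】 and blogs on Juejin, Cnblogs, 51CTO, Tencent Cloud, etc."
vector_db.add_texts([
    "12345678",
    "789",
    "努力的小雨是一個知名博主,其名下有公眾號【靈墨AI探索室】,部落格:稀土掘金、部落格園、51CTO及騰訊雲等",
    "你好啊",  # "Hello there"
    "我不好",  # "I'm not doing well"
])
```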

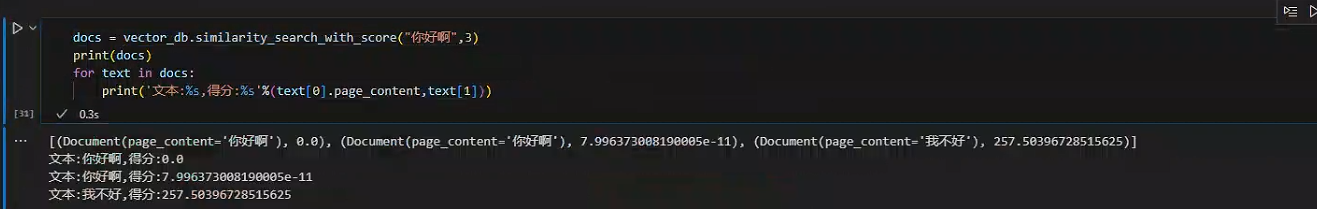

print("查詢前3個最相似的結果")

docs = vector_db.similarity_search_with_score("你好啊",3)

print("檢視其得分情況,分值越低越接近")

for text in docs:

print('文字:%s,得分:%s'%(text[0].page_content,text[1]))
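The scores above are distances rather than similarities. The sketch below assumes the wrapper's default `L2` metric and shows why "lower is closer" holds for distance metrics. A similarity metric such as inner product ranks in the opposite direction. Milvus may report the squared Euclidean distance for `L2`, and as the sketch shows, squaring does not change the ranking.

```python
import math


def l2(a, b):
    """Euclidean distance."""
    return math.dist(a, b)


def l2_squared(a, b):
    """Squared Euclidean distance: skips the square root, same ordering as l2."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def inner_product(a, b):
    return sum(x * y for x, y in zip(a, b))


query = [1.0, 0.0]
docs = {"same": [1.0, 0.0], "near": [0.8, 0.6], "far": [-1.0, 0.0]}

# Distance metrics: a smaller score means a closer match, so sort ascending.
by_l2 = sorted(docs, key=lambda k: l2(query, docs[k]))
by_l2_sq = sorted(docs, key=lambda k: l2_squared(query, docs[k]))
# Similarity metrics such as inner product: a larger score means closer, so sort descending.
by_ip = sorted(docs, key=lambda k: inner_product(query, docs[k]), reverse=True)

print(by_l2)     # ['same', 'near', 'far']
print(by_l2_sq)  # ['same', 'near', 'far']
print(by_ip)     # ['same', 'near', 'far']
```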

Note that the code above is only a minimal example. Adapt and optimize it for your own requirements.

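To run the LangChain version, you need to start the Milvus container in Docker yourself. You also need to be able to reach HuggingFace to download the embedding model. HuggingFace is not hosted in mainland China, so from there this usually means setting up a network proxy. I won't go into the details here.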

Personalized version

Next, let's look at how to call an OpenAI model to answer questions based on the data we retrieve from Milvus!

```python
from dotenv import load_dotenv
from langchain.prompts import (
    ChatPromptTemplate,
    SystemMessagePromptTemplate,
    HumanMessagePromptTemplate,
)
from langchain.chains import LLMChain
from langchain.chat_models.openai import ChatOpenAI
from langchain.schema import BaseOutputParser

# Load the API key (OPENAI_API_KEY) from the .env file into environment variables
load_dotenv()


# Output formatting: split the model's reply into a list
class CommaSeparatedListOutputParser(BaseOutputParser):
    """Parse the output of an LLM call to a comma-separated list."""

    def parse(self, text: str):
        """Parse the output of an LLM call."""
        return text.strip().split(", ")
```

# 先從資料庫查詢問題解

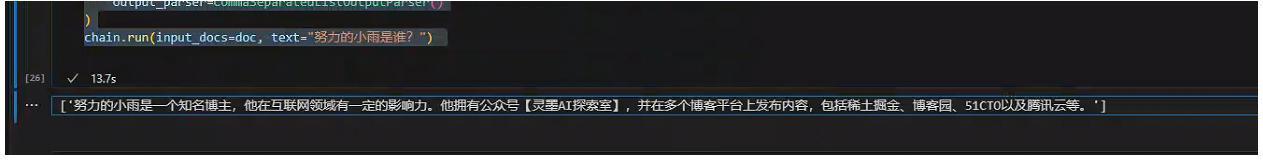

docs = vector_db.similarity_search("努力的小雨是誰?")

doc = docs[0].page_content

chat = ChatOpenAI(model_name='gpt-3.5-turbo', temperature=0)

template = "請根據我提供的資料回答問題,資料: {input_docs}"

system_message_prompt = SystemMessagePromptTemplate.from_template(template)

human_template = "{text}"

human_message_prompt = HumanMessagePromptTemplate.from_template(human_template)

chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])

# chat_prompt.format_messages(input_docs=doc, text="努力的小雨是誰?")

chain = LLMChain(

llm=chat,

prompt=chat_prompt,

output_parser=CommaSeparatedListOutputParser()

)

chain.run(input_docs=doc, text="努力的小雨是誰?")

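Once the code runs successfully, you'll get the answer you expect, as shown in the screenshot above. This is why we retrieve from the knowledge base first: if the system had no access to this information, how could it possibly answer correctly?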
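The flow above comes down to "retrieve, then prompt". Here is a dependency-free sketch of that pattern under two assumptions. `retrieve` is a hypothetical stand-in for `vector_db.similarity_search` that ranks passages by character overlap instead of embeddings. The system prompt is an English translation of the template used above. The output is a list of system/user messages in the shape of the OpenAI chat format.

```python
from typing import Dict, List

# English translation of the system prompt template used in the example above.
SYSTEM_TEMPLATE = "Please answer the question based on the material I provide. Material: {input_docs}"


def retrieve(question: str, corpus: List[str]) -> str:
    """Hypothetical stand-in for vector_db.similarity_search: return the passage
    sharing the most distinct characters with the question (no embeddings)."""
    return max(corpus, key=lambda doc: len(set(doc) & set(question)))


def build_messages(question: str, corpus: List[str]) -> List[Dict[str, str]]:
    """Put the retrieved context in the system message and the question in the user message."""
    context = retrieve(question, corpus)
    return [
        {"role": "system", "content": SYSTEM_TEMPLATE.format(input_docs=context)},
        {"role": "user", "content": question},
    ]


corpus = ["12345678", "789", "努力的小雨是一個知名博主", "你好啊", "我不好"]
for m in build_messages("努力的小雨是誰?", corpus):
    print(m["role"], "->", m["content"])
```

The model only sees what ends up in these messages. If retrieval returns the wrong passage, even a strong model has nothing correct to answer from.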

Summary

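Through this series, we have gained a basic understanding of personal and enterprise knowledge bases. OpenAI already offers options for deploying private knowledge bases, but the cost can be prohibitive for some companies. Whether or not Chinese vendors end up offering personal or enterprise knowledge base solutions, it pays to understand the underlying principles. Whatever your budget, you can find an approach that fits, since different budgets call for different setups.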