How to Optimize Exporting Massive Amounts of Data in PHP

2020-07-16 10:05:32

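When the volume of exported data is very large, generating an Excel file needs a huge amount of memory, more than the server can handle. In that case, consider generating a CSV file instead: CSV is faster to read and write than Excel.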

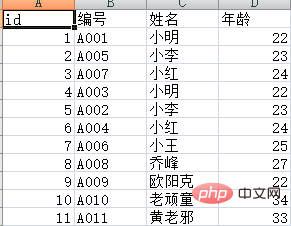

Test table `student` (you can use a script to insert 3 million-plus rows of test data; only a small sample is shown here):

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for student
-- ----------------------------
DROP TABLE IF EXISTS `student`;
CREATE TABLE `student` (
  `ID` int(11) NOT NULL AUTO_INCREMENT,
  `StuNo` varchar(32) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `StuName` varchar(10) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `StuAge` int(11) NULL DEFAULT NULL,
  PRIMARY KEY (`ID`) USING BTREE
) ENGINE = InnoDB AUTO_INCREMENT = 12 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Compact;

-- ----------------------------
-- Records of student
-- ----------------------------
INSERT INTO `student` VALUES (1, 'A001', '小明', 22);
INSERT INTO `student` VALUES (2, 'A005', '小李', 23);
INSERT INTO `student` VALUES (3, 'A007', '小紅', 24);
INSERT INTO `student` VALUES (4, 'A003', '小明', 22);
INSERT INTO `student` VALUES (5, 'A002', '小李', 23);
INSERT INTO `student` VALUES (6, 'A004', '小紅', 24);
INSERT INTO `student` VALUES (7, 'A006', '小王', 25);
INSERT INTO `student` VALUES (8, 'A008', '喬峰', 27);
INSERT INTO `student` VALUES (9, 'A009', '歐陽克', 22);
INSERT INTO `student` VALUES (10, 'A010', '老頑童', 34);
INSERT INTO `student` VALUES (11, 'A011', '黃老邪', 33);

SET FOREIGN_KEY_CHECKS = 1;
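The article leaves the bulk-insert script to the reader. Here is a minimal sketch of one way to do it, assuming the `student` table above. The helper name `buildStudentInsert`, the `A0000001`-style student numbers, the `stu1`-style names, and the batch size of 5,000 are all made up for illustration. It builds multi-row `INSERT` statements so that 3 million rows take a few hundred queries instead of 3 million.

```php
<?php
/**
 * Hypothetical helper (not from the original article): build one multi-row
 * INSERT statement for $count students, starting at sequence number $first.
 * All values are generated here, so no escaping is needed.
 */
function buildStudentInsert(int $first, int $count): string
{
    $values = [];
    for ($n = $first; $n < $first + $count; $n++) {
        $stuNo = sprintf('A%07d', $n); // zero-padded student number
        $age   = 18 + ($n % 20);       // deterministic age between 18 and 37
        // "stu" plus up to 7 digits fits the varchar(10) StuName column
        $values[] = "('{$stuNo}', 'stu{$n}', {$age})";
    }

    return 'INSERT INTO `student` (`StuNo`, `StuName`, `StuAge`) VALUES '
        . implode(', ', $values);
}

// Usage sketch (assumes the connection settings used by export.php):
// $con = mysqli_connect('127.0.0.1', 'root', 'root', 'test');
// for ($first = 1; $first <= 3000000; $first += 5000) {
//     mysqli_query($con, buildStudentInsert($first, 5000));
// }
```

Smaller batches keep each statement well below MySQL's `max_allowed_packet` limit; larger ones need fewer round trips.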

The export script, export.php:

<?php

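set_time_limit(0); // lift PHP's execution time limit for this script
ini_set('memory_limit', '128M');

$fileName = date('YmdHis');

// The rows written below are converted to GBK, so the declared charset is GBK too.
// "Content-Encoding" describes compression (e.g. gzip), not the character set,
// so the original 'Content-Encoding: UTF-8' header has been dropped.
header('Content-Type: application/vnd.ms-excel; charset=GBK');
header('Content-Disposition: attachment; filename="' . $fileName . '.csv"');

// Note: with hundreds of thousands to millions of rows the export may fail with
// "504 Gateway Time-out". If so, raise max_execution_time in php.ini (the
// set_time_limit(0) call above already lifts that limit for this script); the
// timeout of the web server or gateway in front of PHP may need raising too.

// Open PHP's standard output stream in append mode
$fp = fopen('php://output', 'a');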

// Connect to the database
$dbhost = '127.0.0.1';
$dbuser = 'root';
$dbpwd = 'root';

$con = mysqli_connect($dbhost, $dbuser, $dbpwd);
if (mysqli_connect_errno()) {
    die('connect error');
}

$database = 'test'; // select the database
mysqli_select_db($con, $database);

// Set the connection encoding if needed (mysqli_set_charset is preferred over a "SET NAMES" query)
mysqli_set_charset($con, 'utf8');

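// Export up to 1 million rows with fputcsv: fetch 10,000 rows per query and run 100 batches.
// $nums (rows per batch) can be lowered as long as $step (number of batches) is raised to match.
$step = 100;
$nums = 10000;

$where = "where 1=1"; // filter condition; extend as needed

// Header row (id, student number, name, age).
// Note the lowercase "id": if the file starts with uppercase "ID", Excel reports that it
// has detected "xxx.xls" as a SYLK file but cannot load it. This happens whenever the first
// two characters of a CSV or XLS file are the uppercase letters "I" and "D".
$title = array('id', '編號', '姓名', '年齡');
foreach ($title as $key => $item) {
    // Use the same target encoding as the data rows (GBK) so the whole file is consistent
    $title[$key] = iconv("UTF-8", "GBK//IGNORE", $item);
}
fputcsv($fp, $title);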

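for ($s = 1; $s <= $step; $s++) {
    $start = ($s - 1) * $nums;
    $result = mysqli_query($con, "SELECT ID,StuNo,StuName,StuAge FROM `student` " . $where . " ORDER BY `ID` LIMIT {$start},{$nums}");
    if ($result) {
        $fetched = mysqli_num_rows($result);
        while ($row = mysqli_fetch_assoc($result)) {
            foreach ($row as $key => $item) {
                // Convert to GBK, otherwise Excel shows garbled text; the cast guards against NULL columns
                $row[$key] = iconv("UTF-8", "GBK//IGNORE", (string) $item);
            }
            fputcsv($fp, $row);
        }
        mysqli_free_result($result); // free the result set
        // Flush the buffers after every batch of 10,000 rows
        if (ob_get_level() > 0) {
            ob_flush(); // only valid while an output buffer is active
        }
        flush();
        if ($fetched < $nums) {
            break; // last page reached, no need to run the remaining batches
        }
    }
}

mysqli_close($con); // close the connection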
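One further optimization, which is not part of the original script: `LIMIT {$start},{$nums}` makes MySQL read and throw away `$start` rows before returning a page, so later batches get slower as the offset grows. A common alternative is keyset pagination, which remembers the last `ID` written and asks for rows after it. The sketch below shows the idea. The function names `exportByKeyset` and `mysqliPageFetcher` are hypothetical, the GBK conversion is left out for brevity, and the code assumes `ID` is the primary key and PHP 7.4 or later.

```php
<?php
/**
 * Hypothetical sketch: stream all rows to $fp using keyset pagination.
 * $fetchPage(int $lastId, int $limit) must return up to $limit rows with
 * ID greater than $lastId, ordered by ID. Returns the number of rows written.
 */
function exportByKeyset($fp, callable $fetchPage, int $limit): int
{
    $lastId = 0;
    $total  = 0;
    do {
        $rows = $fetchPage($lastId, $limit);
        foreach ($rows as $row) {
            // An empty escape string (allowed since PHP 7.4) disables fputcsv's backslash escaping
            fputcsv($fp, $row, ',', '"', '');
            $lastId = (int) $row['ID']; // the next page starts after this row
            $total++;
        }
    } while (count($rows) === $limit); // a short page means the data is exhausted

    return $total;
}

/**
 * Hypothetical page fetcher for the `student` table. $where is expected to
 * start with "where", as in export.php, so the ID condition can be appended.
 */
function mysqliPageFetcher(mysqli $con, string $where = 'where 1=1'): callable
{
    return function (int $lastId, int $limit) use ($con, $where): array {
        $sql = "SELECT ID,StuNo,StuName,StuAge FROM `student` {$where}"
             . " AND `ID` > {$lastId} ORDER BY `ID` LIMIT {$limit}";
        $result = mysqli_query($con, $sql);
        if (!$result) {
            return []; // treat a failed query as the end of the data
        }
        $rows = mysqli_fetch_all($result, MYSQLI_ASSOC);
        mysqli_free_result($result);
        return $rows;
    };
}

// Usage sketch inside export.php, after the header row has been written:
// exportByKeyset($fp, mysqliPageFetcher($con, $where), 10000);
```

Because each page is found through the primary key index, the cost per batch should stay roughly constant however deep into the table the export is.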
