【論文翻譯】Machine learning: Trends, perspectives, and prospects

論文題目:Machine learning: Trends, perspectives, and prospects

論文來源:Machine learning: Trends, perspectives, and prospects_2015_Science

翻譯人:BDML@CQUT實驗室

Machine learning: Trends, perspectives, and prospects

導讀

Despite practical challenges, we are hopeful that informed discussions among policy-makers and the public about data and the capabilities of machine learning will lead to insightful designs of programs and policies that can balance the goals of protecting privacy and ensuring fairness with those of reaping the benefits to scientific research and to individual and public health. Our commitments to privacy and fairness are evergreen, but our policy choices must adapt to advance them, and support new techniques for deepening our knowledge.

儘管存在實際挑戰,但我們希望決策者之間就公開的數據和機器學習能力進行的知情討論,將導致程式設計的深刻設計,可以在保護隱私達到一個目標平衡,堅信公平將會給科學研究、個人隱私、公共健康帶來益處。 我們對隱私和公平的承諾一直在堅持,但我們的個人決策選擇必須適應不斷髮展的要求,並支援新技術以加深我們的知識。

Abstract

Machine learning addresses the question of how to build computers that improve automatically through experience. It is one of today’s most rapidly growing technical fields, lying at the intersection of computer science and statistics, and at the core of artificial intelligence and data science. Recent progress in machine learning has been driven both by the development of new learning algorithms and theory and by the ongoing explosion in the availability of online data and low-cost computation. The adoption of data-intensive machine-learning methods can be found throughout science, technology and commerce, leading to more evidence-based decision-making across many walks of life, including health care, manufacturing, education, financial modeling, policing, and marketing.

摘要

機器學習解決了如何通過經驗學習自動改進的計算機的問題。 它是當今發展最快的技術領域之一,位於電腦科學和統計學的交匯處,也是人工智慧和數據科學的核心。 機器學習的最新進展既受到新學習演算法和理論的發展的推動,也受到線上數據和低成本計算的不斷髮展的推動。 在科學,技術和商業中可以發現採用了數據密集型機器學習方法,從而導致在醫療,製造,教育,金融建模,警務和市場行銷等衆多領域進行了更多基於證據的決策 。

正文

Machine learning is a discipline focused on two interrelated questions: How can one construct computer systems that automatically improve through experience? and What are the fundamental statistical-computational-information-theoretic laws that govern all learning systems, including computers, humans, and organizations? The study of machine learning is important both for addressing these fundamental scientific and engineering questions and for the highly practical computer software it has produced and fielded across many applications.

機器學習是一門專注於兩個相互關聯的問題的學科:如何構建一個通過經驗自動改進的計算機系統?統計計算資訊理論的基本定律是什麼,支配着所有的學習系統,包括計算機、人類和組織?機器學習的研究對於解決這些基礎科學和工程問題以及它在許多應用中產生和應用的高度實用的計算機軟體都很重要。

Machine learning has progressed dramatically over the past two decades, from laboratory curiosity to a practical technology in widespread commercial use. Within artificial intelligence (AI), machine learning has emerged as the method of choice for developing practical software for computer vision, speech recognition, natural language processing, robot control, and other applications. Many developers of AI systems now recognize that, for many applications, it can be far easier to train a system by showing it examples of desired input-output behavior than to program it manually by anticipating the desired response for all possible inputs. The effect of machine learning has also been felt broadly across computer science and across a range of industries concerned with data-intensive issues, such as consumer services, the diagnosis of faults in complex systems, and the control of logistics chains. There has been a similarly broad range of effects across empirical sciences, from biology to cosmology to social science, as machine-learning methods have been developed to analyze high-throughput experimental data in novel ways. See Fig. 1 for a depiction of some recent areas of application of machine learning.

機器學習在過去的二十年裏取得了巨大的進步,從實驗室的好奇心到商業廣泛應用的實用技術。在人工智慧(AI)中,機器學習已經成爲開發計算機視覺、語音識別、自然語言處理、機器人控制和其他應用的實用軟體的首選方法。許多人工智慧系統的開發人員現在認識到,對於一些應用來說,它能很輕易的去訓練一個系統,只要展示它所期望的輸入輸出行爲比人爲手工程式設計來預計所有可能輸入的反映。在電腦科學以及涉及數據密集型問題的一系列行業中,也廣泛地感受到了機器學習的影響,例如消費者服務,複雜系統中的故障診斷以及物流鏈控制。 從生物學到宇宙學再到社會科學,在整個經驗科學領域都有類似的廣泛影響,因爲已經開發了機器學習方法來以新穎的方式分析高通量實驗數據。 圖1描述了有關機器學習的一些最新應用領域。

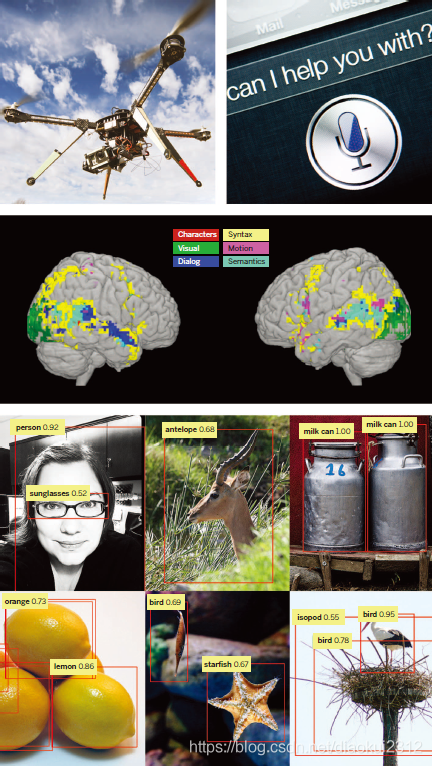

Fig. 1. Applications of machine learning. Machine learning is having a substantial effect on many areas of technology and science; examples of recent applied success stories include robotics and autonomous vehicle control (top left), speech processing and natural language processing (top right), neuroscience research (middle), and applications in computer vision (bottom).

圖1.機器學習的應用。機器學習對技術和科學的許多領域都產生了重大影響。 最近應用的成功案例包括機器人技術和自動駕駛汽車控制(左上),語音處理和自然語言處理(右上),神經科學研究(中)和計算機視覺中的應用(下)。

A learning problem can be defined as the problem of improving some measure of performance when executing some task, through some type of training experience. For example, in learning to detect credit-card fraud, the task is to assign a label of “fraud” or “not fraud” to any given credit-card transaction. The performance metric to be improved might be the accuracy of this fraud classifier, and the training experience might consist of a collection of historical credit-card transactions, each labeled in retrospect as fraudulent or not. Alternatively, one might define a different performance metric that assigns a higher penalty when “fraud” is labeled “not fraud” than when “not fraud” is incorrectly labeled “fraud.” One might also define a different type of training experience—for example, by including unlabeled credit-card transactions along with labeled examples.

學習問題可以定義爲通過某種型別的訓練經驗來提高執行某些任務時的某種效能度量的問題。 例如,在學習檢測信用卡欺詐時,任務是爲任何給定的信用卡交易標記「欺詐」或「非欺詐」標籤。 待改進的效能指標可能是該欺詐分類器的準確性,並且培訓經驗可能包括歷史信用卡交易的集合,每筆交易在回溯中都被標記爲欺詐或不欺詐。 或者,可以定義一個不同的效能指標,當「欺詐」被標記爲「非欺詐」時,比「非欺詐」被錯誤地標記爲「欺詐」時,受到更高的懲罰。 還可以定義一種不同類型的訓練實驗,例如,通過包括未標記的信用卡交易以及標記的範例。
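The two performance metrics described above can be compared on a toy example. This is a minimal sketch: the labels, the two prediction lists, and the cost values (`miss_cost`, `false_alarm_cost`) are illustrative assumptions, not figures from the paper.

```python
def accuracy(y_true, y_pred):
    """Fraction of transactions whose predicted label matches the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def weighted_cost(y_true, y_pred, miss_cost=10.0, false_alarm_cost=1.0):
    """Average penalty per transaction; a missed fraud costs more than a false alarm.

    The default cost values are assumptions chosen only for illustration.
    """
    total = 0.0
    for t, p in zip(y_true, y_pred):
        if t == "fraud" and p == "not fraud":
            total += miss_cost
        elif t == "not fraud" and p == "fraud":
            total += false_alarm_cost
    return total / len(y_true)


# Hypothetical ground truth and two classifiers that each make one mistake.
y_true = ["fraud", "not fraud", "not fraud", "fraud", "not fraud"]
misses_fraud = ["not fraud", "not fraud", "not fraud", "fraud", "not fraud"]
false_alarm = ["fraud", "fraud", "not fraud", "fraud", "not fraud"]

print(accuracy(y_true, misses_fraud), accuracy(y_true, false_alarm))
print(weighted_cost(y_true, misses_fraud), weighted_cost(y_true, false_alarm))
```

Both classifiers have the same accuracy, but the asymmetric metric penalizes the one that misses a fraud more heavily, so the choice of metric changes which classifier counts as better.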

A diverse array of machine-learning algorithms has been developed to cover the wide variety of data and problem types exhibited across different machine-learning problems (1, 2). Conceptually, machine-learning algorithms can be viewed as searching through a large space of candidate programs, guided by training experience, to find a program that optimizes the performance metric. Machine-learning algorithms vary greatly, in part by the way in which they represent candidate programs (e.g., decision trees, mathematical functions, and general programming languages) and in part by the way in which they search through this space of programs (e.g., optimization algorithms with well-understood convergence guarantees and evolutionary search methods that evaluate successive generations of randomly mutated programs). Here, we focus on approaches that have been particularly successful to date.

如今已經開發了各種各樣的機器學習演算法,以涵蓋跨越不同機器學習問題(1、2)展示的各種數據和問題型別。 從概念上講,機器學習演算法可以看作是在訓練經驗的指導下搜尋大量候選程式,以找到優化效能指標的程式。 機器學習演算法的變化很大,部分原因是它們表示候選程式的方式(例如決策樹,數學函數和通用程式語言),部分原因是它們在程式的空間中進行搜尋的方式(例如, 具有易於理解的收斂保證的優化演算法和評估隨機變異程式的連續生成的進化搜尋方法)。 在這裏,我們將重點放在迄今爲止特別成功的方法上。

Many algorithms focus on function approximation problems, where the task is embodied in a function (e.g., given an input transaction, output a “fraud” or “not fraud” label), and the learning problem is to improve the accuracy of that function, with experience consisting of a sample of known input-output pairs of the function. In some cases, the function is represented explicitly as a parameterized functional form; in other cases, the function is implicit and obtained via a search process, a factorization, an optimization procedure, or a simulation-based procedure. Even when implicit, the function generally depends on parameters or other tunable degrees of freedom, and training corresponds to finding values for these parameters that optimize the performance metric.

許多演算法關注函數逼近問題,其中任務體現在函數中(例如,給定交易輸入,輸出「欺詐」或「非欺詐」標籤),學習問題是提高該函數的準確性,經驗具有由函數的已知輸入/輸出對組成的樣本。 在某些情況下,函數被明確表示爲參數化函數形式。 在其他情況下,該函數是隱式的,可以通過搜尋過程,分解,優化過程或基於模擬的過程來獲取。 即使是隱式的,這個函數依靠參數或者其它的自由可排程,和訓練去發現這些參數對應的值方法,來優化效能指標。
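As a rough illustration of training as a search over parameter values, the sketch below uses a one-parameter function (a threshold on the transaction amount) and picks the threshold that maximizes accuracy on labeled examples. The feature, the data, and the candidate thresholds are all hypothetical.

```python
def predict(amount, threshold):
    """Parameterized function: flag a transaction when its amount exceeds the threshold."""
    return "fraud" if amount > threshold else "not fraud"


def fit_threshold(examples, candidates):
    """Training: return the candidate threshold with the highest accuracy on the examples."""
    def accuracy(threshold):
        hits = sum(1 for amount, label in examples if predict(amount, threshold) == label)
        return hits / len(examples)
    return max(candidates, key=accuracy)


# Hypothetical labeled sample of (amount, label) input-output pairs.
examples = [(12.0, "not fraud"), (40.0, "not fraud"), (75.0, "not fraud"),
            (300.0, "fraud"), (520.0, "fraud"), (90.0, "not fraud")]
best = fit_threshold(examples, candidates=[10.0, 50.0, 100.0, 400.0])
print(best, predict(250.0, best))
```

Real systems have many more parameters and replace the exhaustive search with an optimization procedure, but the structure is the same: a parameterized function, a performance metric, and a search for the best parameter values.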

Whatever the learning algorithm, a key scientific and practical goal is to theoretically characterize the capabilities of specific learning algorithms and the inherent difficulty of any given learning problem: How accurately can the algorithm learn from a particular type and volume of training data? How robust is the algorithm to errors in its modeling assumptions or to errors in the training data? Given a learning problem with a given volume of training data, is it possible to design a successful algorithm or is this learning problem fundamentally intractable? Such theoretical characterizations of machine-learning algorithms and problems typically make use of the familiar frameworks of statistical decision theory and computational complexity theory. In fact, attempts to characterize machine-learning algorithms theoretically have led to blends of statistical and computational theory in which the goal is to simultaneously characterize the sample complexity (how much data are required to learn accurately) and the computational complexity (how much computation is required) and to specify how these depend on features of the learning algorithm such as the representation it uses for what it learns (3–6). A specific form of computational analysis that has proved particularly useful in recent years has been that of optimization theory, with upper and lower bounds on rates of convergence of optimization procedures merging well with the formulation of machine-learning problems as the optimization of a performance metric (7, 8).

無論採用哪種學習演算法,關鍵的科學和實踐目標都是從理論上描述特定學習演算法的能力和任何給定學習問題的固有困難:該演算法如何從特定型別和數量的訓練數據中學習的準確? 該演算法如何解決其建模假設中的錯誤或訓練數據中的錯誤?給定具有一定數量的訓練數據的學習問題,是否有可能設計一個成功的演算法,或者此學習問題從根本上是棘手的?機器學習演算法和問題的這種理論表徵通常利用統計決策理論和計算複雜性理論的熟悉框架。實際上,從理論上描述機器學習演算法的嘗試已導致統計和計算理論的融合,其目的是同時描述樣本複雜度(需要多少數據才能 纔能準確學習)和計算複雜度(需要多少計算量),並指定這些內容如何依賴於學習演算法的特徵,例如特徵可以用於它的學習(3-6)。最近幾年證明特別有用的一種特殊形式的計算分析是優化理論,優化程式的收斂速度的上限和下限與作爲效能指標優化的機器學習問題的公式很好地融合在一起(7、8)。

As a field of study, machine learning sits at the crossroads of computer science, statistics and a variety of other disciplines concerned with automatic improvement over time, and inference and decision-making under uncertainty. Related disciplines include the psychological study of human learning, the study of evolution, adaptive control theory, the study of educational practices, neuroscience, organizational behavior, and economics. Although the past decade has seen increased crosstalk with these other fields, we are just beginning to tap the potential synergies and the diversity of formalisms and experimental methods used across these multiple fields for studying systems that improve with experience.

作爲一個研究領域,機器學習處於電腦科學,統計學和其他各種學科的十字路口,這些學科涉及與時俱進的自動改進以及不確定性下的推理和決策。相關學科包括人類學習的心理學研究,進化研究,自適應控制理論,教育實踐研究,神經科學,組織行爲學和經濟學。 儘管在過去的十年中,與其他領域的交叉有所增加,但我們纔剛剛開始挖掘潛在的協同作用,以及在這些多個領域中使用的形式主義和實驗方法的多樣性,以研究隨經驗而改進的系統。

Drivers of machine-learning progress

The past decade has seen rapid growth in the ability of networked and mobile computing systems to gather and transport vast amounts of data, a phenomenon often referred to as “Big Data.” The scientists and engineers who collect such data have often turned to machine learning for solutions to the problem of obtaining useful insights, predictions, and decisions from such data sets. Indeed, the sheer size of the data makes it essential to develop scalable procedures that blend computational and statistical considerations, but the issue is more than the mere size of modern data sets; it is the granular, personalized nature of much of these data. Mobile devices and embedded computing permit large amounts of data to be gathered about individual humans, and machine-learning algorithms can learn from these data to customize their services to the needs and circumstances of each individual. Moreover, these personalized services can be connected, so that an overall service emerges that takes advantage of the wealth and diversity of data from many individuals while still customizing to the needs and circumstances of each. Instances of this trend toward capturing and mining large quantities of data to improve services and productivity can be found across many fields of commerce, science, and government. Historical medical records are used to discover which patients will respond best to which treatments; historical traffic data are used to improve traffic control and reduce congestion; historical crime data are used to help allocate local police to specific locations at specific times; and large experimental data sets are captured and curated to accelerate progress in biology, astronomy, neuroscience, and other data-intensive empirical sciences. We appear to be at the beginning of a decades-long trend toward increasingly data-intensive, evidence-based decision-making across many aspects of science, commerce, and government.

機器學習進度的驅動因素

在過去的十年中,網路和移動計算系統收集和傳輸大量數據的能力迅速增長,這種現象通常被稱爲「大數據」。 收集此類數據的科學家和工程師經常轉向機器學習,以解決從此類數據集獲得有用的見解,預測和決策的問題。 確實,龐大的數據量對於開發融合了計算和統計考慮因素的可延伸程式至關重要,但是問題不僅僅在於現代數據集的大小。 這是許多數據的細化,個性化性質所導致的。 移動裝置和嵌入式計算允許收集大量有關個人的數據,並且機器學習演算法可以從這些數據中學習以根據每個人的需求和情況定製其服務。 此外,這些個性化服務可以連線在一起,從而使整體服務出現,該服務可以利用來自許多個人的豐富數據和多樣性,同時仍可以根據每個人的需求和情況進行定製。 在商業,科學和政府的許多領域中都可以找到這種趨勢,即捕獲和挖掘大量數據以改善服務和生產率的趨勢。 使用歷史病歷來發現哪些患者對哪種治療最有效; 歷史交通數據用於改善交通控制和減少擁堵; 歷史犯罪數據用於幫助在特定時間將當地警察分配到特定地點; 並收集和整理大型實驗數據集,以加快生物學,天文學,神經科學和其他數據密集型經驗科學的進展。 我們似乎正處於數十年來的趨勢的開始,這種趨勢是在科學,商業和政府的許多方面進行越來越多的數據密集型,基於證據的決策。

With the increasing prominence of large-scale data in all areas of human endeavor has come a wave of new demands on the underlying machine-learning algorithms. For example, huge data sets require computationally tractable algorithms, highly personal data raise the need for algorithms that minimize privacy effects, and the availability of huge quantities of unlabeled data raises the challenge of designing learning algorithms to take advantage of it. The next sections survey some of the effects of these demands on recent work in machine-learning algorithms, theory, and practice.

隨着大規模數據在人類努力的各個領域中日益重要,已經對底層的機器學習演算法提出了新的要求。 例如,海量數據集需要計算上容易處理的演算法,高度個人化的數據提出了對最大限度減少隱私影響的演算法的需求,而大量未標記數據的可用性提出了設計學習演算法以利用它的挑戰。 下一部分將調查這些需求對機器學習演算法,理論和實踐中的最新工作的影響。

Core methods and recent progress

The most widely used machine-learning methods are supervised learning methods (1). Supervised learning systems, including spam classifiers of e-mail, face recognizers over images, and medical diagnosis systems for patients, all exemplify the function approximation problem discussed earlier, where the training data take the form of a collection of (x, y) pairs and the goal is to produce a prediction y* in response to a query x*. The inputs x may be classical vectors or they may be more complex objects such as documents, images, DNA sequences, or graphs. Similarly, many different kinds of output y have been studied. Much progress has been made by focusing on the simple binary classification problem in which y takes on one of two values (for example, “spam” or “not spam”), but there has also been abundant research on problems such as multiclass classification (where y takes on one of K labels), multilabel classification (where y is labeled simultaneously by several of the K labels), ranking problems (where y provides a partial order on some set), and general structured prediction problems (where y is a combinatorial object such as a graph, whose components may be required to satisfy some set of constraints). An example of the latter problem is part-of-speech tagging, where the goal is to simultaneously label every word in an input sentence x as being a noun, verb, or some other part of speech. Supervised learning also includes cases in which y has real-valued components or a mixture of discrete and real-valued components.

核心方法及近期進展

最廣泛使用的機器學習方法是監督學習方法(1)。 有監督的學習系統,包括電子郵件的垃圾郵件分類器,影象上的人臉識別器以及患者的醫療診斷系統,都說明了前面討論的函數逼近問題,其中訓練數據採用(x,y)對的集合形式 並且目標是響應查詢x*生成預測 y *。輸入x可以是經典向量,也可以是更復雜的物件,例如文件,影象,DNA序列或圖形。 類似地,許多不同種類的輸出y也被研究過。 通過關注y取兩個值之一(例如「垃圾郵件」或「非垃圾郵件」)的簡單二進制分類問題,已經取得了很大的進步,但是對於諸如多類分類( 其中y代表K個標籤中的一個),多標籤分類(其中y由K個標籤中的幾個同時標記),排序問題(其中y在某些集合上提供部分順序)和一般的結構化預測問題(其中y是一個組合物件例如圖形,可能需要其元件滿足某些約束集)也進行了大量的研究。後一個問題的一個範例是詞性標記,其中的目的是同時將輸入句子x中的每個單詞標記爲名詞,動詞或其他詞性。 監督學習還包括y具有實值分量或離散分量和實值分量混合的情況。

Supervised learning systems generally form their predictions via a learned mapping f(x), which produces an output y for each input x (or a probability distribution over y given x). Many different forms of mapping f exist, including decision trees, decision forests, logistic regression, support vector machines, neural networks, kernel machines, and Bayesian classifiers (1). A variety of learning algorithms has been proposed to estimate these different types of mappings, and there are also generic procedures such as boosting and multiple kernel learning that combine the outputs of multiple learning algorithms. Procedures for learning f from data often make use of ideas from optimization theory or numerical analysis, with the specific form of machine-learning problems (e.g., that the objective function or function to be integrated is often the sum over a large number of terms) driving innovations. This diversity of learning architectures and algorithms reflects the diverse needs of applications, with different architectures capturing different kinds of mathematical structures, offering different levels of amenability to post-hoc visualization and explanation, and providing varying trade-offs between computational complexity, the amount of data, and performance.

監督學習系統通常通過學習對映f(x)形成預測,該對映爲每個輸入x生成輸出y(或給定x上y的概率分佈)。存在許多不同形式的對映f,包括決策樹,決策森林,邏輯迴歸,支援向量機,神經網路,核機和貝葉斯分類器(1)。已經提出了多種學習演算法來估計這些不同類型的對映,並且還存在諸如boost和多核學習之類的通用過程,其結合了多種學習演算法的輸出。從數據中學習f的過程通常利用優化理論或數值分析中的思想,並以特定形式的機器學習問題(例如,目標函數或要整合的函數通常是大量術語的總和)來推動創新 。學習架構和演算法的多樣性反映了應用程式的不同需求,不同的架構捕獲了不同種類的數學結構,爲事後的視覺化和解釋提供了不同級別的適應性,並在計算複雜度, 數據和效能提供了不同的權衡。
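One of the mappings listed above, logistic regression, can be sketched in a few lines. The example below is a hedged illustration with a single input feature: it fits f(x) = sigmoid(w·x + b) by gradient descent on the average log loss, an objective that is a sum over training examples. The data, learning rate, and epoch count are assumptions chosen for the demonstration.

```python
import math


def sigmoid(z):
    """Logistic function mapping a real score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=0.5, epochs=2000):
    """Fit w and b of p(y=1 | x) = sigmoid(w*x + b) by full-batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the average log loss; each term comes from one training pair.
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical one-dimensional training set of (x, y) pairs with binary labels.
xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic(xs, ys)
print(sigmoid(w * 2.0 + b), sigmoid(w * -2.0 + b))
```

The learned mapping returns a probability distribution over y given x rather than a hard label; thresholding that probability recovers a classifier.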

One high-impact area of progress in supervised learning in recent years involves deep networks, which are multilayer networks of threshold units, each of which computes some simple parameterized function of its inputs (9, 10). Deep learning systems make use of gradient-based optimization algorithms to adjust parameters throughout such a multilayered network based on errors at its output. Exploiting modern parallel computing architectures, such as graphics processing units originally developed for video gaming, it has been possible to build deep learning systems that contain billions of parameters and that can be trained on the very large collections of images, videos, and speech samples available on the Internet. Such large-scale deep learning systems have had a major effect in recent years in computer vision (11) and speech recognition (12), where they have yielded major improvements in performance over previous approaches (see Fig. 2). Deep network methods are being actively pursued in a variety of additional applications from natural language translation to collaborative filtering.

近年來,在監督學習中一個具有重大影響的領域涉及深層網路,它是閾值單元的多層網路,每個網路都計算其輸入的一些簡單參數化函數(9、10)。深度學習系統利用基於梯度的優化演算法,基於輸出處的誤差來調整整個多層網路中的參數。利用現代並行計算結構,例如最初爲視訊遊戲開發的圖形處理單元,就可以構建包含數十億個參數的深度學習系統,並且可以在網上對大量可用的影象,視訊和語音樣本進行訓練。近年來,這樣的大規模深度學習系統在計算機視覺(11)和語音識別(12)中產生了重大影響,與以前的方法相比,它們在效能方面產生了重大改進(見圖2)。 從自然語言翻譯到共同作業過濾,各種其他應用程式都在積極地追求深度網路方法。
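The idea of adjusting parameters throughout a multilayer network based on errors at its output can be shown at toy scale. The sketch below is an assumption-laden miniature, not the large GPU-trained systems the paper describes: a two-layer network of sigmoid units trained with per-example gradient steps on the XOR function, using made-up hyperparameters.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Two-layer network: a hidden layer of sigmoid units, then one sigmoid output unit."""
    w1, b1, w2, b2 = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)
    return hidden, output


def mean_squared_error(params, data):
    return sum((forward(params, x)[1] - y) ** 2 for x, y in data) / len(data)


def train(data, hidden_units=3, lr=0.5, epochs=2000, seed=0):
    """Backpropagation with per-example updates; epochs=0 returns the initial parameters."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in data[0][0]] for _ in range(hidden_units)]
    b1 = [0.0] * hidden_units
    w2 = [rng.uniform(-1, 1) for _ in range(hidden_units)]
    b2 = 0.0
    for _ in range(epochs):
        for x, y in data:
            hidden, out = forward((w1, b1, w2, b2), x)
            # Error signal at the output, then propagated back to each hidden unit.
            delta_out = (out - y) * out * (1.0 - out)
            delta_hidden = [delta_out * w2[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(hidden_units)]
            for j in range(hidden_units):
                w2[j] -= lr * delta_out * hidden[j]
                b1[j] -= lr * delta_hidden[j]
                for i in range(len(x)):
                    w1[j][i] -= lr * delta_hidden[j] * x[i]
            b2 -= lr * delta_out
    return w1, b1, w2, b2


XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
initial = train(XOR, epochs=0)
trained = train(XOR)
print(mean_squared_error(initial, XOR), mean_squared_error(trained, XOR))
```

How closely the trained network fits XOR depends on the random initialization and the hyperparameters; the point of the sketch is only the mechanism of propagating the output error back through the layers.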

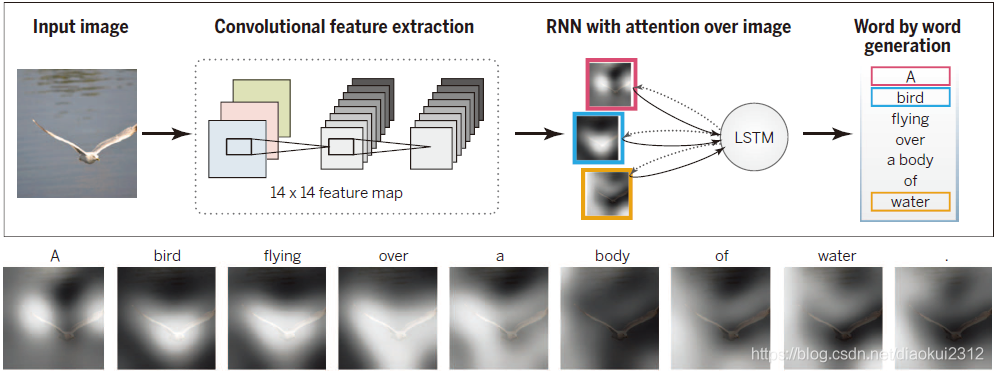

Fig. 2. Automatic generation of text captions for images with deep networks. A convolutional neural network is trained to interpret images, and its output is then used by a recurrent neural network trained to generate a text caption (top). The sequence at the bottom shows the word-by-word focus of the network on different parts of input image while it generates the caption word-by-word.

圖2.自動生成具有深層網路影象的文字標題。折積神經網路經過訓練以解釋影象,然後其輸出被訓練爲生成文字標題的回圈神經網路使用(頂部)。底部的序列顯示了網路在輸入影象的不同部分上的逐字聚焦,同時它逐字生成了標題。

The internal layers of deep networks can be viewed as providing learned representations of the input data. While much of the practical success in deep learning has come from supervised learning methods for discovering such representations, efforts have also been made to develop deep learning algorithms that discover useful representations of the input without the need for labeled training data (13). The general problem is referred to as unsupervised learning, a second paradigm in machine-learning research (2).

可以將深度網路的內部層視爲提供輸入數據學習表示形式。雖然深度學習的許多實際成功都來自用於發現此類表示形式的監督學習方法,但人們仍在努力開發深度學習演算法,該演算法可發現輸入的有用表示形式,而無需標記的訓練數據(13)。一般問題被稱爲無監督學習,這是機器學習研究中的第二個範式(2)。

Broadly, unsupervised learning generally involves the analysis of unlabeled data under assumptions about structural properties of the data (e.g., algebraic, combinatorial, or probabilistic). For example, one can assume that data lie on a low-dimensional manifold and aim to identify that manifold explicitly from data. Dimension reduction methods—including principal components analysis, manifold learning, factor analysis, random projections, and autoencoders (1, 2)—make different specific assumptions regarding the underlying manifold (e.g., that it is a linear subspace, a smooth nonlinear manifold, or a collection of submanifolds). Another example of dimension reduction is the topic modeling framework depicted in Fig. 3. A criterion function is defined that embodies these assumptions—often making use of general statistical principles such as maximum likelihood, the method of moments, or Bayesian integration—and optimization or sampling algorithms are developed to optimize the criterion. As another example, clustering is the problem of finding a partition of the observed data (and a rule for predicting future data) in the absence of explicit labels indicating a desired partition. A wide range of clustering procedures has been developed, all based on specific assumptions regarding the nature of a “cluster.” In both clustering and dimension reduction, the concern with computational complexity is paramount, given that the goal is to exploit the particularly large data sets that are available if one dispenses with supervised labels.

廣義地講,無監督學習通常涉及在有關數據結構特性的假設(例如代數,組合或概率)的前提下對未標記數據進行分析。例如,可以假設數據位於低維流形上,並旨在從數據中明確標識該流形。降維方法(包括主成分分析,流形學習,因子分析,隨機投影和自動編碼器(1、2))對底層流形做出了不同的特定假設(例如,它是線性子空間,平滑非線性流形或子流形的集合)。降維的另一個範例是圖3中描述的主題建模框架。定義了一個標準函數來體現這些假設(通常利用一般的統計原理,例如最大似然,矩量法或貝葉斯積分)以及優化或開發了採樣演算法以優化標準。作爲另一範例,聚類是在缺少指示期望分割區的顯式標籤的情況下找到觀測數據的分割區(以及用於預測未來數據的規則)的問題。 根據有關「叢集」性質的特定假設,已經開發了各種各樣的叢集程式。在聚類和降維方面,考慮到目標是要利用特別大的數據集(如果人們放棄了監督標籤的話),那麼對計算複雜性的關注是至關重要的。
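Clustering without labels can be illustrated with k-means (Lloyd's algorithm), one common procedure among the many the paper alludes to; the paper itself does not single it out. The sketch assumes that a "cluster" is a group of points close to a shared center, and uses hypothetical one-dimensional data with hand-picked starting centers.

```python
def kmeans_1d(points, centers, iterations=20):
    """Lloyd's algorithm in one dimension, starting from the given centers."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda k: abs(p - centers[k]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster (keep it if empty).
        centers = [sum(c) / len(c) if c else centers[k] for k, c in enumerate(clusters)]
    return centers, clusters


# Hypothetical unlabeled observations forming two well-separated groups.
points = [1.0, 1.2, 0.8, 9.0, 9.5, 10.5]
centers, clusters = kmeans_1d(points, centers=[0.0, 5.0])
print(centers, clusters)
```

The returned centers also give a rule for future data: a new point is assigned to the cluster whose center is nearest.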

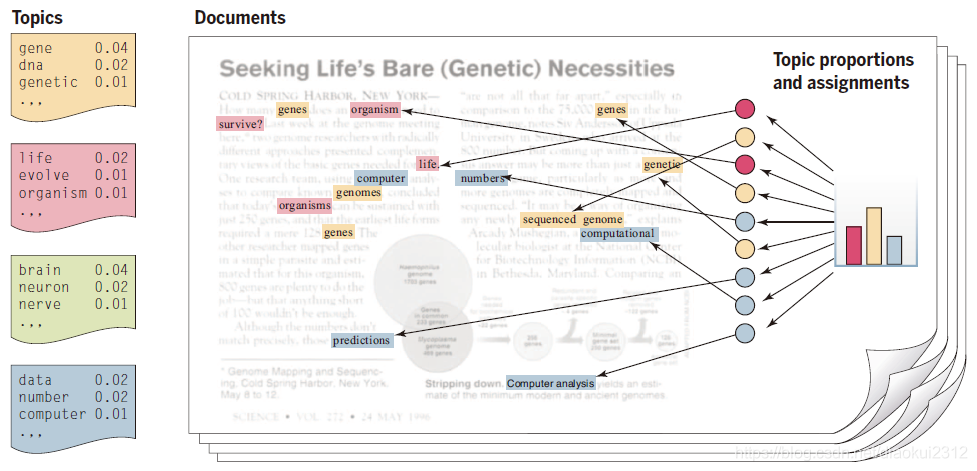

Fig. 3. Topic models. Topic modeling is a methodology for analyzing documents, where a document is viewed as a collection of words, and the words in the document are viewed as being generated by an underlying set of topics (denoted by the colors in the figure). Topics are probability distributions across words (leftmost column), and each document is characterized by a probability distribution across topics (histogram). These distributions are inferred based on the analysis of a collection of documents and can be viewed to classify, index, and summarize the content of documents.

圖3.主題模型。 主題建模是一種用於分析文件的方法,其中文件被視爲單詞的集合,文件中的單詞被視爲由基礎主題集(由圖中的顏色表示)生成。主題是單詞之間的概率分佈(最左列),每個文件的特徵是主題之間的概率分佈(直方圖)。這些分佈是根據對文件集合的分析得出的,可以檢視這些分佈以對文件的內容進行分類,索引和彙總。

A third major machine-learning paradigm is reinforcement learning (14, 15). Here, the information available in the training data is intermediate between supervised and unsupervised learning. Instead of training examples that indicate the correct output for a given input, the training data in reinforcement learning are assumed to provide only an indication as to whether an action is correct or not; if an action is incorrect, there remains the problem of finding the correct action. More generally, in the setting of sequences of inputs, it is assumed that reward signals refer to the entire sequence; the assignment of credit or blame to individual actions in the sequence is not directly provided. Indeed, although simplified versions of reinforcement learning known as bandit problems are studied, where it is assumed that rewards are provided after each action, reinforcement learning problems typically involve a general control-theoretic setting in which the learning task is to learn a control strategy (a “policy”) for an agent acting in an unknown dynamical environment, where that learned strategy is trained to choose actions for any given state, with the objective of maximizing its expected reward over time. The ties to research in control theory and operations research have increased over the years, with formulations such as Markov decision processes and partially observed Markov decision processes providing points of contact (15, 16). Reinforcement-learning algorithms generally make use of ideas that are familiar from the control-theory literature, such as policy iteration, value iteration, rollouts, and variance reduction, with innovations arising to address the specific needs of machine learning (e.g., large-scale problems, few assumptions about the unknown dynamical environment, and the use of supervised learning architectures to represent policies). It is also worth noting the strong ties between reinforcement learning and many decades of work on learning in psychology and neuroscience, one notable example being the use of reinforcement learning algorithms to predict the response of dopaminergic neurons in monkeys learning to associate a stimulus light with subsequent sugar reward (17).

第三個主要的機器學習範式是強化學習(14,15)。在這裏,訓練數據中可用的資訊是有監督學習和無監督學習之間的中間資訊。強化學習中的訓練數據不是指示給定輸入的正確輸出的訓練範例,而是假設只提供一個動作是否正確的指示;如果一個動作不正確,則仍然存在找到正確動作的問題。更一般地說,在輸入序列的設定中,假定獎勵信號指的是整個序列;在序列中,對單個行爲的信任或責備並不直接提供。事實上,雖然研究了強化學習的簡化版本bandit問題,其中假設在每個動作之後提供獎勵,但是強化學習問題通常涉及一個一般的控制理論設定,其中學習任務是爲代理學習控制策略(「策略」)一種未知的動態環境,在這種環境中,學習到的策略被訓練爲針對任何給定狀態選擇動作,目標是隨着時間的推移使其預期回報最大化。近年來,控制理論和運籌學研究的聯繫日益密切,馬爾可夫決策過程和部分觀察的馬爾可夫決策過程等公式提供了聯繫點(15,16)。強化學習演算法通常使用控制理論文獻中熟悉的思想,如策略迭代、值迭代、展開和方差縮減,並通過創新來解決機器學習的特定需求(例如,大規模問題,很少假設未知的動態環境,使用有監督的學習架構來表示策略)。同樣值得注意的是,強化學習與心理學和神經科學數十年的學習工作之間有着密切的聯繫,其中一個顯著的例子是使用強化學習演算法來預測猴子學習將刺鐳射與隨後的糖獎賞聯繫起來的多巴胺能神經元的反應(17)。
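Value iteration, one of the control-theory ideas named above, can be sketched on a tiny Markov decision process. Unlike the reinforcement learning setting, this sketch assumes the environment's dynamics are fully known; the four-state chain, the reward of 1 for reaching the last state, and the discount factor are all hypothetical.

```python
def value_iteration(n_states=4, gamma=0.9, tol=1e-9):
    """Value iteration on a deterministic chain whose last state is terminal.

    Moving into the terminal state yields reward 1; every other move yields 0.
    """
    actions = {"left": -1, "right": +1}
    terminal = n_states - 1

    def step(state, action):
        nxt = min(max(state + actions[action], 0), terminal)
        reward = 1.0 if nxt == terminal else 0.0
        return nxt, reward

    values = [0.0] * n_states
    while True:
        delta = 0.0
        for s in range(terminal):  # the terminal state keeps value 0
            best = max(r + gamma * values[n] for n, r in (step(s, a) for a in actions))
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break

    # Greedy policy: in each state, pick the action with the best one-step lookahead.
    policy = []
    for s in range(terminal):
        def lookahead(a, s=s):
            nxt, reward = step(s, a)
            return reward + gamma * values[nxt]
        policy.append(max(actions, key=lookahead))
    return values, policy


values, policy = value_iteration()
print(values, policy)
```

The discounted values show how a single delayed reward is credited back along the sequence of actions that leads to it, which is the credit-assignment problem the paragraph describes.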

Although these three learning paradigms help to organize ideas, much current research involves blends across these categories. For example, semisupervised learning makes use of unlabeled data to augment labeled data in a supervised learning context, and discriminative training blends architectures developed for unsupervised learning with optimization formulations that make use of labels. Model selection is the broad activity of using training data not only to fit a model but also to select from a family of models, and the fact that training data do not directly indicate which model to use leads to the use of algorithms developed for bandit problems and to Bayesian optimization procedures. Active learning arises when the learner is allowed to choose data points and query the trainer to request targeted information, such as the label of an otherwise unlabeled example. Causal modeling is the effort to go beyond simply discovering predictive relations among variables, to distinguish which variables causally influence others (e.g., a high white-blood-cell count can predict the existence of an infection, but it is the infection that causes the high white-cell count). Many issues influence the design of learning algorithms across all of these paradigms, including whether data are available in batches or arrive sequentially over time, how data have been sampled, requirements that learned models be interpretable by users, and robustness issues that arise when data do not fit prior modeling assumptions.

儘管這三種學習範式有助於組織思想,但當前許多研究涉及這些類別的融合。例如,半監督學習在監督學習的上下文中利用未標記的數據來增強標記的數據,而判別訓練則將爲無監督學習而開發的體系結構與利用標籤的優化公式相結合。選擇模型是一項廣泛的活動,不僅使用訓練數據來擬合模型,而且還可以從一系列模型中進行選擇,並且訓練數據沒有直接指示要使用哪個模型,這一事實導致了針對bandit問題開發的演算法和貝葉斯優化程式。當允許學習者選擇數據點並查詢訓練者以請求有針對性的資訊(例如未標記數據的標籤)時,就會出現主動學習。 因果建模努力去解決變數之間的預測關係,還可以區分哪些變數對其他變數有因果關係(例如,一個白細胞數量能夠影響疾病的存在,但是是疾病導致了白細胞數量)。許多問題都會影響所有這些範式中學習演算法的設計,包括數據是成批提供還是隨時間順序到達,數據是如何採樣的,對使用者可解釋的學習模型的要求以及數據完成後不符合先前的建模假設出現的健壯性問題。

Emerging trends

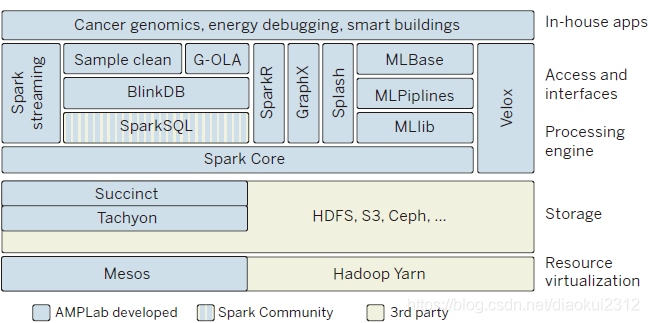

The field of machine learning is sufficiently young that it is still rapidly expanding, often by inventing new formalizations of machine-learning problems driven by practical applications. (An example is the development of recommendation systems, as described in Fig. 4.) One major trend driving this expansion is a growing concern with the environment in which a machine-learning algorithm operates. The word “environment” here refers in part to the computing architecture; whereas a classical machine-learning system involved a single program running on a single machine, it is now common for machine-learning systems to be deployed in architectures that include many thousands or tens of thousands of processors, such that communication constraints and issues of parallelism and distributed processing take center stage. Indeed, as depicted in Fig. 5, machine-learning systems are increasingly taking the form of complex collections of software that run on large-scale parallel and distributed computing platforms and provide a range of algorithms and services to data analysts.

新趨勢

機器學習領域還很年輕,以致它仍在迅速擴充套件,通常是通過發明由實際應用驅動的機器學習問題的新形式來實現的。 (如圖4所示是推薦系統的開發的例子。)推動這種發展的一個主要趨勢是人們對機器學習演算法執行環境的關注日益增長。 這裏的「環境」一詞是指計算架構。 傳統的機器學習系統涉及在單個機器上執行的單個程式,但是現在,將機器學習系統部署在包含成千上萬個處理器的體系結構中非常普遍,從而導致通訊限制和並行性和分佈式處理問題成爲焦點。確實,如圖5所示,機器學習系統越來越多地採用複雜軟體集合的形式,這些軟體在大規模並行和分佈式計算平臺上執行,併爲數據分析人員提供一系列演算法和服務。

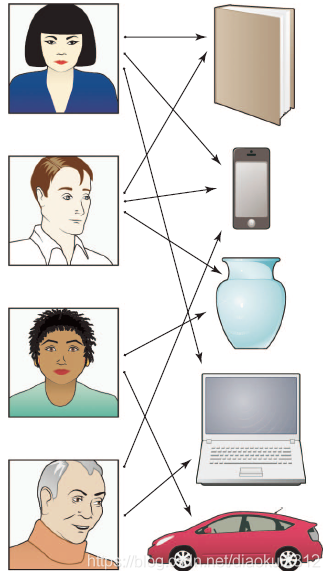

Fig. 4. Recommendation systems. A recommendation system is a machine-learning system that is based on data that indicate links between a set of users (e.g., people) and a set of items (e.g., products). A link between a user and a product means that the user has indicated an interest in the product in some fashion (perhaps by purchasing that item in the past). The machine-learning problem is to suggest other items to a given user that he or she may also be interested in, based on the data across all users.

圖4.推薦系統。推薦系統是一種機器學習系統,其基於表明一組使用者(例如,人)和一組專案(例如,產品)之間的聯繫的數據。使用者與產品之間的聯繫意味着使用者已經以某種方式(可能是過去購買該產品)表明對該產品感興趣。機器學習問題是根據所有使用者的數據向給定的使用者建議他或她可能也會對此感興趣。

Fig. 5. Data analytics stack. Scalable machine-learning systems are layered architectures that are built on parallel and distributed computing platforms. The architecture depicted here—an open-source data analysis stack developed in the Algorithms, Machines and People (AMP) Laboratory at the University of California, Berkeley—includes layers that interface to underlying operating systems; layers that provide distributed storage, data management, and processing; and layers that provide core machine-learning competencies such as streaming, subsampling, pipelines, graph processing, and model serving.

圖5.數據分析堆疊。可延伸的機器學習系統是基於並行和分佈式計算平臺構建的分層體系結構。此處描述的架構是在加州大學伯克利分校的演算法,機器和人(AMP)實驗室開發的開源數據分析堆疊,其中包括與底層操作系統互動的層;提供分佈式儲存,數據管理和處理的層;以及提供核心機器學習能力(例如流,次採樣,管道,圖形處理和模型服務)的層。

The word “environment” also refers to the source of the data, which ranges from a set of people who may have privacy or ownership concerns, to the analyst or decision-maker who may have certain requirements on a machine-learning system (for example, that its output be visualizable), and to the social, legal, or political framework surrounding the deployment of a system. The environment also may include other machine-learning systems or other agents, and the overall collection of systems may be cooperative or adversarial. Broadly speaking, environments provide various resources to a learning algorithm and place constraints on those resources. Increasingly, machine-learning researchers are formalizing these relationships, aiming to design algorithms that are provably effective in various environments and explicitly allow users to express and control trade-offs among resources.

「環境」一詞也指數據的來源,範圍從可能有隱私或所有權問題的人到對機器學習系統可能有特定要求的分析師或決策者(視覺化輸出),以及圍繞系統部署的社會,法律或政治框架。環境還可以包括其他機器學習系統或其他代理,並且系統的總體集合可以是合作的或對立的。廣義上講,環境爲學習演算法提供了各種資源,並對這些資源施加了約束。越來越多的機器學習研究人員將這些關係形式化,旨在設計在各種環境中證明有效的演算法,並明確允許使用者表達和控制資源之間的權衡。

As an example of resource constraints, let us suppose that the data are provided by a set of individuals who wish to retain a degree of privacy. Privacy can be formalized via the notion of “differential privacy,” which defines a probabilistic channel between the data and the outside world such that an observer of the output of the channel cannot infer reliably whether particular individuals have supplied data or not (18). Classical applications of differential privacy have involved ensuring that queries (e.g., “what is the maximum balance across a set of accounts?”) to a privatized database return an answer that is close to that returned on the nonprivate data. Recent research has brought differential privacy into contact with machine learning, where queries involve predictions or other inferential assertions (e.g., “given the data I’ve seen so far, what is the probability that a new transaction is fraudulent?”) (19, 20). Placing the overall design of a privacy-enhancing machine-learning system within a decision-theoretic framework provides users with a tuning knob whereby they can choose a desired level of privacy that takes into account the kinds of questions that will be asked of the data and their own personal utility for the answers. For example, a person may be willing to reveal most of their genome in the context of research on a disease that runs in their family but may ask for more stringent protection if information about their genome is being used to set insurance rates.

作爲資源約束的一個例子,讓我們假設數據是由一組希望保留一定程度隱私的個人提供的。隱私可以通過「差分隱私」的概念來形式化,它定義了數據與外部世界之間的一個概率通道,使得通道輸出的觀察者無法可靠地推斷出特定個人是否提供了數據(18)。差分隱私的經典應用涉及確保對經過隱私化處理的數據庫的查詢(例如「一組帳戶中的最大餘額是多少?」)所返回的答案,與對非隱私化數據查詢所返回的答案相近。最近的研究已將差分隱私與機器學習聯繫起來,其中查詢涉及預測或其他推斷性斷言(例如,「鑑於我到目前爲止所看到的數據,一筆新交易是欺詐的概率是多少?」)(19,20)。將隱私增強的機器學習系統的整體設計置於決策理論框架中,可以爲使用者提供一個調節旋鈕,使他們能夠選擇所需的隱私級別,該級別會考慮到將要對數據提出的各類問題,以及答案對他們自己的個人效用。例如,一個人可能願意在針對其家族遺傳疾病的研究中公開其大部分基因組,但如果其基因組資訊被用於設定保險費率,則可能會要求更嚴格的保護。
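
The privacy "tuning knob" described above can be illustrated with the Laplace mechanism, a standard way to obtain differential privacy that the text itself does not name. The following is a minimal sketch under stated assumptions, not the method of the cited work. It swaps the maximum-balance query from the text for a counting query, because a count changes by at most 1 when one person's record is added or removed, whereas a maximum has no such bound unless values are clipped. The names `laplace_noise` and `private_count` and the parameter `epsilon` (the privacy level, where smaller means more private) are hypothetical and chosen for illustration.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a zero-mean Laplace distribution with the given scale."""
    # The difference of two independent Exp(1) draws is Laplace(0, 1).
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`.

    Assumption: one individual contributes one record, so the count has
    sensitivity 1 and Laplace noise of scale 1/epsilon suffices for
    epsilon-differential privacy of this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    balances = [120, 950, 1500, 3000, 40]  # made-up account balances
    # Smaller epsilon means stronger privacy and a noisier answer.
    for eps in (0.1, 1.0, 10.0):
        print(eps, private_count(balances, lambda b: b > 1000, eps, rng))
```

The single parameter `epsilon` plays the role of the knob: a person comfortable with disclosure can accept a large value and get near-exact answers, while a more cautious setting buys protection at the cost of accuracy.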

Communication is another resource that needs to be managed within the overall context of a distributed learning system. For example, data may be distributed across distinct physical locations because their size does not allow them to be aggregated at a single site or because of administrative boundaries. In such a setting, we may wish to impose a bit-rate communication constraint on the machine-learning algorithm. Solving the design problem under such a constraint will generally show how the performance of the learning system degrades under decrease in communication bandwidth, but it can also reveal how the performance improves as the number of distributed sites (e.g., machines or processors) increases, trading off these quantities against the amount of data (21, 22). Much as in classical information theory, this line of research aims at fundamental lower bounds on achievable performance and specific algorithms that achieve those lower bounds.

通訊是另一種需要在分佈式學習系統的整體環境中加以管理的資源。例如,數據可能分佈在不同的物理位置,這或者是因爲數據量太大而無法在單個站點上聚合,或者是由於行政邊界的限制。在這種情況下,我們可能希望對機器學習演算法施加位元率通訊約束。在這種約束下求解設計問題,通常會顯示學習系統的效能如何隨通訊頻寬的降低而下降,但也可以揭示效能如何隨分佈式站點(例如機器或處理器)數量的增加而提高,並在這些量與數據量之間進行權衡(21,22)。與經典資訊理論非常相似,這方面的研究旨在得到可實現效能的基本下界,以及達到這些下界的具體演算法。
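
To make the bit-rate constraint concrete, here is a toy sketch in which each site may send only a fixed number of bits about its local data. It is an illustration under simplifying assumptions, not one of the optimal procedures studied in the cited work: every site holds the same number of values, all values lie in a known range `[lo, hi]`, and each site transmits only a uniformly quantized local mean. The function names `quantize` and `distributed_mean` are hypothetical.

```python
import random


def quantize(value: float, bits: int, lo: float, hi: float) -> float:
    """Round `value` to the nearest of 2**bits evenly spaced levels on [lo, hi]."""
    if bits < 1:
        raise ValueError("bits must be at least 1")
    steps = 2 ** bits - 1  # number of intervals between adjacent levels
    clipped = min(max(value, lo), hi)
    index = round((clipped - lo) / (hi - lo) * steps)
    return lo + index * (hi - lo) / steps


def distributed_mean(site_data, bits: int, lo: float = 0.0, hi: float = 1.0) -> float:
    """Estimate the global mean when each site sends one `bits`-bit message.

    Assumption: all sites hold equally many values, so averaging the local
    means with equal weight recovers the global mean up to quantization error.
    """
    messages = [quantize(sum(xs) / len(xs), bits, lo, hi) for xs in site_data]
    return sum(messages) / len(messages)


if __name__ == "__main__":
    rng = random.Random(1)
    sites = [[rng.random() for _ in range(50)] for _ in range(8)]
    exact = sum(sum(xs) for xs in sites) / (8 * 50)
    for bits in (1, 4, 8, 16):
        print(bits, abs(distributed_mean(sites, bits) - exact))
```

In this toy setting each message is off by at most half a quantization step, so the error of the combined estimate is bounded by `(hi - lo) / (2 * (2**bits - 1))` and shrinks as the bit budget grows.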

A major goal of this general line of research is to bring the kinds of statistical resources studied in machine learning (e.g., number of data points, dimension of a parameter, and complexity of a hypothesis class) into contact with the classical computational resources of time and space. Such a bridge is present in the "probably approximately correct" (PAC) learning framework, which studies the effect of adding a polynomial-time computation constraint on this relationship among error rates, training data size, and other parameters of the learning algorithm (3). Recent advances in this line of research include various lower bounds that establish fundamental gaps in performance achievable in certain machine-learning problems (e.g., sparse regression and sparse principal components analysis) via polynomial-time and exponential-time algorithms (23). The core of the problem, however, involves time-data tradeoffs that are far from the polynomial/exponential boundary. The large data sets that are increasingly the norm require algorithms whose time and space requirements are linear or sublinear in the problem size (number of data points or number of dimensions). Recent research focuses on methods such as subsampling, random projections, and algorithm weakening to achieve scalability while retaining statistical control (24, 25). The ultimate goal is to be able to supply time and space budgets to machine-learning systems in addition to accuracy requirements, with the system finding an operating point that allows such requirements to be realized.

這一類研究的一個主要目標,是使機器學習中研究的各種統計資源(例如,數據點的數量,參數的維數和假設類的複雜性)與時間和空間這些經典的計算資源聯繫起來。這樣的橋樑存在於「概率近似正確」(PAC)學習框架中,該框架研究了加入多項式時間計算約束後,對誤差率,訓練數據規模和學習演算法的其他參數之間關係的影響(3)。該研究方向的最新進展包括各種下界,這些下界確立了在某些機器學習問題(例如,稀疏迴歸和稀疏主成分分析)中,多項式時間演算法與指數時間演算法所能達到的效能之間存在根本性差距(23)。然而,問題的核心涉及的時間-數據權衡遠離多項式/指數邊界。日益成爲常態的大型數據集,要求演算法的時間和空間需求相對於問題規模(數據點數或維數)是線性或次線性的。最近的研究集中在子採樣,隨機投影和演算法弱化等方法上,以便在保持統計控制的同時實現可伸縮性(24,25)。最終目標是除了準確性要求之外,還能夠爲機器學習系統提供時間和空間預算,並讓系統找到一個能夠滿足這些要求的工作點。
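
The relationship among error rate, training data size, and hypothesis-class complexity mentioned above can be made concrete with a textbook PAC bound that the text itself does not state. The sketch below assumes the simplest setting: a finite hypothesis class of size |H|, a target that some hypothesis in the class fits exactly, and a learner that returns any hypothesis consistent with the training data. In that setting, m ≥ (ln|H| + ln(1/δ)) / ε examples suffice for error at most ε with probability at least 1 − δ. The function name `pac_sample_size` and the symbols ε and δ are introduced here for illustration only.

```python
import math


def pac_sample_size(num_hypotheses: int, epsilon: float, delta: float) -> int:
    """Number of examples sufficient for a consistent learner over a finite class.

    Assumptions: finite hypothesis class, realizable target, i.i.d. examples.
    Returns the smallest integer m with m >= (ln|H| + ln(1/delta)) / epsilon.
    """
    if num_hypotheses < 1:
        raise ValueError("num_hypotheses must be at least 1")
    if not (0.0 < epsilon < 1.0 and 0.0 < delta < 1.0):
        raise ValueError("epsilon and delta must lie strictly between 0 and 1")
    bound = (math.log(num_hypotheses) + math.log(1.0 / delta)) / epsilon
    return math.ceil(bound)


if __name__ == "__main__":
    # 1000 hypotheses, 10% error, 95% confidence.
    print(pac_sample_size(1000, epsilon=0.1, delta=0.05))  # prints 100
```

The bound shows the statistical side of the trade-off: halving ε roughly doubles the data requirement, while the class size enters only logarithmically. It says nothing about the time needed to find a consistent hypothesis, which is the computational side that the PAC framework adds.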

Opportunities and challenges

Despite its practical and commercial successes, machine learning remains a young field with many underexplored research opportunities. Some of these opportunities can be seen by contrasting current machine-learning approaches to the types of learning we observe in naturally occurring systems such as humans and other animals, organizations, economies, and biological evolution. For example, whereas most machine-learning algorithms are targeted to learn one specific function or data model from one single data source, humans clearly learn many different skills and types of knowledge, from years of diverse training experience, supervised and unsupervised, in a simple-to-more-difficult sequence (e.g., learning to crawl, then walk, then run). This has led some researchers to begin exploring the question of how to construct computer lifelong or never-ending learners that operate nonstop for years, learning thousands of interrelated skills or functions within an overall architecture that allows the system to improve its ability to learn one skill based on having learned another (26–28). Another aspect of the analogy to natural learning systems suggests the idea of team-based, mixed-initiative learning. For example, whereas current machine-learning systems typically operate in isolation to analyze the given data, people often work in teams to collect and analyze data (e.g., biologists have worked as teams to collect and analyze genomic data, bringing together diverse experiments and perspectives to make progress on this difficult problem). New machine-learning methods capable of working collaboratively with humans to jointly analyze complex data sets might bring together the abilities of machines to tease out subtle statistical regularities from massive data sets with the abilities of humans to draw on diverse background knowledge to generate plausible explanations and suggest new hypotheses. Many theoretical results in machine learning apply to all learning systems, whether they are computer algorithms, animals, organizations, or natural evolution. As the field progresses, we may see machine-learning theory and algorithms increasingly providing models for understanding learning in neural systems, organizations, and biological evolution and see machine learning benefit from ongoing studies of these other types of learning systems.

機遇和挑戰

儘管機器學習在實踐和商業上取得了成功,但它仍然是一個年輕的領域,有許多尚未充分探索的研究機會。將當前的機器學習方法與我們在自然存在的系統(例如人類和其他動物,組織,經濟體和生物進化)中觀察到的學習型別進行對比,可以看到其中一些機會。例如,大多數機器學習演算法的目標是從單一數據源中學習一種特定的函數或數據模型,而人類顯然可以從多年多樣化的訓練經驗(包括有監督和無監督的)中,按照由易到難的順序(例如,先學爬行,再學行走,然後學奔跑)學習許多不同的技能和知識型別。這促使一些研究人員開始探索以下問題:如何構建可連續多年不間斷執行的終身學習或永不停止學習的計算機系統,使其在一個整體架構中學習數千種相互關聯的技能或函數,並且該架構允許系統在學會一種技能的基礎上提高其學習另一種技能的能力(26-28)。與自然學習系統類比的另一個方面,引出了基於團隊的混合主動式學習的思想。例如,當前的機器學習系統通常孤立地執行以分析給定的數據,而人們經常以團隊的形式收集和分析數據(例如,生物學家以團隊的形式收集和分析基因組數據,將各種實驗和觀點彙集在一起,以在這個難題上取得進展)。能夠與人類協作,共同分析複雜數據集的新型機器學習方法,可能會把機器從海量數據集中提取細微統計規律的能力,與人類利用多樣的背景知識產生合理解釋並提出新假設的能力結合起來。機器學習的許多理論結果適用於所有學習系統,無論它們是計算機演算法,動物,組織還是自然進化。隨着該領域的發展,我們可能會看到機器學習理論和演算法越來越多地爲理解神經系統,組織和生物進化中的學習提供模型,也會看到機器學習從對這些其他型別學習系統的持續研究中受益。

As with any powerful technology, machine learning raises questions about which of its potential uses society should encourage and discourage. The push in recent years to collect new kinds of personal data, motivated by its economic value, leads to obvious privacy issues, as mentioned above. The increasing value of data also raises a second ethical issue: Who will have access to, and ownership of, online data, and who will reap its benefits? Currently, much data are collected by corporations for specific uses leading to improved profits, with little or no motive for data sharing. However, the potential benefits that society could realize, even from existing online data, would be considerable if those data were to be made available for public good.

與任何強大的技術一樣,機器學習引發了這樣的問題:在它的各種潛在用途中,社會應當鼓勵哪些,又應當阻止哪些。如上所述,近年來在經濟價值的驅動下,收集新型個人數據的熱潮帶來了明顯的隱私問題。數據價值的不斷增長還引發了第二個倫理問題:誰將有權存取和擁有線上數據,誰又將從中受益?目前,大量數據由公司爲特定用途而收集,以提高利潤,公司幾乎沒有或完全沒有共享數據的動機。然而,如果將這些數據用於公共利益,即使僅憑現有的線上數據,社會能夠實現的潛在收益也將相當可觀。

To illustrate, consider one simple example of how society could benefit from data that is already online today by using this data to decrease the risk of global pandemic spread from infectious diseases. By combining location data from online sources (e.g., location data from cell phones, from credit-card transactions at retail outlets, and from security cameras in public places and private buildings) with online medical data (e.g., emergency room admissions), it would be feasible today to implement a simple system to telephone individuals immediately if a person they were in close contact with yesterday was just admitted to the emergency room with an infectious disease, alerting them to the symptoms they should watch for and precautions they should take. Here, there is clearly a tension and trade-off between personal privacy and public health, and society at large needs to make the decision on how to make this trade-off. The larger point of this example, however, is that, although the data are already online, we do not currently have the laws, customs, culture, or mechanisms to enable society to benefit from them, if it wishes to do so. In fact, much of these data are privately held and owned, even though they are data about each of us. Considerations such as these suggest that machine learning is likely to be one of the most transformative technologies of the 21st century. Although it is impossible to predict the future, it appears essential that society begin now to consider how to maximize its benefits.

爲了說明這一點,請考慮一個簡單的例子:社會如何利用今天已經線上的數據來降低傳染病引發全球大流行的風險,從而從這些數據中受益。通過將來自線上資源的位置數據(例如,來自手機的位置數據,來自零售店信用卡交易的位置數據,以及來自公共場所和私人建築物中安全攝像頭的位置數據)與線上醫療數據(例如,急診室入院記錄)相結合,今天就可以實現一個簡單的系統:如果某人昨天密切接觸過的人剛剛因傳染病被送進急診室,系統就立即打電話通知此人,提醒他們應注意的症狀和應採取的預防措施。在這裏,個人隱私與公共衛生之間顯然存在矛盾和權衡,整個社會需要決定如何做出這種權衡。然而,這個例子更重要的意義在於,儘管這些數據已經線上,但我們目前還沒有相應的法律,習俗,文化或機制,使社會在願意的情況下能夠從這些數據中受益。實際上,儘管這些數據是關於我們每個人的數據,但其中大部分由私人持有和擁有。諸如此類的考慮表明,機器學習很可能成爲21世紀最具變革性的技術之一。儘管無法預測未來,但社會似乎有必要從現在開始考慮如何使其收益最大化。