[PyTorch] A simple model for CIFAR-10 classification (Part 2)

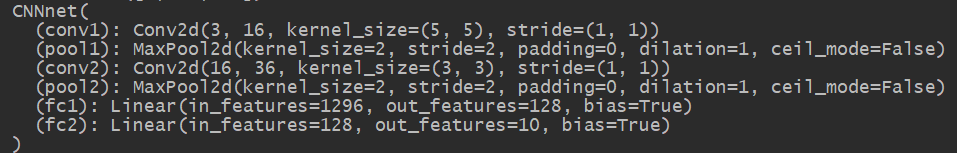

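In the previous part I used the example network from the official PyTorch tutorial. In this post I switch to a different network, following a book.

The code is as follows: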

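    import torch.nn as nn
    import torch.nn.functional as F

    class CNNnet(nn.Module):
        def __init__(self):
            super(CNNnet, self).__init__()
            # 3x32x32 -> 16x28x28 (5x5 kernel, stride 1, no padding)
            self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5, stride=1)
            # 16x28x28 -> 16x14x14
            self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
            # 16x14x14 -> 36x12x12 (3x3 kernel, stride 1, no padding)
            self.conv2 = nn.Conv2d(in_channels=16, out_channels=36, kernel_size=3, stride=1)
            # 36x12x12 -> 36x6x6
            self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
            self.fc1 = nn.Linear(1296, 128)  # 1296 = 36 * 6 * 6
            self.fc2 = nn.Linear(128, 10)

        def forward(self, x):
            x = self.pool1(F.relu(self.conv1(x)))
            x = self.pool2(F.relu(self.conv2(x)))
            x = x.view(-1, 36 * 6 * 6)  # flatten to (batch, 1296)
            x = F.relu(self.fc1(x))
            # Return raw logits: nn.CrossEntropyLoss applies log-softmax itself.
            # (The runs logged below used F.relu(self.fc2(x)) here instead.)
            return self.fc2(x)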
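One detail of `forward()` worth checking: applying `F.relu` to the output of `fc2`, as in `F.relu(self.fc2(...))`, clamps every negative class score to 0 before the loss, while `nn.CrossEntropyLoss` (assumed here to be the loss in use, as in the official tutorial) expects raw logits. The sketch below uses made-up scores for a single image: if all ten are negative, ReLU turns them into zeros, the softmax becomes uniform, the loss is exactly ln 10 ≈ 2.303, and no gradient flows back through the ReLU. This is a possible reason for a loss stuck near 2.3, not a verified diagnosis of the runs below.

```python
import math

import torch
import torch.nn.functional as F

# Hypothetical fc2 outputs (logits) for one image: all ten scores are negative.
scores = [-1.2, -0.3, -2.0, -0.7, -1.5, -0.1, -0.9, -2.4, -0.6, -1.1]
target = torch.tensor([3])  # hypothetical true class index

# Case 1: ReLU applied to the logits, as in F.relu(self.fc2(...)).
logits = torch.tensor([scores], requires_grad=True)
relu_loss = F.cross_entropy(F.relu(logits), target)
relu_loss.backward()

# Case 2: raw logits passed straight to the loss.
raw_logits = torch.tensor([scores], requires_grad=True)
raw_loss = F.cross_entropy(raw_logits, target)
raw_loss.backward()

print(relu_loss.item(), math.log(10))      # both about 2.3026
print(logits.grad.abs().sum().item())      # 0.0: no gradient reaches fc2 from this image
print(raw_logits.grad.abs().sum().item())  # > 0: the raw scores can still be trained
```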

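Where does 36*6*6 come from? It is C*H*W: C = 36 is the number of output channels of conv2, and H and W are obtained with the output-size formula given in this post:

[PyTorch] Output-size formula for convolution layers and weight shape of grouped convolutions: https://mp.csdn.net/console/editor/html/107954603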
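As a worked check, here is PyTorch's documented output-size formula for `nn.Conv2d`, H_out = floor((H_in + 2·padding − dilation·(kernel_size − 1) − 1) / stride) + 1, which `nn.MaxPool2d` with its default `ceil_mode=False` also follows. The helper name `conv_out` is just for illustration; the sketch traces a 32×32 CIFAR-10 image through the four layers.

```python
def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output height/width of nn.Conv2d or nn.MaxPool2d (ceil_mode=False)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


h = 32                                # CIFAR-10 images are 3 x 32 x 32
h = conv_out(h, kernel=5)             # conv1 -> 28
h = conv_out(h, kernel=2, stride=2)   # pool1 -> 14
h = conv_out(h, kernel=3)             # conv2 -> 12
h = conv_out(h, kernel=2, stride=2)   # pool2 -> 6

channels = 36                         # out_channels of conv2
print(h, channels * h * h)            # 6 1296
```

Since H = W here, the flattened size is 36 × 6 × 6 = 1296, matching `nn.Linear(1296, 128)`.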

How did it do?

First, with the following explicit initialization:

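    import torch.nn as nn

    net = CNNnet()  # the model defined above

    for m in net.modules():
        if isinstance(m, nn.Conv2d):
            # Each init call overwrites m.weight, so only the last one
            # (kaiming_normal_) actually determines the conv weights.
            nn.init.normal_(m.weight)
            nn.init.xavier_normal_(m.weight)
            nn.init.kaiming_normal_(m.weight)  # conv-layer initialization
            nn.init.constant_(m.bias, 0)
        elif isinstance(m, nn.Linear):
            # N(0, 1) weights, i.e. std=1; biases keep PyTorch's default init
            nn.init.normal_(m.weight)  # fully connected layer initialization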
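On the scale of that initialization: `nn.init.normal_` defaults to mean 0 and std 1. PyTorch's default init for `nn.Linear` draws weights uniformly from ±1/sqrt(fan_in), i.e. std 1/sqrt(3·fan_in), so for fc1 (fan_in = 1296) std=1 weights are about sqrt(3 × 1296) ≈ 62 times larger, and so are its pre-activations. The sketch below measures this with random non-negative inputs standing in for the real pooled conv features (an assumption). Very large activations going into fc2 could plausibly contribute to the stalled loss, though that was not tested here.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

fc1 = nn.Linear(1296, 128)   # same shape as CNNnet.fc1
x = torch.rand(256, 1296)    # stand-in for non-negative features after ReLU + pooling

with torch.no_grad():
    default_std = fc1(x).std().item()  # PyTorch's default nn.Linear init
    nn.init.normal_(fc1.weight)        # N(0, 1), as in the init loop
    normal_std = fc1(x).std().item()

ratio = normal_std / default_std
print(f"default init: {default_std:.3f}")
print(f"normal_ init: {normal_std:.3f}")
print(f"ratio: {ratio:.1f}")
```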

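The loss stopped decreasing; it looked as if training had got stuck at a saddle point. So I removed the explicit initialization and tried again.

That helped: the loss finally took on real values, but it stayed at a little over 2 (for reference, ln 10 ≈ 2.30 is the cross-entropy of a uniform guess over 10 classes). After 2 epochs: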

    Accuracy of the network on the 10000 test images: 9 %
    Accuracy of plane : 0 %
    Accuracy of car : 89 %
    Accuracy of bird : 0 %
    Accuracy of cat : 0 %
    Accuracy of deer : 0 %
    Accuracy of dog : 0 %
    Accuracy of frog : 4 %
    Accuracy of horse : 0 %
    Accuracy of ship : 0 %
    Accuracy of truck : 0 %
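A quick arithmetic check on these 2-epoch numbers: CIFAR-10's test set has 1,000 images per class, so the overall accuracy is just the mean of the ten per-class accuracies (up to the printed values being rounded to whole percents). The sketch shows the network behaving much like a constant "car" classifier, which sits at chance level.

```python
# Per-class test accuracy (%) printed after 2 epochs, in CIFAR-10 class order.
classes = ["plane", "car", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck"]
per_class = [0, 89, 0, 0, 0, 0, 4, 0, 0, 0]
reported_overall = 9  # "Accuracy of the network on the 10000 test images: 9 %"

# With 1,000 test images per class, overall accuracy = mean of per-class accuracies.
mean_acc = sum(per_class) / len(per_class)
print(f"mean of per-class accuracies: {mean_acc:.1f} %")  # 9.3 %

# A classifier that always answers "car" gets 100% on car and 0% elsewhere:
# 10% overall, the same as random guessing.
always_car = [100 if name == "car" else 0 for name in classes]
always_car_acc = sum(always_car) / len(always_car)
print(f"always 'car': {always_car_acc:.1f} %")  # 10.0 %
```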

Next, I trained for 10 epochs, this time with the explicit initialization added back in. The results:

    [10, 2000] loss: 1.785
    [10, 4000] loss: 1.834
    [10, 6000] loss: 1.833
    [10, 8000] loss: 1.813
    [10, 10000] loss: 1.865
    [10, 12000] loss: 1.834
    Finished Training
    Accuracy of the network on the 10000 test images: 35 %
    Accuracy of plane : 27 %
    Accuracy of car : 44 %
    Accuracy of bird : 12 %
    Accuracy of cat : 42 %
    Accuracy of deer : 38 %
    Accuracy of dog : 9 %
    Accuracy of frog : 47 %
    Accuracy of horse : 42 %
    Accuracy of ship : 64 %
    Accuracy of truck : 24 %

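Not great, and well short of the result in the book. Then I realized the initial learning rate lr was too high, so I changed it to lr=0.001: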

    import torch.optim as optim

    optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)
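A toy illustration of why the learning rate matters (not a model of this network): SGD with momentum 0.9 on the 1-D quadratic f(x) = a/2 · x², with a hypothetical curvature a = 100. With PyTorch's momentum update, this iteration is stable only while lr · a < 2 · (1 + momentum) = 3.8. The "too high" value lr=0.1 below is an assumption; the post does not say what the original lr was.

```python
def sgd_momentum_quadratic(lr, momentum=0.9, curvature=100.0, x0=1.0, steps=100):
    """Run SGD with momentum on the toy loss f(x) = curvature / 2 * x**2.

    The update mirrors torch.optim.SGD with momentum and no dampening:
    buf = grad on the first step, then buf = momentum * buf + grad,
    followed by x = x - lr * buf.
    """
    x, buf = x0, None
    for _ in range(steps):
        grad = curvature * x  # f'(x)
        buf = grad if buf is None else momentum * buf + grad
        x = x - lr * buf
    return x


small = sgd_momentum_quadratic(lr=0.001)  # lr * curvature = 0.1 < 3.8
large = sgd_momentum_quadratic(lr=0.1)    # lr * curvature = 10  > 3.8
print(f"lr=0.001: |x| = {abs(small):.2e}")  # shrinks toward the minimum at 0
print(f"lr=0.1:   |x| = {abs(large):.2e}")  # grows by roughly 8x per step
```

In a real network the relevant curvature varies across directions and during training, so this only shows the mechanism, not the right lr for CNNnet.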

The results were still about the same.

Here is the run after removing all of the explicit initialization:

    [1, 2000] loss: 2.133
    [1, 4000] loss: 1.955
    [1, 6000] loss: 1.930
    [1, 8000] loss: 1.907
    [1, 10000] loss: 1.850
    [1, 12000] loss: 1.849
    [2, 2000] loss: 1.774
    [2, 4000] loss: 1.805
    [2, 6000] loss: 1.802
    [2, 8000] loss: 1.781
    [2, 10000] loss: 1.790
    [2, 12000] loss: 1.796
    [3, 2000] loss: 1.763
    [3, 4000] loss: 1.784
    [3, 6000] loss: 1.830
    [3, 8000] loss: 1.771
    [3, 10000] loss: 1.805
    [3, 12000] loss: 1.830
    [4, 2000] loss: 1.803
    [4, 4000] loss: 1.805
    [4, 6000] loss: 1.814
    [4, 8000] loss: 1.790
    [4, 10000] loss: 1.805
    [4, 12000] loss: 1.805
    [5, 2000] loss: 1.834
    [5, 4000] loss: 1.851
    [5, 6000] loss: 1.846
    [5, 8000] loss: 1.836
    [5, 10000] loss: 1.857
    [5, 12000] loss: 1.859
    [6, 2000] loss: 1.814
    [6, 4000] loss: 1.811
    [6, 6000] loss: 1.859
    [6, 8000] loss: 1.908
    [6, 10000] loss: 1.873
    [6, 12000] loss: 1.857
    [7, 2000] loss: 1.812
    [7, 4000] loss: 1.865
    [7, 6000] loss: 1.836
    [7, 8000] loss: 1.873
    [7, 10000] loss: 1.873
    [7, 12000] loss: 1.912
    [8, 2000] loss: 1.840
    [8, 4000] loss: 1.880
    [8, 6000] loss: 1.897
    [8, 8000] loss: 1.881
    [8, 10000] loss: 1.855
    [8, 12000] loss: 1.882
    [9, 2000] loss: 1.812
    [9, 4000] loss: 1.820
    [9, 6000] loss: 1.873
    [9, 8000] loss: 1.824
    [9, 10000] loss: 1.868
    [9, 12000] loss: 1.870
    [10, 2000] loss: 1.853
    [10, 4000] loss: 1.842
    [10, 6000] loss: 1.832
    [10, 8000] loss: 1.820
    [10, 10000] loss: 1.878
    [10, 12000] loss: 1.838
    Finished Training
    Accuracy of the network on the 10000 test images: 34 %
    Accuracy of plane : 13 %
    Accuracy of car : 49 %
    Accuracy of bird : 12 %
    Accuracy of cat : 38 %
    Accuracy of deer : 48 %
    Accuracy of dog : 14 %
    Accuracy of frog : 35 %
    Accuracy of horse : 39 %
    Accuracy of ship : 59 %
    Accuracy of truck : 30 %
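Averaging the six logged values per epoch makes the plateau in this last run easier to see. Assuming, as in the official tutorial's training loop, that each logged value is the mean loss over the preceding 2000 mini-batches, the training loss is lowest in epoch 2 and does not improve afterwards (epoch 10 ends slightly higher), which is consistent with the test accuracy staying around 34%.

```python
# Loss values logged every 2000 iterations in the last run above (six per epoch).
log = {
    1: [2.133, 1.955, 1.930, 1.907, 1.850, 1.849],
    2: [1.774, 1.805, 1.802, 1.781, 1.790, 1.796],
    3: [1.763, 1.784, 1.830, 1.771, 1.805, 1.830],
    4: [1.803, 1.805, 1.814, 1.790, 1.805, 1.805],
    5: [1.834, 1.851, 1.846, 1.836, 1.857, 1.859],
    6: [1.814, 1.811, 1.859, 1.908, 1.873, 1.857],
    7: [1.812, 1.865, 1.836, 1.873, 1.873, 1.912],
    8: [1.840, 1.880, 1.897, 1.881, 1.855, 1.882],
    9: [1.812, 1.820, 1.873, 1.824, 1.868, 1.870],
    10: [1.853, 1.842, 1.832, 1.820, 1.878, 1.838],
}

# Mean of the logged values for each epoch.
epoch_means = {epoch: sum(vals) / len(vals) for epoch, vals in log.items()}
for epoch, mean in epoch_means.items():
    print(f"epoch {epoch:2d}: mean logged loss {mean:.3f}")

best_epoch = min(epoch_means, key=epoch_means.get)
print("lowest mean loss in epoch", best_epoch)  # 2
```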