爬蟲小案例:使用selenium進行獲取簡書文章數據並插入數據庫

2020-08-12 14:29:41

網頁分析

https://www.jianshu.com/c/b4d0bf551689

進行獲取這些數據

在第一次進來的時候發現他有一個無線下拉的列表要將所有的列表動態加載出來

browser=webdriver.Chrome()

browser.get(url)

browser.execute_script("""

(function () {

var y = 0;

var step = 100;

window.scroll(0, 0);

function f() {

if (y < document.body.scrollHeight) {

y += step;

window.scroll(0, y);

setTimeout(f, 100);

} else {

window.scroll(0, 0);

document.title += "scroll-done";

}

}

setTimeout(f, 1000);

})();

""")

while True:

if "scroll-done" in browser.title:

break

else:

print('正在下拉')

下面 下麪就開始獲取要得到的數據:

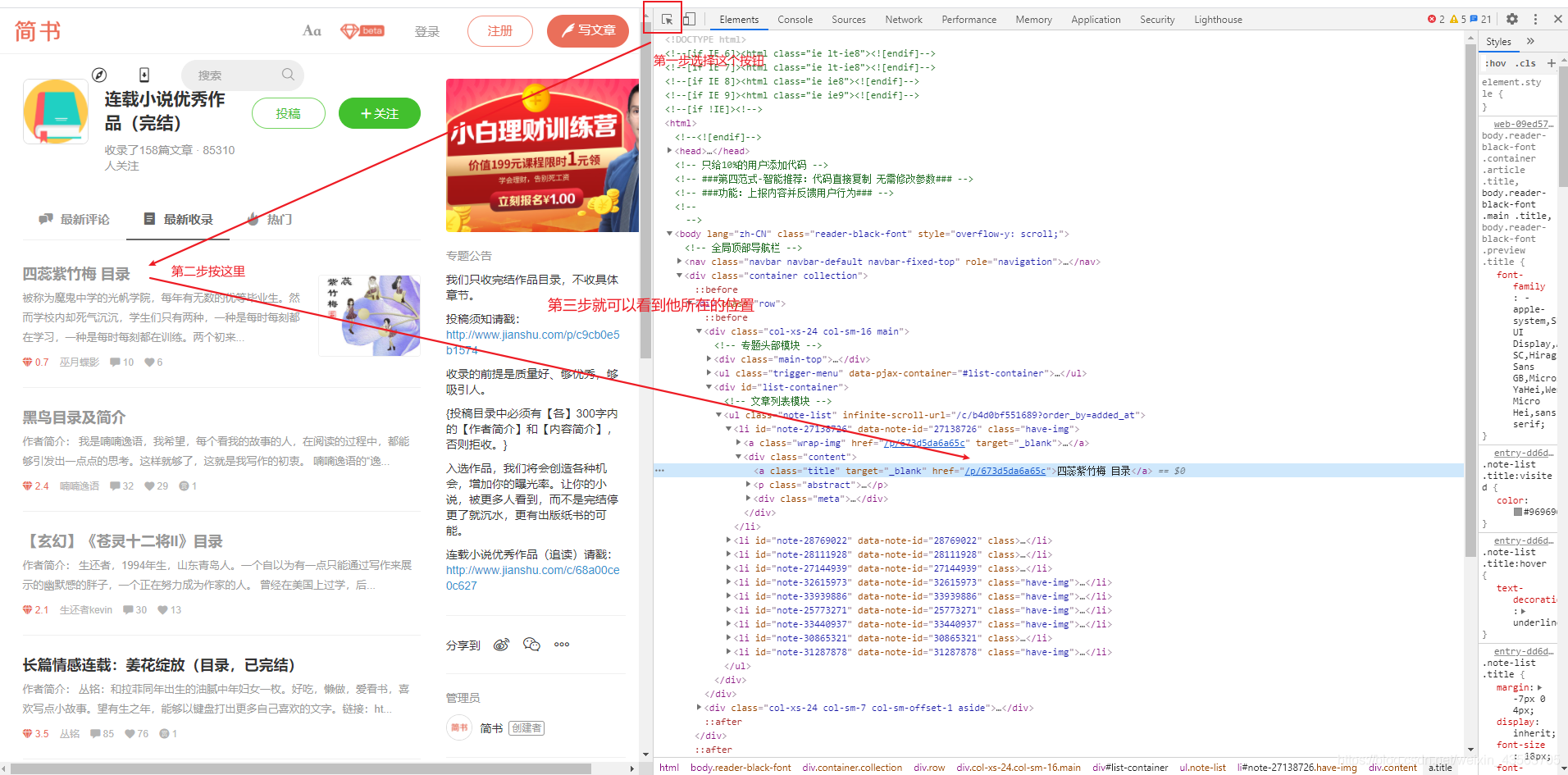

開始分析如何得到這兩個屬性:

按F12

下面 下麪所有你想獲取的數據同理

完整程式碼

# -*- encoding:utf-8 -*-

import requests

from selenium import webdriver

from bs4 import BeautifulSoup

import re

import time

import pymysql

def getHtml(url):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36 Edg/84.0.522.44'

}

respon = requests.get(url, headers=headers)

return respon.text

def get_Details(url):

#開啓瀏覽器

browser=webdriver.Chrome()

#將要獲取的連線進行連線

browser.get(url)

#開始進行滑動視窗至最底層

browser.execute_script("""

(function () {

var y = 0;

var step = 100;

window.scroll(0, 0);

function f() {

if (y < document.body.scrollHeight) {

y += step;

window.scroll(0, y);

setTimeout(f, 100);

} else {

window.scroll(0, 0);

document.title += "scroll-done";

}

}

setTimeout(f, 1000);

})();

""")

print("開始下拉.....")

# time.sleep(180)

#做一個標記,是否已經滑到最底層

count=False

while True:

#第一次進來爲False不會結束

if count==True:

break

#判斷是否已經到最底層如果到了那麼就開始獲取數據

if "scroll-done" in browser.title:

#獲取當前頁面的原始碼

page_html = browser.page_source

#對原始碼進行解析

page_soup = BeautifulSoup(page_html, 'html.parser')

#獲取所有的文章的父標籤

note_list = page_soup.find('ul', attrs={'class': 'note-list'})

#如果不爲空

if note_list != None:

#開始獲取所有的文章

li_All = note_list.findAll('li')

#這裏是爲了進入每一個文件的詳情頁面再次開啓一個瀏覽器

page_chrome=webdriver.Chrome()

#開始對每一個文章進行遍歷

for li in li_All:

sql={'id':'NULL',

'content_name': '',

'content_href':'',

'nice':'',

'chapter':'',

'article':'',

'abstract':''

}

if li.find('div', attrs={'class': 'content'})!=None:

if li.find('div', attrs={'class': 'content'}).find('a')!=None:

#獲取文章的標題名稱

content_name=li.find('div', attrs={'class': 'content'}).find('a').text#標題名稱

sql['content_name']=content_name

#獲取文章的地址,爲了方便進入下一級,詳情頁面

content_href=f"https://www.jianshu.com{li.find('div', attrs={'class': 'content'}).find('a')['href']}"#詳情地址

sql['content_href']=content_href

print("所在文章地址:",content_href)

#根據剛剛得到的文章詳情頁面的地址連線開始獲取

page_chrome.get(content_href)

time.sleep(3)

#獲取每一個詳情頁面的原始碼

details_html=page_chrome.page_source

#開始解析

details_soup=BeautifulSoup(details_html,'html.parser')

#獲取點讚的數值

pnjry=details_soup.find('div',attrs={'class':'_3Pnjry'})

if pnjry!=None:

puukr=pnjry.find('div',attrs={'class':'_1pUUKr'})

if puukr!=None:

png=puukr.find('div',attrs={'class':'P63n6G'})

if png!=None:

pnwj=png.find("span")

sql['nice']=pnwj.text

#開始獲取每一個章節

rhmja=details_soup.find('article',attrs={'class':'_2rhmJa'})

p_list=rhmja.findAll('p')

if p_list==None:

continue

p_name=[]

p_articles_Numpy=[]

for p in p_list:

if str(p).__contains__('叢銘'):

break

p_article_S_ALL = ""

time.sleep(2)

a=p.find('a')

if a ==None:

continue

if a!=None:

a_name=a.text

p_name.append(a_name)

#獲取每一個章節的內容頁面

a_href=a['href']

if not str(a_href).__contains__('https://www.jianshu.com/p'):

continue

page_chrome.get(a_href)

article_html=page_chrome.page_source

#如果此連線已失效就進行判斷不獲取

if not str(article_html).__contains__('抱歉,你存取的頁面不存在。'):

article_soup = BeautifulSoup(article_html, 'html.parser')

article=article_soup.find('article',attrs={'class':'_2rhmJa'})

p_article_All=article.findAll('p')

if p_article_All==None:

continue

for p_article in p_article_All:

p_article_S_ALL+=p_article.text

p_articles_Numpy.append(p_article_S_ALL)

# print(p_articles_Numpy)

# print(p_name)

sql['chapter']=p_name

sql['article']=p_articles_Numpy

if li.find('div', attrs={'class': 'content'}).find('p',attrs={'class':'abstract'})!=None:

abstract=str(li.find('div', attrs={'class': 'content'}).find('p', attrs={'class': 'abstract'}).text)#標題內容

abstract=abstract.strip("")

sql['abstract']=abstract

# print(abstract)

print(sql)

conneMysql(sql)

#當所有的數據獲取完之後改爲True就不會重複的進入了

count=True

else:

print("下拉中...")

def conneMysql(sql):

conn= pymysql.connect(

host='localhost',

user='root',

password='root',

db='test',

charset='utf8',

autocommit=True, # 如果插入數據,, 是否自動提交? 和conn.commit()功能一致。

)

cur= conn.cursor()

insert = 'insert into `jianshu_table` values ('

for item in sql:

if item == 'chapter':

chapter = str(sql[item]).replace("[", "").replace("]", "").replace(",", "|").replace("'","")

insert += f"'{chapter}',"

elif item == 'article':

article = str(sql[item]).replace("[", "").replace("]", "").replace(",", "|").replace("'","")

insert += f"'{article}',"

elif item == 'id':

insert += f"{sql[item]},"

elif item != 'abstract':

insert += f"'{sql[item]}',"

else:

insert += f"'{str(sql[item]).strip()}');"

try:

insert_sqli = insert

cur.execute(insert_sqli)

except Exception as e:

print("插入數據失敗:", e)

else:

# 如果是插入數據, 一定要提交數據, 不然數據庫中找不到要插入的數據;

conn.commit()

print("插入數據成功;")

if __name__ == '__main__':

url='https://www.jianshu.com/c/b4d0bf551689'

get_Details(url)

建立表

DROP TABLE IF EXISTS `jianshu_table`;

CREATE TABLE `jianshu_table` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`content_name` varchar(500) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,

`content_href` varchar(500) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,

`nice` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,

`chapter` varchar(2000) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,

`atticle` longtext CHARACTER SET utf8 COLLATE utf8_general_ci NULL,

`abstract` longtext CHARACTER SET utf8 COLLATE utf8_general_ci NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 2 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Compact;