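Music Genre Classification with TensorFlow

The rise of music streaming services has made music ubiquitous. We listen to music during our commute, while we exercise, while we work, or simply to relax.

A key feature of these services is playlists, often grouped by genre. This data may come from manual labeling by the people who publish the songs. But that is not a reliable way to assign genres, since some artists may label their songs to ride the popularity of a particular genre. A better option is to rely on automated music genre classification. With my two collaborators, Wilson Cheung and Joy Gu, we set out to compare different methods of classifying music samples into genres. In particular, we evaluated the performance of standard machine learning versus deep learning approaches. We found that feature engineering is crucial, and that domain knowledge can really boost performance.

After describing the data source, I give a brief overview of the methods we used and their results. In the last part of this article, I spend more time explaining how the TensorFlow framework in Google Colab can perform these tasks efficiently on GPU or TPU runtimes thanks to the TFRecord format. All the code is available on GitHub (linked at the end of this article), and we are happy to share our more detailed report with anyone interested.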

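Data source

Predicting the genre of an audio sample is a supervised learning problem. In other words, we need data that contains labeled examples. The Free Music Archive (FMA) is a library of audio segments with relevant labels and metadata, originally collected for a paper at the International Society for Music Information Retrieval Conference (ISMIR) in 2017.

We focused our analysis on a small subset of the data provided. It contains 8,000 audio segments, each 30 seconds long and classified as one of eight distinct genres: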

- Hip-Hop

- Pop

- Folk

- Experimental

- Rock

- International

- Electronic

- Instrumental

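Each genre comes with 1,000 representative audio segments. With a sample rate of 44,100 Hz, each audio sample contains more than 1 million data points (30 × 44,100 = 1,323,000), or more than 10^10 data points in total. Using all of this data in a classifier is a challenge, which we discuss in more detail in the following sections.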
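The arithmetic behind these figures can be checked in a few lines of Python. This is only a back-of-the-envelope sketch; the constant names are illustrative and do not come from the project code.

```python
# Back-of-the-envelope size of the FMA "small" subset as raw audio.
SAMPLE_RATE_HZ = 44_100   # samples per second
CLIP_SECONDS = 30         # length of each audio segment
N_CLIPS = 8_000           # 8 genres x 1,000 clips each

points_per_clip = SAMPLE_RATE_HZ * CLIP_SECONDS
total_points = points_per_clip * N_CLIPS

print(f"{points_per_clip:,} data points per clip")
print(f"{total_points:,} data points in total")
```

At roughly 1.06 × 10^10 values, the full subset would not fit comfortably in memory as 32-bit floats on a typical Colab runtime, which is why the models only see a small window of each clip.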

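For instructions on how to download the data, please refer to the README included in the repository. We are very grateful to Michaël Defferrard, Kirell Benzi, Pierre Vandergheynst, and Xavier Bresson for putting this data together and making it freely available, but we can only imagine the insights available at the scale of data owned by Spotify or Pandora Radio. With this data, we can describe various models to perform the task at hand.

Model description

I will keep the theoretical details to a minimum, but will link to relevant resources whenever possible. In addition, our report contains far more information than I can include here, in particular about feature engineering.

Standard machine learning

We used logistic regression, k-nearest neighbors (kNN), Gaussian naive Bayes, and support vector machines (SVM):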

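- Support vector machines (SVM) find the best decision boundary by maximizing the margin to the training data. The kernel trick defines non-linear boundaries by projecting the data into a higher-dimensional space

- kNN assigns a label based on the majority vote of the k closest training examples

- Naive Bayes predicts the probability of the different classes based on the features. The conditional independence assumption greatly simplifies the calculations

- Logistic regression also predicts the probability of the different classes, by modeling the probability directly with the logistic function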
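To make the simplest of these methods concrete, here is a minimal pure-Python sketch of the kNN majority vote. It is an illustration only: the function `knn_predict` and the toy two-dimensional features are made up for this example, and the project itself presumably relied on a library implementation instead.

```python
from collections import Counter
import math

def knn_predict(train_points, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # keep the labels of the k closest points
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    nearest_labels = [label for _, label in nearest]
    # the most common label wins the vote
    return Counter(nearest_labels).most_common(1)[0][0]

# toy 2-D features, purely illustrative (not real audio features)
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1), (1.1, 0.9)]
labels = ["Folk", "Folk", "Folk", "Rock", "Rock", "Rock"]

prediction = knn_predict(points, labels, query=(0.95, 1.0), k=3)
print(prediction)
```

With real audio features the vectors have thousands of dimensions, so distances become less informative and feature scaling matters much more than in this toy case.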

Deep learning

For deep learning, we leveraged the TensorFlow framework. We built different models depending on the type of input.

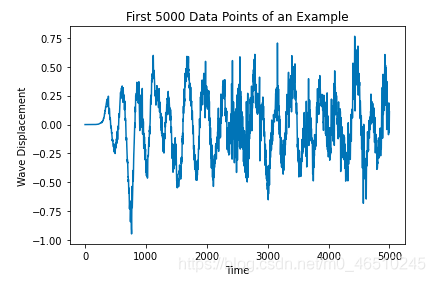

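With raw audio, each example is a 30-second audio sample, or approximately 1.3 million data points. These floating-point values (positive or negative) represent the wave displacement at a given point in time. To manage computational resources, we could only use less than 1% of this data. With these features and the associated label (one-hot encoded), we can build a convolutional neural network. The general architecture is as follows: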

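- 1-dimensional convolution layers, where the filters combine information from neighboring data points

- MaxPooling layers, which combine information from the convolution layers

- Fully connected layers, which create linear combinations of the extracted convolutional features and perform the final classification

- Dropout layers, which help the model generalize to unseen data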
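To put the "less than 1%" figure in perspective, the setup code later in this article sets `window_size = 10000` raw samples per clip. The sketch below works out what share of a clip that is; the constant names are illustrative.

```python
SAMPLE_RATE_HZ = 44_100
CLIP_SECONDS = 30
WINDOW_SIZE = 10_000  # value of `window_size` in the setup code

samples_per_clip = SAMPLE_RATE_HZ * CLIP_SECONDS
fraction_used = WINDOW_SIZE / samples_per_clip   # share of the clip fed to the model
seconds_used = WINDOW_SIZE / SAMPLE_RATE_HZ      # duration of that window

print(f"{fraction_used:.2%} of each clip, about {seconds_used:.2f} seconds of audio")
```

In other words, the raw-audio network classifies a genre from roughly a quarter of a second of sound, which helps explain why it needs many more examples to pick up useful patterns.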

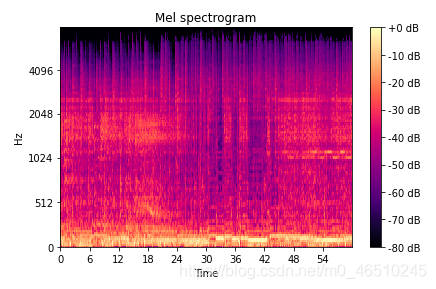

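Spectrograms, on the other hand, act as a visual representation of an audio sample. This inspired treating the training data as images and leveraging pre-trained models through transfer learning. For each example, we can form a matrix from its Mel spectrogram. If we compute the dimensions correctly, this matrix can be represented as a 224x224x3 image. These are the right dimensions to leverage MobileNetV2, which performs remarkably well on image classification tasks. The idea of transfer learning is to use the base layers of a pre-trained model to extract features, and to replace the last layer with a custom classifier (in our case, a dense layer). This works because the base layers usually generalize well to all images, even those they were not trained on.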

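Model results

We used a 20% test set to evaluate the performance of our models. The results can be summarized in the following table:

The convolutional neural network using transfer learning on spectrograms performed best, although the SVM and Gaussian naive Bayes were similar in performance (which is interesting in itself, given the simplifying assumptions of the latter). We describe the best hyperparameters and model architectures in our report.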

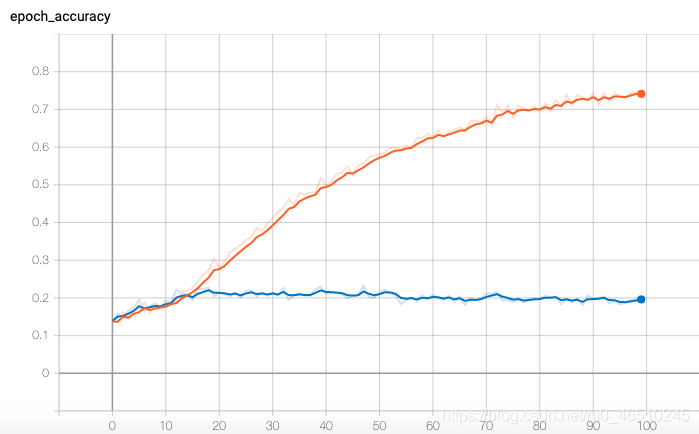

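Our analysis of the training and validation curves highlights the problem of overfitting, as shown in the accompanying figure (most of our models had similar graphs). The patterns in these curves helped us identify the issue. We designed a few solutions for it, which could be implemented in a future iteration of this project: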

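- Reduce the dimensionality of the data: techniques such as PCA could be used to combine the extracted features and limit the size of the feature vector for each example

- Increase the size of the training data: the data source provides larger subsets of the data. We limited our exploration to less than 10% of the entire dataset. If more computational resources were available, or if the dimensionality of the data were successfully reduced, we could consider using the full dataset. This would most likely enable our approaches to isolate more patterns and greatly improve performance

- Take more care in our search for features: the Free Music Archive includes a set of precomputed features. We did see improved performance when we used these features rather than our own, which leads us to believe that we could hope for better results with domain knowledge and an expanded feature set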
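A rough count shows why overfitting is hard to avoid in this setting. The sketch below compares the feature vector size set later in the code (`feature_size = 85210`) with the number of training examples implied by a 64% training share of 8,000 clips. It assumes that this feature vector is what the feature-based models see, which the article does not state explicitly.

```python
FEATURE_SIZE = 85_210   # `feature_size` in the setup code
N_CLIPS = 8_000         # clips in the small subset
TRAIN_SHARE = 0.64      # training share stated in the article

n_train = round(N_CLIPS * TRAIN_SHARE)
features_per_example = FEATURE_SIZE / n_train

print(f"{n_train} training examples, {features_per_example:.1f} features per example")
```

With more than sixteen features for every training example, a flexible model can memorize the training set, which is why both dimensionality reduction and more data appear in the list above.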

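TensorFlow implementation

TensorFlow is a very powerful tool for building neural networks at scale, especially in conjunction with Google Colab's free GPU/TPU runtimes. The main lesson from this project was to identify bottlenecks: my initial implementation was extremely slow, even with a GPU. I found that the problem lay in the I/O process (reading data from disk, which is very slow) rather than in the training process. Using the TFRecord format speeds things up through parallelization, which makes model training and development faster.

Before I start, an important note: although all the songs in the dataset are in MP3 format, I converted them to WAV files because TensorFlow has better built-in support for them. Please refer to the repository on GitHub to see all the code associated with this project. The code also assumes that you have a Google Cloud Storage (GCS) bucket where all the WAV files are available, a Google Drive where the metadata has been uploaded, and that you are using Google Colab. Nevertheless, it should be relatively straightforward to adapt all the code to another system (cloud-based or local).

Initial setup

This project requires a large number of libraries. The requirements.txt file in the repository handles the installation for you, but you can also find the detailed list below.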

# import libraries

import pandas as pd

import tensorflow as tf

from IPython.display import Audio

import os

import matplotlib.pyplot as plt

import numpy as np

import math

import sys

from datetime import datetime

import pickle

import librosa

import ast

import scipy

import librosa.display

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelEncoder

from tensorflow import keras

from google.colab import files

keras.backend.clear_session()

tf.random.set_seed(42)

np.random.seed(42)

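The first step is to mount the Drive (where the metadata has been uploaded) and to authenticate with the GCS bucket where the audio files are stored. Technically, the metadata could also be uploaded to GCS, which would remove the need to mount the Drive, but this is how my own project was structured.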

# mount the drive

# adapted from https://colab.sandbox.google.com/notebooks/io.ipynb#scrollTo=S7c8WYyQdh5i

from google.colab import drive

drive.mount('/content/drive')

# load the metadata to Colab from Drive, will greatly speed up the I/O process

zip_path_metadata = "/content/drive/My Drive/master_degree/machine_learning/Project/fma_metadata.zip"

!cp "{zip_path_metadata}" .

!unzip -q fma_metadata.zip

!rm fma_metadata.zip

# authenticate for GCS access

if 'google.colab' in sys.modules:
    from google.colab import auth
    auth.authenticate_user()

We also store a few variables for later use, for example:

# set some variables for creating the dataset

AUTO = tf.data.experimental.AUTOTUNE # used in tf.data.Dataset API

GCS_PATTERN = 'gs://music-genre-classification-project-isye6740/fma_small_wav/*/*.wav'

GCS_OUTPUT_1D = 'gs://music-genre-classification-project-isye6740/tfrecords-wav-1D/songs' # prefix for output file names, first type of model

GCS_OUTPUT_2D = 'gs://music-genre-classification-project-isye6740/tfrecords-wav-2D/songs' # prefix for output file names, second type of model

GCS_OUTPUT_FEATURES = 'gs://music-genre-classification-project-isye6740/tfrecords-features/songs' # prefix for output file names, models built with extracted features

SHARDS = 16

window_size = 10000 # number of raw audio samples

length_size_2d = 50176 # number of data points to form the Mel spectrogram

feature_size = 85210 # size of the feature vector

N_CLASSES = 8

DATA_SIZE = (224,224,3) # required data size for transfer learning

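Creating the TensorFlow dataset

The next step is to set up the functions that load the information needed to read in the data. I did not write this code myself, but adapted it from the Free Music Archive. This part will most likely change in your own project, depending on the dataset you use.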

# function to load metadata

# adapted from https://github.com/mdeff/fma/blob/master/utils.py

def metadata_load(filepath):
    filename = os.path.basename(filepath)

    if 'features' in filename:
        return pd.read_csv(filepath, index_col=0, header=[0, 1, 2])

    if 'echonest' in filename:
        return pd.read_csv(filepath, index_col=0, header=[0, 1, 2])

    if 'genres' in filename:
        return pd.read_csv(filepath, index_col=0)

    if 'tracks' in filename:
        tracks = pd.read_csv(filepath, index_col=0, header=[0, 1])

        # these columns hold string representations of Python lists
        COLUMNS = [('track', 'tags'), ('album', 'tags'), ('artist', 'tags'),
                   ('track', 'genres'), ('track', 'genres_all')]
        for column in COLUMNS:
            tracks[column] = tracks[column].map(ast.literal_eval)

        # these columns hold dates
        COLUMNS = [('track', 'date_created'), ('track', 'date_recorded'),
                   ('album', 'date_created'), ('album', 'date_released'),
                   ('artist', 'date_created'), ('artist', 'active_year_begin'),
                   ('artist', 'active_year_end')]
        for column in COLUMNS:
            tracks[column] = pd.to_datetime(tracks[column])

        # ordered categorical, so that subsets can be compared (small < medium < large);
        # the original try/except ran the same statement in both branches, so one call suffices
        SUBSETS = ('small', 'medium', 'large')
        tracks['set', 'subset'] = tracks['set', 'subset'].astype(
            pd.CategoricalDtype(categories=SUBSETS, ordered=True))

        COLUMNS = [('track', 'genre_top'), ('track', 'license'),
                   ('album', 'type'), ('album', 'information'),
                   ('artist', 'bio')]
        for column in COLUMNS:
            tracks[column] = tracks[column].astype('category')

        return tracks

# function to get genre information for each track ID

def track_genre_information(GENRE_PATH, TRACKS_PATH, subset):
    """
    GENRE_PATH (str): path to the csv with the genre metadata
    TRACKS_PATH (str): path to the csv with the track metadata
    subset (str): the subset of the data desired
    """
    # get the genre information
    genres = pd.read_csv(GENRE_PATH)

    # load metadata on all the tracks
    tracks = metadata_load(TRACKS_PATH)

    # focus on the specific subset tracks
    subset_tracks = tracks[tracks['set', 'subset'] <= subset]

    # extract track ID and genre information for each track
    subset_tracks_genre = np.array([np.array(subset_tracks.index),
                                    np.array(subset_tracks['track', 'genre_top'])]).T

    # combine the information in a dataframe
    tracks_genre_df = pd.DataFrame({'track_id': subset_tracks_genre[:,0], 'genre': subset_tracks_genre[:,1]})

    # label classes with numbers
    encoder = LabelEncoder()
    tracks_genre_df['genre_nb'] = encoder.fit_transform(tracks_genre_df.genre)

    return tracks_genre_df

# get genre information for all tracks from the small subset

GENRE_PATH = "fma_metadata/genres.csv"

TRACKS_PATH = "fma_metadata/tracks.csv"

subset = 'small'

small_tracks_genre = track_genre_information(GENRE_PATH, TRACKS_PATH, subset)

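We then need functions to create a TensorFlow dataset. The idea is to loop over the list of file names and apply a series of operations in a pipeline, which returns a batched dataset containing a feature tensor and a label tensor. We use TensorFlow built-in functions as well as Python functions (wrapped with tf.py_function, which is very useful for using Python functions in a data pipeline). Here I only include the functions to create a dataset from raw audio data, but the process is extremely similar when creating a dataset with spectrograms as features.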

# check the number of songs which are stored in GCS

nb_songs = len(tf.io.gfile.glob(GCS_PATTERN))

shard_size = math.ceil(1.0 * nb_songs / SHARDS)

print("Pattern matches {} songs which will be rewritten as {} .tfrec files containing {} songs each.".format(nb_songs, SHARDS, shard_size))
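The shard arithmetic above can be reproduced without touching GCS. The helper below is a hypothetical illustration (it is not part of the project code) of what batching `nb_songs` items into groups of `ceil(nb_songs / SHARDS)` produces, including the case where the count does not divide evenly.

```python
import math

def shard_sizes(n_items, n_shards):
    """Sizes of the batches obtained with a batch size of ceil(n_items / n_shards)."""
    shard_size = math.ceil(n_items / n_shards)
    sizes = []
    remaining = n_items
    while remaining > 0:
        sizes.append(min(shard_size, remaining))
        remaining -= shard_size
    return sizes

even = shard_sizes(8000, 16)     # all 8,000 songs present
uneven = shard_sizes(7997, 16)   # a few files missing: the last shard is smaller
print(even)
print(uneven)
```

Because the last shard can be smaller than the others, the writer code later stores the actual record count in each file name rather than assuming a fixed size.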

# functions to create the dataset from raw audio

# define a function to get the label associated with a file path

def get_label(file_path, genre_df=small_tracks_genre):
    # file_path is an eager tensor holding a byte string
    path = file_path.numpy()
    path = path.decode("utf-8")
    # the file name (without extension) is the zero-padded track ID
    track_id = int(path.split('/')[-1].split('.')[0].lstrip('0'))
    label = genre_df.loc[genre_df.track_id == track_id,'genre_nb'].values[0]
    # cast explicitly so the dtype matches the tf.int32 declared in parser()
    return tf.constant([label], dtype=tf.int32)

# define a function that extracts the desired features from a file path
def get_audio(file_path, window_size=window_size):
    wav = tf.io.read_file(file_path)
    audio = tf.audio.decode_wav(wav, desired_channels=1).audio
    # keep only the first window_size samples
    filtered_audio = audio[:window_size,:]
    return filtered_audio

# process the path
def process_path(file_path, window_size=window_size):
    label = get_label(file_path)
    audio = get_audio(file_path, window_size)
    return audio, label

# parser, wrap around the processing function and specify output shape
def parser(file_path, window_size=window_size):
    audio, label = tf.py_function(process_path, [file_path], (tf.float32, tf.int32))
    audio.set_shape((window_size,1))
    label.set_shape((1,))
    return audio, label

filenames = tf.data.Dataset.list_files(GCS_PATTERN, seed=35155) # This also shuffles the files
dataset_1d = filenames.map(parser, num_parallel_calls=AUTO)
dataset_1d = dataset_1d.batch(shard_size)
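The label lookup relies on the file name being the zero-padded track ID. The stand-alone sketch below shows that parsing step on a made-up path; `track_id_from_path` and the bucket name are hypothetical and only serve the illustration.

```python
def track_id_from_path(path):
    """Extract the integer track ID from a path such as '.../000/000002.wav'."""
    stem = path.split('/')[-1].split('.')[0]   # e.g. '000002'
    return int(stem)                           # int() parses zero-padded strings directly

example_path = "gs://some-bucket/fma_small_wav/000/000002.wav"  # hypothetical path
track_id = track_id_from_path(example_path)
print(track_id)
```

Since `int()` already handles leading zeros, stripping them first is optional; skipping that step also avoids an empty string if a file were ever named with zeros only.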

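Using the TFRecord format on GCS

Now that we have the dataset, we store it on GCS using the TFRecord format. This is the recommended format for GPUs and TPUs, because parallelization enables fast I/O. The main idea is to pack the data into tf.train.Feature objects wrapped in a tf.train.Example. We write the dataset to these examples, stored on GCS. This part of the code should require minimal edits for other projects, apart from changing the feature types. If the data has already been uploaded in the record format once, this section can be skipped. Most of the code in this section is adapted from the official TensorFlow documentation as well as a tutorial on audio pipelines.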

# write to TFRecord

# TFRecords are needed to greatly speed up the I/O process, previously a bottleneck

# functions to create various features

# adapted from https://codelabs.developers.google.com/codelabs/keras-flowers-data/#4

# and https://www.tensorflow.org/tutorials/load_data/tfrecord

def _bytestring_feature(list_of_bytestrings):
    return tf.train.Feature(bytes_list=tf.train.BytesList(value=list_of_bytestrings))

def _int_feature(list_of_ints): # int64
    return tf.train.Feature(int64_list=tf.train.Int64List(value=list_of_ints))

def _float_feature(list_of_floats): # float32
    return tf.train.Feature(float_list=tf.train.FloatList(value=list_of_floats))

# writer function
def to_tfrecord(tfrec_filewriter, song, label):
    # label has shape (1,), so indexing the identity matrix gives shape (1, N_CLASSES)
    one_hot_class = np.eye(N_CLASSES)[label][0]
    feature = {
        "song": _float_feature(song.flatten().tolist()), # one song in the list
        "class": _int_feature(label.tolist()), # one class in the list
        "one_hot_class": _float_feature(one_hot_class.tolist()) # list of floats, n=N_CLASSES
    }
    return tf.train.Example(features=tf.train.Features(feature=feature))

def write_tfrecord(dataset, GCS_OUTPUT):
    print("Writing TFRecords")
    for shard, (song, label) in enumerate(dataset):
        # batch size used as shard size here
        shard_size = song.numpy().shape[0]
        # good practice to have the number of records in the filename
        filename = GCS_OUTPUT + "{:02d}-{}.tfrec".format(shard, shard_size)

        with tf.io.TFRecordWriter(filename) as out_file:
            for i in range(shard_size):
                example = to_tfrecord(out_file,
                                      song.numpy()[i],
                                      label.numpy()[i])
                out_file.write(example.SerializeToString())
            print("Wrote file {} containing {} records".format(filename, shard_size))
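The writer stores each label twice: as an integer class and as a one-hot vector built with `np.eye(N_CLASSES)[label][0]`, which selects one row of the identity matrix. For readers unfamiliar with that idiom, here is a pure-Python equivalent; the helper `one_hot` is written for this illustration and is not part of the project code.

```python
def one_hot(label, n_classes):
    """Return a list of floats with 1.0 at index `label` and 0.0 elsewhere."""
    if not 0 <= label < n_classes:
        raise ValueError(f"label {label} is outside [0, {n_classes})")
    return [1.0 if i == label else 0.0 for i in range(n_classes)]

N_CLASSES = 8
encoded = one_hot(3, N_CLASSES)
print(encoded)
```

Storing the one-hot vector in the record means the reading function can return it directly as the target for a categorical cross-entropy loss, without re-encoding at training time.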

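Once these records are stored, we need other functions to read them. Each example is processed in turn, extracting the relevant information from the TFRecord and reconstructing a tf.data.Dataset. This may look like a circular process (create a tf.data.Dataset → upload to GCS as TFRecords → read the TFRecords into a tf.data.Dataset), but it actually provides huge speed gains by streamlining the I/O process. If I/O is the bottleneck, using a GPU or TPU does not help; this approach lets us take full advantage of their speed gains during training by optimizing data loading.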

# function to parse an example and return the song feature and the one-hot class

# adapted from https://codelabs.developers.google.com/codelabs/keras-flowers-data/#4

# and https://www.tensorflow.org/tutorials/load_data/tfrecord

def read_tfrecord_1d(example):
    features = {
        "song": tf.io.FixedLenFeature([window_size], tf.float32), # fixed-length list of floats
        "class": tf.io.FixedLenFeature([1], tf.int64), # a single integer label
        "one_hot_class": tf.io.VarLenFeature(tf.float32), # variable-length list, parsed as a sparse tensor
    }
    example = tf.io.parse_single_example(example, features)

    song = tf.cast(example['song'], tf.float32)
    song = tf.reshape(song, [window_size, 1])

    # the integer label is parsed but not returned; the model trains on the one-hot class
    label = tf.reshape(example['class'], [1])

    one_hot_class = tf.sparse.to_dense(example['one_hot_class'])
    one_hot_class = tf.reshape(one_hot_class, [N_CLASSES])

    return song, one_hot_class

# function to load the dataset from TFRecords
def load_dataset_1d(filenames):
    # read from TFRecords. For optimal performance, read from multiple
    # TFRecord files at once and set the option experimental_deterministic = False
    # to allow order-altering optimizations.
    option_no_order = tf.data.Options()
    option_no_order.experimental_deterministic = False

    dataset = tf.data.TFRecordDataset(filenames, num_parallel_reads=AUTO)
    dataset = dataset.with_options(option_no_order)
    dataset = dataset.map(read_tfrecord_1d, num_parallel_calls=AUTO)

    # ignore potentially corrupted records
    dataset = dataset.apply(tf.data.experimental.ignore_errors())
    return dataset

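Preparing the training, validation and testing sets

It is important to split the data appropriately into training, validation and testing sets (64%-16%-20%); the first two are used to tune the model architecture, and the last one to evaluate model performance. The split happens at the file name level.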

# function to create training, validation and testing sets

# adapted from https://colab.sandbox.google.com/notebooks/tpu.ipynb

# and https://codelabs.developers.google.com/codelabs/keras-flowers-data/#4

def create_train_validation_testing_sets(TFREC_PATTERN,
                                         VALIDATION_SPLIT=0.2,
                                         TESTING_SPLIT=0.2,
                                         N_EXAMPLES=8000):
    """
    TFREC_PATTERN: string pattern for the TFREC bucket on GCS
    N_EXAMPLES: total number of records across all TFRecord files
                (the original hard-coded 3670 here, which appears to be a
                leftover from the codelab this function was adapted from)
    """
    # see which accelerator is available
    tpu = None
    try: # detect TPUs
        tpu = tf.distribute.cluster_resolver.TPUClusterResolver() # TPU detection
        tf.config.experimental_connect_to_cluster(tpu)
        tf.tpu.experimental.initialize_tpu_system(tpu)
        strategy = tf.distribute.experimental.TPUStrategy(tpu)
    except ValueError: # detect GPUs
        strategy = tf.distribute.MirroredStrategy() # for GPU or multi-GPU machines

    print("Number of accelerators: ", strategy.num_replicas_in_sync)

    # Configuration
    # adapted from https://codelabs.developers.google.com/codelabs/keras-flowers-data/#4
    if tpu:
        BATCH_SIZE = 16*strategy.num_replicas_in_sync # A TPU has 8 cores so this will be 128
    else:
        BATCH_SIZE = 32 # On Colab/GPU, a higher batch size does not help and sometimes does not fit on the GPU (OOM)

    # splitting data files between training, validation and testing
    filenames = tf.io.gfile.glob(TFREC_PATTERN)
    testing_split = int(len(filenames) * TESTING_SPLIT)
    training_filenames = filenames[testing_split:]
    testing_filenames = filenames[:testing_split]
    validation_split = int(len(filenames) * VALIDATION_SPLIT)
    validation_filenames = training_filenames[:validation_split]
    training_filenames = training_filenames[validation_split:]

    # approximate number of batches per pass over each set
    validation_steps = int(N_EXAMPLES // len(filenames) * len(validation_filenames)) // BATCH_SIZE
    steps_per_epoch = int(N_EXAMPLES // len(filenames) * len(training_filenames)) // BATCH_SIZE

    return tpu, BATCH_SIZE, strategy, training_filenames, validation_filenames, testing_filenames, steps_per_epoch

# The call itself is not shown in the original; the line below is an assumed
# invocation that defines the names used in the rest of the code.
tpu, BATCH_SIZE, strategy, training_filenames_1d, validation_filenames_1d, testing_filenames_1d, steps_per_epoch = \
    create_train_validation_testing_sets(GCS_OUTPUT_1D + "*.tfrec")

# get the batched dataset, optimizing for I/O performance

# follow best practice for shuffling and repeating data

def get_batched_dataset(filenames, load_func, train=False):
    """
    filenames: filenames to load
    load_func: specific loading function to use
    train: Boolean, whether this is a training set
    """
    dataset = load_func(filenames)
    dataset = dataset.cache() # This dataset fits in RAM

    if train:
        # Best practices for Keras:
        # Training dataset: repeat then batch
        # Evaluation dataset: do not repeat
        dataset = dataset.repeat()

    dataset = dataset.batch(BATCH_SIZE)
    dataset = dataset.prefetch(AUTO) # prefetch next batch while training (autotune prefetch buffer size)

    # should shuffle too but this dataset was well shuffled on disk already
    return dataset

# source: Dataset performance guide: https://www.tensorflow.org/guide/performance/datasets

# instantiate the datasets

training_dataset_1d = get_batched_dataset(training_filenames_1d, load_dataset_1d,

train=True)

validation_dataset_1d = get_batched_dataset(validation_filenames_1d, load_dataset_1d,

train=False)

testing_dataset_1d = get_batched_dataset(testing_filenames_1d, load_dataset_1d,

train=False)
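Because the split moves whole TFRecord files, the actual proportions depend on the number of shards. The sketch below mirrors the slicing logic of `create_train_validation_testing_sets` for the 16 shards and the default split fractions of 0.2 used in this article; `split_shards` is a hypothetical helper written for this illustration.

```python
def split_shards(n_files, validation_split=0.2, testing_split=0.2):
    """Count the files per set: test files first, then validation, the rest for training."""
    n_test = int(n_files * testing_split)
    n_val = int(n_files * validation_split)   # fraction of ALL files, as in the function above
    n_train = n_files - n_test - n_val
    return n_train, n_val, n_test

n_train, n_val, n_test = split_shards(16)
shares = [count / 16 for count in (n_train, n_val, n_test)]
print(n_train, n_val, n_test)
print([f"{share:.2%}" for share in shares])
```

With 16 shards this gives 10, 3 and 3 files, or 62.5%, 18.75% and 18.75% of the data, which is close to but not exactly the 64%-16%-20% target. Using more, smaller shards would allow a finer-grained split.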

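Model and training

Finally, we can use the Keras API to build and test models. There is a wealth of information online about how to build models with Keras, so I will not go into the details, but here is a model that uses 1D convolution layers combined with pooling layers to extract features from raw audio.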

# create a CNN model

with strategy.scope():
    # create the model
    model = tf.keras.Sequential([
        tf.keras.layers.Conv1D(filters=128,
                               kernel_size=3,
                               activation='relu',
                               input_shape=[window_size,1],
                               name='conv1'),
        tf.keras.layers.MaxPooling1D(name='max1'),
        tf.keras.layers.Conv1D(filters=64,
                               kernel_size=3,
                               activation='relu',
                               name='conv2'),
        tf.keras.layers.MaxPooling1D(name='max2'),
        tf.keras.layers.Flatten(name='flatten'),
        tf.keras.layers.Dense(100, activation='relu', name='dense1'),
        tf.keras.layers.Dropout(0.5, name='dropout2'),
        tf.keras.layers.Dense(20, activation='relu', name='dense2'),
        tf.keras.layers.Dropout(0.5, name='dropout3'),
        # no activation: the loss below works on logits
        tf.keras.layers.Dense(8, name='dense3')
    ])

    # compile
    model.compile(optimizer='adam',
                  loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),
                  metrics=['accuracy'])

model.summary()

# train the model
logdir = "logs/scalars/" + datetime.now().strftime("%Y%m%d-%H%M%S")
tensorboard_callback = keras.callbacks.TensorBoard(log_dir=logdir)

EPOCHS = 100
# callbacks is passed as a list, which is the form Keras documents
raw_audio_history = model.fit(training_dataset_1d, steps_per_epoch=steps_per_epoch,
                              validation_data=validation_dataset_1d, epochs=EPOCHS,
                              callbacks=[tensorboard_callback])

# evaluate on the test data
model.evaluate(testing_dataset_1d)
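It can help to trace the tensor sizes through this network by hand before reading the output of `model.summary()`. The sketch below does so in plain Python, assuming the Keras defaults that the model code leaves implicit: stride 1 and 'valid' padding for `Conv1D`, and a pool size of 2 for `MaxPooling1D`. The helper functions are written for this illustration.

```python
def conv1d_valid_length(length, kernel_size):
    """Output length of a stride-1 Conv1D with 'valid' padding."""
    return length - kernel_size + 1

def maxpool1d_length(length, pool_size=2):
    """Output length of MaxPooling1D with stride equal to pool_size and 'valid' padding."""
    return length // pool_size

length = 10_000                            # window_size
length = conv1d_valid_length(length, 3)    # conv1: 128 filters
length = maxpool1d_length(length)          # max1
length = conv1d_valid_length(length, 3)    # conv2: 64 filters
length = maxpool1d_length(length)          # max2

flattened = length * 64                    # flatten: time steps x conv2 filters
dense1_params = flattened * 100 + 100      # weights + biases of dense1

print(f"time steps after max2: {length}")
print(f"flattened features:    {flattened:,}")
print(f"dense1 parameters:     {dense1_params:,}")
```

Almost all of the model's parameters sit in the first dense layer, so with only a few thousand training clips it is not surprising that the training and validation curves diverge.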

A last piece of relevant information is about using TensorBoard to plot the training and validation curves.

%load_ext tensorboard

%tensorboard --logdir logs/scalars

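Conclusion

In conclusion, it was instructive to benchmark different machine learning methods on the same task. This project highlights the importance of domain knowledge and feature engineering, as well as the power of standard, relatively simple machine learning techniques such as naive Bayes. Overfitting was an issue because the feature vectors are large compared to the number of examples, but I believe future efforts could help alleviate it.

I was pleased to see the strong performance of transfer learning on spectrograms, and I think we could do even better by using more features grounded in music theory. However, deep learning techniques on raw audio do show promise if more data were available to extract patterns. We could envision an application where classification happens directly on audio samples, without the need for feature engineering.

Author: Célestin Hermez

Code for this article: https://github.com/celestinhermez/music-genre-classification

Translated by the deephub translation team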