基於開源模型搭建實時臉部辨識系統(二):人臉檢測概覽與模型選型

續 基於開源模型的實時臉部辨識系統

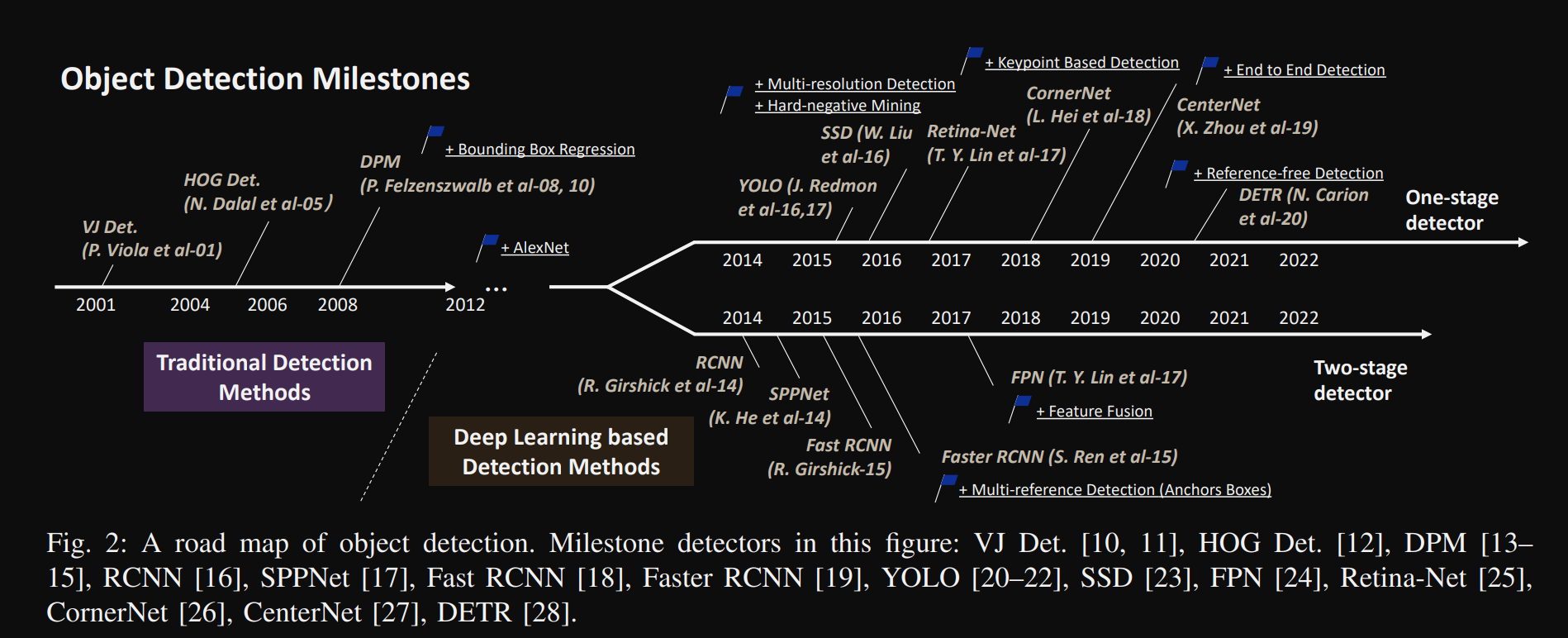

進行臉部辨識首要的任務就是要定位出畫面中的人臉,這個任務就是人臉檢測。人臉檢測總體上算是目標檢測的一個特殊情況,但也有自身的特點,比如角度多變,表情多變,可能存在各類遮擋。早期傳統的方法有Haar Cascade、HOG等,基本做法就是特徵描述子+滑窗+分類器,隨著2012年Alexnet的出現,慢慢深度學習在這一領域開始崛起。演演算法和硬體效能的發展,也讓基於深度學習的臉部辨識不僅效能取得了很大的提升,速度也能達到實時,使得人臉技術真正進入了實用。

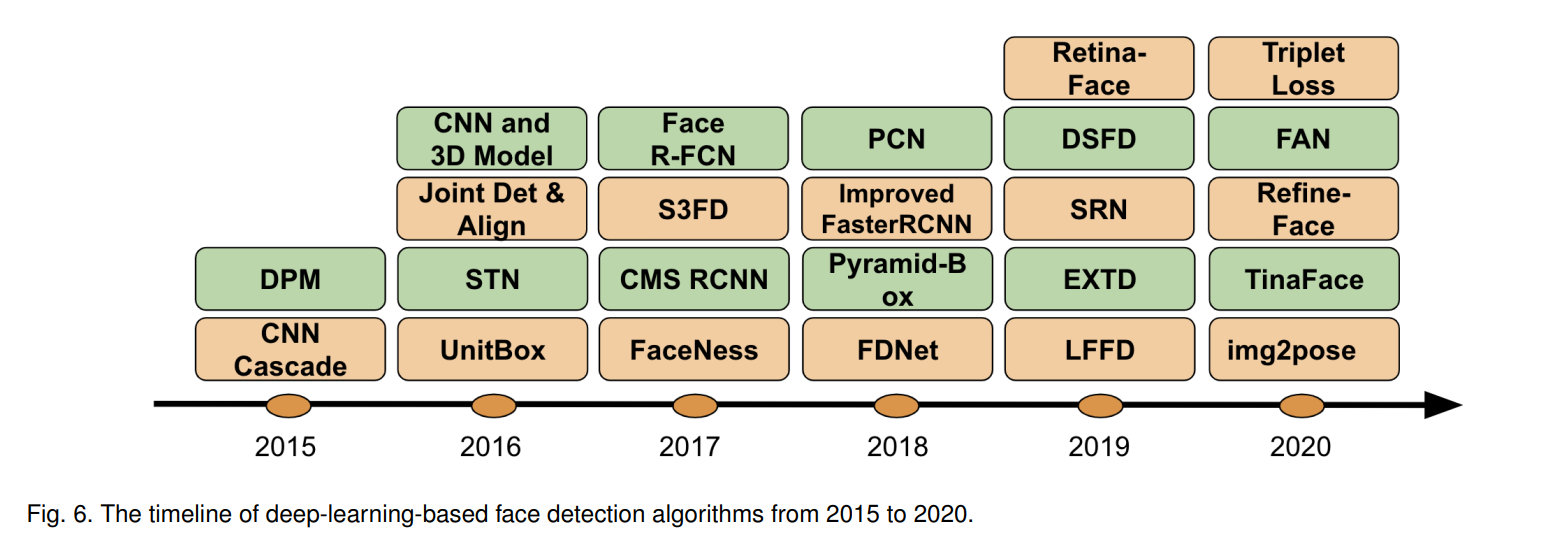

人臉檢測大體上跟隨目標檢測技術的發展,不過也有些自己的方法,主要可以分為一下幾類方法.

人臉檢測演演算法概覽

由於這個系列重點並不在於演演算法細節本身,因而對於一些演演算法只是提及,有興趣可以自己精讀。

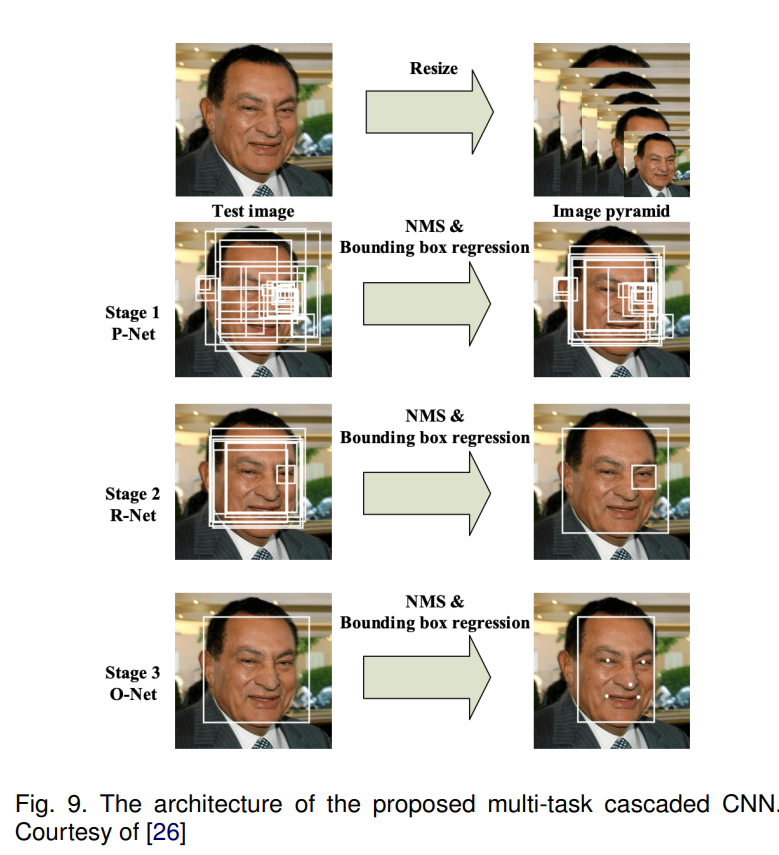

Cascade-CNN Based Models

這類方法通過級聯幾個網路來逐步提高準確率,比較有代表性的是MTCNN方法。

MTCNN通過級聯PNet, RNet, ONet,層層過濾來提高整個檢測的精度。這個方法更適合CPU,那個時期的嵌入式裝置使用比較多。 由於有3個網路,訓練起來比較麻煩。

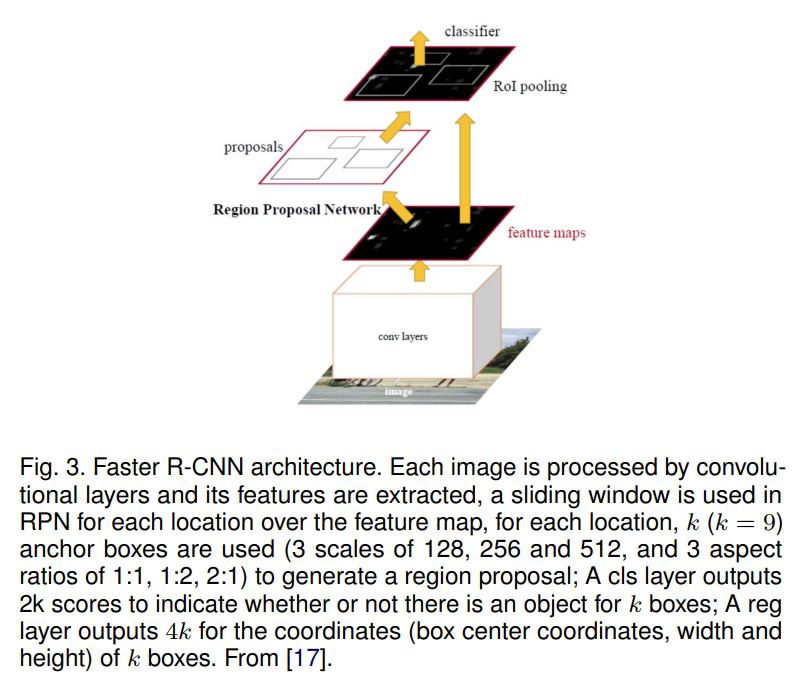

R-CNN

這一塊主要來源於目標檢測中的RCNN, Fast RCNN, Faster RCNN

這類方法精度高,但速度相對較慢。

Single Shot Detection Models

SSD是目標檢測領域比較有代表性的一個演演算法,與RCNN系列相比,它是one stage方法,速度比較快。基於它的用於人臉檢測的代表性方法是SSH.

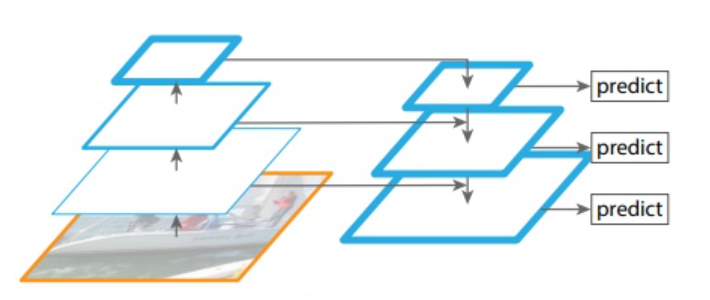

Feature Pyramid Network Based Models

YOLO系列

YOLO系列在目標檢測領域比較成功,自然的也會用在人臉檢測領域,比如tiny yolo face,yolov5face, yolov8face等,基本上每一代都會應用於人臉。

開源模型的選型

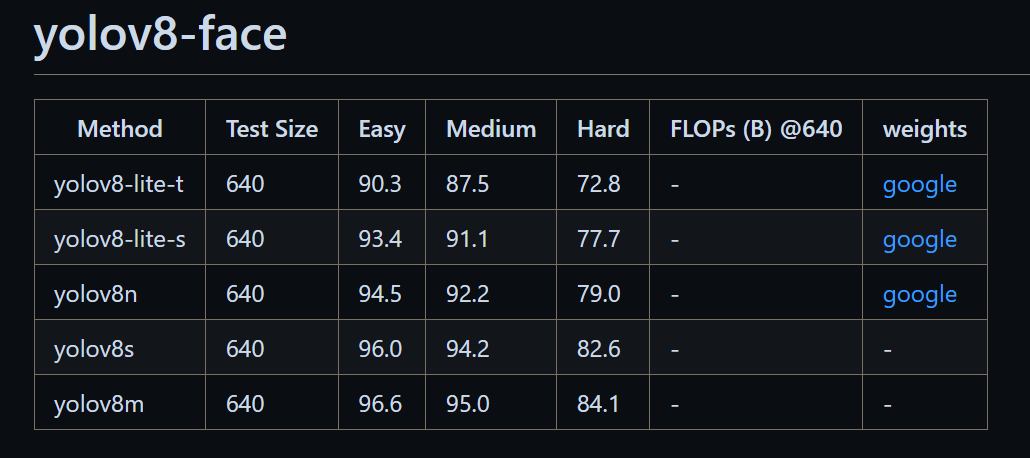

為了能夠達到實時,同時也要有較好的效果,我們將目光鎖定在yolo系列上,yolo在精度和速度的平衡上做的比較好,也比較易用。目前最新的是yolov8, 經過搜尋,也已經有人將其用在人臉檢測上了:derronqi/yolov8-face: yolov8 face detection with landmark (github.com),

推理框架的選擇

簡單起見,我們選擇onnxruntime,該框架既支援CPU也支援GPU, 基本滿足了我們的開發要求。

yolov8-face的使用

為了減少重複工作,我們可以定義一個模型的基礎類別, 對模型載入、推理的操作進行封裝,這樣就不需要每個模型都實現一遍了:

from easydict import EasyDict as edict

import onnxruntime

import threading

class BaseModel:

def __init__(self, model_path, device='cpu', **kwargs) -> None:

self.model = self.load_model(model_path, device)

self.input_layer = self.model.get_inputs()[0].name

self.output_layers = [output.name for output in self.model.get_outputs()]

self.lock = threading.Lock()

def load_model(self, model_path:str, device:str='cpu'):

available_providers = onnxruntime.get_available_providers()

if device == "gpu" and "CUDAExecutionProvider" not in available_providers:

print("CUDAExecutionProvider is not available, use CPUExecutionProvider instead")

device = "cpu"

if device == 'cpu':

self.model = onnxruntime.InferenceSession(model_path, providers=['CPUExecutionProvider'])

else:

self.model = onnxruntime.InferenceSession(model_path,providers=['CUDAExecutionProvider'])

return self.model

def inference(self, input):

with self.lock:

outputs = self.model.run(self.output_layers, {self.input_layer: input})

return outputs

def preprocess(self, **kwargs):

pass

def postprocess(self, **kwargs):

pass

def run(self, **kwargs):

pass

繼承BaseModel, 實現模型的前處理和後處理:

class Yolov8Face(BaseModel):

def __init__(self, model_path, device='cpu',**kwargs) -> None:

super().__init__(model_path, device, **kwargs)

self.conf_threshold = kwargs.get('conf_threshold', 0.5)

self.iou_threshold = kwargs.get('iou_threshold', 0.4)

self.input_size = kwargs.get('input_size', 640)

self.input_width, self.input_height = self.input_size, self.input_size

self.reg_max=16

self.project = np.arange(self.reg_max)

self.strides=[8, 16, 32]

self.feats_hw = [(math.ceil(self.input_height / self.strides[i]), math.ceil(self.input_width / self.strides[i])) for i in range(len(self.strides))]

self.anchors = self.make_anchors(self.feats_hw)

def make_anchors(self, feats_hw, grid_cell_offset=0.5):

"""Generate anchors from features."""

anchor_points = {}

for i, stride in enumerate(self.strides):

h,w = feats_hw[i]

x = np.arange(0, w) + grid_cell_offset # shift x

y = np.arange(0, h) + grid_cell_offset # shift y

sx, sy = np.meshgrid(x, y)

# sy, sx = np.meshgrid(y, x)

anchor_points[stride] = np.stack((sx, sy), axis=-1).reshape(-1, 2)

return anchor_points

def preprocess(self, image, **kwargs):

return resize_image(image, keep_ratio=True, dst_width=self.input_width, dst_height=self.input_height)

def distance2bbox(self, points, distance, max_shape=None):

x1 = points[:, 0] - distance[:, 0]

y1 = points[:, 1] - distance[:, 1]

x2 = points[:, 0] + distance[:, 2]

y2 = points[:, 1] + distance[:, 3]

if max_shape is not None:

x1 = np.clip(x1, 0, max_shape[1])

y1 = np.clip(y1, 0, max_shape[0])

x2 = np.clip(x2, 0, max_shape[1])

y2 = np.clip(y2, 0, max_shape[0])

return np.stack([x1, y1, x2, y2], axis=-1)

def postprocess(self, preds, scale_h, scale_w, top, left, **kwargs):

bboxes, scores, landmarks = [], [], []

for i, pred in enumerate(preds):

stride = int(self.input_height/pred.shape[2])

pred = pred.transpose((0, 2, 3, 1))

box = pred[..., :self.reg_max * 4]

cls = 1 / (1 + np.exp(-pred[..., self.reg_max * 4:-15])).reshape((-1,1))

kpts = pred[..., -15:].reshape((-1,15)) ### x1,y1,score1, ..., x5,y5,score5

# tmp = box.reshape(self.feats_hw[i][0], self.feats_hw[i][1], 4, self.reg_max)

tmp = box.reshape(-1, 4, self.reg_max)

bbox_pred = softmax(tmp, axis=-1)

bbox_pred = np.dot(bbox_pred, self.project).reshape((-1,4))

bbox = self.distance2bbox(self.anchors[stride], bbox_pred, max_shape=(self.input_height, self.input_width)) * stride

kpts[:, 0::3] = (kpts[:, 0::3] * 2.0 + (self.anchors[stride][:, 0].reshape((-1,1)) - 0.5)) * stride

kpts[:, 1::3] = (kpts[:, 1::3] * 2.0 + (self.anchors[stride][:, 1].reshape((-1,1)) - 0.5)) * stride

kpts[:, 2::3] = 1 / (1+np.exp(-kpts[:, 2::3]))

bbox -= np.array([[left, top, left, top]]) ###合理使用廣播法則

bbox *= np.array([[scale_w, scale_h, scale_w, scale_h]])

kpts -= np.tile(np.array([left, top, 0]), 5).reshape((1,15))

kpts *= np.tile(np.array([scale_w, scale_h, 1]), 5).reshape((1,15))

bboxes.append(bbox)

scores.append(cls)

landmarks.append(kpts)

bboxes = np.concatenate(bboxes, axis=0)

scores = np.concatenate(scores, axis=0)

landmarks = np.concatenate(landmarks, axis=0)

bboxes_wh = bboxes.copy()

bboxes_wh[:, 2:4] = bboxes[:, 2:4] - bboxes[:, 0:2] ####xywh

classIds = np.argmax(scores, axis=1)

confidences = np.max(scores, axis=1) ####max_class_confidence

mask = confidences>self.conf_threshold

bboxes_wh = bboxes_wh[mask] ###合理使用廣播法則

confidences = confidences[mask]

classIds = classIds[mask]

landmarks = landmarks[mask]

if len(bboxes_wh) == 0:

return np.empty((0, 5)), np.empty((0, 5))

indices = cv2.dnn.NMSBoxes(bboxes_wh.tolist(), confidences.tolist(), self.conf_threshold,

self.iou_threshold).flatten()

if len(indices) > 0:

mlvl_bboxes = bboxes_wh[indices]

confidences = confidences[indices]

classIds = classIds[indices]

## convert box to x1,y1,x2,y2

mlvl_bboxes[:, 2:4] = mlvl_bboxes[:, 2:4] + mlvl_bboxes[:, 0:2]

# concat box, confidence, classId

mlvl_bboxes = np.concatenate((mlvl_bboxes, confidences.reshape(-1, 1), classIds.reshape(-1, 1)), axis=1)

landmarks = landmarks[indices]

return mlvl_bboxes, landmarks.reshape(-1, 5, 3)[..., :2]

else:

return np.empty((0, 5)), np.empty((0, 5))

def run(self, image, **kwargs):

img, newh, neww, top, left = self.preprocess(image)

scale_h, scale_w = image.shape[0]/newh, image.shape[1]/neww

# convert to RGB

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img = img.astype(np.float32)

img = img / 255.0

img = np.transpose(img, (2, 0, 1))

img = np.expand_dims(img, axis=0)

output = self.inference(img)

bboxes, landmarks = self.postprocess(output, scale_h, scale_w, top, left)

# limit box in image

bboxes[:, 0] = np.clip(bboxes[:, 0], 0, image.shape[1])

bboxes[:, 1] = np.clip(bboxes[:, 1], 0, image.shape[0])

return bboxes, landmarks

測試

在Intel(R) Core(TM) i5-10210U上,yolov8-lite-t耗時50ms, 基本可以達到實時的需求。

參考文獻:

ZOU, Zhengxia, et al. Object detection in 20 years: A survey. Proceedings of the IEEE, 2023.

MINAEE, Shervin, et al. Going deeper into face detection: A survey. arXiv preprint arXiv:2103.14983, 2021.

臉部辨識系統原始碼

本文來自部落格園,作者:CoderInCV,轉載請註明原文連結:https://www.cnblogs.com/haoliuhust/p/17700867.html