Backing Up and Restoring etcd Data with Velero and MinIO

1. Introduction to Velero

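Velero is an open-source, cloud-native disaster recovery and migration tool from VMware, written in Go. It can safely back up, restore, and migrate Kubernetes cluster resources and data; the official site is https://velero.io/. "Velero" is Spanish for "sailboat", which fits the nautical naming style of the Kubernetes community. Velero was originally developed by Heptio, which has since been acquired by VMware. Velero works with standard Kubernetes clusters on both private and public clouds. Besides disaster recovery, it can also migrate resources, moving containerized applications from one cluster to another. Velero works by backing up Kubernetes data to object storage for durability and availability, then downloading and restoring it when needed. By default, a backup is retained for 720 hours (30 days).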
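Velero expresses the 720-hour default retention as a Go-style duration, which typically appears as `720h0m0s` on a backup. The Python sketch below is only an illustration and is not part of Velero. It assumes TTL strings built from hour, minute and second components and computes when a backup would expire.

```python
import re
from datetime import datetime, timedelta

# Matches Go-style duration parts such as "720h", "0m", "0s".
# Assumption: only hours, minutes and seconds are supported here.
_PART = re.compile(r"(\d+)([hms])")
_UNITS = {"h": "hours", "m": "minutes", "s": "seconds"}


def parse_ttl(ttl: str) -> timedelta:
    """Convert a Velero-style TTL string like '720h0m0s' into a timedelta."""
    # Reject empty strings and anything left over after removing valid parts.
    if not ttl or _PART.sub("", ttl) != "":
        raise ValueError(f"unsupported TTL format: {ttl!r}")
    total = timedelta()
    for value, unit in _PART.findall(ttl):
        total += timedelta(**{_UNITS[unit]: int(value)})
    return total


def backup_expiry(created: datetime, ttl: str = "720h0m0s") -> datetime:
    """Return when a backup created at `created` would expire."""
    return created + parse_ttl(ttl)


if __name__ == "__main__":
    print(parse_ttl("720h0m0s").days)                 # 30
    print(backup_expiry(datetime(2023, 9, 2, 13, 0)))  # 2023-10-02 13:00:00
```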

2. How Velero differs from etcd snapshot backups

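- An etcd snapshot backs up the entire cluster state, much like a full MySQL backup. Even if you only need to restore a single resource object (like restoring just one MySQL database), you must roll the whole cluster back to the snapshot (like a full MySQL restore). That also affects the pods and services running in other namespaces, just as a full restore would overwrite the data in other MySQL databases.

- Velero can back up selectively, for example one namespace at a time or only specific resource objects. At restore time you can restore just one namespace or resource object from a backup, without affecting pods and services in other namespaces.

- Velero stores backups in object storage such as Ceph or OSS, whereas an etcd snapshot is a local file.

- Velero supports scheduled tasks for periodic backups, although etcd snapshots can also be taken periodically with a CronJob.

- Velero can also create and restore AWS EBS snapshots: https://www.qloudx.com/velero-for-kubernetes-backup-restore-stateful-workloads-with-aws-ebs-snapshots/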

https://github.com/vmware-tanzu/velero-plugin-for-aws

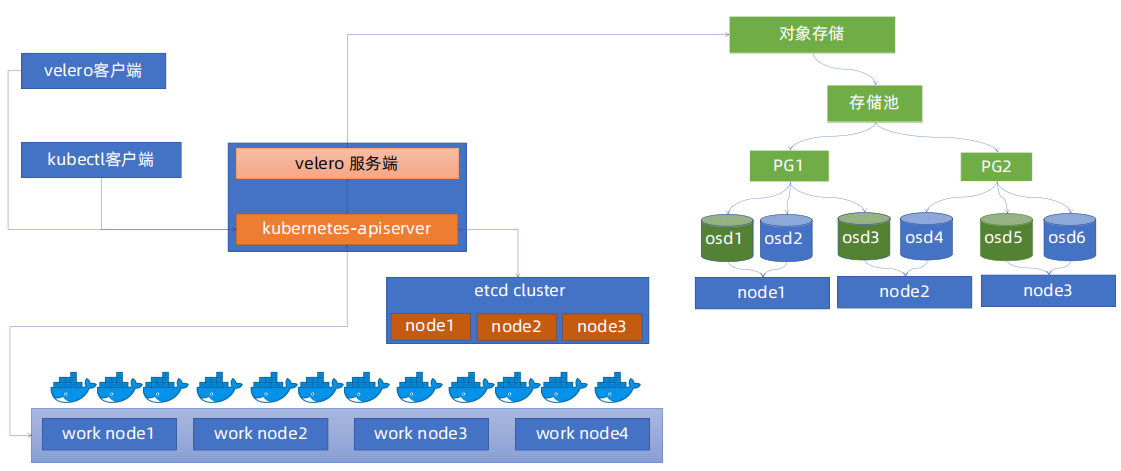
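The toy Python model below makes the scope difference concrete. It is an illustration only and does not reflect how either tool stores data. Objects are keyed by (namespace, kind, name). A snapshot restore replaces the whole store. A Velero-style restore limited to selected namespaces only recreates objects that are missing there, which reflects Velero's default of not overwriting resources that already exist. The namespace and object names are hypothetical.

```python
from copy import deepcopy

# Toy model only: a "store" maps (namespace, kind, name) -> object spec.


def snapshot_restore(current, snapshot):
    """etcd-snapshot style: the current state is discarded and the whole
    store is rolled back to the snapshot, across every namespace."""
    return deepcopy(snapshot)


def namespaced_restore(current, backup, namespaces):
    """Velero style (simplified): recreate missing objects from the selected
    namespaces; existing objects and other namespaces are left untouched."""
    restored = deepcopy(current)
    for key, spec in backup.items():
        if key[0] in namespaces and key not in restored:
            restored[key] = deepcopy(spec)
    return restored


if __name__ == "__main__":
    backup = {
        ("myserver", "Deployment", "web"): {"replicas": 2},
        ("other", "Deployment", "api"): {"replicas": 1},
    }
    # Later: "web" was deleted by mistake, "api" was scaled to 3 on purpose.
    current = {("other", "Deployment", "api"): {"replicas": 3}}
    print(snapshot_restore(current, backup))                   # "api" rolled back to 1
    print(namespaced_restore(current, backup, {"myserver"}))   # "api" keeps 3
```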

3. Velero architecture

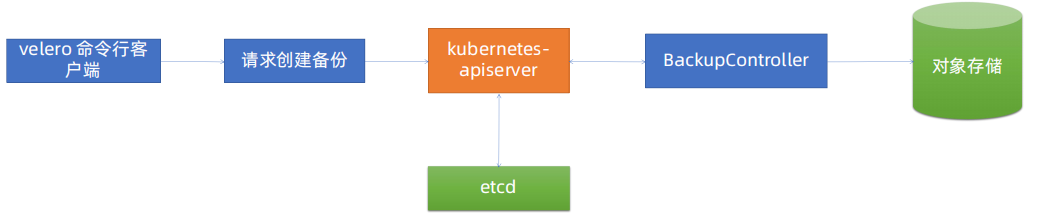

4. Velero backup workflow

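1. The Velero client calls the Kubernetes API server to create a Backup task.
2. The Backup controller watches the API server and picks up the new backup task.
3. The Backup controller starts the backup and requests the data to be backed up from the API server.
4. The Backup controller writes the retrieved data to the configured object storage server.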
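The Backup task that the Velero client creates is an ordinary Kubernetes custom resource. The sketch below builds one as a Python dict using fields from Velero's `velero.io/v1` Backup API (`includedNamespaces`, `ttl`, `storageLocation`). Several values are assumptions: the backup name, the namespace list, the `default` storage location, and the `velero-system` install namespace, which is taken from the kubeconfig context used later in this guide. The object that the `velero` CLI actually submits may carry additional fields.

```python
import json


def backup_manifest(name, namespaces, ttl="720h0m0s",
                    location="default", velero_ns="velero-system"):
    """Return a velero.io/v1 Backup object as a dict.

    name       -- backup name (hypothetical in the example below)
    namespaces -- namespaces to include in the backup
    ttl        -- retention period; 720h0m0s matches Velero's default
    location   -- BackupStorageLocation name (assumed "default")
    velero_ns  -- namespace Velero runs in (assumed "velero-system")
    """
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Backup",
        "metadata": {"name": name, "namespace": velero_ns},
        "spec": {
            "includedNamespaces": list(namespaces),
            "ttl": ttl,
            "storageLocation": location,
        },
    }


if __name__ == "__main__":
    print(json.dumps(backup_manifest("myserver-backup", ["myserver"]), indent=2))
```

Piping the printed JSON into `kubectl apply -f -` should create the same kind of object that the Backup controller watches for.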

5. Deploying MinIO object storage

5.1 Create the data directory

root@harbor:~# mkdir -p /data/minio

5.2 Create the MinIO container

Pull the image:

root@harbor:~# docker pull minio/minio:RELEASE.2023-08-31T15-31-16Z

RELEASE.2023-08-31T15-31-16Z: Pulling from minio/minio

0c10cd59e10e: Pull complete

b55c0ddd1333: Pull complete

4aade59ba7c6: Pull complete

7c45df1e40d6: Pull complete

adedf83b12e0: Pull complete

bc9f33183b0c: Pull complete

Digest: sha256:76868af456548aab229762d726271b0bf8604a500416b3e9bdcb576940742cda

Status: Downloaded newer image for minio/minio:RELEASE.2023-08-31T15-31-16Z

docker.io/minio/minio:RELEASE.2023-08-31T15-31-16Z

root@harbor:~#

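Create the MinIO container (port 9000 serves the S3 API; port 9999 serves the web console, as set by --console-address):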

root@harbor:~# docker run --name minio \

> -p 9000:9000 \

> -p 9999:9999 \

> -d --restart=always \

> -e "MINIO_ROOT_USER=admin" \

> -e "MINIO_ROOT_PASSWORD=12345678" \

> -v /data/minio/data:/data \

> minio/minio:RELEASE.2023-08-31T15-31-16Z server /data \

> --console-address '0.0.0.0:9999'

ba5e511da5f30a17614d719979e28066788ca7520d87c67077a38389e70423f1

root@harbor:~#

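If no credentials are specified, the default user name and password are minioadmin/minioadmin. You can customize them with environment variables: MINIO_ROOT_USER sets the user name and MINIO_ROOT_PASSWORD sets that user's password.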
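MinIO also refuses to start with root credentials that are too short. In current MinIO releases the user name must be at least 3 characters and the password at least 8. Treat these limits as an assumption and re-check them against your version. Under those limits, `12345678` above is the shortest password accepted. A small pre-flight check, sketched in Python:

```python
def check_minio_root_credentials(user: str, password: str) -> None:
    """Raise ValueError if the root credentials are shorter than MinIO's
    minimums (assumed: 3 characters for the user, 8 for the password)."""
    if len(user) < 3:
        raise ValueError("MINIO_ROOT_USER must be at least 3 characters")
    if len(password) < 8:
        raise ValueError("MINIO_ROOT_PASSWORD must be at least 8 characters")


if __name__ == "__main__":
    check_minio_root_credentials("admin", "12345678")         # values used above
    check_minio_root_credentials("minioadmin", "minioadmin")  # built-in defaults
    print("credentials look acceptable")
```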

5.3 Log in to the MinIO web console

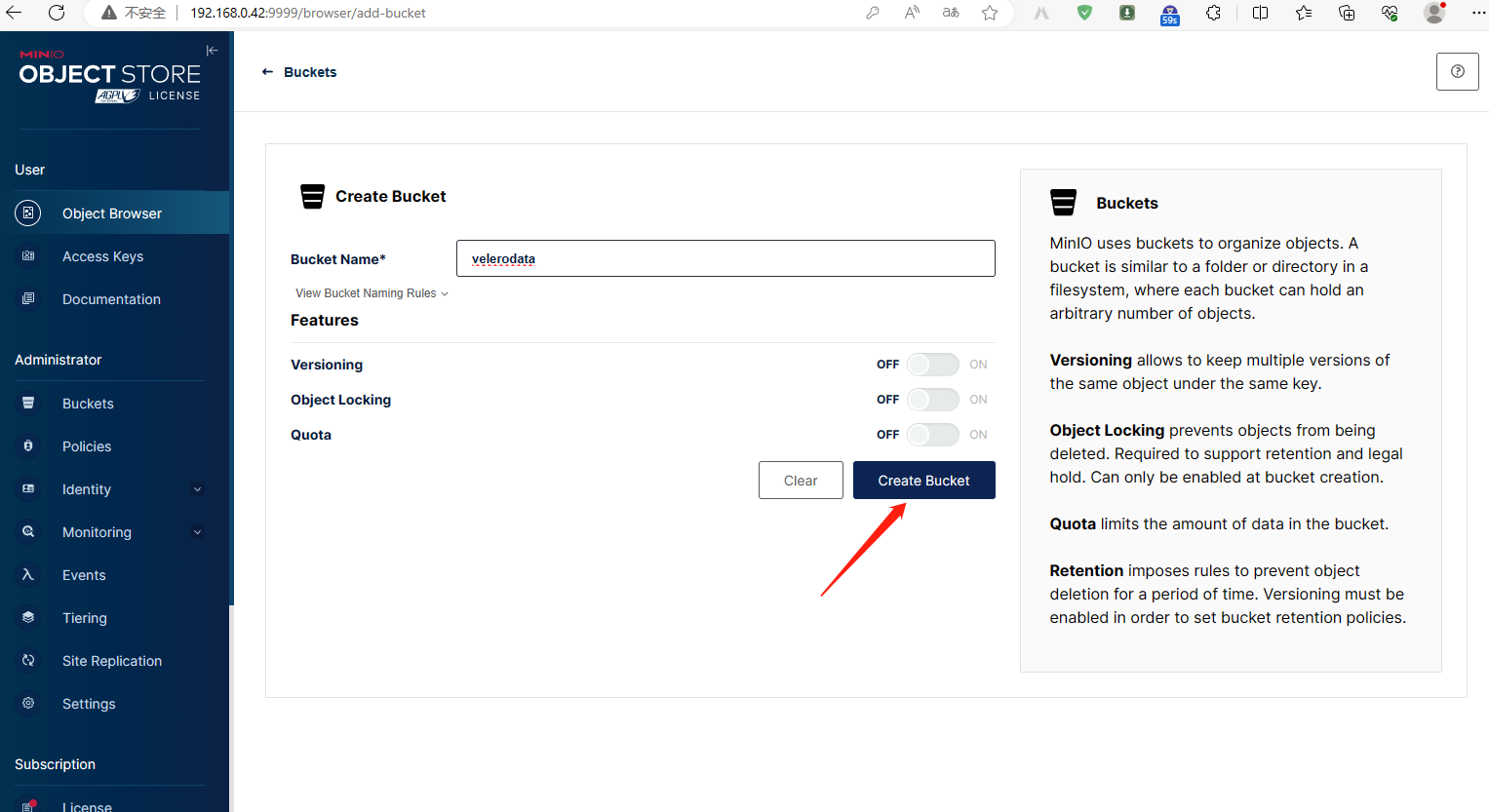

5.4 Create a bucket in MinIO

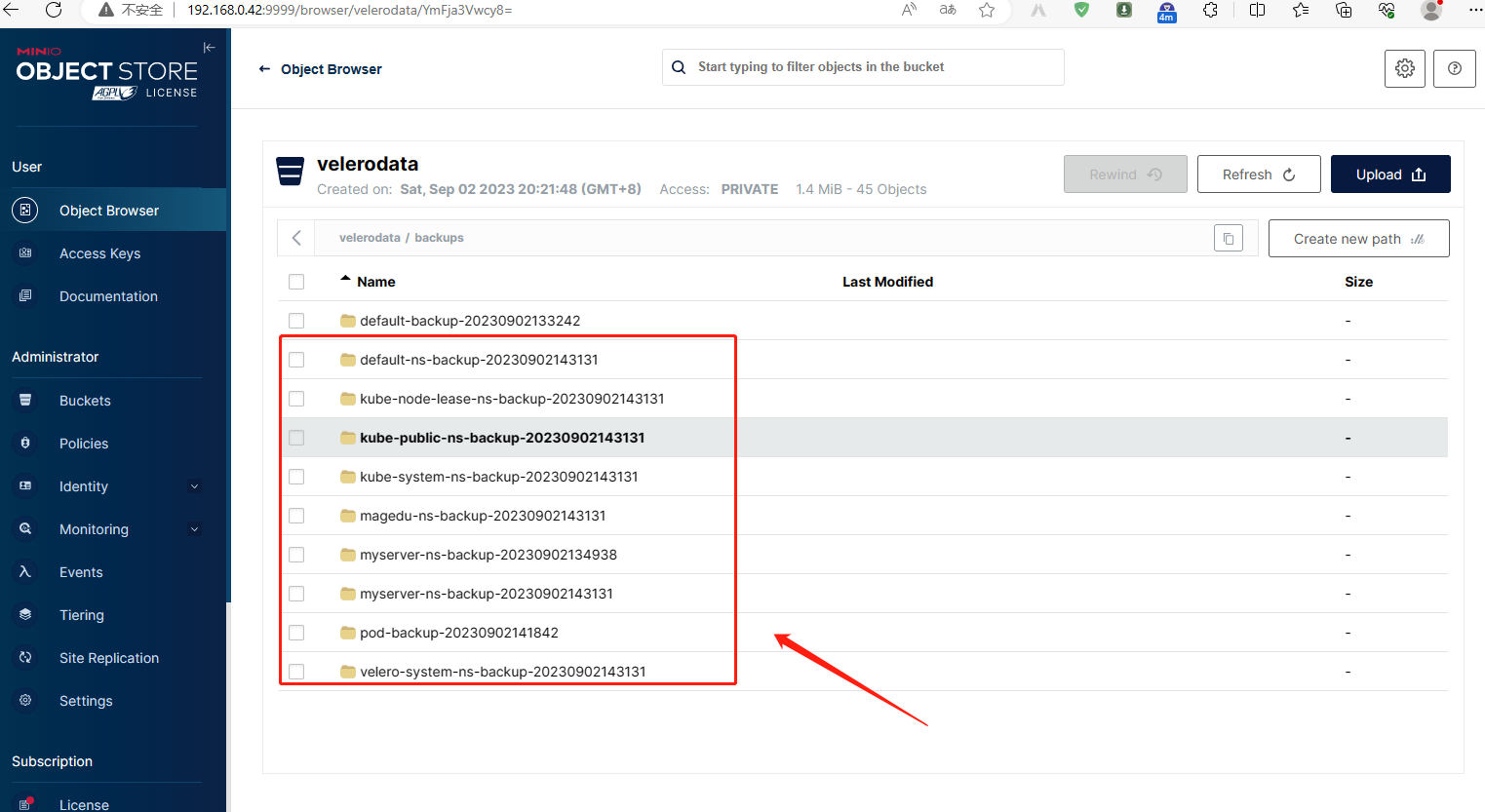

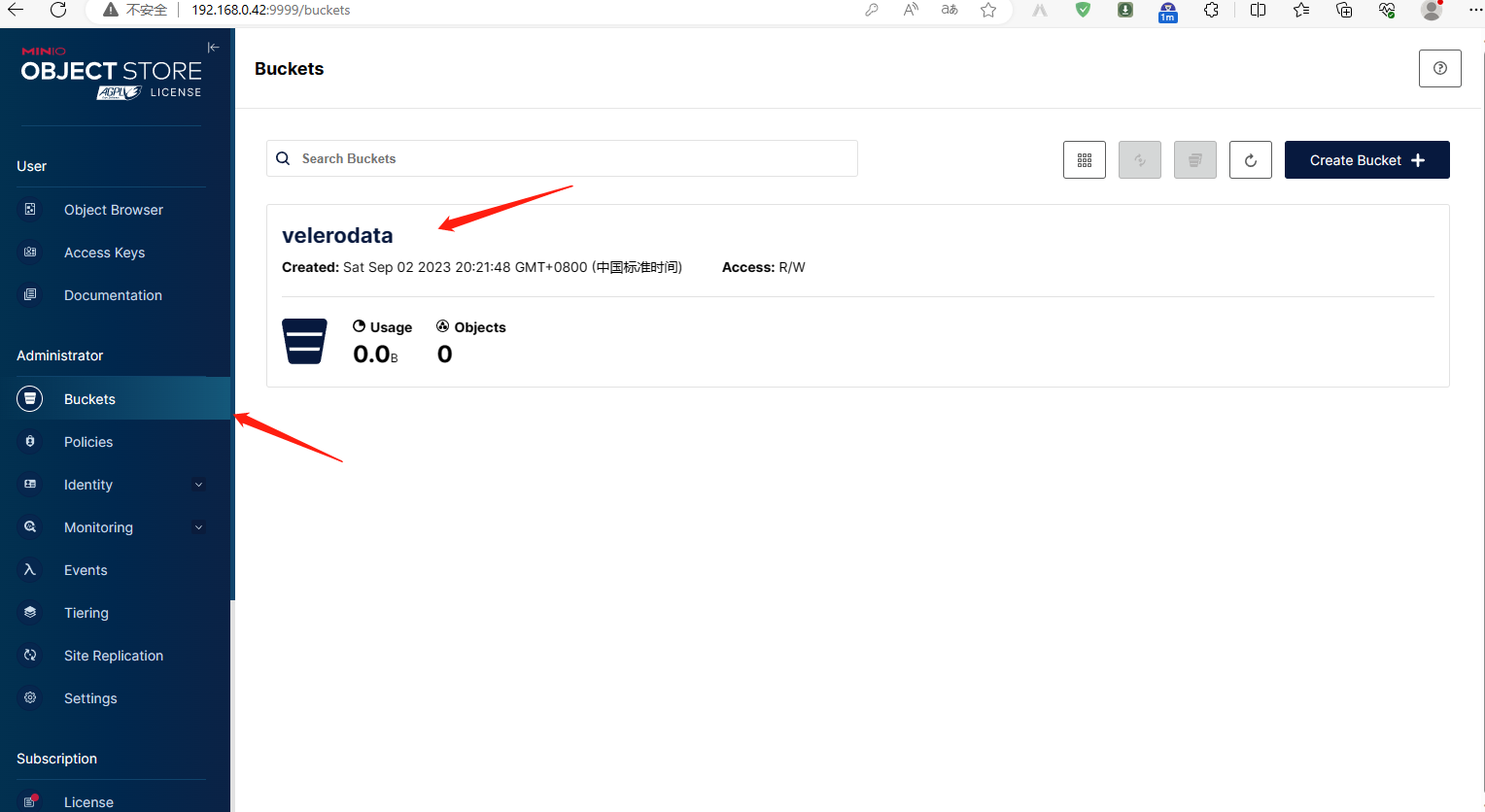
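The bucket name used in the screenshots is not stated in the text. Whatever name you choose has to follow the S3 bucket naming rules, which MinIO also applies. The sketch below checks a simplified subset of those rules:

- length of 3 to 63 characters;
- lowercase letters, digits, dots and hyphens only;
- a letter or digit at both ends;
- no consecutive dots;
- not formatted as an IPv4 address.

`velerodata` in the test is a hypothetical name.

```python
import ipaddress
import re

# Simplified S3 bucket naming rules: 3-63 characters, lowercase letters,
# digits, '.' and '-', beginning and ending with a letter or digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes the simplified naming rules above."""
    if not _BUCKET_RE.fullmatch(name) or ".." in name:
        return False
    try:
        ipaddress.IPv4Address(name)  # names formatted like an IP are rejected
    except ValueError:
        return True
    return False
```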

5.5 Verify the bucket in MinIO

6. Deploying Velero on the master node

6.1 Download the velero client tool

Download the velero client:

root@k8s-master01:/usr/local/src# wget https://github.com/vmware-tanzu/velero/releases/download/v1.11.1/velero-v1.11.1-linux-amd64.tar.gz

Extract the archive:

root@k8s-master01:/usr/local/src# ll

total 99344

drwxr-xr-x 3 root root 4096 Sep 2 12:38 ./

drwxr-xr-x 10 root root 4096 Feb 17 2023 ../

drwxr-xr-x 2 root root 4096 Oct 21 2015 bin/

-rw-r--r-- 1 root root 64845365 May 31 13:21 buildkit-v0.11.6.linux-amd64.tar.gz

-rw-r--r-- 1 root root 36864459 Sep 2 12:31 velero-v1.11.1-linux-amd64.tar.gz

root@k8s-master01:/usr/local/src# tar xf velero-v1.11.1-linux-amd64.tar.gz

root@k8s-master01:/usr/local/src# ll

total 99348

drwxr-xr-x 4 root root 4096 Sep 2 12:39 ./

drwxr-xr-x 10 root root 4096 Feb 17 2023 ../

drwxr-xr-x 2 root root 4096 Oct 21 2015 bin/

-rw-r--r-- 1 root root 64845365 May 31 13:21 buildkit-v0.11.6.linux-amd64.tar.gz

drwxr-xr-x 3 root root 4096 Sep 2 12:39 velero-v1.11.1-linux-amd64/

-rw-r--r-- 1 root root 36864459 Sep 2 12:31 velero-v1.11.1-linux-amd64.tar.gz

root@k8s-master01:/usr/local/src#

Copy the velero binary to /usr/local/bin:

root@k8s-master01:/usr/local/src# ll velero-v1.11.1-linux-amd64

total 83780

drwxr-xr-x 3 root root 4096 Sep 2 12:39 ./

drwxr-xr-x 4 root root 4096 Sep 2 12:39 ../

-rw-r--r-- 1 root root 10255 Dec 13 2022 LICENSE

drwxr-xr-x 4 root root 4096 Sep 2 12:39 examples/

-rwxr-xr-x 1 root root 85765416 Jul 25 08:43 velero*

root@k8s-master01:/usr/local/src# cp velero-v1.11.1-linux-amd64/velero /usr/local/bin/

root@k8s-master01:/usr/local/src#

Verify that the velero command runs:

root@k8s-master01:/usr/local/src# velero --help

Velero is a tool for managing disaster recovery, specifically for Kubernetes

cluster resources. It provides a simple, configurable, and operationally robust

way to back up your application state and associated data.

If you're familiar with kubectl, Velero supports a similar model, allowing you to

execute commands such as 'velero get backup' and 'velero create schedule'. The same

operations can also be performed as 'velero backup get' and 'velero schedule create'.

Usage:

velero [command]

Available Commands:

backup Work with backups

backup-location Work with backup storage locations

bug Report a Velero bug

client Velero client related commands

completion Generate completion script

create Create velero resources

debug Generate debug bundle

delete Delete velero resources

describe Describe velero resources

get Get velero resources

help Help about any command

install Install Velero

plugin Work with plugins

repo Work with repositories

restore Work with restores

schedule Work with schedules

snapshot-location Work with snapshot locations

uninstall Uninstall Velero

version Print the velero version and associated image

Flags:

--add_dir_header If true, adds the file directory to the header of the log messages

--alsologtostderr log to standard error as well as files (no effect when -logtostderr=true)

--colorized optionalBool Show colored output in TTY. Overrides 'colorized' value from $HOME/.config/velero/config.json if present. Enabled by default

--features stringArray Comma-separated list of features to enable for this Velero process. Combines with values from $HOME/.config/velero/config.json if present

-h, --help help for velero

--kubeconfig string Path to the kubeconfig file to use to talk to the Kubernetes apiserver. If unset, try the environment variable KUBECONFIG, as well as in-cluster configuration

--kubecontext string The context to use to talk to the Kubernetes apiserver. If unset defaults to whatever your current-context is (kubectl config current-context)

--log_backtrace_at traceLocation when logging hits line file:N, emit a stack trace (default :0)

--log_dir string If non-empty, write log files in this directory (no effect when -logtostderr=true)

--log_file string If non-empty, use this log file (no effect when -logtostderr=true)

--log_file_max_size uint Defines the maximum size a log file can grow to (no effect when -logtostderr=true). Unit is megabytes. If the value is 0, the maximum file size is unlimited. (default 1800)

--logtostderr log to standard error instead of files (default true)

-n, --namespace string The namespace in which Velero should operate (default "velero")

--one_output If true, only write logs to their native severity level (vs also writing to each lower severity level; no effect when -logtostderr=true)

--skip_headers If true, avoid header prefixes in the log messages

--skip_log_headers If true, avoid headers when opening log files (no effect when -logtostderr=true)

--stderrthreshold severity logs at or above this threshold go to stderr when writing to files and stderr (no effect when -logtostderr=true or -alsologtostderr=false) (default 2)

-v, --v Level number for the log level verbosity

--vmodule moduleSpec comma-separated list of pattern=N settings for file-filtered logging

Use "velero [command] --help" for more information about a command.

root@k8s-master01:/usr/local/src#

If the velero command runs normally, the velero client tool is ready.

6.2 Configure Velero authentication

6.2.1 Create the Velero working directory

root@k8s-master01:/usr/local/src# mkdir /data/velero -p

root@k8s-master01:/usr/local/src# cd /data/velero/

root@k8s-master01:/data/velero# ll

total 8

drwxr-xr-x 2 root root 4096 Sep 2 12:42 ./

drwxr-xr-x 3 root root 4096 Sep 2 12:42 ../

root@k8s-master01:/data/velero#

6.2.2 Create the credentials file for accessing MinIO

root@k8s-master01:/data/velero# ll

total 12

drwxr-xr-x 2 root root 4096 Sep 2 12:43 ./

drwxr-xr-x 3 root root 4096 Sep 2 12:42 ../

-rw-r--r-- 1 root root 69 Sep 2 12:43 velero-auth.txt

root@k8s-master01:/data/velero# cat velero-auth.txt

[default]

aws_access_key_id = admin

aws_secret_access_key = 12345678

root@k8s-master01:/data/velero#

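The velero-auth.txt file stores the user name and password for accessing the MinIO object storage: aws_access_key_id holds the user name and aws_secret_access_key holds the password. These two key names are fixed and must not be changed.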
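The key names are fixed because velero-auth.txt is an INI-style AWS shared credentials file. Velero's AWS plugin (velero-plugin-for-aws) reads it as such, and it is typically passed in with `velero install --secret-file`. The Python sketch below is an optional sanity check that parses the file with the standard `configparser` module. The `default` profile name matches the file above, and the function name is illustrative.

```python
import configparser


def load_s3_credentials(text, profile="default"):
    """Parse AWS-style credentials text (like velero-auth.txt) and return
    (aws_access_key_id, aws_secret_access_key) for `profile`."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    section = parser[profile]  # KeyError if the profile is missing
    return section["aws_access_key_id"], section["aws_secret_access_key"]


SAMPLE = """\
[default]
aws_access_key_id = admin
aws_secret_access_key = 12345678
"""

if __name__ == "__main__":
    print(load_s3_credentials(SAMPLE))  # ('admin', '12345678')
```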

6.2.3 Prepare the user CSR file

root@k8s-master01:/data/velero# ll

total 16

drwxr-xr-x 2 root root 4096 Sep 2 12:48 ./

drwxr-xr-x 3 root root 4096 Sep 2 12:42 ../

-rw-r--r-- 1 root root 222 Sep 2 12:48 awsuser-csr.json

-rw-r--r-- 1 root root 69 Sep 2 12:43 velero-auth.txt

root@k8s-master01:/data/velero# cat awsuser-csr.json

{

"CN": "awsuser",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "SiChuan",

"L": "GuangYuan",

"O": "k8s",

"OU": "System"

}

]

}

root@k8s-master01:/data/velero#

This file provides the information needed to issue the certificate.
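For X.509 client certificates, the Kubernetes API server uses the certificate's CN as the user name and each O as a group. This certificate will therefore authenticate as user `awsuser` in group `k8s`. If you need request files for several users, the Python sketch below produces the same structure as awsuser-csr.json. The default field values simply copy the file above, and `make_csr` is a hypothetical helper.

```python
import json


def make_csr(cn, country="CN", state="SiChuan", city="GuangYuan",
             org="k8s", unit="System", key_size=2048):
    """Return a cfssl CSR request with the same layout as awsuser-csr.json.
    CN becomes the Kubernetes user name and O the group."""
    return {
        "CN": cn,
        "hosts": [],  # client certificate: no host names needed
        "key": {"algo": "rsa", "size": key_size},
        "names": [{"C": country, "ST": state, "L": city, "O": org, "OU": unit}],
    }


if __name__ == "__main__":
    # Redirect to a file, e.g. `python make_csr.py > awsuser-csr.json`
    print(json.dumps(make_csr("awsuser"), indent=2))
```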

6.2.4 Prepare the certificate signing environment

Install the certificate signing tools:

root@k8s-master01:/data/velero# apt install golang-cfssl

Download cfssl:

root@k8s-master01:/data/velero# wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64

Download cfssljson:

root@k8s-master01:/data/velero# wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64

Download cfssl-certinfo:

root@k8s-master01:/data/velero# wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64

Rename the binaries:

root@k8s-master01:/data/velero# ll

total 40248

drwxr-xr-x 2 root root 4096 Sep 2 12:57 ./

drwxr-xr-x 3 root root 4096 Sep 2 12:42 ../

-rw-r--r-- 1 root root 222 Sep 2 12:48 awsuser-csr.json

-rw-r--r-- 1 root root 13502544 Aug 31 03:00 cfssl-certinfo_1.6.1_linux_amd64

-rw-r--r-- 1 root root 16659824 Aug 31 03:00 cfssl_1.6.1_linux_amd64

-rw-r--r-- 1 root root 11029744 Aug 31 03:00 cfssljson_1.6.1_linux_amd64

-rw-r--r-- 1 root root 69 Sep 2 12:43 velero-auth.txt

root@k8s-master01:/data/velero# mv cfssl-certinfo_1.6.1_linux_amd64 cfssl-certinfo

root@k8s-master01:/data/velero# mv cfssl_1.6.1_linux_amd64 cfssl

root@k8s-master01:/data/velero# mv cfssljson_1.6.1_linux_amd64 cfssljson

root@k8s-master01:/data/velero# ll

total 40248

drwxr-xr-x 2 root root 4096 Sep 2 12:58 ./

drwxr-xr-x 3 root root 4096 Sep 2 12:42 ../

-rw-r--r-- 1 root root 222 Sep 2 12:48 awsuser-csr.json

-rw-r--r-- 1 root root 16659824 Aug 31 03:00 cfssl

-rw-r--r-- 1 root root 13502544 Aug 31 03:00 cfssl-certinfo

-rw-r--r-- 1 root root 11029744 Aug 31 03:00 cfssljson

-rw-r--r-- 1 root root 69 Sep 2 12:43 velero-auth.txt

root@k8s-master01:/data/velero#

Copy the binaries to /usr/local/bin/:

root@k8s-master01:/data/velero# cp cfssl-certinfo cfssl cfssljson /usr/local/bin/

Make them executable:

root@k8s-master01:/data/velero# chmod a+x /usr/local/bin/cfssl*

root@k8s-master01:/data/velero# ll /usr/local/bin/cfssl*

-rwxr-xr-x 1 root root 16659824 Sep 2 12:59 /usr/local/bin/cfssl*

-rwxr-xr-x 1 root root 13502544 Sep 2 12:59 /usr/local/bin/cfssl-certinfo*

-rwxr-xr-x 1 root root 11029744 Sep 2 12:59 /usr/local/bin/cfssljson*

root@k8s-master01:/data/velero#

6.2.5 Sign the certificate

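Copy the ca-config.json that was used to deploy the Kubernetes cluster (kept on the kubeasz deploy node) to /data/velero on the master node (192.168.0.31):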

root@k8s-deploy:~# scp /etc/kubeasz/clusters/k8s-cluster01/ssl/ca-config.json 192.168.0.31:/data/velero

ca-config.json 100% 459 203.8KB/s 00:00

root@k8s-deploy:~#

Verify that ca-config.json was copied:

root@k8s-master01:/data/velero# ll

total 40252

drwxr-xr-x 2 root root 4096 Sep 2 13:03 ./

drwxr-xr-x 3 root root 4096 Sep 2 12:42 ../

-rw-r--r-- 1 root root 222 Sep 2 12:48 awsuser-csr.json

-rw-r--r-- 1 root root 459 Sep 2 13:03 ca-config.json

-rw-r--r-- 1 root root 16659824 Aug 31 03:00 cfssl

-rw-r--r-- 1 root root 13502544 Aug 31 03:00 cfssl-certinfo

-rw-r--r-- 1 root root 11029744 Aug 31 03:00 cfssljson

-rw-r--r-- 1 root root 69 Sep 2 12:43 velero-auth.txt

root@k8s-master01:/data/velero#

Sign the certificate:

root@k8s-master01:/data/velero# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=./ca-config.json -profile=kubernetes ./awsuser-csr.json | cfssljson -bare awsuser

2023/09/02 13:05:37 [INFO] generate received request

2023/09/02 13:05:37 [INFO] received CSR

2023/09/02 13:05:37 [INFO] generating key: rsa-2048

2023/09/02 13:05:38 [INFO] encoded CSR

2023/09/02 13:05:38 [INFO] signed certificate with serial number 309924608852958492895277791638870960844710474947

2023/09/02 13:05:38 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

6.2.6 Verify the certificate

root@k8s-master01:/data/velero# ll

total 40264

drwxr-xr-x 2 root root 4096 Sep 2 13:05 ./

drwxr-xr-x 3 root root 4096 Sep 2 12:42 ../

-rw-r--r-- 1 root root 222 Sep 2 12:48 awsuser-csr.json

-rw------- 1 root root 1679 Sep 2 13:05 awsuser-key.pem

-rw-r--r-- 1 root root 1001 Sep 2 13:05 awsuser.csr

-rw-r--r-- 1 root root 1391 Sep 2 13:05 awsuser.pem

-rw-r--r-- 1 root root 459 Sep 2 13:03 ca-config.json

-rw-r--r-- 1 root root 16659824 Aug 31 03:00 cfssl

-rw-r--r-- 1 root root 13502544 Aug 31 03:00 cfssl-certinfo

-rw-r--r-- 1 root root 11029744 Aug 31 03:00 cfssljson

-rw-r--r-- 1 root root 69 Sep 2 12:43 velero-auth.txt

root@k8s-master01:/data/velero#
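`cfssljson -bare awsuser` writes three files named after the prefix: `awsuser.pem` (the certificate), `awsuser-key.pem` (the private key) and `awsuser.csr` (the request). These are the new entries in the listing above. Below is a small Python check for those files, written as a sketch. `missing_outputs` is a hypothetical helper and is not part of cfssl.

```python
from pathlib import Path


def bare_outputs(prefix):
    """File names written by `cfssljson -bare <prefix>` for a signed cert."""
    return [f"{prefix}.pem", f"{prefix}-key.pem", f"{prefix}.csr"]


def missing_outputs(directory, prefix):
    """Return the expected cfssljson outputs that are absent from `directory`."""
    base = Path(directory)
    return [name for name in bare_outputs(prefix) if not (base / name).is_file()]


if __name__ == "__main__":
    missing = missing_outputs("/data/velero", "awsuser")
    print("all certificate files present" if not missing else f"missing: {missing}")
```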

6.2.7 Copy the certificate to the API server certificate directory

root@k8s-master01:/data/velero# cp awsuser-key.pem /etc/kubernetes/ssl/

root@k8s-master01:/data/velero# cp awsuser.pem /etc/kubernetes/ssl/

root@k8s-master01:/data/velero# ll /etc/kubernetes/ssl/

total 48

drwxr-xr-x 2 root root 4096 Sep 2 13:07 ./

drwxr-xr-x 3 root root 4096 Apr 22 14:56 ../

-rw-r--r-- 1 root root 1679 Apr 22 14:54 aggregator-proxy-key.pem

-rw-r--r-- 1 root root 1387 Apr 22 14:54 aggregator-proxy.pem

-rw------- 1 root root 1679 Sep 2 13:07 awsuser-key.pem

-rw-r--r-- 1 root root 1391 Sep 2 13:07 awsuser.pem

-rw-r--r-- 1 root root 1679 Apr 22 14:10 ca-key.pem

-rw-r--r-- 1 root root 1310 Apr 22 14:10 ca.pem

-rw-r--r-- 1 root root 1679 Apr 22 14:56 kubelet-key.pem

-rw-r--r-- 1 root root 1460 Apr 22 14:56 kubelet.pem

-rw-r--r-- 1 root root 1679 Apr 22 14:54 kubernetes-key.pem

-rw-r--r-- 1 root root 1655 Apr 22 14:54 kubernetes.pem

root@k8s-master01:/data/velero#

6.3 Generate the kubeconfig file for cluster authentication

root@k8s-master01:/data/velero# export KUBE_APISERVER="https://192.168.0.111:6443"

root@k8s-master01:/data/velero# kubectl config set-cluster kubernetes \

> --certificate-authority=/etc/kubernetes/ssl/ca.pem \

> --embed-certs=true \

> --server=${KUBE_APISERVER} \

> --kubeconfig=./awsuser.kubeconfig

Cluster "kubernetes" set.

root@k8s-master01:/data/velero# ll

total 40268

drwxr-xr-x 2 root root 4096 Sep 2 13:12 ./

drwxr-xr-x 3 root root 4096 Sep 2 12:42 ../

-rw-r--r-- 1 root root 222 Sep 2 12:48 awsuser-csr.json

-rw------- 1 root root 1679 Sep 2 13:05 awsuser-key.pem

-rw-r--r-- 1 root root 1001 Sep 2 13:05 awsuser.csr

-rw------- 1 root root 1951 Sep 2 13:12 awsuser.kubeconfig

-rw-r--r-- 1 root root 1391 Sep 2 13:05 awsuser.pem

-rw-r--r-- 1 root root 459 Sep 2 13:03 ca-config.json

-rw-r--r-- 1 root root 16659824 Aug 31 03:00 cfssl

-rw-r--r-- 1 root root 13502544 Aug 31 03:00 cfssl-certinfo

-rw-r--r-- 1 root root 11029744 Aug 31 03:00 cfssljson

-rw-r--r-- 1 root root 69 Sep 2 12:43 velero-auth.txt

root@k8s-master01:/data/velero# cat awsuser.kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURtakNDQW9LZ0F3SUJBZ0lVTW01blNKSUtCdGNmeXY3MVlZZy91QlBsT3JZd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13TkRJeU1UTXpNekF3V2hnUE1qRXlNekF6TWpreE16TXpNREJhTUdReEN6QUoKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJRXdoSVlXNW5XbWh2ZFRFTE1Ba0dBMVVFQnhNQ1dGTXhEREFLQmdOVgpCQW9UQTJzNGN6RVBNQTBHQTFVRUN4TUdVM2x6ZEdWdE1SWXdGQVlEVlFRREV3MXJkV0psY201bGRHVnpMV05oCk1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBcTRmdWtncjl2ditQWVVtQmZnWjUKTVJIOTZRekErMVgvZG5hUlpzN1lPZjZMaEZ5ZWJxUTFlM3k2bmN3Tk90WUkyemJ3SVJKL0c3YTNsTSt0Qk5sTQpwdE5Db1lxalF4WVY2YkpOcGNIRFJldTY0Z1BYcHhHY1FNZGE2Q1VhVTBrNENMZ0I2ZGx1OE8rUTdaL1dNeWhTClZQMWp5dEpnK1I4UGZRUWVzdnlTanBzaUM4cmdUQjc2VWU0ZXJqaEFwb2JSbzRILzN2cGhVUXRLNTBQSWVVNlgKTnpuTVNONmdLMXRqSjZPSStlVkE1dWdTTnFOc3FVSXFHWmhmZXZSeFBhNzVBbDhrbmRxc3cyTm5WSFFOZmpGUApZR3lNOFlncllUWm9sa2RGYk9Wb2g0U3pncTFnclc0dzBpMnpySVlJTzAzNTBEODh4RFRGRTBka3FPSlRVb0JyCmtRSURBUUFCbzBJd1FEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlYKSFE0RUZnUVU5SjZoekJaOTNZMklac1ZYYUYwZk1uZ0crS1V3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUZLNwpjZ3l3UnI4aWt4NmpWMUYwVUNJRGxEN0FPQ3dTcE1Odithd1Zyd2k4Mk5xL3hpL2RjaGU1TjhJUkFEUkRQTHJUClRRS2M4M2FURXM1dnpKczd5Nnl6WHhEbUZocGxrY3NoenVhQkdFSkhpbGpuSHJ0Z09tL1ZQck5QK3hhWXdUNHYKZFNOdEIrczgxNGh6OWhaSitmTHRMb1RBS2tMUjVMRjkyQjF2c0JsVnlkaUhLSnF6MCtORkdJMzdiY1pvc0cxdwpwbVpROHgyWUFxWHE2VFlUQnoxLzR6UGlSM3FMQmxtRkNMZVJCa1RJb2VhUkFxU2ZkeDRiVlhGeTlpQ1lnTHU4CjVrcmQzMEdmZU5pRUpZVWJtZzNxcHNVSUlQTmUvUDdHNU0raS9GSlpDcFBOQ3Y4aS9MQ0Z2cVhPbThvYmdYYm8KeDNsZWpWVlZ6eG9yNEtOd3pUZz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.0.111:6443

name: kubernetes

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

root@k8s-master01:/data/velero#
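Because `--embed-certs=true` was used, `certificate-authority-data` holds the base64-encoded contents of ca.pem rather than a file path. The Python sketch below decodes such a field back to PEM text, so the result can be compared with /etc/kubernetes/ssl/ca.pem. The helper name is illustrative.

```python
import base64


def decode_embedded_pem(data):
    """Decode a kubeconfig *-data field (base64 of a PEM file) to PEM text."""
    # validate=True rejects non-base64 characters (binascii.Error is a ValueError)
    pem = base64.b64decode(data, validate=True).decode("ascii")
    if not pem.startswith("-----BEGIN "):
        raise ValueError("decoded data does not look like PEM")
    return pem


if __name__ == "__main__":
    # Hypothetical comparison against the source file:
    # value = <certificate-authority-data from awsuser.kubeconfig>
    # same = decode_embedded_pem(value) == open("/etc/kubernetes/ssl/ca.pem").read()
    print(decode_embedded_pem("LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0t"))
```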

6.3.1 Set the client certificate credentials

root@k8s-master01:/data/velero# kubectl config set-credentials awsuser \

> --client-certificate=/etc/kubernetes/ssl/awsuser.pem \

> --client-key=/etc/kubernetes/ssl/awsuser-key.pem \

> --embed-certs=true \

> --kubeconfig=./awsuser.kubeconfig

User "awsuser" set.

root@k8s-master01:/data/velero# cat awsuser.kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURtakNDQW9LZ0F3SUJBZ0lVTW01blNKSUtCdGNmeXY3MVlZZy91QlBsT3JZd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13TkRJeU1UTXpNekF3V2hnUE1qRXlNekF6TWpreE16TXpNREJhTUdReEN6QUoKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJRXdoSVlXNW5XbWh2ZFRFTE1Ba0dBMVVFQnhNQ1dGTXhEREFLQmdOVgpCQW9UQTJzNGN6RVBNQTBHQTFVRUN4TUdVM2x6ZEdWdE1SWXdGQVlEVlFRREV3MXJkV0psY201bGRHVnpMV05oCk1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBcTRmdWtncjl2ditQWVVtQmZnWjUKTVJIOTZRekErMVgvZG5hUlpzN1lPZjZMaEZ5ZWJxUTFlM3k2bmN3Tk90WUkyemJ3SVJKL0c3YTNsTSt0Qk5sTQpwdE5Db1lxalF4WVY2YkpOcGNIRFJldTY0Z1BYcHhHY1FNZGE2Q1VhVTBrNENMZ0I2ZGx1OE8rUTdaL1dNeWhTClZQMWp5dEpnK1I4UGZRUWVzdnlTanBzaUM4cmdUQjc2VWU0ZXJqaEFwb2JSbzRILzN2cGhVUXRLNTBQSWVVNlgKTnpuTVNONmdLMXRqSjZPSStlVkE1dWdTTnFOc3FVSXFHWmhmZXZSeFBhNzVBbDhrbmRxc3cyTm5WSFFOZmpGUApZR3lNOFlncllUWm9sa2RGYk9Wb2g0U3pncTFnclc0dzBpMnpySVlJTzAzNTBEODh4RFRGRTBka3FPSlRVb0JyCmtRSURBUUFCbzBJd1FEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlYKSFE0RUZnUVU5SjZoekJaOTNZMklac1ZYYUYwZk1uZ0crS1V3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUZLNwpjZ3l3UnI4aWt4NmpWMUYwVUNJRGxEN0FPQ3dTcE1Odithd1Zyd2k4Mk5xL3hpL2RjaGU1TjhJUkFEUkRQTHJUClRRS2M4M2FURXM1dnpKczd5Nnl6WHhEbUZocGxrY3NoenVhQkdFSkhpbGpuSHJ0Z09tL1ZQck5QK3hhWXdUNHYKZFNOdEIrczgxNGh6OWhaSitmTHRMb1RBS2tMUjVMRjkyQjF2c0JsVnlkaUhLSnF6MCtORkdJMzdiY1pvc0cxdwpwbVpROHgyWUFxWHE2VFlUQnoxLzR6UGlSM3FMQmxtRkNMZVJCa1RJb2VhUkFxU2ZkeDRiVlhGeTlpQ1lnTHU4CjVrcmQzMEdmZU5pRUpZVWJtZzNxcHNVSUlQTmUvUDdHNU0raS9GSlpDcFBOQ3Y4aS9MQ0Z2cVhPbThvYmdYYm8KeDNsZWpWVlZ6eG9yNEtOd3pUZz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.0.111:6443

name: kubernetes

contexts: null

current-context: ""

kind: Config

preferences: {}

users:

- name: awsuser

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQxekNDQXIrZ0F3SUJBZ0lVTmttQUJ6ZjVhdCtoZC9vYmtONXBVV3JWOU1Nd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13T1RBeU1UTXdNVEF3V2hnUE1qQTNNekE0TWpBeE16QXhNREJhTUdReEN6QUoKQmdOVkJBWVRBa05PTVJBd0RnWURWUVFJRXdkVGFVTm9kV0Z1TVJJd0VBWURWUVFIRXdsSGRXRnVaMWwxWVc0eApEREFLQmdOVkJBb1RBMnM0Y3pFUE1BMEdBMVVFQ3hNR1UzbHpkR1Z0TVJBd0RnWURWUVFERXdkaGQzTjFjMlZ5Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBeVU3ZWtvQ0ZFS0Jnd3Z1SU12ekkKSHNqRmFZNzNmTm5aWVhqU0lsVEJKeDNqY1dYVGh1eno5a013WktPRFNybmxWcTF0SnZ1dHRVNWpCaHRielJKOAorVVFYTkFhTVYxOFhVaGdvSmJZaHRCWStpSGhjK1dBNTYwaEEybEJaaFU2RGZzQjNVam9RbjNKdU02YUQ0eHBECjNIZG1TUGJ0am0xRkVWaTFkVHpSeVhDSWxrTkJFR3hLam5MSjZ5dC9YcnVuNW9wdjBudE9jQWw0VWJSWHFGejMKaTlBS3ArOUhENUV6bE5QaVUwY1FlZkxERGEwRXp3N1NyaDFpNG9rdnhVSnhyd0FhcTdaK1Q5blVRWkV5a0RpNQpuVG1NNlNucEh5aFltNW5sd2FRdUZvekY2bWt4UzFPYXJJMStiZGhYSHU1T3ViYWJKOXY0bVA5TDhYRFY3TDd6CkxRSURBUUFCbzM4d2ZUQU9CZ05WSFE4QkFmOEVCQU1DQmFBd0hRWURWUjBsQkJZd0ZBWUlLd1lCQlFVSEF3RUcKQ0NzR0FRVUZCd01DTUF3R0ExVWRFd0VCL3dRQ01BQXdIUVlEVlIwT0JCWUVGREtyYkphanpDTTNzM2ZHUzBtUwpLV0lHbm5XM01COEdBMVVkSXdRWU1CYUFGUFNlb2N3V2ZkMk5pR2JGVjJoZEh6SjRCdmlsTUEwR0NTcUdTSWIzCkRRRUJDd1VBQTRJQkFRQXd4b043eUNQZzFRQmJRcTNWT1JYUFVvcXRDVjhSUHFjd3V4bTJWZkVmVmdPMFZYanQKTHR5aEl2RDlubEsyazNmTFpWTVc2MWFzbVhtWkttUTh3YkZtL1RieE83ZkdJSWdpSzJKOGpWWHZYRnhNeExZNQpRVjcvd3QxUUluWjJsTjBsM0c3TGhkYjJ4UjFORmd1eWNXdWtWV3JKSWtpcU1Ma0lOLzdPSFhtSFZXazV1a1ZlCmNoYmVIdnJSSXRRNHBPYjlFZVgzTUxiZXBkRjJ4TWs5NmZrVXJGWmhKYWREVnB5NXEwbHFpUVJkMVpIWk4xSkMKWVBrZGRXdVQxbHNXaWJzN3BWTHRXMXlnV0JlS2hKQ0FVeTlUZEZ1WEt1QUZvdVJKUUJWQUs4dTFHRU1aL2JEYgp2eXRxN2N6ZndWOFNreVNpKzZHcldZRVBXUXllUEVEWjBPU1oKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBeVU3ZWtvQ0ZFS0Jnd3Z1SU12eklIc2pGYVk3M2ZOblpZWGpTSWxUQkp4M2pjV1hUCmh1eno5a013WktPRFNybmxWcTF0SnZ1dHRVNWpCaHRielJKOCtVUVhOQWFNVjE4WFVoZ29KYllodEJZK2lIaGMKK1dBNTYwaEEybEJaaFU2RGZzQjNVam9RbjNKdU02YUQ0eHBEM0hkbVNQYnRqbTFGRVZpMWRUelJ5WENJbGtOQgpFR3hLam5MSjZ5dC9YcnVuNW9wdjBudE9jQWw0VWJSWHFGejNpOUFLcCs5SEQ1RXpsTlBpVTBjUWVmTEREYTBFCnp3N1NyaDFpNG9rdnhVSnhyd0FhcTdaK1Q5blVRWkV5a0RpNW5UbU02U25wSHloWW01bmx3YVF1Rm96RjZta3gKUzFPYXJJMStiZGhYSHU1T3ViYWJKOXY0bVA5TDhYRFY3TDd6TFFJREFRQUJBb0lCQVFDTWVnb2RSNndUcHliKwp5WklJcXBkbnpBamVtWktnd0ZET2tRWnFTS1NsREZsY0Y0ZWRueHE3WGJXV2RQZzRuREtxNHNqSnJGVlNzUW12CkNFWnVlNWxVUkt6QWRGVlkzeFdpQnhOMUJYek5jN3hkZFVqRUNOOUNEYUNiOS9nUWEzS2RiK2VVTE1yT3lZYVgKYW5xY2J3YXVBWEFTT0tZYmZxcjA2T2R2a1dwLzIxWnF2bnAvdmZrN3dIYzduUktLWmt1ZVg0bVExdFFqdVBoZQpDQXN5WWZOeWM0VjVyUDF1K3AzTGU4Ly9sTXZQZ0wydFBib3NaaGYvM0dCUGpPZHFabVdnL0R5blhzN21qcnhqCng2OHJOcHIxU2ZhQUNOMjNQdE9HbXcreXh4NjdENTNUSVJUaXZIY2Izd0FIMnNRdkVzbG9HN0lMU0d2THJ1S3IKS0c2RkQwb0JBb0dCQVBsWFdydWxQa3B6bzl5ZUJnd3hudmlyN2x2THZrVkV1Q3ZmRVg2MHViYm5HOVVsZm1BQgpEaVduOFcvUkVHVjE0cFBtcjQ2eE5QLzkrb1p3cDNRNUMzbFNocENVWEVxZjVHTzBUSXdSb1NVdndKcUo2UHc0Cm4yb0xEbXBNS3k5bkZEcFFCQTFoYUZSQnZJd3ZxOXdHc0NmK3Fyc3pTNHM2bHp1Qm1KVDZXYUdCQW9HQkFNNnYKSWJrSXJnVW54NlpuNngyRjVmSEQ0YVFydWRLbWFScnN3NzV4dFY0ZVlEdU1ZVVIrYWxwY2llVDZ4Y1Z3NUFvbQp6Q2o1VUNsejZJZ3pJc2MyTGRMR3JOeDVrcUFTMzA1K0UxaVdEeGh6UG44bUhETkI2NGY5WTVYdjJ6bm9maWVsCmNKd2pBaE5OZlR1ck45ODR5RXpQL0tHa1NsbGNxdHFsOVF6VVZrK3RBb0dCQU81c0RGTy85NmRqbW4yY0VYWloKZ0lTU2l2TDJDUFBkZVNwaVBDMW5qT29MWmI3VUFscTB4NTFVVVBhMTk3SzlIYktGZEx2Q1VVYXp5bm9CZ080TwptaDBodjVEQ2ZOblN1S1pxUW9QeFc2RGVYNUttYXNXN014eEloRGs2cWxUQ2dVSWRQeks0UVBYSWdnMmVpL3h4CjNNSHhyN29mbTQzL3NacnlHai9pZ0JDQkFvR0FWb3BzRTE3NEJuNldrUzIzKzUraUhXNElYOFpUUTBtY2ZzS2UKWDNLYkgzS1dscmg3emNNazR2c1dYZ05HcGhwVDBaQlhNZHphWE5FRWoycmg2QW5lZS8vbVIxYThOenhQdGowQgorcml5VDJtSnhKRi9nMUxadlJJekRZZm1Ba1EvOW5mR1JBcEFoemFOOWxzRnhQaXduY0VFcGVYMW41ODJodUN3ClQ1UGxJKzBDZ1lFQTN1WmptcXl1U0ZsUGR0Q3NsN1ZDUWc4K0N6L1hBTUNQZGt0SmF1bng5VWxtWVZXVFQzM2oKby9uVVRPVHY1TWZPTm9wejVYOXM4SCsyeXFOdWpna2NHZmFTeHFTNlBkbWNhcTJGMTYxTDdTR0JDb2w1MVQ5ZwpXQkRObnlqOFprSkQxd2pRNkNDWG4zNDZIMS9YREZjbmhnc2c2UHRjTGh3RC8yS0l3eFVmdzFBPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

root@k8s-master01:/data/velero#

6.3.2 Set the context parameters

root@k8s-master01:/data/velero# kubectl config set-context kubernetes \

> --cluster=kubernetes \

> --user=awsuser \

> --namespace=velero-system \

> --kubeconfig=./awsuser.kubeconfig

Context "kubernetes" created.

root@k8s-master01:/data/velero# cat awsuser.kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURtakNDQW9LZ0F3SUJBZ0lVTW01blNKSUtCdGNmeXY3MVlZZy91QlBsT3JZd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13TkRJeU1UTXpNekF3V2hnUE1qRXlNekF6TWpreE16TXpNREJhTUdReEN6QUoKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJRXdoSVlXNW5XbWh2ZFRFTE1Ba0dBMVVFQnhNQ1dGTXhEREFLQmdOVgpCQW9UQTJzNGN6RVBNQTBHQTFVRUN4TUdVM2x6ZEdWdE1SWXdGQVlEVlFRREV3MXJkV0psY201bGRHVnpMV05oCk1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBcTRmdWtncjl2ditQWVVtQmZnWjUKTVJIOTZRekErMVgvZG5hUlpzN1lPZjZMaEZ5ZWJxUTFlM3k2bmN3Tk90WUkyemJ3SVJKL0c3YTNsTSt0Qk5sTQpwdE5Db1lxalF4WVY2YkpOcGNIRFJldTY0Z1BYcHhHY1FNZGE2Q1VhVTBrNENMZ0I2ZGx1OE8rUTdaL1dNeWhTClZQMWp5dEpnK1I4UGZRUWVzdnlTanBzaUM4cmdUQjc2VWU0ZXJqaEFwb2JSbzRILzN2cGhVUXRLNTBQSWVVNlgKTnpuTVNONmdLMXRqSjZPSStlVkE1dWdTTnFOc3FVSXFHWmhmZXZSeFBhNzVBbDhrbmRxc3cyTm5WSFFOZmpGUApZR3lNOFlncllUWm9sa2RGYk9Wb2g0U3pncTFnclc0dzBpMnpySVlJTzAzNTBEODh4RFRGRTBka3FPSlRVb0JyCmtRSURBUUFCbzBJd1FEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlYKSFE0RUZnUVU5SjZoekJaOTNZMklac1ZYYUYwZk1uZ0crS1V3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUZLNwpjZ3l3UnI4aWt4NmpWMUYwVUNJRGxEN0FPQ3dTcE1Odithd1Zyd2k4Mk5xL3hpL2RjaGU1TjhJUkFEUkRQTHJUClRRS2M4M2FURXM1dnpKczd5Nnl6WHhEbUZocGxrY3NoenVhQkdFSkhpbGpuSHJ0Z09tL1ZQck5QK3hhWXdUNHYKZFNOdEIrczgxNGh6OWhaSitmTHRMb1RBS2tMUjVMRjkyQjF2c0JsVnlkaUhLSnF6MCtORkdJMzdiY1pvc0cxdwpwbVpROHgyWUFxWHE2VFlUQnoxLzR6UGlSM3FMQmxtRkNMZVJCa1RJb2VhUkFxU2ZkeDRiVlhGeTlpQ1lnTHU4CjVrcmQzMEdmZU5pRUpZVWJtZzNxcHNVSUlQTmUvUDdHNU0raS9GSlpDcFBOQ3Y4aS9MQ0Z2cVhPbThvYmdYYm8KeDNsZWpWVlZ6eG9yNEtOd3pUZz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.0.111:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

namespace: velero-system

user: awsuser

name: kubernetes

current-context: ""

kind: Config

preferences: {}

users:

- name: awsuser

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQxekNDQXIrZ0F3SUJBZ0lVTmttQUJ6ZjVhdCtoZC9vYmtONXBVV3JWOU1Nd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13T1RBeU1UTXdNVEF3V2hnUE1qQTNNekE0TWpBeE16QXhNREJhTUdReEN6QUoKQmdOVkJBWVRBa05PTVJBd0RnWURWUVFJRXdkVGFVTm9kV0Z1TVJJd0VBWURWUVFIRXdsSGRXRnVaMWwxWVc0eApEREFLQmdOVkJBb1RBMnM0Y3pFUE1BMEdBMVVFQ3hNR1UzbHpkR1Z0TVJBd0RnWURWUVFERXdkaGQzTjFjMlZ5Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBeVU3ZWtvQ0ZFS0Jnd3Z1SU12ekkKSHNqRmFZNzNmTm5aWVhqU0lsVEJKeDNqY1dYVGh1eno5a013WktPRFNybmxWcTF0SnZ1dHRVNWpCaHRielJKOAorVVFYTkFhTVYxOFhVaGdvSmJZaHRCWStpSGhjK1dBNTYwaEEybEJaaFU2RGZzQjNVam9RbjNKdU02YUQ0eHBECjNIZG1TUGJ0am0xRkVWaTFkVHpSeVhDSWxrTkJFR3hLam5MSjZ5dC9YcnVuNW9wdjBudE9jQWw0VWJSWHFGejMKaTlBS3ArOUhENUV6bE5QaVUwY1FlZkxERGEwRXp3N1NyaDFpNG9rdnhVSnhyd0FhcTdaK1Q5blVRWkV5a0RpNQpuVG1NNlNucEh5aFltNW5sd2FRdUZvekY2bWt4UzFPYXJJMStiZGhYSHU1T3ViYWJKOXY0bVA5TDhYRFY3TDd6CkxRSURBUUFCbzM4d2ZUQU9CZ05WSFE4QkFmOEVCQU1DQmFBd0hRWURWUjBsQkJZd0ZBWUlLd1lCQlFVSEF3RUcKQ0NzR0FRVUZCd01DTUF3R0ExVWRFd0VCL3dRQ01BQXdIUVlEVlIwT0JCWUVGREtyYkphanpDTTNzM2ZHUzBtUwpLV0lHbm5XM01COEdBMVVkSXdRWU1CYUFGUFNlb2N3V2ZkMk5pR2JGVjJoZEh6SjRCdmlsTUEwR0NTcUdTSWIzCkRRRUJDd1VBQTRJQkFRQXd4b043eUNQZzFRQmJRcTNWT1JYUFVvcXRDVjhSUHFjd3V4bTJWZkVmVmdPMFZYanQKTHR5aEl2RDlubEsyazNmTFpWTVc2MWFzbVhtWkttUTh3YkZtL1RieE83ZkdJSWdpSzJKOGpWWHZYRnhNeExZNQpRVjcvd3QxUUluWjJsTjBsM0c3TGhkYjJ4UjFORmd1eWNXdWtWV3JKSWtpcU1Ma0lOLzdPSFhtSFZXazV1a1ZlCmNoYmVIdnJSSXRRNHBPYjlFZVgzTUxiZXBkRjJ4TWs5NmZrVXJGWmhKYWREVnB5NXEwbHFpUVJkMVpIWk4xSkMKWVBrZGRXdVQxbHNXaWJzN3BWTHRXMXlnV0JlS2hKQ0FVeTlUZEZ1WEt1QUZvdVJKUUJWQUs4dTFHRU1aL2JEYgp2eXRxN2N6ZndWOFNreVNpKzZHcldZRVBXUXllUEVEWjBPU1oKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBeVU3ZWtvQ0ZFS0Jnd3Z1SU12eklIc2pGYVk3M2ZOblpZWGpTSWxUQkp4M2pjV1hUCmh1eno5a013WktPRFNybmxWcTF0SnZ1dHRVNWpCaHRielJKOCtVUVhOQWFNVjE4WFVoZ29KYllodEJZK2lIaGMKK1dBNTYwaEEybEJaaFU2RGZzQjNVam9RbjNKdU02YUQ0eHBEM0hkbVNQYnRqbTFGRVZpMWRUelJ5WENJbGtOQgpFR3hLam5MSjZ5dC9YcnVuNW9wdjBudE9jQWw0VWJSWHFGejNpOUFLcCs5SEQ1RXpsTlBpVTBjUWVmTEREYTBFCnp3N1NyaDFpNG9rdnhVSnhyd0FhcTdaK1Q5blVRWkV5a0RpNW5UbU02U25wSHloWW01bmx3YVF1Rm96RjZta3gKUzFPYXJJMStiZGhYSHU1T3ViYWJKOXY0bVA5TDhYRFY3TDd6TFFJREFRQUJBb0lCQVFDTWVnb2RSNndUcHliKwp5WklJcXBkbnpBamVtWktnd0ZET2tRWnFTS1NsREZsY0Y0ZWRueHE3WGJXV2RQZzRuREtxNHNqSnJGVlNzUW12CkNFWnVlNWxVUkt6QWRGVlkzeFdpQnhOMUJYek5jN3hkZFVqRUNOOUNEYUNiOS9nUWEzS2RiK2VVTE1yT3lZYVgKYW5xY2J3YXVBWEFTT0tZYmZxcjA2T2R2a1dwLzIxWnF2bnAvdmZrN3dIYzduUktLWmt1ZVg0bVExdFFqdVBoZQpDQXN5WWZOeWM0VjVyUDF1K3AzTGU4Ly9sTXZQZ0wydFBib3NaaGYvM0dCUGpPZHFabVdnL0R5blhzN21qcnhqCng2OHJOcHIxU2ZhQUNOMjNQdE9HbXcreXh4NjdENTNUSVJUaXZIY2Izd0FIMnNRdkVzbG9HN0lMU0d2THJ1S3IKS0c2RkQwb0JBb0dCQVBsWFdydWxQa3B6bzl5ZUJnd3hudmlyN2x2THZrVkV1Q3ZmRVg2MHViYm5HOVVsZm1BQgpEaVduOFcvUkVHVjE0cFBtcjQ2eE5QLzkrb1p3cDNRNUMzbFNocENVWEVxZjVHTzBUSXdSb1NVdndKcUo2UHc0Cm4yb0xEbXBNS3k5bkZEcFFCQTFoYUZSQnZJd3ZxOXdHc0NmK3Fyc3pTNHM2bHp1Qm1KVDZXYUdCQW9HQkFNNnYKSWJrSXJnVW54NlpuNngyRjVmSEQ0YVFydWRLbWFScnN3NzV4dFY0ZVlEdU1ZVVIrYWxwY2llVDZ4Y1Z3NUFvbQp6Q2o1VUNsejZJZ3pJc2MyTGRMR3JOeDVrcUFTMzA1K0UxaVdEeGh6UG44bUhETkI2NGY5WTVYdjJ6bm9maWVsCmNKd2pBaE5OZlR1ck45ODR5RXpQL0tHa1NsbGNxdHFsOVF6VVZrK3RBb0dCQU81c0RGTy85NmRqbW4yY0VYWloKZ0lTU2l2TDJDUFBkZVNwaVBDMW5qT29MWmI3VUFscTB4NTFVVVBhMTk3SzlIYktGZEx2Q1VVYXp5bm9CZ080TwptaDBodjVEQ2ZOblN1S1pxUW9QeFc2RGVYNUttYXNXN014eEloRGs2cWxUQ2dVSWRQeks0UVBYSWdnMmVpL3h4CjNNSHhyN29mbTQzL3NacnlHai9pZ0JDQkFvR0FWb3BzRTE3NEJuNldrUzIzKzUraUhXNElYOFpUUTBtY2ZzS2UKWDNLYkgzS1dscmg3emNNazR2c1dYZ05HcGhwVDBaQlhNZHphWE5FRWoycmg2QW5lZS8vbVIxYThOenhQdGowQgorcml5VDJtSnhKRi9nMUxadlJJekRZZm1Ba1EvOW5mR1JBcEFoemFOOWxzRnhQaXduY0VFcGVYMW41ODJodUN3ClQ1UGxJKzBDZ1lFQTN1WmptcXl1U0ZsUGR0Q3NsN1ZDUWc4K0N6L1hBTUNQZGt0SmF1bng5VWxtWVZXVFQzM2oKby9uVVRPVHY1TWZPTm9wejVYOXM4SCsyeXFOdWpna2NHZmFTeHFTNlBkbWNhcTJGMTYxTDdTR0JDb2w1MVQ5ZwpXQkRObnlqOFprSkQxd2pRNkNDWG4zNDZIMS9YREZjbmhnc2c2UHRjTGh3RC8yS0l3eFVmdzFBPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

root@k8s-master01:/data/velero#
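At this point the context exists, but `current-context` is still empty, so the file does not yet specify which context to use by default. The next step fixes that. The Python sketch below shows how a client resolves a context to an API server, user and namespace. It uses a plain dict that mirrors the kubeconfig above, with the certificate data left out. It illustrates the lookup only and is not kubectl's actual code.

```python
def _find(entries, name, key):
    """Return entry[key] for the kubeconfig list entry whose name matches."""
    for entry in entries or []:
        if entry.get("name") == name:
            return entry[key]
    raise KeyError(f"{key} {name!r} not found")


def resolve_context(config, name=None):
    """Resolve a kubeconfig context to its API server, user and namespace.
    Falls back to `current-context` when no name is given."""
    ctx_name = name or config.get("current-context")
    if not ctx_name:
        raise ValueError("no context selected: current-context is empty")
    ctx = _find(config.get("contexts"), ctx_name, "context")
    cluster = _find(config.get("clusters"), ctx["cluster"], "cluster")
    return {
        "server": cluster["server"],
        "user": ctx["user"],
        "namespace": ctx.get("namespace", "default"),
    }


# Mirrors awsuser.kubeconfig after 6.3.2 (certificate data omitted).
CONFIG = {
    "clusters": [{"name": "kubernetes",
                  "cluster": {"server": "https://192.168.0.111:6443"}}],
    "contexts": [{"name": "kubernetes",
                  "context": {"cluster": "kubernetes",
                              "namespace": "velero-system",
                              "user": "awsuser"}}],
    "current-context": "",
}

if __name__ == "__main__":
    print(resolve_context(CONFIG, "kubernetes"))
```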

6.3.3 Set the default context

root@k8s-master01:/data/velero# kubectl config use-context kubernetes --kubeconfig=awsuser.kubeconfig

Switched to context "kubernetes".

root@k8s-master01:/data/velero# cat awsuser.kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURtakNDQW9LZ0F3SUJBZ0lVTW01blNKSUtCdGNmeXY3MVlZZy91QlBsT3JZd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13TkRJeU1UTXpNekF3V2hnUE1qRXlNekF6TWpreE16TXpNREJhTUdReEN6QUoKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJRXdoSVlXNW5XbWh2ZFRFTE1Ba0dBMVVFQnhNQ1dGTXhEREFLQmdOVgpCQW9UQTJzNGN6RVBNQTBHQTFVRUN4TUdVM2x6ZEdWdE1SWXdGQVlEVlFRREV3MXJkV0psY201bGRHVnpMV05oCk1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBcTRmdWtncjl2ditQWVVtQmZnWjUKTVJIOTZRekErMVgvZG5hUlpzN1lPZjZMaEZ5ZWJxUTFlM3k2bmN3Tk90WUkyemJ3SVJKL0c3YTNsTSt0Qk5sTQpwdE5Db1lxalF4WVY2YkpOcGNIRFJldTY0Z1BYcHhHY1FNZGE2Q1VhVTBrNENMZ0I2ZGx1OE8rUTdaL1dNeWhTClZQMWp5dEpnK1I4UGZRUWVzdnlTanBzaUM4cmdUQjc2VWU0ZXJqaEFwb2JSbzRILzN2cGhVUXRLNTBQSWVVNlgKTnpuTVNONmdLMXRqSjZPSStlVkE1dWdTTnFOc3FVSXFHWmhmZXZSeFBhNzVBbDhrbmRxc3cyTm5WSFFOZmpGUApZR3lNOFlncllUWm9sa2RGYk9Wb2g0U3pncTFnclc0dzBpMnpySVlJTzAzNTBEODh4RFRGRTBka3FPSlRVb0JyCmtRSURBUUFCbzBJd1FEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlYKSFE0RUZnUVU5SjZoekJaOTNZMklac1ZYYUYwZk1uZ0crS1V3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUZLNwpjZ3l3UnI4aWt4NmpWMUYwVUNJRGxEN0FPQ3dTcE1Odithd1Zyd2k4Mk5xL3hpL2RjaGU1TjhJUkFEUkRQTHJUClRRS2M4M2FURXM1dnpKczd5Nnl6WHhEbUZocGxrY3NoenVhQkdFSkhpbGpuSHJ0Z09tL1ZQck5QK3hhWXdUNHYKZFNOdEIrczgxNGh6OWhaSitmTHRMb1RBS2tMUjVMRjkyQjF2c0JsVnlkaUhLSnF6MCtORkdJMzdiY1pvc0cxdwpwbVpROHgyWUFxWHE2VFlUQnoxLzR6UGlSM3FMQmxtRkNMZVJCa1RJb2VhUkFxU2ZkeDRiVlhGeTlpQ1lnTHU4CjVrcmQzMEdmZU5pRUpZVWJtZzNxcHNVSUlQTmUvUDdHNU0raS9GSlpDcFBOQ3Y4aS9MQ0Z2cVhPbThvYmdYYm8KeDNsZWpWVlZ6eG9yNEtOd3pUZz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.0.111:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

namespace: velero-system

user: awsuser

name: kubernetes

current-context: kubernetes

kind: Config

preferences: {}

users:

- name: awsuser

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQxekNDQXIrZ0F3SUJBZ0lVTmttQUJ6ZjVhdCtoZC9vYmtONXBVV3JWOU1Nd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13T1RBeU1UTXdNVEF3V2hnUE1qQTNNekE0TWpBeE16QXhNREJhTUdReEN6QUoKQmdOVkJBWVRBa05PTVJBd0RnWURWUVFJRXdkVGFVTm9kV0Z1TVJJd0VBWURWUVFIRXdsSGRXRnVaMWwxWVc0eApEREFLQmdOVkJBb1RBMnM0Y3pFUE1BMEdBMVVFQ3hNR1UzbHpkR1Z0TVJBd0RnWURWUVFERXdkaGQzTjFjMlZ5Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBeVU3ZWtvQ0ZFS0Jnd3Z1SU12ekkKSHNqRmFZNzNmTm5aWVhqU0lsVEJKeDNqY1dYVGh1eno5a013WktPRFNybmxWcTF0SnZ1dHRVNWpCaHRielJKOAorVVFYTkFhTVYxOFhVaGdvSmJZaHRCWStpSGhjK1dBNTYwaEEybEJaaFU2RGZzQjNVam9RbjNKdU02YUQ0eHBECjNIZG1TUGJ0am0xRkVWaTFkVHpSeVhDSWxrTkJFR3hLam5MSjZ5dC9YcnVuNW9wdjBudE9jQWw0VWJSWHFGejMKaTlBS3ArOUhENUV6bE5QaVUwY1FlZkxERGEwRXp3N1NyaDFpNG9rdnhVSnhyd0FhcTdaK1Q5blVRWkV5a0RpNQpuVG1NNlNucEh5aFltNW5sd2FRdUZvekY2bWt4UzFPYXJJMStiZGhYSHU1T3ViYWJKOXY0bVA5TDhYRFY3TDd6CkxRSURBUUFCbzM4d2ZUQU9CZ05WSFE4QkFmOEVCQU1DQmFBd0hRWURWUjBsQkJZd0ZBWUlLd1lCQlFVSEF3RUcKQ0NzR0FRVUZCd01DTUF3R0ExVWRFd0VCL3dRQ01BQXdIUVlEVlIwT0JCWUVGREtyYkphanpDTTNzM2ZHUzBtUwpLV0lHbm5XM01COEdBMVVkSXdRWU1CYUFGUFNlb2N3V2ZkMk5pR2JGVjJoZEh6SjRCdmlsTUEwR0NTcUdTSWIzCkRRRUJDd1VBQTRJQkFRQXd4b043eUNQZzFRQmJRcTNWT1JYUFVvcXRDVjhSUHFjd3V4bTJWZkVmVmdPMFZYanQKTHR5aEl2RDlubEsyazNmTFpWTVc2MWFzbVhtWkttUTh3YkZtL1RieE83ZkdJSWdpSzJKOGpWWHZYRnhNeExZNQpRVjcvd3QxUUluWjJsTjBsM0c3TGhkYjJ4UjFORmd1eWNXdWtWV3JKSWtpcU1Ma0lOLzdPSFhtSFZXazV1a1ZlCmNoYmVIdnJSSXRRNHBPYjlFZVgzTUxiZXBkRjJ4TWs5NmZrVXJGWmhKYWREVnB5NXEwbHFpUVJkMVpIWk4xSkMKWVBrZGRXdVQxbHNXaWJzN3BWTHRXMXlnV0JlS2hKQ0FVeTlUZEZ1WEt1QUZvdVJKUUJWQUs4dTFHRU1aL2JEYgp2eXRxN2N6ZndWOFNreVNpKzZHcldZRVBXUXllUEVEWjBPU1oKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBeVU3ZWtvQ0ZFS0Jnd3Z1SU12eklIc2pGYVk3M2ZOblpZWGpTSWxUQkp4M2pjV1hUCmh1eno5a013WktPRFNybmxWcTF0SnZ1dHRVNWpCaHRielJKOCtVUVhOQWFNVjE4WFVoZ29KYllodEJZK2lIaGMKK1dBNTYwaEEybEJaaFU2RGZzQjNVam9RbjNKdU02YUQ0eHBEM0hkbVNQYnRqbTFGRVZpMWRUelJ5WENJbGtOQgpFR3hLam5MSjZ5dC9YcnVuNW9wdjBudE9jQWw0VWJSWHFGejNpOUFLcCs5SEQ1RXpsTlBpVTBjUWVmTEREYTBFCnp3N1NyaDFpNG9rdnhVSnhyd0FhcTdaK1Q5blVRWkV5a0RpNW5UbU02U25wSHloWW01bmx3YVF1Rm96RjZta3gKUzFPYXJJMStiZGhYSHU1T3ViYWJKOXY0bVA5TDhYRFY3TDd6TFFJREFRQUJBb0lCQVFDTWVnb2RSNndUcHliKwp5WklJcXBkbnpBamVtWktnd0ZET2tRWnFTS1NsREZsY0Y0ZWRueHE3WGJXV2RQZzRuREtxNHNqSnJGVlNzUW12CkNFWnVlNWxVUkt6QWRGVlkzeFdpQnhOMUJYek5jN3hkZFVqRUNOOUNEYUNiOS9nUWEzS2RiK2VVTE1yT3lZYVgKYW5xY2J3YXVBWEFTT0tZYmZxcjA2T2R2a1dwLzIxWnF2bnAvdmZrN3dIYzduUktLWmt1ZVg0bVExdFFqdVBoZQpDQXN5WWZOeWM0VjVyUDF1K3AzTGU4Ly9sTXZQZ0wydFBib3NaaGYvM0dCUGpPZHFabVdnL0R5blhzN21qcnhqCng2OHJOcHIxU2ZhQUNOMjNQdE9HbXcreXh4NjdENTNUSVJUaXZIY2Izd0FIMnNRdkVzbG9HN0lMU0d2THJ1S3IKS0c2RkQwb0JBb0dCQVBsWFdydWxQa3B6bzl5ZUJnd3hudmlyN2x2THZrVkV1Q3ZmRVg2MHViYm5HOVVsZm1BQgpEaVduOFcvUkVHVjE0cFBtcjQ2eE5QLzkrb1p3cDNRNUMzbFNocENVWEVxZjVHTzBUSXdSb1NVdndKcUo2UHc0Cm4yb0xEbXBNS3k5bkZEcFFCQTFoYUZSQnZJd3ZxOXdHc0NmK3Fyc3pTNHM2bHp1Qm1KVDZXYUdCQW9HQkFNNnYKSWJrSXJnVW54NlpuNngyRjVmSEQ0YVFydWRLbWFScnN3NzV4dFY0ZVlEdU1ZVVIrYWxwY2llVDZ4Y1Z3NUFvbQp6Q2o1VUNsejZJZ3pJc2MyTGRMR3JOeDVrcUFTMzA1K0UxaVdEeGh6UG44bUhETkI2NGY5WTVYdjJ6bm9maWVsCmNKd2pBaE5OZlR1ck45ODR5RXpQL0tHa1NsbGNxdHFsOVF6VVZrK3RBb0dCQU81c0RGTy85NmRqbW4yY0VYWloKZ0lTU2l2TDJDUFBkZVNwaVBDMW5qT29MWmI3VUFscTB4NTFVVVBhMTk3SzlIYktGZEx2Q1VVYXp5bm9CZ080TwptaDBodjVEQ2ZOblN1S1pxUW9QeFc2RGVYNUttYXNXN014eEloRGs2cWxUQ2dVSWRQeks0UVBYSWdnMmVpL3h4CjNNSHhyN29mbTQzL3NacnlHai9pZ0JDQkFvR0FWb3BzRTE3NEJuNldrUzIzKzUraUhXNElYOFpUUTBtY2ZzS2UKWDNLYkgzS1dscmg3emNNazR2c1dYZ05HcGhwVDBaQlhNZHphWE5FRWoycmg2QW5lZS8vbVIxYThOenhQdGowQgorcml5VDJtSnhKRi9nMUxadlJJekRZZm1Ba1EvOW5mR1JBcEFoemFOOWxzRnhQaXduY0VFcGVYMW41ODJodUN3ClQ1UGxJKzBDZ1lFQTN1WmptcXl1U0ZsUGR
0Q3NsN1ZDUWc4K0N6L1hBTUNQZGt0SmF1bng5VWxtWVZXVFQzM2oKby9uVVRPVHY1TWZPTm9wejVYOXM4SCsyeXFOdWpna2NHZmFTeHFTNlBkbWNhcTJGMTYxTDdTR0JDb2w1MVQ5ZwpXQkRObnlqOFprSkQxd2pRNkNDWG4zNDZIMS9YREZjbmhnc2c2UHRjTGh3RC8yS0l3eFVmdzFBPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

root@k8s-master01:/data/velero#
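To confirm which context, user and default namespace a kubeconfig actually resolves to, kubectl can print just the current context with `--minify`. The helper below is a minimal sketch, assuming kubectl is on the PATH. The name `kubeconfig_summary` is made up for illustration. For the awsuser.kubeconfig shown above it should print `kubernetes awsuser velero-system`.

```shell
# Print "<context> <user> <namespace>" for the current context of a kubeconfig file.
# kubeconfig_summary is a hypothetical helper; it only reads the file.
kubeconfig_summary() {
    # --minify keeps only the current context, so contexts[0] is that context.
    kubectl config view --kubeconfig "$1" --minify \
        -o jsonpath='{.contexts[0].name} {.contexts[0].context.user} {.contexts[0].context.namespace}' ||
        return 1
    echo    # jsonpath output has no trailing newline
}
```

Usage: `kubeconfig_summary ./awsuser.kubeconfig`.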

6.3.4、Grant the awsuser account cluster-admin rights in the k8s cluster

root@k8s-master01:/data/velero# kubectl create clusterrolebinding awsuser --clusterrole=cluster-admin --user=awsuser

clusterrolebinding.rbac.authorization.k8s.io/awsuser created

root@k8s-master01:/data/velero# kubectl get clusterrolebinding -A|grep awsuser

awsuser ClusterRole/cluster-admin 47s

root@k8s-master01:/data/velero#
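The binding can be checked without switching credentials: with the admin kubeconfig, kubectl can impersonate awsuser through `--as` and ask the API server whether that user may do everything. This is a sketch. `user_is_cluster_admin` is a hypothetical helper, and impersonation only works if the calling identity holds the `impersonate` permission (cluster-admin does).

```shell
# Return 0 if the given user may perform every verb on every resource in every
# namespace, which is what a binding to cluster-admin should grant.
# Runs with the caller's own credentials and impersonates the user via --as.
user_is_cluster_admin() {
    answer=$(kubectl auth can-i '*' '*' --all-namespaces --as="$1" 2>/dev/null)
    [ "$answer" = "yes" ]
}
```

Usage: `user_is_cluster_admin awsuser && echo "awsuser has cluster-admin rights"`.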

6.3.5、Verify that the certificate works

root@k8s-master01:/data/velero# kubectl --kubeconfig ./awsuser.kubeconfig get nodes

NAME STATUS ROLES AGE VERSION

192.168.0.31 Ready,SchedulingDisabled master 132d v1.26.4

192.168.0.32 Ready,SchedulingDisabled master 132d v1.26.4

192.168.0.33 Ready,SchedulingDisabled master 132d v1.26.4

192.168.0.34 Ready node 132d v1.26.4

192.168.0.35 Ready node 132d v1.26.4

192.168.0.36 Ready node 132d v1.26.4

root@k8s-master01:/data/velero# kubectl --kubeconfig ./awsuser.kubeconfig get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5456dd947c-pwl2n 1/1 Running 31 (79m ago) 132d

calico-node-4zmb4 1/1 Running 26 (79m ago) 132d

calico-node-7lc66 1/1 Running 28 (79m ago) 132d

calico-node-bkhkd 1/1 Running 28 (13d ago) 132d

calico-node-mw49k 1/1 Running 28 (79m ago) 132d

calico-node-v726r 1/1 Running 26 (79m ago) 132d

calico-node-x9r7h 1/1 Running 28 (79m ago) 132d

coredns-77879dc67d-k9ztn 1/1 Running 4 (79m ago) 27d

coredns-77879dc67d-qwb48 1/1 Running 4 (79m ago) 27d

snapshot-controller-0 1/1 Running 28 (79m ago) 132d

root@k8s-master01:/data/velero#

The --kubeconfig option selects the credential file to use. If nodes, pods and other cluster information can be listed with it, the credential file works.
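The same check can be scripted, for example to fail early in automation when the certificate is rejected. A minimal sketch (the helper name `ready_node_count` is hypothetical) that counts nodes whose STATUS starts with Ready. For the cluster above it would report 6, because `Ready,SchedulingDisabled` also counts.

```shell
# Count nodes whose STATUS column starts with "Ready" (this also matches
# "Ready,SchedulingDisabled") using the given kubeconfig.
# Returns non-zero if kubectl itself fails, e.g. because the certificate is rejected.
ready_node_count() {
    out=$(kubectl --kubeconfig "$1" get nodes --no-headers) || return 1
    printf '%s\n' "$out" | awk '$2 ~ /^Ready/ { n++ } END { print n + 0 }'
}
```

Usage: `ready_node_count ./awsuser.kubeconfig`.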

6.3.6、Create the namespace in the k8s cluster

root@k8s-master01:/data/velero# kubectl create ns velero-system

namespace/velero-system created

root@k8s-master01:/data/velero# kubectl get ns

NAME STATUS AGE

argocd Active 129d

default Active 132d

kube-node-lease Active 132d

kube-public Active 132d

kube-system Active 132d

magedu Active 90d

myserver Active 98d

velero-system Active 5s

root@k8s-master01:/data/velero#
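`kubectl create ns` fails with AlreadyExists when it is run a second time, and `velero install --namespace velero-system` would create the namespace on its own anyway (its output below reports `already exists, proceeding`). A re-runnable variant is sketched here; `ensure_namespace` is a hypothetical name.

```shell
# Create a namespace only if it does not exist yet, so the step can be re-run safely.
ensure_namespace() {
    if kubectl get namespace "$1" >/dev/null 2>&1; then
        echo "namespace/$1 already exists"
    else
        kubectl create namespace "$1"
    fi
}
```

Usage: `ensure_namespace velero-system`.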

6.4、Install the velero server side

root@k8s-master01:/data/velero# velero --kubeconfig ./awsuser.kubeconfig \

> install \

> --provider aws \

> --plugins velero/velero-plugin-for-aws:v1.5.5 \

> --bucket velerodata \

> --secret-file ./velero-auth.txt \

> --use-volume-snapshots=false \

> --namespace velero-system \

> --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://192.168.0.42:9000

CustomResourceDefinition/backuprepositories.velero.io: attempting to create resource

CustomResourceDefinition/backuprepositories.velero.io: attempting to create resource client

CustomResourceDefinition/backuprepositories.velero.io: created

CustomResourceDefinition/backups.velero.io: attempting to create resource

CustomResourceDefinition/backups.velero.io: attempting to create resource client

CustomResourceDefinition/backups.velero.io: created

CustomResourceDefinition/backupstoragelocations.velero.io: attempting to create resource

CustomResourceDefinition/backupstoragelocations.velero.io: attempting to create resource client

CustomResourceDefinition/backupstoragelocations.velero.io: created

CustomResourceDefinition/deletebackuprequests.velero.io: attempting to create resource

CustomResourceDefinition/deletebackuprequests.velero.io: attempting to create resource client

CustomResourceDefinition/deletebackuprequests.velero.io: created

CustomResourceDefinition/downloadrequests.velero.io: attempting to create resource

CustomResourceDefinition/downloadrequests.velero.io: attempting to create resource client

CustomResourceDefinition/downloadrequests.velero.io: created

CustomResourceDefinition/podvolumebackups.velero.io: attempting to create resource

CustomResourceDefinition/podvolumebackups.velero.io: attempting to create resource client

CustomResourceDefinition/podvolumebackups.velero.io: created

CustomResourceDefinition/podvolumerestores.velero.io: attempting to create resource

CustomResourceDefinition/podvolumerestores.velero.io: attempting to create resource client

CustomResourceDefinition/podvolumerestores.velero.io: created

CustomResourceDefinition/restores.velero.io: attempting to create resource

CustomResourceDefinition/restores.velero.io: attempting to create resource client

CustomResourceDefinition/restores.velero.io: created

CustomResourceDefinition/schedules.velero.io: attempting to create resource

CustomResourceDefinition/schedules.velero.io: attempting to create resource client

CustomResourceDefinition/schedules.velero.io: created

CustomResourceDefinition/serverstatusrequests.velero.io: attempting to create resource

CustomResourceDefinition/serverstatusrequests.velero.io: attempting to create resource client

CustomResourceDefinition/serverstatusrequests.velero.io: created

CustomResourceDefinition/volumesnapshotlocations.velero.io: attempting to create resource

CustomResourceDefinition/volumesnapshotlocations.velero.io: attempting to create resource client

CustomResourceDefinition/volumesnapshotlocations.velero.io: created

Waiting for resources to be ready in cluster...

Namespace/velero-system: attempting to create resource

Namespace/velero-system: attempting to create resource client

Namespace/velero-system: already exists, proceeding

Namespace/velero-system: created

ClusterRoleBinding/velero-velero-system: attempting to create resource

ClusterRoleBinding/velero-velero-system: attempting to create resource client

ClusterRoleBinding/velero-velero-system: created

ServiceAccount/velero: attempting to create resource

ServiceAccount/velero: attempting to create resource client

ServiceAccount/velero: created

Secret/cloud-credentials: attempting to create resource

Secret/cloud-credentials: attempting to create resource client

Secret/cloud-credentials: created

BackupStorageLocation/default: attempting to create resource

BackupStorageLocation/default: attempting to create resource client

BackupStorageLocation/default: created

Deployment/velero: attempting to create resource

Deployment/velero: attempting to create resource client

Deployment/velero: created

Velero is installed! ⛵ Use 'kubectl logs deployment/velero -n velero-system' to view the status.

root@k8s-master01:/data/velero#
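`velero install` does not test the `s3Url` itself; an unreachable MinIO typically shows up later as an unavailable backup location or failing backups. MinIO serves an unauthenticated liveness endpoint at `/minio/health/live`, so reachability from the master can be checked with curl. Minimal sketch: `minio_alive` is hypothetical, and its default URL is the s3Url from the install command above. A successful answer only shows that the MinIO process is up; it does not test the velerodata bucket or the credentials in velero-auth.txt.

```shell
# Return 0 if the MinIO server answers its liveness probe within 5 seconds.
# The default URL is the s3Url passed to velero install above.
minio_alive() {
    url=${1:-http://192.168.0.42:9000}
    curl -fsS -o /dev/null --max-time 5 "$url/minio/health/live"
}
```

Usage: `minio_alive || echo "MinIO is not reachable"`.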

6.5、Verify the velero server installation

root@k8s-master01:/data/velero# kubectl get pod -n velero-system

NAME READY STATUS RESTARTS AGE

velero-5d675548c4-2dx8d 1/1 Running 0 105s

root@k8s-master01:/data/velero#

The velero pod is Running (READY 1/1) in the velero-system namespace, which means the velero server side has been deployed.
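A Running pod does not yet prove that velero can reach MinIO. Velero records that in the `status.phase` of each BackupStorageLocation (`Available` or `Unavailable`); `velero backup-location get --namespace velero-system` should show the same information. The sketch below (hypothetical helper `velero_ready`) waits for the Deployment rollout and then requires every location to be Available.

```shell
# Wait for the velero Deployment to roll out, print the phase of every
# BackupStorageLocation, and succeed only if all of them are "Available".
velero_ready() {
    ns=${1:-velero-system}
    kubectl -n "$ns" rollout status deployment/velero --timeout=120s >/dev/null || return 1
    phases=$(kubectl -n "$ns" get backupstoragelocations.velero.io \
        -o jsonpath='{.items[*].status.phase}') || return 1
    echo "$phases"
    # No location at all also counts as a failure.
    [ -n "$phases" ] || return 1
    for p in $phases; do
        [ "$p" = "Available" ] || return 1
    done
}
```

Usage: `velero_ready velero-system`.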

7、Backing up data with velero

7.1、Back up the default ns

root@k8s-master01:/data/velero# DATE=`date +%Y%m%d%H%M%S`

root@k8s-master01:/data/velero# velero backup create default-backup-${DATE} \

> --include-cluster-resources=true \

> --include-namespaces default \

> --kubeconfig=./awsuser.kubeconfig \

> --namespace velero-system

Backup request "default-backup-20230902133242" submitted successfully.

Run `velero backup describe default-backup-20230902133242` or `velero backup logs default-backup-20230902133242` for more details.

root@k8s-master01:/data/velero#
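The backup name becomes the name of a Backup object in the velero-system namespace, so it must be a valid Kubernetes object name (lowercase letters, digits, `-` or `.`, starting and ending with an alphanumeric, at most 253 characters). A small sketch that builds the same kind of timestamped name and rejects invalid ones; `backup_name` is a hypothetical helper.

```shell
# Build "<prefix>-backup-<timestamp>" and check that it is a valid
# Kubernetes object name (DNS-1123 subdomain rules).
backup_name() {
    prefix=$1
    stamp=${2:-$(date +%Y%m%d%H%M%S)}
    name="${prefix}-backup-${stamp}"
    if [ "${#name}" -gt 253 ] ||
        ! printf '%s' "$name" | grep -Eq '^[a-z0-9]([-a-z0-9.]*[a-z0-9])?$'; then
        echo "invalid backup name: $name" >&2
        return 1
    fi
    echo "$name"
}
```

Usage: `velero backup create "$(backup_name default)" --include-namespaces default --namespace velero-system`.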

Verify the backup

root@k8s-master01:/data/velero# velero backup describe default-backup-20230902133242 --kubeconfig=./awsuser.kubeconfig --namespace velero-system

Name: default-backup-20230902133242

Namespace: velero-system

Labels: velero.io/storage-location=default

Annotations: velero.io/source-cluster-k8s-gitversion=v1.26.4

velero.io/source-cluster-k8s-major-version=1

velero.io/source-cluster-k8s-minor-version=26

Phase: Completed

Namespaces:

Included: default

Excluded: <none>

Resources:

Included: *

Excluded: <none>

Cluster-scoped: included

Label selector: <none>

Storage Location: default

Velero-Native Snapshot PVs: auto

TTL: 720h0m0s

CSISnapshotTimeout: 10m0s

ItemOperationTimeout: 1h0m0s

Hooks: <none>

Backup Format Version: 1.1.0

Started: 2023-09-02 13:33:01 +0000 UTC

Completed: 2023-09-02 13:33:09 +0000 UTC

Expiration: 2023-10-02 13:33:01 +0000 UTC

Total items to be backed up: 288

Items backed up: 288

Velero-Native Snapshots: <none included>

root@k8s-master01:/data/velero#

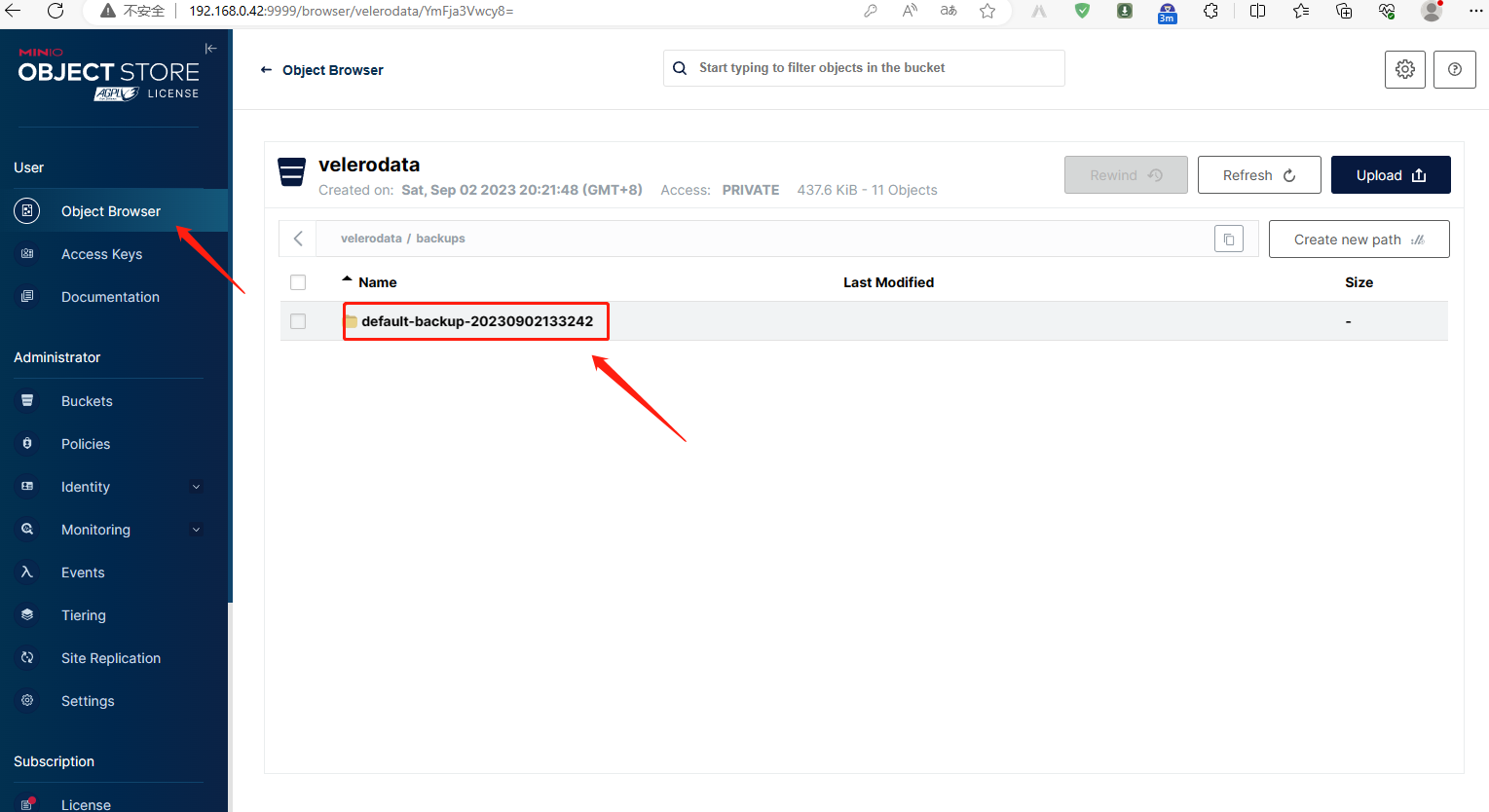

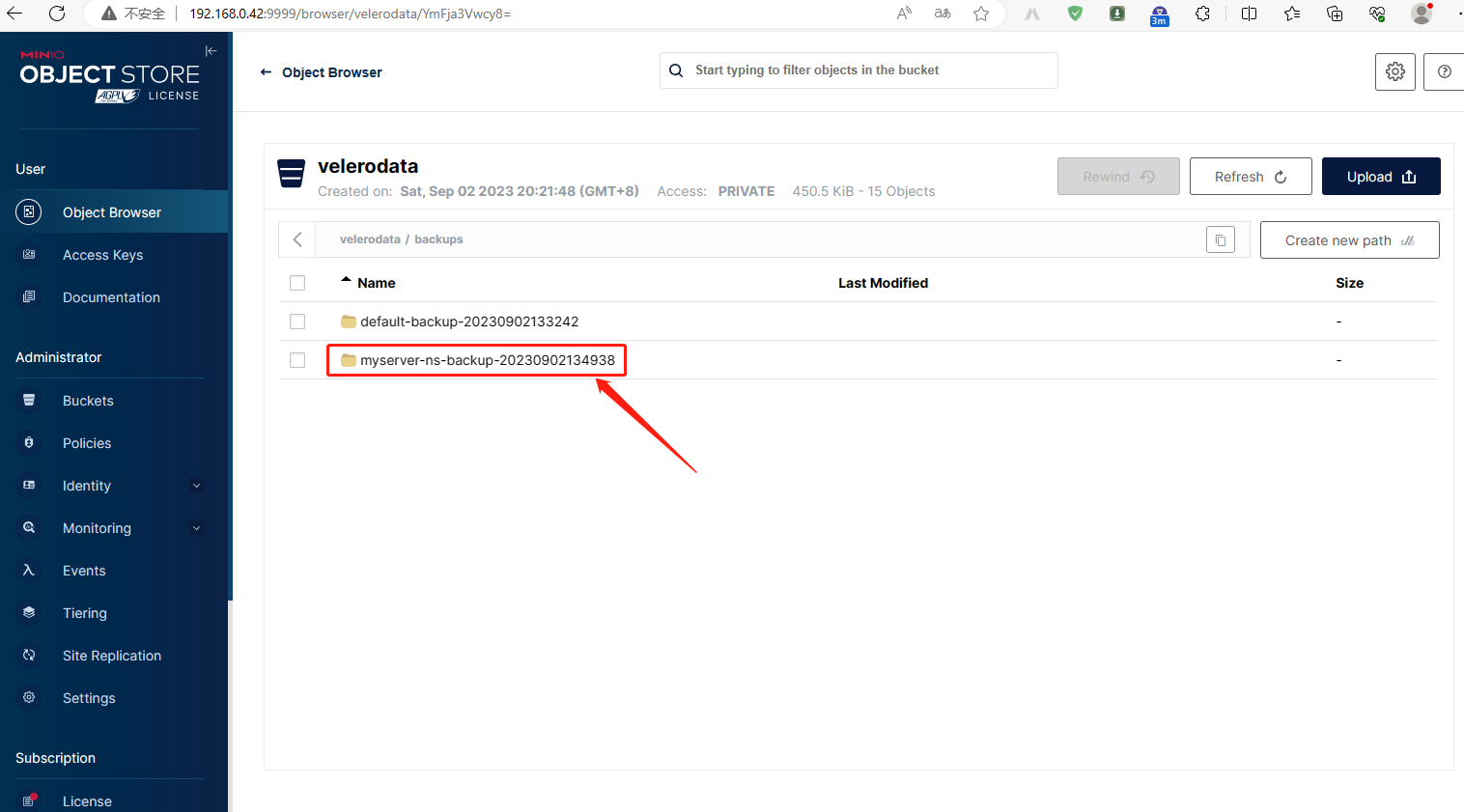

Verify the backup data in minio
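`velero backup create` returns as soon as the request is submitted; the backup itself runs asynchronously in the velero pod. Besides passing `--wait` (as the restore commands in this article do), a script can poll the `status.phase` of the Backup or Restore object. A minimal sketch with a hypothetical helper `wait_for_velero`, treating Completed as success and PartiallyFailed, Failed and FailedValidation as failures.

```shell
# Poll a Velero Backup or Restore object until it reaches a terminal phase.
# kind is "backups" or "restores"; returns 0 only for phase Completed.
wait_for_velero() {
    kind=$1 name=$2 ns=${3:-velero-system} tries=${4:-60}
    phase=
    while [ "$tries" -gt 0 ]; do
        phase=$(kubectl -n "$ns" get "$kind.velero.io" "$name" -o jsonpath='{.status.phase}' 2>/dev/null)
        case "$phase" in
            Completed) echo "$name: $phase"; return 0 ;;
            PartiallyFailed|Failed|FailedValidation) echo "$name: $phase" >&2; return 1 ;;
        esac
        tries=$((tries - 1))
        sleep 5
    done
    echo "$name: timed out, last phase '$phase'" >&2
    return 1
}
```

Usage: `wait_for_velero backups default-backup-20230902133242`.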

Delete the pod and verify that it can be restored

- Delete the pod

root@k8s-master01:/data/velero# kubectl get pods

NAME READY STATUS RESTARTS AGE

bash 1/1 Running 5 (93m ago) 27d

root@k8s-master01:/data/velero# kubectl delete pod bash -n default

pod "bash" deleted

root@k8s-master01:/data/velero# kubectl get pods

No resources found in default namespace.

root@k8s-master01:/data/velero#

- Restore the pod

root@k8s-master01:/data/velero# velero restore create --from-backup default-backup-20230902133242 --wait --kubeconfig=./awsuser.kubeconfig --namespace velero-system

Restore request "default-backup-20230902133242-20230902134421" submitted successfully.

Waiting for restore to complete. You may safely press ctrl-c to stop waiting - your restore will continue in the background.

..............................

Restore completed with status: Completed. You may check for more information using the commands `velero restore describe default-backup-20230902133242-20230902134421` and `velero restore logs default-backup-20230902133242-20230902134421`.

root@k8s-master01:/data/velero#

- Verify the pod

root@k8s-master01:/data/velero# kubectl get pods

NAME READY STATUS RESTARTS AGE

bash 1/1 Running 0 77s

root@k8s-master01:/data/velero# kubectl exec -it bash -- bash

[root@bash ~]# ping www.baidu.com

PING www.a.shifen.com (14.119.104.189) 56(84) bytes of data.

64 bytes from 14.119.104.189 (14.119.104.189): icmp_seq=1 ttl=53 time=42.5 ms

64 bytes from 14.119.104.189 (14.119.104.189): icmp_seq=2 ttl=53 time=42.2 ms

^C

--- www.a.shifen.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 42.234/42.400/42.567/0.264 ms

[root@bash ~]#
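A restore needs the exact backup name. Because the names here end in a fixed-width `date +%Y%m%d%H%M%S` stamp, sorting them as text also sorts them by time, so the newest completed backup for a prefix can be picked out of `velero backup get` output. A minimal sketch, assuming NAME and STATUS are the first two columns of that output; `latest_backup` is a hypothetical helper. Pass the full prefix (for example `default-backup-`), otherwise default-backup-* and default-ns-backup-* names get mixed.

```shell
# Read `velero backup get` output on stdin and print the newest Completed
# backup whose name starts with the given prefix. Relies on names ending in a
# fixed-width %Y%m%d%H%M%S stamp, so a text sort is a time sort.
latest_backup() {
    awk -v p="$1" 'NR > 1 && index($1, p) == 1 && $2 == "Completed" { print $1 }' |
        sort | tail -n 1
}
```

Usage: `velero restore create --from-backup "$(velero backup get --namespace velero-system | latest_backup default-backup-)" --wait --namespace velero-system`.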

Back up the myserver ns

root@k8s-master01:/data/velero# DATE=`date +%Y%m%d%H%M%S`

root@k8s-master01:/data/velero# velero backup create myserver-ns-backup-${DATE} \

> --include-cluster-resources=true \

> --include-namespaces myserver \

> --kubeconfig=/root/.kube/config \

> --namespace velero-system

Backup request "myserver-ns-backup-20230902134938" submitted successfully.

Run `velero backup describe myserver-ns-backup-20230902134938` or `velero backup logs myserver-ns-backup-20230902134938` for more details.

root@k8s-master01:/data/velero#

Verify the backup in minio

Delete a deployment and verify the restore

root@k8s-master01:/data/velero# kubectl get pods -n myserver

NAME READY STATUS RESTARTS AGE

myserver-myapp-deployment-name-6965765b9c-h4kj6 1/1 Running 13 (104m ago) 98d

myserver-myapp-frontend-deployment-6bd57599f4-8zw5s 1/1 Running 13 (104m ago) 98d

myserver-myapp-frontend-deployment-6bd57599f4-j276c 1/1 Running 13 (104m ago) 98d

myserver-myapp-frontend-deployment-6bd57599f4-p76bw 1/1 Running 13 (13d ago) 98d

root@k8s-master01:/data/velero# kubectl get deployments.apps -n myserver

NAME READY UP-TO-DATE AVAILABLE AGE

myserver-myapp-deployment-name 1/1 1 1 98d

myserver-myapp-frontend-deployment 3/3 3 3 98d

root@k8s-master01:/data/velero# kubectl delete deployments.apps myserver-myapp-deployment-name -n myserver

deployment.apps "myserver-myapp-deployment-name" deleted

root@k8s-master01:/data/velero# kubectl get pods -n myserver

NAME READY STATUS RESTARTS AGE

myserver-myapp-frontend-deployment-6bd57599f4-8zw5s 1/1 Running 13 (106m ago) 98d

myserver-myapp-frontend-deployment-6bd57599f4-j276c 1/1 Running 13 (106m ago) 98d

myserver-myapp-frontend-deployment-6bd57599f4-p76bw 1/1 Running 13 (13d ago) 98d

root@k8s-master01:/data/velero# velero restore create --from-backup myserver-ns-backup-20230902134938 --wait \

> --kubeconfig=./awsuser.kubeconfig \

> --namespace velero-system

Restore request "myserver-ns-backup-20230902134938-20230902135401" submitted successfully.

Waiting for restore to complete. You may safely press ctrl-c to stop waiting - your restore will continue in the background.

...............................

Restore completed with status: Completed. You may check for more information using the commands `velero restore describe myserver-ns-backup-20230902134938-20230902135401` and `velero restore logs myserver-ns-backup-20230902134938-20230902135401`.

root@k8s-master01:/data/velero# kubectl get deployments.apps -n myserver

NAME READY UP-TO-DATE AVAILABLE AGE

myserver-myapp-deployment-name 0/1 1 0 37s

myserver-myapp-frontend-deployment 3/3 3 3 98d

root@k8s-master01:/data/velero# kubectl get pods -n myserver

NAME READY STATUS RESTARTS AGE

myserver-myapp-deployment-name-6965765b9c-h4kj6 0/1 PodInitializing 0 69s

myserver-myapp-frontend-deployment-6bd57599f4-8zw5s 1/1 Running 13 (108m ago) 98d

myserver-myapp-frontend-deployment-6bd57599f4-j276c 1/1 Running 13 (108m ago) 98d

myserver-myapp-frontend-deployment-6bd57599f4-p76bw 1/1 Running 13 (13d ago) 98d

root@k8s-master01:/data/velero#
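A restore status of Completed only means the objects were recreated; the restored Deployment above is still at READY 0/1 with its pod in PodInitializing. To wait until the application is actually up, kubectl can block on the Deployment's Available condition. Sketch only; `wait_deployment` is a hypothetical wrapper and the 180s default timeout is an arbitrary choice.

```shell
# Block until the Deployment reports condition Available, or fail after the timeout.
wait_deployment() {
    kubectl -n "$1" wait --for=condition=Available "deployment/$2" --timeout="${3:-180s}"
}
```

Usage: `wait_deployment myserver myserver-myapp-deployment-name`.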

7.2、Back up specific resource objects

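Back up pods and other specific resources in the given namespaces. Note that `--ordered-resources` only sets the order in which the listed objects are backed up; it does not limit the backup to them. What ends up in the backup is still decided by `--include-namespaces`, so the backup below contains every resource in myserver, magedu and default (plus cluster-scoped resources, because of `--include-cluster-resources=true`). To restrict a backup to certain resource types, use `--include-resources`, optionally together with a label selector (`--selector`).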

root@k8s-master01:/data/velero# DATE=`date +%Y%m%d%H%M%S`

root@k8s-master01:/data/velero# velero backup create pod-backup-${DATE} --include-cluster-resources=true \

> --ordered-resources 'pods=default/bash,magedu/ubuntu1804,magedu/mysql-0;deployments.apps=myserver/myserver-myapp-frontend-deployment,magedu/wordpress-app-deployment;services=myserver/myserver-myapp-service-name,magedu/mysql,magedu/zookeeper' \

> --namespace velero-system --include-namespaces=myserver,magedu,default

Backup request "pod-backup-20230902141842" submitted successfully.

Run `velero backup describe pod-backup-20230902141842` or `velero backup logs pod-backup-20230902141842` for more details.

root@k8s-master01:/data/velero#
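To back up only certain resource types rather than whole namespaces, `velero backup create` accepts `--include-resources` (and `--selector` for label-based filtering). A sketch that builds such a command; `backup_resources` and its `DRY_RUN=1` switch, which only prints the command, are hypothetical conveniences of this example.

```shell
# Back up only the given resource types in the given namespaces.
# Arguments: <backup-name> <namespaces, comma-separated> <types, comma-separated>
# With DRY_RUN=1 the velero command is printed instead of executed.
backup_resources() {
    name=$1 namespaces=$2 types=$3
    set -- velero backup create "$name" \
        --include-namespaces "$namespaces" \
        --include-resources "$types" \
        --namespace velero-system
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}
```

Usage: `DRY_RUN=1 backup_resources wp-backup magedu deployments.apps,services` prints the command; drop `DRY_RUN=1` to run it. Adding `--selector app=<label>` to the command would narrow it further.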

Delete resources and verify the restore

- Delete the resources

root@k8s-master01:/data/velero# kubectl get deployments.apps -n magedu

NAME READY UP-TO-DATE AVAILABLE AGE

magedu-consumer-deployment 3/3 3 3 22d

magedu-dubboadmin-deployment 1/1 1 1 22d

magedu-provider-deployment 3/3 3 3 22d

wordpress-app-deployment 1/1 1 1 13d

zookeeper1 1/1 1 1 90d

zookeeper2 1/1 1 1 90d

zookeeper3 1/1 1 1 90d

root@k8s-master01:/data/velero# kubectl delete deployments.apps wordpress-app-deployment -n magedu

deployment.apps "wordpress-app-deployment" deleted

root@k8s-master01:/data/velero# kubectl get deployments.apps -n magedu

NAME READY UP-TO-DATE AVAILABLE AGE

magedu-consumer-deployment 3/3 3 3 22d

magedu-dubboadmin-deployment 1/1 1 1 22d

magedu-provider-deployment 3/3 3 3 22d

zookeeper1 1/1 1 1 90d

zookeeper2 1/1 1 1 90d

zookeeper3 1/1 1 1 90d

root@k8s-master01:/data/velero# kubectl get svc -n magedu

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

magedu-consumer-server NodePort 10.100.208.121 <none> 80:49630/TCP 22d

magedu-dubboadmin-service NodePort 10.100.244.92 <none> 80:31080/TCP 22d

magedu-provider-spec NodePort 10.100.187.168 <none> 80:44873/TCP 22d

mysql ClusterIP None <none> 3306/TCP 79d

mysql-0 ClusterIP None <none> 3306/TCP 13d

mysql-read ClusterIP 10.100.15.127 <none> 3306/TCP 79d

redis ClusterIP None <none> 6379/TCP 88d

redis-access NodePort 10.100.117.185 <none> 6379:36379/TCP 88d

wordpress-app-spec NodePort 10.100.189.214 <none> 80:30031/TCP,443:30033/TCP 13d

zookeeper ClusterIP 10.100.237.95 <none> 2181/TCP 90d

zookeeper1 NodePort 10.100.63.118 <none> 2181:32181/TCP,2888:30541/TCP,3888:31200/TCP 90d

zookeeper2 NodePort 10.100.199.43 <none> 2181:32182/TCP,2888:32670/TCP,3888:32264/TCP 90d

zookeeper3 NodePort 10.100.41.9 <none> 2181:32183/TCP,2888:31329/TCP,3888:32546/TCP 90d

root@k8s-master01:/data/velero# kubectl delete svc mysql -n magedu

service "mysql" deleted

root@k8s-master01:/data/velero# kubectl delete svc zookeeper -n magedu

service "zookeeper" deleted

root@k8s-master01:/data/velero# kubectl get svc -n magedu

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

magedu-consumer-server NodePort 10.100.208.121 <none> 80:49630/TCP 22d

magedu-dubboadmin-service NodePort 10.100.244.92 <none> 80:31080/TCP 22d

magedu-provider-spec NodePort 10.100.187.168 <none> 80:44873/TCP 22d

mysql-0 ClusterIP None <none> 3306/TCP 13d

mysql-read ClusterIP 10.100.15.127 <none> 3306/TCP 79d

redis ClusterIP None <none> 6379/TCP 88d

redis-access NodePort 10.100.117.185 <none> 6379:36379/TCP 88d

wordpress-app-spec NodePort 10.100.189.214 <none> 80:30031/TCP,443:30033/TCP 13d

zookeeper1 NodePort 10.100.63.118 <none> 2181:32181/TCP,2888:30541/TCP,3888:31200/TCP 90d

zookeeper2 NodePort 10.100.199.43 <none> 2181:32182/TCP,2888:32670/TCP,3888:32264/TCP 90d

zookeeper3 NodePort 10.100.41.9 <none> 2181:32183/TCP,2888:31329/TCP,3888:32546/TCP 90d

root@k8s-master01:/data/velero#

- Restore the resources

root@k8s-master01:/data/velero# velero restore create --from-backup pod-backup-20230902141842 --wait \

> --kubeconfig=./awsuser.kubeconfig \

> --namespace velero-system

Restore request "pod-backup-20230902141842-20230902142341" submitted successfully.

Waiting for restore to complete. You may safely press ctrl-c to stop waiting - your restore will continue in the background.

............................................

Restore completed with status: Completed. You may check for more information using the commands `velero restore describe pod-backup-20230902141842-20230902142341` and `velero restore logs pod-backup-20230902141842-20230902142341`.

root@k8s-master01:/data/velero#

- Verify that the resources were restored

root@k8s-master01:/data/velero# kubectl get deployments.apps -n magedu

NAME READY UP-TO-DATE AVAILABLE AGE

magedu-consumer-deployment 3/3 3 3 22d

magedu-dubboadmin-deployment 1/1 1 1 22d

magedu-provider-deployment 3/3 3 3 22d

wordpress-app-deployment 1/1 1 1 2m57s

zookeeper1 1/1 1 1 90d

zookeeper2 1/1 1 1 90d

zookeeper3 1/1 1 1 90d

root@k8s-master01:/data/velero# kubectl get pods -n magedu

NAME READY STATUS RESTARTS AGE

magedu-consumer-deployment-798c7d785b-fp4b9 1/1 Running 3 (140m ago) 22d

magedu-consumer-deployment-798c7d785b-wmv9p 1/1 Running 3 (140m ago) 22d

magedu-consumer-deployment-798c7d785b-zqm74 1/1 Running 3 (13d ago) 22d

magedu-dubboadmin-deployment-798c4dfdd8-kvfvh 1/1 Running 3 (140m ago) 22d

magedu-provider-deployment-6fccc6d9f5-k6z7m 1/1 Running 3 (140m ago) 22d

magedu-provider-deployment-6fccc6d9f5-nl4zd 1/1 Running 3 (140m ago) 22d

magedu-provider-deployment-6fccc6d9f5-p94rb 1/1 Running 3 (140m ago) 22d

mysql-0 2/2 Running 12 (140m ago) 79d

mysql-1 2/2 Running 12 (140m ago) 79d

mysql-2 2/2 Running 12 (140m ago) 79d

redis-0 1/1 Running 8 (13d ago) 88d

redis-1 1/1 Running 8 (140m ago) 88d

redis-2 1/1 Running 8 (140m ago) 88d

redis-3 1/1 Running 8 (13d ago) 87d

redis-4 1/1 Running 8 (140m ago) 88d

redis-5 1/1 Running 8 (140m ago) 88d

ubuntu1804 0/1 Completed 0 88d

wordpress-app-deployment-64c956bf9c-6qp8q 2/2 Running 0 3m31s

zookeeper1-675c5477cb-vmwwq 1/1 Running 10 (13d ago) 90d

zookeeper2-759fb6c6f-7jktr 1/1 Running 10 (140m ago) 90d

zookeeper3-5c78bb5974-vxpbh 1/1 Running 10 (140m ago) 90d

root@k8s-master01:/data/velero# kubectl get svc -n magedu

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

magedu-consumer-server NodePort 10.100.208.121 <none> 80:49630/TCP 22d

magedu-dubboadmin-service NodePort 10.100.244.92 <none> 80:31080/TCP 22d

magedu-provider-spec NodePort 10.100.187.168 <none> 80:44873/TCP 22d

mysql ClusterIP None <none> 3306/TCP 4m6s

mysql-0 ClusterIP None <none> 3306/TCP 13d

mysql-read ClusterIP 10.100.15.127 <none> 3306/TCP 79d

redis ClusterIP None <none> 6379/TCP 88d

redis-access NodePort 10.100.117.185 <none> 6379:36379/TCP 88d

wordpress-app-spec NodePort 10.100.189.214 <none> 80:30031/TCP,443:30033/TCP 13d

zookeeper ClusterIP 10.100.177.73 <none> 2181/TCP 4m6s

zookeeper1 NodePort 10.100.63.118 <none> 2181:32181/TCP,2888:30541/TCP,3888:31200/TCP 90d

zookeeper2 NodePort 10.100.199.43 <none> 2181:32182/TCP,2888:32670/TCP,3888:32264/TCP 90d

zookeeper3 NodePort 10.100.41.9 <none> 2181:32183/TCP,2888:31329/TCP,3888:32546/TCP 90d

root@k8s-master01:/data/velero#
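By default Velero does not overwrite objects that already exist in the cluster, which is why restoring the whole backup only recreated the deleted Deployment and the two Services while the other objects kept their original age. Checking that the deleted objects are back can be scripted. A minimal sketch; `all_exist` is a hypothetical helper that takes kubectl-style TYPE/NAME arguments.

```shell
# Return 0 only if every listed object (TYPE/NAME) exists in the namespace;
# print each missing one.
all_exist() {
    ns=$1; shift
    missing=0
    for obj in "$@"; do
        if ! kubectl -n "$ns" get "$obj" >/dev/null 2>&1; then
            echo "missing: $ns/$obj"
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: `all_exist magedu deployment/wordpress-app-deployment service/mysql service/zookeeper`.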

7.3、Back up all namespaces in a batch

root@k8s-master01:/data/velero# cat all-ns-backup.sh

#!/bin/bash
NS_NAME=`kubectl get ns | awk '{if (NR>2){print}}' | awk '{print $1}'`
DATE=`date +%Y%m%d%H%M%S`
cd /data/velero/
for i in $NS_NAME;do
    velero backup create ${i}-ns-backup-${DATE} \
        --include-cluster-resources=true \
        --include-namespaces ${i} \
        --kubeconfig=/root/.kube/config \
        --namespace velero-system
done

root@k8s-master01:/data/velero#

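Run the backup script. Note that `awk '{if (NR>2){print}}'` skips not only the header line of `kubectl get ns` but also the first namespace in the list (argocd in this cluster), which is why no argocd backup appears in the output below; with `NR>1` every namespace would be backed up.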

root@k8s-master01:/data/velero# bash all-ns-backup.sh

Backup request "default-ns-backup-20230902143131" submitted successfully.

Run `velero backup describe default-ns-backup-20230902143131` or `velero backup logs default-ns-backup-20230902143131` for more details.

Backup request "kube-node-lease-ns-backup-20230902143131" submitted successfully.

Run `velero backup describe kube-node-lease-ns-backup-20230902143131` or `velero backup logs kube-node-lease-ns-backup-20230902143131` for more details.

Backup request "kube-public-ns-backup-20230902143131" submitted successfully.

Run `velero backup describe kube-public-ns-backup-20230902143131` or `velero backup logs kube-public-ns-backup-20230902143131` for more details.

Backup request "kube-system-ns-backup-20230902143131" submitted successfully.

Run `velero backup describe kube-system-ns-backup-20230902143131` or `velero backup logs kube-system-ns-backup-20230902143131` for more details.

Backup request "magedu-ns-backup-20230902143131" submitted successfully.

Run `velero backup describe magedu-ns-backup-20230902143131` or `velero backup logs magedu-ns-backup-20230902143131` for more details.

Backup request "myserver-ns-backup-20230902143131" submitted successfully.

Run `velero backup describe myserver-ns-backup-20230902143131` or `velero backup logs myserver-ns-backup-20230902143131` for more details.

Backup request "velero-system-ns-backup-20230902143131" submitted successfully.

Run `velero backup describe velero-system-ns-backup-20230902143131` or `velero backup logs velero-system-ns-backup-20230902143131` for more details.

root@k8s-master01:/data/velero#
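A variant of the script is sketched below. It asks kubectl for bare namespace names via jsonpath, so there is no header line to skip and argocd is included. The `EXCLUDE_NS` and `KUBECONFIG_FILE` variables and the `run` argument are assumptions of this sketch, not part of the original script; without `run` the file only defines the function, so it can be sourced for testing.

```shell
#!/bin/bash
# Back up every namespace into its own Velero backup.
# jsonpath prints only the namespace names, so no header line needs skipping.
# EXCLUDE_NS (space-separated) and KUBECONFIG_FILE are overridable from the environment.
EXCLUDE_NS=${EXCLUDE_NS:-}
KUBECONFIG_FILE=${KUBECONFIG_FILE:-/root/.kube/config}

backup_all_namespaces() {
    stamp=$(date +%Y%m%d%H%M%S)
    namespaces=$(kubectl --kubeconfig "$KUBECONFIG_FILE" get ns \
        -o jsonpath='{.items[*].metadata.name}') || return 1
    for ns in $namespaces; do
        # Skip namespaces listed in EXCLUDE_NS.
        case " $EXCLUDE_NS " in
            *" $ns "*) echo "skip $ns"; continue ;;
        esac
        velero backup create "${ns}-ns-backup-${stamp}" \
            --include-cluster-resources=true \
            --include-namespaces "$ns" \
            --kubeconfig="$KUBECONFIG_FILE" \
            --namespace velero-system || echo "backup of $ns failed" >&2
    done
}

# Only act when called as "bash all-ns-backup.sh run".
if [ "${1:-}" = run ]; then
    backup_all_namespaces
fi
```

For periodic backups, Velero also has a built-in scheduler (`velero schedule create <name> --schedule="0 2 * * *" --include-namespaces <ns>`), which may be simpler than running a script like this from cron.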

Verify the backups in minio