OpenStack Train + Ceph

OpenStack

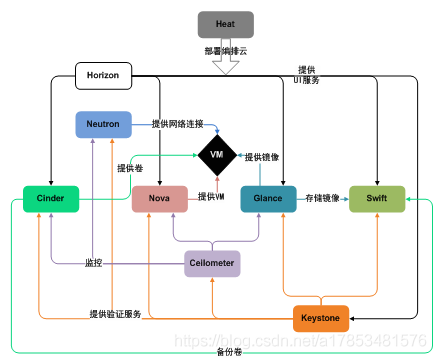

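Components in OpenStack

- Keystone: identity and authorization. Other components can only perform their functions after being authorized. Keystone authenticates and authorizes all OpenStack services, and it is also the endpoint catalog for all services.

- Glance: provides virtual machine image templates, which are used to create virtual machines. Glance can store and retrieve virtual machine disk images in multiple locations.

- Nova: manages virtual machines and provides their runtime environment. Nova has no virtualization technology of its own; it calls a hypervisor such as KVM to provide virtualization. It manages the whole virtual machine life cycle: create, run, suspend, schedule, shut down, destroy, and so on. It is the component that actually does the work, accepting commands from the Dashboard and carrying out the concrete actions. Because Nova is not itself a hypervisor, it needs one (KVM, Xen, Hyper-V, etc.) to work with.

- Neutron: provides networking for virtual machines. Neutron connects the other OpenStack services to the network.

- Dashboard (Horizon): web management interface.

- Swift: object storage, which can be used to store images. It is a highly fault-tolerant object storage service that stores and retrieves unstructured data objects through a RESTful API.

- Cinder: adds disks to virtual machines. It provides persistent block storage through a self-service API.

- Ceilometer: monitors usage (traffic) for pay-as-you-go billing.

- Heat: orchestration. For example, launch 10 cloud instances, each running a different script, so that services are brought up automatically.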

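OpenStack Installation

I. Basic Environment

1. Virtual machine plan

| Node | Hostname | Memory | IP | Role | CPU | Disk |
|---|---|---|---|---|---|---|
| Controller node | controller | More than 3 GB | 172.16.1.160 | Management | Virtualization enabled | 50 GB |
| Compute node | compute1 | More than 1 GB | 172.16.1.161 | Runs virtual machines | Virtualization enabled | 50 GB |

2. Configure the yum repository (on every node)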

[root@controller ~]# cd /etc/yum.repos.d

[root@controller yum.repos.d]# mkdir backup && mv C* backup

[root@controller yum.repos.d]# wget https://mirrors.aliyun.com/repo/Centos-7.repo

[root@controller yum.repos.d]# yum repolist all

3. Disable security services (on every node)

Disable the firewall

[root@controller ~]# systemctl stop firewalld.service; systemctl disable firewalld.service

Disable SELinux

[root@controller ~]# sed -i 's/SELINUX=.*/SELINUX=disabled/g' /etc/selinux/config

[root@controller ~]# setenforce 0

[root@controller ~]# reboot

[root@controller ~]# getenforce

Disabled

4. Configure the time service (on every node)

[root@controller ~]# yum install chrony -y

# Controller node

[root@controller ~]# vim /etc/chrony.conf

……

server ntp6.aliyun.com iburst

……

# Subnet that is allowed to synchronize from this server (access can also be opened to all clients)

allow 172.16.1.0/24

local stratum 10

[root@controller ~]# systemctl restart chronyd && systemctl enable chronyd

# Compute node

[root@compute1 ~]# yum install ntpdate -y

[root@compute1 ~]# vim /etc/chrony.conf

……

server 172.16.1.160 iburst

[root@compute1 ~]# systemctl restart chronyd && systemctl enable chronyd

[root@compute1 ~]# ntpdate 172.16.1.160

5. Install OpenStack (on every node)

Install the OpenStack client

[root@controller ~]# yum install centos-release-openstack-train -y

[root@controller ~]# yum install python-openstackclient -y

Install openstack-selinux

[root@controller ~]# yum install openstack-selinux -y

6. SQL database

# Install on the controller node

[root@controller ~]# yum -y install mariadb mariadb-server python2-PyMySQL # python2-PyMySQL is the Python MySQL client module

[root@controller ~]# vim /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 172.16.1.160

default-storage-engine = innodb # default storage engine

innodb_file_per_table # one tablespace file per table

max_connections = 4096 # maximum number of connections

collation-server = utf8_general_ci

character-set-server = utf8 # default character set (UTF-8)

[root@controller ~]# systemctl enable mariadb.service && systemctl start mariadb.service

[root@controller ~]# mysql_secure_installation

7. Message queue

# Install on the controller node

[root@controller ~]# yum install rabbitmq-server -y

[root@controller ~]# systemctl enable rabbitmq-server.service && systemctl start rabbitmq-server.service

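[root@controller ~]# rabbitmqctl add_user openstack RABBIT_PASS ## Add the openstack user so that all OpenStack services can use the message queue

RABBIT_PASS can be replaced with a suitable password. Keeping it unchanged is recommended here; otherwise every later occurrence has to be changed as well.

[root@controller ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*" ## Grant the openstack user configure, write and read permissions

# Enable the RabbitMQ management plugin to make later monitoring easier (optional)

[root@controller ~]# rabbitmq-plugins enable rabbitmq_management ## After this, the plugin listens on port 15672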

[root@controller ~]# netstat -altnp | grep 5672

tcp 0 0 0.0.0.0:15672 0.0.0.0:* LISTEN 2112/beam.smp

tcp 0 0 0.0.0.0:25672 0.0.0.0:* LISTEN 2112/beam.smp

tcp 0 0 172.16.1.160:37231 172.16.1.160:25672 TIME_WAIT -

tcp6 0 0 :::5672 :::* LISTEN 2112/beam.smp
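The netstat output above can be reduced to the ports that matter: 5672 is the AMQP port used by the OpenStack services, 15672 belongs to the management plugin, and 25672 is used by RabbitMQ for inter-node and CLI communication. The following is a minimal sketch of such a filter; the function name `listening_ports` is made up for illustration and it only assumes the column layout shown above.

```shell
#!/usr/bin/env bash
# listening_ports: read `netstat -altnp` style lines on stdin and print the
# unique local ports that are in the LISTEN state, sorted numerically.
listening_ports() {
    awk '$6 == "LISTEN" { n = split($4, parts, ":"); print parts[n] }' | sort -n | uniq
}

# Sample lines copied from the output above; prints 5672, 15672 and 25672.
listening_ports <<'EOF'
tcp 0 0 0.0.0.0:15672 0.0.0.0:* LISTEN 2112/beam.smp
tcp 0 0 0.0.0.0:25672 0.0.0.0:* LISTEN 2112/beam.smp
tcp 0 0 172.16.1.160:37231 172.16.1.160:25672 TIME_WAIT -
tcp6 0 0 :::5672 :::* LISTEN 2112/beam.smp
EOF
```

On the controller itself the same function could be fed directly, for example `netstat -altnp | listening_ports`.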

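# Access

IP:15672

# Default credentials

User: guest

Password: guest

8. Memcached

- The Identity service uses Memcached to cache tokens. The memcached service runs on the controller node.

- Token: used to verify a user's login. Because memcached caches the token, it does not have to be validated from scratch on every later request, which improves efficiency.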

[root@controller ~]# yum install memcached python-memcached -y

[root@controller ~]# sed -i 's/127.0.0.1/172.16.1.160/g' /etc/sysconfig/memcached

[root@controller ~]# systemctl enable memcached.service && systemctl start memcached.service

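II. Keystone Identity Service

- Authentication management, authorization management, and the service catalog

- Service catalog: to create images (Glance, 9292), virtual machines (Nova, 8774), networks (Neutron, 9696) and so on, a user has to reach the port of the corresponding service. OpenStack has many services, which makes these hard to remember; the service catalog provided by Keystone solves this problem.

1. Prerequisites

Before you configure the OpenStack Identity service, you must create a database and an administrator token.

Create the database:

[root@controller ~]# mysql -u root -p

Create the keystone database:

MariaDB [(none)]> CREATE DATABASE keystone;

Query OK, 1 row affected (0.00 sec)

Grant proper privileges on the keystone database: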

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'172.16.1.160' IDENTIFIED BY 'KEYSTONE_DBPASS';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> flush privileges;

KEYSTONE_DBPASS can be replaced with a suitable password.

2. Install and configure the components

[root@controller ~]# yum install openstack-keystone httpd mod_wsgi -y

[root@controller ~]# cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf_bak

[root@controller ~]# egrep -v '^$|#' /etc/keystone/keystone.conf_bak > /etc/keystone/keystone.conf
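One caveat about the `egrep -v '^$|#'` filter above: it drops every line that contains a `#` anywhere, not only comment lines, so a setting whose value happens to contain `#` would be removed too. The sketch below shows a stricter filter that only removes blank lines and lines whose first non-blank character is `#`. The function name `strip_conf` and the sample content are hypothetical and used only for illustration.

```shell
#!/usr/bin/env bash
# strip_conf SRC: print SRC without blank lines and without lines whose first
# non-blank character is '#'. Lines that merely contain '#' are kept.
strip_conf() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Demo on a throwaway file (made-up content, not a real keystone.conf).
demo_file="$(mktemp)"
printf '%s\n' \
    '# a comment' \
    '' \
    '[database]' \
    '   # an indented comment' \
    'connection = mysql+pymysql://user:pa#ss@controller/db' \
    > "$demo_file"

# Only the section header and the connection line remain.
strip_conf "$demo_file"
rm -f "$demo_file"
```

Used like the original command, this would read `strip_conf /etc/keystone/keystone.conf_bak > /etc/keystone/keystone.conf`.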

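Edit the /etc/keystone/keystone.conf file and complete the following actions:

In the [database] section, configure database access:

[database]

...

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

Replace KEYSTONE_DBPASS with the password you chose for the database.

In the [token] section, configure the Fernet token provider:

[token]

...

provider = fernet

Note: Keystone token formats have included UUID, PKI and Fernet;

# each is a method of generating the token string

Check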

[root@controller ~]# md5sum /etc/keystone/keystone.conf

f6d8563afb1def91c1b6a884cef72f11 /etc/keystone/keystone.conf

3. Synchronize the database

[root@controller ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone

su: switch user

-s: the shell to use

-c: the command to run

keystone: the user

# Meaning: switch to the keystone user and run the command `keystone-manage db_sync` with /bin/sh

mysql -u root -ppassword keystone -e "show tables;"

4. Initialize the Fernet key repositories

[root@controller ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@controller ~]# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

5. Bootstrap the Identity service

[root@controller ~]# keystone-manage bootstrap --bootstrap-password ADMIN_PASS --bootstrap-admin-url http://controller:5000/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

# ADMIN_PASS can be replaced with a suitable password for the admin user

6. Configure the Apache HTTP server

Edit the /etc/httpd/conf/httpd.conf file and set the ServerName option to the controller node (around line 95):

[root@controller ~]# echo 'ServerName controller' >> /etc/httpd/conf/httpd.conf # speeds up httpd startup

The /etc/httpd/conf.d/wsgi-keystone.conf file is provided by creating a link to /usr/share/keystone/wsgi-keystone.conf:

[root@controller ~]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

[root@controller ~]# systemctl enable httpd.service;systemctl restart httpd.service

7. Set environment variables

[root@controller ~]# export OS_TOKEN=ADMIN_TOKEN # authentication token

[root@controller ~]# export OS_URL=http://controller:5000/v3 # endpoint URL

[root@controller ~]# export OS_IDENTITY_API_VERSION=3 # Identity API version

8. View the environment variables

[root@controller keystone]# env | grep OS

HOSTNAME=controller

OS_IDENTITY_API_VERSION=3

OS_TOKEN=ADMIN_TOKEN

OS_URL=http://controller:5000/v3

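9. Create a domain, projects, users and roles

## Note: the default domain, the admin project, the admin user and the admin role are created by default, so only the service project still has to be created

1. Create the default domain: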

[root@controller keystone]# openstack domain create --description "Default Domain" default

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Default Domain |

| enabled | True |

| id | 5d191921be13447ba77bd25aeaad3c01 |

| name | default |

| tags | [] |

+-------------+----------------------------------+

2. Create a project, user and role for administrative operations in your environment:

(1) Create the admin project:

[root@controller keystone]# openstack project create --domain default --description "Admin Project" admin

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Admin Project |

| domain_id | 5d191921be13447ba77bd25aeaad3c01 |

| enabled | True |

| id | 664c99b0582f452a9cd04b6847912e41 |

| is_domain | False |

| name | admin |

| parent_id | 5d191921be13447ba77bd25aeaad3c01 |

| tags | [] |

+-------------+----------------------------------+

(2) Create the admin user: // the official guide's --password-prompt is replaced here with the password ADMIN_PASS

[root@controller keystone]# openstack user create --domain default --password ADMIN_PASS admin

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | 5d191921be13447ba77bd25aeaad3c01 |

| enabled | True |

| id | b8ee9f1c2b8640718f9628db33aad5f4 |

| name | admin |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

(3) Create the admin role:

[root@controller keystone]# openstack role create admin

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | 70c1f94f2edf4f6e9bdba9b7c3191a15 |

| name | admin |

+-----------+----------------------------------+

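(4) Add the admin role to the admin project and user:

[root@controller keystone]# openstack role add --project admin --user admin admin

(5) This guide uses a service project that contains a unique user for each service you add to your environment. Create the service project: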

[root@controller keystone]# openstack project create --domain default --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | 5d191921be13447ba77bd25aeaad3c01 |

| enabled | True |

| id | b2e04f3a01eb4994a2990d9e75f8de11 |

| is_domain | False |

| name | service |

| parent_id | 5d191921be13447ba77bd25aeaad3c01 |

| tags | [] |

+-------------+----------------------------------+

10. Authentication test

[root@controller ~]# vim admin-openrc

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

# Load the environment variables

[root@controller ~]# source admin-openrc

# Load them automatically at every login

[root@controller ~]# echo 'source /root/admin-openrc' >> /root/.bashrc

# Log out, then log back in

[root@controller ~]# logout

[root@controller ~]# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2022-09-26T08:42:45+0000 |

| id | gAAAAABjMVf1pipTbv_5WNZHh3yhsAjfhRlFV8SQING_Ra_NT382uAUTOnYo1m0-VJMms8tP_ieSCCpavejPMqHphmj7Mvxw0jYjWwXHTY8lV69UeJt5SJPqCwtJ0wZJqlQkVzkicZI_QqXO3UyvBTTAvv19X5Q6GzXnhJMkk0rJ09CtrM1fPJI |

| project_id | 664c99b0582f452a9cd04b6847912e41 |

| user_id | b8ee9f1c2b8640718f9628db33aad5f4 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
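Before running `openstack token issue`, it can help to confirm that the variables from admin-openrc are really present and that the temporary OS_TOKEN and OS_URL variables from step 7 are gone (the original session logs out, which also discards them). The following is a small sketch of such a check; the function name `check_openrc` is made up for illustration and relies on bash indirect expansion.

```shell
#!/usr/bin/env bash
# check_openrc: verify that the variables defined in admin-openrc are set and
# that the temporary OS_TOKEN / OS_URL variables from step 7 are unset.
# Prints one line per problem and returns 1 if any problem was found.
check_openrc() {
    local problems=0 name
    for name in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
                OS_USERNAME OS_PASSWORD OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        if [ -z "${!name:-}" ]; then
            echo "missing: $name"
            problems=1
        fi
    done
    for name in OS_TOKEN OS_URL; do
        if [ -n "${!name:-}" ]; then
            echo "still set: $name"
            problems=1
        fi
    done
    return "$problems"
}
```

A possible use is `source /root/admin-openrc && check_openrc && openstack token issue`, so that the token request is only attempted when the environment looks complete.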

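III. Glance Image Service

The OpenStack Image service is central to Infrastructure-as-a-Service (IaaS), as shown in the conceptual architecture of the official installation guide (https://docs.openstack.org/mitaka/zh_CN/install-guide-rdo/common/get_started_image_service.html#id1). It accepts API requests for disk or server images, and metadata definitions from end users or OpenStack Compute components. It also supports storing disk or server images on various repository types, including OpenStack Object Storage.

A number of periodic processes run on the OpenStack Image service to support caching. Replication services ensure consistency and availability across the cluster. Other periodic processes include auditors, updaters, and reapers.

The OpenStack Image service includes the following components:

- glance-api: accepts Image API calls for image discovery, retrieval, and storage.

- glance-registry: stores, processes, and retrieves metadata about images. Metadata includes items such as size and type.

1. Prerequisites

Before you install and configure the Image service, you must create a database, service credentials, and API endpoints.

(1) Create the database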

[root@controller ~]# mysql -u root -p

MariaDB [(none)]> CREATE DATABASE glance;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'172.16.1.160' IDENTIFIED BY 'GLANCE_DBPASS';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.00 sec)

GLANCE_DBPASS can be replaced with a suitable password.

(2) Create the user and assign a role

(I) Create the glance user:

[root@controller ~]# openstack user create --domain default --password GLANCE_PASS glance

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | 5d191921be13447ba77bd25aeaad3c01 |

| enabled | True |

| id | 821acc687c24458c9c643d5150fd266d |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

(II) Add the admin role to the glance user and service project:

[root@controller ~]# openstack role add --project service --user glance admin

(3) Create the service and register the API

(I) Create the glance service entity:

[root@controller ~]# openstack service create --name glance --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | 3bf4df103e5e42c5b507d67ed97921e8 |

| name | glance |

| type | image |

+-------------+----------------------------------+

(II) Create the Image service API endpoints:

[root@controller ~]# openstack endpoint create --region RegionOne image public http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 8e2877750e0b4398aa54628f5039ad65 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3bf4df103e5e42c5b507d67ed97921e8 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne image internal http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3a1041640a1b4206aa094376c84d4148 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3bf4df103e5e42c5b507d67ed97921e8 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne image admin http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | a6b14642d90e467da07da6b68e8ebeae |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3bf4df103e5e42c5b507d67ed97921e8 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+
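The three endpoint commands above differ only in the interface name, and the same pattern recurs for every later service. A minimal sketch of a loop over the three interfaces is shown below; the function name `create_endpoints` and the `DRY_RUN` switch are assumptions introduced for illustration, while the `openstack endpoint create` invocation is the one used above.

```shell
#!/usr/bin/env bash
# create_endpoints SERVICE_TYPE URL: run (or, with DRY_RUN=1, only print) the
# three `openstack endpoint create` calls used in this guide.
create_endpoints() {
    local service_type="$1" url="$2" interface
    for interface in public internal admin; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "openstack endpoint create --region RegionOne $service_type $interface $url"
        else
            openstack endpoint create --region RegionOne "$service_type" "$interface" "$url"
        fi
    done
}

# Print the commands for the image service without touching the cloud.
DRY_RUN=1 create_endpoints image http://controller:9292
```

Printing the commands first makes it easy to review the service type and URL before anything is registered in Keystone.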

2. Install and configure the components

(1) Install the packages

[root@controller ~]# yum install openstack-glance -y

(2) Edit the /etc/glance/glance-api.conf file and complete the following actions:

[root@controller ~]# cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf_bak

[root@controller ~]# egrep -v "^$|#" /etc/glance/glance-api.conf_bak > /etc/glance/glance-api.conf

[root@controller ~]# vim /etc/glance/glance-api.conf

- In the [database] section, configure database access:

[database]

...

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

Replace GLANCE_DBPASS with the password you chose for the Image service database.

- In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access:

[keystone_authtoken]

...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

...

flavor = keystone

Replace GLANCE_PASS with the password you chose for the glance user in the Identity service.

- In the [glance_store] section, configure the local file system store and the location of image files:

[glance_store]

...

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

(3) Edit the /etc/glance/glance-registry.conf file and complete the following actions:

[root@controller ~]# cp /etc/glance/glance-registry.conf /etc/glance/glance-registry.conf_bak

[root@controller ~]# egrep -v '^$|#' /etc/glance/glance-registry.conf_bak > /etc/glance/glance-registry.conf

[root@controller ~]# vim /etc/glance/glance-registry.conf

- In the [database] section, configure database access:

[database]

...

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

- In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access:

[keystone_authtoken]

...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

...

flavor = keystone

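GLANCE_PASS can be replaced with the password you chose for the glance user in the Identity service.

(4) Populate the Image service database:

su -s /bin/sh -c "glance-manage db_sync" glance

Verify:

mysql -u root -ppassword glance -e "show tables;"

3. Start the services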

[root@controller ~]# systemctl enable openstack-glance-api.service openstack-glance-registry.service

[root@controller ~]# systemctl start openstack-glance-api.service openstack-glance-registry.service

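4. Verification

(1) Download the source image:

[root@controller ~]# wget http://cdit-support.thundersoft.com/download/System_ISO/ubuntu18.04/ubuntu-18.04.5-live-server-amd64.iso

(2) Upload the image to the Image service using the QCOW2 disk format and the bare container format, and make it public so that all projects can access it. Note that the file downloaded above is an ISO installer image, for which `iso` is the matching `--disk-format` value; the command and output below are kept as originally run: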

[root@controller ~]# openstack image create "ubuntu18.04-server" --file ubuntu-18.04.5-live-server-amd64.iso --disk-format qcow2 --container-format bare --public

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| checksum | fcd77cd8aa585da4061655045f3f0511 |

| container_format | bare |

| created_at | 2022-09-27T07:11:16Z |

| disk_format | qcow2 |

| file | /v2/images/348a05ba-8b07-41bd-8b90-d1af41e5783b/file |

| id | 348a05ba-8b07-41bd-8b90-d1af41e5783b |

| min_disk | 0 |

| min_ram | 0 |

| name | ubuntu18.04-server |

| owner | 664c99b0582f452a9cd04b6847912e41 |

| properties | os_hash_algo='sha512', os_hash_value='5320be1a41792ec35ac05cdd7f5203c4fa6406dcfd7ca4a79042aa73c5803596e66962a01aabb35b8e64a2e37f19f7510bffabdd4955cff040e8522ff5e1ec1e', os_hidden='False' |

| protected | False |

| schema | /v2/schemas/image |

| size | 990904320 |

| status | active |

| tags | |

| updated_at | 2022-09-27T07:11:28Z |

| virtual_size | None |

| visibility | public |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
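The upload above labels a file ending in `.iso` as `qcow2`, and Glance stores whatever `--disk-format` it is given without inspecting the file. A rough way to pick the value from the file itself is to look at its magic bytes: qcow2 files begin with the bytes `QFI` followed by 0xfb, and ISO 9660 images carry the identifier `CD001` at byte offset 32769. The sketch below does exactly that and nothing more; the function name `guess_disk_format` is hypothetical, and anything it does not recognize is reported as `raw`.

```shell
#!/usr/bin/env bash
# guess_disk_format FILE: print "qcow2", "iso" or "raw" based on magic bytes.
#   qcow2: file starts with "QFI" (the fourth magic byte, 0xfb, is not checked)
#   iso:   ISO 9660 volume descriptor identifier "CD001" at byte offset 32769
guess_disk_format() {
    local file="$1" head3 iso_id
    # tr strips NUL bytes so that the command substitutions stay quiet.
    head3="$(head -c 3 "$file" 2>/dev/null | tr -d '\0')"
    iso_id="$(dd if="$file" bs=1 skip=32769 count=5 2>/dev/null | tr -d '\0')"
    if [ "$head3" = "QFI" ]; then
        echo qcow2
    elif [ "$iso_id" = "CD001" ]; then
        echo iso
    else
        echo raw
    fi
}
```

It could then be combined with the upload, for example `--disk-format "$(guess_disk_format ubuntu-18.04.5-live-server-amd64.iso)"`. Where qemu-img is installed, `qemu-img info` gives a more thorough answer.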

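IV. Placement Installation

In OpenStack, Placement is mainly used to track and monitor the usage of various resources, such as compute, storage and network resources. Placement tracks and manages the current usage of each resource. The placement API service was introduced in the nova repository in the 14.0.0 Newton release and was extracted into its own placement repository in the 19.0.0 Stein release, where it became an independent component. The Placement service provides a REST API stack and a data model for tracking the inventory and usage of different types of resources held by resource providers. A resource provider can be a compute node, a shared storage pool, an IP pool, and so on. For example, creating an instance consumes CPU and memory on a compute node, storage on a storage node, and an IP address on a network node. The types of consumed resources are tracked as resource classes. Placement provides a set of standard resource classes (for example DISK_GB, MEMORY_MB and VCPU) and the ability to define custom resource classes as needed.

(A) Prerequisites

1. Create the database and grant privileges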

[root@controller ~]# mysql -u root -p

MariaDB [(none)]> CREATE DATABASE placement;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'172.16.1.160' IDENTIFIED BY 'PLACEMENT_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

Query OK, 0 rows affected (0.000 sec)

PLACEMENT_DBPASS can be replaced with a suitable password.

2. Create the user and the API endpoints

# Create the placement user

[root@controller ~]# openstack user create --domain default --password PLACEMENT_PASS placement

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 3aa59a7790734af593f1e0f0bb544860 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Add the placement user to the service project with the admin role

[root@controller ~]# openstack role add --project service --user placement admin

# Create the placement service entity

[root@controller ~]# openstack service create --name placement --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | dbb9e71a38584bedbda1ff318c38bdb2 |

| name | placement |

| type | placement |

+-------------+----------------------------------+

# Create the Placement API endpoints

[root@controller ~]# openstack endpoint create --region RegionOne placement public http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b73f0c0eba1741fa8c20c3656278f3a9 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | dbb9e71a38584bedbda1ff318c38bdb2 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne placement internal http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 495bbf7aa8364210be166a39375d8121 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | dbb9e71a38584bedbda1ff318c38bdb2 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne placement admin http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 68a8c3a4e3b94a9eb30921b01170982a |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | dbb9e71a38584bedbda1ff318c38bdb2 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

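(B) Install and configure the components

1. Install the packages

[root@controller ~]# yum install openstack-placement-api -y

2. Edit the configuration file

Edit the /etc/placement/placement.conf file and complete the following actions: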

[root@controller ~]# cp /etc/placement/placement.conf /etc/placement/placement.conf_bak

[root@controller ~]# egrep -v "^$|#" /etc/placement/placement.conf_bak > /etc/placement/placement.conf

[root@controller ~]# vim /etc/placement/placement.conf

- In the [placement_database] section, configure database access:

[placement_database]

# ...

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

- In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = PLACEMENT_PASS

3. Synchronize the database

[root@controller ~]# su -s /bin/sh -c "placement-manage db sync" placement

[root@controller ~]# mysql -uroot -ppassword placement -e "show tables;"

# Ignore the output

4. Restart the service

[root@controller ~]# systemctl restart httpd

(C) Verification

[root@controller ~]# placement-status upgrade check

+----------------------------------+

| Upgrade Check Results |

+----------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+----------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+----------------------------------+

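V. Nova Compute Service

(A) Controller node configuration

1. Database privileges

(1) Create the databases

[root@controller ~]# mysql -u root -p

# Create the nova_api, nova and nova_cell0 databases:

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

# Grant proper privileges on the databases: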

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'172.16.1.160' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'172.16.1.160' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'172.16.1.160' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.00 sec)

NOVA_DBPASS can be replaced with a suitable password.
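The database preparation follows the same shape for every service: create the database, grant privileges to the service user for the controller address and for `%`, then flush privileges. The sketch below generates those statements instead of typing them one by one. The function name `service_db_sql` is hypothetical, and `IF NOT EXISTS` is an addition that is not in the statements above; it only makes a second run harmless.

```shell
#!/usr/bin/env bash
# service_db_sql USER PASSWORD DB...: print CREATE DATABASE and GRANT
# statements for each database, using the same two host patterns as above.
service_db_sql() {
    local user="$1" pass="$2" db host
    shift 2
    for db in "$@"; do
        echo "CREATE DATABASE IF NOT EXISTS ${db};"
        for host in 172.16.1.160 %; do
            echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}' IDENTIFIED BY '${pass}';"
        done
    done
    echo "FLUSH PRIVILEGES;"
}

# Print the statements for the three Nova databases.
service_db_sql nova NOVA_DBPASS nova_api nova nova_cell0
```

After reviewing the output, it could be piped into the client, for example `service_db_sql nova NOVA_DBPASS nova_api nova nova_cell0 | mysql -u root -p`.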

(2) Source the admin credentials to gain access to admin-only CLI commands

. admin-openrc

(3) Create the user and assign a role

# Create the nova user:

[root@controller ~]# openstack user create --domain default --password NOVA_PASS nova

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | f3e5c9439bf84f8cbb3a975eb852eb69 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Add the admin role to the nova user:

[root@controller ~]# openstack role add --project service --user nova admin

# Create the nova service entity:

[root@controller ~]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | ec3a89fcb73a4e0b9cf83f05372f78e8 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

# List the endpoints

[root@controller ~]# openstack endpoint list

+----------------------------------+-----------+--------------+--------------+---------+-----------+----------------------------+

| ID | Region | Service Name | Service Type | Enabled | Interface | URL |

+----------------------------------+-----------+--------------+--------------+---------+-----------+----------------------------+

| 0a489b615f244b14ba1cbbeb915a5eb4 | RegionOne | glance | image | True | admin | http://controller:9292 |

| 1161fbff859d4ffe96756ed17e975267 | RegionOne | glance | image | True | internal | http://controller:9292 |

| 495bbf7aa8364210be166a39375d8121 | RegionOne | placement | placement | True | internal | http://controller:8778 |

| 68a8c3a4e3b94a9eb30921b01170982a | RegionOne | placement | placement | True | admin | http://controller:8778 |

| 81e21f9e5069492086f7368ae983b640 | RegionOne | glance | image | True | public | http://controller:9292 |

| a91af59e7fa448698db106f1c9d9178c | RegionOne | keystone | identity | True | internal | http://controller:5000/v3/ |

| b73f0c0eba1741fa8c20c3656278f3a9 | RegionOne | placement | placement | True | public | http://controller:8778 |

| b792451b1fc344bbb541f8a4a5b67b50 | RegionOne | keystone | identity | True | public | http://controller:5000/v3/ |

| d2fb160ceac747d1afef1c348db92812 | RegionOne | keystone | identity | True | admin | http://controller:5000/v3/ |

+----------------------------------+-----------+--------------+--------------+---------+-----------+----------------------------+

# Create the Compute service API endpoints:

[root@controller ~]# openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 72aac47247aa4c62b719241487389fb4 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ec3a89fcb73a4e0b9cf83f05372f78e8 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | a60b87e2f3c24cc3b2e078d114a7296a |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ec3a89fcb73a4e0b9cf83f05372f78e8 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 561b92669bb7487195d74956dd3e14b8 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ec3a89fcb73a4e0b9cf83f05372f78e8 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

2. Install and configure the components

(1) Install the packages

[root@controller ~]# yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y

openstack-nova-api: accepts and responds to all Compute service requests and manages the life cycle of cloud instances

openstack-nova-conductor: updates the state of virtual machines in the database

openstack-nova-novncproxy: web-based VNC for operating cloud instances directly

openstack-nova-scheduler: the scheduler

(2) Edit the /etc/nova/nova.conf file and complete the following actions

[root@controller ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf_bak

[root@controller ~]# egrep -v "^$|#" /etc/nova/nova.conf_bak > /etc/nova/nova.conf

[root@controller ~]# vim /etc/nova/nova.conf

- In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

...

enabled_apis = osapi_compute,metadata

- In the [api_database] and [database] sections, configure database access:

[api_database]

...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

NOVA_DBPASS can be replaced with the password you chose for the Compute databases.

- In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

...

transport_url = rabbit://openstack:RABBIT_PASS@controller:5672/

RABBIT_PASS can be replaced with the password you chose for the openstack account in RabbitMQ.

- In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

...

auth_strategy = keystone

[keystone_authtoken]

...

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

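NOVA_PASS can be replaced with the password you chose for the nova user in the Identity service.

- In the [DEFAULT] section, configure my_ip to use the management interface IP address of the controller node: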

[DEFAULT]

...

my_ip = 172.16.1.160

- In the [DEFAULT] section, enable support for the Networking service:

[DEFAULT]

...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

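- In the [vnc] section, configure the VNC proxy to use the management interface IP address of the controller node:

[vnc]

...

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

- In the [glance] section, configure the location of the Image service API: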

[glance]

...

api_servers = http://controller:9292

- In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

...

lock_path = /var/lib/nova/tmp

- In the [placement] section, configure access to the Placement service:

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS
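The nova.conf edits above are all of the form "set this key in this section", which can be scripted instead of done in vim. Below is a minimal awk-based sketch under simple assumptions: section headers are written as `[NAME]` on their own line and keys contain no regular-expression metacharacters. The function name `ini_set` is made up for illustration; dedicated tools such as crudini exist for the same job.

```shell
#!/usr/bin/env bash
# ini_set FILE SECTION KEY VALUE: set KEY = VALUE inside [SECTION].
# An existing KEY in that section is replaced; otherwise the line is added
# right after the section header. A missing section is appended at the end.
ini_set() {
    local file="$1" section="$2" key="$3" value="$4" tmp
    tmp="$(mktemp)"
    awk -v section="$section" -v key="$key" -v value="$value" '
        function emit() { print key " = " value; done = 1 }
        /^\[.*\]$/ {
            in_section = ($0 == "[" section "]")
            print
            if (in_section && !done) emit()
            next
        }
        # Drop the old assignment of KEY inside the target section.
        in_section && $0 ~ "^" key "[ \t]*=" { next }
        { print }
        END { if (!done) { print "[" section "]"; emit() } }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

For example, `ini_set /etc/nova/nova.conf DEFAULT my_ip 172.16.1.160` followed by `ini_set /etc/nova/nova.conf glance api_servers http://controller:9292` would apply two of the settings listed above. Writing through `cat` keeps the owner and permissions of the original file.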

(3) Populate the Compute databases

# Populate the nova-api database

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

# Register the cell0 database

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

# Create the cell1 cell

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

# Populate the nova database

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

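Note: the output of these commands can be ignored; to confirm the result, log in to the database and check that the tables exist.

# Verify that cell0 and cell1 are registered correctly: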

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | b3252455-b9dd-427d-9395-da68baeda7c5 | rabbit://openstack:****@controller:5672/ | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

3. Start the services

[root@controller ~]# systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

[root@controller ~]# systemctl start openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

(II) Compute node configuration

1. Install and configure the components

(1) Install the packages

[root@computer1 ~]# yum install openstack-nova-compute -y

(2) Edit the /etc/nova/nova.conf file and complete the following steps

[root@compute1 ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf_bak

[root@compute1 yum.repos.d]# egrep -v "^$|#" /etc/nova/nova.conf_bak > /etc/nova/nova.conf

[root@compute1 ~]# vim /etc/nova/nova.conf

- In the [DEFAULT] section, enable only the compute and metadata APIs

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

- In the [DEFAULT] section, configure RabbitMQ message queue access

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ.

- In the [api] and [keystone_authtoken] sections, configure Identity service access

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

Replace NOVA_PASS with the password you chose for the nova user in the Identity service.

- In the [DEFAULT] section, configure the my_ip option

[DEFAULT]

# ...

my_ip = 172.16.1.161

The value stands in for MANAGEMENT_INTERFACE_IP_ADDRESS, the IP address of the management network interface on the compute node (typically 10.0.0.31 for the first node in the example architecture of the official guide; 172.16.1.161 in this deployment).

- In the [DEFAULT] section, enable support for the Networking service

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

- In the [vnc] section, enable and configure remote console access

[vnc]

# ...

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://172.16.1.160:6080/vnc_auto.html

- In the [glance] section, configure the location of the Image service API

[glance]

# ...

api_servers = http://controller:9292

- In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

- In the [placement] section, configure the Placement API

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

Replace PLACEMENT_PASS with the password you chose for the placement user in the Identity service. Comment out any other options in the [placement] section.

2. Finalize the installation

(1) Determine whether your compute node supports hardware acceleration for virtual machines

[root@computer1 ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

2

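If this command returns a value of one or greater, your compute node supports hardware acceleration and needs no additional configuration.

If this command returns zero, your compute node does not support hardware acceleration, and you must configure libvirt to use QEMU instead of KVM. In that case, edit the [libvirt] section of the /etc/nova/nova.conf file as follows: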

[libvirt]

...

virt_type = qemu
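The decision described above can be sketched as a small shell function. This is only an illustration: suggest_virt_type is a hypothetical name, and the optional file argument exists solely so the logic can be tried against a copy of /proc/cpuinfo.

```shell
# suggest_virt_type [CPUINFO_FILE]
# Print "kvm" if the CPU flags include vmx (Intel) or svm (AMD), else "qemu".
# Hypothetical helper; CPUINFO_FILE defaults to /proc/cpuinfo.
suggest_virt_type() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # grep -c prints 0 and exits non-zero when nothing matches, hence "|| true"
    count=$(grep -Ec '(vmx|svm)' "$cpuinfo" || true)
    if [ "$count" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}
```

Running suggest_virt_type on the compute node prints the value that would go into virt_type; only the qemu case requires editing the [libvirt] section.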

(2) Start the Compute service and its dependencies, and configure them to start automatically when the system boots

[root@computer1 ~]# systemctl enable libvirtd.service openstack-nova-compute.service

[root@computer1 ~]# systemctl start libvirtd.service openstack-nova-compute.service

(III) Verification

[root@controller ~]# openstack compute service list

+----+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+------------+----------+---------+-------+----------------------------+

| 1 | nova-conductor | controller | internal | enabled | up | 2022-10-10T09:18:59.000000 |

| 2 | nova-scheduler | controller | internal | enabled | up | 2022-10-10T09:19:03.000000 |

| 7 | nova-compute | compute1 | nova | enabled | up | 2022-10-10T09:19:04.000000 |

+----+----------------+------------+----------+---------+-------+----------------------------+

# List the API endpoints in the Identity service to verify connectivity with the Identity service:

[root@controller ~]# openstack catalog list

+-----------+-----------+-----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+-----------------------------------------+

| glance | image | RegionOne |

| | | admin: http://controller:9292 |

| | | RegionOne |

| | | internal: http://controller:9292 |

| | | RegionOne |

| | | public: http://controller:9292 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://controller:5000/v3/ |

| | | RegionOne |

| | | public: http://controller:5000/v3/ |

| | | RegionOne |

| | | admin: http://controller:5000/v3/ |

| | | |

| placement | placement | RegionOne |

| | | internal: http://controller:8778 |

| | | RegionOne |

| | | admin: http://controller:8778 |

| | | RegionOne |

| | | public: http://controller:8778 |

| | | |

| nova | compute | RegionOne |

| | | admin: http://controller:8774/v2.1 |

| | | RegionOne |

| | | public: http://controller:8774/v2.1 |

| | | RegionOne |

| | | internal: http://controller:8774/v2.1 |

| | | |

+-----------+-----------+-----------------------------------------+

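VI. Networking service

OpenStack networking is provided by Neutron, an SDN (Software Defined Networking) component. Its pluggable architecture supports plugging in switches, firewalls, load balancers and so on, all defined in software, which gives fine-grained control over the whole cloud infrastructure. In this deployment the ens33 interface serves as the external network (in OpenStack terminology the external network is usually called the provider network) and also as the management network, which is convenient for test access; in production the two should be separated. ens37 carries the tenant network, that is, the VXLAN network, and ens38 carries the Ceph cluster network.

OpenStack networking can be deployed with either OVS or LinuxBridge. This guide uses LinuxBridge; the two deployments are broadly similar.

Services to start on the controller node: neutron-server.service, neutron-linuxbridge-agent.service, neutron-dhcp-agent.service, neutron-metadata-agent.service, neutron-l3-agent.service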

(I) Controller node configuration

1. Prerequisites

(1) Create the database and grant privileges

[root@controller ~]# mysql -u root -p

MariaDB [(none)]> CREATE DATABASE neutron;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'172.16.1.160' IDENTIFIED BY 'NEUTRON_DBPASS';

Query OK, 0 rows affected (0.00 sec)

These statements grant proper access to the neutron database; replace NEUTRON_DBPASS with a suitable password.

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.00 sec)

(2) Source the admin credentials to gain access to admin-only CLI commands

[root@controller ~]# . admin-openrc ## load the environment variables

(3) Create the service credentials

- Create the neutron user

[root@controller ~]# openstack user create --domain default --password NEUTRON_PASS neutron

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 81c68ee930884059835110c1b31b305c |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

- Add the admin role to the neutron user

[root@controller ~]# openstack role add --project service --user neutron admin

- Create the neutron service entity

[root@controller ~]# openstack service create --name neutron --description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | cac6a744698445a88092f67521973bc3 |

| name | neutron |

| type | network |

+-------------+----------------------------------+

- Create the Networking service API endpoints

[root@controller ~]# openstack endpoint create --region RegionOne network public http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 040aa02925db4ec2b9d3ee43d94352e2 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | cac6a744698445a88092f67521973bc3 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network internal http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 92ccf1b6b687466493c2f05911510368 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | cac6a744698445a88092f67521973bc3 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network admin http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 6a0ddef76c81405f99500f70739ce945 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | cac6a744698445a88092f67521973bc3 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

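2. Configure networking options (choose either option 1 or option 2 below)

Option 1: Provider networks

1. Install the components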

[root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

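openstack-neutron-linuxbridge: the Linux bridge agent, used to create bridged network interfaces

ebtables: firewall rules for bridged traffic

2. Configure the server component

Edit the /etc/neutron/neutron.conf file and complete the following steps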

[root@controller ~]# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/neutron.conf_bak > /etc/neutron/neutron.conf

[root@controller ~]# vim /etc/neutron/neutron.conf

- In the [database] section, configure database access

[database]

...

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

- In the [DEFAULT] section, enable the ML2 plug-in and disable additional plug-ins

[DEFAULT]

...

core_plugin = ml2

service_plugins =

- In the [DEFAULT] section, configure RabbitMQ message queue access

[DEFAULT]

...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ.

- In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access

[DEFAULT]

...

auth_strategy = keystone

[keystone_authtoken]

...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

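Replace NEUTRON_PASS with the password you chose for the neutron user in the Identity service.

- In the [DEFAULT] and [nova] sections, configure Networking to notify Compute of network topology changes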

[DEFAULT]

...

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

[nova]

...

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

Replace NOVA_PASS with the password you chose for the nova user in the Identity service.

- In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

...

lock_path = /var/lib/neutron/tmp

3. Configure the Modular Layer 2 (ML2) plug-in

Edit the /etc/neutron/plugins/ml2/ml2_conf.ini file and complete the following steps

[root@controller ~]# cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/plugins/ml2/ml2_conf.ini_bak > /etc/neutron/plugins/ml2/ml2_conf.ini

[root@controller ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

- In the [ml2] section, enable flat and VLAN networks

[ml2]

...

type_drivers = flat,vlan

- In the [ml2] section, disable self-service networks

[ml2]

...

tenant_network_types =

- In the [ml2] section, enable the Linux bridge mechanism

[ml2]

...

mechanism_drivers = linuxbridge

- In the [ml2] section, enable the port security extension driver

[ml2]

...

extension_drivers = port_security

- In the [ml2_type_flat] section, configure the provider virtual network as a flat network

[ml2_type_flat]

...

flat_networks = provider

- In the [securitygroup] section, enable ipset to increase the efficiency of security group rules

[securitygroup]

...

enable_ipset = True

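4. Configure the Linux bridge agent

The Linux bridge agent builds layer-2 virtual networks for instances and handles security group rules.

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file and complete the following steps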

[root@controller ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/plugins/ml2/linuxbridge_agent.ini_bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[root@controller ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

- In the [linux_bridge] section, map the provider virtual network to the provider physical network interface

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

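Replace PROVIDER_INTERFACE_NAME with the name of the underlying provider physical network interface; in this deployment it is ens33.

- In the [vxlan] section, disable VXLAN overlay networks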

[vxlan]

enable_vxlan = False

- In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver

[securitygroup]

...

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

5. Edit the kernel configuration file /etc/sysctl.conf so that the kernel supports network bridge filters

[root@controller ~]# vi /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@controller ~]# modprobe br_netfilter

[root@controller ~]# sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@controller ~]# sed -i '$amodprobe br_netfilter' /etc/rc.d/rc.local

[root@controller ~]# chmod +x /etc/rc.d/rc.local
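As an alternative to appending modprobe to rc.local, systemd can load the module at boot from a file under /etc/modules-load.d. The sketch below is not what this guide does; persist_module is a hypothetical helper, and the file name NAME.conf is simply a convention chosen here.

```shell
# persist_module NAME [DIR]
# Write NAME into DIR/NAME.conf so that systemd-modules-load loads it at boot.
# Hypothetical helper; DIR defaults to /etc/modules-load.d.
persist_module() {
    module="$1"
    dir="${2:-/etc/modules-load.d}"
    printf '%s\n' "$module" > "$dir/$module.conf"
}
```

On a node this would be called as persist_module br_netfilter, after which the bridge settings in /etc/sysctl.conf should apply again on the next boot without relying on rc.local.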

6. Configure the DHCP agent

Edit the /etc/neutron/dhcp_agent.ini file and complete the following steps

[root@controller ~]# cp /etc/neutron/dhcp_agent.ini /etc/neutron/dhcp_agent.ini_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/dhcp_agent.ini_bak > /etc/neutron/dhcp_agent.ini

[root@controller ~]# vim /etc/neutron/dhcp_agent.ini

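- In the [DEFAULT] section, configure the Linux bridge interface driver and the Dnsmasq DHCP driver, and enable isolated metadata so that instances on provider networks can reach the metadata service over the network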

[DEFAULT]

...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Option 2: Self-service networks

1. Install the components

[root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

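openstack-neutron-linuxbridge: the Linux bridge agent, used to create bridged network interfaces

ebtables: firewall rules for bridged traffic

2. Configure the server component

Edit the /etc/neutron/neutron.conf file and complete the following steps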

[root@controller ~]# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/neutron.conf_bak > /etc/neutron/neutron.conf

[root@controller ~]# vim /etc/neutron/neutron.conf

- In the [database] section, configure database access

[database]

...

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

- In the [DEFAULT] section, enable the ML2 plug-in, the router service, and overlapping IP addresses

[DEFAULT]

...

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

- In the [DEFAULT] section, configure RabbitMQ message queue access

[DEFAULT]

...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ.

- In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access

[DEFAULT]

...

auth_strategy = keystone

[keystone_authtoken]

...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

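Replace NEUTRON_PASS with the password you chose for the neutron user in the Identity service.

- In the [DEFAULT] and [nova] sections, configure Networking to notify Compute of network topology changes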

[DEFAULT]

...

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

Replace NOVA_PASS with the password you chose for the nova user in the Identity service.

- In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

...

lock_path = /var/lib/neutron/tmp

3. Configure the Modular Layer 2 (ML2) plug-in

Edit the /etc/neutron/plugins/ml2/ml2_conf.ini file and complete the following steps

[root@controller ~]# cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/plugins/ml2/ml2_conf.ini_bak > /etc/neutron/plugins/ml2/ml2_conf.ini

[root@controller ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

- In the [ml2] section, enable flat, VLAN, and VXLAN networks

[ml2]

...

type_drivers = flat,vlan,vxlan

- In the [ml2] section, enable VXLAN self-service networks

[ml2]

...

tenant_network_types = vxlan

- In the [ml2] section, enable the Linux bridge and layer-2 population mechanisms

[ml2]

...

mechanism_drivers = linuxbridge,l2population

- In the [ml2] section, enable the port security extension driver

[ml2]

...

extension_drivers = port_security

- In the [ml2_type_flat] section, configure the provider virtual network as a flat network

[ml2_type_flat]

...

flat_networks = provider

- In the [ml2_type_vxlan] section, configure the VXLAN network identifier range for self-service networks:

[ml2_type_vxlan]

# ...

vni_ranges = 1:1000

- In the [securitygroup] section, enable ipset to increase the efficiency of security group rules

[securitygroup]

...

enable_ipset = True

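4. Configure the Linux bridge agent

The Linux bridge agent builds layer-2 virtual networks for instances and handles security group rules.

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file and complete the following steps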

[root@controller ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/plugins/ml2/linuxbridge_agent.ini_bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[root@controller ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

- In the [linux_bridge] section, map the provider virtual network to the provider physical network interface

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

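Replace PROVIDER_INTERFACE_NAME with the name of the underlying provider physical network interface; in this deployment it is ens33.

- In the [vxlan] section, enable VXLAN overlay networks, configure the IP address of the physical network interface that handles overlay networks, and enable layer-2 population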

[vxlan]

enable_vxlan = true

local_ip = OVERLAY_INTERFACE_IP_ADDRESS

l2_population = true

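Replace OVERLAY_INTERFACE_IP_ADDRESS with the IP address of the underlying physical network interface that handles overlay networks. The example architecture tunnels traffic to the other nodes over the management interface, so OVERLAY_INTERFACE_IP_ADDRESS is replaced with the management IP address of the controller node (10.10.10.1).

- In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver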

[securitygroup]

...

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
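To fill in OVERLAY_INTERFACE_IP_ADDRESS it may be convenient to read the address straight from the interface. The sketch below assumes the one-line output format of ip -4 -o addr show; first_ipv4 is a hypothetical helper name, and which interface carries the overlay (ens37 is the tenant interface named in the planning paragraph of this chapter) depends on your own layout.

```shell
# first_ipv4
# Read the output of `ip -4 -o addr show dev IFACE` on stdin and print the
# first IPv4 address without its prefix length. Hypothetical helper.
first_ipv4() {
    awk '$3 == "inet" { split($4, parts, "/"); print parts[1]; exit }'
}
```

On a node it would be used as: ip -4 -o addr show dev ens37 | first_ipv4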

5. Edit the kernel configuration file /etc/sysctl.conf so that the kernel supports network bridge filters

[root@controller ~]# vim /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@controller ~]# modprobe br_netfilter

[root@controller ~]# sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@controller ~]# sed -i '$amodprobe br_netfilter' /etc/rc.d/rc.local

[root@controller ~]# chmod +x /etc/rc.d/rc.local

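6. Configure the layer-3 agent

The layer-3 (L3) agent provides routing and NAT services for self-service virtual networks.

Edit the /etc/neutron/l3_agent.ini file and complete the following steps: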

[root@controller neutron]# cp /etc/neutron/l3_agent.ini /etc/neutron/l3_agent.ini_bak

[root@controller neutron]# egrep -v "^$|#" /etc/neutron/l3_agent.ini_bak > /etc/neutron/l3_agent.ini

[root@controller neutron]# vim /etc/neutron/l3_agent.ini

In the [DEFAULT] section, configure the Linux bridge interface driver:

[DEFAULT]

# ...

interface_driver = linuxbridge

7. Configure the DHCP agent

Edit the /etc/neutron/dhcp_agent.ini file and complete the following steps

[root@controller ~]# cp /etc/neutron/dhcp_agent.ini /etc/neutron/dhcp_agent.ini_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/dhcp_agent.ini_bak > /etc/neutron/dhcp_agent.ini

[root@controller ~]# vim /etc/neutron/dhcp_agent.ini

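- In the [DEFAULT] section, configure the Linux bridge interface driver and the Dnsmasq DHCP driver, and enable isolated metadata so that instances on provider networks can reach the metadata service over the network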

[DEFAULT]

...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

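3. Configure the metadata agent

Purpose: the metadata agent provides configuration information, such as credentials, to instances.

Edit the /etc/neutron/metadata_agent.ini file and complete the following steps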

[root@controller ~]# cp /etc/neutron/metadata_agent.ini /etc/neutron/metadata_agent.ini_bak

[root@controller ~]# egrep -v "^$|#" /etc/neutron/metadata_agent.ini_bak > /etc/neutron/metadata_agent.ini

[root@controller ~]# vim /etc/neutron/metadata_agent.ini

[DEFAULT]

...

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

Replace METADATA_SECRET with a suitable secret for the metadata proxy.
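METADATA_SECRET is a shared secret rather than a user password, so a random string is a reasonable choice. One possible way to generate it is sketched below; gen_secret is a hypothetical helper name, and openssl rand -hex 10 is a common alternative when OpenSSL is installed.

```shell
# gen_secret
# Print 20 random hexadecimal characters (10 random bytes) read from
# /dev/urandom. Hypothetical helper for illustration.
gen_secret() {
    od -An -N10 -tx1 /dev/urandom | tr -d ' \n'
    echo
}
```

The same value must be used for metadata_proxy_shared_secret in both /etc/neutron/metadata_agent.ini and the [neutron] section of /etc/nova/nova.conf.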

4. Configure the Compute service to use the Networking service

Edit the /etc/nova/nova.conf file and complete the following steps

[root@controller ~]# vim /etc/nova/nova.conf

- In the [neutron] section, configure access parameters, enable the metadata proxy, and configure the shared secret

[neutron]

...

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET

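Replace NEUTRON_PASS with the password you chose for the neutron user in the Identity service.

Replace METADATA_SECRET with the secret you chose for the metadata proxy.

5. Finalize the installation

(1) The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini that points to the ML2 plug-in configuration file /etc/neutron/plugins/ml2/ml2_conf.ini. If this symbolic link does not exist, create it with the following command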

[root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
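The "create it only if it does not exist" condition can be made explicit so that the step is safe to re-run. A minimal sketch follows; ensure_symlink is a hypothetical helper name, and it deliberately leaves anything that already exists at the link path untouched.

```shell
# ensure_symlink TARGET LINK
# Create LINK pointing at TARGET unless something already exists at LINK.
# Hypothetical helper for illustration.
ensure_symlink() {
    target="$1"
    link="$2"
    if [ -L "$link" ] || [ -e "$link" ]; then
        return 0    # already present (symlink or regular file); do nothing
    fi
    ln -s "$target" "$link"
}
```

For this step the call would be: ensure_symlink /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini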

(2) Populate the database

[root@controller ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

(3) Restart the Compute API service

[root@controller ~]# systemctl restart openstack-nova-api.service

(4) Start the Networking services and configure them to start when the system boots

- For both networking options:

[root@controller ~]# systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

[root@controller ~]# systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

- For networking option 2, also start the layer-3 service and enable it to start when the system boots

[root@controller ~]# systemctl start neutron-l3-agent.service

[root@controller ~]# systemctl enable neutron-l3-agent.service

(II) Compute node configuration

1. Install the components

[root@computer1 ~]# yum install openstack-neutron-linuxbridge ebtables ipset -y

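2. Configure the common component

The Networking common component configuration includes the authentication mechanism, message queue, and plug-in.

Edit the /etc/neutron/neutron.conf file and complete the following steps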

[root@computer1 ~]# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf_bak

[root@computer1 ~]# egrep -v "^$|#" /etc/neutron/neutron.conf_bak > /etc/neutron/neutron.conf

[root@computer1 ~]# vim /etc/neutron/neutron.conf

- In the [DEFAULT] section, configure RabbitMQ message queue access

[DEFAULT]

...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ.

- In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access

[DEFAULT]

...

auth_strategy = keystone

[keystone_authtoken]

...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

Replace NEUTRON_PASS with the password you chose for the neutron user in the Identity service.

- In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

...

lock_path = /var/lib/neutron/tmp

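3. Configure networking options

Option 1: Provider networks

This configuration is identical to the one on the controller node, so copy it to the compute node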

[root@computer1 ~]# scp -r root@controller:/etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Edit the kernel configuration file /etc/sysctl.conf so that the kernel supports network bridge filters

[root@compute1 ~]# vi /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@compute1 ~]# modprobe br_netfilter

[root@compute1 ~]# sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@compute1 ~]# sed -i '$amodprobe br_netfilter' /etc/rc.d/rc.local

[root@compute1 ~]# chmod +x /etc/rc.d/rc.local

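Option 2: Self-service networks

1. Configure the Linux bridge agent

The Linux bridge agent builds layer-2 (bridging and switching) virtual networking infrastructure for instances and handles security groups.

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file and complete the following steps

[root@compute1 ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini_bak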

[root@compute1 ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

In the [linux_bridge] section, map the provider virtual network to the provider physical network interface:

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

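Replace PROVIDER_INTERFACE_NAME with the name of the underlying provider physical network interface.

In the [vxlan] section, enable VXLAN overlay networks, configure the IP address of the physical network interface that handles overlay networks, and enable layer-2 population: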

[vxlan]

enable_vxlan = true

local_ip = OVERLAY_INTERFACE_IP_ADDRESS

l2_population = true

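Replace OVERLAY_INTERFACE_IP_ADDRESS with the IP address of the underlying physical network interface that handles overlay networks. The example architecture tunnels traffic to the other nodes over the management interface, so OVERLAY_INTERFACE_IP_ADDRESS is replaced with the management IP address of the compute node.

In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver: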

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

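2. Edit the kernel configuration file /etc/sysctl.conf so that the kernel supports network bridge filters

[root@compute1 ~]# vim /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@compute1 ~]# modprobe br_netfilter

[root@compute1 ~]# sysctl -p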

4. Configure the Compute service to use the Networking service

Edit the /etc/nova/nova.conf file and complete the following steps

[root@computer1 ~]# vim /etc/nova/nova.conf

- In the [neutron] section, configure access parameters

[neutron]

...

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

Replace NEUTRON_PASS with the password you chose for the neutron user in the Identity service.

5. Finalize the installation

- Restart the Compute service

[root@computer1 ~]# systemctl restart openstack-nova-compute.service

- Start the Linux bridge agent and configure it to start when the system boots

[root@computer1 ~]# systemctl enable neutron-linuxbridge-agent.service

[root@computer1 ~]# systemctl start neutron-linuxbridge-agent.service

(III) Verify operation

[root@controller ~]# openstack network agent list

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| 02199446-fe60-4f56-a0f0-3ea6827f6891 | Linux bridge agent | compute1 | None | :-) | UP | neutron-linuxbridge-agent |

| 1d8812ec-4237-4d75-937c-40a9fac82c65 | Metadata agent | controller | None | :-) | UP | neutron-metadata-agent |

| 2cd47568-54a1-4bea-b2fa-bb1d1b2fe935 | L3 agent | controller | nova | :-) | UP | neutron-l3-agent |

| 533aa260-78f3-4391-b14c-4a1639eda135 | DHCP agent | controller | nova | :-) | UP | neutron-dhcp-agent |

| fd1c7b5c-21ad-4e47-967d-4625e66c3962 | Linux bridge agent | controller | None | :-) | UP | neutron-linuxbridge-agent |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
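With more agents the table becomes tedious to read by eye. The sketch below filters it for agents whose Alive column is not the :-) marker shown above. It assumes the seven-column table layout printed by this version of the client, and dead_agents is a hypothetical helper name.

```shell
# dead_agents
# Read `openstack network agent list` table output on stdin and print
# "HOST BINARY" for every agent whose Alive column is not ":-)".
# Hypothetical helper; assumes the table layout shown above.
dead_agents() {
    awk -F'|' '
        NF >= 8 {                               # data and header rows only
            alive = $6; host = $4; binary = $8
            gsub(/^[ \t]+|[ \t]+$/, "", alive)
            gsub(/^[ \t]+|[ \t]+$/, "", host)
            gsub(/^[ \t]+|[ \t]+$/, "", binary)
            if (alive != "Alive" && alive != ":-)") print host, binary
        }
    '
}
```

Used as: openstack network agent list | dead_agents. Empty output means every listed agent reports as alive.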

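(IV) Create an instance (on the controller node)

1. Create virtual networks

- Provider network

1. Source the admin credentials to gain access to admin-only CLI commands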

[root@controller ~]# . admin-openrc

2. Create the provider network

[root@controller ~]# openstack network create --share --external --provider-physical-network provider --provider-network-type flat provider

+---------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+---------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2022-10-17T10:13:25Z |

| description | |

| dns_domain | None |

| id | 3ae54d14-14e6-48a2-ab7d-10329ce9bb93 |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| location | cloud='', project.domain_id=, project.domain_name='Default', project.id='2c9db0df0c9d4543816a07cec1e4d5d5', project.name='admin', region_name='', zone= |

| mtu | 1500 |

| name | provider |

| port_security_enabled | True |

| project_id | 2c9db0df0c9d4543816a07cec1e4d5d5 |

| provider:network_type | flat |

| provider:physical_network | provider |

| provider:segmentation_id | None |

| qos_policy_id | None |

| revision_number | 1 |

| router:external | External |

| segments | None |

| shared | True |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2022-10-17T10:13:25Z |

+---------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

3. Create a subnet

[root@controller ~]# openstack subnet create --network provider --allocation-pool start=172.16.1.220,end=172.16.1.240 --dns-nameserver 192.168.87.8 --gateway 172.16.1.2 --subnet-range 172.16.1.0/24 provider

+-------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+-------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| allocation_pools | 172.16.1.220-172.16.1.240 |

| cidr | 172.16.1.0/24 |

| created_at | 2022-10-17T10:16:10Z |

| description | |

| dns_nameservers | 192.168.87.8 |

| enable_dhcp | True |

| gateway_ip | 172.16.1.2 |

| host_routes | |

| id | eba80af0-8b35-4b5d-9e61-4a524579f631 |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| location | cloud='', project.domain_id=, project.domain_name='Default', project.id='2c9db0df0c9d4543816a07cec1e4d5d5', project.name='admin', region_name='', zone= |

| name | provider |

| network_id | 3ae54d14-14e6-48a2-ab7d-10329ce9bb93 |

| prefix_length | None |

| project_id | 2c9db0df0c9d4543816a07cec1e4d5d5 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2022-10-17T10:16:10Z |

+-------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

- Self-service network

1. Source the admin credentials to gain access to admin-only CLI commands

[root@controller ~]# . admin-openrc

2. Create the self-service network

[root@controller ~]# openstack network create selfservice

+---------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+---------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2022-10-17T09:51:04Z |

| description | |

| dns_domain | None |

| id | 7bf4d5b8-7190-4e05-b3cb-201dae570c1d |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| location | cloud='', project.domain_id=, project.domain_name='Default', project.id='2c9db0df0c9d4543816a07cec1e4d5d5', project.name='admin', region_name='', zone= |

| mtu | 1450 |

| name | selfservice |

| port_security_enabled | True |

| project_id | 2c9db0df0c9d4543816a07cec1e4d5d5 |

| provider:network_type | vxlan |

| provider:physical_network | None |

| provider:segmentation_id | 1 |

| qos_policy_id | None |

| revision_number | 1 |

| router:external | Internal |

| segments | None |

| shared | False |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2022-10-17T09:51:04Z |

+---------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+
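The mtu of 1450 in this output, compared with 1500 on the flat provider network, reflects the VXLAN encapsulation overhead. A quick check of the arithmetic, assuming an IPv4 underlay with a 1500-byte MTU (vxlan_mtu is a hypothetical helper name):

```shell
# vxlan_mtu UNDERLAY_MTU
# Print the MTU left for instances inside a VXLAN tunnel over IPv4.
# Hypothetical helper; header sizes are the standard fixed sizes.
vxlan_mtu() {
    underlay_mtu="$1"
    outer_ipv4=20    # outer IPv4 header
    outer_udp=8      # outer UDP header
    vxlan_hdr=8      # VXLAN header
    inner_eth=14     # encapsulated inner Ethernet header
    echo $(( underlay_mtu - outer_ipv4 - outer_udp - vxlan_hdr - inner_eth ))
}
```

vxlan_mtu 1500 prints 1450, matching the value reported for the selfservice network.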

3. Create a subnet

[root@controller ~]# openstack subnet create --network selfservice --dns-nameserver 114.114.114.114 --gateway 10.10.10.254 --subnet-range 10.10.10.0/24 selfservice

+-------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+-------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| allocation_pools | 10.10.10.1-10.10.10.253 |

| cidr | 10.10.10.0/24 |

| created_at | 2022-10-17T10:03:05Z |

| description | |

| dns_nameservers | 114.114.114.114 |

| enable_dhcp | True |

| gateway_ip | 10.10.10.254 |

| host_routes | |

| id | d5898751-981c-40e2-8e1b-bbe9812cdbf6 |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| location | cloud='', project.domain_id=, project.domain_name='Default', project.id='2c9db0df0c9d4543816a07cec1e4d5d5', project.name='admin', region_name='', zone= |

| name | selfservice |

| network_id | 7bf4d5b8-7190-4e05-b3cb-201dae570c1d |

| prefix_length | None |

| project_id | 2c9db0df0c9d4543816a07cec1e4d5d5 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2022-10-17T10:03:05Z |

+-------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+
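As a quick sanity check on the output above, the gateway_ip and both ends of allocation_pools should fall inside the cidr. A minimal sketch in plain shell, using the values from the output; the helper names ip_to_int and in_cidr are hypothetical and not part of any OpenStack tool.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
# The body runs in a subshell so the IFS change does not leak out.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed if address $1 lies inside network $2 with prefix length $3.
in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "$2")
    mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Check the gateway and the allocation pool bounds against 10.10.10.0/24.
for ip in 10.10.10.254 10.10.10.1 10.10.10.253; do
    if in_cidr "$ip" 10.10.10.0 24; then
        echo "$ip is inside 10.10.10.0/24"
    else
        echo "$ip is OUTSIDE 10.10.10.0/24"
    fi
done
```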

4. Create a router

[root@controller ~]# openstack router create router

+-------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+-------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2022-10-17T10:04:49Z |

| description | |

| distributed | False |

| external_gateway_info | null |

| flavor_id | None |

| ha | False |

| id | 33c7f8f1-7798-49fc-a3d5-83786a70819b |

| location | cloud='', project.domain_id=, project.domain_name='Default', project.id='2c9db0df0c9d4543816a07cec1e4d5d5', project.name='admin', region_name='', zone= |

| name | router |

| project_id | 2c9db0df0c9d4543816a07cec1e4d5d5 |

| revision_number | 1 |

| routes | |

| status | ACTIVE |

| tags | |

| updated_at | 2022-10-17T10:04:49Z |

+-------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------+

5. Add the self-service network subnet as an interface on the router

[root@controller ~]# openstack router add subnet router selfservice

6. Set a gateway on the provider network for the router

[root@controller ~]# openstack router set router --external-gateway provider
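The router set command prints nothing on success. One way to confirm the gateway was attached is to look at the external_gateway_info field, which was null in the router create output earlier. A sketch under that assumption; the helper name router_has_gateway is hypothetical, and the exact text printed for an unset field may differ between client versions.

```shell
#!/bin/sh
# Succeed if the named router has an external gateway configured.
# Reads the external_gateway_info field of `openstack router show`.
router_has_gateway() {
    info=$(openstack router show "$1" -f value -c external_gateway_info)
    # ASSUMPTION: an unset gateway is rendered as empty, "null" or "None".
    case "$info" in
        ""|null|None) return 1 ;;
        *) return 0 ;;
    esac
}

# Usage on the controller (with admin credentials loaded):
#   router_has_gateway router && echo "external gateway is set"
```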

2. Verify operation

1. List the network namespaces. You should see one 'qrouter' namespace and two 'qdhcp' namespaces.

[root@controller ~]# ip netns

qdhcp-3ae54d14-14e6-48a2-ab7d-10329ce9bb93 (id: 2)

qrouter-33c7f8f1-7798-49fc-a3d5-83786a70819b (id: 1)

qdhcp-7bf4d5b8-7190-4e05-b3cb-201dae570c1d (id: 0)
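The expected counts (one qrouter, two qdhcp) can also be checked mechanically. A minimal sketch that tallies the namespace kinds from ip netns output; the helper name count_namespaces is hypothetical.

```shell
#!/bin/sh
# Count qrouter and qdhcp namespaces from `ip netns` output read on stdin.
count_namespaces() {
    awk '
        /^qrouter-/ { routers++ }
        /^qdhcp-/   { dhcps++ }
        END { printf "qrouter=%d qdhcp=%d\n", routers, dhcps }
    '
}

# Usage on the controller:
#   ip netns | count_namespaces
```

For the listing above this should report one qrouter and two qdhcp namespaces.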

2. List the ports on the router to determine the gateway IP address on the provider network

[root@controller ~]# neutron router-port-list router

neutron CLI is deprecated and will be removed in the future. Use openstack CLI instead.

+--------------------------------------+------+----------------------------------+-------------------+-------------------------------------------------------------------------------------+

| id | name | tenant_id | mac_address | fixed_ips |

+--------------------------------------+------+----------------------------------+-------------------+-------------------------------------------------------------------------------------+

| 90943cea-ee27-42a3-9b4f-6b6a8f4c70ab | | 2c9db0df0c9d4543816a07cec1e4d5d5 | fa:16:3e:f3:31:ec | {"subnet_id": "d5898751-981c-40e2-8e1b-bbe9812cdbf6", "ip_address": "10.10.10.254"} |

| ae5858c5-03a1-4a51-9d8e-71d0d74b7900 | | | fa:16:3e:3b:60:2f | {"subnet_id": "eba80af0-8b35-4b5d-9e61-4a524579f631", "ip_address": "172.16.1.236"} |

+--------------------------------------+------+----------------------------------+-------------------+-------------------------------------------------------------------------------------+
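As the warning above notes, the neutron CLI is deprecated. The same information should be available from the unified client. A sketch, assuming the installed openstack client supports the --router filter of port list; the helper name router_port_ips is hypothetical.

```shell
#!/bin/sh
# Print the fixed IP addresses of every port attached to the given router,
# using the openstack client instead of the deprecated neutron client.
router_port_ips() {
    openstack port list --router "$1" -f value -c "Fixed IP Addresses"
}

# Usage on the controller:
#   router_port_ips router
#   ping -c 4 172.16.1.236   # provider-side gateway address from the table above
```

The ping at the end checks that the router's provider-side address is reachable from the controller.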

3. Create a flavor (instance type)

[root@controller ~]# openstack flavor create --id 0 --vcpus 2 --ram 512 --disk 1 m1.nano

+----------------------------+---------+

| Field | Value |

+----------------------------+---------+

| OS-FLV-DISABLED:disabled | False |

| OS-FLV-EXT-DATA:ephemeral | 0 |

| disk | 1 |

| id | 0 |

| name | m1.nano |

| os-flavor-access:is_public | True |

| properties | |

| ram | 512 |

| rxtx_factor | 1.0 |

| swap | |

| vcpus | 2 |

+----------------------------+---------+

4. Generate a key pair

# Generate and add a key pair

[root@controller ~]# ssh-keygen -q -N ""

[root@controller ~]# openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

+-------------+-------------------------------------------------+

| Field | Value |

+-------------+-------------------------------------------------+

| fingerprint | 78:21:ea:39:d0:e0:a0:12:26:55:5e:50:62:cb:f4:78 |

| name | mykey |

| user_id | 58126687cbcc4888bfa9ab73a2256f27 |

+-------------+-------------------------------------------------+

# Verify that the public key was added

[root@controller ~]# openstack keypair list

+-------+-------------------------------------------------+

| Name | Fingerprint |

+-------+-------------------------------------------------+

| mykey | 78:21:ea:39:d0:e0:a0:12:26:55:5e:50:62:cb:f4:78 |

+-------+-------------------------------------------------+
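The fingerprint shown in the listing is an MD5 fingerprint of the public key, so it can be compared with the local key file. A minimal sketch, assuming an OpenSSH version whose ssh-keygen supports -E md5; the helper name extract_md5 is hypothetical.

```shell
#!/bin/sh
# Pull the bare MD5 fingerprint out of `ssh-keygen -l -E md5` output on stdin.
# Such a line looks like: "2048 MD5:aa:bb:... comment (RSA)".
extract_md5() {
    awk '{ sub(/^MD5:/, "", $2); print $2 }'
}

# Usage on the controller:
#   local_fp=$(ssh-keygen -l -E md5 -f ~/.ssh/id_rsa.pub | extract_md5)
#   cloud_fp=$(openstack keypair show mykey -f value -c fingerprint)
#   [ "$local_fp" = "$cloud_fp" ] && echo "fingerprints match"
```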

5. Add security group rules

- Add rules to the default security group:

Permit ICMP (ping):

[root@controller ~]# openstack security group rule create --proto icmp default

Permit secure shell (SSH) access:

[root@controller ~]# openstack security group rule create --proto tcp --dst-port 22 default
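When running with admin credentials, more than one security group named default may be visible (each project has its own), and referring to the group by name can then be ambiguous. A hedged sketch that resolves the group ID for one project first; the project name admin and the helper name default_sg_id are assumptions.

```shell
#!/bin/sh
# Print the ID of the "default" security group belonging to one project.
default_sg_id() {
    openstack security group list --project "$1" -f value -c ID -c Name |
        awk '$2 == "default" { print $1; exit }'
}

# Usage on the controller:
#   sg_id=$(default_sg_id admin)
#   openstack security group rule create --proto icmp "$sg_id"
#   openstack security group rule create --proto tcp --dst-port 22 "$sg_id"
```

Passing the ID instead of the name keeps the rules on the intended project's group.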

6. Launch an instance

- List the available flavors

[root@controller ~]# openstack flavor list

+----+---------+-----+------+-----------+-------+-----------+

| ID | Name | RAM | Disk | Ephemeral | VCPUs | Is Public |

+----+---------+-----+------+-----------+-------+-----------+

| 0 | m1.nano | 512 | 1 | 0 | 2 | True |

+----+---------+-----+------+-----------+-------+-----------+

- List the available images

[root@controller ~]# openstack image list

+--------------------------------------+-----------------------+--------+

| ID | Name | Status |

+--------------------------------------+-----------------------+--------+

| d8e30d01-3b95-4ec7-9b22-785cd0076ae4 | cirros | active |

| f09fe2d3-5a6e-4169-926b-cb13cd5e6018 | ubuntu-18.04-server | active |

| cae84d10-2034-4f8b-8ae0-3d0115d90a68 | ubuntu2004-01Snapshot | active |

| 2ada7482-a406-487e-a9b3-d7bd235fe29f | vm5 | active |

+--------------------------------------+-----------------------+--------+

- List the security groups

[root@controller ~]# openstack security group list

+--------------------------------------+---------+------------------------+----------------------------------+------+

| ID | Name | Description | Project | Tags |

+--------------------------------------+---------+------------------------+----------------------------------+------+

| 9fa1a67a-1d4e-41ca-a86a-b0ed03a06c37 | default | Default security group | 2c9db0df0c9d4543816a07cec1e4d5d5 | [] |

| a3258f5d-039b-4ece-ba94-aa95a2ea82f4 | default | Default security group | 9c18512ba8d241619aef8a8018d25587 | [] |

+--------------------------------------+---------+------------------------+----------------------------------+------+

- List the available networks

[root@controller ~]# openstack network list

+--------------------------------------+-------------+--------------------------------------+

| ID | Name | Subnets |

+--------------------------------------+-------------+--------------------------------------+

| 3ae54d14-14e6-48a2-ab7d-10329ce9bb93 | provider | eba80af0-8b35-4b5d-9e61-4a524579f631 |

| 7bf4d5b8-7190-4e05-b3cb-201dae570c1d | selfservice | d5898751-981c-40e2-8e1b-bbe9812cdbf6 |

+--------------------------------------+-------------+--------------------------------------+

- Launch the instance

Replace SELFSERVICE_NET_ID with the ID of the selfservice network.
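Instead of copying the ID from the network listing by hand, it can be captured in a shell variable. A minimal sketch; the helper name network_id is hypothetical.

```shell
#!/bin/sh
# Print the ID of a network given its name.
network_id() {
    openstack network show "$1" -f value -c id
}

# Usage on the controller:
#   SELFSERVICE_NET_ID=$(network_id selfservice)
#   echo "$SELFSERVICE_NET_ID"
```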