Using ncnn with Flutter

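If you are building a mobile App with Flutter and want to add novel features powered by AI models, ncnn is an on-device inference framework worth considering.

This article shares hands-on experience with running model inference through ncnn in Flutter. It covers: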

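- Trying ncnn: environment setup, model conversion, and testing

- Trying the Flutter project: running this article's demo_ncnn

- Implementing the Flutter project

  - Create an FFI plugin that binds the C interface to Dart

  - Create the App and use the plugin for inference on Linux

  - Adapt the App so that it builds and runs on Android

demo_ncnn source code: https://github.com/ikuokuo/start-flutter/tree/main/demo_ncnn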

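Trying ncnn

Setting up the ncnn environment

Get the ncnn source code and build it. The steps on Ubuntu are:

# The demo uses prebuilt libraries; it is best to match their version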

export YYYYMMDD=20230517

git clone -b $YYYYMMDD --depth 1 https://github.com/Tencent/ncnn.git

# Build for Linux

# https://github.com/Tencent/ncnn/wiki/how-to-build#build-for-linux

sudo apt install build-essential git cmake libprotobuf-dev protobuf-compiler libvulkan-dev vulkan-tools libopencv-dev

cd ncnn/

git submodule update --init

mkdir -p build; cd build

# cmake -LAH ..

cmake -DCMAKE_BUILD_TYPE=Release \

-DCMAKE_INSTALL_PREFIX=$HOME/ncnn-$YYYYMMDD \

-DNCNN_VULKAN=ON \

-DNCNN_BUILD_EXAMPLES=ON \

-DNCNN_BUILD_TOOLS=ON \

..

make -j$(nproc); make install

Set up the ncnn environment:

# Symlink, so the version can be swapped easily

sudo ln -sfT $HOME/ncnn-$YYYYMMDD /usr/local/ncnn

cat <<-EOF >> ~/.bashrc

# ncnn

export NCNN_HOME=/usr/local/ncnn

export PATH=\$NCNN_HOME/bin:\$PATH

EOF

# Test the tools

ncnnoptimize

Test the YOLOX inference example:

# Download the YOLOX ncnn model and extract it into the working directory ncnn/build/examples

# See the comments in ncnn/examples/yolox.cpp for details

# https://github.com/Megvii-BaseDetection/YOLOX/releases/download/0.1.1rc0/yolox_s_ncnn.tar.gz

tar -xzvf yolox_s_ncnn.tar.gz

# Download the YOLOX test image and copy it into the working directory ncnn/build/examples

# https://github.com/Megvii-BaseDetection/YOLOX/blob/main/assets/dog.jpg

# Enter the working directory

cd ncnn/build/examples

# Run the YOLOX ncnn example

./yolox dog.jpg

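ncnn model conversion

The YOLOX inference above used an already converted model. To run inference with a model of your own, you need to know how to convert it.

This section again uses the YOLOX model to walk through converting, editing, and quantizing a model for ncnn. The steps follow the instructions in YOLOX/demo/ncnn. In addition, ncnn/tools documents the conversion tools for various model formats.

Step 1) Download the YOLOX model

- yolox_nano.onnx: the YOLOX-Nano ONNX model

Step 2) Convert the model with onnx2ncnn

# Simplify the ONNX model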

# https://github.com/daquexian/onnx-simplifier

# pip3 install onnxsim

python3 -m onnxsim yolox_nano.onnx yolox_nano_sim.onnx

# Convert ONNX to ncnn

onnx2ncnn yolox_nano_sim.onnx yolox_nano.param yolox_nano.bin

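The error "Unsupported slice step !" can be ignored; the Focus layer is already implemented in the demo's yolox.cpp.

Step 3) Edit yolox_nano.param

In yolox_nano.param, delete every layer between Input and the first Convolution, add a YoloV5Focus layer in their place, and update the layer count on the first line. Six layers are removed (Split, four Crop, Concat) and one is added, so 291 becomes 286; the blob count 324 stays unchanged.

Before: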

291 324

Input images 0 1 images

Split splitncnn_input0 1 4 images images_splitncnn_0 images_splitncnn_1 images_splitncnn_2 images_splitncnn_3

Crop 630 1 1 images_splitncnn_3 630 -23309=2,0,0 -23310=2,2147483647,2147483647 -23311=2,1,2

Crop 635 1 1 images_splitncnn_2 635 -23309=2,0,1 -23310=2,2147483647,2147483647 -23311=2,1,2

Crop 640 1 1 images_splitncnn_1 640 -23309=2,1,0 -23310=2,2147483647,2147483647 -23311=2,1,2

Crop 650 1 1 images_splitncnn_0 650 -23309=2,1,1 -23310=2,2147483647,2147483647 -23311=2,1,2

Concat Concat_40 4 1 630 640 635 650 683 0=0

Convolution Conv_41 1 1 683 1177 0=16 1=3 11=3 2=1 12=1 3=1 13=1 4=1 14=1 15=1 16=1 5=1 6=1728

After:

286 324

Input images 0 1 images

YoloV5Focus focus 1 1 images 683

Note: ONNX simplification does little here; it only merges a few Crop layers that were going to be deleted anyway.
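
To make clear what the single YoloV5Focus layer stands in for, here is a minimal sketch of the same space-to-depth slicing on a plain CHW float buffer. It is an illustration, not the code from yolox.cpp: the helper name focus_chw is hypothetical, and the channel order is my reading of the Crop start offsets and the Concat order (630, 640, 635, 650) in the "Before" listing.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: space-to-depth "Focus" on a planar CHW float image.
// The output has 4*c channels of size (h/2) x (w/2). Group g = p / c selects
// row offset g % 2 and column offset g / 2, i.e. (0,0), (1,0), (0,1), (1,1),
// which mirrors the Concat order 630, 640, 635, 650 in the original param file.
std::vector<float> focus_chw(const std::vector<float>& in, int c, int h, int w) {
    assert(h % 2 == 0 && w % 2 == 0);
    assert(in.size() == static_cast<std::size_t>(c) * h * w);
    const int outc = c * 4, outh = h / 2, outw = w / 2;
    std::vector<float> out(static_cast<std::size_t>(outc) * outh * outw);
    for (int p = 0; p < outc; ++p) {
        const int src_c = p % c;    // source channel
        const int g = p / c;        // which of the four slices
        const int row_off = g % 2;  // start row of the slice
        const int col_off = g / 2;  // start column of the slice
        for (int i = 0; i < outh; ++i) {
            for (int j = 0; j < outw; ++j) {
                // Step 2 in both directions: this is the "slice step" that
                // onnx2ncnn reports as unsupported.
                const std::size_t src =
                    (static_cast<std::size_t>(src_c) * h + (2 * i + row_off)) * w +
                    (2 * j + col_off);
                const std::size_t dst =
                    (static_cast<std::size_t>(p) * outh + i) * outw + j;
                out[dst] = in[src];
            }
        }
    }
    return out;
}
```

For a 3x416x416 input this yields a 12x208x208 blob, which is what the first Convolution consumes.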

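Step 4) Quantize the model with ncnnoptimize

Use ncnnoptimize to convert the weights to fp16 (the trailing 65536 flag), which roughly halves the weight size: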

ncnnoptimize yolox_nano.param yolox_nano.bin yolox_nano_fp16.param yolox_nano_fp16.bin 65536

For int8 quantization, see Post Training Quantization Tools.

ncnn inference in practice

Change the model paths in detect_yolox() in ncnn/examples/yolox.cpp, rebuild, and test:

cd ncnn/build/examples

./yolox dog.jpg

demo_ncnn 體驗

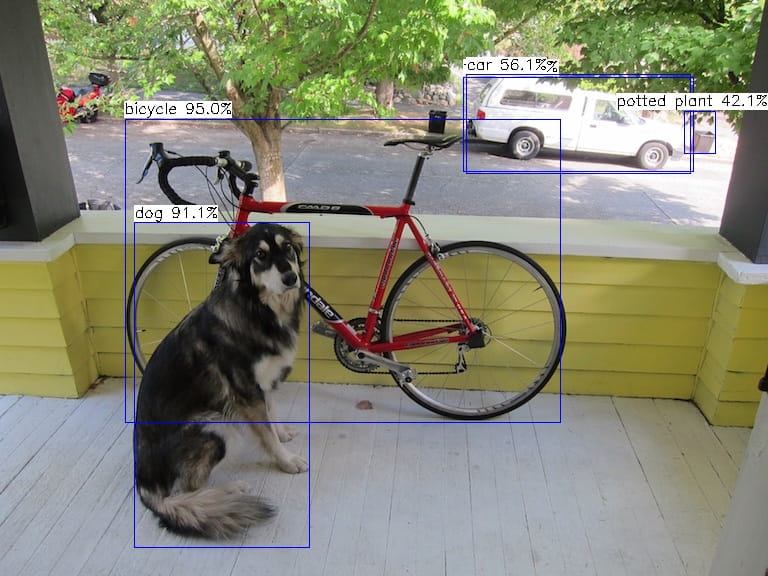

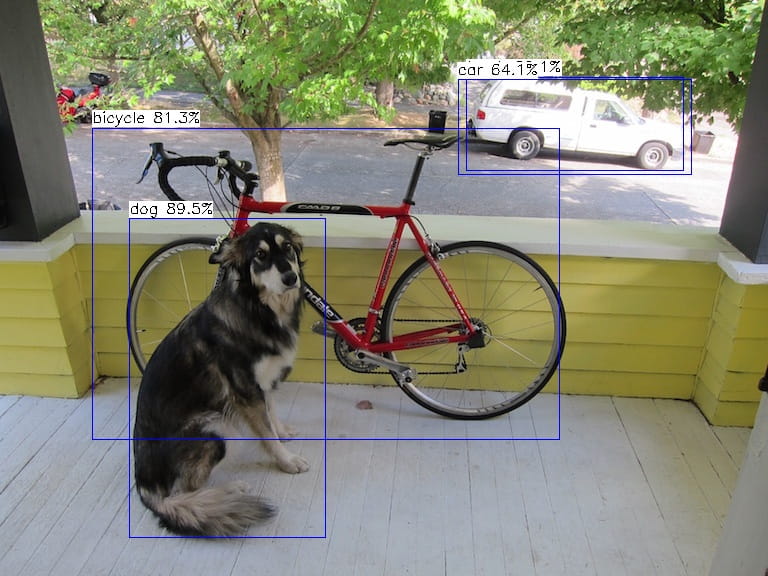

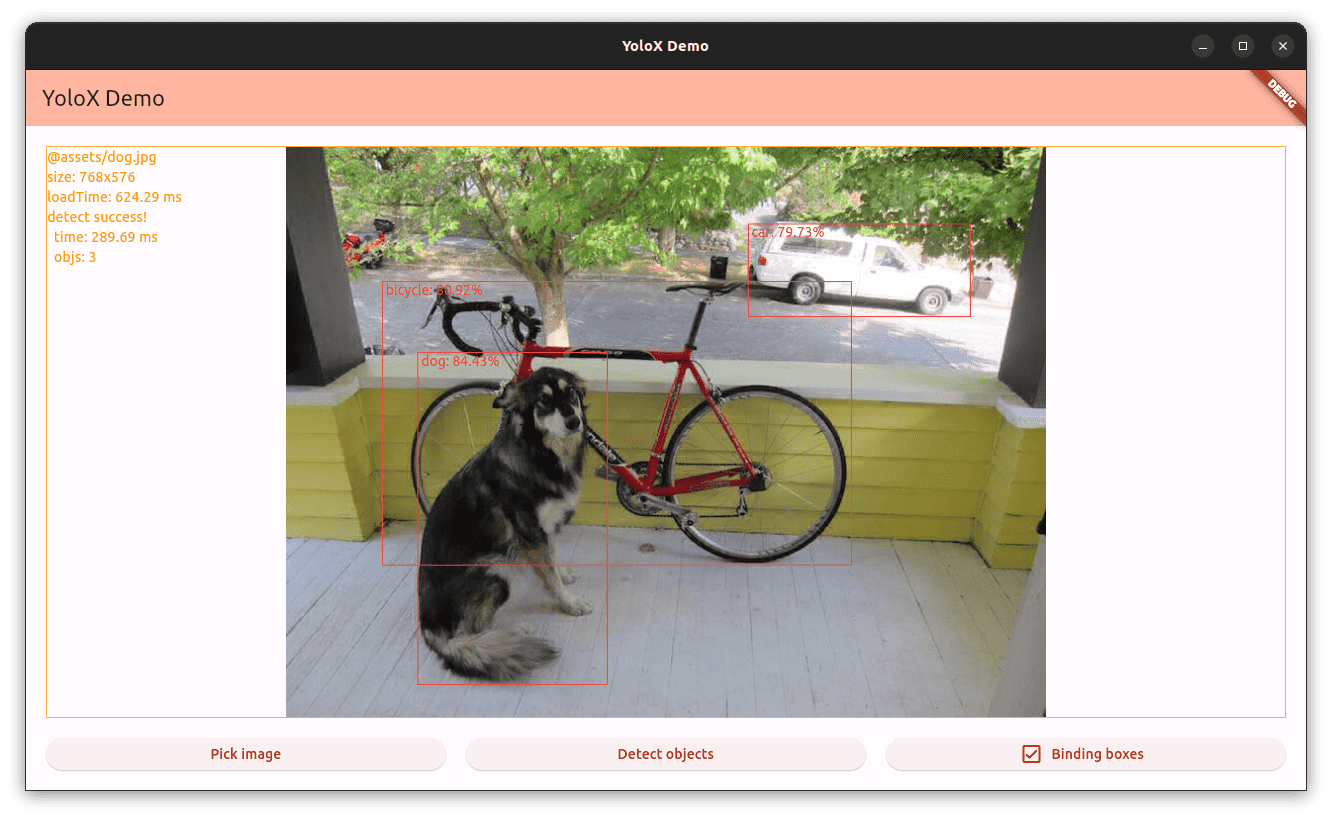

demo_ncnn 是本文實踐的演示專案,可以執行體驗。效果如下:

Preparing the Flutter environment

Set up Flutter by following the official Get started documentation.

Preparing the demo_ncnn project

Get the demo_ncnn source code:

git clone --depth 1 https://github.com/ikuokuo/start-flutter.git

It contains:

- demo_ncnn/: the Flutter App that picks an image and runs ncnn inference on it

- plugins/ncnn_yolox/: the Flutter FFI plugin that runs the YOLOX model with ncnn

Install the dependencies:

cd demo_ncnn/

flutter pub get

sudo apt-get install libclang-dev libomp-dev

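Prepare the Linux prebuilt libraries (ncnn-YYYYMMDD-ubuntu-2204-shared.zip and opencv-mobile-4.6.0-ubuntu-2204.zip):

Extract them into plugins/ncnn_yolox/linux/.

Prepare the Android prebuilt libraries (ncnn-YYYYMMDD-android-vulkan-shared.zip and opencv-mobile-4.6.0-android.zip):

Extract them into plugins/ncnn_yolox/android/.

Make sure the ncnn_DIR and OpenCV_DIR paths in ncnn_yolox/src/CMakeLists.txt are correct.

Running the demo_ncnn project

Run it: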

cd demo_ncnn/

flutter run

# Or list the devices and pick one with -d

flutter devices

flutter run -d linux

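Implementing demo_ncnn

The demo_ncnn implementation has two parts:

- Flutter FFI plugin: binds the C interface to Dart

- Flutter App: implements the UI and uses the plugin for inference

Creating the FFI plugin

# Create the FFI plugin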

flutter create --org dev.flutter -t plugin_ffi --platforms=android,ios,linux ncnn_yolox

cd ncnn_yolox

# Update the ffigen version

# Otherwise you may hit: Error: The type 'YoloX' must be 'base', 'final' or 'sealed'

flutter pub outdated

flutter pub upgrade --major-versions

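After that, you only need to declare the C interface in src/ncnn_yolox.h, implement it, and then let package:ffigen generate the Dart bindings automatically.

Step 1) Define the C interface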

#ifdef __cplusplus

extern "C" {

#endif

FFI_PLUGIN_EXPORT typedef int yolox_err_t;

#define YOLOX_OK 0

#define YOLOX_ERROR -1

FFI_PLUGIN_EXPORT struct YoloX {

const char *model_path; // path to model file

const char *param_path; // path to param file

float nms_thresh; // nms threshold

float conf_thresh; // threshold of bounding box prob

float target_size; // target image size after resize, might use 416 for small model

};

// ncnn::Mat::PixelType

FFI_PLUGIN_EXPORT enum PixelType {

PIXEL_RGB = 1,

PIXEL_BGR = 2,

PIXEL_GRAY = 3,

PIXEL_RGBA = 4,

PIXEL_BGRA = 5,

};

FFI_PLUGIN_EXPORT struct Rect {

float x;

float y;

float w;

float h;

};

FFI_PLUGIN_EXPORT struct Object {

int label;

float prob;

struct Rect rect;

};

FFI_PLUGIN_EXPORT struct DetectResult {

int object_num;

struct Object *object;

};

FFI_PLUGIN_EXPORT struct YoloX *yoloxCreate();

FFI_PLUGIN_EXPORT void yoloxDestroy(struct YoloX *yolox);

FFI_PLUGIN_EXPORT struct DetectResult *detectResultCreate();

FFI_PLUGIN_EXPORT void detectResultDestroy(struct DetectResult *result);

FFI_PLUGIN_EXPORT yolox_err_t detectWithImagePath(

struct YoloX *yolox, const char *image_path, struct DetectResult *result);

FFI_PLUGIN_EXPORT yolox_err_t detectWithPixels(

struct YoloX *yolox, const uint8_t *pixels, enum PixelType pixelType,

int img_w, int img_h, struct DetectResult *result);

#ifdef __cplusplus

}

#endif

Step 2) Implement the C interface

The implementation in src/ncnn_yolox.cc follows ncnn/examples/yolox.cpp.
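
The header pairs every Create with a Destroy, which implies that the native side owns the memory and Dart only holds pointers. The following is a minimal sketch of how that ownership could look; it is an assumption for illustration, not the code in src/ncnn_yolox.cc. The structs are local copies of the ones in the header, and detectResultFill is a hypothetical helper that does not exist in the plugin's interface.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>
#include <vector>

// Local copies of the structs from the header, so the sketch compiles alone.
struct Rect { float x, y, w, h; };
struct Object { int label; float prob; struct Rect rect; };
struct DetectResult { int object_num; struct Object *object; };

// Allocate an empty result; the caller must release it with detectResultDestroy.
DetectResult *detectResultCreate() {
    auto *r = static_cast<DetectResult *>(std::malloc(sizeof(DetectResult)));
    if (r) {
        r->object_num = 0;
        r->object = nullptr;
    }
    return r;
}

// Hypothetical helper: copy detections from C++ containers into the plain C
// array that crosses the FFI boundary. Returns 0 on success, -1 on failure.
int detectResultFill(DetectResult *r, const std::vector<Object> &objs) {
    assert(r != nullptr);
    std::free(r->object);  // drop any previous detections
    r->object = nullptr;
    r->object_num = 0;
    if (objs.empty()) return 0;
    auto *buf = static_cast<Object *>(std::malloc(objs.size() * sizeof(Object)));
    if (!buf) return -1;
    std::memcpy(buf, objs.data(), objs.size() * sizeof(Object));
    r->object = buf;
    r->object_num = static_cast<int>(objs.size());
    return 0;
}

// Free the object array first, then the result itself.
void detectResultDestroy(DetectResult *r) {
    if (!r) return;
    std::free(r->object);
    std::free(r);
}
```

Keeping allocation and release on the same side of the boundary means Dart never has to guess which allocator produced a pointer.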

Step 3) Regenerate the Dart bindings

Regenerate lib/ncnn_yolox_bindings_generated.dart:

flutter pub run ffigen --config ffigen.yaml

To learn how Dart interacts with C, see C interop using dart:ffi.

Step 4) Prepare the dependency libraries

- Linux, extract into linux/:

  - ncnn-YYYYMMDD-ubuntu-2204-shared.zip

  - opencv-mobile-4.6.0-ubuntu-2204.zip

- Android, extract into android/:

  - ncnn-YYYYMMDD-android-vulkan-shared.zip

  - opencv-mobile-4.6.0-android.zip

Step 5) Write the build script

# packages

if(CMAKE_SYSTEM_NAME STREQUAL "Linux")

set(ncnn_DIR "${MY_PROJ}/linux/ncnn-20230517-ubuntu-2204-shared/lib/cmake")

set(OpenCV_DIR "${MY_PROJ}/linux/opencv-mobile-4.6.0-ubuntu-2204/lib/cmake")

elseif(CMAKE_SYSTEM_NAME STREQUAL "Android")

set(ncnn_DIR "${MY_PROJ}/android/ncnn-20230517-android-vulkan-shared/${ANDROID_ABI}/lib/cmake/ncnn")

set(OpenCV_DIR "${MY_PROJ}/android/opencv-mobile-4.6.0-android/sdk/native/jni")

else()

message(FATAL_ERROR "system not support: ${CMAKE_SYSTEM_NAME}")

endif()

if(NOT EXISTS ${ncnn_DIR})

message(FATAL_ERROR "ncnn_DIR not exists: ${ncnn_DIR}")

endif()

if(NOT EXISTS ${OpenCV_DIR})

message(FATAL_ERROR "OpenCV_DIR not exists: ${OpenCV_DIR}")

endif()

## ncnn

find_package(ncnn REQUIRED)

message(STATUS "ncnn_FOUND: ${ncnn_FOUND}")

## opencv

find_package(OpenCV 4 REQUIRED)

message(STATUS "OpenCV_VERSION: ${OpenCV_VERSION}")

message(STATUS "OpenCV_INCLUDE_DIRS: ${OpenCV_INCLUDE_DIRS}")

message(STATUS "OpenCV_LIBS: ${OpenCV_LIBS}")

# targets

include_directories(

${MY_PROJ}/src

${OpenCV_INCLUDE_DIRS}

)

## ncnn_yolox

add_library(ncnn_yolox SHARED

"ncnn_yolox.cc"

)

target_link_libraries(ncnn_yolox ncnn ${OpenCV_LIBS})

set_target_properties(ncnn_yolox PROPERTIES

PUBLIC_HEADER ncnn_yolox.h

OUTPUT_NAME "ncnn_yolox"

)

target_compile_definitions(ncnn_yolox PUBLIC DART_SHARED_LIB)

Testing ncnn inference

First, put the prepared model into the assets directory, for example:

assets/

├── dog.jpg

├── yolox_nano_fp16.bin

└── yolox_nano_fp16.param

After that, the C and Dart interface implementations can be tested on Linux.

Step 1) Test the C interface

std::string assets_dir("../assets/");

std::string image_path = assets_dir + "dog.jpg";

std::string model_path = assets_dir + "yolox_nano_fp16.bin";

std::string param_path = assets_dir + "yolox_nano_fp16.param";

auto yolox = yoloxCreate();

yolox->model_path = model_path.c_str();

yolox->param_path = param_path.c_str();

yolox->nms_thresh = 0.45;

yolox->conf_thresh = 0.25;

yolox->target_size = 416;

// yolox->target_size = 640;

auto detect_result = detectResultCreate();

auto err = detectWithImagePath(yolox, image_path.c_str(), detect_result);

if (err == YOLOX_OK) {

auto num = detect_result->object_num;

printf("yolox detect ok, num=%d\n", num);

for (int i = 0; i < num; i++) {

Object *obj = detect_result->object + i;

printf(" object[%d] label=%d prob=%.2f rect={x=%.2f y=%.2f w=%.2f h=%.2f}\n",

i, obj->label, obj->prob, obj->rect.x, obj->rect.y, obj->rect.w, obj->rect.h);

}

} else {

printf("yolox detect fail, err=%d\n", err);

}

draw_objects(image_path.c_str(), detect_result);

detectResultDestroy(detect_result);

yoloxDestroy(yolox);
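
The conf_thresh and nms_thresh values set in this test control post-processing: boxes below the confidence threshold are dropped, and among overlapping boxes only the most confident one survives. Below is a minimal, class-agnostic sketch of that idea. It is not the plugin's code; filter_and_nms and intersection_area are hypothetical names, and the structs are local copies of the ones in the header.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Local copies of the structs from the header, so the sketch compiles alone.
struct Rect { float x, y, w, h; };
struct Object { int label; float prob; Rect rect; };

// Area of the overlap between two boxes (0 when they do not intersect).
static float intersection_area(const Rect &a, const Rect &b) {
    const float x1 = std::max(a.x, b.x);
    const float y1 = std::max(a.y, b.y);
    const float x2 = std::min(a.x + a.w, b.x + b.w);
    const float y2 = std::min(a.y + a.h, b.y + b.h);
    return std::max(0.f, x2 - x1) * std::max(0.f, y2 - y1);
}

// Hypothetical helper: confidence filter followed by greedy NMS.
std::vector<Object> filter_and_nms(std::vector<Object> objs,
                                   float conf_thresh, float nms_thresh) {
    assert(nms_thresh >= 0.f && nms_thresh <= 1.f);
    // 1) Drop detections below the confidence threshold.
    objs.erase(std::remove_if(objs.begin(), objs.end(),
                              [&](const Object &o) { return o.prob < conf_thresh; }),
               objs.end());
    // 2) Sort by confidence, highest first.
    std::sort(objs.begin(), objs.end(),
              [](const Object &a, const Object &b) { return a.prob > b.prob; });
    // 3) Keep a box only if its IoU with every kept box is within nms_thresh.
    std::vector<Object> kept;
    for (const Object &cand : objs) {
        bool keep = true;
        for (const Object &k : kept) {
            const float inter = intersection_area(cand.rect, k.rect);
            const float uni =
                cand.rect.w * cand.rect.h + k.rect.w * k.rect.h - inter;
            if (uni > 0.f && inter / uni > nms_thresh) {
                keep = false;
                break;
            }
        }
        if (keep) kept.push_back(cand);
    }
    return kept;
}
```

Raising conf_thresh yields fewer but more reliable boxes, while lowering nms_thresh merges near-duplicates more aggressively.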

Step 2) Test the Dart interface

final yoloxLib = NcnnYoloxBindings(dlopen('ncnn_yolox', 'build/shared'));

const assetsDir = '../assets';

final imagePath = '$assetsDir/dog.jpg'.toNativeUtf8();

final modelPath = '$assetsDir/yolox_nano_fp16.bin'.toNativeUtf8();

final paramPath = '$assetsDir/yolox_nano_fp16.param'.toNativeUtf8();

final yolox = yoloxLib.yoloxCreate();

yolox.ref.model_path = modelPath.cast();

yolox.ref.param_path = paramPath.cast();

yolox.ref.nms_thresh = 0.45;

yolox.ref.conf_thresh = 0.25;

yolox.ref.target_size = 416;

// yolox.ref.target_size = 640;

final detectResult = yoloxLib.detectResultCreate();

final err =

yoloxLib.detectWithImagePath(yolox, imagePath.cast(), detectResult);

if (err == YOLOX_OK) {

final num = detectResult.ref.object_num;

print('yolox detect ok, num=$num');

for (int i = 0; i < num; i++) {

var obj = detectResult.ref.object.elementAt(i).ref;

print(' object[$i] label=${obj.label}'

' prob=${obj.prob.toStringAsFixed(2)} rect=${obj.rect.str()}');

}

} else {

print('yolox detect fail, err=$err');

}

// toNativeUtf8() allocates with malloc by default, so free with malloc

malloc.free(imagePath);

malloc.free(modelPath);

malloc.free(paramPath);

yoloxLib.detectResultDestroy(detectResult);

yoloxLib.yoloxDestroy(yolox);
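
Both tests set target_size to 416. In ncnn's YOLO examples this value usually drives an aspect-preserving resize followed by padding to a multiple of 32, and detected boxes are divided by the scale to return to original image coordinates. The sketch below shows that arithmetic under the assumption that the plugin follows the same scheme; Letterbox, letterbox, and toOriginal are hypothetical names, and target_size is taken as an int here although the struct declares it as float.

```cpp
#include <cassert>

// Hypothetical result type: resize scale, resized size, and padding.
struct Letterbox {
    float scale;  // factor applied to the original image
    int w, h;     // size after the aspect-preserving resize
    int wpad;     // padding that brings w up to a multiple of 32
    int hpad;     // padding that brings h up to a multiple of 32
};

// Scale the longer side to target_size, keep the aspect ratio, then pad.
Letterbox letterbox(int img_w, int img_h, int target_size) {
    assert(img_w > 0 && img_h > 0 && target_size > 0);
    Letterbox lb{};
    if (img_w > img_h) {
        lb.scale = static_cast<float>(target_size) / img_w;
        lb.w = target_size;
        lb.h = static_cast<int>(img_h * lb.scale);
    } else {
        lb.scale = static_cast<float>(target_size) / img_h;
        lb.h = target_size;
        lb.w = static_cast<int>(img_w * lb.scale);
    }
    lb.wpad = (lb.w + 31) / 32 * 32 - lb.w;
    lb.hpad = (lb.h + 31) / 32 * 32 - lb.h;
    return lb;
}

// Map a coordinate from the resized image back to the original image.
float toOriginal(float v, float scale) { return v / scale; }
```

A smaller target_size such as 416 makes inference faster, which is why the struct comment suggests it for small models.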

Step 3) Run the tests

cd ncnn_yolox/linux

make

# cpp test

./build/ncnn_yolox_test

# dart test

dart ncnn_yolox_test.dart

Creating the App and writing the UI

Create the App project:

flutter create --project-name demo_ncnn --org dev.flutter --android-language java --ios-language objc --platforms=android,ios,linux demo_ncnn

This project adds the following dependencies:

cd demo_ncnn

dart pub add path logging image easy_debounce

flutter pub add mobx flutter_mobx provider path_provider

flutter pub add -d build_runner mobx_codegen

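The App uses MobX for state management; see the MobX documentation to learn how to use it.

The App has two main functions: picking an image and running inference. Two Store classes implement them:

- image_store.dart: given an image path, loads the image data asynchronously

- yolox_store.dart: given image data, detects the objects in the image asynchronously

Because both loading and detection are relatively slow, they use MobX's ObservableFuture for asynchronous work; see the MobX documentation for details.

Those are the key parts of the App implementation; other approaches would work as well.

Using the plugin for inference

To use the plugin in the App, first add it as a dependency in pubspec.yaml: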

dependencies:

ncnn_yolox:

path: ../plugins/ncnn_yolox

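Then yolox_store.dart uses the plugin for inference. The flow is essentially the same as the earlier Dart interface test; the main differences are:

- The models in assets are first copied to a temporary path, because the App cannot obtain an absolute path to its assets. The alternative is to change the C interface so that the model is passed in as bytes.

- The image data is copied from Uint8List to Pointer<Uint8>, because it has to move from the Dart heap to the C heap. See the issue linked in the code comments for details.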

import 'dart:ffi';

import 'dart:io';

import 'package:ffi/ffi.dart';

import 'package:flutter/services.dart';

import 'package:image/image.dart' as img;

import 'package:mobx/mobx.dart';

import 'package:ncnn_yolox/ncnn_yolox_bindings_generated.dart' as yo;

import 'package:path/path.dart' show join;

import 'package:path_provider/path_provider.dart';

import '../util/image.dart';

import '../util/log.dart';

import 'future_store.dart';

part 'yolox_store.g.dart';

class YoloxStore = YoloxBase with _$YoloxStore;

class YoloxObject {

int label = 0;

double prob = 0;

Rect rect = Rect.zero;

}

class YoloxResult {

List<YoloxObject> objects = [];

Duration detectTime = Duration.zero;

}

abstract class YoloxBase with Store {

late yo.NcnnYoloxBindings _yolox;

YoloxBase() {

final dylib = Platform.isAndroid || Platform.isLinux

? DynamicLibrary.open('libncnn_yolox.so')

: DynamicLibrary.process();

_yolox = yo.NcnnYoloxBindings(dylib);

}

@observable

FutureStore<YoloxResult> detectFuture = FutureStore<YoloxResult>();

@action

Future detect(ImageData data) async {

try {

detectFuture.errorMessage = null;

detectFuture.future = ObservableFuture(_detect(data));

detectFuture.data = await detectFuture.future;

} catch (e) {

detectFuture.errorMessage = e.toString();

}

}

Future<YoloxResult> _detect(ImageData data) async {

final timebeg = DateTime.now();

// await Future.delayed(const Duration(seconds: 5));

final modelPath = await _copyAssetToLocal('assets/yolox_nano_fp16.bin',

package: 'ncnn_yolox', notCopyIfExist: false);

final paramPath = await _copyAssetToLocal('assets/yolox_nano_fp16.param',

package: 'ncnn_yolox', notCopyIfExist: false);

log.info('yolox modelPath=$modelPath');

log.info('yolox paramPath=$paramPath');

final modelPathUtf8 = modelPath.toNativeUtf8();

final paramPathUtf8 = paramPath.toNativeUtf8();

final yolox = _yolox.yoloxCreate();

yolox.ref.model_path = modelPathUtf8.cast();

yolox.ref.param_path = paramPathUtf8.cast();

yolox.ref.nms_thresh = 0.45;

yolox.ref.conf_thresh = 0.45;

yolox.ref.target_size = 416;

// yolox.ref.target_size = 640;

final detectResult = _yolox.detectResultCreate();

final pixels = data.image.getBytes(order: img.ChannelOrder.bgr);

// Pass Uint8List to Pointer<Void>

// https://github.com/dart-lang/ffi/issues/27

// https://github.com/martin-labanic/camera_preview_ffi_image_processing/blob/master/lib/image_worker.dart

final pixelsPtr = calloc.allocate<Uint8>(pixels.length);

// Bulk-copy the Dart bytes into native memory through a typed-list view

pixelsPtr.asTypedList(pixels.length).setAll(0, pixels);

final err = _yolox.detectWithPixels(

yolox,

pixelsPtr,

yo.PixelType.PIXEL_BGR,

data.image.width,

data.image.height,

detectResult);

final objects = <YoloxObject>[];

if (err == yo.YOLOX_OK) {

final num = detectResult.ref.object_num;

for (int i = 0; i < num; i++) {

final o = detectResult.ref.object.elementAt(i).ref;

final obj = YoloxObject();

obj.label = o.label;

obj.prob = o.prob;

obj.rect = Rect.fromLTWH(o.rect.x, o.rect.y, o.rect.w, o.rect.h);

objects.add(obj);

}

}

// pixelsPtr came from calloc; toNativeUtf8() allocates with malloc by default

calloc.free(pixelsPtr);

malloc

..free(modelPathUtf8)

..free(paramPathUtf8);

_yolox.detectResultDestroy(detectResult);

_yolox.yoloxDestroy(yolox);

final result = YoloxResult();

result.objects = objects;

result.detectTime = DateTime.now().difference(timebeg);

return result;

}

// ...

}
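
On the native side, detectWithPixels receives only a raw pointer, a PixelType, and the image size, so it has to trust that the buffer length matches. The sketch below shows the size relationship the Dart code relies on when it passes PIXEL_BGR with data.image.width and data.image.height. It assumes a tightly packed buffer with no row padding; channelsOf and expectedPixelBytes are hypothetical helpers, not part of the plugin.

```cpp
#include <cassert>
#include <cstddef>

// Same values as the PixelType enum in the plugin header.
enum PixelType {
    PIXEL_RGB = 1,
    PIXEL_BGR = 2,
    PIXEL_GRAY = 3,
    PIXEL_RGBA = 4,
    PIXEL_BGRA = 5,
};

// Hypothetical helper: bytes per pixel for each layout, 0 for unknown values.
int channelsOf(PixelType t) {
    switch (t) {
        case PIXEL_RGB:
        case PIXEL_BGR:
            return 3;
        case PIXEL_GRAY:
            return 1;
        case PIXEL_RGBA:
        case PIXEL_BGRA:
            return 4;
    }
    return 0;
}

// Hypothetical helper: expected length of a tightly packed pixel buffer.
std::size_t expectedPixelBytes(PixelType t, int img_w, int img_h) {
    assert(img_w > 0 && img_h > 0);
    return static_cast<std::size_t>(channelsOf(t)) * img_w * img_h;
}
```

In the Dart code above, pixels.length should therefore equal width * height * 3 for the BGR order; a mismatch would make the native side read past the end of the buffer.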

Finally, use it in the UI, home_page.dart:

class HomePage extends StatefulWidget {

const HomePage({super.key, required this.title});

final String title;

@override

State<HomePage> createState() => _HomePageState();

}

class _HomePageState extends State<HomePage> {

late ImageStore _imageStore;

late YoloxStore _yoloxStore;

late OptionStore _optionStore;

@override

void didChangeDependencies() {

_imageStore = Provider.of<ImageStore>(context);

_yoloxStore = Provider.of<YoloxStore>(context);

_optionStore = Provider.of<OptionStore>(context);

_imageStore.load();

super.didChangeDependencies();

}

void _pickImage() async {

final result = await FilePicker.platform.pickFiles(type: FileType.image);

if (result == null) return;

final file = result.files.first;

_imageStore.load(imagePath: file.path);

}

void _detectImage() {

if (_imageStore.loadFuture.futureState != FutureState.loaded) return;

_yoloxStore.detect(_imageStore.loadFuture.data!);

}

@override

Widget build(BuildContext context) {

const pad = 20.0;

return Scaffold(

appBar: AppBar(

backgroundColor: Theme.of(context).colorScheme.inversePrimary,

title: Text(widget.title),

),

body: Padding(

padding: const EdgeInsets.all(pad),

child: Column(

mainAxisAlignment: MainAxisAlignment.spaceBetween,

crossAxisAlignment: CrossAxisAlignment.stretch,

children: [

// Image and results

Expanded(

flex: 1,

child: Observer(builder: (context) {

if (_imageStore.loadFuture.futureState ==

FutureState.loading) {

return const Center(child: CircularProgressIndicator());

}

if (_imageStore.loadFuture.errorMessage != null) {

return Center(

child: Text(_imageStore.loadFuture.errorMessage!));

}

final data = _imageStore.loadFuture.data;

if (data == null) {

return const Center(child: Text('Image load null :('));

}

_yoloxStore.detectFuture.reset();

return Container(

decoration: BoxDecoration(

border: Border.all(color: Colors.orangeAccent)),

child: DetectResultPage(imageData: data),

);

})),

const SizedBox(height: pad),

// Three buttons: pick image, run inference, toggle bounding boxes

Row(

mainAxisAlignment: MainAxisAlignment.center,

children: [

Expanded(

child: ElevatedButton(

child: const Text('Pick image'),

onPressed: () => _debounce('_pickImage', _pickImage),

),

),

const SizedBox(width: pad),

Expanded(

child: ElevatedButton(

child: const Text('Detect objects'),

onPressed: () => _debounce('_detectImage', _detectImage),

),

),

const SizedBox(width: pad),

Expanded(

child: Observer(builder: (context) {

return ElevatedButton.icon(

icon: Icon(_optionStore.bboxesVisible

? Icons.check_box_outlined

: Icons.check_box_outline_blank),

label: const Text('Bounding boxes'),

onPressed: () => _optionStore

.setBboxesVisible(!_optionStore.bboxesVisible),

);

}),

),

],

),

],

),

),

);

}

}

Adapting the Android project

The Android build script lives in android/build.gradle. It also uses CMake and shares src/CMakeLists.txt with Linux. However, minSdkVersion must be raised to 24 in order to use Vulkan.

Vulkan is supported starting with Android 7.0 (Nougat), API level 24; see NDK / Get started with Vulkan.

Settings in plugins/ncnn_yolox/android/build.gradle:

android {

defaultConfig {

minSdkVersion 24

ndk {

moduleName "ncnn_yolox"

abiFilters "armeabi-v7a", "arm64-v8a", "x86", "x86_64"

}

}

}

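Set minSdkVersion to 24 in demo_ncnn/android/app/build.gradle as well.

After that, flutter run builds and runs the App. For more, see Build and release an Android app.

Adapting the iOS project

This project has not been adapted for iOS.

Note that Xcode 14 no longer accepts app submissions that contain bitcode, and Flutter removed bitcode support after 3.3.x; see Creating an iOS Bitcode enabled app.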