Converting an ONNX model from static batch to dynamic batch and renaming its input and output layers

Background

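When deploying a model, it is common to group several inputs into one batch and run them through the network together, so that the hardware is used efficiently. The batch size changes constantly with system load or with the number of camera streams, so the network's input batch is dynamic. In frameworks such as PyTorch we never notice this, because the whole network is dynamic there. In production deployment, however, that flexibility is usually given up for the sake of execution efficiency. One might suggest fixing the batch at a maximum value and feeding in whatever inputs are actually available, but then the network always runs inference at the maximum batch size and wastes compute. What we need instead is dynamic batch support, so that the network executes with the batch size it is actually given.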

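A common path from training to deployment is PyTorch → ONNX → TensorRT. When exporting ONNX from PyTorch, we can mark the batch dimension of the input and output as dynamic: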

    torch_out = torch.onnx.export(
        model, inp, save_path,
        input_names=["data"], output_names=["fc1"],
        # Mark dimension 0 of the input and the output as the dynamic batch axis.
        dynamic_axes={"data": {0: "batch_size"}, "fc1": {0: "batch_size"}},
    )

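At other times the model we deploy comes from someone else or from an open-source project, and the original PyTorch model is no longer available. If that ONNX file has a static batch, its input stays static when it is ported to TensorRT. To get dynamic input, the ONNX model itself has to be modified. In addition, if the algorithm engineer did not name the input and output layers when exporting, the exported names can be hard to read, for example an output called batchnorm_274. For maintainability it is therefore sometimes also necessary to rename the ONNX input and output layers.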
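Before editing a third-party model, it can help to check whether its batch dimension is really static. The following is a minimal sketch, not part of the original article: the helper names `dim_to_str`, `io_shapes` and `has_static_batch` are made up for illustration, and the code assumes every graph input and output is a tensor. It relies only on the `dim_param` and `dim_value` fields that the article's own code uses, where an unset `dim_param` reads as an empty string and an unset `dim_value` reads as 0.

```python
def dim_to_str(dim):
    """Render one shape dimension as text."""
    if dim.dim_param:            # symbolic name such as "batch_size": dynamic
        return dim.dim_param
    if dim.dim_value:            # fixed size such as 1 or 224: static
        return str(dim.dim_value)
    return "?"                   # neither field is set: unknown size


def io_shapes(model):
    """Collect the shapes of all graph inputs and outputs of a loaded model."""
    def shapes(value_infos):
        return {
            vi.name: [dim_to_str(d) for d in vi.type.tensor_type.shape.dim]
            for vi in value_infos
        }
    return {
        "inputs": shapes(model.graph.input),
        "outputs": shapes(model.graph.output),
    }


def has_static_batch(model):
    """True if any graph input has a fixed number as its first dimension."""
    return any(
        len(shape) > 0 and shape[0].isdigit()
        for shape in io_shapes(model)["inputs"].values()
    )
```

With the onnx package installed, `io_shapes(onnx.load(onnx_path))` gives a quick view of the names and shapes that the steps below are going to change.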

Procedure

Modifying the input and output layers

    import onnx

    def change_input_output_dim(model):
        # Use some symbolic name not used for any other dimension
        sym_batch_dim = "batch"

        # The following code changes the first dimension of every input to be batch-dim
        # Modify as appropriate ... note that this requires all inputs to
        # have the same batch_dim
        for graph_input in model.graph.input:
            # Checks omitted. This assumes that all inputs are tensors and have a shape with first dim.
            # Add checks as needed.
            dim1 = graph_input.type.tensor_type.shape.dim[0]
            # update dim to be a symbolic value
            dim1.dim_param = sym_batch_dim
            # or update it to be an actual value:
            # dim1.dim_value = actual_batch_dim

        for graph_output in model.graph.output:
            # Checks omitted. This assumes that all outputs are tensors and have a shape with first dim.
            # Add checks as needed.
            dim1 = graph_output.type.tensor_type.shape.dim[0]
            # update dim to be a symbolic value
            dim1.dim_param = sym_batch_dim

    # onnx_path is the path to the static-batch model
    model = onnx.load(onnx_path)
    change_input_output_dim(model)

Changing the first dimension of the input and output shapes from a number to a symbolic name turns the ONNX model into a dynamic-batch model.
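The comments in the code above leave the checks to the reader. One possible way to add them is sketched here, under assumptions of my own rather than the author's: the function name `make_batch_dynamic` is hypothetical, entries of `graph.input` that are also initializers (weights, which some exporters list there) are left alone, and values without any dimension are skipped instead of raising an index error.

```python
def make_batch_dynamic(model, sym_batch_dim="batch"):
    """Set dim 0 of every real graph input and every graph output to a symbolic name.

    Returns two lists: the names that were changed and the names that were skipped.
    """
    # Weights can appear in graph.input as well; they have no batch axis.
    initializer_names = {init.name for init in model.graph.initializer}

    changed, skipped = [], []
    for value_info in list(model.graph.input) + list(model.graph.output):
        if value_info.name in initializer_names:
            skipped.append(value_info.name)
            continue

        dims = value_info.type.tensor_type.shape.dim
        if len(dims) == 0:
            # Scalar or shapeless value: there is no first dimension to edit.
            skipped.append(value_info.name)
            continue

        # In the ONNX protobuf message dim_param and dim_value belong to the
        # same oneof, so setting the symbolic name replaces the fixed size.
        dims[0].dim_param = sym_batch_dim
        changed.append(value_info.name)

    return changed, skipped
```

Printing the two returned lists after the call makes it easy to confirm that only the intended tensors were touched. Note that this only edits the declared shapes: a node inside the graph that hard-codes the batch size, such as a Reshape with a constant target shape, may still fail at other batch sizes.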

Renaming the input and output layers

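    def change_input_node_name(model, input_names):
        # input_names[i] is the new name for the i-th graph input
        for i, graph_input in enumerate(model.graph.input):
            input_name = input_names[i]
            # Update every node that consumes this graph input
            for node in model.graph.node:
                for j, name in enumerate(node.input):
                    if name == graph_input.name:
                        node.input[j] = input_name
            graph_input.name = input_name

    def change_output_node_name(model, output_names):
        # output_names[i] is the new name for the i-th graph output
        for i, graph_output in enumerate(model.graph.output):
            output_name = output_names[i]
            for node in model.graph.node:
                # Update the node that produces this graph output
                for j, name in enumerate(node.output):
                    if name == graph_output.name:
                        node.output[j] = output_name
                # A graph output may also feed other nodes; keep them in sync
                for j, name in enumerate(node.input):
                    if name == graph_output.name:
                        node.input[j] = output_name
            graph_output.name = output_name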

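In the code, input_names and output_names are the names we want to change to. The approach is to walk through the network and, wherever a node's input name matches the name of the graph input being renamed, replace it with the new name. Outputs are handled the same way.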
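The functions above pair new names with graph inputs and outputs by position. As a variation, here is a sketch that renames by old name instead, using a single mapping for inputs and outputs together. The function `rename_tensors` and its `renames` argument are hypothetical names introduced for this illustration. It rejects unknown or clashing names before changing anything, and it only handles the top-level graph: subgraphs inside control-flow nodes and entries in `graph.value_info` are not updated.

```python
def rename_tensors(model, renames):
    """Rename tensors in the top-level graph.

    renames maps old tensor names to new ones, e.g. {"batchnorm_274": "fc1"}.
    """
    graph = model.graph

    # Every tensor name that is currently referenced somewhere in the graph.
    existing = {vi.name for vi in list(graph.input) + list(graph.output)}
    for node in graph.node:
        existing.update(node.input)
        existing.update(node.output)

    # Validate first so that a bad mapping leaves the model untouched.
    for old, new in renames.items():
        if old not in existing:
            raise KeyError(f"tensor {old!r} not found in graph")
        if new in existing and new not in renames:
            raise ValueError(f"new name {new!r} is already used in graph")

    # Rewrite references on both sides of every node: producers and consumers.
    for node in graph.node:
        for names in (node.input, node.output):
            for j, name in enumerate(names):
                if name in renames:
                    names[j] = renames[name]

    # Finally rename the graph-level input and output declarations.
    for value_info in list(graph.input) + list(graph.output):
        if value_info.name in renames:
            value_info.name = renames[value_info.name]
```

For the example in this article, a call such as `rename_tensors(model, {"batchnorm_274": "fc1"})` would rename that output wherever it is produced or consumed.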

Complete code

import onnx

def change_input_output_dim(model):

# Use some symbolic name not used for any other dimension

sym_batch_dim = "batch"

# The following code changes the first dimension of every input to be batch-dim

# Modify as appropriate ... note that this requires all inputs to

# have the same batch_dim

inputs = model.graph.input

for input in inputs:

# Checks omitted.This assumes that all inputs are tensors and have a shape with first dim.

# Add checks as needed.

dim1 = input.type.tensor_type.shape.dim[0]

# update dim to be a symbolic value

dim1.dim_param = sym_batch_dim

# or update it to be an actual value:

# dim1.dim_value = actual_batch_dim

outputs = model.graph.output

for output in outputs:

# Checks omitted.This assumes that all inputs are tensors and have a shape with first dim.

# Add checks as needed.

dim1 = output.type.tensor_type.shape.dim[0]

# update dim to be a symbolic value

dim1.dim_param = sym_batch_dim

def change_input_node_name(model, input_names):

for i,input in enumerate(model.graph.input):

input_name = input_names[i]

for node in model.graph.node:

for i, name in enumerate(node.input):

if name == input.name:

node.input[i] = input_name

input.name = input_name

def change_output_node_name(model, output_names):

for i,output in enumerate(model.graph.output):

output_name = output_names[i]

for node in model.graph.node:

for i, name in enumerate(node.output):

if name == output.name:

node.output[i] = output_name

output.name = output_name

onnx_path = ""

save_path = ""

model = onnx.load(onnx_path)

change_input_output_dim(model)

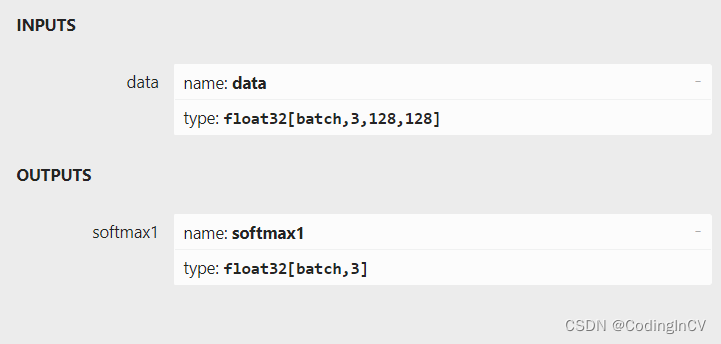

change_input_node_name(model, ["data"])

change_output_node_name(model, ["fc1"])

onnx.save(model, save_path)

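After these changes, the ONNX model's inputs and outputs have a dynamic batch, so the model can be ported easily to frameworks such as TensorRT for efficient inference.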

This article comes from cnblogs. Author: CoderInCV. When reposting, please cite the original link: https://www.cnblogs.com/haoliuhust/p/17519161.html