A Brief Look at Multi-Object Template Matching with OpenCV, and How to Match Semi-Transparent Controls and Irregular Images


Introduction

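- OpenCV's matchTemplate only computes the similarity scores (correlation and so on) between the template and every position in the source image; it does not return the coordinates of the targets. The usual approach is to look for the position with the highest score (for example with cv::minMaxLoc), which gives the best-matching coordinate, but only one of them.

- In practice we may need to match an irregularly shaped image. Once it is placed in a rectangular Mat, many pixels that should not take part in the comparison still contribute to the score, which lowers the recognition rate.

- Semi-transparent controls are even harder: the background showing through them can be completely different each time, and the traditional matching method simply breaks down. What can be done?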
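For reference, the usual single-result approach described in the first point amounts to an argmax over the score map. The following is a minimal dependency-free sketch, assuming the score map is a row-major float array (with OpenCV one would call cv::minMaxLoc on the CV_32F result instead); the Peak type and the best_location name are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type: position (x = column, y = row) and its score.
struct Peak {
    int x, y;
    float score;
};

// Return the single highest-scoring position in a row-major score map.
// However many objects are present, this yields exactly one coordinate.
Peak best_location(const std::vector<float>& scores, int rows, int cols) {
    assert(rows > 0 && cols > 0);
    assert(scores.size() == static_cast<std::size_t>(rows) * cols);
    Peak best{0, 0, scores[0]};
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x) {
            const float s = scores[static_cast<std::size_t>(y) * cols + x];
            if (s > best.score) best = {x, y, s};
        }
    return best;
}
```

If the map contains two nearly equal peaks, only the larger one is reported, which is exactly the limitation described above.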

Solutions

1. Matching multiple objects

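matchTemplate gives, for every position, the normalized correlation coefficient between the template and the equally sized sub-image of the source at that position. This coefficient can loosely be treated as the probability that the target is there (strictly speaking it is not one; with TM_CCOEFF_NORMED it ranges from -1 to 1), so we can set a threshold and keep every coordinate whose score exceeds it. The catch is that around each successful match there are also many neighbouring coordinates whose scores are slightly lower but still above the threshold, and from the scores alone we cannot tell whether such a coordinate belongs to an object already found or to a different one. The problem therefore becomes how to remove these by-products. Anyone with computer vision experience will quickly think of the NMS (non-maximum suppression) algorithm; readers who want the details can consult the Zhihu article "Non-Maximum Suppression (NMS) Explained | Theory + Code" (zhihu.com). This article only provides the implementation, in the code section below.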
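To make the threshold + NMS idea concrete, here is a minimal dependency-free sketch that mirrors the structure of match_scan's back end (threshold, sort by score, greedy IoU suppression) on a plain row-major score map. The Candidate type and the threshold_and_nms name are hypothetical; the library code uses objectEx, qsort_descent_inplace and nms_sorted_bboxes with OpenCV types instead.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical candidate: a template-sized window at (x, y) with its score.
struct Candidate {
    float x, y, w, h;
    float score;
};

// Area of the overlap between two axis-aligned boxes (0 if they do not touch).
static float overlap_area(const Candidate& a, const Candidate& b) {
    const float ix = std::max(0.0f, std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x));
    const float iy = std::max(0.0f, std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y));
    return ix * iy;
}

// Keep every score >= prob_threshold from a row-major score map, then run
// greedy IoU-based non-maximum suppression. Survivors are ordered best first.
std::vector<Candidate> threshold_and_nms(const std::vector<float>& scores,
                                         int rows, int cols, float tw, float th,
                                         float prob_threshold, float nms_threshold) {
    assert(static_cast<int>(scores.size()) == rows * cols);
    std::vector<Candidate> proposals;
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x) {
            const float s = scores[y * cols + x];
            if (s >= prob_threshold)
                proposals.push_back({float(x), float(y), tw, th, s});
        }
    // Highest score first, so each kept box suppresses its weaker neighbours.
    std::sort(proposals.begin(), proposals.end(),
              [](const Candidate& a, const Candidate& b) { return a.score > b.score; });
    std::vector<Candidate> kept;
    for (const Candidate& c : proposals) {
        bool keep = true;
        for (const Candidate& k : kept) {
            const float inter = overlap_area(c, k);
            const float uni = c.w * c.h + k.w * k.h - inter;
            if (uni > 0 && inter / uni > nms_threshold) { keep = false; break; }
        }
        if (keep) kept.push_back(c);
    }
    return kept;
}
```

With a 4x4 template, a neighbour at x = 2 overlaps a best hit at x = 1 with IoU 12/20 = 0.6 and is suppressed at nms_threshold = 0.5, while a hit at x = 7 does not overlap and survives.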

2. Matching irregular images

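OpenCV's matchTemplate accepts an optional mask argument: an image of the same size as the template, of type CV_8UC1 or CV_32F. With CV_8U, a pixel whose mask value is 0 is excluded from the match and any non-zero value includes it; with CV_32F, each mask value is used as the weight of that pixel when the correlation is computed. Our requirement is simple: the blank areas of the irregular image must not be matched, so we paint them black in the mask and paint the areas to be matched white (a bit like green-screen keying). In the implementation below, every template pixel whose color equals MaskColor is treated as blank.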
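The effect of a binary mask can be checked by hand. The sketch below computes a zero-mean normalized cross-correlation (in the spirit of TM_CCOEFF_NORMED) for a single template position, using only pixels whose mask value is non-zero. It is an illustration, not OpenCV's implementation (OpenCV's exact masked formula may differ in details), and the name masked_ccoeff_normed is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Zero-mean normalized cross-correlation between a template and an equally
// sized image window, restricted to pixels where mask != 0.
// All three buffers are row-major and have the same length.
double masked_ccoeff_normed(const std::vector<double>& tmpl,
                            const std::vector<double>& window,
                            const std::vector<std::uint8_t>& mask) {
    assert(tmpl.size() == window.size() && tmpl.size() == mask.size());
    double sum_t = 0, sum_w = 0;
    std::size_t n = 0;
    for (std::size_t i = 0; i < tmpl.size(); ++i)
        if (mask[i]) { sum_t += tmpl[i]; sum_w += window[i]; ++n; }
    if (n == 0) return 0.0;                  // nothing left to compare
    const double mean_t = sum_t / n, mean_w = sum_w / n;
    double num = 0, den_t = 0, den_w = 0;
    for (std::size_t i = 0; i < tmpl.size(); ++i) {
        if (!mask[i]) continue;              // masked-out pixels are ignored entirely
        const double dt = tmpl[i] - mean_t, dw = window[i] - mean_w;
        num += dt * dw;
        den_t += dt * dt;
        den_w += dw * dw;
    }
    const double den = std::sqrt(den_t * den_w);
    return den > 0 ? num / den : 0.0;        // flat patch: correlation undefined, report 0
}
```

For a template {10, 20, 30, 99} against a window {10, 20, 30, 0}, masking out the last pixel gives a perfect score of 1.0, while with the mask disabled the single mismatching pixel drags the score below zero.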

3. Matching semi-transparent controls

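For a semi-transparent control, the pixel value at a given coordinate changes along with the background. Algorithms like matchTemplate, which measure similarity pixel by pixel, are thrown off because a change of background shifts the pixel values of the whole image on a large scale. However, even when every pixel changes, the relative relationship between the target's colors and positions stays essentially the same (the target has characteristic features, which is also why a human can still recognize such a control). Feature matching exploits exactly this property, so we can use it to match transparent controls.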
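In match_feat, a feature match is kept only if it passes Lowe's ratio test: the nearest scene descriptor must be clearly closer than the second nearest. The sketch below shows that filtering step with a brute-force nearest-neighbour search over plain float vectors instead of SURF descriptors and FLANN; the Descriptor alias, l2_distance and ratio_test_matches are hypothetical names, and a ratio of 0.75 matches ratio_thresh in the code below.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

using Descriptor = std::vector<float>;

// Euclidean (L2) distance between two descriptors of equal length.
static float l2_distance(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// For each query descriptor, find its two nearest train descriptors by brute
// force and keep (query index, nearest train index) only if
// nearest < ratio * second nearest (Lowe's ratio test).
std::vector<std::pair<std::size_t, std::size_t>>
ratio_test_matches(const std::vector<Descriptor>& query,
                   const std::vector<Descriptor>& train, float ratio) {
    std::vector<std::pair<std::size_t, std::size_t>> good;
    if (train.size() < 2) return good;  // the test needs a second neighbour
    for (std::size_t q = 0; q < query.size(); ++q) {
        float d1 = std::numeric_limits<float>::infinity();  // nearest distance
        float d2 = d1;                                       // second nearest
        std::size_t best = 0;
        for (std::size_t t = 0; t < train.size(); ++t) {
            const float d = l2_distance(query[q], train[t]);
            if (d < d1) { d2 = d1; d1 = d; best = t; }
            else if (d < d2) { d2 = d; }
        }
        if (d1 < ratio * d2) good.emplace_back(q, best);
    }
    return good;
}
```

A query descriptor that is equally far from several train descriptors is ambiguous and is rejected, which is what keeps repetitive background texture from producing spurious matches.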

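Note that part of the algorithm (SURF) comes from the nonfree part of xfeatures2d in opencv_contrib, so take care to avoid licensing disputes when using it; you also have to enable it yourself when building OpenCV (the OPENCV_ENABLE_NONFREE CMake option). The relevant code is forked from the OpenCV tutorial "Features2D + Homography to find a known object".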

Final implementation

libmatch.h

```cpp
#pragma once

#include <cstddef>
#include <cstdint>

#ifdef LIBMATCH_EXPORTS

#include <algorithm>
#include <cstring>
#include <iostream>
#include <vector>
#include <opencv2/opencv.hpp>
#include <opencv2/xfeatures2d.hpp>   // SURF lives in opencv_contrib (nonfree)

#define LIBMATCH_API extern "C" __declspec(dllexport)

// One template-match hit: bounding box in source-image pixels plus its score.
struct objectEx
{
    cv::Rect_<float> rect;
    float prob;
};

// Feature-match result: the four template corners projected into the scene.
struct objectEx2
{
    cv::Point2f dots[4];
};

// Sort candidates by score, highest first.
static void qsort_descent_inplace(std::vector<objectEx>& objects)
{
    if (objects.empty())
        return;
    std::sort(objects.begin(), objects.end(),
              [](const objectEx& a, const objectEx& b) { return a.prob > b.prob; });
}

static inline float intersection_area(const objectEx& a, const objectEx& b)
{
    cv::Rect_<float> inter = a.rect & b.rect;
    return inter.area();
}

// Greedy NMS over candidates already sorted by descending score: a candidate is
// kept only if its IoU with every previously kept box is <= nms_threshold.
static void nms_sorted_bboxes(const std::vector<objectEx>& faceobjects, std::vector<int>& picked, float nms_threshold)
{
    picked.clear();
    const int n = static_cast<int>(faceobjects.size());
    std::vector<float> areas(n);
    for (int i = 0; i < n; i++)
        areas[i] = faceobjects[i].rect.area();

    for (int i = 0; i < n; i++)
    {
        const objectEx& a = faceobjects[i];
        bool keep = true;
        for (int j = 0; j < (int)picked.size(); j++)
        {
            const objectEx& b = faceobjects[picked[j]];
            // intersection over union
            float inter_area = intersection_area(a, b);
            float union_area = areas[i] + areas[picked[j]] - inter_area;
            if (inter_area / union_area > nms_threshold)
            {
                keep = false;
                break;
            }
        }
        if (keep)
            picked.push_back(i);
    }
}

const int version = 230622;

#else

#define LIBMATCH_API extern "C" __declspec(dllimport)

// Consumer-side mirrors of the structs above; the memory layouts must stay
// identical (4 floats + 1 float, and 4 x 2 floats).
struct objectEx
{
    struct Rect {
        float x, y, width, height;
    } rect;
    float prob;
};

struct objectEx2
{
    struct
    {
        float x, y;
    } dots[4];
};

#endif

LIBMATCH_API int match_get_version();

// Returns the total number of matches after NMS (this may exceed maxRetCount;
// only the first maxRetCount are copied), or (size_t)-1 on error.
LIBMATCH_API size_t match_scan(
    uint8_t* src_img_data,
    const size_t src_img_size,
    uint8_t* target_img_data,
    const size_t target_img_size,
    const float prob_threshold,
    const float nms_threshold,
    objectEx* RetObejectArr,
    const size_t maxRetCount,
    const uint32_t MaskColor // packed as (B << 16) | (G << 8) | R; a non-zero high byte disables the mask
);

// Returns false if the target could not be located.
LIBMATCH_API bool match_feat(
    uint8_t* src_img_data,
    const size_t src_img_size,
    uint8_t* target_img_data,
    const size_t target_img_size,
    objectEx2 &result
);
```
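The MaskColor parameter is easy to get wrong because of its byte order: match_scan compares it with (B << 16) | (G << 8) | R built from each BGR template pixel, and any value with a non-zero high byte switches the mask off. A pair of hypothetical caller-side helpers (not part of libmatch) could look like this:

```cpp
#include <cstdint>

// Hypothetical helper: pack an RGB color the way match_scan expects,
// i.e. blue in bits 16-23, green in bits 8-15, red in bits 0-7.
constexpr std::uint32_t make_mask_color(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    return (static_cast<std::uint32_t>(b) << 16) |
           (static_cast<std::uint32_t>(g) << 8) |
           static_cast<std::uint32_t>(r);
}

// Hypothetical sentinel: any value with a non-zero high byte disables the mask.
constexpr std::uint32_t kMaskDisabled = 0xFF000000u;
```

For example, to ignore pure magenta template pixels a caller would pass make_mask_color(255, 0, 255), and kMaskDisabled to compare the whole rectangle.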

libmatch.cpp

```cpp
// libmatch.cpp : Defines the exported functions of the DLL.
//

#include "pch.h"
#include "framework.h"
#include "libmatch.h"

LIBMATCH_API int match_get_version()
{
    return version;
}

LIBMATCH_API size_t match_scan(
    uint8_t* src_img_data,
    const size_t src_img_size,
    uint8_t* target_img_data,
    const size_t target_img_size,
    const float prob_threshold,
    const float nms_threshold,
    objectEx* RetObejectArr,
    const size_t maxRetCount,
    const uint32_t MaskColor // packed as (B << 16) | (G << 8) | R; a non-zero high byte disables the mask
)
{
    // Read and process images: decode the in-memory encoded files as grayscale
    cv::_InputArray src_img_arr(src_img_data, static_cast<int>(src_img_size));
    cv::Mat src_mat = cv::imdecode(src_img_arr, cv::IMREAD_GRAYSCALE);
    if (src_mat.empty())
    {
        std::cout << "[Match] Err Can't read src_img" << std::endl;
        return static_cast<size_t>(-1);   // size_t cannot hold -1; this is SIZE_MAX
    }
    cv::_InputArray target_img_arr(target_img_data, static_cast<int>(target_img_size));
    cv::Mat target_mat = cv::imdecode(target_img_arr, cv::IMREAD_GRAYSCALE);
    if (target_mat.empty())
    {
        std::cout << "[Match] Err Can't read target_img" << std::endl;
        return static_cast<size_t>(-1);
    }
    if (target_mat.cols > src_mat.cols || target_mat.rows > src_mat.rows)
    {
        std::cout << "[Match] ERR Target is too large" << std::endl;
        return static_cast<size_t>(-1);   // `return false` (0) looked like "no match found"
    }

    // Template match. matchTemplate allocates `result` itself with
    // (src.rows - target.rows + 1) rows and (src.cols - target.cols + 1) cols, CV_32FC1.
    cv::Mat result;
    if ((MaskColor & 0xff000000) != 0)
    {
        cv::matchTemplate(src_mat, target_mat, result, cv::TM_CCOEFF_NORMED);
    }
    else
    {
        // Binary mask: 0 where the template pixel equals MaskColor (ignored), 255 elsewhere
        cv::Mat temp_target_mat = cv::imdecode(target_img_arr, cv::IMREAD_COLOR);
        cv::Mat mask_mat = cv::Mat::zeros(target_mat.rows, target_mat.cols, CV_8U);
        for (int i = 0; i < temp_target_mat.rows; i++)
            for (int j = 0; j < temp_target_mat.cols; j++)
            {
                cv::Vec3b temp_color = temp_target_mat.at<cv::Vec3b>(i, j);   // B, G, R order
                uint32_t packed = (uint32_t(temp_color[0]) << 16) | (uint32_t(temp_color[1]) << 8) | temp_color[2];
                if (packed != MaskColor)
                    mask_mat.at<uint8_t>(i, j) = 255;
            }
        cv::matchTemplate(src_mat, target_mat, result, cv::TM_CCOEFF_NORMED, mask_mat);
    }

    // Back end: collect every location whose score reaches prob_threshold
    std::vector<objectEx> proposals;
    for (int i = 0; i < result.rows; ++i)
        for (int j = 0; j < result.cols; ++j)
        {
            const float score = result.at<float>(i, j);
            if (score >= prob_threshold)
            {
                objectEx buf;
                buf.prob = score;
                buf.rect.x = static_cast<float>(j);
                buf.rect.y = static_cast<float>(i);
                buf.rect.width = static_cast<float>(target_mat.cols);
                buf.rect.height = static_cast<float>(target_mat.rows);
                proposals.push_back(buf);
            }
        }

    // Sort by score, then suppress overlapping neighbours
    std::vector<int> picked;
    qsort_descent_inplace(proposals);
    nms_sorted_bboxes(proposals, picked, nms_threshold);
    std::vector<objectEx> objects;
    for (int x : picked)
        objects.emplace_back(proposals[x]);

    // Copy at most maxRetCount results; the return value is the full count
    if (RetObejectArr != nullptr && maxRetCount > 0 && !objects.empty())
        memcpy(RetObejectArr, objects.data(), sizeof(objectEx) * std::min(objects.size(), maxRetCount));
    return objects.size();
}

LIBMATCH_API bool match_feat(
    uint8_t* src_img_data,
    const size_t src_img_size,
    uint8_t* target_img_data,
    const size_t target_img_size,
    objectEx2 &result
)
{
    // Read and process images
    cv::_InputArray src_img_arr(src_img_data, static_cast<int>(src_img_size));
    cv::Mat src_mat = cv::imdecode(src_img_arr, cv::IMREAD_GRAYSCALE);
    if (src_mat.empty())
    {
        std::cout << "[Match] Err Can't read src_img" << std::endl;
        return false;
    }
    cv::_InputArray target_img_arr(target_img_data, static_cast<int>(target_img_size));
    cv::Mat target_mat = cv::imdecode(target_img_arr, cv::IMREAD_GRAYSCALE);
    if (target_mat.empty())
    {
        std::cout << "[Match] Err Can't read target_img" << std::endl;
        return false;
    }

    //-- Step 1: Detect the keypoints using SURF Detector, compute the descriptors
    int minHessian = 400;
    cv::Ptr<cv::xfeatures2d::SURF> detector = cv::xfeatures2d::SURF::create(minHessian);
    std::vector<cv::KeyPoint> keypoints_object, keypoints_scene;
    cv::Mat descriptors_object, descriptors_scene;
    detector->detectAndCompute(target_mat, cv::noArray(), keypoints_object, descriptors_object);
    detector->detectAndCompute(src_mat, cv::noArray(), keypoints_scene, descriptors_scene);
    // FLANN cannot match empty descriptor sets, and the ratio test needs two scene neighbours
    if (descriptors_object.empty() || descriptors_scene.rows < 2)
        return false;

    //-- Step 2: Matching descriptor vectors with a FLANN based matcher
    // Since SURF is a floating-point descriptor NORM_L2 is used
    cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create(cv::DescriptorMatcher::FLANNBASED);
    std::vector<std::vector<cv::DMatch>> knn_matches;
    matcher->knnMatch(descriptors_object, descriptors_scene, knn_matches, 2);

    //-- Filter matches using the Lowe's ratio test
    const float ratio_thresh = 0.75f;
    std::vector<cv::DMatch> good_matches;
    for (size_t i = 0; i < knn_matches.size(); i++)
    {
        if (knn_matches[i].size() >= 2 &&
            knn_matches[i][0].distance < ratio_thresh * knn_matches[i][1].distance)
            good_matches.push_back(knn_matches[i][0]);
    }
    // findHomography needs at least 4 point pairs
    if (good_matches.size() < 4)
        return false;

    //-- Localize the object: collect the matched keypoint positions
    std::vector<cv::Point2f> obj, scene;
    for (size_t i = 0; i < good_matches.size(); i++)
    {
        obj.push_back(keypoints_object[good_matches[i].queryIdx].pt);
        scene.push_back(keypoints_scene[good_matches[i].trainIdx].pt);
    }
    cv::Mat H = cv::findHomography(obj, scene, cv::RANSAC);
    if (H.empty())   // no consistent homography was found
        return false;

    //-- Get the corners of the target image (the object to be "detected")
    std::vector<cv::Point2f> obj_corners(4);
    obj_corners[0] = cv::Point2f(0, 0);
    obj_corners[1] = cv::Point2f((float)target_mat.cols, 0);
    obj_corners[2] = cv::Point2f((float)target_mat.cols, (float)target_mat.rows);
    obj_corners[3] = cv::Point2f(0, (float)target_mat.rows);
    std::vector<cv::Point2f> buf_corners(4);
    cv::perspectiveTransform(obj_corners, buf_corners, H);
    memcpy(result.dots, buf_corners.data(), buf_corners.size() * sizeof(cv::Point2f));
    return true;
}
```
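match_feat ends by pushing the template's four corners through the homography H with cv::perspectiveTransform. The arithmetic behind that call is small enough to write out. The sketch below, with hypothetical names Homography, Point2, apply_homography and project_corners, assumes H is stored row-major as a 3x3 array of doubles (findHomography returns a 3x3 CV_64F matrix).

```cpp
#include <array>
#include <cassert>

// Row-major 3x3 homography.
using Homography = std::array<double, 9>;

struct Point2 {
    double x, y;
};

// Map one point through H in homogeneous coordinates:
// [x', y', w']^T = H * [x, y, 1]^T, then divide by w'.
Point2 apply_homography(const Homography& H, Point2 p) {
    const double xw = H[0] * p.x + H[1] * p.y + H[2];
    const double yw = H[3] * p.x + H[4] * p.y + H[5];
    const double w  = H[6] * p.x + H[7] * p.y + H[8];
    assert(w != 0.0);  // a point mapped to infinity has no image-plane position
    return {xw / w, yw / w};
}

// Project the template corners (0,0), (w,0), (w,h), (0,h) into the scene,
// in the same order match_feat uses for obj_corners.
std::array<Point2, 4> project_corners(const Homography& H, double w, double h) {
    return {{apply_homography(H, {0, 0}), apply_homography(H, {w, 0}),
             apply_homography(H, {w, h}), apply_homography(H, {0, h})}};
}
```

The division by w' is what makes this a perspective mapping; when the last row of H is (0, 0, 1) it reduces to an affine transform.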

Results

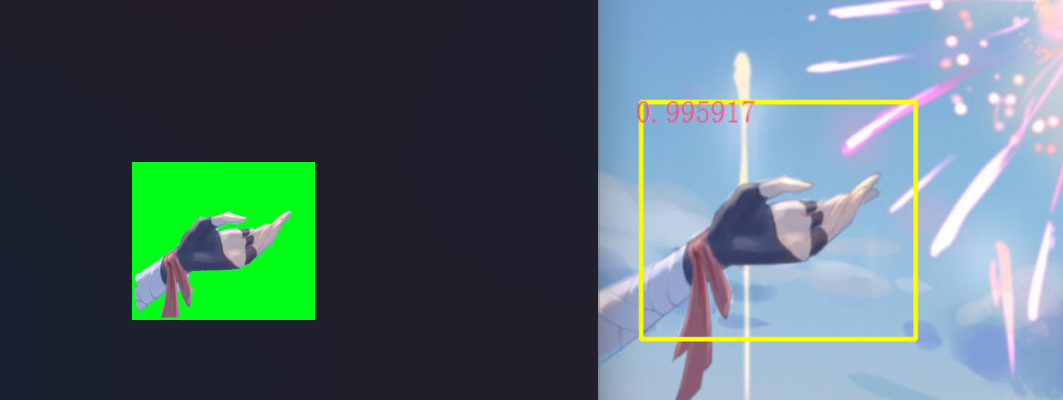

Multi-object matching + irregular-image matching

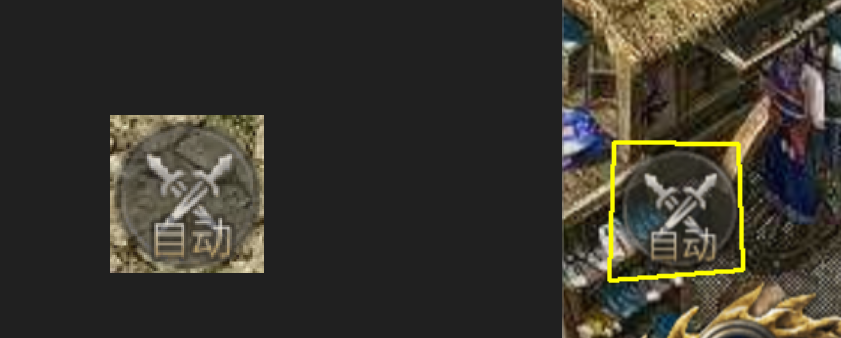

Semi-transparent control matching

Afterword

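The intense and exciting gaokao (China's national college entrance exam) came to an end this month, closing twelve years of general schooling, and I can finally settle down to study the mathematics behind these techniques. My abilities are limited and parts of this article are not rigorous; I hope readers will bear with me.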

Algorithm discussion groups: 904511841, 143858000