kafka學習之三_信創CPU下單節點kafka效能測試驗證

2023-06-21 06:00:50

kafka學習之三_信創CPU下單節點kafka效能測試驗證

背景

前面學習了 3controller+5broker 的叢集部署模式.

晚上想著能夠驗證一下國產機器的效能. 但是國產機器上面的裝置有限.

所以想著進行單節點的安裝與測試. 並且記錄一下簡單結果

希望對以後的工作有指導意義

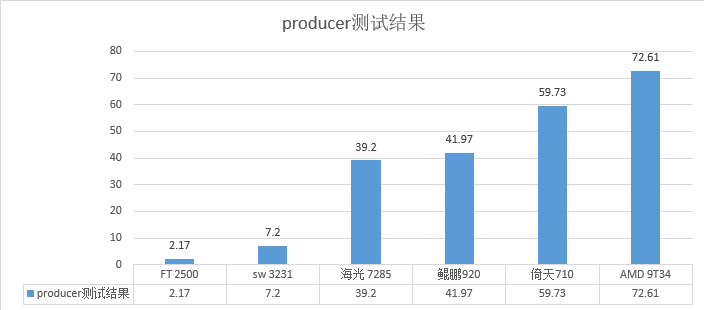

發現producer的效能比較與之前的 測試結果比較接近.

但是consumer的測試結果看不出太多頭緒來.

自己對kafka的學習還不夠深入, 準備下一期就進行consumer的調優驗證.

測試結果驗證

| CPU型別 | producer測試結果 | consumer測試結果 |

|---|---|---|

| sw 3231 | 7.20 MB/sec | 2.62 MB/sec |

| FT 2500 | 2.17 MB/sec | 測試失敗 |

| 海光 7285 | 39.20 MB/sec | 5.77 MB/sec |

| 鯤鵬920 | 41.97 MB/sec | 5.6037 MB/sec |

| 倚天710 | 59.73 MB/sec | 6.19 MB/sec |

| AMD 9T34 | 72.61 MB/sec | 6.68 MB/sec |

測試結果

廣告一下自己的公眾號

以申威為例進行安裝說明

因為kafka 其實是基於java進行編寫的訊息佇列.

所以不需要有繁雜的編譯等過程.

只要jdk支援, 理論上就可以進行執行.

比較麻煩的就是穩定性和效能的表現.

所以這裡進行一下安裝與驗證.

安裝過程-1

上傳檔案到/root目錄下面並且解壓縮

cd /root && tar -zxvf kafka_2.13-3.5.0.tgz

然後編輯對應的檔案:

cat > /root/kafka_2.13-3.5.0/config/kafka_server_jaas.conf <<EOF

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="Testxxxxxx"

user_admin="Testxxxxxx"

user_comsumer="Testxxxxxx"

user_producer="Testxxxxxx";

};

EOF

# 增加一個使用者端組態檔 使用者端才可以連線伺服器端

cat > /root/kafka_2.13-3.5.0/config/sasl.conf <<EOF

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="Testxxxxxx";

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

EOF

安裝過程-2

vim /root/kafka_2.13-3.5.0/config/kraft/server.properties

主要修改的點:

因為是單節點所以比較簡單了:

process.roles=broker,controller

node.id=100

[email protected]:9094

listeners=SASL_PLAINTEXT://127.0.0.1:9093,CONTROLLER://127.0.0.1:9094

sasl.enabled.mechanisms=PLAIN

sasl.mechanism.inter.broker.protocol=PLAIN

security.inter.broker.protocol=SASL_PLAINTEXT

allow.evervone.if.no.acl.found=true

advertised.listeners=SASL_PLAINTEXT://127.0.0.1:9093

# 最簡單的方法可以刪除組態檔的前面 50行, 直接放進去這些內容

vim->:1,50d->paste->edit_ips

其他ip地址可以如此替換:

sed -i 's/127.0.0.1/10.110.136.41/g' /root/kafka_2.13-3.5.0/config/kraft/server.properties

安裝過程-3

修改啟動指令碼:

vim /root/kafka_2.13-3.5.0/bin/kafka-server-start.sh

在任意一個java opt 處增加:

-Djava.security.auth.login.config=/root/kafka_2.13-3.5.0/config/kafka_server_jaas.conf

安裝過程-4

# 初始化

/root/kafka_2.13-3.5.0/bin/kafka-storage.sh random-uuid

cd /root/kafka_2.13-3.5.0

bin/kafka-storage.sh format -t 7ONT3dn3RWWNCZyIwLrEqg -c config/kraft/server.properties

# 啟動服務

cd /root/kafka_2.13-3.5.0 && bin/kafka-server-start.sh -daemon config/kraft/server.properties

# 建立topic

bin/kafka-topics.sh --create --command-config config/sasl.conf --replication-factor 1 --partitions 3 --topic zhaobsh01 --bootstrap-server 127.0.0.1:9093

# 測試producer

bin/kafka-producer-perf-test.sh --num-records 100000 --record-size 1024 --throughput -1 --producer.config config/sasl.conf --topic zhaobsh01 --print-metrics --producer-props bootstrap.servers=127.0.0.1:9093

# 測試consumer

bin/kafka-consumer-perf-test.sh --fetch-size 10000 --messages 1000000 --topic zhaobsh01 --consumer.config config/sasl.conf --print-metrics --bootstrap-server 127.0.0.1:9093

# 檢視紀錄檔

tail -f /root/kafka_2.13-3.5.0/logs/kafkaServer.out

申威的測試結果

producer:

100000 records sent, 7370.283019 records/sec (7.20 MB/sec), 2755.49 ms avg latency, 3794.00 ms max latency, 3189 ms 50th, 3688 ms 95th, 3758 ms 99th, 3785 ms 99.9th.

consumer:

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2023-06-20 21:56:02:493, 2023-06-20 21:56:39:755, 97.6563, 2.6208, 100000, 2683.6992, 5599, 31663, 3.0842, 3158.2604

飛騰的測試結果-hdd

producer:

100000 records sent, 1828.922582 records/sec (1.79 MB/sec), 6999.20 ms avg latency, 51476.00 ms max latency, 875 ms 50th, 21032 ms 95th, 21133 ms 99th, 21167 ms 99.9th.

consumer:

飛騰的測試結果-ssd

producer:

100000 records sent, 2219.706555 records/sec (2.17 MB/sec), 7073.51 ms avg latency, 41100.00 ms max latency, 1089 ms 50th, 20816 ms 95th, 20855 ms 99th, 20873 ms 99.9th.

海光的測試結果

producer:

100000 records sent, 40144.520273 records/sec (39.20 MB/sec), 486.67 ms avg latency, 681.00 ms max latency, 456 ms 50th, 657 ms 95th, 674 ms 99th, 678 ms 99.9th.

consumer:

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2023-06-20 22:28:04:364, 2023-06-20 22:28:21:274, 97.6563, 5.7751, 100000, 5913.6606, 3809, 13101, 7.4541, 7633.0051

鯤鵬的測試結果

producer:

100000 records sent, 42973.785991 records/sec (41.97 MB/sec), 463.69 ms avg latency, 621.00 ms max latency, 472 ms 50th, 593 ms 95th, 612 ms 99th, 619 ms 99.9th.

consumer:

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2023-06-20 22:33:58:168, 2023-06-20 22:34:15:595, 97.6563, 5.6037, 100000, 5738.2223, 3799, 13628, 7.1659, 7337.8339

倚天的測試結果

producer:

100000 records sent, 61162.079511 records/sec (59.73 MB/sec), 335.18 ms avg latency, 498.00 ms max latency, 326 ms 50th, 476 ms 95th, 494 ms 99th, 497 ms 99.9th.

consumer:

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2023-06-20 22:37:49:668, 2023-06-20 22:38:05:426, 97.6563, 6.1972, 100000, 6345.9830, 3597, 12161, 8.0303, 8223.008

AMD9T34的測試結果

producer:

100000 records sent, 74349.442379 records/sec (72.61 MB/sec), 253.07 ms avg latency, 364.00 ms max latency, 259 ms 50th, 344 ms 95th, 359 ms 99th, 363 ms 99.9th.

consumer:

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2023-06-20 22:44:14:446, 2023-06-20 22:44:29:058, 97.6563, 6.6833, 100000, 6843.6901, 3504, 11108, 8.7915, 9002.5207