[Model Deployment 01] Deploying a Classification Model (GoogLeNet as an Example) in C++ with OpenCV DNN, ONNXRuntime, TensorRT, and OpenVINO

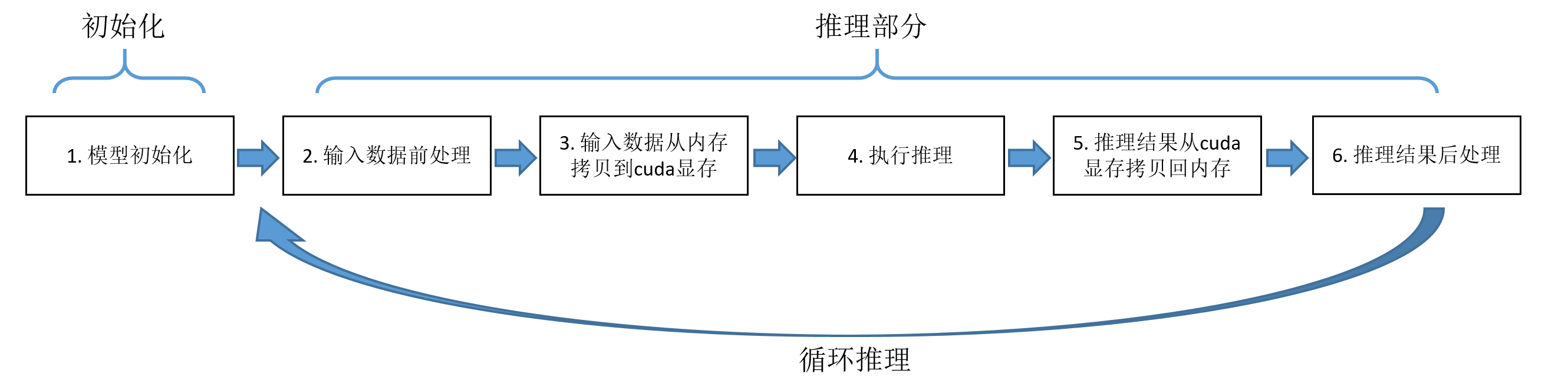

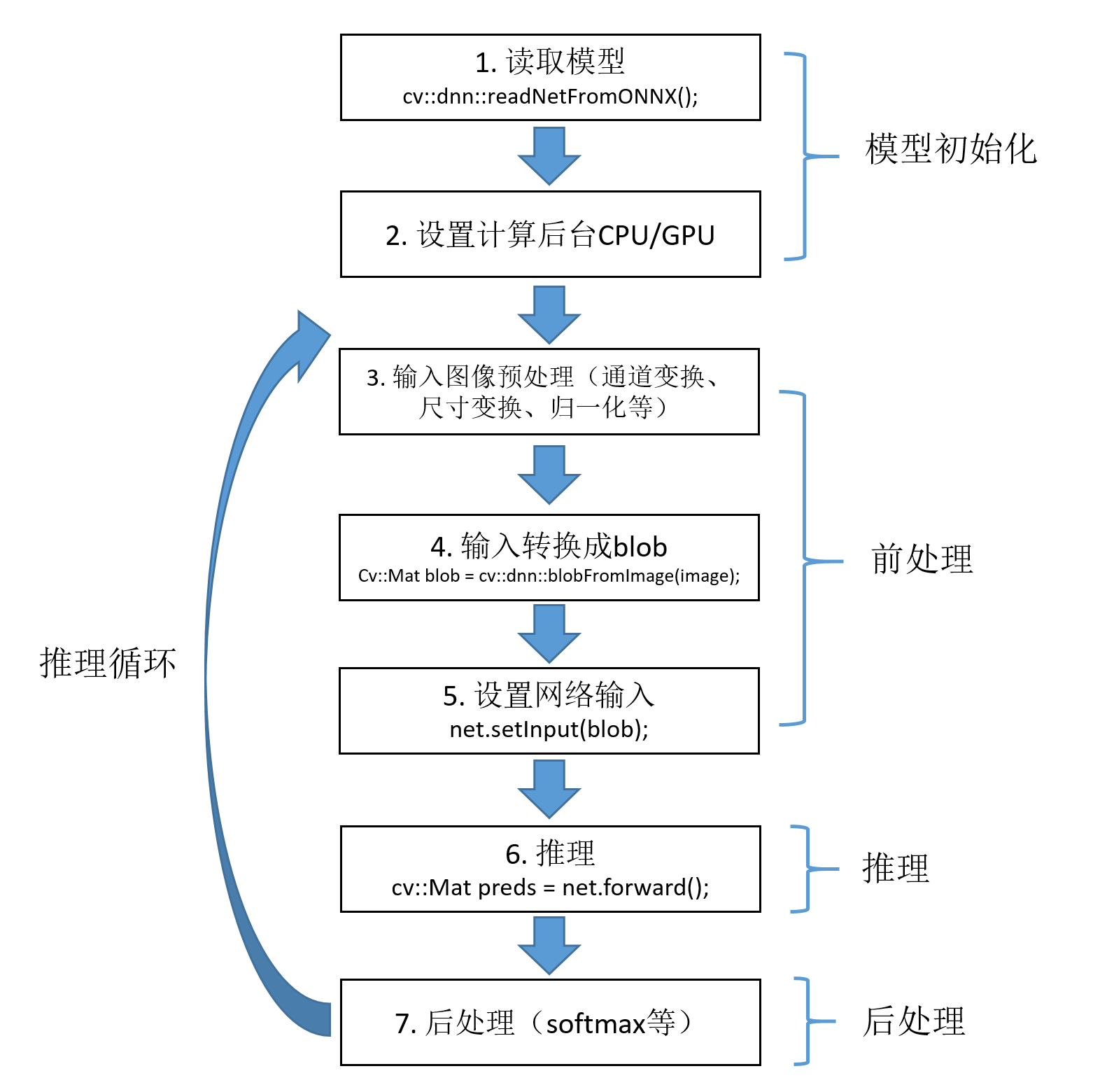

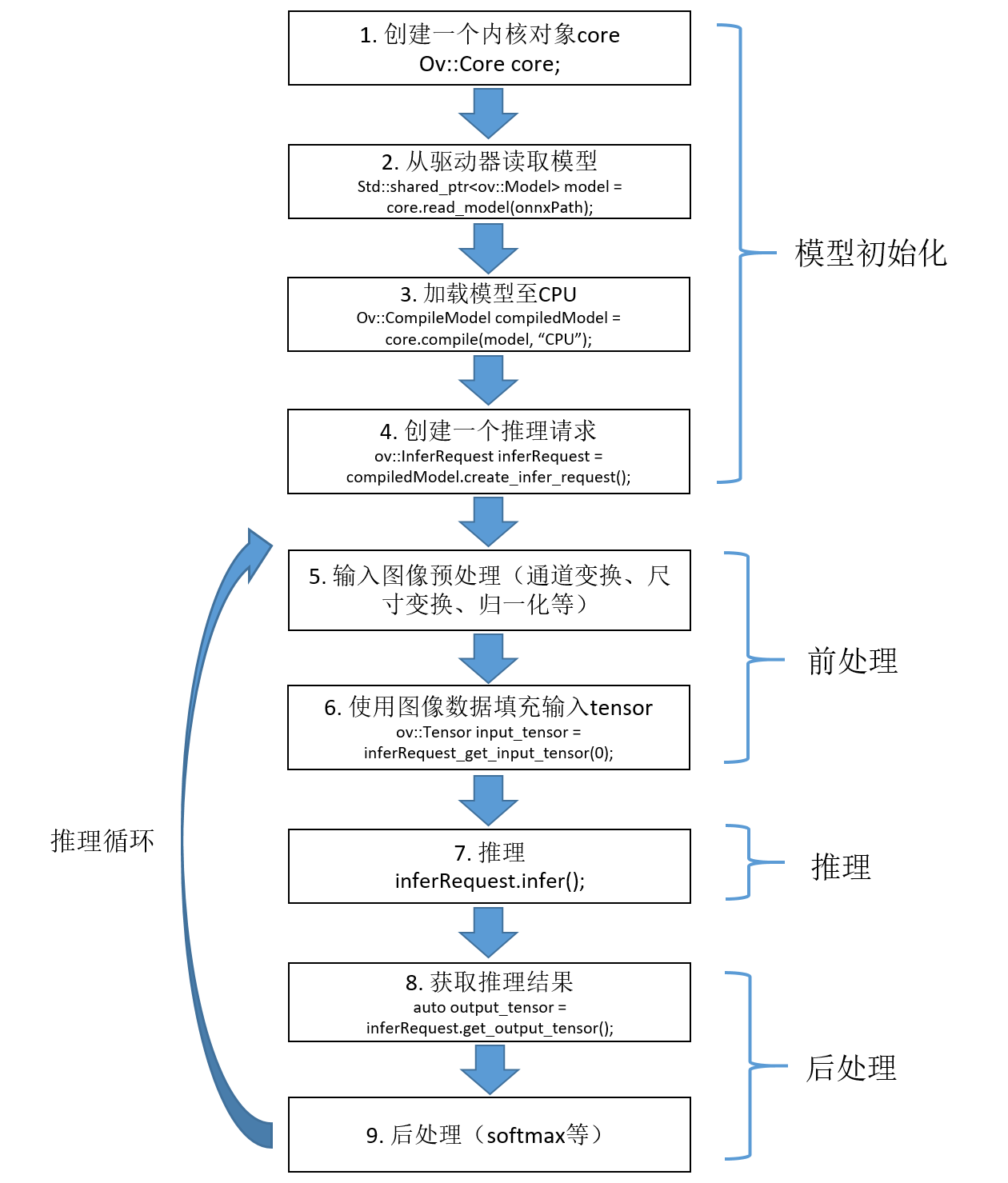

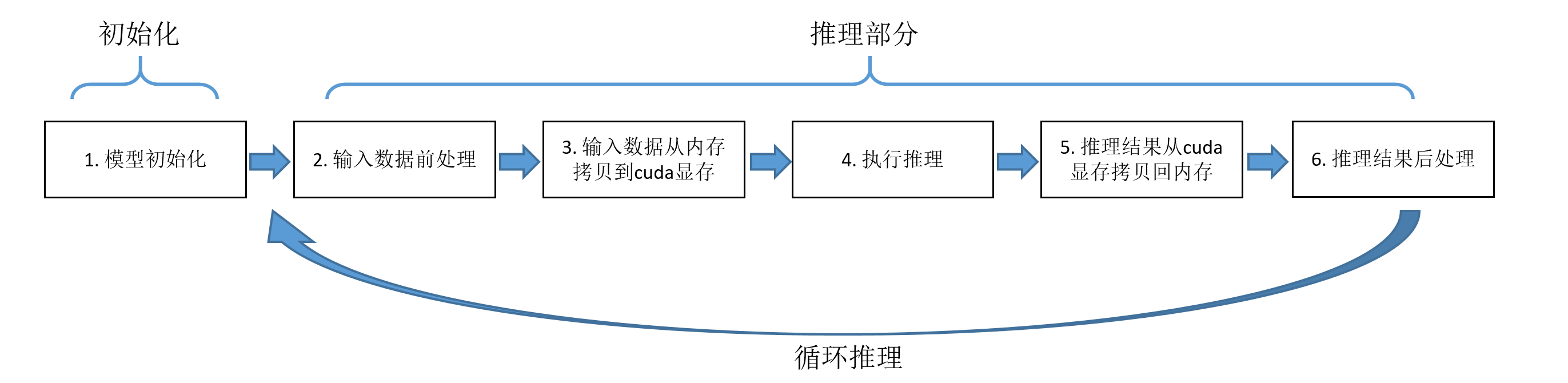

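Commonly used CPU/GPU inference options in deep learning include OpenCV DNN, ONNXRuntime, TensorRT, and OpenVINO. Their inference flow can be summarized in one diagram: it divides into a model initialization part and an inference part, with the inference part covering steps 2-5.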

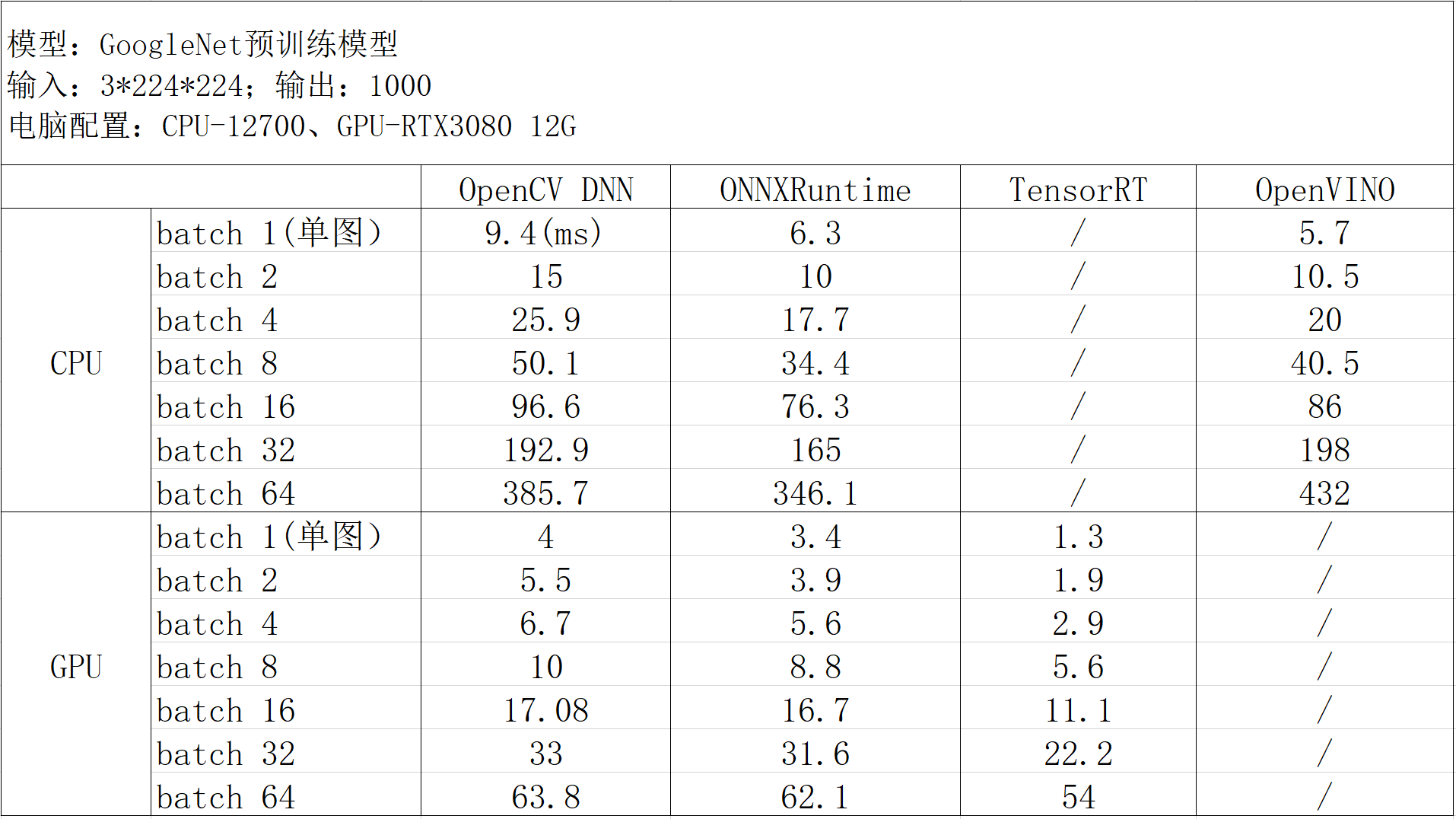

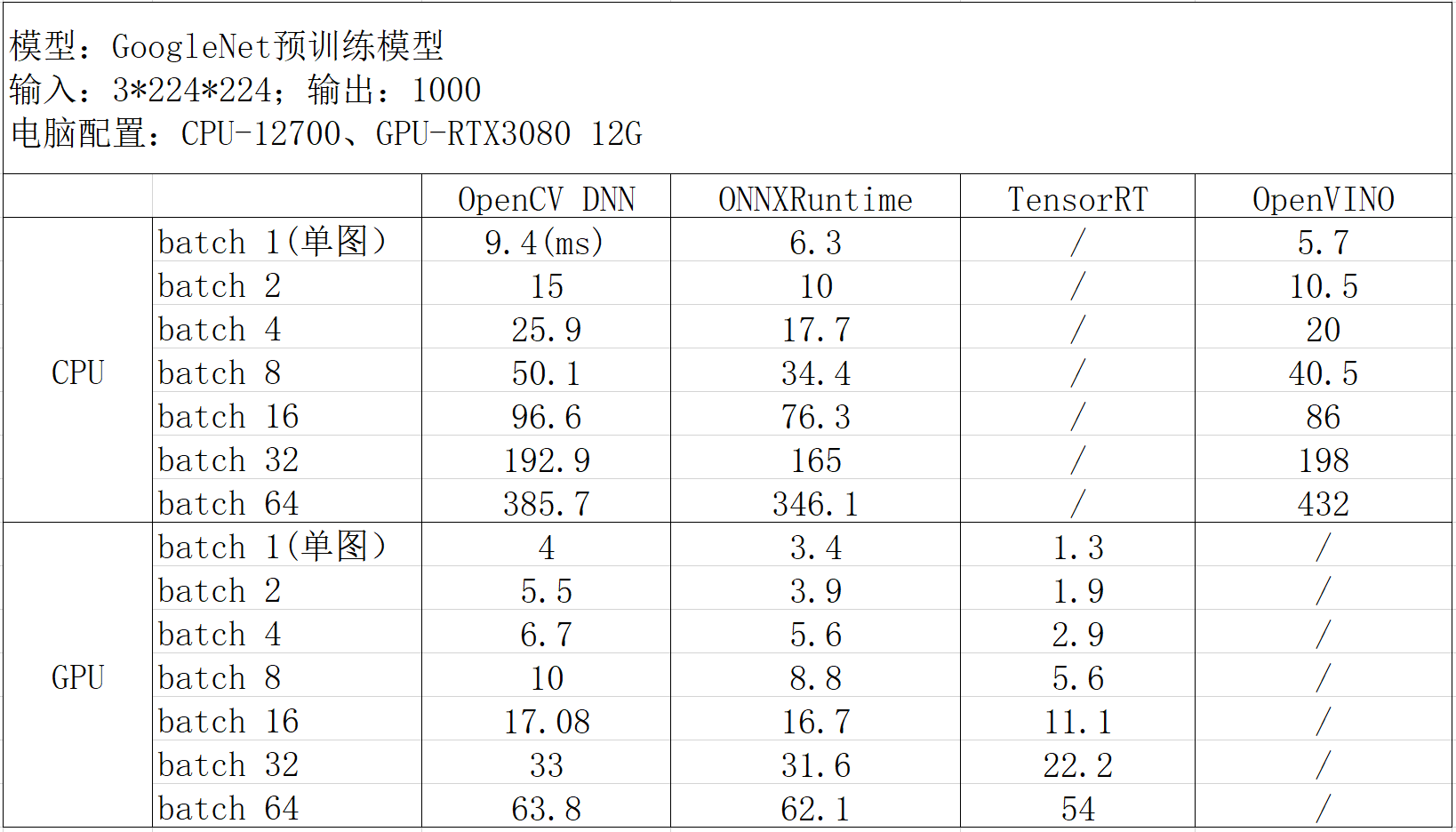

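Taking the GoogLeNet model as an example, the time spent in the inference part was measured for each option.

Conclusion: for GPU acceleration, prefer TensorRT; for CPU acceleration, prefer OpenVINO; if both CPU and GPU inference are needed, ONNXRuntime is a good choice.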
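The benchmark programs later in this article run each inference 101 times and discard the first run as a warm-up before averaging. A minimal stdlib-only sketch of that measurement pattern is shown here; the helper name `averageMs` is hypothetical, and `std::chrono::steady_clock` is used instead of the article's `high_resolution_clock` as an assumption that a monotonic clock is preferable for intervals.

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Runs `fn` once as an untimed warm-up, then `repeats` timed runs,
// and returns the mean wall-clock time per timed run in milliseconds.
double averageMs(const std::function<void()>& fn, int repeats = 100)
{
    assert(repeats > 0);
    fn();  // warm-up: the first call often includes lazy initialization
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < repeats; ++i)
        fn();
    const auto end = std::chrono::steady_clock::now();
    const std::chrono::duration<double, std::milli> total = end - start;
    return total.count() / repeats;
}
```

A call such as `averageMs([&] { ModelInference(src, name, conf); })` would then report the per-call average without the first-run overhead.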

Next article: [Model Deployment 02] Deploying a Classification Model (GoogLeNet as an Example) in Python with OpenCV DNN, ONNXRuntime, TensorRT, and OpenVINO

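1. Environment Setup

1.1 OpenCV DNN

[Model Deployment] Setting up OpenCV 4.6.0 + CUDA 11.1 + VS2019

1.2 ONNXRuntime

[Model Deployment] Setting up ONNXRuntime in C++ and Python

1.3 TensorRT

[Model Deployment] Setting up TensorRT in C++ and Python

1.4 OpenVINO2022

[Model Deployment] Setting up OpenVINO2022 in C++ and Python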

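2. Converting PyTorch Model Files (pt/pth/pkl) to ONNX

2.1 Converting between pt/pth/pkl

PyTorch model files are commonly saved with one of three suffixes: pt, pth, and pkl. The three do not differ in how the data is stored; only the suffix differs. Converting between them is simple: build the model, load the model parameters, then save again under the other suffix.

Taking pth to pt as an example: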

import torch
import torchvision

# Build the model
model = torchvision.models.googlenet(num_classes=2, init_weights=True)

# Load the model parameters; pt/pth/pkl files all work
model.load_state_dict(torch.load("googlenet_catdog.pth"))
model.eval()

# Save again under the target suffix
torch.save(model.state_dict(), 'googlenet_catdog.pt')

2.2 Converting pt/pth/pkl to ONNX

PyTorch provides a ready-made function, torch.onnx.export(), that converts a model to ONNX format. Its prototype is:

export(model, args, f, export_params=True, verbose=False, training=TrainingMode.EVAL,
       input_names=None, output_names=None, operator_export_type=None,
       opset_version=None, do_constant_folding=True, dynamic_axes=None,
       keep_initializers_as_inputs=None, custom_opsets=None,
       export_modules_as_functions=False)

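Main parameters:

- model (torch.nn.Module, torch.jit.ScriptModule or torch.jit.ScriptFunction): the model to convert.

- args (tuple or torch.Tensor): args can take one of three forms:

  - A tuple. It should correspond to the model's inputs. Any non-Tensor input is hard-coded into the ONNX model; every Tensor argument becomes an input of the ONNX model.

    args = (x, y, z)

  - A single Tensor. In this case the model usually has only one input.

    args = torch.Tensor([1, 2, 3])

  - A tuple ending with a dictionary. All arguments before the dictionary are passed to the network as positional arguments, and the key-value pairs in the dictionary are passed as keyword arguments. A keyword argument of the network that is missing from the dictionary takes its default value, or None if no default is set.

    args = (x, {'y': input_y, 'z': input_z})

    NOTE: as a special case, when the network's own last argument is a dictionary, a dictionary written at the end of the tuple is mistaken for keyword arguments. Appending an empty dictionary to the tuple resolves this.

    # Wrong:
    torch.onnx.export(
        model,
        (x,
         # WRONG: will be interpreted as named arguments
         {y: z}),
        "test.onnx.pb")

    # Corrected:
    torch.onnx.export(
        model,
        (x, {y: z}, {}),
        "test.onnx.pb")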

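- f: a file-like object or a path string. The binary protocol buffer, i.e. the ONNX file, is written to it.

- export_params (bool, default True): if True, the model parameters are exported. Set it to False to export an untrained model.

- verbose (bool, default False): if True, prints a conversion log, and the ONNX model includes doc_string information.

- training (enum, default TrainingMode.EVAL): the enum values are:

  - TrainingMode.EVAL - export the model in inference mode.

  - TrainingMode.PRESERVE - export in inference mode if model.training is False; otherwise export in training mode.

  - TrainingMode.TRAINING - export in training mode; this mode disables optimizations that would interfere with training.

- input_names (list of str, default empty list): names assigned, in order, to the input nodes of the ONNX graph.

- output_names (list of str, default empty list): names assigned, in order, to the output nodes of the ONNX graph.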

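- operator_export_type (enum, default None): defaults to OperatorExportTypes.ONNX; if PyTorch was built with -DPYTORCH_ONNX_CAFFE2_BUNDLE, it defaults to OperatorExportTypes.ONNX_ATEN_FALLBACK. The enum values are:

  - OperatorExportTypes.ONNX - export all operations as ONNX operations.

  - OperatorExportTypes.ONNX_FALLTHROUGH - try to export all operations as ONNX operations; any operation that cannot be converted (for example, one ONNX does not implement) is exported as a "custom op". For the exported model to be usable, the runtime must support these custom ops. See the linked documentation on supporting custom ops.

  - OperatorExportTypes.ONNX_ATEN - export all ATen operations as ATen operations. ATen is PyTorch's built-in tensor library, so the model then relies directly on PyTorch's implementation. (Models converted this way can only be used directly by Caffe2.)

  - OperatorExportTypes.ONNX_ATEN_FALLBACK - try to convert every ATen operation to an ONNX operation, and fall back to an ATen operation when that is not possible. (Models converted this way can only be used directly by Caffe2.) For example:

    # Before conversion:
    graph(%0 : Float):
      %3 : int = prim::Constant[value=0]()
      # conversion unsupported
      %4 : Float = aten::triu(%0, %3)
      # conversion supported
      %5 : Float = aten::mul(%4, %0)
      return (%5)

    # After conversion:
    graph(%0 : Float):
      %1 : Long() = onnx::Constant[value={0}]()
      # not converted
      %2 : Float = aten::ATen[operator="triu"](%0, %1)
      # converted
      %3 : Float = onnx::Mul(%2, %0)
      return (%3)

- opset_version (int, default 9): must equal _onnx_main_opset or be one of _onnx_stable_opsets. The concrete values are defined in torch/onnx/symbolic_helper.py and depend on the PyTorch version. For example:

    _default_onnx_opset_version = 9
    _onnx_main_opset = 13
    _onnx_stable_opsets = [7, 8, 9, 10, 11, 12]
    _export_onnx_opset_version = _default_onnx_opset_version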

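- do_constant_folding (bool, default True): whether to apply the constant-folding optimization. Constant folding replaces nodes whose inputs are all constants with pre-computed constant values.

- example_outputs (T or a tuple of T, where T is Tensor or convertible to Tensor, default None): must be provided when the model to export is a ScriptModule or ScriptFunction. It is used to determine the type and shape of the outputs without tracing the model's execution. This parameter is absent from the prototype shown above and applies to older PyTorch versions.

- dynamic_axes (dict<string, dict<python:int, string>> or dict<string, list(int)>, default empty dict): sets dynamic dimensions by the following rules:

  - KEY (str) - must be a name given in input_names or output_names; it selects which variable uses a dynamic size.

  - VALUE (dict or list) - if a dict, each key is a dimension index of the variable and each value is the name given to that dimension. If a list, each element is a dimension index of the variable.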

Code implementation:

import torch
import torchvision

weight_file = 'googlenet_catdog.pt'
onnx_file = 'googlenet_catdog.onnx'

model = torchvision.models.googlenet(num_classes=2, init_weights=True)
model.load_state_dict(torch.load(weight_file, map_location=torch.device('cpu')))
model.eval()

# Single input, single output, fixed batch
input = torch.randn(1, 3, 224, 224)
input_names = ["input"]
output_names = ["output"]
torch.onnx.export(model=model,
                  args=input,
                  f=onnx_file,
                  input_names=input_names,
                  output_names=output_names,
                  opset_version=11,
                  verbose=True)

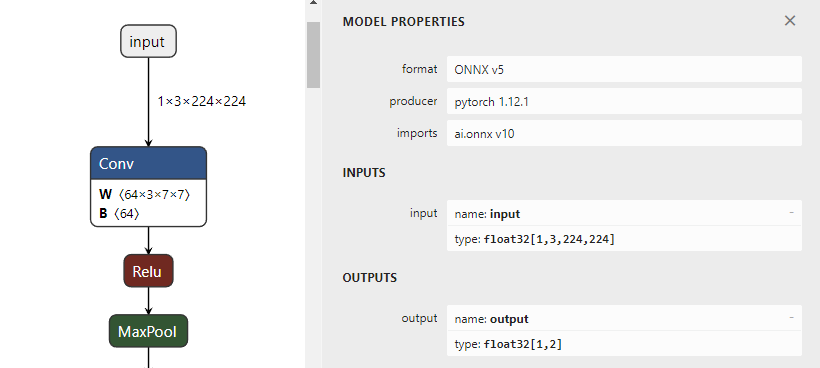

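The inputs and outputs of the exported ONNX file can be inspected with netron.app.

To run inference on several images at once, set the dynamic_axes argument of export so that the chosen dimensions of the model input and output become variables.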

import torch
import torchvision

weight_file = 'googlenet_catdog.pt'
onnx_file = 'googlenet_catdog.onnx'

model = torchvision.models.googlenet(num_classes=2, init_weights=True)
model.load_state_dict(torch.load(weight_file, map_location=torch.device('cpu')))
model.eval()

# Single input, single output, dynamic batch
input = torch.randn(1, 3, 224, 224)
input_names = ["input"]
output_names = ["output"]
torch.onnx.export(model=model,
                  args=input,
                  f=onnx_file,
                  input_names=input_names,
                  output_names=output_names,
                  opset_version=11,
                  verbose=True,
                  dynamic_axes={'input': {0: 'batch'}, 'output': {0: 'batch'}})

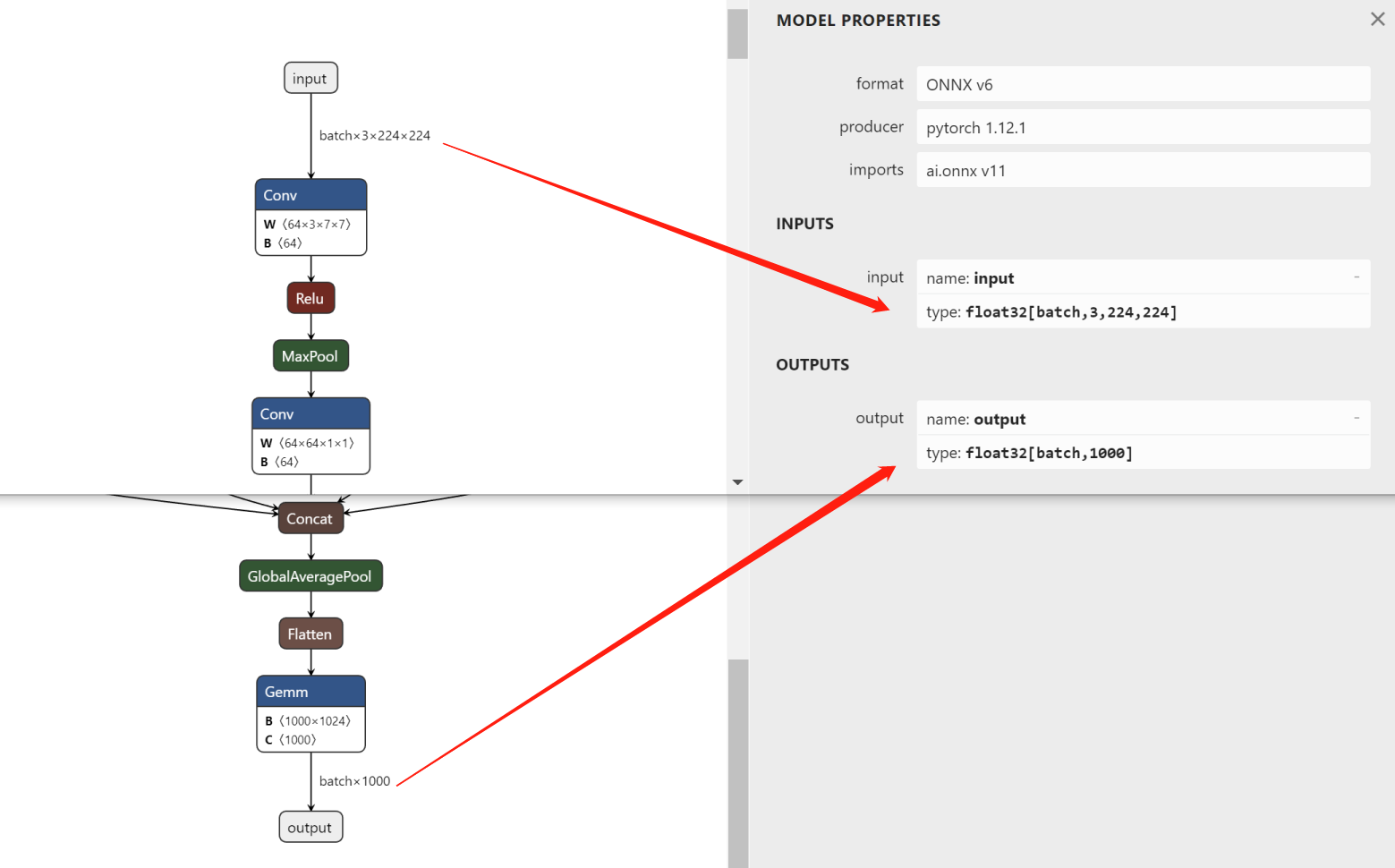

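When the dynamic-batch ONNX file is viewed in netron.app, the batch dimension of its input and output appears as a variable, which can be set to any integer greater than 0 as needed.

If the model has multiple inputs and outputs, export it in the following form:

# The model has two inputs and two outputs, dynamic batch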

input1 = torch.randn(1, 3, 256, 192).to(opt.device)

input2 = torch.randn(1, 3, 256, 192).to(opt.device)

input_names = ["input1", "input2"]

output_names = ["output1", "output2"]

torch.onnx.export(model=model,

args=(input1, input2),

f=opt.onnx_path,

input_names=input_names,

output_names=output_names,

opset_version=16,

verbose=True,

dynamic_axes={'input1': {0: 'batch'},

'input2': {0: 'batch'},

'output1': {0: 'batch'},

'output2': {0: 'batch'}})

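3. Deploying GoogLeNet with OpenCV DNN

3.1 Inference flow and code

The whole inference flow consists of three parts: preprocessing, inference, and postprocessing. See the code for the details, which cover both single-image inference and dynamic-batch inference.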

#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>
#include <chrono>
#include <fstream>

using namespace std;
using namespace cv;
using namespace cv::dnn;

std::string onnxPath = "E:/inference-master/models/engine/googlenet-pretrained_batch.onnx";
std::string imagePath = "E:/inference-master/images/catdog";
std::string classNamesPath = "E:/inference-master/imagenet-classes.txt"; // List of label names (class names)
cv::dnn::Net net;
std::vector<std::string> classNameList; // Label names, read from file
int batchSize = 32;

// Softmax over all elements of src; the maximum is subtracted first for numerical stability
int softmax(const cv::Mat& src, cv::Mat& dst)
{
    float max = 0.0;
    float sum = 0.0;

    max = *max_element(src.begin<float>(), src.end<float>());
    cv::exp((src - max), dst);
    sum = cv::sum(dst)[0];
    dst /= sum;

    return 0;
}

// GoogLeNet model initialization
void ModelInit(string onnxPath)
{
    net = cv::dnn::readNetFromONNX(onnxPath);
    // net = cv::dnn::readNetFromCaffe("E:/inference-master/2/deploy.prototxt", "E:/inference-master/2/default.caffemodel");

    // Set the compute backend and the compute target
    // CPU (default)
    // net.setPreferableBackend(cv::dnn::DNN_BACKEND_OPENCV);
    // net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);
    // CUDA
    net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);
    net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);

    // Read the label names
    ifstream fin(classNamesPath.c_str());
    string strLine;
    classNameList.clear();
    while (getline(fin, strLine))
        classNameList.push_back(strLine);
    fin.close();
}

// Single-image inference
bool ModelInference(cv::Mat srcImage, std::string& className, float& confidence)
{
    auto start = chrono::high_resolution_clock::now();

    cv::Mat image = srcImage.clone();

    // Preprocessing (channel swap, resize, normalization)
    cv::cvtColor(image, image, cv::COLOR_BGR2RGB);
    cv::resize(image, image, cv::Size(224, 224));
    image.convertTo(image, CV_32FC3, 1.0 / 255.0);
    cv::Scalar mean(0.485, 0.456, 0.406);
    cv::Scalar std(0.229, 0.224, 0.225);
    cv::subtract(image, mean, image);
    cv::divide(image, std, image);

    // blobFromImage resizes to size first, then subtracts mean, then multiplies by scalefactor;
    // swapRB swaps the first and last channels;
    // ddepth cannot be CV_8U when mean subtraction or scaling is used;
    // with crop=true, the image is scaled with its aspect ratio preserved until one side equals the
    // target size and the other is greater than or equal to it, then it is center-cropped;
    // the returned 4-D Mat has dimension order NCHW
    // cv::Mat blob = cv::dnn::blobFromImage(image, 1., cv::Size(224, 224), cv::Scalar(0, 0, 0), false, false);
    cv::Mat blob = cv::dnn::blobFromImage(image);

    // Set the input
    net.setInput(blob);

    auto end1 = std::chrono::high_resolution_clock::now();
    auto ms1 = std::chrono::duration_cast<std::chrono::microseconds>(end1 - start);
    std::cout << "PreProcess time: " << (ms1 / 1000.0).count() << "ms" << std::endl;

    // Forward pass
    cv::Mat preds = net.forward();

    auto end2 = std::chrono::high_resolution_clock::now();
    auto ms2 = std::chrono::duration_cast<std::chrono::microseconds>(end2 - end1);
    std::cout << "Inference time: " << (ms2 / 1000.0).count() << "ms" << std::endl;

    // Normalize the scores with softmax (preds holds a single sample here)
    softmax(preds, preds);

    Point minLoc, maxLoc;
    double minValue = 0, maxValue = 0;
    cv::minMaxLoc(preds, &minValue, &maxValue, &minLoc, &maxLoc);
    int labelIndex = maxLoc.x;
    double probability = maxValue;

    className = classNameList[labelIndex];
    confidence = probability;
    // std::cout << "class:" << className << endl << "confidence:" << confidence << endl;

    auto end3 = std::chrono::high_resolution_clock::now();
    auto ms3 = std::chrono::duration_cast<std::chrono::microseconds>(end3 - end2);
    std::cout << "PostProcess time: " << (ms3 / 1000.0).count() << "ms" << std::endl;

    auto ms = chrono::duration_cast<std::chrono::microseconds>(end3 - start);
    std::cout << "opencv_dnn inference time: " << (ms / 1000.0).count() << "ms" << std::endl;

    return true; // the function is declared bool, so it must return a value
}

// Multi-image batched inference (dynamic batch)
bool ModelInference_Batch(std::vector<cv::Mat> srcImages, std::vector<string>& classNames, std::vector<float>& confidences)
{
    auto start = chrono::high_resolution_clock::now();

    // Preprocessing (channel swap, resize, normalization)
    std::vector<cv::Mat> images;
    for (size_t i = 0; i < srcImages.size(); i++)
    {
        cv::Mat image = srcImages[i].clone();
        cv::cvtColor(image, image, cv::COLOR_BGR2RGB);
        cv::resize(image, image, cv::Size(224, 224));
        image.convertTo(image, CV_32FC3, 1.0 / 255.0);
        cv::Scalar mean(0.485, 0.456, 0.406);
        cv::Scalar std(0.229, 0.224, 0.225);
        cv::subtract(image, mean, image);
        cv::divide(image, std, image);
        images.push_back(image);
    }
    cv::Mat blob = cv::dnn::blobFromImages(images);

    auto end1 = std::chrono::high_resolution_clock::now();
    auto ms1 = std::chrono::duration_cast<std::chrono::microseconds>(end1 - start);
    std::cout << "PreProcess time: " << (ms1 / 1000.0).count() << "ms" << std::endl;

    // Set the input
    net.setInput(blob);

    // Forward pass
    cv::Mat preds = net.forward();

    auto end2 = std::chrono::high_resolution_clock::now();
    // Time of this single call; the original divided by 100 here, which under-reported it
    auto ms2 = std::chrono::duration_cast<std::chrono::microseconds>(end2 - end1);
    std::cout << "Inference time: " << (ms2 / 1000.0).count() << "ms" << std::endl;

    int rows = preds.size[0]; // batch
    int cols = preds.size[1]; // number of classes (one score per class)
    for (int row = 0; row < rows; row++)
    {
        cv::Mat scores(1, cols, CV_32FC1, preds.ptr<float>(row));
        softmax(scores, scores); // normalize the scores of this sample

        Point minLoc, maxLoc;
        double minValue = 0, maxValue = 0;
        cv::minMaxLoc(scores, &minValue, &maxValue, &minLoc, &maxLoc);
        int labelIndex = maxLoc.x;
        double probability = maxValue;

        classNames.push_back(classNameList[labelIndex]);
        confidences.push_back(probability);
    }

    auto end3 = std::chrono::high_resolution_clock::now();
    auto ms3 = std::chrono::duration_cast<std::chrono::microseconds>(end3 - end2);
    std::cout << "PostProcess time: " << (ms3 / 1000.0).count() << "ms" << std::endl;

    auto ms = chrono::duration_cast<std::chrono::microseconds>(end3 - start);
    std::cout << "opencv_dnn batch" << rows << " inference time: " << (ms / 1000.0).count() << "ms" << std::endl;

    return true; // the function is declared bool, so it must return a value
}

int main(int argc, char** argv)
{
    // Model initialization
    ModelInit(onnxPath);

    // Read the images
    vector<string> filenames;
    glob(imagePath, filenames);

    // Single-image inference test
    for (size_t n = 0; n < filenames.size(); n++)
    {
        // Run 101 times; the first run is a warm-up and is excluded from the 100-run average
        auto start = chrono::high_resolution_clock::now();
        cv::Mat src = imread(filenames[n]);
        std::string classname;
        float confidence;
        for (int i = 0; i < 101; i++) {
            if (i == 1)
                start = chrono::high_resolution_clock::now();
            ModelInference(src, classname, confidence);
        }
        auto end = chrono::high_resolution_clock::now();
        auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start) / 100;
        std::cout << "opencv_dnn average inference time: ---------------------" << (ms / 1000.0).count() << "ms" << std::endl;
    }

    // Batched (dynamic batch) inference test
    std::vector<cv::Mat> srcImages;
    for (size_t n = 0; n < filenames.size(); n++)
    {
        cv::Mat image = imread(filenames[n]);
        srcImages.push_back(image);
        if ((n + 1) % batchSize == 0 || n == filenames.size() - 1)
        {
            // Run 101 times; the first run is a warm-up and is excluded from the 100-run average
            auto start = chrono::high_resolution_clock::now();
            for (int i = 0; i < 101; i++) {
                if (i == 1)
                    start = chrono::high_resolution_clock::now();
                std::vector<std::string> classNames;
                std::vector<float> confidences;
                ModelInference_Batch(srcImages, classNames, confidences);
            }
            srcImages.clear();
            auto end = chrono::high_resolution_clock::now();
            auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start) / 100;
            std::cout << "opencv_dnn batch" << batchSize << " average inference time: ---------------------" << (ms / 1000.0).count() << "ms" << std::endl;
        }
    }

    return 0;
}
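To make the preprocessing in the program above more concrete, here is a minimal stdlib-only sketch of what the channel swap, the 1/255 scaling, the mean/std normalization, and the HWC-to-NCHW repacking amount to for one image. It is an illustration under assumptions rather than OpenCV's implementation: the function name `bgrToNormalizedChw` is hypothetical, the input is assumed to be an already resized interleaved 8-bit BGR buffer, and resizing is left out.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts one interleaved 8-bit BGR image (HWC layout, as OpenCV stores it)
// into a planar float RGB tensor (CHW layout), applying x / 255 and then (x - mean) / std.
std::vector<float> bgrToNormalizedChw(const std::vector<std::uint8_t>& bgr, int height, int width)
{
    assert(height > 0 && width > 0);
    assert(bgr.size() == static_cast<std::size_t>(height) * width * 3);
    const std::array<float, 3> mean = {0.485f, 0.456f, 0.406f};    // RGB order
    const std::array<float, 3> stddev = {0.229f, 0.224f, 0.225f};  // RGB order
    const std::size_t plane = static_cast<std::size_t>(height) * width;
    std::vector<float> chw(3 * plane);
    for (std::size_t p = 0; p < plane; ++p) {
        for (int c = 0; c < 3; ++c) {
            // Output channel c (R, G, B) reads input channel 2 - c (B, G, R).
            const float v = bgr[p * 3 + (2 - c)] / 255.0f;
            chw[c * plane + p] = (v - mean[c]) / stddev[c];
        }
    }
    return chw;
}
```

For a batch, the per-image CHW buffers would simply be concatenated in order, which gives the NCHW layout that the network input expects.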

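3.2 Choosing CPU/GPU

Switching OpenCV DNN between CPU and GPU inference only requires the following two lines, which set the compute backend and the compute target.

CPU inference

net.setPreferableBackend(cv::dnn::DNN_BACKEND_OPENCV);
net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);

GPU inference

net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);
net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);

Two points to note:

- Without any setting, inference runs on the CPU by default.

- When GPU inference is set but no CUDA environment is found on the machine, inference automatically falls back to the CPU.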

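3.3 Inference with multi-output models

When the model has multiple outputs, use the overload of forward that returns an array of Mat:

// Model with multiple outputs
std::vector<cv::Mat> preds;
net.forward(preds);
cv::Mat pred1 = preds[0];
cv::Mat pred2 = preds[1];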

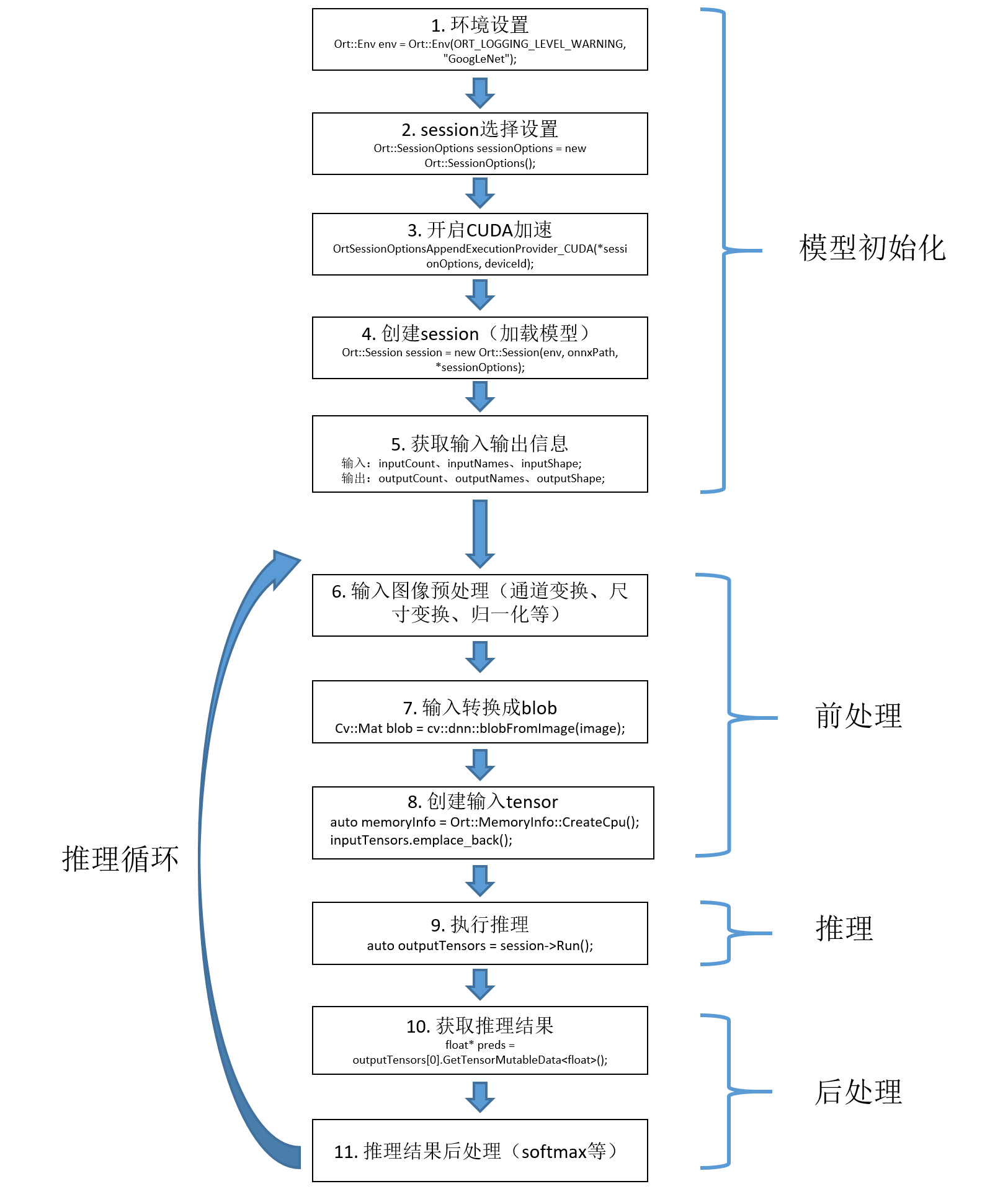

4. Deploying GoogLeNet with ONNXRuntime

4.1 Inference flow and code

Code:

#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>
#include <onnxruntime_cxx_api.h>
#include <vector>
#include <fstream>
#include <chrono>

using namespace std;
using namespace cv;
using namespace Ort;

// Ways to represent strings in C++: char*, string, wchar_t*, wstring, character arrays
const wchar_t* onnxPath = L"E:/inference-master/models/GoogLeNet/googlenet-pretrained_batch1.onnx";
std::string imagePath = "E:/inference-master/images/catdog";
std::string classNamesPath = "E:/inference-master/imagenet-classes.txt"; // List of label names (class names)
std::vector<std::string> classNameList; // Label names, read from file
int batchSize = 1;

Ort::Env env{ nullptr };
Ort::SessionOptions* sessionOptions;
Ort::Session* session;
size_t inputCount;
size_t outputCount;
std::vector<const char*> inputNames;
std::vector<const char*> outputNames;
std::vector<int64_t> inputShape;
std::vector<int64_t> outputShape;

// Softmax over the elements of a vector
std::vector<float> softmax(std::vector<float> input)
{
    float total = 0;
    for (auto x : input)
        total += exp(x);
    std::vector<float> result;
    for (auto x : input)
        result.push_back(exp(x) / total);
    return result;
}

// Softmax over all elements of src; the maximum is subtracted first for numerical stability
int softmax(const cv::Mat& src, cv::Mat& dst)
{
    float max = 0.0;
    float sum = 0.0;

    max = *max_element(src.begin<float>(), src.end<float>());
    cv::exp((src - max), dst);
    sum = cv::sum(dst)[0];
    dst /= sum;

    return 0;
}

// Preprocessing (channel swap, normalization, etc.)
void PreProcess(cv::Mat srcImage, cv::Mat& dstImage)
{
    // Channel swap, BGR -> RGB
    cvtColor(srcImage, dstImage, cv::COLOR_BGR2RGB);
    resize(dstImage, dstImage, Size(224, 224));

    // Image normalization
    dstImage.convertTo(dstImage, CV_32FC3, 1.0 / 255.0);
    cv::Scalar mean(0.485, 0.456, 0.406);
    cv::Scalar std(0.229, 0.224, 0.225);
    subtract(dstImage, mean, dstImage);
    divide(dstImage, std, dstImage);
}

// Model initialization
int ModelInit(const wchar_t* onnxPath, bool useCuda, int deviceId)
{
    // Read the label names
    std::ifstream fin(classNamesPath.c_str());
    std::string strLine;
    classNameList.clear();
    while (getline(fin, strLine))
        classNameList.push_back(strLine);
    fin.close();

    // Environment setup, including console log output level
    env = Ort::Env(ORT_LOGGING_LEVEL_WARNING, "GoogLeNet");
    sessionOptions = new Ort::SessionOptions();
    // Set the number of threads
    sessionOptions->SetIntraOpNumThreads(16);
    // Optimization level: enable all available optimizations
    sessionOptions->SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);

    if (useCuda) {
        // Enable CUDA acceleration; requires the cuda_provider_factory.h header
        OrtSessionOptionsAppendExecutionProvider_CUDA(*sessionOptions, deviceId);
    }

    // Create the session
    session = new Ort::Session(env, onnxPath, *sessionOptions);

    // Get the number of inputs and outputs
    inputCount = session->GetInputCount();
    outputCount = session->GetOutputCount();
    std::cout << "Number of inputs = " << inputCount << std::endl;
    std::cout << "Number of outputs = " << outputCount << std::endl;

    // Get the input and output names
    Ort::AllocatorWithDefaultOptions allocator;
    const char* inputName = session->GetInputName(0, allocator);
    const char* outputName = session->GetOutputName(0, allocator);
    inputNames = { inputName };
    outputNames = { outputName };
    std::cout << "Name of inputs = " << inputName << std::endl;
    std::cout << "Name of outputs = " << outputName << std::endl;

    // Get the input and output shapes; the return type is std::vector<int64_t>
    inputShape = session->GetInputTypeInfo(0).GetTensorTypeAndShapeInfo().GetShape();
    outputShape = session->GetOutputTypeInfo(0).GetTensorTypeAndShapeInfo().GetShape();
    std::cout << "Shape of inputs = " << "(" << inputShape[0] << "," << inputShape[1] << "," << inputShape[2] << "," << inputShape[3] << ")" << std::endl;
    std::cout << "Shape of outputs = " << "(" << outputShape[0] << "," << outputShape[1] << ")" << std::endl;

    return 0;
}

// Single-image inference
void ModelInference(cv::Mat srcImage, std::string& className, float& confidence)
{
    auto start = chrono::high_resolution_clock::now();

    // Preprocess the input image
    cv::Mat image;
    //PreProcess(srcImage, image); // Calling the function here made preprocessing inexplicably much slower
    // Channel swap, BGR -> RGB
    cvtColor(srcImage, image, cv::COLOR_BGR2RGB);
    resize(image, image, Size(224, 224));
    // Image normalization
    image.convertTo(image, CV_32FC3, 1.0 / 255.0);
    cv::Scalar mean(0.485, 0.456, 0.406);
    cv::Scalar std(0.229, 0.224, 0.225);
    subtract(image, mean, image);
    divide(image, std, image);

    cv::Mat blob = cv::dnn::blobFromImage(image);

    auto end1 = std::chrono::high_resolution_clock::now();
    auto ms1 = std::chrono::duration_cast<std::chrono::microseconds>(end1 - start);
    std::cout << "PreProcess time: " << (ms1 / 1000.0).count() << "ms" << std::endl;

    // Create the input tensor
    auto memoryInfo = Ort::MemoryInfo::CreateCpu(OrtAllocatorType::OrtArenaAllocator, OrtMemType::OrtMemTypeDefault);
    std::vector<Ort::Value> inputTensors;
    inputTensors.emplace_back(Ort::Value::CreateTensor<float>(memoryInfo,
        blob.ptr<float>(), blob.total(), inputShape.data(), inputShape.size()));

    // Inference
    auto outputTensors = session->Run(Ort::RunOptions{ nullptr },
        inputNames.data(), inputTensors.data(), inputCount, outputNames.data(), outputCount);

    auto end2 = std::chrono::high_resolution_clock::now();
    auto ms2 = std::chrono::duration_cast<std::chrono::microseconds>(end2 - end1);
    std::cout << "Inference time: " << (ms2 / 1000.0).count() << "ms" << std::endl;

    // Get the output
    float* preds = outputTensors[0].GetTensorMutableData<float>(); // outputTensors.front() also works
    int64_t numClasses = outputShape[1];

    cv::Mat output = cv::Mat_<float>(1, numClasses);
    for (int j = 0; j < numClasses; j++) {
        output.at<float>(0, j) = preds[j];
    }
    // Normalize the raw scores so that the reported confidence is a probability,
    // as the batched version does
    softmax(output, output);

    Point minLoc, maxLoc;
    double minValue = 0, maxValue = 0;
    cv::minMaxLoc(output, &minValue, &maxValue, &minLoc, &maxLoc);
    int labelIndex = maxLoc.x;
    double probability = maxValue;

    className = classNameList[labelIndex]; // the original indexed with the constant 1
    confidence = probability;

    auto end3 = std::chrono::high_resolution_clock::now();
    auto ms3 = std::chrono::duration_cast<std::chrono::microseconds>(end3 - end2);
    std::cout << "PostProcess time: " << (ms3 / 1000.0).count() << "ms" << std::endl;

    auto ms = chrono::duration_cast<std::chrono::microseconds>(end3 - start);
    std::cout << "onnxruntime single-image inference time: " << (ms / 1000.0).count() << "ms" << std::endl;
}

// Multi-image batched inference
void ModelInference_Batch(std::vector<cv::Mat> srcImages, std::vector<string>& classNames, std::vector<float>& confidences)
{
    auto start = chrono::high_resolution_clock::now();

    // Preprocess the input images
    std::vector<cv::Mat> images;
    for (size_t i = 0; i < srcImages.size(); i++)
    {
        cv::Mat image = srcImages[i].clone();
        // Channel swap, BGR -> RGB
        cvtColor(image, image, cv::COLOR_BGR2RGB);
        resize(image, image, Size(224, 224));
        // Image normalization
        image.convertTo(image, CV_32FC3, 1.0 / 255.0);
        cv::Scalar mean(0.485, 0.456, 0.406);
        cv::Scalar std(0.229, 0.224, 0.225);
        subtract(image, mean, image);
        divide(image, std, image);
        images.push_back(image);
    }
    // Convert the images to blob format
    cv::Mat blob = cv::dnn::blobFromImages(images);

    auto end1 = std::chrono::high_resolution_clock::now();
    auto ms1 = std::chrono::duration_cast<std::chrono::microseconds>(end1 - start);
    std::cout << "PreProcess time: " << (ms1 / 1000.0).count() << "ms" << std::endl;

    // Create the input tensor
    std::vector<Ort::Value> inputTensors;
    auto memoryInfo = Ort::MemoryInfo::CreateCpu(OrtAllocatorType::OrtArenaAllocator, OrtMemType::OrtMemTypeDefault);
    inputTensors.emplace_back(Ort::Value::CreateTensor<float>(memoryInfo,
        blob.ptr<float>(), blob.total(), inputShape.data(), inputShape.size()));

    // Inference
    std::vector<Ort::Value> outputTensors = session->Run(Ort::RunOptions{ nullptr },
        inputNames.data(), inputTensors.data(), inputCount, outputNames.data(), outputCount);

    auto end2 = std::chrono::high_resolution_clock::now();
    // Time of this single call; the original divided by 100 here, which under-reported it
    auto ms2 = std::chrono::duration_cast<std::chrono::microseconds>(end2 - end1);
    std::cout << "Inference time: " << (ms2 / 1000.0).count() << "ms" << std::endl;

    // Get the output
    float* preds = outputTensors[0].GetTensorMutableData<float>(); // outputTensors.front() also works
    // cout << preds[0] << "," << preds[1] << "," << preds[1000] << "," << preds[1001] << endl;
    int batch = outputShape[0];
    int numClasses = outputShape[1];

    cv::Mat output(batch, numClasses, CV_32FC1, preds);

    int rows = output.size[0]; // batch
    int cols = output.size[1]; // number of classes (one score per class)
    for (int row = 0; row < rows; row++)
    {
        cv::Mat scores(1, cols, CV_32FC1, output.ptr<float>(row));
        softmax(scores, scores); // normalize the scores of this sample

        Point minLoc, maxLoc;
        double minValue = 0, maxValue = 0;
        cv::minMaxLoc(scores, &minValue, &maxValue, &minLoc, &maxLoc);
        int labelIndex = maxLoc.x;
        double probability = maxValue;

        classNames.push_back(classNameList[labelIndex]);
        confidences.push_back(probability);
    }

    auto end3 = std::chrono::high_resolution_clock::now();
    auto ms3 = std::chrono::duration_cast<std::chrono::microseconds>(end3 - end2);
    std::cout << "PostProcess time: " << (ms3 / 1000.0).count() << "ms" << std::endl;

    auto ms = chrono::duration_cast<std::chrono::microseconds>(end3 - start);
    std::cout << "onnxruntime batch inference time: " << (ms / 1000.0).count() << "ms" << std::endl;
}

int main(int argc, char** argv)
{
    // Model initialization
    ModelInit(onnxPath, true, 0);

    // Read the images
    std::vector<std::string> filenames;
    cv::glob(imagePath, filenames);

    // Single-image inference test
    for (size_t i = 0; i < filenames.size(); i++)
    {
        // Run each image 101 times; the first run is a warm-up and is excluded from the 100-run average
        auto start = chrono::high_resolution_clock::now();
        cv::Mat srcImage = imread(filenames[i]);
        std::string className;
        float confidence;
        for (int n = 0; n < 101; n++) {
            if (n == 1)
                start = chrono::high_resolution_clock::now();
            ModelInference(srcImage, className, confidence);
        }

        // Draw the result on the image
        cv::putText(srcImage, className + ":" + std::to_string(confidence),
            cv::Point(10, 20), FONT_HERSHEY_SIMPLEX, 0.6, cv::Scalar(0, 0, 255), 1, 1);

        auto end = chrono::high_resolution_clock::now();
        auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start) / 100;
        std::cout << "onnxruntime average inference time: ---------------------" << (ms / 1000.0).count() << "ms" << std::endl;
    }

    // Batched inference test
    std::vector<cv::Mat> srcImages;
    for (size_t i = 0; i < filenames.size(); i++)
    {
        cv::Mat image = imread(filenames[i]);
        srcImages.push_back(image);
        if ((i + 1) % batchSize == 0 || i == filenames.size() - 1)
        {
            // Run 101 times; the first run is a warm-up and is excluded from the 100-run average
            auto start = chrono::high_resolution_clock::now();
            for (int n = 0; n < 101; n++) {
                if (n == 1)
                    start = chrono::high_resolution_clock::now(); // the first inference takes much longer
                std::vector<std::string> classNames;
                std::vector<float> confidences;
                ModelInference_Batch(srcImages, classNames, confidences);
            }
            srcImages.clear();
            auto end = chrono::high_resolution_clock::now();
            auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start) / 100;
            std::cout << "onnxruntime batch" << batchSize << " average inference time: ---------------------" << (ms / 1000.0).count() << "ms" << std::endl;
        }
    }

    return 0;
}
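The program above contains two softmax helpers: the cv::Mat version subtracts the maximum score before exponentiating, while the std::vector version does not and can overflow for large scores. As a hedged illustration, a vector version with the same max-subtraction step might look like the sketch below; the name `stableSoftmax` is hypothetical and is not part of the original code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Softmax over a vector of scores. Subtracting the maximum first keeps
// every exponent at or below zero, so std::exp cannot overflow.
std::vector<float> stableSoftmax(const std::vector<float>& logits)
{
    assert(!logits.empty());
    const float maxLogit = *std::max_element(logits.begin(), logits.end());
    std::vector<float> probs(logits.size());
    float total = 0.0f;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp(logits[i] - maxLogit);
        total += probs[i];
    }
    for (float& p : probs)
        p /= total;
    return probs;
}
```

The result is mathematically identical to the plain formula, because the common factor exp(-max) cancels between numerator and denominator.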

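Note: the code above supports multi-image batched inference only with an ONNX file exported with a fixed batch size, because it passes the shape reported by the model straight to CreateTensor. For an ONNX file exported with a dynamic batch, the reported batch dimension is -1, so this code cannot run it as written; the -1 would first have to be replaced with the actual batch size.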
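As a rough sketch of that shape handling, the helper below replaces dynamic dimensions (reported as -1) with a concrete batch size and computes the element count that the tensor buffer must hold. The names `resolveShape` and `elementCount` are hypothetical, and the sketch assumes the batch axis is the only dynamic one.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Returns a copy of `modelShape` in which every dynamic dimension (negative value)
// is replaced by `batch`. Assumes the batch axis is the only dynamic axis.
std::vector<std::int64_t> resolveShape(std::vector<std::int64_t> modelShape, std::int64_t batch)
{
    assert(batch > 0);
    for (std::int64_t& dim : modelShape)
        if (dim < 0)
            dim = batch;
    return modelShape;
}

// Number of elements a tensor of the given, fully resolved shape holds.
std::int64_t elementCount(const std::vector<std::int64_t>& shape)
{
    std::int64_t count = 1;
    for (std::int64_t dim : shape) {
        assert(dim > 0);
        count *= dim;
    }
    return count;
}
```

The resolved shape, rather than the shape read from the session, would then be passed when creating the input tensor, and the element count should match `blob.total()`.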

4.2 Choosing CPU/GPU

GPU inference only requires adding one line of code:

if (useCuda) {
    // Enable CUDA acceleration
    OrtSessionOptionsAppendExecutionProvider_CUDA(*sessionOptions, deviceId);
}

4.3 Inference with multi-input, multi-output models

The steps are essentially the same as for single-image inference; all inputs must be added to the input tensor list in order. Assume the model has two inputs and two outputs:

// Create the session
session2 = new Ort::Session(env1, onnxPath, sessionOptions1);

// Get the model's input and output information
inputCount2 = session2->GetInputCount();
outputCount2 = session2->GetOutputCount();

// There are two inputs and two outputs
Ort::AllocatorWithDefaultOptions allocator;
const char* inputName1 = session2->GetInputName(0, allocator);
const char* inputName2 = session2->GetInputName(1, allocator);
const char* outputName1 = session2->GetOutputName(0, allocator);
const char* outputName2 = session2->GetOutputName(1, allocator);
inputNames2 = { inputName1, inputName2 };
outputNames2 = { outputName1, outputName2 };

// Get the input and output shapes; the return type is std::vector<int64_t>
inputShape2_1 = session2->GetInputTypeInfo(0).GetTensorTypeAndShapeInfo().GetShape();
inputShape2_2 = session2->GetInputTypeInfo(1).GetTensorTypeAndShapeInfo().GetShape();
outputShape2_1 = session2->GetOutputTypeInfo(0).GetTensorTypeAndShapeInfo().GetShape();
outputShape2_2 = session2->GetOutputTypeInfo(1).GetTensorTypeAndShapeInfo().GetShape();

...

// Create the input tensors
auto memoryInfo = Ort::MemoryInfo::CreateCpu(OrtAllocatorType::OrtArenaAllocator, OrtMemType::OrtMemTypeDefault);
std::vector<Ort::Value> inputTensors;
inputTensors.emplace_back(Ort::Value::CreateTensor<float>(memoryInfo,
    blob1.ptr<float>(), blob1.total(), inputShape2_1.data(), inputShape2_1.size()));
inputTensors.emplace_back(Ort::Value::CreateTensor<float>(memoryInfo,
    blob2.ptr<float>(), blob2.total(), inputShape2_2.data(), inputShape2_2.size()));

// Inference
auto outputTensors = session2->Run(Ort::RunOptions{ nullptr },
    inputNames2.data(), inputTensors.data(), inputCount2, outputNames2.data(), outputCount2);

// Get the outputs
float* preds1 = outputTensors[0].GetTensorMutableData<float>();
float* preds2 = outputTensors[1].GetTensorMutableData<float>();
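Each output pointer obtained this way refers to a flat, row-major float buffer. For a classification head of shape [batch, numClasses], a minimal sketch of picking the best class per sample without going through cv::Mat could look as follows; the function name `argmaxPerRow` is hypothetical, and the two-dimensional layout is an assumption about the output in question.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// For each of `batch` rows in a row-major [batch x numClasses] buffer,
// returns the index of the largest score in that row.
std::vector<int> argmaxPerRow(const float* scores, int batch, int numClasses)
{
    assert(scores != nullptr && batch > 0 && numClasses > 0);
    std::vector<int> labels;
    labels.reserve(static_cast<std::size_t>(batch));
    for (int row = 0; row < batch; ++row) {
        const float* begin = scores + static_cast<std::size_t>(row) * numClasses;
        const float* best = std::max_element(begin, begin + numClasses);
        labels.push_back(static_cast<int>(best - begin));
    }
    return labels;
}
```

Because softmax is monotonic, the index found on raw scores is the same one softmax would give; softmax is only needed when a probability is to be reported.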

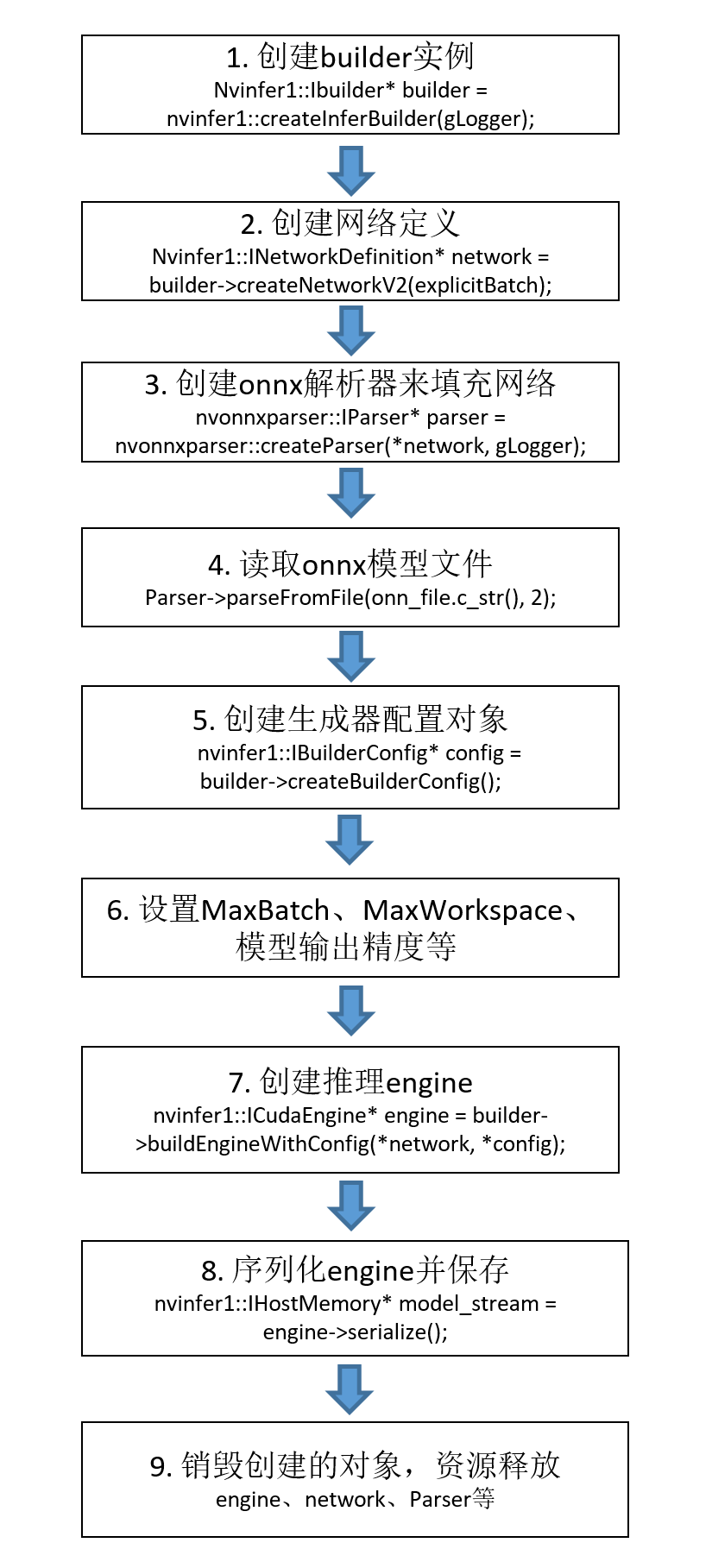

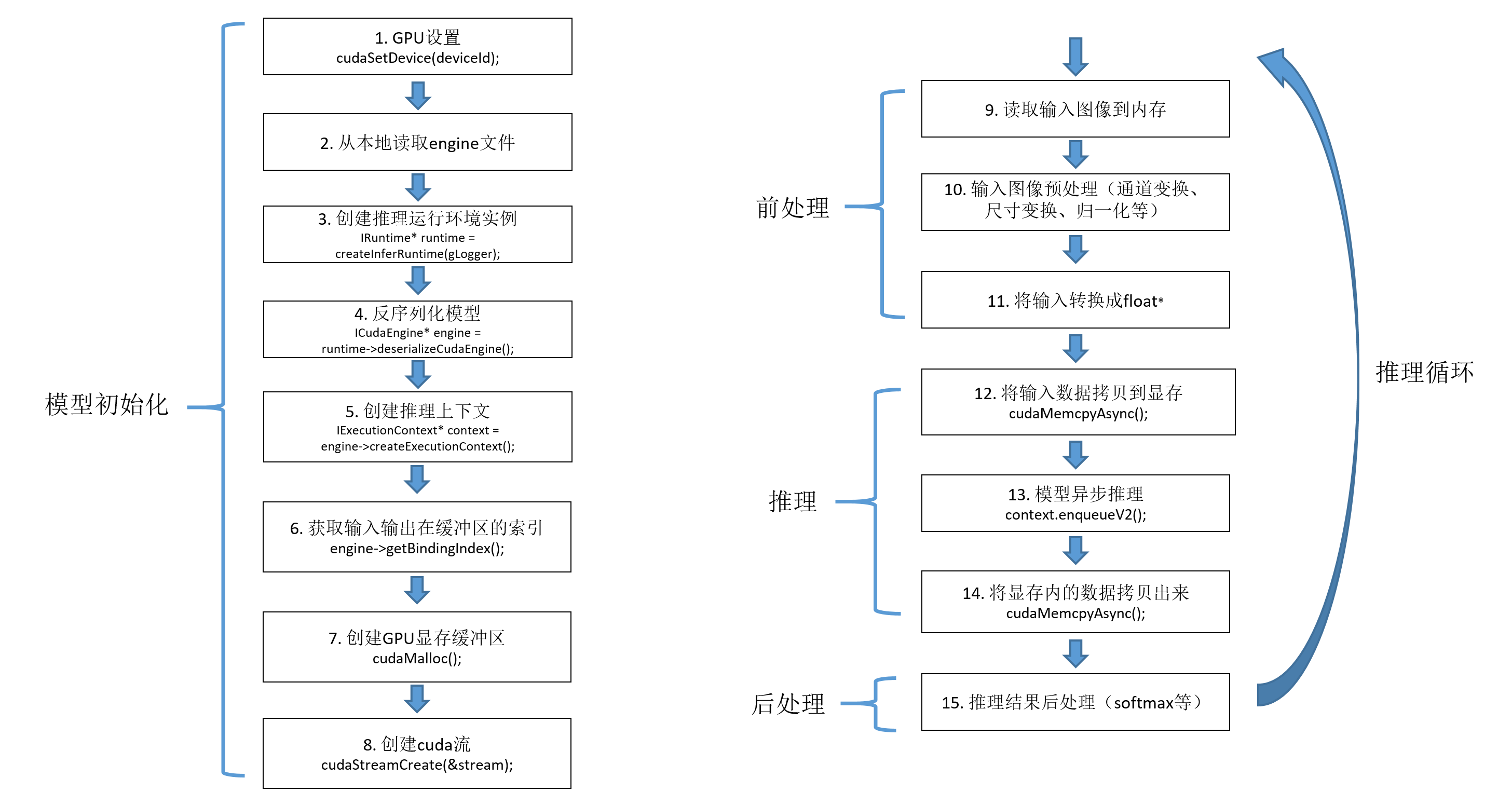

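5. Deploying GoogLeNet with TensorRT

There are two common ways to run TensorRT inference:

- Use the trtexec.exe tool shipped in the official package to convert the ONNX file into a trt file, then run inference;

- Convert the ONNX file into an engine file, then run inference.

Both work on the same principle, so only the second is covered here. Inference splits into two stages: building the inference engine from the ONNX file, and loading the engine to run inference.

5.1 Building the inference engine (engine file)

Building the engine is a crucial step in TensorRT inference. An engine is specific to the exact GPU model it was built on and cannot be ported across platforms or TensorRT versions. For example, if you build an engine with TensorRT 8.2.5 on an RTX3060, inference must also be deployed on an RTX3060 with a TensorRT 8.2.5 environment. The general flow of building an engine is as follows:

There are many ways to build an engine; three common ones are introduced here. I usually build directly in Python, so that training, ONNX export, and engine conversion all happen on the Python side, which is convenient.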
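Since an engine only works on the GPU model and TensorRT version it was built with, one simple habit is to encode those facts in the engine file name, much like the `..._3080_FP16.engine` name used in this article. The sketch below is only an illustration of that convention; the function `engineFileName` and its naming scheme are hypothetical and are not part of TensorRT.

```cpp
#include <cassert>
#include <string>

// Builds an engine file name that records what the engine was built for,
// so that a mismatched engine is easy to spot before it is loaded.
std::string engineFileName(const std::string& model, const std::string& gpu,
                           const std::string& trtVersion, const std::string& precision)
{
    assert(!model.empty() && !gpu.empty() && !trtVersion.empty() && !precision.empty());
    return model + "_" + gpu + "_trt" + trtVersion + "_" + precision + ".engine";
}
```

For example, `engineFileName("googlenet", "RTX3060", "8.2.5", "fp16")` yields `googlenet_RTX3060_trt8.2.5_fp16.engine`.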

Method 1: Building in Python

import os
import sys
import logging
import argparse

import tensorrt as trt

os.environ['CUDA_VISIBLE_DEVICES'] = '0'
# Lazy loading mode, a new feature in CUDA 11.7; setting it to LAZY may greatly reduce host and GPU memory usage
os.environ['CUDA_MODULE_LOADING'] = 'LAZY'

logging.basicConfig(level=logging.INFO)
logging.getLogger("EngineBuilder").setLevel(logging.INFO)
log = logging.getLogger("EngineBuilder")


class EngineBuilder:
    """
    Parses an ONNX graph and builds a TensorRT engine from it.
    """

    def __init__(self, batch_size=1, verbose=False, workspace=8):
        """
        :param verbose: If enabled, a higher verbosity level will be set on the TensorRT logger.
        :param workspace: Max memory workspace to allow, in Gb.
        """
        # 1. Create the builder
        self.trt_logger = trt.Logger(trt.Logger.INFO)
        if verbose:
            self.trt_logger.min_severity = trt.Logger.Severity.VERBOSE

        trt.init_libnvinfer_plugins(self.trt_logger, namespace="")

        self.builder = trt.Builder(self.trt_logger)
        self.config = self.builder.create_builder_config()  # create the builder config
        self.config.max_workspace_size = workspace * (2 ** 30)  # workspace allocation

        self.batch_size = batch_size
        self.network = None
        self.parser = None

    def create_network(self, onnx_path):
        """
        Parse the ONNX graph and create the corresponding TensorRT network definition.
        :param onnx_path: The path to the ONNX graph to load.
        """
        network_flags = (1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH))

        self.network = self.builder.create_network(network_flags)
        self.parser = trt.OnnxParser(self.network, self.trt_logger)

        onnx_path = os.path.realpath(onnx_path)
        with open(onnx_path, "rb") as f:
            if not self.parser.parse(f.read()):
                log.error("Failed to load ONNX file: {}".format(onnx_path))
                for error in range(self.parser.num_errors):
                    log.error(self.parser.get_error(error))
                sys.exit(1)

        # Get the network inputs and outputs
        inputs = [self.network.get_input(i) for i in range(self.network.num_inputs)]
        outputs = [self.network.get_output(i) for i in range(self.network.num_outputs)]

        log.info("Network Description")
        for input in inputs:
            self.batch_size = input.shape[0]
            log.info("Input '{}' with shape {} and dtype {}".format(input.name, input.shape, input.dtype))
        for output in outputs:
            log.info("Output '{}' with shape {} and dtype {}".format(output.name, output.shape, output.dtype))
        assert self.batch_size > 0
        self.builder.max_batch_size = self.batch_size

    def create_engine(self, engine_path, precision):
        """
        Build the TensorRT engine and serialize it to disk.
        :param engine_path: The path where to serialize the engine to.
        :param precision: The datatype to use for the engine, either 'fp32', 'fp16' or 'int8'.
        """
        engine_path = os.path.realpath(engine_path)
        engine_dir = os.path.dirname(engine_path)
        os.makedirs(engine_dir, exist_ok=True)
        log.info("Building {} Engine in {}".format(precision, engine_path))
        inputs = [self.network.get_input(i) for i in range(self.network.num_inputs)]

        if precision == "fp16":
            if not self.builder.platform_has_fast_fp16:
                log.warning("FP16 is not supported natively on this platform/device")
            else:
                self.config.set_flag(trt.BuilderFlag.FP16)

        with self.builder.build_engine(self.network, self.config) as engine, open(engine_path, "wb") as f:
            log.info("Serializing engine to file: {:}".format(engine_path))
            f.write(engine.serialize())


def main(args):
    builder = EngineBuilder(args.batch_size, args.verbose, args.workspace)
    builder.create_network(args.onnx)
    builder.create_engine(args.engine, args.precision)


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("-o", "--onnx", default=r'googlenet-pretrained_batch8.onnx', help="The input ONNX model file to load")
    parser.add_argument("-e", "--engine", default=r'googlenet-pretrained_batch8_from_py_3080_FP16.engine', help="The output path for the TRT engine")
    parser.add_argument("-p", "--precision", default="fp16", choices=["fp32", "fp16", "int8"],
                        help="The precision mode to build in, either 'fp32', 'fp16' or 'int8', default: 'fp16'")
    parser.add_argument("-b", "--batch_size", default=8, type=int, help="batch number of input")
    parser.add_argument("-v", "--verbose", action="store_true", help="Enable more verbose log output")
    parser.add_argument("-w", "--workspace", default=8, type=int, help="The max memory workspace size to allow in Gb, "
                                                                       "default: 8")
    args = parser.parse_args()
    main(args)

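To generate an fp16 model, simply set the precision argument to fp16. Generating an int8 model is more involved and affects model accuracy considerably, so it is used less often and is not covered here.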

parser.add_argument("-p", "--precision", default="fp16", choices=["fp32", "fp16", "int8"],

help="The precision mode to build in, either 'fp32', 'fp16' or 'int8', default: 'fp16'")

Method 2: Build in C++

#include "NvInfer.h"

#include "NvOnnxParser.h"

#include "cuda_runtime_api.h"

#include "logging.h"

#include <fstream>

#include <map>

#include <chrono>

#include <cmath>

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace nvinfer1;

using namespace nvonnxparser;

using namespace std;

using namespace cv;

std::string onnxPath = "E:/inference-master/models/engine/googlenet-pretrained_batch.onnx";

std::string enginePath = "E:/inference-master/models/engine/googlenet-pretrained_batch_from_cpp.engine"; // engine built from C++

static const int INPUT_H = 224;

static const int INPUT_W = 224;

static const int OUTPUT_SIZE = 1000;

static const int BATCH_SIZE = 25;

const char* INPUT_BLOB_NAME = "input";

const char* OUTPUT_BLOB_NAME = "output";

static Logger gLogger;

// Convert an ONNX model to a TensorRT engine

void onnx_to_engine(std::string onnx_file_path, std::string engine_file_path, int type) {

// Create the builder, the core class that creates the config, network, and engine objects and selects the fastest CUDA kernel implementations

nvinfer1::IBuilder* builder = nvinfer1::createInferBuilder(gLogger);

const auto explicitBatch = 1U << static_cast<uint32_t>(nvinfer1::NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);

// Create the network definition

nvinfer1::INetworkDefinition* network = builder->createNetworkV2(explicitBatch);

// Create the ONNX parser that populates the network

nvonnxparser::IParser* parser = nvonnxparser::createParser(*network, gLogger);

// Parse the ONNX model file (the second argument is the log severity; 2 is kWARNING) and stop on failure

if (!parser->parseFromFile(onnx_file_path.c_str(), 2)) {

for (int i = 0; i < parser->getNbErrors(); ++i) {

std::cout << "load error: " << parser->getError(i)->desc() << std::endl;

}

return;

}

printf("TensorRT loaded the ONNX model successfully\n");

// Create the builder configuration object

nvinfer1::IBuilderConfig* config = builder->createBuilderConfig();

builder->setMaxBatchSize(BATCH_SIZE); // set the maximum batch size

config->setMaxWorkspaceSize(16 * (1 << 20)); // set the maximum workspace size (16 MiB)

// Select the engine precision: 0 = FP32, 1 = FP16

if (type == 1) {

config->setFlag(nvinfer1::BuilderFlag::kFP16);

}

// Build the inference engine

nvinfer1::ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

if (engine == nullptr) {

std::cerr << "failed to build the engine" << std::endl;

return;

}

// Save the engine to disk

std::cout << "try to save engine file now~~~" << std::endl;

std::ofstream file_ptr(engine_file_path, std::ios::binary);

if (!file_ptr) {

std::cerr << "could not open plan output file" << std::endl;

return;

}

// Serialize the engine into a memory buffer

nvinfer1::IHostMemory* model_stream = engine->serialize();

// Write the buffer to the file

file_ptr.write(reinterpret_cast<const char*>(model_stream->data()), model_stream->size());

// Destroy the created objects

model_stream->destroy();

engine->destroy();

config->destroy();

parser->destroy();

network->destroy();

builder->destroy();

std::cout << "convert onnx model to TensorRT engine model successfully!" << std::endl;

}

int main(int argc, char** argv)

{

// Convert ONNX to engine (0 = FP32)

onnx_to_engine(onnxPath, enginePath, 0);

return 0;

}

Method 3: Build with the trtexec.exe tool in the bin directory of the official package

trtexec.exe --onnx=googlenet-pretrained_batch.onnx --saveEngine=googlenet-pretrained_batch_from_trt_trt853.engine --shapes=input:25x3x224x224

For an FP16 engine, append --fp16:

trtexec.exe --onnx=googlenet-pretrained_batch.onnx --saveEngine=googlenet-pretrained_batch_from_trt_trt853.engine --shapes=input:25x3x224x224 --fp16
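Whichever of the three methods builds the engine, the result is a binary file that the inference code in section 5.2 reads back into memory before deserializing it. As a minimal sketch of that read step using only the standard library, the helper below returns the file contents in a std::vector<char> rather than a raw new[]/delete[] buffer. The name readBinaryFile is hypothetical and does not appear in the original code.

```cpp
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical helper (not part of the original code): read a whole binary
// file into memory. Returns an empty vector if the file cannot be opened,
// is empty, or cannot be read completely.
std::vector<char> readBinaryFile(const std::string& path) {
    // Open in binary mode, positioned at the end so tellg() gives the size.
    std::ifstream file(path, std::ios::binary | std::ios::ate);
    if (!file) return {};

    const std::streamsize size = file.tellg();
    if (size <= 0) return {};

    std::vector<char> buffer(static_cast<std::size_t>(size));
    file.seekg(0, std::ios::beg);  // rewind before reading
    if (!file.read(buffer.data(), size)) return {};
    return buffer;
}
```

The returned buffer could then be handed to the deserialization call as data.data() and data.size(); an empty vector signals that the engine file could not be read.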

5.2 Load the engine file and deploy the model

Inference code:

#include "NvInfer.h"

#include "NvOnnxParser.h"

#include "cuda_runtime_api.h"

#include "logging.h"

#include <fstream>

#include <map>

#include <chrono>

#include <cmath>

#include <opencv2/opencv.hpp>

#include "cuda.h"

#include <cassert>

#include <iostream>

using namespace nvinfer1;

using namespace nvonnxparser;

using namespace std;

using namespace cv;

#define CHECK(status) \

do\

{\

auto ret = (status);\

if (ret != 0)\

{\

std::cerr << "Cuda failure: " << ret << std::endl;\

abort();\

}\

} while (0)

std::string enginePath = "E:/inference-master/models/GoogLeNet/googlenet-pretrained_batch1_from_py_3080_FP32.engine";

std::string imagePath = "E:/inference-master/images/catdog";

std::string classNamesPath = "E:/inference-master/imagenet-classes.txt"; // file listing the class names

std::vector<std::string> classNameList; // class names read from the file

static const int INPUT_H = 224;

static const int INPUT_W = 224;

static const int CHANNEL = 3;

static const int OUTPUT_SIZE = 1000;

static const int BATCH_SIZE = 1;

const char* INPUT_BLOB_NAME = "input";

const char* OUTPUT_BLOB_NAME = "output";

static Logger gLogger;

IRuntime* runtime;

ICudaEngine* engine;

IExecutionContext* context;

void* gpu_buffers[2];

cudaStream_t stream;

const int inputIndex = 0;

const int outputIndex = 1;

// Allocate the host buffers up front to save time during inference

static float mydata[BATCH_SIZE * CHANNEL * INPUT_H * INPUT_W];

static float prob[BATCH_SIZE * OUTPUT_SIZE];

// Row-wise softmax

int softmax(const cv::Mat & src, cv::Mat & dst)

{

float max = 0.0;

float sum = 0.0;

cv::Mat tmpdst = cv::Mat::zeros(src.size(), src.type());

std::vector<cv::Mat> srcRows;

// Compute the softmax of each row; subtracting the row maximum keeps exp() from overflowing

for (int i = 0; i < src.rows; i++)

{

cv::Mat tmpRow;

cv::Mat dataRow = src.row(i).clone();

max = *std::max_element(dataRow.begin<float>(), dataRow.end<float>());

cv::exp((dataRow - max), tmpRow);

sum = cv::sum(tmpRow)[0];

tmpRow /= sum;

srcRows.push_back(tmpRow);

}

// Stack the normalized rows once, after the loop

cv::vconcat(srcRows, tmpdst);

dst = tmpdst.clone();

return 0;

}

// Convert an ONNX model to a TensorRT engine

void onnx_to_engine(std::string onnx_file_path, std::string engine_file_path, int type) {

// Create the builder, the core class that creates the config, network, and engine objects and selects the fastest CUDA kernel implementations

nvinfer1::IBuilder* builder = nvinfer1::createInferBuilder(gLogger);

const auto explicitBatch = 1U << static_cast<uint32_t>(nvinfer1::NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);

// Create the network definition

nvinfer1::INetworkDefinition* network = builder->createNetworkV2(explicitBatch);

// Create the ONNX parser that populates the network

nvonnxparser::IParser* parser = nvonnxparser::createParser(*network, gLogger);

// Parse the ONNX model file (the second argument is the log severity; 2 is kWARNING) and stop on failure

if (!parser->parseFromFile(onnx_file_path.c_str(), 2)) {

for (int i = 0; i < parser->getNbErrors(); ++i) {

std::cout << "load error: " << parser->getError(i)->desc() << std::endl;

}

return;

}

printf("TensorRT loaded the ONNX model successfully\n");

// Create the builder configuration object

nvinfer1::IBuilderConfig* config = builder->createBuilderConfig();

builder->setMaxBatchSize(BATCH_SIZE); // set the maximum batch size

config->setMaxWorkspaceSize(16 * (1 << 20)); // set the maximum workspace size (16 MiB)

// Select the engine precision: 0 = FP32, 1 = FP16, 2 = INT8

if (type == 1) {

config->setFlag(nvinfer1::BuilderFlag::kFP16);

}

// INT8 additionally needs a calibrator or per-tensor dynamic ranges, which this code does not set up

if (type == 2) {

config->setFlag(nvinfer1::BuilderFlag::kINT8);

}

// Build the inference engine

nvinfer1::ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

if (engine == nullptr) {

std::cerr << "failed to build the engine" << std::endl;

return;

}

// Save the engine to disk

std::cout << "try to save engine file now~~~" << std::endl;

std::ofstream file_ptr(engine_file_path, std::ios::binary);

if (!file_ptr) {

std::cerr << "could not open plan output file" << std::endl;

return;

}

// Serialize the engine into a memory buffer

nvinfer1::IHostMemory* model_stream = engine->serialize();

// Write the buffer to the file

file_ptr.write(reinterpret_cast<const char*>(model_stream->data()), model_stream->size());

// Destroy the created objects

model_stream->destroy();

engine->destroy();

config->destroy();

parser->destroy();

network->destroy();

builder->destroy();

std::cout << "convert onnx model to TensorRT engine model successfully!" << std::endl;

}

// Inference: copy the input to the GPU buffer, run the engine, and copy the output back to the host

void doInference(IExecutionContext& context, const void* input, float* output, int batchSize)

{

//auto start = chrono::high_resolution_clock::now();

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

CHECK(cudaMemcpyAsync(gpu_buffers[inputIndex], input, batchSize * 3 * INPUT_H * INPUT_W * sizeof(float), cudaMemcpyHostToDevice, stream));

// context.enqueue(batchSize, buffers, stream, nullptr);

context.enqueueV2(gpu_buffers, stream, nullptr);

//auto end1 = std::chrono::high_resolution_clock::now();

//auto ms1 = std::chrono::duration_cast<std::chrono::microseconds>(end1 - start);

//std::cout << "Inference: " << (ms1 / 1000.0).count() << "ms" << std::endl;

CHECK(cudaMemcpyAsync(output, gpu_buffers[outputIndex], batchSize * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));

//size_t dest_pitch = 0;

//CHECK(cudaMemcpy2D(output, dest_pitch, buffers[outputIndex], batchSize * sizeof(float), batchSize, OUTPUT_SIZE, cudaMemcpyDeviceToHost));

CHECK(cudaStreamSynchronize(stream));

//auto end2 = std::chrono::high_resolution_clock::now();

//auto ms2 = std::chrono::duration_cast<std::chrono::microseconds>(end2 - start)/100.0;

//std::cout << "cuda-host: " << (ms2 / 1000.0).count() << "ms" << std::endl;

}

// Finish inference and release resources

void GpuMemoryRelease()

{

// Release stream and buffers

cudaStreamDestroy(stream);

CHECK(cudaFree(gpu_buffers[0]));

CHECK(cudaFree(gpu_buffers[1]));

// Destroy the engine

context->destroy();

engine->destroy();

runtime->destroy();

}

// Initialize the GoogLeNet model

void ModelInit(std::string enginePath, int deviceId)

{

// Select the GPU

cudaSetDevice(deviceId);

// Read the serialized engine file from disk

char* trtModelStream{ nullptr };

size_t size{ 0 };

std::ifstream file(enginePath, std::ios::binary);

if (file.good()) {

file.seekg(0, file.end); // move the read pointer to the end of the file

size = file.tellg(); // the read position is now the file size in bytes

file.seekg(0, file.beg); // move the read pointer back to the beginning of the file

trtModelStream = new char[size];

assert(trtModelStream);

file.read(trtModelStream, size);

file.close();

}

// Create the inference runtime

runtime = createInferRuntime(gLogger);

assert(runtime != nullptr);

// Deserialize the engine

engine = runtime->deserializeCudaEngine(trtModelStream, size, nullptr);

assert(engine != nullptr);

// Create the execution context

context = engine->createExecutionContext();

assert(context != nullptr);

delete[] trtModelStream;

// Create stream

CHECK(cudaStreamCreate(&stream));

// Pointers to input and output device buffers to pass to engine.

// Engine requires exactly IEngine::getNbBindings() number of buffers.

assert(engine->getNbBindings() == 2);

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

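// These locals shadow the global inputIndex/outputIndex (0 and 1) that doInference uses, so verify that they agree

const int inputIndex = engine->getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine->getBindingIndex(OUTPUT_BLOB_NAME);

assert(inputIndex == ::inputIndex && outputIndex == ::outputIndex);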

// Create GPU buffers on device

CHECK(cudaMalloc(&gpu_buffers[inputIndex], BATCH_SIZE * 3 * INPUT_H * INPUT_W * sizeof(float)));

CHECK(cudaMalloc(&gpu_buffers[outputIndex], BATCH_SIZE * OUTPUT_SIZE * sizeof(float)));

// Read the class names

ifstream fin(classNamesPath.c_str());

string strLine;

classNameList.clear();

while (getline(fin, strLine))

classNameList.push_back(strLine);

fin.close();

}

// Single-image inference

bool ModelInference(cv::Mat srcImage, std::string& className, float& confidence)

{

auto start = chrono::high_resolution_clock::now();

cv::Mat image = srcImage.clone();

// Preprocessing (resize, channel conversion, normalization)

cv::cvtColor(image, image, cv::COLOR_BGR2RGB);

cv::resize(image, image, cv::Size(224, 224));

image.convertTo(image, CV_32FC3, 1.0 / 255.0);

cv::Scalar mean(0.485, 0.456, 0.406);

cv::Scalar std(0.229, 0.224, 0.225);

cv::subtract(image, mean, image);

cv::divide(image, std, image);

// cv::Mat blob = cv::dnn::blobFromImage(image);

// The manual HWC -> CHW copy below is faster than blobFromImage above

for (int r = 0; r < INPUT_H; r++)

{

float* rowData = image.ptr<float>(r);

for (int c = 0; c < INPUT_W; c++)

{

mydata[0 * INPUT_H * INPUT_W + r * INPUT_W + c] = rowData[CHANNEL * c];

mydata[1 * INPUT_H * INPUT_W + r * INPUT_W + c] = rowData[CHANNEL * c + 1];

mydata[2 * INPUT_H * INPUT_W + r * INPUT_W + c] = rowData[CHANNEL * c + 2];

}

}

// Run inference

// doInference(*context, blob.data, prob, BATCH_SIZE);

doInference(*context, mydata, prob, BATCH_SIZE);

// Postprocess the inference results

cv::Mat preds = cv::Mat(BATCH_SIZE, OUTPUT_SIZE, CV_32FC1, (float*)prob);

softmax(preds, preds);

Point minLoc, maxLoc;

double minValue = 0, maxValue = 0;

cv::minMaxLoc(preds, &minValue, &maxValue, &minLoc, &maxLoc);

int labelIndex = maxLoc.x;

double probability = maxValue;

className = classNameList[labelIndex];

confidence = probability;

std::cout << "class:" << className << endl << "confidence:" << confidence << endl;

auto end = chrono::high_resolution_clock::now();

auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start);

std::cout << "Inference time by TensorRT:" << (ms / 1000.0).count() << "ms" << std::endl;

return true;

}

// GoogLeNet batch inference

bool ModelInference_Batch(std::vector<cv::Mat> srcImages, std::vector<std::string>& classNames, std::vector<float>& confidences)

{

auto start = std::chrono::high_resolution_clock::now();

if (srcImages.size() != BATCH_SIZE) return false;

// Preprocessing (resize, channel conversion, normalization)

std::vector<cv::Mat> images;

for (size_t i = 0; i < srcImages.size(); i++)

{

cv::Mat image = srcImages[i].clone();

cv::cvtColor(image, image, cv::COLOR_BGR2RGB);

cv::resize(image, image, cv::Size(224, 224));

image.convertTo(image, CV_32FC3, 1.0 / 255.0);

cv::Scalar mean(0.485, 0.456, 0.406);

cv::Scalar std(0.229, 0.224, 0.225);

cv::subtract(image, mean, image);

cv::divide(image, std, image);

images.push_back(image);

}

// Convert the images to a blob

// cv::Mat blob = cv::dnn::blobFromImages(images);

// The manual HWC -> CHW copy below is faster than blobFromImages above

for (int b = 0; b < BATCH_SIZE; b++)

{

cv::Mat image = images[b];

for (int r = 0; r < INPUT_H; r++)

{

float* rowData = image.ptr<float>(r);

for (int c = 0; c < INPUT_W; c++)

{

mydata[b * CHANNEL * INPUT_H * INPUT_W + 0 * INPUT_H * INPUT_W + r * INPUT_W + c] = rowData[CHANNEL * c];

mydata[b * CHANNEL * INPUT_H * INPUT_W + 1 * INPUT_H * INPUT_W + r * INPUT_W + c] = rowData[CHANNEL * c + 1];

mydata[b * CHANNEL * INPUT_H * INPUT_W + 2 * INPUT_H * INPUT_W + r * INPUT_W + c] = rowData[CHANNEL * c + 2];

}

}

}

auto end1 = std::chrono::high_resolution_clock::now();

auto ms1 = std::chrono::duration_cast<std::chrono::microseconds>(end1 - start);

std::cout << "PreProcess time: " << (ms1 / 1000.0).count() << "ms" << std::endl;

// Run inference

doInference(*context, mydata, prob, BATCH_SIZE);

auto end2 = std::chrono::high_resolution_clock::now();

auto ms2 = std::chrono::duration_cast<std::chrono::microseconds>(end2 - end1);

std::cout << "Inference time: " << (ms2 / 1000.0).count() << "ms" << std::endl;

// Postprocess the inference results

cv::Mat result = cv::Mat(BATCH_SIZE, OUTPUT_SIZE, CV_32FC1, (float*)prob);

softmax(result, result);

for (int r = 0; r < BATCH_SIZE; r++)

{

cv::Mat scores = result.row(r).clone();

cv::Point minLoc, maxLoc;

double minValue = 0, maxValue = 0;

cv::minMaxLoc(scores, &minValue, &maxValue, &minLoc, &maxLoc);

int labelIndex = maxLoc.x;

double probability = maxValue;

classNames.push_back(classNameList[labelIndex]);

confidences.push_back(probability);

}

auto end3 = std::chrono::high_resolution_clock::now();

auto ms3 = std::chrono::duration_cast<std::chrono::microseconds>(end3 - end2);

std::cout << "PostProcess time: " << (ms3 / 1000.0).count() << "ms" << std::endl;

auto ms = std::chrono::duration_cast<std::chrono::microseconds>(end3 - start);

std::cout << "TensorRT batch" << BATCH_SIZE << " inference time: " << (ms / 1000.0).count() << "ms" << std::endl;

return true;

}

int main(int argc, char** argv)

{

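// Convert ONNX to engine

// onnx_to_engine(onnxPath, enginePath, 0);

// Initialize the model

ModelInit(enginePath, 0);

// Collect the image paths

vector<string> filenames;

cv::glob(imagePath, filenames);

// Single-image inference test

for (int n = 0; n < filenames.size(); n++)

{

// Run 101 times and average the last 100 (the first run is a warm-up)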

auto start = chrono::high_resolution_clock::now();

cv::Mat src = imread(filenames[n]);

std::string className;

float confidence;

for (int i = 0; i < 101; i++) {

if (i == 1)

start = chrono::high_resolution_clock::now();

ModelInference(src, className, confidence);

}

auto end = chrono::high_resolution_clock::now();

auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start) / 100;

std::cout << "TensorRT average inference time:---------------------" << (ms / 1000.0).count() << "ms" << std::endl;

}

// Batch inference test

std::vector<cv::Mat> srcImages;

int okNum = 0, ngNum = 0;

for (int n = 0; n < filenames.size(); n++)

{

cv::Mat image = imread(filenames[n]);

srcImages.push_back(image);

if ((n + 1) % BATCH_SIZE == 0 || n == filenames.size() - 1)

{

// Run 101 times and average the last 100 (the first run is a warm-up)

auto start = chrono::high_resolution_clock::now();

for (int i = 0; i < 101; i++) {

if (i == 1)

start = chrono::high_resolution_clock::now();

std::vector<std::string> classNames;

std::vector<float> confidences;

ModelInference_Batch(srcImages, classNames, confidences);

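// Count the results only on the last repetition so the timing loop does not inflate the totals

for (int j = 0; i == 100 && j < classNames.size(); j++)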

{

if (classNames[j] == "0")

okNum++;

else

ngNum++;

}

}

srcImages.clear();

auto end = chrono::high_resolution_clock::now();

auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start) / 100;

std::cout << "TensorRT batch" << BATCH_SIZE << " average inference time:---------------------" << (ms / 1000.0).count() << "ms" << std::endl;

}

}

GpuMemoryRelease();

std::cout << "all_num = " << filenames.size() << endl << "okNum = " << okNum << endl << "ngNum = " << ngNum << endl;

return 0;

}
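The postprocessing above applies a row-wise softmax to the raw scores, one row per image in the batch. As a minimal sketch of the same computation without OpenCV, the function below works on a flat row-major buffer and subtracts each row's maximum before exponentiating, which keeps exp() from overflowing on large scores. The name softmaxRows is hypothetical, and the sketch assumes cols > 0 and logits.size() == rows * cols.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of the original code): row-wise softmax over
// a row-major [rows x cols] buffer of raw scores.
// Assumes cols > 0 and logits.size() == rows * cols.
std::vector<float> softmaxRows(const std::vector<float>& logits,
                               std::size_t rows, std::size_t cols) {
    std::vector<float> probs(logits.size());
    for (std::size_t r = 0; r < rows; ++r) {
        const float* in = logits.data() + r * cols;
        float* out = probs.data() + r * cols;

        // Subtract the row maximum so the largest exponent is exp(0) = 1.
        const float rowMax = *std::max_element(in, in + cols);
        float sum = 0.0f;
        for (std::size_t c = 0; c < cols; ++c) {
            out[c] = std::exp(in[c] - rowMax);
            sum += out[c];
        }
        // Normalize so each row sums to 1.
        for (std::size_t c = 0; c < cols; ++c) out[c] /= sum;
    }
    return probs;
}
```

The predicted class of image r is then the index of the largest value in row r, and that value is its confidence.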

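5.3 FP32 vs. FP16 comparison

The FP16 engine produces results almost identical to those of the FP32 engine, while using considerably less GPU and host memory and running noticeably faster.

6. Deploying GoogLeNet with OpenVINO

6.1 Inference workflow and code

Code:

/* Inference workflow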

* 1. Create OpenVINO-Runtime Core

* 2. Compile Model

* 3. Create Inference Request

* 4. Set Inputs

* 5. Start Inference

* 6. Process inference Results

*/

#include <opencv2/opencv.hpp>

#include <openvino/openvino.hpp>

#include <inference_engine.hpp>

#include <chrono>

#include <fstream>

using namespace std;

using namespace InferenceEngine;

using namespace cv;

std::string onnxPath = "E:/inference-master/models/GoogLeNet/googlenet-pretrained_batch1.onnx";

std::string imagePath = "E:/inference-master/images/catdog";

std::string classNamesPath = "E:/inference-master/imagenet-classes.txt"; // file listing the class names

ov::InferRequest inferRequest;

std::vector<std::string> classNameList; // class names, read from the file above

int batchSize = 1;

// softmax over a std::vector

std::vector<float> softmax(std::vector<float> input)

{

float total = 0;

for (auto x : input)

total += exp(x);

std::vector<float> result;

for (auto x : input)

result.push_back(exp(x) / total);

return result;

}

// softmax over a cv::Mat

int softmax(const cv::Mat& src, cv::Mat& dst)

{

float max = 0.0;

float sum = 0.0;

max = *max_element(src.begin<float>(), src.end<float>());

cv::exp((src - max), dst);

sum = cv::sum(dst)[0];

dst /= sum;

return 0;

}

// Initialize the model

void ModelInit(string onnxPath)

{

// Step 1: Create a Core object

ov::Core core;

// Print the available devices

std::vector<std::string> availableDevices = core.get_available_devices();

for (int i = 0; i < availableDevices.size(); i++)

printf("supported device name: %s\n", availableDevices[i].c_str());

// Step 2: Read the model

std::shared_ptr<ov::Model> model = core.read_model(onnxPath);

// Step 3: Compile the model for the CPU

ov::CompiledModel compiled_model = core.compile_model(model, "CPU");

// Optional tuning attempts, left disabled. Properties set on the core apply to models compiled afterwards, so these calls would likely need to precede compile_model.

// Set the number of inference streams to 10

//core.set_property("CPU", ov::streams::num(10));

// Let OpenVINO choose the number of streams automatically

//core.set_property("CPU", ov::streams::num(ov::streams::AUTO));

// Allocate the streams according to the NUMA layout

//core.set_property("CPU", ov::streams::num(ov::streams::NUMA));

// Set the number of inference threads to 20

// core.set_property("CPU", ov::inference_num_threads(20));

// Step 4: Create the infer request

ifstream fin(classNamesPath.c_str());

string strLine;

classNameList.clear();

while (getline(fin, strLine))

classNameList.push_back(strLine);

fin.close();

}

// Single-image inference

void ModelInference(cv::Mat srcImage, std::string& className, float& confidence )

{

auto start = chrono::high_resolution_clock::now();

// Step 5: Fill the input tensor with the input data

// Get the input tensor by index

ov::Tensor input_tensor = inferRequest.get_input_tensor(0);

// Or get the input tensor by name

// ov::Tensor input_tensor = inferRequest.get_tensor("input");

// Preprocessing

cv::Mat image = srcImage.clone();

cv::cvtColor(image, image, cv::COLOR_BGR2RGB);

resize(image, image, Size(224, 224));

image.convertTo(image, CV_32FC3, 1.0 / 255.0);

Scalar mean(0.485, 0.456, 0.406);

Scalar std(0.229, 0.224, 0.225);

subtract(image, mean, image);

divide(image, std, image);

// HWC -> NCHW

ov::Shape tensor_shape = input_tensor.get_shape();

const size_t channels = tensor_shape[1];

const size_t height = tensor_shape[2];

const size_t width = tensor_shape[3];

float* image_data = input_tensor.data<float>();

for (size_t r = 0; r < height; r++) {

for (size_t c = 0; c < width * channels; c++) {

int w = (r * width * channels + c) / channels;

int mod = (r * width * channels + c) % channels; // 0,1,2

image_data[mod * width * height + w] = image.at<float>(r, c);

}

}

// --------------- Step 6: Start inference ---------------

inferRequest.infer();

// --------------- Step 7: Process the inference results ---------------

// model has only one output

auto output_tensor = inferRequest.get_output_tensor();

float* detection = (float*)output_tensor.data();

ov::Shape out_shape = output_tensor.get_shape();

int batch = output_tensor.get_shape()[0];

int num_classes = output_tensor.get_shape()[1];

cv::Mat result(batch, num_classes, CV_32F, detection);

softmax(result, result);

Point minLoc, maxLoc;

double minValue = 0, maxValue = 0;

cv::minMaxLoc(result, &minValue, &maxValue, &minLoc, &maxLoc);

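int labelIndex = maxLoc.x;

double probability = maxValue;

// Return the result through the output parameters

className = classNameList[labelIndex];

confidence = static_cast<float>(probability);

auto end = chrono::high_resolution_clock::now();

auto ms = chrono::duration_cast<std::chrono::milliseconds>(end - start);

std::cout << "OpenVINO single-image inference time: " << ms.count() << "ms" << std::endl;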

}

// Multi-image batch inference (the batch size is fixed when the ONNX model is exported)

void ModelInference_Batch(std::vector<cv::Mat> srcImages, std::vector<string>& classNames, std::vector<float>& confidences)

{

auto start = chrono::high_resolution_clock::now();

// Step 5: Fill the input tensor with the input data

// Get the input tensor by index

ov::Tensor input_tensor = inferRequest.get_input_tensor(0);

// Or get the input tensor by name

// ov::Tensor input_tensor = inferRequest.get_tensor("input");

// Preprocessing (resize, channel conversion, normalization)

std::vector<cv::Mat> images;

for (size_t i = 0; i < srcImages.size(); i++)

{

cv::Mat image = srcImages[i].clone();

cv::cvtColor(image, image, cv::COLOR_BGR2RGB);

cv::resize(image, image, cv::Size(224, 224));

image.convertTo(image, CV_32FC3, 1.0 / 255.0);

cv::Scalar mean(0.485, 0.456, 0.406);

cv::Scalar std(0.229, 0.224, 0.225);

cv::subtract(image, mean, image);

cv::divide(image, std, image);

images.push_back(image);

}

ov::Shape tensor_shape = input_tensor.get_shape();

const size_t batch = tensor_shape[0];

const size_t channels = tensor_shape[1];

const size_t height = tensor_shape[2];

const size_t width = tensor_shape[3];

float* image_data = input_tensor.data<float>();

// Convert the images to a blob (faster than the per-pixel copy commented out below)

cv::Mat blob = cv::dnn::blobFromImages(images);

memcpy(image_data, blob.data, batch * 3 * height * width * sizeof(float));

// NHWC -> NCHW

//for (size_t b = 0; b < batch; b++){

// for (size_t r = 0; r < height; r++) {

// for (size_t c = 0; c < width * channels; c++) {

// int w = (r * width * channels + c) / channels;

// int mod = (r * width * channels + c) % channels; // 0,1,2

// image_data[b * 3 * width * height + mod * width * height + w] = images[b].at<float>(r, c);

// }

// }

//}

auto end1 = std::chrono::high_resolution_clock::now();

auto ms1 = std::chrono::duration_cast<std::chrono::microseconds>(end1 - start);

std::cout << "PreProcess time: " << (ms1 / 1000.0).count() << "ms" << std::endl;

// --------------- Step 6: Start inference ---------------

inferRequest.infer();

auto end2 = std::chrono::high_resolution_clock::now();

auto ms2 = std::chrono::duration_cast<std::chrono::microseconds>(end2 - end1);

std::cout << "Inference time: " << (ms2 / 1000.0).count() << "ms" << std::endl;

// --------------- Step 7: Process the inference results ---------------

// model has only one output

auto output_tensor = inferRequest.get_output_tensor();

float* detection = (float*)output_tensor.data();

ov::Shape out_shape = output_tensor.get_shape();

int num_classes = output_tensor.get_shape()[1];

cv::Mat output(batch, num_classes, CV_32F, detection);

int rows = output.size[0]; // batch

int cols = output.size[1]; // number of classes (one score per class)

for (int row = 0; row < rows; row++)

{

cv::Mat scores(1, cols, CV_32FC1, output.ptr<float>(row));

softmax(scores, scores); // normalize the scores to probabilities

Point minLoc, maxLoc;

double minValue = 0, maxValue = 0;

cv::minMaxLoc(scores, &minValue, &maxValue, &minLoc, &maxLoc);

int labelIndex = maxLoc.x;

double probability = maxValue;

classNames.push_back(classNameList[labelIndex]);

confidences.push_back(probability);

}

auto end3 = std::chrono::high_resolution_clock::now();

auto ms3 = std::chrono::duration_cast<std::chrono::microseconds>(end3 - end2);

std::cout << "PostProcess time: " << (ms3 / 1000.0).count() << "ms" << std::endl;

auto ms = chrono::duration_cast<std::chrono::milliseconds>(end3 - start);

std::cout << "OpenVINO batch inference time: " << ms.count() << "ms" << std::endl;

}

int main(int argc, char** argv)

{

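// Initialize the model

ModelInit(onnxPath);

// Collect the image paths

vector<string> filenames;

glob(imagePath, filenames);

// Single-image inference test

for (int n = 0; n < filenames.size(); n++)

{

// Run 101 times and average the last 100 (the first run is a warm-up)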

auto start = chrono::high_resolution_clock::now();

for (int i = 0; i < 101; i++) {

if (i == 1)

start = chrono::high_resolution_clock::now();

cv::Mat src = imread(filenames[n]);

std::string className;

float confidence;

ModelInference(src, className, confidence);

}

auto end = chrono::high_resolution_clock::now();

auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start) / 100.0;

std::cout << "OpenVINO single-image average inference time:---------------------" << (ms / 1000.0).count() << "ms" << std::endl;

}

std::vector<cv::Mat> srcImages;

for (int i = 0; i < filenames.size(); i++)

{

cv::Mat image = imread(filenames[i]);

srcImages.push_back(image);

if ((i + 1) % batchSize == 0 || i == filenames.size() - 1)

{

// Run 101 times and average the last 100 (the first run is a warm-up)

auto start = chrono::high_resolution_clock::now();

for (int i = 0; i < 101; i++) {

if (i == 1)

start = chrono::high_resolution_clock::now();

std::vector<std::string> classNames;

std::vector<float> confidences;

ModelInference_Batch(srcImages, classNames, confidences);

}

srcImages.clear();

auto end = chrono::high_resolution_clock::now();

auto ms = chrono::duration_cast<std::chrono::microseconds>(end - start) / 100;

std::cout << "OpenVINO batch" << batchSize << " average inference time:---------------------" << (ms / 1000.0).count() << "ms" << std::endl;

}

}

return 0;

}
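Both the TensorRT and the OpenVINO code above copy each preprocessed image from OpenCV's interleaved HWC layout (all channels of a pixel stored together) into the planar CHW layout the network input expects. As a minimal sketch of that index mapping without OpenCV, the function below converts one flat image buffer; the name hwcToChw is hypothetical, and the sketch assumes hwc.size() == height * width * channels.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of the original code): convert one image
// from interleaved HWC layout to planar CHW layout.
// Assumes hwc.size() == height * width * channels.
std::vector<float> hwcToChw(const std::vector<float>& hwc,
                            std::size_t height, std::size_t width,
                            std::size_t channels) {
    std::vector<float> chw(hwc.size());
    for (std::size_t h = 0; h < height; ++h) {
        for (std::size_t w = 0; w < width; ++w) {
            for (std::size_t c = 0; c < channels; ++c) {
                // Source: pixel (h, w), channel c, channels interleaved.
                // Destination: plane c, then row h, then column w.
                chw[c * height * width + h * width + w] =
                    hwc[(h * width + w) * channels + c];
            }
        }
    }
    return chw;
}
```

For a batch, image b would be written at offset b * channels * height * width in the destination buffer, which matches the indexing used in the batch loops of the original code.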

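Note: OpenVINO supports multi-image batch inference, but the batch size must be set to a fixed value when the ONNX model is exported. An ONNX file with a dynamic batch dimension (batch = -1) fails with an error.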
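With a fixed batch size, a trailing group of images that does not fill a whole batch needs special handling; the TensorRT batch function earlier, for example, returns false when the number of images differs from BATCH_SIZE. One possible approach, shown here only as a sketch and not taken from the original code, is to pad the last batch by repeating the final image and to discard the padded results afterwards. The name makeFixedBatches is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of the original code): split item indices
// 0..count-1 into batches of exactly batchSize entries. The last batch is
// padded by repeating the final index, so every batch has the fixed size.
std::vector<std::vector<std::size_t>> makeFixedBatches(std::size_t count,
                                                       std::size_t batchSize) {
    std::vector<std::vector<std::size_t>> batches;
    if (count == 0 || batchSize == 0) return batches;

    for (std::size_t start = 0; start < count; start += batchSize) {
        std::vector<std::size_t> batch;
        for (std::size_t k = 0; k < batchSize; ++k) {
            const std::size_t idx = start + k;
            // Past the end of the data: repeat the last valid index as padding.
            batch.push_back(idx < count ? idx : count - 1);
        }
        batches.push_back(batch);
    }
    return batches;
}
```

With 5 images and a batch size of 2, this yields the index batches {0, 1}, {2, 3}, and {4, 4}; the caller would keep only the first result of the last batch.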

6.2 Problems encountered

In theory: OpenVINO should be the best option for CPU inference.

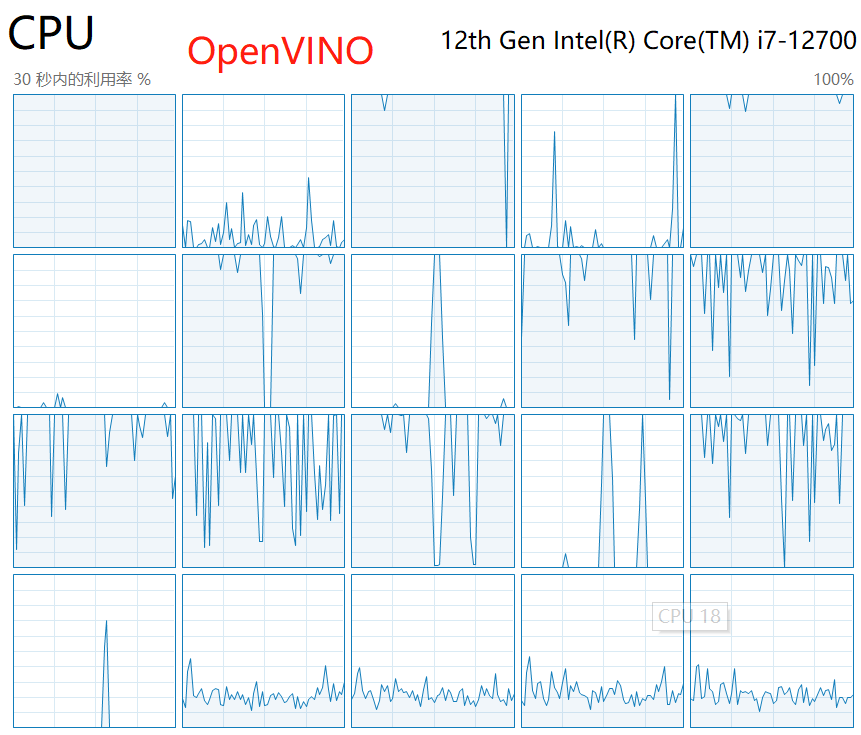

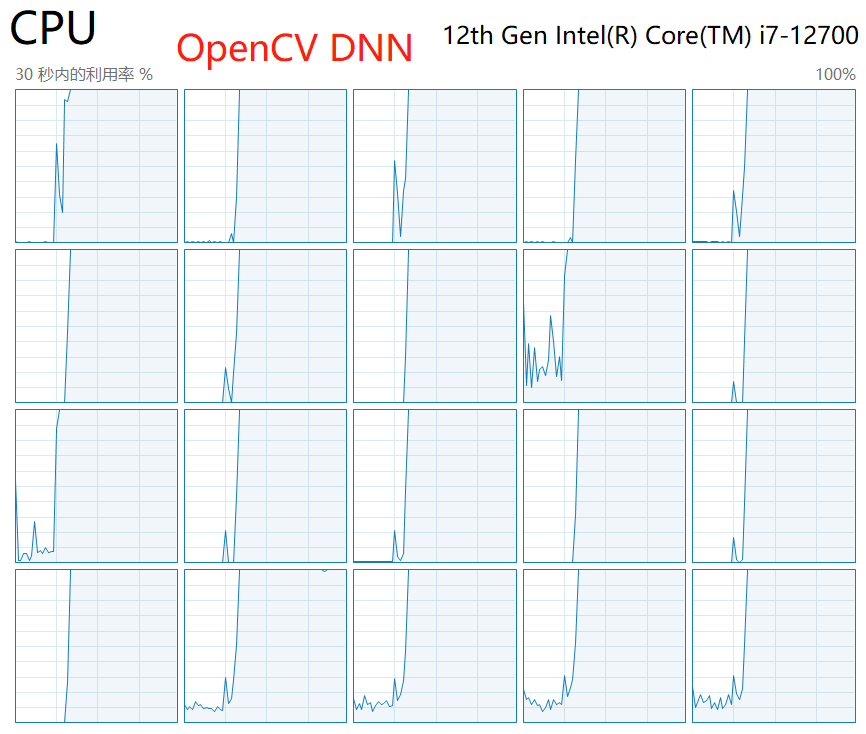

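In practice: while testing OpenVINO, we found that its CPU utilization was far lower than that of OpenCV DNN and ONNXRuntime. This is the main reason why, as the batch size grows, OpenVINO's CPU inference falls behind OpenCV DNN and ONNXRuntime. We tried several optimizations found online, such as setting the number of threads and parallel streams, without any improvement. As the screenshots show, only half of the CPUs are active during OpenVINO inference, whereas all of them are active during ONNXRuntime or OpenCV DNN inference.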

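7. Comparison of the four inference methods

The common CPU/GPU inference options in deep learning are OpenCV DNN, ONNXRuntime, TensorRT, and OpenVINO. Their inference workflows can all be summarized by the figure below. The workflow splits into a model initialization part and an inference part, and the latter covers steps 2-5.

Taking GoogLeNet as the example, the measured time of the inference part for each method is as follows: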

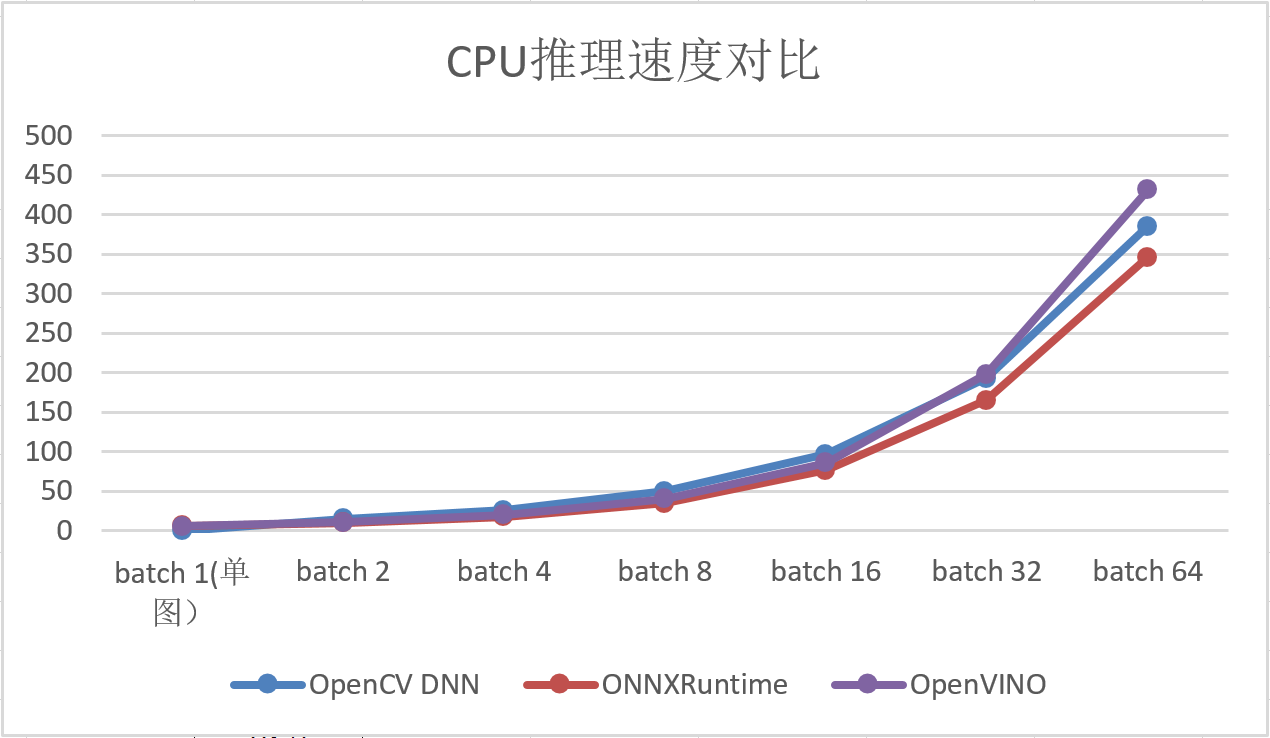

CPU inference:

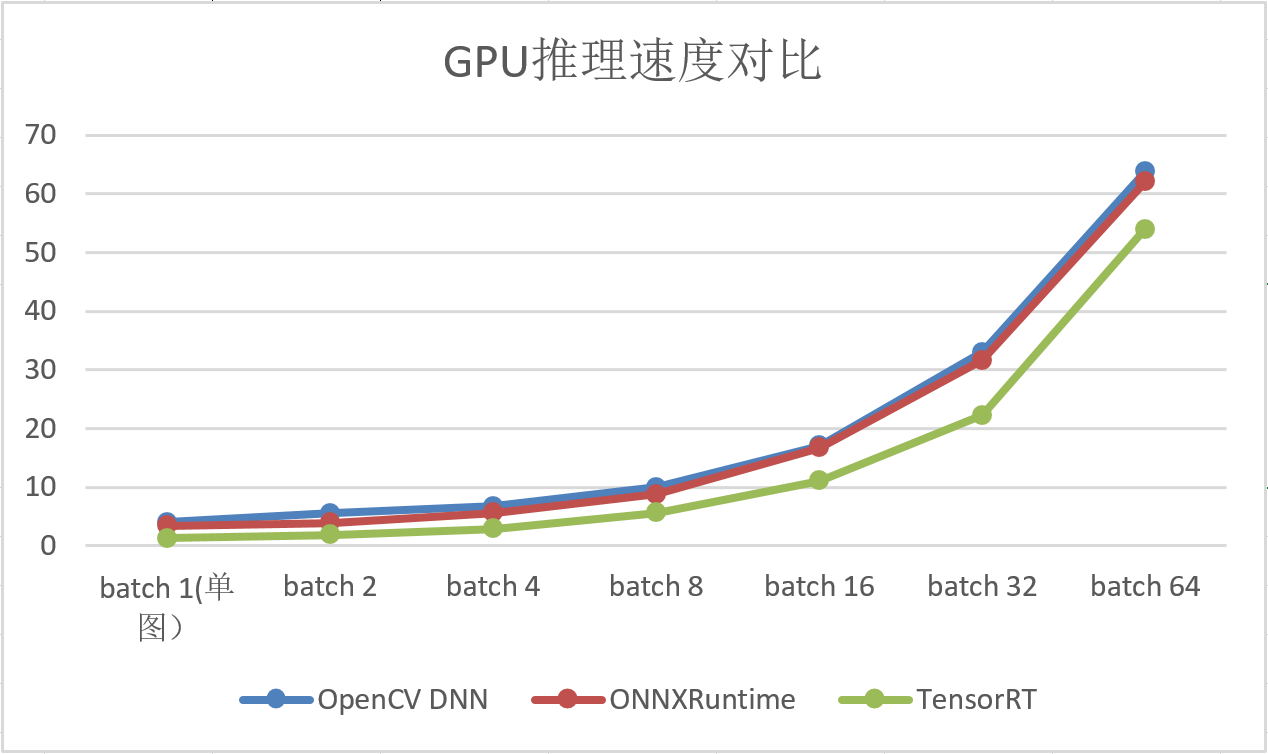

GPU inference:

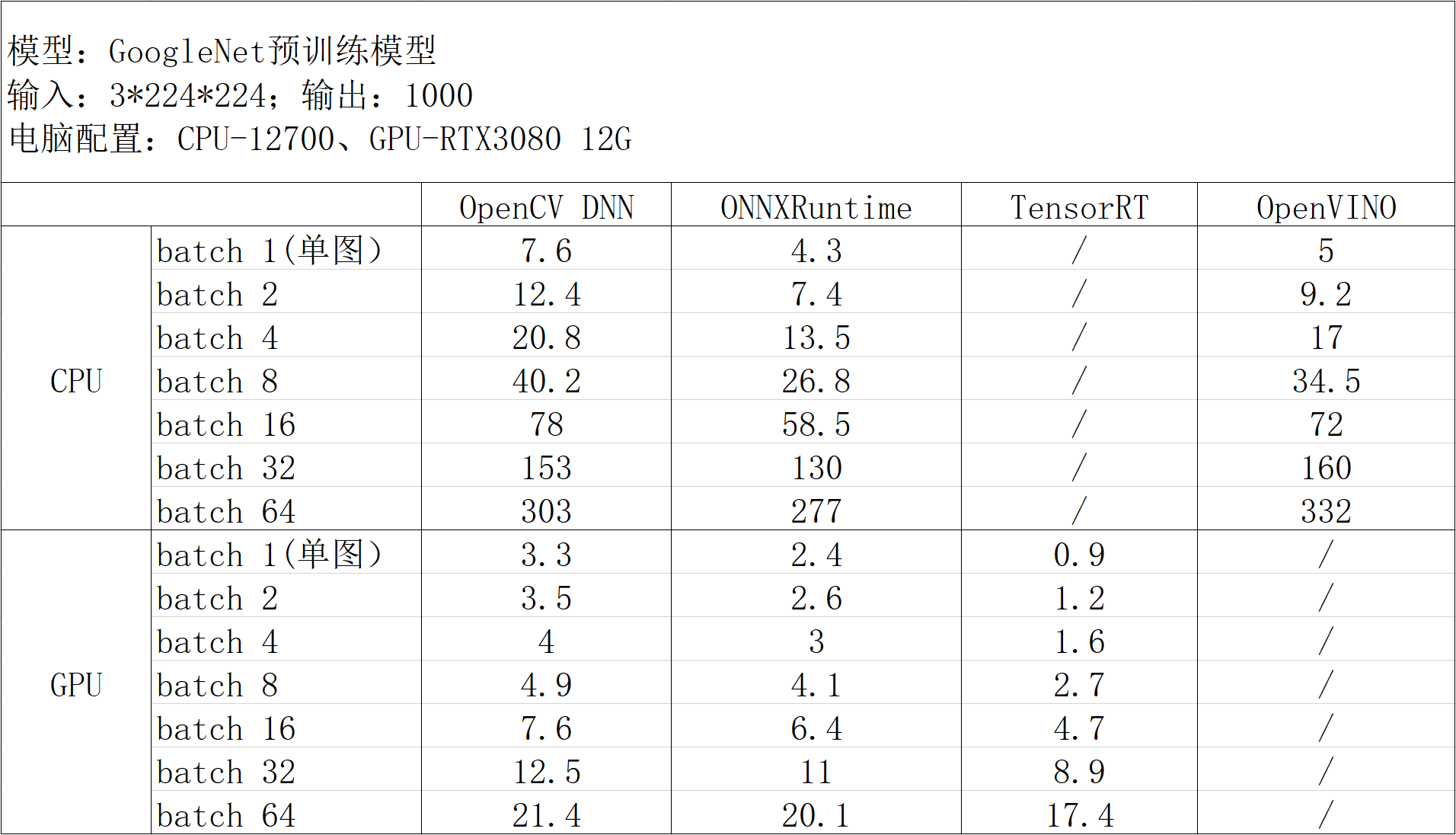

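Whichever inference method is used, the preprocessing and postprocessing for a given network are essentially the same. To compare the speed of the methods more directly, we therefore exclude preprocessing and postprocessing and measure only the actual inference part in the figure, that is, the execution time of steps 3, 4, and 5.

For the same GoogLeNet network, the execution times of steps 3-5 compare as follows:

Note: the OpenVINO CPU test only ever used half of the cores, and none of the optimization settings we tried changed that.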
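The averages in this comparison come from the pattern used in the test loops earlier: 101 calls, with the timer restarted after the first call so that the one-off warm-up cost is excluded and the remaining 100 calls are averaged. A minimal sketch of that measurement as a reusable function follows; the name averageMs is hypothetical, and std::chrono::steady_clock is used here as an assumption in place of the high_resolution_clock in the original code.

```cpp
#include <chrono>
#include <functional>

// Hypothetical helper (not part of the original code): call fn once as an
// untimed warm-up, then return the mean wall time in milliseconds over
// `runs` timed calls. Returns 0 without calling fn if runs is not positive.
double averageMs(const std::function<void()>& fn, int runs) {
    if (runs <= 0) return 0.0;

    fn();  // warm-up call, excluded from the measurement

    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < runs; ++i) fn();
    const auto end = std::chrono::steady_clock::now();

    // Convert the elapsed time to fractional milliseconds before averaging.
    const std::chrono::duration<double, std::milli> total = end - start;
    return total.count() / runs;
}
```

To time only steps 3-5, fn would wrap just the inference call, with preprocessing done beforehand and postprocessing afterwards.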

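Final conclusions:

- For GPU acceleration, TensorRT is the first choice;

- For CPU acceleration, OpenVINO is the first choice for single-image inference, and ONNXRuntime is an option for multi-image batch inference;

- If both CPU and GPU inference are needed, ONNXRuntime is an option.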
