Deep Learning: LSTM Networks, Usage, and a Hands-On Sentiment Classification Problem

2023-04-26 15:00:42


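1. LSTM Basics

Long Short-Term Memory (LSTM) networks are a type of RNN designed to address the long-term dependency problem: a plain RNN struggles to carry information across many time steps because its gradients tend to vanish or explode during training.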

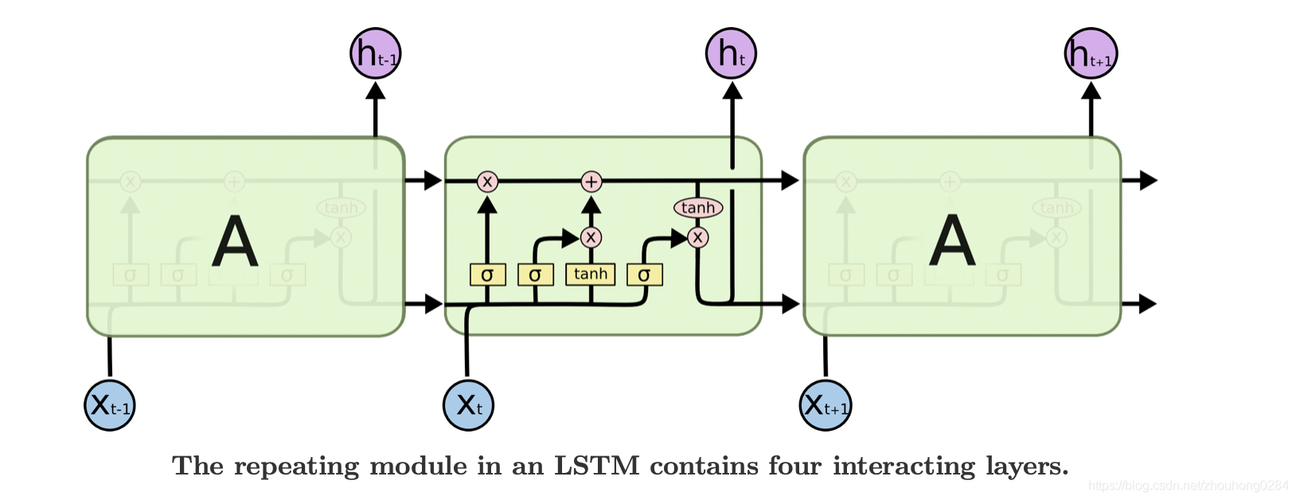

The basic structure of an LSTM:

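2. LSTM in Detail

Compared with a plain RNN, an LSTM adds three gates to the state update. From left to right, they are the forget gate (also called the memory gate), the input gate, and the output gate.

Image source:

1. Element-wise multiplication determines how much information passes through: where the gate value is 0, nothing passes; where it is 1, everything passes.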

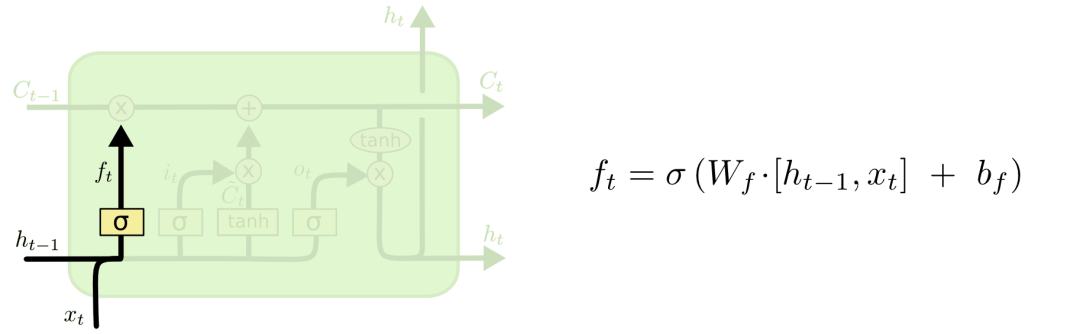
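As a small illustration of this gating (a sketch with made-up values, not taken from the original figures), multiplying a signal element-wise by a gate in [0, 1] scales each entry independently:

```python
import torch

# Hypothetical values chosen only for illustration.
info = torch.tensor([2.0, 4.0, 6.0])   # information flowing along the line
gate = torch.tensor([0.0, 0.5, 1.0])   # gate values in [0, 1], e.g. from a sigmoid

passed = gate * info                    # element-wise product
print(passed)  # tensor([0., 2., 6.])
```

The first entry is blocked, the second is halved, and the third passes unchanged.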

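2.1 Forget Gate

Given the inputs x_t and h_{t-1}, the forget gate outputs values in [0, 1] that are multiplied element-wise into C_{t-1}. A value of 0 discards the corresponding entry entirely; a value of 1 keeps it entirely.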

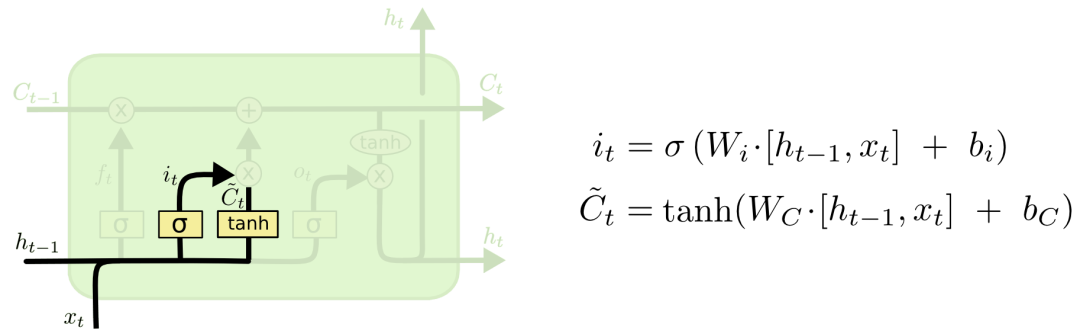

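2.2 Input Gate

Given the inputs x_t and h_{t-1}, the input gate decides which new information to keep, while a tanh activation produces the temporary (candidate) cell state C̃_t.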

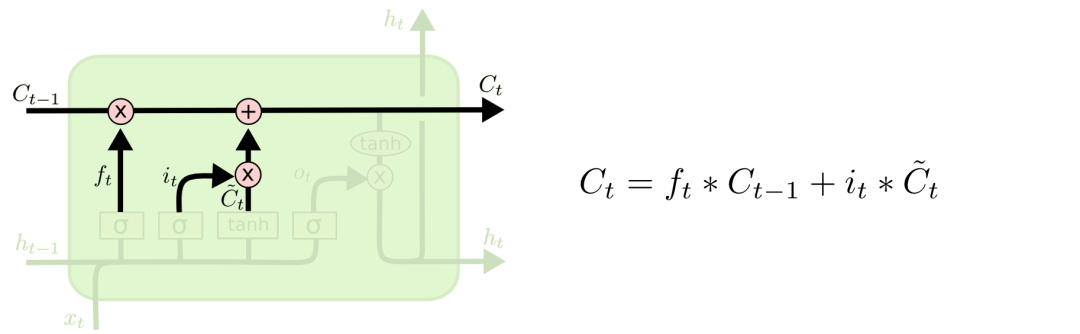

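The forget gate's output (applied to C_{t-1}) and the input gate's output (applied to the candidate state) are then summed to give the final C_t.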

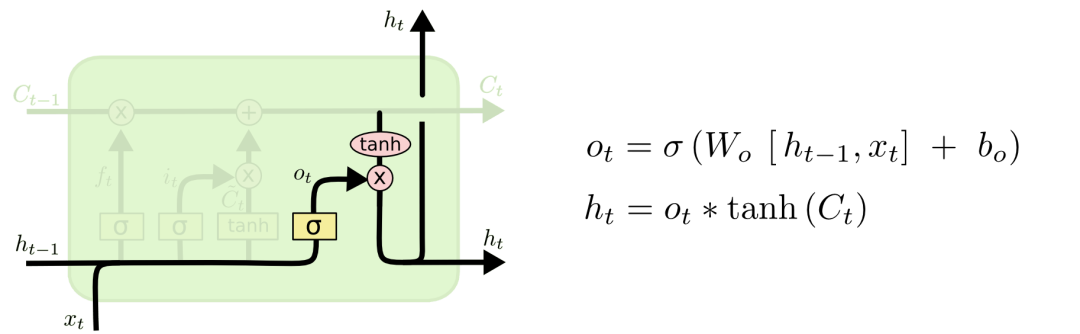
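For reference, the forget gate, input gate, and cell-state update are usually written as follows. This is the standard LSTM formulation rather than something stated in the text; the symbols f_t, i_t, W, and b are assumed here, and [h_{t-1}, x_t] denotes concatenation:

```latex
\begin{aligned}
f_t &= \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \\
\tilde{C}_t &= \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t
\end{aligned}
```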

2.3 Output Gate

The output gate, together with C_t, produces the updated memory, that is, the new hidden state h_t.
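The three gates can be put together in a few lines. The sketch below is a hand-written single LSTM step checked against nn.LSTMCell. The helper name manual_lstm_step is hypothetical, and the code relies on PyTorch's documented weight layout, in which the cell stacks the input, forget, candidate, and output blocks in that order.

```python
import torch
import torch.nn as nn

def manual_lstm_step(cell, x_t, h_prev, c_prev):
    """Hypothetical helper: one LSTM step computed by hand from cell's weights."""
    # All four pre-activations at once: [batch, 4 * hidden_size]
    gates = (x_t @ cell.weight_ih.T + cell.bias_ih
             + h_prev @ cell.weight_hh.T + cell.bias_hh)
    # PyTorch stacks the blocks as input, forget, candidate, output
    i, f, g, o = gates.chunk(4, dim=1)
    i, f, o = torch.sigmoid(i), torch.sigmoid(f), torch.sigmoid(o)
    g = torch.tanh(g)                   # candidate cell state
    c_t = f * c_prev + i * g            # forget old memory, add new
    h_t = o * torch.tanh(c_t)           # output gate filters the memory
    return h_t, c_t

torch.manual_seed(0)
cell = nn.LSTMCell(input_size=100, hidden_size=20)
x_t = torch.randn(3, 100)
h_prev, c_prev = torch.randn(3, 20), torch.randn(3, 20)

with torch.no_grad():
    h_ref, c_ref = cell(x_t, (h_prev, c_prev))
    h_man, c_man = manual_lstm_step(cell, x_t, h_prev, c_prev)

print(torch.allclose(h_ref, h_man, atol=1e-5),
      torch.allclose(c_ref, c_man, atol=1e-5))
```

Both comparisons should agree up to floating-point tolerance, which confirms that the gate descriptions above match what the library computes.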

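3. Using LSTM in PyTorch

- __init__(input_size, hidden_size, num_layers)

- LSTM.forward():

out, (h_t, c_t) = lstm(x, (h_{t-1}, c_{t-1}))

x: [seq_len (words per sentence), batch (number of sentences), input_size (dimension of each word vector)]

h/c: [num_layers, batch, hidden_size (dimension of the memory)]

out: [seq_len, batch, hidden_size]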

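import torch
import torch.nn as nn

# 4 stacked LSTM layers: 100-dim input features, 20-dim hidden state
lstm = nn.LSTM(input_size=100, hidden_size=20, num_layers=4)
print(lstm)
# LSTM(100, 20, num_layers=4)

# x: [seq_len=10, batch=3, input_size=100]
x = torch.randn(10, 3, 100)
# With no initial state given, h and c default to zeros
out, (h, c) = lstm(x)
print(out.shape, h.shape, c.shape)
# torch.Size([10, 3, 20]) torch.Size([4, 3, 20]) torch.Size([4, 3, 20])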
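The initial state can also be passed explicitly, matching the forward signature described above. The sketch below (the names h0 and c0 are assumed) also checks one relationship worth knowing: for a unidirectional LSTM, the last time step of out is the final hidden state of the top layer.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
lstm = nn.LSTM(input_size=100, hidden_size=20, num_layers=4)
x = torch.randn(10, 3, 100)             # [seq_len, batch, input_size]

# Explicit initial states: [num_layers, batch, hidden_size]
h0 = torch.zeros(4, 3, 20)
c0 = torch.zeros(4, 3, 20)
out, (h, c) = lstm(x, (h0, c0))

# out holds the top layer's hidden state at every time step,
# so its last step matches h[-1] (top layer, final step).
print(torch.allclose(out[-1], h[-1]))
```

This is why many classifiers can use either out[-1] or h[-1] as the sequence summary when the LSTM runs in one direction only.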

Single-layer usage, stepping through time manually with nn.LSTMCell:

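# One LSTM cell: processes a single time step per call
cell = nn.LSTMCell(input_size=100, hidden_size=20)
x = torch.randn(10, 3, 100)
# Initial hidden and cell states: [batch, hidden_size]
h = torch.zeros(3, 20)
c = torch.zeros(3, 20)
# Iterate over the 10 time steps; each xt is [batch=3, input_size=100]
for xt in x:
    h, c = cell(xt, (h, c))
print(h.shape, c.shape)
# torch.Size([3, 20]) torch.Size([3, 20])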
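The same loop extends to several layers by feeding one cell's hidden state into the next. A minimal sketch, assuming two cells with hidden sizes 30 and 20 (sizes chosen arbitrarily for illustration):

```python
import torch
import torch.nn as nn

cell1 = nn.LSTMCell(input_size=100, hidden_size=30)
cell2 = nn.LSTMCell(input_size=30, hidden_size=20)

x = torch.randn(10, 3, 100)             # [seq_len, batch, input_size]
h1, c1 = torch.zeros(3, 30), torch.zeros(3, 30)
h2, c2 = torch.zeros(3, 20), torch.zeros(3, 20)

outputs = []
for xt in x:
    h1, c1 = cell1(xt, (h1, c1))        # layer 1 reads the input
    h2, c2 = cell2(h1, (h2, c2))        # layer 2 reads layer 1's hidden state
    outputs.append(h2)

out = torch.stack(outputs)              # [seq_len, batch, hidden_size]
print(out.shape, h2.shape)
# torch.Size([10, 3, 20]) torch.Size([3, 20])
```

Collecting h2 at every step reproduces the out tensor that nn.LSTM returns, while the cell-level loop leaves room to change what happens between steps.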

LSTM in Practice: Sentiment Classification

This code was run in Google Colab, which may require a VPN to access.

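import torch
from torch import nn, optim
# Legacy torchtext API (Field, LabelField, BucketIterator): torchtext 0.9 moved it
# to torchtext.legacy and 0.12 removed it, so this needs an older torchtext.
from torchtext import data, datasets

print('GPU:', torch.cuda.is_available())
torch.manual_seed(123)

# Tokenize reviews with spaCy; labels become floats for the BCE loss
TEXT = data.Field(tokenize='spacy')
LABEL = data.LabelField(dtype=torch.float)
train_data, test_data = datasets.IMDB.splits(TEXT, LABEL)

print('len of train data:', len(train_data))
print('len of test data:', len(test_data))
print(train_data.examples[15].text)
print(train_data.examples[15].label)

# Build the vocabulary and load pretrained GloVe vectors (word2vec is an alternative)
TEXT.build_vocab(train_data, max_size=10000, vectors='glove.6B.100d')
LABEL.build_vocab(train_data)

batchsz = 30
# Fall back to the CPU when no GPU is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
train_iterator, test_iterator = data.BucketIterator.splits(
    (train_data, test_data),
    batch_size=batchsz,
    device=device
)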

class RNN(nn.Module):

    def __init__(self, vocab_size, embedding_dim, hidden_dim):
        """
        vocab_size: number of tokens in the vocabulary
        embedding_dim: dimension of each word vector
        hidden_dim: dimension of the LSTM hidden state (per direction)
        """
        super(RNN, self).__init__()
        # token index in [0, 10001] => [100]
        self.embedding = nn.Embedding(vocab_size, embedding_dim)
        # [100] => [256]
        self.rnn = nn.LSTM(embedding_dim, hidden_dim, num_layers=2,
                           bidirectional=True, dropout=0.5)
        # [256*2] => [1]
        self.fc = nn.Linear(hidden_dim*2, 1)
        self.dropout = nn.Dropout(0.5)

    def forward(self, x):
        """
        x: [seq_len, b] token indices (compare an image batch: [b, 3, 28, 28])
        """
        # [seq, b] => [seq, b, 100]
        embedding = self.dropout(self.embedding(x))
        # output: [seq, b, hid_dim*2]
        # hidden/h: [num_layers*2, b, hid_dim]
        # cell/c: [num_layers*2, b, hid_dim]
        output, (hidden, cell) = self.rnn(embedding)
        # hidden[-2] and hidden[-1] are the last layer's forward and backward states
        # [num_layers*2, b, hid_dim] => 2 of [b, hid_dim] => [b, hid_dim*2]
        hidden = torch.cat([hidden[-2], hidden[-1]], dim=1)
        # [b, hid_dim*2] => [b, 1]
        hidden = self.dropout(hidden)
        out = self.fc(hidden)
        return out
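To see why forward concatenates hidden[-2] and hidden[-1], it helps to check what those slices contain. The sketch below uses a small stand-alone bidirectional LSTM with random inputs (the sizes are arbitrary, and dropout is left at 0 so the check is deterministic). It suggests that hidden[-2] is the last layer's forward direction after the final time step, and hidden[-1] is the backward direction after it has read back to the first time step.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
hid = 8
lstm = nn.LSTM(input_size=5, hidden_size=hid, num_layers=2, bidirectional=True)
x = torch.randn(7, 3, 5)                # [seq_len, batch, input_size]

output, (hidden, cell) = lstm(x)
print(output.shape, hidden.shape)
# torch.Size([7, 3, 16]) torch.Size([4, 3, 8])

# Forward direction: final state sits at the last time step of output
fwd_ok = torch.allclose(output[-1, :, :hid], hidden[-2])
# Backward direction: final state sits at the first time step of output
bwd_ok = torch.allclose(output[0, :, hid:], hidden[-1])
print(fwd_ok, bwd_ok)

# The sentence representation fed to the classifier: [batch, hid * 2]
sentence = torch.cat([hidden[-2], hidden[-1]], dim=1)
print(sentence.shape)
# torch.Size([3, 16])
```

Taking output[-1] alone would pair the forward direction's full summary with a backward state that has seen only one token, which is why the model reads from hidden instead.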

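# vocab size (10000 words + <unk> + <pad>), embedding_dim=100, hidden_dim=256
rnn = RNN(len(TEXT.vocab), 100, 256)

# Initialize the embedding layer with the pretrained GloVe vectors
pretrained_embedding = TEXT.vocab.vectors
print('pretrained_embedding:', pretrained_embedding.shape)
rnn.embedding.weight.data.copy_(pretrained_embedding)
print('embedding layer inited.')

optimizer = optim.Adam(rnn.parameters(), lr=1e-3)
# BCEWithLogitsLoss applies the sigmoid internally, so the model outputs raw logits
criteon = nn.BCEWithLogitsLoss().to(device)
rnn.to(device)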
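Because the model's last layer is a plain nn.Linear, its outputs are raw logits, and nn.BCEWithLogitsLoss applies the sigmoid itself. A quick check on made-up logits and labels shows that it matches a sigmoid followed by binary cross-entropy:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical logits and labels, for illustration only
logits = torch.tensor([2.0, -1.0, 0.5, -3.0])
labels = torch.tensor([1.0, 0.0, 0.0, 0.0])

loss_with_logits = nn.BCEWithLogitsLoss()(logits, labels)
loss_two_step = F.binary_cross_entropy(torch.sigmoid(logits), labels)

print(torch.allclose(loss_with_logits, loss_two_step, atol=1e-6))
```

The fused version is generally preferred because it is more numerically stable for large-magnitude logits.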

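import numpy as np

def binary_acc(preds, y):
    """
    Compute accuracy for a batch: preds are raw logits, y are 0/1 labels.
    """
    # sigmoid maps logits to probabilities; round thresholds them at 0.5
    preds = torch.round(torch.sigmoid(preds))
    correct = torch.eq(preds, y).float()
    acc = correct.sum() / len(correct)
    return acc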
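A worked example of the accuracy computation on made-up values (the logits and labels below are hypothetical, chosen so the arithmetic is easy to follow):

```python
import torch

def binary_acc(preds, y):
    # Same logic as above: threshold sigmoid(logit) at 0.5, compare with labels
    preds = torch.round(torch.sigmoid(preds))
    correct = torch.eq(preds, y).float()
    return correct.sum() / len(correct)

logits = torch.tensor([2.0, -1.0, 0.5, -3.0])   # hypothetical model outputs
labels = torch.tensor([1.0, 0.0, 0.0, 0.0])

# sigmoid -> about [0.88, 0.27, 0.62, 0.05]; rounded -> [1, 0, 1, 0]
# Three of the four predictions match the labels.
print(binary_acc(logits, labels).item())  # 0.75
```

Thresholding the probability at 0.5 is the same as checking whether the logit is positive, so the sigmoid here is for readability rather than necessity.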

def train(rnn, iterator, optimizer, criteon):
    avg_acc = []
    rnn.train()  # enable dropout
    for i, batch in enumerate(iterator):
        # [seq, b] => [b, 1] => [b]
        pred = rnn(batch.text).squeeze(1)
        loss = criteon(pred, batch.label)
        acc = binary_acc(pred, batch.label).item()
        avg_acc.append(acc)
        # Standard update: clear old gradients, backpropagate, step
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        if i % 10 == 0:
            print(i, acc)
    avg_acc = np.array(avg_acc).mean()
    print('avg acc:', avg_acc)

def evaluate(rnn, iterator, criteon):
    avg_acc = []
    rnn.eval()  # disable dropout
    with torch.no_grad():
        for batch in iterator:
            # [b, 1] => [b]
            pred = rnn(batch.text).squeeze(1)
            loss = criteon(pred, batch.label)
            acc = binary_acc(pred, batch.label).item()
            avg_acc.append(acc)
    avg_acc = np.array(avg_acc).mean()
    print('>>test:', avg_acc)

for epoch in range(10):
    # Evaluate before each training epoch, so the first result is the untrained baseline
    evaluate(rnn, test_iterator, criteon)
    train(rnn, train_iterator, optimizer, criteon)