Deploying a highly available k8s cluster with kubeasz

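Before deploying a highly available k8s cluster, let's first look at the single-master and multi-master architectures, and at how each component works in a multi-master setup.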

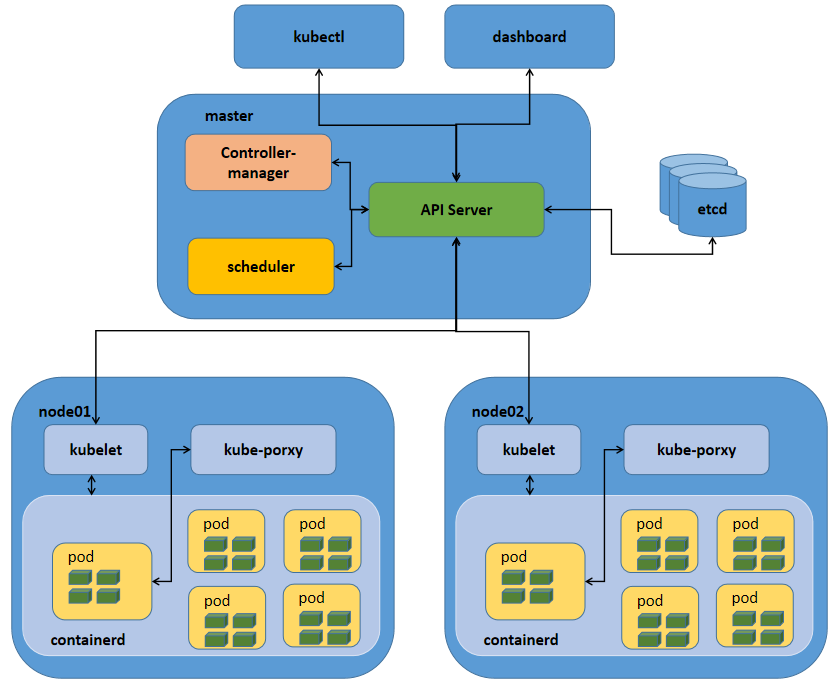

k8s single-master architecture

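Note: a single-master architecture is normally used only for testing and should never be used in production. First, the master node is a single point of failure: if it goes down, the whole cluster becomes unusable. Second, the single apiserver is a performance bottleneck. As the figure above shows, every master component, the kubelet on every node, and clients such as kubectl and the dashboard all connect to the apiserver. The apiserver also reads and writes data in etcd, and it performs authentication and admission control for every client request. With that much load on one instance, the apiserver easily becomes the bottleneck of the whole cluster. For these reasons a single-master architecture is not recommended for production.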

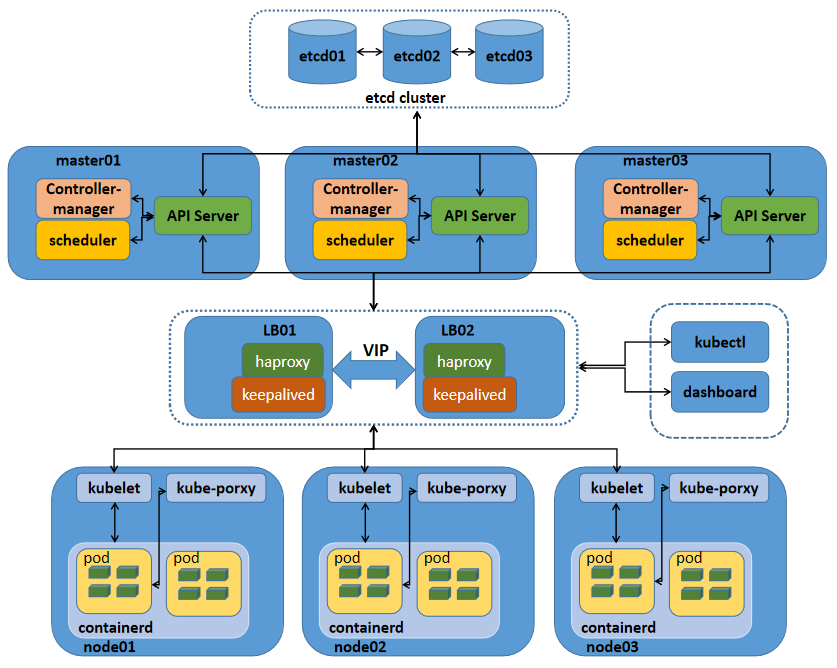

k8s multi-master architecture

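Note: k8s high availability is mainly about making the master components highly available.

For the apiserver, this means running several instances. In a sense the apiserver is a stateful service. To keep it simple, however, it stores all of its data in etcd, which makes the apiserver itself stateless. To make it highly available, we only need to run several instances behind a load balancer that reverse-proxies them. Clients and the kubelet on each node then reach the apiserver through the load balancer.

controller-manager and scheduler are also made highly available by running several instances. Unlike the apiserver, though, only one controller-manager and one scheduler may be working in a cluster at any time, because of the way these components operate. Their instances therefore run in active/standby mode: one active, the others on standby.

They elect the active instance through a distributed lock:
- The controller-manager or scheduler that acquires the lock becomes active and does the work for the cluster. On current versions the lock is a Lease object in the kube-system namespace; older versions used an Endpoints object.
- The active instance periodically renews the lock through the apiserver as a heartbeat. This keeps it active and prevents the other instances from taking over.
- The other instances see that the lock is held and stay on standby.
- If the active instance fails to renew the lock within the configured lease duration, a new election is triggered and the process repeats.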
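To see which instance currently holds the lock, you can read the holderIdentity field of the component's Lease. The sketch below makes two assumptions: the cluster is recent enough to use Lease-based leader election, and kubectl is configured for it. The helper name lease_holder is hypothetical. The identity string usually starts with the leader's host name.

```sh
# Print the holderIdentity of a control-plane component's Lease in kube-system.
# Assumes Lease-based leader election and a kubectl configured for this cluster.
lease_holder() {
  local component="$1"   # e.g. kube-controller-manager or kube-scheduler
  kubectl -n kube-system get lease "$component" \
    -o jsonpath='{.spec.holderIdentity}'
}

# Usage: show the active instance of both components.
# for c in kube-controller-manager kube-scheduler; do
#   printf '%s -> %s\n' "$c" "$(lease_holder "$c")"
# done
```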

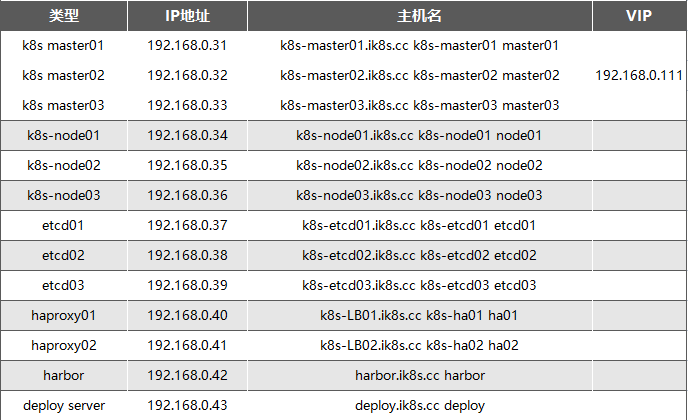

Server plan

Base environment setup

Regenerate machine-id

root@k8s-deploy:~# cat /etc/machine-id
1d2aeda997bd417c838377e601fd8e10
root@k8s-deploy:~# rm -rf /etc/machine-id && dbus-uuidgen --ensure=/etc/machine-id && cat /etc/machine-id
8340419bb01397bf654c596f6443cabf
root@k8s-deploy:~#

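Note: if your VMs were cloned from the same VM snapshot, they will very likely share the same machine-id. The commands above give each VM its own machine-id. Do not run them on several VMs at the same time from SecureCRT (for example, by sending one command to all sessions). When they are run simultaneously on multiple VMs, the generated machine-ids come out identical.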
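Before moving on, it helps to confirm that no two hosts still share an ID. The sketch below is minimal and assumes you can collect "host machine-id" pairs, for example over ssh. find_duplicate_ids is a hypothetical helper name.

```sh
# Read "host machine-id" pairs on stdin and print every machine-id that
# appears on more than one host, followed by the hosts that share it.
find_duplicate_ids() {
  awk '{ hosts[$2] = hosts[$2] " " $1; n[$2]++ }
       END { for (id in n) if (n[id] > 1) print id ":" hosts[id] }'
}

# Usage (host names from this article; adjust to your inventory):
# for h in k8s-master01 k8s-master02 k8s-node02; do
#   printf '%s %s\n' "$h" "$(ssh "$h" cat /etc/machine-id)"
# done | find_duplicate_ids
```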

Kernel parameter tuning

root@deploy:~# cat /etc/sysctl.conf
net.ipv4.ip_forward=1
vm.max_map_count=262144
kernel.pid_max=4194303
fs.file-max=1000000
net.ipv4.tcp_max_tw_buckets=6000
net.netfilter.nf_conntrack_max=2097152
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
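Entries in /etc/sysctl.conf only take effect after `sysctl -p` or a reboot. Some keys also depend on kernel modules: the net.bridge.* keys exist only once br_netfilter is loaded, and net.netfilter.nf_conntrack_max needs nf_conntrack. The hypothetical helper below compares each key in a sysctl file with its running value and prints the ones that differ. It is a sketch and not part of the original procedure.

```sh
# Print "key want=X have=Y" for every key in a sysctl-style file whose
# running value (from "sysctl -n") differs from the configured value.
sysctl_drift() {
  local file="${1:-/etc/sysctl.conf}" key want have
  while IFS='=' read -r key want; do
    # Remove all whitespace so "a = 1" and "a=1" compare the same way.
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    want=$(printf '%s' "$want" | tr -d '[:space:]')
    # Skip blank lines and comments.
    case "$key" in ''|\#*|\;*) continue ;; esac
    have=$(sysctl -n "$key" 2>/dev/null | tr -d '[:space:]')
    [ "$have" = "$want" ] || echo "$key want=$want have=${have:-missing}"
  done < "$file"
}

# Usage: sysctl_drift /etc/sysctl.conf   (no output means everything is applied)
```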

System resource limits

root@deploy:~# tail -10 /etc/security/limits.conf
root soft core unlimited
root hard core unlimited
root soft nproc 1000000
root hard nproc 1000000
root soft nofile 1000000
root hard nofile 1000000
root soft memlock 32000
root hard memlock 32000
root soft msgqueue 8192000
root hard msgqueue 8192000
root@deploy:~#
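limits.conf is applied by pam_limits when a session is opened, so the new values only appear after logging in again. They do not apply to services started by systemd, which take their limits from unit settings such as LimitNOFILE=. The sketch below checks the open-file soft limit in a fresh login shell. check_nofile is a hypothetical name.

```sh
# Succeed if the current shell's soft open-file limit equals the expected value
# (1000000 by default, matching the limits.conf above); otherwise report it.
check_nofile() {
  local want="${1:-1000000}" have
  have=$(ulimit -S -n)
  [ "$have" = "$want" ] || { echo "nofile soft limit is $have, expected $want"; return 1; }
}

# Usage, after logging in again as root: check_nofile
```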

Kernel modules loaded at boot

root@deploy:~# cat /etc/modules-load.d/modules.conf
# /etc/modules: kernel modules to load at boot time.
#
# This file contains the names of kernel modules that should be loaded
# at boot time, one per line. Lines beginning with "#" are ignored.
ip_vs
ip_vs_lc
ip_vs_lblc
ip_vs_lblcr
ip_vs_rr
ip_vs_wrr
ip_vs_sh
ip_vs_dh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
ip_vs_sh
ip_tables
ip_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
xt_set
br_netfilter
nf_conntrack
overlay
root@deploy:~#
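systemd-modules-load reads this file at boot. To load the modules right away without rebooting, you can run `systemctl restart systemd-modules-load.service`. Alternatively, call modprobe for each entry as in the sketch below. load_modules is a hypothetical helper that skips blank lines and comments. Note that ip_vs_sh is listed twice in the file above, which is harmless.

```sh
# Load every module named in a modules-load.d style file immediately.
# Blank lines and lines starting with '#' or ';' are skipped.
load_modules() {
  local file="${1:-/etc/modules-load.d/modules.conf}" mod failed=0
  while read -r mod _; do
    case "$mod" in ''|\#*|\;*) continue ;; esac
    modprobe "$mod" || { echo "failed: $mod" >&2; failed=1; }
  done < "$file"
  return "$failed"
}

# Usage: load_modules && lsmod | grep -E '^(ip_vs|br_netfilter|nf_conntrack)'
```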

Disable swap

root@deploy:~# free -mh

total used free shared buff/cache available

Mem: 3.8Gi 249Mi 3.3Gi 1.0Mi 244Mi 3.3Gi

Swap: 3.8Gi 0B 3.8Gi

root@deploy:~# swapoff -a

root@deploy:~# sed -i '/swap/s@^@#@' /etc/fstab

root@deploy:~# cat /etc/fstab

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a

# device; this may be used with UUID= as a more robust way to name devices

# that works even if disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

# / was on /dev/ubuntu-vg/ubuntu-lv during curtin installation

/dev/disk/by-id/dm-uuid-LVM-yecQxSAXrKdCNj1XNrQeaacvLAmKdL5SVadOXV0zHSlfkdpBEsaVZ9erw8Ac9gpm / ext4 defaults 0 1

# /boot was on /dev/sda2 during curtin installation

/dev/disk/by-uuid/80fe59b8-eb79-4ce9-a87d-134bc160e976 /boot ext4 defaults 0 1

#/swap.img none swap sw 0 0

root@deploy:~#
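By default the kubelet refuses to start while swap is enabled, so it is worth confirming that no swap area is still active. `swapon --show` printing nothing means the same thing. The sketch below counts active swap areas from /proc/swaps. active_swap_count is a hypothetical name.

```sh
# Print the number of active swap areas listed in a /proc/swaps-style file.
# The first line of /proc/swaps is a header, so it is skipped.
active_swap_count() {
  local swaps="${1:-/proc/swaps}"
  tail -n +2 "$swaps" | grep -c .
}

# Usage: [ "$(active_swap_count)" -eq 0 ] && echo "swap is off"
```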

Note: it's recommended to perform the steps above on every node and then reboot all nodes.

1. Deploy a highly available load balancer with keepalived and haproxy

Install keepalived and haproxy

root@k8s-ha01:~# apt update && apt install keepalived haproxy -y
root@k8s-ha02:~# apt update && apt install keepalived haproxy -y

Create /etc/keepalived/keepalived.conf on ha01

root@k8s-ha01:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
   notification_email {
     acassen
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state MASTER
    interface ens160
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.111 dev ens160 label ens160:0
    }
}

root@k8s-ha01:~#

Copy the configuration file to ha02

root@k8s-ha01:~# scp /etc/keepalived/keepalived.conf ha02:/etc/keepalived/keepalived.conf
keepalived.conf 100% 545 896.6KB/s 00:00
root@k8s-ha01:~#

Edit /etc/keepalived/keepalived.conf on ha02

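Note: on ha02, the main changes are the priority (lower than ha01's) and the declared state (BACKUP instead of MASTER), as shown in the screenshot.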
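As an alternative to editing ha02's copy by hand, the sketch below derives the BACKUP configuration with sed. It assumes ha02 uses priority 80. The article does not state ha02's value, and anything lower than ha01's 100 works. virtual_router_id, auth_pass and the VIP must stay the same on both nodes, and the interface name must match ha02's NIC. make_backup_conf is a hypothetical name.

```sh
# Copy a MASTER keepalived.conf to DST, switching "state MASTER" to
# "state BACKUP" and replacing the priority value (default 80, an assumption).
make_backup_conf() {
  local src="$1" dst="$2" prio="${3:-80}"
  sed -e 's/^\([[:space:]]*state[[:space:]][[:space:]]*\)MASTER/\1BACKUP/' \
      -e "s/^\([[:space:]]*priority[[:space:]][[:space:]]*\)[0-9][0-9]*/\1${prio}/" \
      "$src" > "$dst"
}

# Usage on ha02, after the scp above:
# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.master
# make_backup_conf /etc/keepalived/keepalived.conf.master /etc/keepalived/keepalived.conf 80
```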

Start keepalived on ha02 and enable it at boot

root@k8s-ha02:~# systemctl start keepalived
root@k8s-ha02:~# systemctl enable keepalived
Synchronizing state of keepalived.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable keepalived
root@k8s-ha02:~#

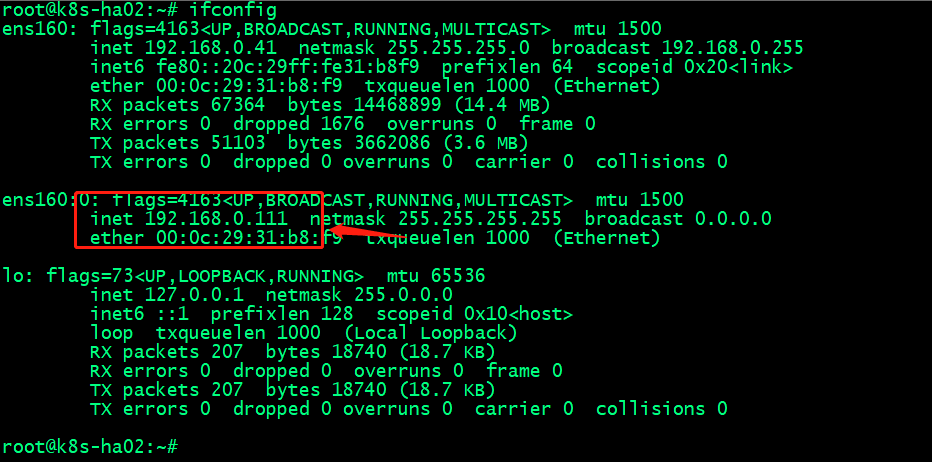

Verify: is the VIP present on ha02?

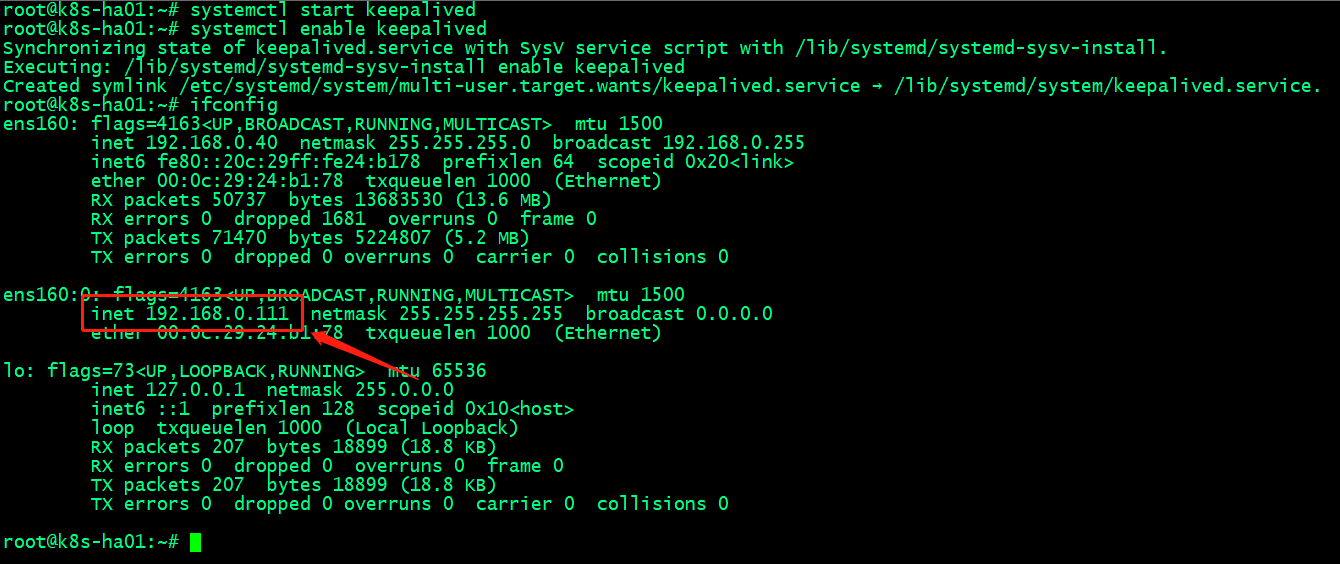

Start keepalived on ha01 and enable it at boot. Does the VIP move to ha01?

Note: once keepalived starts on ha01, the VIP moves to ha01. This happens because keepalived on ha01 has a higher priority than on ha02.

Test: stop keepalived on ha01. Does the VIP move to ha02?

Note: after keepalived is stopped on ha01, the VIP automatically moves to ha02.
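Instead of checking the VIP by eye on each node, a small helper can report whether this host currently owns it. This is a sketch that assumes the VIP 192.168.0.111 on ens160, as configured above. has_vip is a hypothetical name.

```sh
# Succeed if the VIP is currently assigned to the given interface.
# -w keeps 192.168.0.11 from matching 192.168.0.111.
has_vip() {
  local vip="${1:-192.168.0.111}" dev="${2:-ens160}"
  ip -4 -o addr show dev "$dev" | grep -qwF "inet ${vip}"
}

# Usage on each HA node: has_vip && echo "VIP is on $(hostname)"
```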

Verify: can other hosts in the cluster ping the VIP?

root@k8s-node03:~# ping 192.168.0.111
PING 192.168.0.111 (192.168.0.111) 56(84) bytes of data.
64 bytes from 192.168.0.111: icmp_seq=1 ttl=64 time=2.04 ms
64 bytes from 192.168.0.111: icmp_seq=2 ttl=64 time=1.61 ms
^C
--- 192.168.0.111 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1005ms
rtt min/avg/max/mdev = 1.611/1.827/2.043/0.216 ms
root@k8s-node03:~#

Note: other cluster hosts can ping the VIP, which means the VIP is working. keepalived is now configured.

Configure haproxy

Edit /etc/haproxy/haproxy.cfg

root@k8s-ha01:~# cat /etc/haproxy/haproxy.cfg

global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

listen k8s_apiserver_6443
    bind 192.168.0.111:6443
    mode tcp
    #balance leastconn
    server k8s-master01 192.168.0.31:6443 check inter 2000 fall 3 rise 5
    server k8s-master02 192.168.0.32:6443 check inter 2000 fall 3 rise 5
    server k8s-master03 192.168.0.33:6443 check inter 2000 fall 3 rise 5

root@k8s-ha01:~#
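haproxy can check a configuration file for syntax errors before it is started, with `haproxy -c -f /etc/haproxy/haproxy.cfg`. To probe the apiserver backends in the listen section, the sketch below extracts their addresses. haproxy_backends is a hypothetical helper, and the probe in the usage comment relies on bash's /dev/tcp. Until the apiservers are deployed on the masters, every backend will show as down.

```sh
# Print the address:port of each "server" line inside one named
# listen/backend section of an haproxy config file.
haproxy_backends() {
  local cfg="$1" section="$2"
  awk -v sec="$section" '
    $1 == "listen" || $1 == "backend" || $1 == "frontend" ||
    $1 == "defaults" || $1 == "global" { insec = ($2 == sec) }
    insec && $1 == "server" { print $3 }
  ' "$cfg"
}

# Usage (bash, because of /dev/tcp):
# for ep in $(haproxy_backends /etc/haproxy/haproxy.cfg k8s_apiserver_6443); do
#   if timeout 2 bash -c "</dev/tcp/${ep%:*}/${ep##*:}"; then echo "$ep up"; else echo "$ep down"; fi
# done
```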

Copy the configuration above to ha02

root@k8s-ha01:~# scp /etc/haproxy/haproxy.cfg ha02:/etc/haproxy/haproxy.cfg
haproxy.cfg 100% 1591 1.7MB/s 00:00
root@k8s-ha01:~#

Start haproxy on ha01 and enable it at boot

root@k8s-ha01:~# systemctl start haproxy

Job for haproxy.service failed because the control process exited with error code.

See "systemctl status haproxy.service" and "journalctl -xeu haproxy.service" for details.

root@k8s-ha01:~# systemctl status haproxy

× haproxy.service - HAProxy Load Balancer

Loaded: loaded (/lib/systemd/system/haproxy.service; disabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Sat 2023-04-22 12:13:34 UTC; 6s ago

Docs: man:haproxy(1)

file:/usr/share/doc/haproxy/configuration.txt.gz

Process: 1281 ExecStartPre=/usr/sbin/haproxy -Ws -f $CONFIG -c -q $EXTRAOPTS (code=exited, status=0/SUCCESS)

Process: 1283 ExecStart=/usr/sbin/haproxy -Ws -f $CONFIG -p $PIDFILE $EXTRAOPTS (code=exited, status=1/FAILURE)

Main PID: 1283 (code=exited, status=1/FAILURE)

CPU: 141ms

Apr 22 12:13:34 k8s-ha01.ik8s.cc systemd[1]: haproxy.service: Scheduled restart job, restart counter is at 5.

Apr 22 12:13:34 k8s-ha01.ik8s.cc systemd[1]: Stopped HAProxy Load Balancer.

Apr 22 12:13:34 k8s-ha01.ik8s.cc systemd[1]: haproxy.service: Start request repeated too quickly.

Apr 22 12:13:34 k8s-ha01.ik8s.cc systemd[1]: haproxy.service: Failed with result 'exit-code'.

Apr 22 12:13:34 k8s-ha01.ik8s.cc systemd[1]: Failed to start HAProxy Load Balancer.

root@k8s-ha01:~#

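Note: this error occurs because, by default, the kernel does not allow a process to bind to an IP address that is not assigned to the local host. For example, after the failover test above the VIP 192.168.0.111 is on ha02, not on ha01, so haproxy on ha01 cannot bind to it. We need to change a kernel parameter so that binding to non-local addresses is allowed.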
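Below is a quick way to check the current setting. It also shows an optional way to persist the setting in a drop-in file instead of appending to /etc/sysctl.conf. The helper name and the file name 99-haproxy-vip.conf are hypothetical. `sysctl --system` reloads all sysctl configuration files.

```sh
# Succeed if the kernel allows binding to addresses not assigned to this host.
nonlocal_bind_enabled() {
  local knob="${1:-/proc/sys/net/ipv4/ip_nonlocal_bind}"
  [ "$(cat "$knob" 2>/dev/null)" = "1" ]
}

# Usage (as root, on both ha01 and ha02):
# nonlocal_bind_enabled || {
#   echo 'net.ipv4.ip_nonlocal_bind = 1' > /etc/sysctl.d/99-haproxy-vip.conf
#   sysctl --system
# }
```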

Change the kernel parameter

root@k8s-ha01:~# sysctl -a |grep bind
net.ipv4.ip_autobind_reuse = 0
net.ipv4.ip_nonlocal_bind = 0
net.ipv6.bindv6only = 0
net.ipv6.ip_nonlocal_bind = 0
root@k8s-ha01:~# echo "net.ipv4.ip_nonlocal_bind = 1">> /etc/sysctl.conf
root@k8s-ha01:~# cat /etc/sysctl.conf
net.ipv4.ip_forward=1
vm.max_map_count=262144
kernel.pid_max=4194303
fs.file-max=1000000
net.ipv4.tcp_max_tw_buckets=6000
net.netfilter.nf_conntrack_max=2097152
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
net.ipv4.ip_nonlocal_bind = 1
root@k8s-ha01:~# sysctl -p
net.ipv4.ip_forward = 1
vm.max_map_count = 262144
kernel.pid_max = 4194303
fs.file-max = 1000000
net.ipv4.tcp_max_tw_buckets = 6000
net.netfilter.nf_conntrack_max = 2097152
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness = 0
net.ipv4.ip_nonlocal_bind = 1
root@k8s-ha01:~#

Verify: restart haproxy. Does it now listen on 6443?

root@k8s-ha01:~# systemctl restart haproxy

root@k8s-ha01:~# systemctl status haproxy

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/lib/systemd/system/haproxy.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2023-04-22 12:19:50 UTC; 7s ago

Docs: man:haproxy(1)

file:/usr/share/doc/haproxy/configuration.txt.gz

Process: 1441 ExecStartPre=/usr/sbin/haproxy -Ws -f $CONFIG -c -q $EXTRAOPTS (code=exited, status=0/SUCCESS)

Main PID: 1443 (haproxy)

Tasks: 5 (limit: 4571)

Memory: 70.1M

CPU: 309ms

CGroup: /system.slice/haproxy.service

├─1443 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock

└─1445 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock

Apr 22 12:19:50 k8s-ha01.ik8s.cc haproxy[1443]: [WARNING] (1443) : parsing [/etc/haproxy/haproxy.cfg:23] : 'option httplog' not usable with proxy 'k8s_apiserver_6443' (needs 'mode http'). Falling back to 'option tcplog'.

Apr 22 12:19:50 k8s-ha01.ik8s.cc haproxy[1443]: [NOTICE] (1443) : New worker #1 (1445) forked

Apr 22 12:19:50 k8s-ha01.ik8s.cc systemd[1]: Started HAProxy Load Balancer.

Apr 22 12:19:50 k8s-ha01.ik8s.cc haproxy[1445]: [WARNING] (1445) : Server k8s_apiserver_6443/k8s-master01 is DOWN, reason: Layer4 connection problem, info: "Connection refused", check duration: 0ms. 2 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

Apr 22 12:19:50 k8s-ha01.ik8s.cc haproxy[1445]: [NOTICE] (1445) : haproxy version is 2.4.18-0ubuntu1.3

Apr 22 12:19:50 k8s-ha01.ik8s.cc haproxy[1445]: [NOTICE] (1445) : path to executable is /usr/sbin/haproxy

Apr 22 12:19:50 k8s-ha01.ik8s.cc haproxy[1445]: [ALERT] (1445) : sendmsg()/writev() failed in logger #1: No such file or directory (errno=2)

Apr 22 12:19:50 k8s-ha01.ik8s.cc haproxy[1445]: [WARNING] (1445) : Server k8s_apiserver_6443/k8s-master02 is DOWN, reason: Layer4 connection problem, info: "Connection refused", check duration: 0ms. 1 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

Apr 22 12:19:51 k8s-ha01.ik8s.cc haproxy[1445]: [WARNING] (1445) : Server k8s_apiserver_6443/k8s-master03 is DOWN, reason: Layer4 connection problem, info: "Connection refused", check duration: 0ms. 0 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

Apr 22 12:19:51 k8s-ha01.ik8s.cc haproxy[1445]: [ALERT] (1445) : proxy 'k8s_apiserver_6443' has no server available!

root@k8s-ha01:~# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 192.168.0.111:6443 0.0.0.0:*

LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

root@k8s-ha01:~#

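Note: after the kernel parameter is changed and haproxy is restarted, port 6443 on the VIP is listening locally. The 'option httplog' warning is harmless, because haproxy falls back to 'option tcplog' for the TCP-mode listen section. The backends show as DOWN because the apiservers on the masters have not been deployed yet. ha02 needs the same kernel parameter change; after that, start haproxy on ha02 and enable it at boot.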

Restart haproxy on ha02 and enable it at boot

root@k8s-ha02:~# systemctl restart haproxy
root@k8s-ha02:~# systemctl enable haproxy
Synchronizing state of haproxy.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable haproxy
root@k8s-ha02:~# ss -tnl
State Recv-Q Send-Q Local Address:Port Peer Address:Port Process
LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:*
LISTEN 0 4096 192.168.0.111:6443 0.0.0.0:*
LISTEN 0 128 0.0.0.0:22 0.0.0.0:*
root@k8s-ha02:~#

Note: whichever node holds the VIP, requests now follow the VIP to that node. This completes the highly available load balancer built with keepalived and haproxy.
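One gap remains. As configured, keepalived only moves the VIP when keepalived itself stops. If haproxy dies on the node that holds the VIP, the VIP stays there and the apiservers become unreachable through it. keepalived's vrrp_script/track_script mechanism can lower a node's priority when a check fails. The sketch below is a hypothetical check script plus the matching keepalived fragment, shown as comments. The path, interval and weight are assumptions. With weight -30, ha01 drops from 100 to 70, which only triggers failover if ha02's priority is above 70 (for example 80).

```sh
# Hypothetical body of /etc/keepalived/check_haproxy.sh: succeeds while an
# haproxy process exists and fails otherwise. In the script file itself,
# end with a plain "check_haproxy" line so its status becomes the exit code.
check_haproxy() {
  pidof haproxy >/dev/null
}

# Matching keepalived.conf fragment (assumed values), on both HA nodes:
#   vrrp_script chk_haproxy {
#       script "/etc/keepalived/check_haproxy.sh"
#       interval 2
#       weight -30
#   }
#   vrrp_instance VI_1 {
#       ...
#       track_script {
#           chk_haproxy
#       }
#   }
```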

2. Deploy an HTTPS Harbor service for image distribution

Configure the docker-ce repository on the Harbor server

root@harbor:~# apt-get update && apt-get -y install apt-transport-https ca-certificates curl software-properties-common && curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add - && add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable" && apt-get -y update

Install docker and docker-compose on the Harbor server

root@harbor:~# apt-cache madison docker-ce
docker-ce | 5:23.0.3-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:23.0.2-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:23.0.1-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:23.0.0-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.24~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.23~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.22~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.21~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.20~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.19~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.18~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.17~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.16~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.15~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.14~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
docker-ce | 5:20.10.13~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages
root@harbor:~# apt install -y docker-ce=5:20.10.19~3-0~ubuntu-jammy

Check the docker version

root@harbor:~# docker version
Client: Docker Engine - Community
 Version: 23.0.3
 API version: 1.41 (downgraded from 1.42)
 Go version: go1.19.7
 Git commit: 3e7cbfd
 Built: Tue Apr 4 22:05:48 2023
 OS/Arch: linux/amd64
 Context: default

Server: Docker Engine - Community
 Engine:
  Version: 20.10.19
  API version: 1.41 (minimum version 1.12)
  Go version: go1.18.7
  Git commit: c964641
  Built: Thu Oct 13 16:44:47 2022
  OS/Arch: linux/amd64
  Experimental: false
 containerd:
  Version: 1.6.20
  GitCommit: 2806fc1057397dbaeefbea0e4e17bddfbd388f38
 runc:
  Version: 1.1.5
  GitCommit: v1.1.5-0-gf19387a
 docker-init:
  Version: 0.19.0
  GitCommit: de40ad0
root@harbor:~#
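The output shows a client/server version mismatch. Only docker-ce was pinned to 20.10.19, so apt pulled in the newest docker-ce-cli (23.0.3). The sketch below installs both packages at the same version and holds them. It assumes the repository publishes docker-ce-cli under the same version string, which `apt-cache madison docker-ce-cli` can confirm. install_pinned_docker is a hypothetical name.

```sh
# Install docker-ce and docker-ce-cli at one exact apt version, then hold
# both so a later "apt upgrade" does not move them apart again.
install_pinned_docker() {
  local ver="${1:?usage: install_pinned_docker <apt-version>}"
  apt-get install -y "docker-ce=${ver}" "docker-ce-cli=${ver}" &&
    apt-mark hold docker-ce docker-ce-cli
}

# Usage: install_pinned_docker '5:20.10.19~3-0~ubuntu-jammy'
```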

Download the docker-compose binary

root@harbor:~# wget https://github.com/docker/compose/releases/download/v2.17.2/docker-compose-linux-x86_64 -O /usr/local/bin/docker-compose

Make the docker-compose binary executable and check the docker-compose version

root@harbor:~# cd /usr/local/bin/
root@harbor:/usr/local/bin# ll
total 53188
drwxr-xr-x 2 root root 4096 Apr 22 07:05 ./
drwxr-xr-x 10 root root 4096 Feb 17 17:19 ../
-rw-r--r-- 1 root root 54453847 Apr 22 07:03 docker-compose
root@harbor:/usr/local/bin# chmod a+x docker-compose
root@harbor:/usr/local/bin# cd
root@harbor:~# docker-compose -v
Docker Compose version v2.17.2
root@harbor:~#

Download the Harbor offline installer

root@harbor:~# wget https://github.com/goharbor/harbor/releases/download/v2.8.0/harbor-offline-installer-v2.8.0.tgz

Create a directory for the Harbor offline installer and extract the package into it

root@harbor:~# ls
harbor-offline-installer-v2.8.0.tgz
root@harbor:~# mkdir /app
root@harbor:~# tar xf harbor-offline-installer-v2.8.0.tgz -C /app/
root@harbor:~# cd /app/
root@harbor:/app# ls
harbor
root@harbor:/app#

Create a certs directory for the certificates

root@harbor:/app# ls
harbor
root@harbor:/app# mkdir certs
root@harbor:/app# ls
certs harbor
root@harbor:/app#

Upload the certificate

root@harbor:/app/certs# ls
9529909_harbor.ik8s.cc_nginx.zip
root@harbor:/app/certs# unzip 9529909_harbor.ik8s.cc_nginx.zip
Archive: 9529909_harbor.ik8s.cc_nginx.zip
Aliyun Certificate Download
  inflating: 9529909_harbor.ik8s.cc.pem
  inflating: 9529909_harbor.ik8s.cc.key
root@harbor:/app/certs# ls
9529909_harbor.ik8s.cc.key 9529909_harbor.ik8s.cc.pem 9529909_harbor.ik8s.cc_nginx.zip
root@harbor:/app/certs#

Copy the Harbor configuration template to harbor.yml

root@harbor:/app/certs# cd ..
root@harbor:/app# ls
certs harbor
root@harbor:/app# cd harbor/
root@harbor:/app/harbor# ls
LICENSE common.sh harbor.v2.8.0.tar.gz harbor.yml.tmpl install.sh prepare
root@harbor:/app/harbor# cp harbor.yml.tmpl harbor.yml
root@harbor:/app/harbor#

Edit harbor.yml

root@harbor:/app/harbor# grep -v "#" harbor.yml | grep -v "^#"

hostname: harbor.ik8s.cc
http:
  port: 80
https:
  port: 443
  certificate: /app/certs/9529909_harbor.ik8s.cc.pem
  private_key: /app/certs/9529909_harbor.ik8s.cc.key
harbor_admin_password: admin123.com
database:
  password: root123
  max_idle_conns: 100
  max_open_conns: 900
  conn_max_lifetime: 5m
  conn_max_idle_time: 0
data_volume: /data
trivy:
  ignore_unfixed: false
  skip_update: false
  offline_scan: false
  security_check: vuln
  insecure: false
jobservice:
  max_job_workers: 10
notification:
  webhook_job_max_retry: 3
log:
  level: info
  local:
    rotate_count: 50
    rotate_size: 200M
    location: /var/log/harbor
_version: 2.8.0
proxy:
  http_proxy:
  https_proxy:
  no_proxy:
  components:
    - core
    - jobservice
    - trivy
upload_purging:
  enabled: true
  age: 168h
  interval: 24h
  dryrun: false
cache:
  enabled: false
  expire_hours: 24

root@harbor:/app/harbor#

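Note: the configuration above changes the following:
- hostname: the site domain name in the certificate. It must match the domain name the certificate was issued for.
- The certificate and private key paths.
- The default Harbor admin login password.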
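Two things are worth checking before running the installer: that the private key really belongs to the certificate, and which names the certificate covers. The sketch compares the public keys derived from both files with openssl. The helper names are hypothetical. The `-ext` option needs OpenSSL 1.1.1 or newer, and Ubuntu 22.04 ships OpenSSL 3.

```sh
# Succeed if the certificate and the private key contain the same public key.
cert_matches_key() {
  local cert="$1" key="$2"
  [ "$(openssl x509 -in "$cert" -noout -pubkey)" = "$(openssl pkey -in "$key" -pubout)" ]
}

# Show the subject and the subjectAltName entries the certificate is valid for.
cert_names() {
  openssl x509 -in "$1" -noout -subject -ext subjectAltName
}

# Usage:
# cert_matches_key /app/certs/9529909_harbor.ik8s.cc.pem /app/certs/9529909_harbor.ik8s.cc.key &&
#   cert_names /app/certs/9529909_harbor.ik8s.cc.pem
```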

Create the Harbor data directory at the path given in the configuration file

root@harbor:/app/harbor# grep "data_volume" harbor.yml
data_volume: /data
root@harbor:/app/harbor# mkdir /data
root@harbor:/app/harbor#

Note: to avoid data loss, it's recommended to mount a network file system such as NFS on this directory.

Run the Harbor installation

root@harbor:/app/harbor# ./install.sh --with-notary --with-trivy

[Step 0]: checking if docker is installed ...

Note: docker version: 23.0.3

[Step 1]: checking docker-compose is installed ...

Note: Docker Compose version v2.17.2

[Step 2]: loading Harbor images ...

17d981d1fd47: Loading layer [==================================================>] 37.78MB/37.78MB

31886c65da47: Loading layer [==================================================>] 99.07MB/99.07MB

22b8b3f55675: Loading layer [==================================================>] 3.584kB/3.584kB

e0d07daed386: Loading layer [==================================================>] 3.072kB/3.072kB

192e4941b719: Loading layer [==================================================>] 2.56kB/2.56kB

ea466c659008: Loading layer [==================================================>] 3.072kB/3.072kB

0a9da2a9c15e: Loading layer [==================================================>] 3.584kB/3.584kB

b8d43ab61309: Loading layer [==================================================>] 20.48kB/20.48kB

Loaded image: goharbor/harbor-log:v2.8.0

91ff9ec8c599: Loading layer [==================================================>] 5.762MB/5.762MB

7b3b74d0bc46: Loading layer [==================================================>] 9.137MB/9.137MB

415c34d8de89: Loading layer [==================================================>] 14.47MB/14.47MB

d5f96f4cee68: Loading layer [==================================================>] 29.29MB/29.29MB

2e13bf4c5a45: Loading layer [==================================================>] 22.02kB/22.02kB

3065ef318899: Loading layer [==================================================>] 14.47MB/14.47MB

Loaded image: goharbor/notary-signer-photon:v2.8.0

10b1cdff4db0: Loading layer [==================================================>] 5.767MB/5.767MB

8ca511ff01d7: Loading layer [==================================================>] 4.096kB/4.096kB

c561ee469bc5: Loading layer [==================================================>] 17.57MB/17.57MB

88b0cf5853d2: Loading layer [==================================================>] 3.072kB/3.072kB

f68cc37aeda4: Loading layer [==================================================>] 31.01MB/31.01MB

f96735fc99d1: Loading layer [==================================================>] 49.37MB/49.37MB

Loaded image: goharbor/harbor-registryctl:v2.8.0

117dfa0ad222: Loading layer [==================================================>] 8.91MB/8.91MB

fad9e0a04e3e: Loading layer [==================================================>] 25.92MB/25.92MB

b5e945e047c5: Loading layer [==================================================>] 4.608kB/4.608kB

5b87a66594e3: Loading layer [==================================================>] 26.71MB/26.71MB

Loaded image: goharbor/harbor-exporter:v2.8.0

844a11bc472a: Loading layer [==================================================>] 91.99MB/91.99MB

329ec42b7278: Loading layer [==================================================>] 3.072kB/3.072kB

479889c4a17d: Loading layer [==================================================>] 59.9kB/59.9kB

9d7cf0ba93a4: Loading layer [==================================================>] 61.95kB/61.95kB

Loaded image: goharbor/redis-photon:v2.8.0

d78edf9b37a0: Loading layer [==================================================>] 5.762MB/5.762MB

6b1886c87164: Loading layer [==================================================>] 9.137MB/9.137MB

8e04b3ba3694: Loading layer [==================================================>] 15.88MB/15.88MB

1859c0f529e2: Loading layer [==================================================>] 29.29MB/29.29MB

5a4832cc0365: Loading layer [==================================================>] 22.02kB/22.02kB

32e5f34311d8: Loading layer [==================================================>] 15.88MB/15.88MB

Loaded image: goharbor/notary-server-photon:v2.8.0

157c80352244: Loading layer [==================================================>] 44.11MB/44.11MB

ae9e333084d9: Loading layer [==================================================>] 65.86MB/65.86MB

9172f69ba869: Loading layer [==================================================>] 24.09MB/24.09MB

78d4f0b7a9dd: Loading layer [==================================================>] 65.54kB/65.54kB

66e1120f8426: Loading layer [==================================================>] 2.56kB/2.56kB

d2e29dcfd3b2: Loading layer [==================================================>] 1.536kB/1.536kB

e2862979f5b1: Loading layer [==================================================>] 12.29kB/12.29kB

f060948ced19: Loading layer [==================================================>] 2.621MB/2.621MB

4f1f83dea031: Loading layer [==================================================>] 416.8kB/416.8kB

Loaded image: goharbor/prepare:v2.8.0

b757f0470527: Loading layer [==================================================>] 8.909MB/8.909MB

45f3777da07b: Loading layer [==================================================>] 3.584kB/3.584kB

1ec69429b88c: Loading layer [==================================================>] 2.56kB/2.56kB

54ae653f9340: Loading layer [==================================================>] 47.5MB/47.5MB

2b5374d50351: Loading layer [==================================================>] 48.29MB/48.29MB

Loaded image: goharbor/harbor-jobservice:v2.8.0

138a75d30165: Loading layer [==================================================>] 6.295MB/6.295MB

37678a05d20c: Loading layer [==================================================>] 4.096kB/4.096kB

62ab39a1f583: Loading layer [==================================================>] 3.072kB/3.072kB

dc2c8ea056cc: Loading layer [==================================================>] 191.2MB/191.2MB

eef0034d3cf6: Loading layer [==================================================>] 14.03MB/14.03MB

8ef49e77e2da: Loading layer [==================================================>] 206MB/206MB

Loaded image: goharbor/trivy-adapter-photon:v2.8.0

7b11ded34da7: Loading layer [==================================================>] 5.767MB/5.767MB

db79ebbe62ed: Loading layer [==================================================>] 4.096kB/4.096kB

7008de4e1efa: Loading layer [==================================================>] 3.072kB/3.072kB

78e690f643e2: Loading layer [==================================================>] 17.57MB/17.57MB

c59eb6af140b: Loading layer [==================================================>] 18.36MB/18.36MB

Loaded image: goharbor/registry-photon:v2.8.0

697d673e9002: Loading layer [==================================================>] 91.15MB/91.15MB

73dc6648b3fc: Loading layer [==================================================>] 6.097MB/6.097MB

7c040ff2580b: Loading layer [==================================================>] 1.233MB/1.233MB

Loaded image: goharbor/harbor-portal:v2.8.0

ed7088f4a42d: Loading layer [==================================================>] 8.91MB/8.91MB

5fb2a39a2645: Loading layer [==================================================>] 3.584kB/3.584kB

eed4c9aebbc2: Loading layer [==================================================>] 2.56kB/2.56kB

5a03baf4cc2c: Loading layer [==================================================>] 59.24MB/59.24MB

23c80bc54f04: Loading layer [==================================================>] 5.632kB/5.632kB

f7e397f31506: Loading layer [==================================================>] 115.7kB/115.7kB

78504c142fac: Loading layer [==================================================>] 44.03kB/44.03kB

ec904722ce15: Loading layer [==================================================>] 60.19MB/60.19MB

4746711ff0cc: Loading layer [==================================================>] 2.56kB/2.56kB

Loaded image: goharbor/harbor-core:v2.8.0

6460b77e3fdb: Loading layer [==================================================>] 123.4MB/123.4MB

19620cea1000: Loading layer [==================================================>] 22.57MB/22.57MB

d9674d59d34c: Loading layer [==================================================>] 5.12kB/5.12kB

f3b8b5f2a0b2: Loading layer [==================================================>] 6.144kB/6.144kB

948463f03a69: Loading layer [==================================================>] 3.072kB/3.072kB

674b9b213d01: Loading layer [==================================================>] 2.048kB/2.048kB

77dff9e1e728: Loading layer [==================================================>] 2.56kB/2.56kB

7c74827c6695: Loading layer [==================================================>] 2.56kB/2.56kB

254ef4a11cdc: Loading layer [==================================================>] 2.56kB/2.56kB

23cb6acbaaad: Loading layer [==================================================>] 9.728kB/9.728kB

Loaded image: goharbor/harbor-db:v2.8.0

6ca8f9c9b7ce: Loading layer [==================================================>] 91.15MB/91.15MB

Loaded image: goharbor/nginx-photon:v2.8.0

[Step 3]: preparing environment ...

[Step 4]: preparing harbor configs ...

prepare base dir is set to /app/harbor

Generated configuration file: /config/portal/nginx.conf

Generated configuration file: /config/log/logrotate.conf

Generated configuration file: /config/log/rsyslog_docker.conf

Generated configuration file: /config/nginx/nginx.conf

Generated configuration file: /config/core/env

Generated configuration file: /config/core/app.conf

Generated configuration file: /config/registry/config.yml

Generated configuration file: /config/registryctl/env

Generated configuration file: /config/registryctl/config.yml

Generated configuration file: /config/db/env

Generated configuration file: /config/jobservice/env

Generated configuration file: /config/jobservice/config.yml

Generated and saved secret to file: /data/secret/keys/secretkey

Successfully called func: create_root_cert

Successfully called func: create_root_cert

Successfully called func: create_cert

Copying certs for notary signer

Copying nginx configuration file for notary

Generated configuration file: /config/nginx/conf.d/notary.upstream.conf

Generated configuration file: /config/nginx/conf.d/notary.server.conf

Generated configuration file: /config/notary/server-config.postgres.json

Generated configuration file: /config/notary/server_env

Generated and saved secret to file: /data/secret/keys/defaultalias

Generated configuration file: /config/notary/signer_env

Generated configuration file: /config/notary/signer-config.postgres.json

Generated configuration file: /config/trivy-adapter/env

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

Note: stopping existing Harbor instance ...

[Step 5]: starting Harbor ...

➜

Notary will be deprecated as of Harbor v2.6.0 and start to be removed in v2.8.0 or later.

You can use cosign for signature instead since Harbor v2.5.0.

Please see discussion here for more details. https://github.com/goharbor/harbor/discussions/16612

[+] Running 15/15

✔ Network harbor_harbor Created 0.2s

✔ Network harbor_notary-sig Created 0.2s

✔ Network harbor_harbor-notary Created 0.1s

✔ Container harbor-log Started 5.3s

✔ Container harbor-db Started 7.2s

✔ Container redis Started 6.4s

✔ Container registry Started 6.3s

✔ Container harbor-portal Started 6.9s

✔ Container registryctl Started 6.4s

✔ Container trivy-adapter Started 6.0s

✔ Container harbor-core Started 7.4s

✔ Container notary-signer Started 7.1s

✔ Container nginx Started 8.9s

✔ Container harbor-jobservice Started 8.8s

✔ Container notary-server Started 7.2s

✔ ----Harbor has been installed and started successfully.----

root@harbor:/app/harbor#

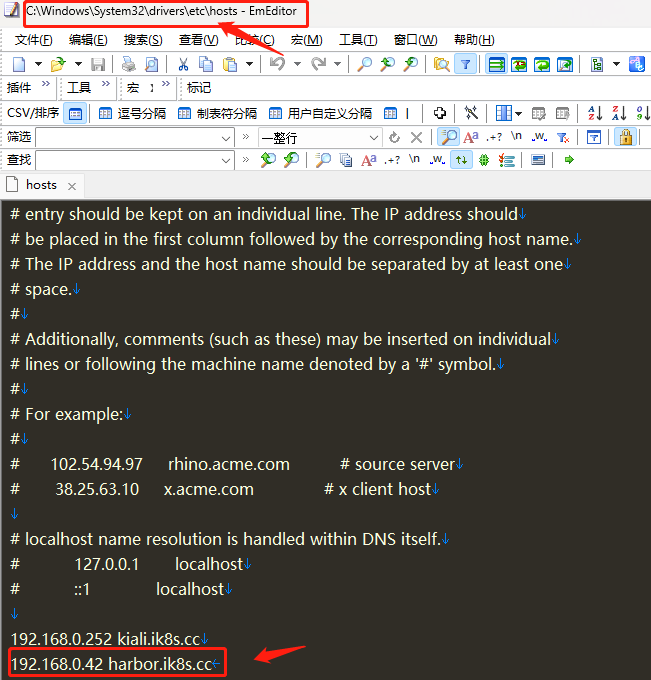

DNS resolution: point the domain name the certificate was issued for at the Harbor server

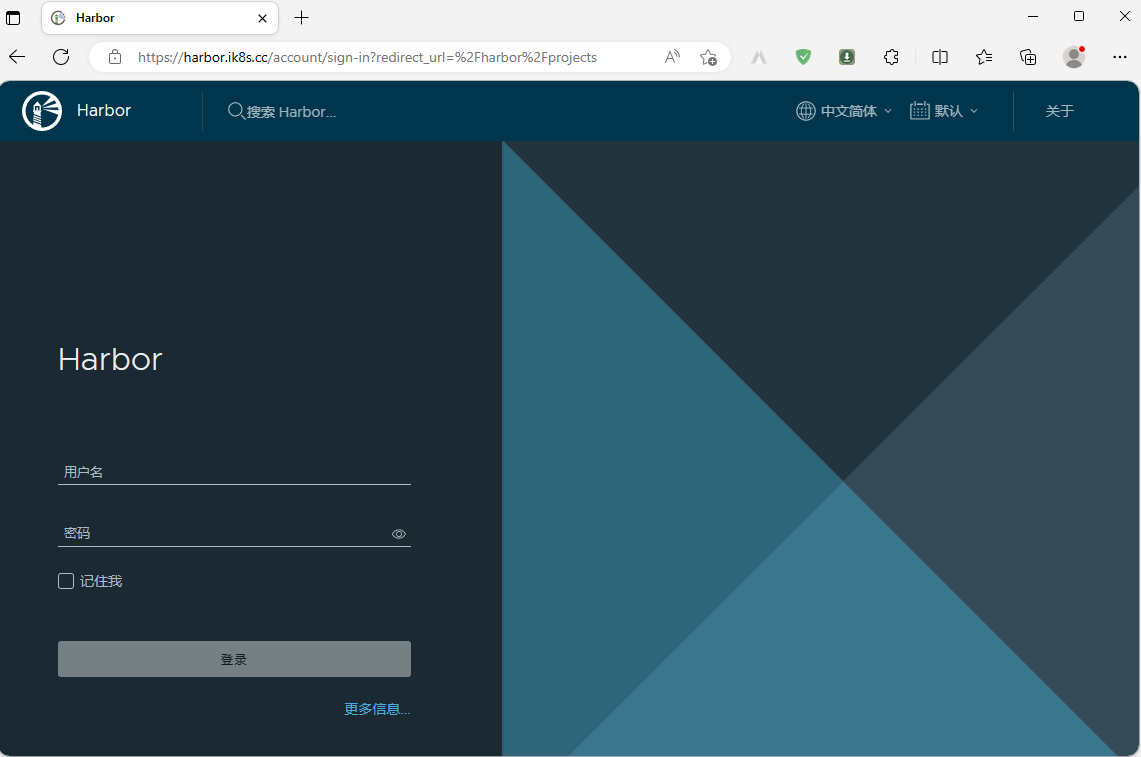

Verify: open harbor.ik8s.cc in a browser on Windows. Is Harbor reachable?

Log in to Harbor with the password I set. Does the login succeed?

Can a project be created?

Note: the project was created successfully in the web UI.

Extra: provide a systemd service file for Harbor so it starts automatically at boot

root@harbor:/app/harbor# cat /usr/lib/systemd/system/harbor.service
[Unit]
Description=Harbor
After=docker.service systemd-networkd.service systemd-resolved.service
Requires=docker.service
Documentation=http://github.com/vmware/harbor

[Service]
Type=simple
Restart=on-failure
RestartSec=5
ExecStart=/usr/local/bin/docker-compose -f /app/harbor/docker-compose.yml up
ExecStop=/usr/local/bin/docker-compose -f /app/harbor/docker-compose.yml down

[Install]
WantedBy=multi-user.target
root@harbor:/app/harbor#

Reload the unit file, restart Harbor and enable it at boot

root@harbor:/app/harbor# systemctl daemon-reload

root@harbor:/app/harbor# systemctl restart harbor

root@harbor:/app/harbor# systemctl enable harbor

Created symlink /etc/systemd/system/multi-user.target.wants/harbor.service → /lib/systemd/system/harbor.service.

root@harbor:/app/harbor#
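`systemctl restart harbor` returns as soon as docker-compose is launched, not when Harbor is actually serving. A minimal sketch for waiting on readiness, assuming Harbor v2's `GET /api/v2.0/health` endpoint, whose JSON carries the overall `status` before the per-component entries; `HARBOR_URL` and the helper names are illustrative, not part of Harbor.

```bash
#!/bin/sh
# Sketch: wait until Harbor reports itself healthy.
# Assumptions: Harbor v2 exposes GET /api/v2.0/health and the overall
# "status" field appears before the per-component ones.
HARBOR_URL="${HARBOR_URL:-https://harbor.ik8s.cc}"

# Print the first "status" value found in the JSON read from stdin.
harbor_overall_status() {
    grep -oE '"status"[[:space:]]*:[[:space:]]*"[a-z]+"' | head -n 1 |
        sed -E 's/.*"([a-z]+)"$/\1/'
}

# Succeed only if the JSON on stdin reports "healthy".
harbor_is_healthy() {
    [ "$(harbor_overall_status)" = "healthy" ]
}

# Poll the live endpoint up to $1 times (default 30), 5 seconds apart.
wait_for_harbor() {
    tries="${1:-30}"
    while [ "$tries" -gt 0 ]; do
        if curl -fsk "${HARBOR_URL}/api/v2.0/health" | harbor_is_healthy; then
            echo "Harbor is healthy"
            return 0
        fi
        tries=$((tries - 1))
        sleep 5
    done
    echo "Harbor did not become healthy in time" >&2
    return 1
}
```

Typical use is to source the functions and call `wait_for_harbor` right after `systemctl restart harbor`.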

3. Test that nerdctl can log in to the HTTPS Harbor and distribute images

Log in to Harbor with nerdctl

root@k8s-node02:~# nerdctl login harbor.ik8s.cc

Enter Username: admin

Enter Password:

WARN[0005] skipping verifying HTTPS certs for "harbor.ik8s.cc"

WARNING: Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@k8s-node02:~#

Tip: this node also needs DNS resolution for harbor.ik8s.cc.

Test pushing an image from node02 to Harbor

root@k8s-node02:~# nerdctl pull nginx

WARN[0000] skipping verifying HTTPS certs for "docker.io"

docker.io/library/nginx:latest: resolved |++++++++++++++++++++++++++++++++++++++|

index-sha256:63b44e8ddb83d5dd8020327c1f40436e37a6fffd3ef2498a6204df23be6e7e94: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:f2fee5c7194cbbfb9d2711fa5de094c797a42a51aa42b0c8ee8ca31547c872b1: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:6efc10a0510f143a90b69dc564a914574973223e88418d65c1f8809e08dc0a1f: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:75576236abf5959ff23b741ed8c4786e244155b9265db5e6ecda9d8261de529f: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:26c5c85e47da3022f1bdb9a112103646c5c29517d757e95426f16e4bd9533405: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:8c767bdbc9aedd4bbf276c6f28aad18251cceacb768967c5702974ae1eac23cd: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:78e14bb05fd35b58587cd0c5ca2c2eb12b15031633ec30daa21c0ea3d2bb2a15: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:4f3256bdf66bf00bcec08043e67a80981428f0e0de12f963eac3c753b14d101d: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:2019c71d56550b97ce01e0b6ef8e971fec705186f2927d2cb109ac3e18edb0ac: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 41.1s total: 54.4 M (1.3 MiB/s)

root@k8s-node02:~# nerdctl images

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

nginx latest 63b44e8ddb83 10 seconds ago linux/amd64 149.8 MiB 54.4 MiB

<none> <none> 63b44e8ddb83 10 seconds ago linux/amd64 149.8 MiB 54.4 MiB

root@k8s-node02:~# nerdctl tag nginx harbor.ik8s.cc/baseimages/nginx:v1

root@k8s-node02:~# nerdctl images

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

nginx latest 63b44e8ddb83 51 seconds ago linux/amd64 149.8 MiB 54.4 MiB

harbor.ik8s.cc/baseimages/nginx v1 63b44e8ddb83 5 seconds ago linux/amd64 149.8 MiB 54.4 MiB

<none> <none> 63b44e8ddb83 51 seconds ago linux/amd64 149.8 MiB 54.4 MiB
root@k8s-node02:~# nerdctl push harbor.ik8s.cc/baseimages/nginx:v1

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.list.v2+json, sha256:c45a31532f8fcd4db2302631bc1644322aa43c396fabbf3f9e9038ff09688c26)

WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"

index-sha256:c45a31532f8fcd4db2302631bc1644322aa43c396fabbf3f9e9038ff09688c26: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:f2fee5c7194cbbfb9d2711fa5de094c797a42a51aa42b0c8ee8ca31547c872b1: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:6efc10a0510f143a90b69dc564a914574973223e88418d65c1f8809e08dc0a1f: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 4.2 s total: 9.6 Ki (2.3 KiB/s)

root@k8s-node02:~#
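The pull, tag and push sequence above can be scripted when several images need to go into Harbor. A minimal sketch, assuming the `baseimages` project on `harbor.ik8s.cc` used in this article; `harbor_ref`, `run` and `mirror_image` are hypothetical helpers. Unlike the manual example (which tagged nginx as `v1`), the helper keeps the upstream tag, and by default it only prints the nerdctl commands (`DRY_RUN=1`).

```bash
#!/bin/sh
# Sketch: mirror upstream images into a Harbor project with nerdctl.
HARBOR_HOST="${HARBOR_HOST:-harbor.ik8s.cc}"
HARBOR_PROJECT="${HARBOR_PROJECT:-baseimages}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Map an upstream reference to a Harbor reference by keeping only the
# last path component and its tag (defaulting to "latest").
# Digest references (name@sha256:...) are not handled.
harbor_ref() {
    name="${1##*/}"               # drop registry and namespace
    case "$name" in
        *:*) ;;                   # tag already present
        *) name="${name}:latest" ;;
    esac
    echo "${HARBOR_HOST}/${HARBOR_PROJECT}/${name}"
}

# Execute a command, or just print it in dry-run mode.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Pull one image, retag it for Harbor and push it.
mirror_image() {
    target="$(harbor_ref "$1")"
    run nerdctl pull "$1"
    run nerdctl tag "$1" "$target"
    run nerdctl push "$target"
}
```

After `nerdctl login harbor.ik8s.cc`, something like `DRY_RUN=0 mirror_image nginx` would perform the three steps for real.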

Verify: check in the web UI that the pushed nginx:v1 image is in the repository.
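The same check can be done from the command line through Harbor's v2 REST API, which answers `GET /api/v2.0/projects/{project}/repositories/{repository}/artifacts/{reference}` with HTTP 200 when the artifact exists. A sketch under that assumption; the helper names and the `HARBOR_USER`/`HARBOR_PASS` variables are hypothetical. Harbor expects repository names containing `/` to be URL-encoded twice (`%252F`).

```bash
#!/bin/sh
# Sketch: check for an artifact in Harbor via its v2 REST API.
HARBOR_HOST="${HARBOR_HOST:-harbor.ik8s.cc}"

# Build the artifact URL; "/" in the repository name is double-encoded.
artifact_url() {
    repo="$(printf '%s' "$2" | sed 's#/#%252F#g')"
    echo "https://${HARBOR_HOST}/api/v2.0/projects/$1/repositories/${repo}/artifacts/$3"
}

# Succeed if the artifact exists (curl -f fails on HTTP errors such as 404).
artifact_exists() {
    curl -fsk -o /dev/null -u "${HARBOR_USER}:${HARBOR_PASS}" \
        "$(artifact_url "$1" "$2" "$3")"
}
```

For the image pushed above the call would be `artifact_exists baseimages nginx v1`.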

Test pulling the image from the Harbor registry to another node

root@k8s-node03:~# nerdctl images

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

root@k8s-node03:~# nerdctl login harbor.ik8s.cc

Enter Username: admin

Enter Password:

WARN[0004] skipping verifying HTTPS certs for "harbor.ik8s.cc"

WARNING: Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@k8s-node03:~# nerdctl pull harbor.ik8s.cc/baseimages/nginx:v1

WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"

harbor.ik8s.cc/baseimages/nginx:v1: resolved |++++++++++++++++++++++++++++++++++++++|

index-sha256:c45a31532f8fcd4db2302631bc1644322aa43c396fabbf3f9e9038ff09688c26: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:f2fee5c7194cbbfb9d2711fa5de094c797a42a51aa42b0c8ee8ca31547c872b1: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:6efc10a0510f143a90b69dc564a914574973223e88418d65c1f8809e08dc0a1f: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:75576236abf5959ff23b741ed8c4786e244155b9265db5e6ecda9d8261de529f: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:2019c71d56550b97ce01e0b6ef8e971fec705186f2927d2cb109ac3e18edb0ac: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:26c5c85e47da3022f1bdb9a112103646c5c29517d757e95426f16e4bd9533405: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:8c767bdbc9aedd4bbf276c6f28aad18251cceacb768967c5702974ae1eac23cd: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:4f3256bdf66bf00bcec08043e67a80981428f0e0de12f963eac3c753b14d101d: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:78e14bb05fd35b58587cd0c5ca2c2eb12b15031633ec30daa21c0ea3d2bb2a15: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 7.3 s total: 54.4 M (7.4 MiB/s)

root@k8s-node03:~# nerdctl images

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

harbor.ik8s.cc/baseimages/nginx v1 c45a31532f8f 5 seconds ago linux/amd64 149.8 MiB 54.4 MiB

<none> <none> c45a31532f8f 5 seconds ago linux/amd64 149.8 MiB 54.4 MiB

root@k8s-node03:~#

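The tests above show that our Harbor registry can both push and pull images. This completes the HTTPS Harbor registry built with a free certificate from a commercial CA.

4. Deploy a highly available Kubernetes cluster with kubeasz

Initialize the deployment environment on the deploy node

This time we use the kubeasz project to deploy a highly available k8s cluster from binaries; project URL: https://github.com/easzlab/kubeasz. The project automates the deployment with ansible-playbook and provides a one-click install script, or you can install the components step by step. The deploy node therefore needs ansible installed first. kubeasz also uses docker to download the images and binaries needed during the k8s deployment, so the deploy node needs docker as well; if docker is not installed, the script installs it for you automatically.

Configure the Docker apt repository on the deploy node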

root@deploy:~# apt-get update && apt-get -y install apt-transport-https ca-certificates curl software-properties-common && curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add - && add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable" && apt-get -y update

Install ansible and docker on the deploy node

root@deploy:~# apt-cache madison ansible docker-ce

ansible | 2.10.7+merged+base+2.10.8+dfsg-1 | http://mirrors.aliyun.com/ubuntu jammy/universe amd64 Packages

ansible | 2.10.7+merged+base+2.10.8+dfsg-1 | http://mirrors.aliyun.com/ubuntu jammy/universe Sources

docker-ce | 5:23.0.4-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:23.0.3-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:23.0.2-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:23.0.1-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:23.0.0-1~ubuntu.22.04~jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.24~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.23~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.22~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.21~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.20~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.19~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.18~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.17~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.16~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.15~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.14~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

docker-ce | 5:20.10.13~3-0~ubuntu-jammy | https://mirrors.aliyun.com/docker-ce/linux/ubuntu jammy/stable amd64 Packages

root@deploy:~# apt install ansible docker-ce -y

Install sshpass on the deploy node; it is used to push the public key to every k8s server

root@deploy:~# apt install sshpass -y

Generate a key pair on the deploy node

root@deploy:~# ssh-keygen -t rsa-sha2-512 -b 4096

Generating public/private rsa-sha2-512 key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa

Your public key has been saved in /root/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:uZ7jOnS/r0FNsPRpvvachoFwrUo2X0wbJ2Ve/wm596I [email protected]

The key's randomart image is:

+---[RSA 4096]----+

| o |

| . + .o .|

| ..=+...|

| ...==oo .|

| So.=o=o o|

| . =oo =o o.|

| . +.=..oo. .|

| ..o.oo.oo..|

| .++.o+Eo+. |

+----[SHA256]-----+

root@deploy:~#

Write a script that distributes the public key

root@k8s-deploy:~# cat pub-key-scp.sh

#!/bin/bash

# List of target hosts

HOSTS="

192.168.0.31

192.168.0.32

192.168.0.33

192.168.0.34

192.168.0.35

192.168.0.36

192.168.0.37

192.168.0.38

192.168.0.39

"

REMOTE_PORT="22"

REMOTE_USER="root"

REMOTE_PASS="admin"

for REMOTE_HOST in ${HOSTS};do

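# Add the target host's host key to known_hosts

ssh-keyscan -p "${REMOTE_PORT}" "${REMOTE_HOST}" >> ~/.ssh/known_hosts

# Copy our public key with sshpass for passwordless login, then create the python -> python3 symlink (-f keeps re-runs from failing)

sshpass -p "${REMOTE_PASS}" ssh-copy-id -p "${REMOTE_PORT}" "${REMOTE_USER}@${REMOTE_HOST}"

ssh -p "${REMOTE_PORT}" "${REMOTE_USER}@${REMOTE_HOST}" ln -sfv /usr/bin/python3 /usr/bin/python

echo "${REMOTE_HOST}: passwordless login configured!"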

done

root@k8s-deploy:~#

Run the script to distribute the SSH public key to the master, node and etcd nodes for passwordless login

root@deploy:~# sh pub-key-scp.sh

Verify: from the deploy node, ssh to any host in the k8s cluster and check that you can log in without a password.

root@k8s-deploy:~# ssh 192.168.0.33

Welcome to Ubuntu 22.04.2 LTS (GNU/Linux 5.15.0-70-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

Last login: Sat Apr 22 11:45:25 2023 from 192.168.0.232

root@k8s-master03:~# exit

logout

Connection to 192.168.0.33 closed.

root@k8s-deploy:~#

Tip: if you can log in to the host without a password, the script set up passwordless login correctly.
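To check all nine hosts at once instead of logging in to them one by one, ssh's `BatchMode=yes` option makes it fail rather than prompt for a password. A sketch; the `check_ssh` helper and the overridable `SSH_CMD` variable (useful for testing) are assumptions, and the host list is the one from pub-key-scp.sh.

```bash
#!/bin/sh
# Sketch: confirm key-based SSH works on every host without a password prompt.
HOSTS="${HOSTS:-192.168.0.31 192.168.0.32 192.168.0.33 192.168.0.34 192.168.0.35 192.168.0.36 192.168.0.37 192.168.0.38 192.168.0.39}"
SSH_CMD="${SSH_CMD:-ssh}"   # overridable, e.g. with a stub in tests

check_ssh() {
    failed=""
    for host in $HOSTS; do
        # BatchMode=yes: fail instead of asking for a password.
        # </dev/null: keep ssh from reading this script's stdin.
        if $SSH_CMD -o BatchMode=yes -o ConnectTimeout=5 "root@${host}" true </dev/null; then
            echo "ok     ${host}"
        else
            echo "FAILED ${host}"
            failed="${failed} ${host}"
        fi
    done
    [ -z "$failed" ]   # non-zero exit if any host failed
}
```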

Download the kubeasz installation script

root@deploy:~# apt install git -y

root@deploy:~# export release=3.5.2

root@deploy:~# wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

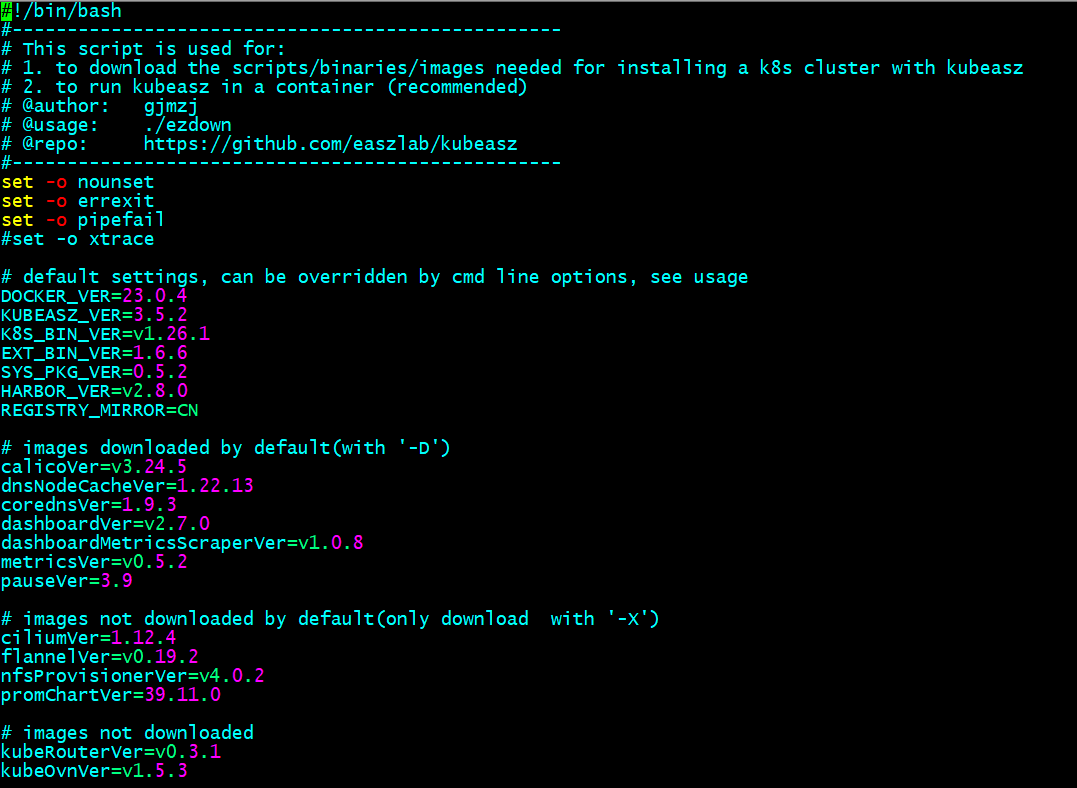

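Edit ezdown

Tip: editing the ezdown script mainly means setting the versions of the components it downloads; customize them for your environment.

Make the script executable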

root@k8s-deploy:~# chmod a+x ezdown

root@k8s-deploy:~# ll ezdown

-rwxr-xr-x 1 root root 25433 Feb 9 15:11 ezdown*

root@k8s-deploy:~#

Run the script to download the kubeasz project and its components

root@deploy:~# ./ezdown -D

Tip: the ezdown script downloads images, binary tools and so on, and places the binaries and the kubeasz project under /etc/kubeasz/.

root@k8s-deploy:~# ll /etc/kubeasz/

total 140

drwxrwxr-x 13 root root 4096 Apr 22 07:59 ./

drwxr-xr-x 83 root root 4096 Apr 22 11:53 ../

drwxrwxr-x 3 root root 4096 Feb 9 15:14 .github/

-rw-rw-r-- 1 root root 301 Feb 9 14:50 .gitignore

-rw-rw-r-- 1 root root 5556 Feb 9 14:50 README.md

-rw-rw-r-- 1 root root 20304 Feb 9 14:50 ansible.cfg

drwxr-xr-x 3 root root 4096 Apr 22 07:50 bin/

drwxr-xr-x 3 root root 4096 Apr 22 07:59 clusters/

drwxrwxr-x 8 root root 4096 Feb 9 15:14 docs/

drwxr-xr-x 2 root root 4096 Apr 22 07:59 down/

drwxrwxr-x 2 root root 4096 Feb 9 15:14 example/

-rwxrwxr-x 1 root root 26174 Feb 9 14:50 ezctl*

-rwxrwxr-x 1 root root 25433 Feb 9 14:50 ezdown*

drwxrwxr-x 10 root root 4096 Feb 9 15:14 manifests/

drwxrwxr-x 2 root root 4096 Feb 9 15:14 pics/

drwxrwxr-x 2 root root 4096 Apr 22 08:07 playbooks/

drwxrwxr-x 22 root root 4096 Feb 9 15:14 roles/

drwxrwxr-x 2 root root 4096 Feb 9 15:14 tools/

root@k8s-deploy:~#

View the ezctl help

root@deploy:~# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# ./ezctl --help

Usage: ezctl COMMAND [args]

-------------------------------------------------------------------------------------

Cluster setups:

list to list all of the managed clusters

checkout <cluster> to switch default kubeconfig of the cluster

new <cluster> to start a new k8s deploy with name 'cluster'

setup <cluster> <step> to setup a cluster, also supporting a step-by-step way

start <cluster> to start all of the k8s services stopped by 'ezctl stop'

stop <cluster> to stop all of the k8s services temporarily

upgrade <cluster> to upgrade the k8s cluster

destroy <cluster> to destroy the k8s cluster

backup <cluster> to backup the cluster state (etcd snapshot)

restore <cluster> to restore the cluster state from backups

start-aio to quickly setup an all-in-one cluster with default settings

Cluster ops:

add-etcd <cluster> <ip> to add a etcd-node to the etcd cluster

add-master <cluster> <ip> to add a master node to the k8s cluster

add-node <cluster> <ip> to add a work node to the k8s cluster

del-etcd <cluster> <ip> to delete a etcd-node from the etcd cluster

del-master <cluster> <ip> to delete a master node from the k8s cluster

del-node <cluster> <ip> to delete a work node from the k8s cluster

Extra operation:

kca-renew <cluster> to force renew CA certs and all the other certs (with caution)

kcfg-adm <cluster> <args> to manage client kubeconfig of the k8s cluster

Use "ezctl help <command>" for more information about a given command.

root@deploy:/etc/kubeasz#

Use ezctl to generate the cluster config file and hosts file

root@k8s-deploy:~# cd /etc/kubeasz/

root@k8s-deploy:/etc/kubeasz# ./ezctl new k8s-cluster01

2023-04-22 13:27:51 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-cluster01

2023-04-22 13:27:51 DEBUG set versions

2023-04-22 13:27:51 DEBUG disable registry mirrors

2023-04-22 13:27:51 DEBUG cluster k8s-cluster01: files successfully created.

2023-04-22 13:27:51 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-cluster01/hosts'

2023-04-22 13:27:51 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-cluster01/config.yml'

root@k8s-deploy:/etc/kubeasz#

Edit the ansible hosts file

root@k8s-deploy:/etc/kubeasz# cat /etc/kubeasz/clusters/k8s-cluster01/hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

192.168.0.37

192.168.0.38

192.168.0.39

# master node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

[kube_master]

192.168.0.31 k8s_nodename='192.168.0.31'

192.168.0.32 k8s_nodename='192.168.0.32'

#192.168.0.33 k8s_nodename='192.168.0.33'

# work node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

[kube_node]

192.168.0.34 k8s_nodename='192.168.0.34'

192.168.0.35 k8s_nodename='192.168.0.35'

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-32767"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/local/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-cluster01"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

# Default 'k8s_nodename' is empty

k8s_nodename=''

root@k8s-deploy:/etc/kubeasz#

Tip: the hosts file above mainly specifies the etcd, master and node hosts, the VIP, the container runtime, the network plugin type, and the Service and pod IP ranges.
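The comment at the top of the hosts file asks for an odd number of etcd members (1, 3, 5, ...), because etcd needs a majority of members to commit a write and an even count adds no extra fault tolerance. A small sketch that counts the entries of an inventory group and checks that rule; `count_group` and `check_etcd_odd` are hypothetical helpers that assume the simple one-host-per-line INI layout shown above.

```bash
#!/bin/sh
# Sketch: count hosts in an Ansible INI group and check that [etcd] is odd.
# Assumes one host per line, '#' comments, and that a group ends at the
# next [section] header.
INVENTORY="${INVENTORY:-/etc/kubeasz/clusters/k8s-cluster01/hosts}"

count_group() {
    awk -v group="[$2]" '
        /^[ \t]*\[/ { in_group = ($1 == group); next }        # section header
        in_group && $0 !~ /^[ \t]*#/ && $0 !~ /^[ \t]*$/ { n++ } # host line
        END { print n + 0 }
    ' "$1"
}

check_etcd_odd() {
    n="$(count_group "$1" etcd)"
    if [ "$n" -gt 0 ] && [ $((n % 2)) -eq 1 ]; then
        echo "etcd members: $n (ok)"
    else
        echo "etcd members: $n (should be an odd number: 1, 3, 5, ...)" >&2
        return 1
    fi
}
```

Run against the hosts file above, `check_etcd_odd "$INVENTORY"` should report three members.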

Edit the cluster config.yml file

root@k8s-deploy:/etc/kubeasz# cat /etc/kubeasz/clusters/k8s-cluster01/config.yml

############################

# prepare

############################

# optionally install system packages offline (offline|online)

INSTALL_SOURCE: "online"

# optionally apply OS security hardening github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

# force to recreate CA and other certs, not suggested to set 'true'

CHANGE_CA: false

# kubeconfig parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version

K8S_VER: "1.26.1"

# set unique 'k8s_nodename' for each node, if not set(default:'') ip add will be used

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character (e.g. 'example.com'),

# regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'

K8S_NODENAME: "{%- if k8s_nodename != '' -%} \

{{ k8s_nodename|replace('_', '-')|lower }} \

{%- else -%} \

{{ inventory_hostname }} \

{%- endif -%}"

############################

# role:etcd

############################

# setting a separate WAL directory avoids disk I/O contention and improves performance

ETCD_DATA_DIR: "/var/lib/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.]enable registry mirrors

ENABLE_MIRROR_REGISTRY: true

# [containerd]sandbox (pause) container image

SANDBOX_IMAGE: "harbor.ik8s.cc/baseimages/pause:3.9"

# [containerd]persistent container storage directory

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker]container storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker]enable the RESTful API

ENABLE_REMOTE_API: false

# [docker]trusted HTTP registries

INSECURE_REG: '["http://easzlab.io.local:5000"]'

############################

# role:kube-master

############################

# master node certificate hosts for the k8s cluster; several IPs and domain names can be added (e.g. a public IP and domain)

MASTER_CERT_HOSTS:

- "192.168.0.111"

- "kubeapi.ik8s.cc"

#- "www.test.com"

# prefix length of the pod subnet on each node (determines how many pod IPs each node can allocate)

# if flannel runs with --kube-subnet-mgr, it reads this setting to assign each node its pod subnet

# https://github.com/coreos/flannel/issues/847

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

# Kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# maximum number of pods per node

MAX_PODS: 200

# reserve resources for kube components (kubelet, kube-proxy, dockerd, etc.)

# see templates/kubelet-config.yaml.j2 for the values

KUBE_RESERVED_ENABLED: "no"

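# upstream k8s advises against enabling system-reserved casually, unless long-term monitoring tells you the system's real resource usage;

# the reservation also needs to grow as the system keeps running; see templates/kubelet-config.yaml.j2 for the values

# the system reservation is sized for a 4c/8g VM with a minimal set of system services; increase it on high-performance physical machines

# also, apiserver and other components use noticeably more resources for a short time during installation, so reserve at least 1G of memory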

SYS_RESERVED_ENABLED: "no"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel]flannel backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel]

flannel_ver: "v0.19.2"

# ------------------------------------------- calico

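# [calico] IPIP tunnel mode options: [Always, CrossSubnet, Never]; across subnets use Always or CrossSubnet (on public clouds Always is the least hassle; otherwise you have to change each cloud's network settings, see the provider's documentation)

# CrossSubnet is a hybrid tunnel + BGP routing mode that improves network performance; within a single subnet, Never is enough.

CALICO_IPV4POOL_IPIP: "Always"

# [calico]host IP used by calico-node; BGP peerings are established over this address, which can be set manually or auto-detected

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico]calico networking backend: bird, vxlan, none

CALICO_NETWORKING_BACKEND: "bird"

# [calico]whether calico uses route reflectors

# recommended when the cluster has more than 50 nodes

CALICO_RR_ENABLED: false

# CALICO_RR_NODES sets the route reflector nodes; if unset, the cluster's master nodes are used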

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: []

# [calico]supported calico versions: ["3.19", "3.23"]

calico_ver: "v3.24.5"

# [calico]calico major version

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium

# [cilium]image version

cilium_ver: "1.12.4"

cilium_connectivity_check: true

cilium_hubble_enabled: false

cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn

# [kube-ovn]node for the OVN DB and OVN control plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn]offline image tarball

kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router

# [kube-router]public clouds have restrictions, so ipinip usually has to stay enabled; in your own environment it can be set to "subnet"

OVERLAY_TYPE: "full"

# [kube-router]toggle NetworkPolicy support

FIREWALL_ENABLE: true

# [kube-router]kube-router image version

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

############################

# role:cluster-addon

############################

# install coredns automatically

dns_install: "no"

corednsVer: "1.9.3"

ENABLE_LOCAL_DNS_CACHE: false

dnsNodeCacheVer: "1.22.13"

# local dns cache address

LOCAL_DNS_CACHE: "169.254.20.10"

# install metrics server automatically

metricsserver_install: "no"

metricsVer: "v0.5.2"

# install dashboard automatically

dashboard_install: "no"

dashboardVer: "v2.7.0"

dashboardMetricsScraperVer: "v1.0.8"

# install prometheus automatically

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "39.11.0"

# install nfs-provisioner automatically

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.2"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

# install network-check automatically

network_check_enabled: false

network_check_schedule: "*/5 * * * *"

############################

# role:harbor

############################

# harbor version, full version number

HARBOR_VER: "v2.6.3"

HARBOR_DOMAIN: "harbor.easzlab.io.local"

HARBOR_PATH: /var/data

HARBOR_TLS_PORT: 8443

HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CHARTMUSEUM: true

root@k8s-deploy:/etc/kubeasz#

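Tip: the config file above mainly defines the CA and certificate lifetimes, the kubeconfig parameters, the k8s version, the etcd data directory, the container runtime parameters, the master certificate hosts, the prefix length of each node's pod subnet, the kubelet root directory, the maximum number of pods per node, the network plugin parameters, and which cluster add-ons to install automatically.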
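A quick sanity check on the sizing values. Assuming per-node pod ranges are carved out of CLUSTER_CIDR using NODE_CIDR_LEN (the flannel `--kube-subnet-mgr` case mentioned in the config comment; Calico's own IPAM allocates in its own block size instead), the two prefix lengths fix how many nodes and how many pod addresses per node are available, and MAX_PODS should not exceed the latter. The helper names are hypothetical.

```bash
#!/bin/sh
# Sketch: capacity implied by CLUSTER_CIDR, NODE_CIDR_LEN and MAX_PODS.
CLUSTER_CIDR="${CLUSTER_CIDR:-10.200.0.0/16}"
NODE_CIDR_LEN="${NODE_CIDR_LEN:-24}"
MAX_PODS="${MAX_PODS:-200}"

# $1 = cluster CIDR, $2 = per-node prefix length
cidr_capacity() {
    prefix="${1#*/}"                           # "10.200.0.0/16" -> 16
    max_nodes=$((1 << ($2 - prefix)))          # number of per-node subnets
    addrs_per_node=$(((1 << (32 - $2)) - 2))   # minus network/broadcast
    echo "max_nodes=${max_nodes} addrs_per_node=${addrs_per_node}"
}

# $1 = per-node prefix length, $2 = max pods per node
check_max_pods() {
    addrs=$(((1 << (32 - $1)) - 2))
    if [ "$2" -le "$addrs" ]; then
        echo "MAX_PODS=$2 fits in a /$1 per node (${addrs} addresses)"
    else
        echo "MAX_PODS=$2 exceeds the ${addrs} addresses of a /$1" >&2
        return 1
    fi
}
```

With this article's values, `cidr_capacity "$CLUSTER_CIDR" "$NODE_CIDR_LEN"` prints `max_nodes=256 addrs_per_node=254`, so MAX_PODS=200 fits.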

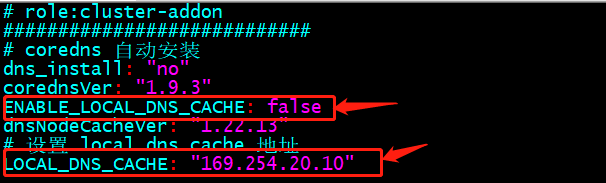

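Tip: note one thing here. Although we are not installing coredns automatically, these two variables still need to be set. If ENABLE_LOCAL_DNS_CACHE is true, set LOCAL_DNS_CACHE to the IP address of the corresponding coredns Service; if ENABLE_LOCAL_DNS_CACHE is false, it does not matter what address LOCAL_DNS_CACHE holds.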
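If you take the `ENABLE_LOCAL_DNS_CACHE: true` route, the address must be the ClusterIP your CoreDNS Service will actually get, which has to lie inside SERVICE_CIDR. The first address of the range is taken by the `kubernetes` Service; using the second one (10.100.0.2 for 10.100.0.0/16) is a common convention, but that is an assumption here, so check the `clusterIP` in the CoreDNS manifest you deploy later. A POSIX-shell sketch for computing the n-th address of a CIDR; the helper names are hypothetical.

```bash
#!/bin/sh
# Sketch: print the n-th address of an IPv4 CIDR (n=1 is the first host).

# "a.b.c.d" -> 32-bit integer
ip_to_int() {
    a="${1%%.*}"; rest="${1#*.}"
    b="${rest%%.*}"; rest="${rest#*.}"
    c="${rest%%.*}"; d="${rest#*.}"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> "a.b.c.d"
int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# $1 = CIDR, $2 = offset from the network address
cidr_nth() {
    base="$(ip_to_int "${1%/*}")"
    prefix="${1#*/}"
    size=$((1 << (32 - prefix)))
    if [ "$2" -ge "$size" ]; then
        echo "offset $2 is outside $1" >&2
        return 1
    fi
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    int_to_ip $(( (base & mask) + $2 ))
}
```

For example, `cidr_nth 10.100.0.0/16 2` prints `10.100.0.2`.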

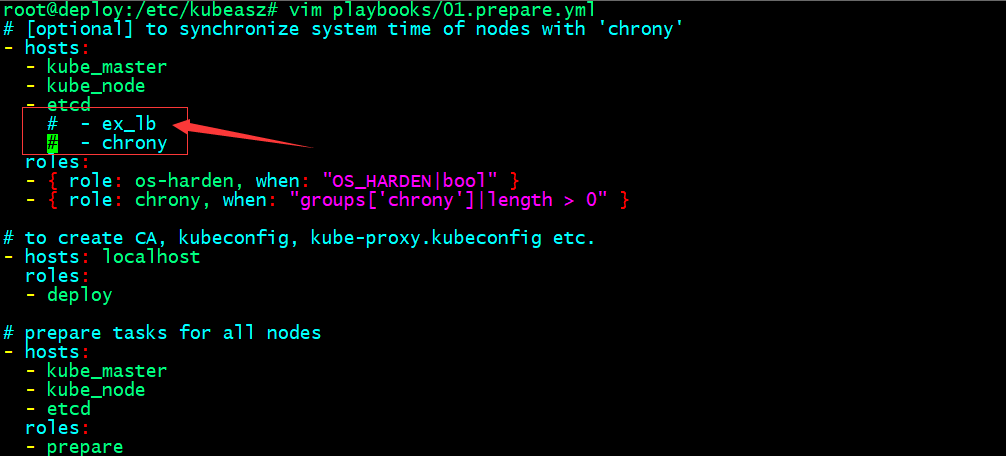

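Edit which hosts the base system initialization applies to

Tip: commenting out ex_lb and chrony above means we manage those two host groups ourselves and kubeasz does not need to initialize them; system initialization is performed only on the master, node and etcd nodes.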

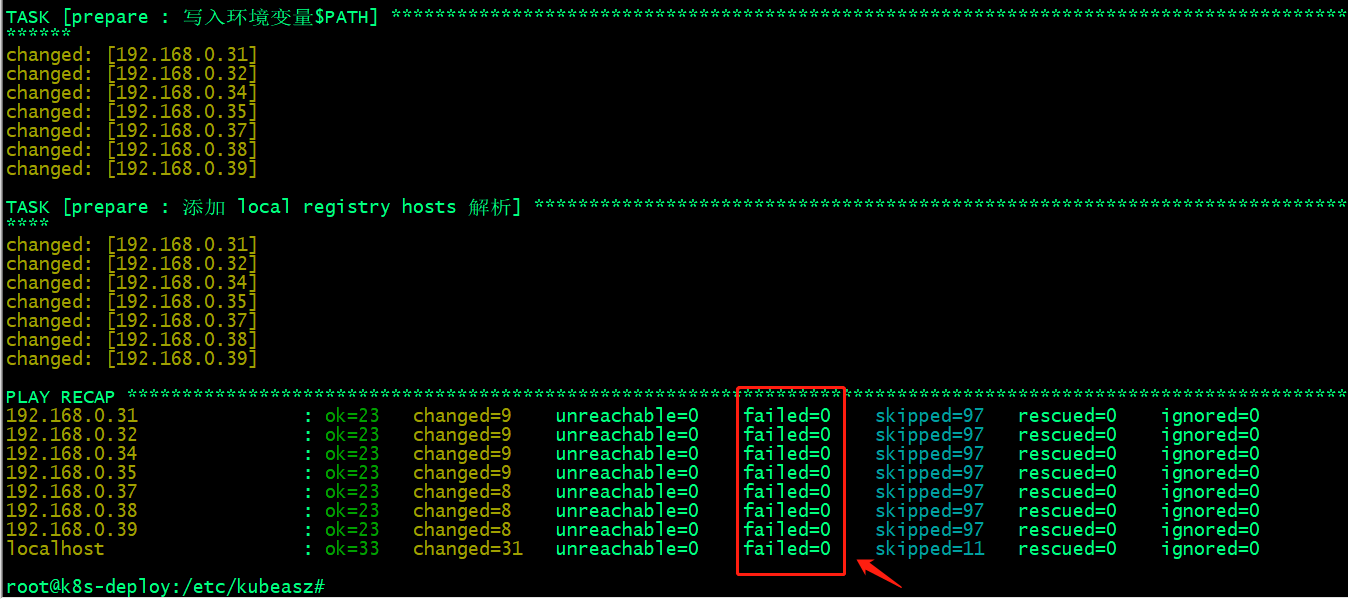

Prepare the CA and initialize the base environment

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster01 01

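Tip: if the play recap of this command shows failed=0 for every host, the environment on those nodes is ready and we can move on to step 2, deploying the etcd nodes.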

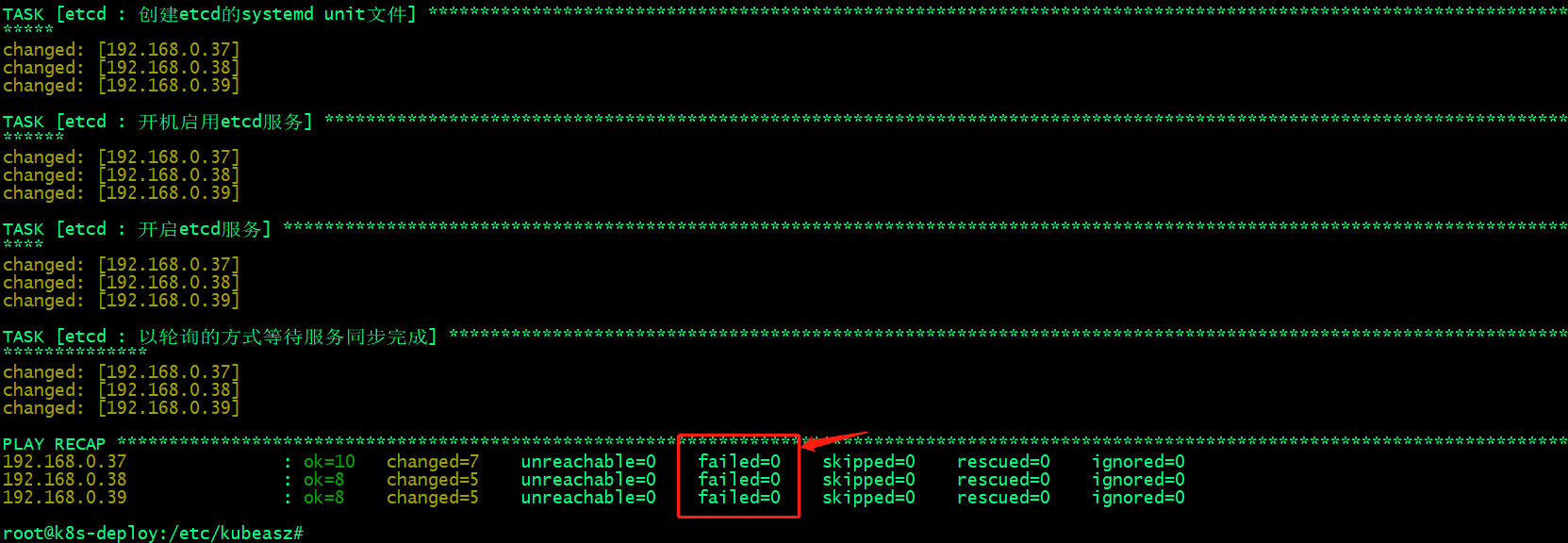

Deploy the etcd cluster

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster01 02

Tip: this step fails with an error saying /usr/bin/python cannot be found, so the information in /etc/kubeasz/clusters/k8s-cluster01/ssl/etcd-csr.json cannot be read.

Fix: on the deploy node, symlink /usr/bin/python3 to /usr/bin/python.

root@deploy:/etc/kubeasz# ln -sv /usr/bin/python3 /usr/bin/python

'/usr/bin/python' -> '/usr/bin/python3'

root@deploy:/etc/kubeasz#

Run the deployment step again

Check that the etcd cluster is healthy

root@k8s-etcd01:~# export NODE_IPS="192.168.0.37 192.168.0.38 192.168.0.39"

root@k8s-etcd01:~# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

https://192.168.0.37:2379 is healthy: successfully committed proposal: took = 32.64189ms

https://192.168.0.38:2379 is healthy: successfully committed proposal: took = 30.249623ms

https://192.168.0.39:2379 is healthy: successfully committed proposal: took = 32.747586ms

root@k8s-etcd01:~#

Tip: every endpoint reports healthy, which means the etcd cluster is working.
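The loop above checks each member separately. Passing all members in one `--endpoints` list and asking for `endpoint status --write-out=table` additionally shows which member is the leader and each member's DB size. A sketch; `join_endpoints` and `etcd_status_table` are hypothetical helper names, and the certificate paths are the ones used in the loop above.

```bash
#!/bin/sh
# Sketch: query all etcd members at once and print a status table.
NODE_IPS="${NODE_IPS:-192.168.0.37 192.168.0.38 192.168.0.39}"

# "a b c" -> "https://a:2379,https://b:2379,https://c:2379"
join_endpoints() {
    out=""
    for ip in $1; do
        out="${out:+${out},}https://${ip}:2379"
    done
    echo "$out"
}

etcd_status_table() {
    ETCDCTL_API=3 /usr/local/bin/etcdctl \
        --endpoints="$(join_endpoints "$NODE_IPS")" \
        --cacert=/etc/kubernetes/ssl/ca.pem \
        --cert=/etc/kubernetes/ssl/etcd.pem \
        --key=/etc/kubernetes/ssl/etcd-key.pem \
        endpoint status --write-out=table
}
```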

Deploy the container runtime containerd

Check the sandbox (pause) image setting

root@deploy:/etc/kubeasz# grep SANDBOX_IMAGE ./clusters/* -R

./clusters/k8s-cluster01/config.yml:SANDBOX_IMAGE: "harbor.ik8s.cc/baseimages/pause:3.9"

root@deploy:/etc/kubeasz#

Pull the pause image locally, retag it and push it to Harbor

root@deploy:/etc/kubeasz# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

3.9: Pulling from google_containers/pause

61fec91190a0: Already exists

Digest: sha256:7031c1b283388d2c2e09b57badb803c05ebed362dc88d84b480cc47f72a21097

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

root@deploy:/etc/kubeasz# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9 harbor.ik8s.cc/baseimages/pause:3.9

root@deploy:/etc/kubeasz# docker login harbor.ik8s.cc

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@deploy:/etc/kubeasz# docker push harbor.ik8s.cc/baseimages/pause:3.9

The push refers to repository [harbor.ik8s.cc/baseimages/pause]

e3e5579ddd43: Pushed

3.9: digest: sha256:0fc1f3b764be56f7c881a69cbd553ae25a2b5523c6901fbacb8270307c29d0c4 size: 526

root@deploy:/etc/kubeasz#

Configure name resolution for the Harbor registry domain (in a company environment, a DNS server would normally resolve it)

Tip: edit /etc/kubeasz/roles/containerd/tasks/main.yml and add the name-resolution task shown in the screenshot anywhere inside its block section.
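What that Ansible task has to achieve on each node can be sketched in plain shell as an idempotent hosts-file entry. The IP below is a placeholder from the documentation range (the Harbor server's address is not given in this section), and `add_hosts_entry` is a hypothetical helper; the optional third argument lets you rehearse against a scratch file instead of /etc/hosts.

```bash
#!/bin/sh
# Sketch: add "IP NAME" to a hosts file once; re-running changes nothing.
HARBOR_IP="${HARBOR_IP:-192.0.2.10}"          # placeholder: use your Harbor IP
HARBOR_NAME="${HARBOR_NAME:-harbor.ik8s.cc}"

# $1 = IP, $2 = hostname, $3 = hosts file (default /etc/hosts)
add_hosts_entry() {
    file="${3:-/etc/hosts}"
    # Exact hostname match on any non-comment line.
    if awk -v n="$2" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) f = 1 } END { exit !f }' "$file" 2>/dev/null; then
        echo "$2 already present in $file"
        return 0
    fi
    printf '%s %s\n' "$1" "$2" >> "$file"
    echo "added $1 $2 to $file"
}
```

On a node this would be `add_hosts_entry "$HARBOR_IP" "$HARBOR_NAME"`, run as root.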

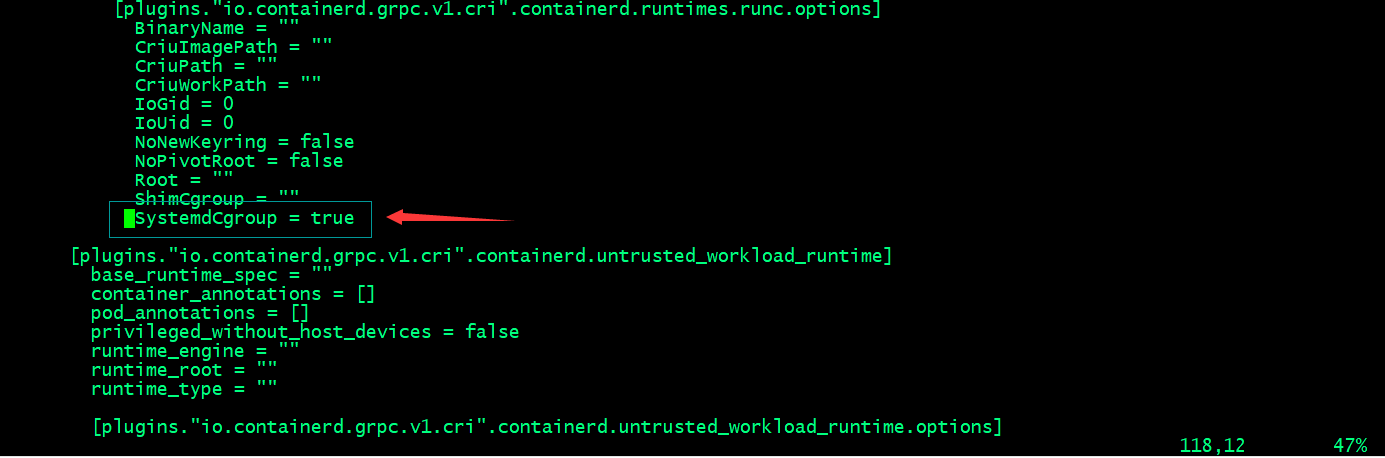

Edit /etc/kubeasz/roles/containerd/templates/config.toml.j2 to customize the containerd config file template;

Tip: on Ubuntu 22.04 this parameter must be set to true, otherwise k8s pods keep restarting; it should generally be kept consistent with the kubelet's setting.
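The screenshot is not reproduced in this text. Pods restarting in a loop on Ubuntu 22.04 is commonly a cgroup-driver mismatch, so the parameter is most likely `SystemdCgroup` in the runc options of containerd's CRI plugin, which should match a kubelet using the systemd cgroup driver; treat that identification as an assumption and compare it with your template. A sketch that flips the value in a rendered config.toml and checks it (in kubeasz, change the .j2 template as well, or the next run will overwrite the file):

```bash
#!/bin/sh
# Sketch: make containerd's runc runtime use the systemd cgroup driver.
# Assumption: the config contains a line like "SystemdCgroup = false".
CONTAINERD_CONFIG="${CONTAINERD_CONFIG:-/etc/containerd/config.toml}"

# Rewrite "SystemdCgroup = false" to "SystemdCgroup = true" in place (GNU sed).
enable_systemd_cgroup() {
    sed -i -E 's/^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false/\1true/' "$1"
}

# Succeed if the file already sets SystemdCgroup = true.
systemd_cgroup_enabled() {
    grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$1"
}
```

On a node: `enable_systemd_cgroup "$CONTAINERD_CONFIG" && systemctl restart containerd`.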

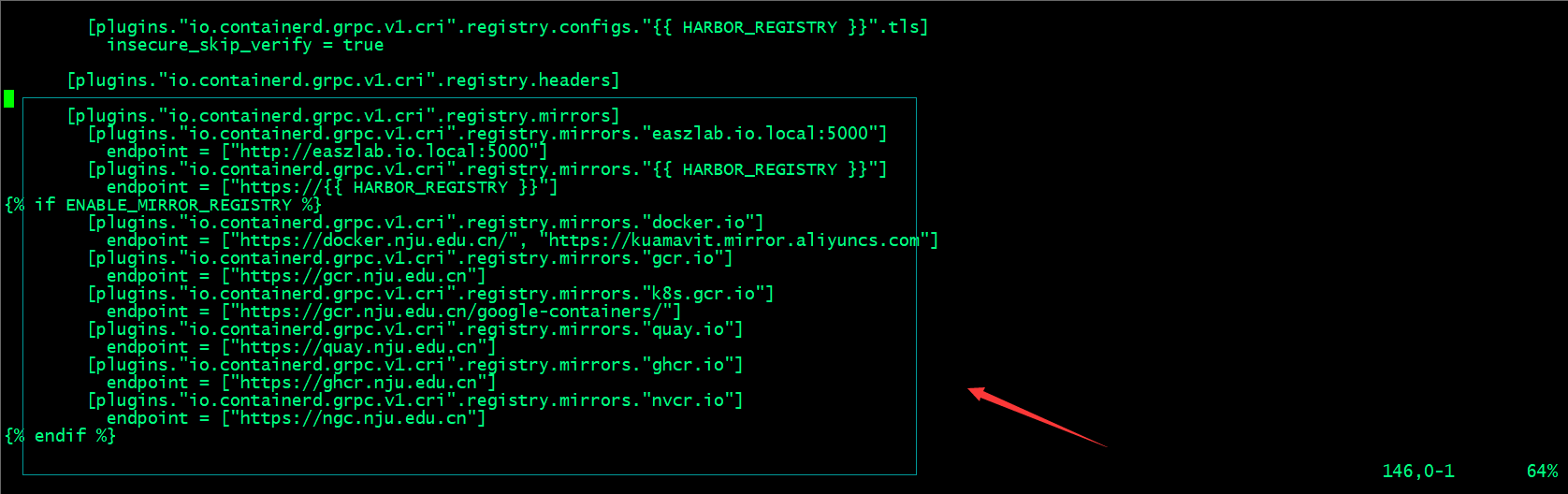

Tip: set the registry mirror (accelerator) addresses here to suit your environment;
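For reference, containerd's version 2 config format declares a registry mirror under the CRI plugin's `registry.mirrors` table (newer containerd releases prefer `config_path` with hosts.toml files, so compare with what your config.toml.j2 actually uses). The sketch only prints such a section; `mirror_section` is a hypothetical helper and `https://mirror.example.com` is a placeholder, not a real accelerator address.

```bash
#!/bin/sh
# Sketch: print a containerd (config version 2) CRI mirror section.
# Usage: mirror_section REGISTRY ENDPOINT [ENDPOINT...]
mirror_section() {
    registry="$1"; shift
    list=""
    for ep in "$@"; do
        list="${list:+${list}, }\"${ep}\""   # build a quoted, comma-separated list
    done
    printf '[plugins."io.containerd.grpc.v1.cri".registry.mirrors."%s"]\n' "$registry"
    printf '  endpoint = [%s]\n' "$list"
}
```

For example, `mirror_section docker.io https://mirror.example.com https://registry-1.docker.io` prints a two-line section with both endpoints, tried in order.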

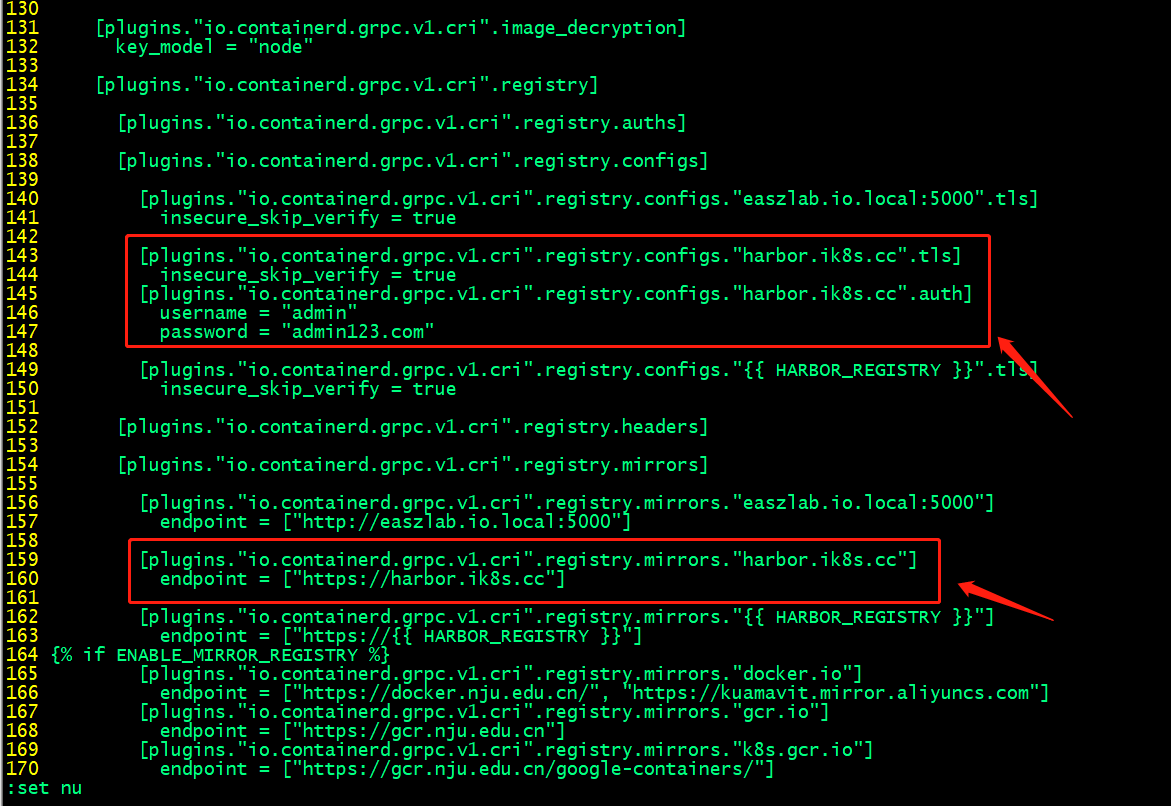

Configure pull credentials for private HTTPS/HTTP registries

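Tip: if your registry is a private HTTPS/HTTP registry (not public, i.e. pulling images requires a username and password), add the settings above; containerd will then use the username and password configured here when it pulls images from that registry.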
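In containerd's version 2 CRI config, such credentials live under `registry.configs."<host>".auth` (upstream containerd marks this section as deprecated, so check your version). A sketch that prints the section; `auth_section` is a hypothetical helper, the credentials are placeholders, and values containing `"` or `\` would need TOML escaping that this helper does not perform.

```bash
#!/bin/sh
# Sketch: print a containerd (config version 2) CRI auth section.
# Usage: auth_section HOST USERNAME PASSWORD
auth_section() {
    printf '[plugins."io.containerd.grpc.v1.cri".registry.configs."%s".auth]\n' "$1"
    printf '  username = "%s"\n' "$2"
    printf '  password = "%s"\n' "$3"
}
```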

Configure the nerdctl client

Tip: edit /etc/kubeasz/roles/containerd/tasks/main.yml and add the nerdctl-related tasks;

On the deploy node, prepare the nerdctl binaries, their dependencies and the config file

root@k8s-deploy:/etc/kubeasz/bin/containerd-bin# ll

total 184572

drwxr-xr-x 2 root root 4096 Jan 26 01:51 ./

drwxr-xr-x 3 root root 4096 Apr 22 13:17 ../

-rwxr-xr-x 1 root root 51529720 Dec 19 16:53 containerd*

-rwxr-xr-x 1 root root 7254016 Dec 19 16:53 containerd-shim*

-rwxr-xr-x 1 root root 9359360 Dec 19 16:53 containerd-shim-runc-v1*

-rwxr-xr-x 1 root root 9375744 Dec 19 16:53 containerd-shim-runc-v2*

-rwxr-xr-x 1 root root 22735256 Dec 19 16:53 containerd-stress*

-rwxr-xr-x 1 root root 52586151 Dec 14 07:20 crictl*

-rwxr-xr-x 1 root root 26712216 Dec 19 16:53 ctr*

-rwxr-xr-x 1 root root 9431456 Aug 25 2022 runc*

root@k8s-deploy:/etc/kubeasz/bin/containerd-bin# tar xf /root/nerdctl-1.3.0-linux-amd64.tar.gz -C .

root@k8s-deploy:/etc/kubeasz/bin/containerd-bin# ll

total 208940

drwxr-xr-x 2 root root 4096 Apr 22 14:00 ./

drwxr-xr-x 3 root root 4096 Apr 22 13:17 ../

-rwxr-xr-x 1 root root 51529720 Dec 19 16:53 containerd*

-rwxr-xr-x 1 root root 21622 Apr 5 12:21 containerd-rootless-setuptool.sh*

-rwxr-xr-x 1 root root 7032 Apr 5 12:21 containerd-rootless.sh*

-rwxr-xr-x 1 root root 7254016 Dec 19 16:53 containerd-shim*

-rwxr-xr-x 1 root root 9359360 Dec 19 16:53 containerd-shim-runc-v1*

-rwxr-xr-x 1 root root 9375744 Dec 19 16:53 containerd-shim-runc-v2*

-rwxr-xr-x 1 root root 22735256 Dec 19 16:53 containerd-stress*

-rwxr-xr-x 1 root root 52586151 Dec 14 07:20 crictl*

-rwxr-xr-x 1 root root 26712216 Dec 19 16:53 ctr*

-rwxr-xr-x 1 root root 24920064 Apr 5 12:22 nerdctl*

-rwxr-xr-x 1 root root 9431456 Aug 25 2022 runc*

root@k8s-deploy:/etc/kubeasz/bin/containerd-bin# cat /etc/kubeasz/roles/containerd/templates/nerdctl.toml.j2

namespace = "k8s.io"

debug = false

debug_full = false

insecure_registry = true

root@k8s-deploy:/etc/kubeasz/bin/containerd-bin#

Tip: once the nerdctl files are in place, the containerd runtime deployment task can be run.
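The nerdctl tasks themselves are only shown as a screenshot. What they need to do on each node can be sketched in shell, assuming the binaries from the nerdctl-1.3.0 tarball unpacked above and that rootful nerdctl reads `/etc/nerdctl/nerdctl.toml`. The `k8s.io` namespace makes nerdctl see the images the kubelet pulls, and `insecure_registry = true` is why the nerdctl outputs in this article print `skipping verifying HTTPS certs`. `install_nerdctl` is a hypothetical helper; `DESTDIR` lets you rehearse into a scratch directory and should be empty on a real node.

```bash
#!/bin/sh
# Sketch: install nerdctl and its config the way the added tasks would.
# $1 = directory holding nerdctl and the containerd-rootless*.sh scripts
install_nerdctl() {
    src="$1"
    dest="${DESTDIR:-}"
    mkdir -p "${dest}/usr/local/bin" "${dest}/etc/nerdctl"
    for f in nerdctl containerd-rootless.sh containerd-rootless-setuptool.sh; do
        install -m 0755 "${src}/${f}" "${dest}/usr/local/bin/${f}"
    done
    # Same content as roles/containerd/templates/nerdctl.toml.j2 above.
    cat > "${dest}/etc/nerdctl/nerdctl.toml" <<'EOF'
namespace = "k8s.io"
debug = false
debug_full = false
insecure_registry = true
EOF
}
```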

Run the containerd runtime deployment

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster01 03

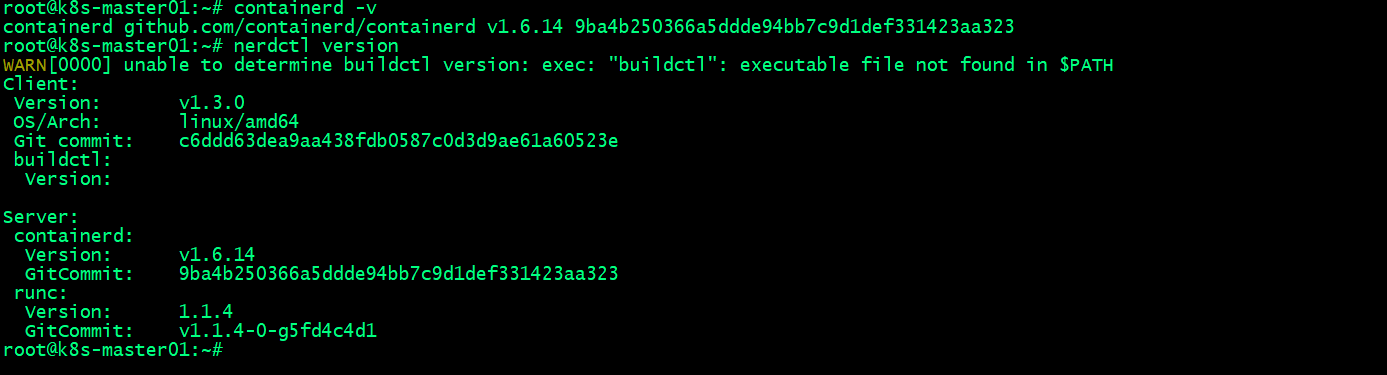

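Verify: on a node or master host, check the containerd and nerdctl version information

Tip: if the containerd and nerdctl versions can be displayed on the master or node hosts, the containerd runtime has been deployed.

Test: pull an image with nerdctl on a master node and check that the download works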

root@k8s-master01:~# nerdctl pull ubuntu:22.04

WARN[0000] skipping verifying HTTPS certs for "docker.io"

docker.io/library/ubuntu:22.04: resolved |++++++++++++++++++++++++++++++++++++++|

index-sha256:67211c14fa74f070d27cc59d69a7fa9aeff8e28ea118ef3babc295a0428a6d21: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:7a57c69fe1e9d5b97c5fe649849e79f2cfc3bf11d10bbd5218b4eb61716aebe6: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:08d22c0ceb150ddeb2237c5fa3129c0183f3cc6f5eeb2e7aa4016da3ad02140a: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:2ab09b027e7f3a0c2e8bb1944ac46de38cebab7145f0bd6effebfe5492c818b6: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 28.4s total: 28.2 M (1015.6 KiB/s)

root@k8s-master01:~# nerdctl images

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

ubuntu 22.04 67211c14fa74 6 seconds ago linux/amd64 83.4 MiB 28.2 MiB

<none> <none> 67211c14fa74 6 seconds ago linux/amd64 83.4 MiB 28.2 MiB

root@k8s-master01:~#

Test: log in to the Harbor registry from a master node

root@k8s-master01:~# nerdctl login harbor.ik8s.cc

Enter Username: admin

Enter Password:

WARN[0005] skipping verifying HTTPS certs for "harbor.ik8s.cc"

WARNING: Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@k8s-master01:~#

Test: push an image from the master node to Harbor and check that it works

root@k8s-master01:~# nerdctl pull ubuntu:22.04

WARN[0000] skipping verifying HTTPS certs for "docker.io"

docker.io/library/ubuntu:22.04: resolved |++++++++++++++++++++++++++++++++++++++|

index-sha256:67211c14fa74f070d27cc59d69a7fa9aeff8e28ea118ef3babc295a0428a6d21: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:7a57c69fe1e9d5b97c5fe649849e79f2cfc3bf11d10bbd5218b4eb61716aebe6: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:08d22c0ceb150ddeb2237c5fa3129c0183f3cc6f5eeb2e7aa4016da3ad02140a: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:2ab09b027e7f3a0c2e8bb1944ac46de38cebab7145f0bd6effebfe5492c818b6: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 22.3s total: 28.2 M (1.3 MiB/s)

root@k8s-master01:~# nerdctl login harbor.ik8s.cc

WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"

WARNING: Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@k8s-master01:~# nerdctl tag ubuntu:22.04 harbor.ik8s.cc/baseimages/ubuntu:22.04

root@k8s-master01:~# nerdctl push harbor.ik8s.cc/baseimages/ubuntu:22.04

INFO[0000] pushing as a reduced-platform image (application/vnd.oci.image.index.v1+json, sha256:730821bd93846fe61e18dd16cb476ef8d11489bab10a894e8acc7eb0405cc68e)

WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"

index-sha256:730821bd93846fe61e18dd16cb476ef8d11489bab10a894e8acc7eb0405cc68e: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:7a57c69fe1e9d5b97c5fe649849e79f2cfc3bf11d10bbd5218b4eb61716aebe6: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:08d22c0ceb150ddeb2237c5fa3129c0183f3cc6f5eeb2e7aa4016da3ad02140a: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 0.5 s total: 2.9 Ki (5.9 KiB/s)

root@k8s-master01:~#

Test: log in to Harbor from a node and pull the ubuntu:22.04 image we just pushed. Does the download succeed?

```
root@k8s-node01:~# nerdctl images
REPOSITORY    TAG    IMAGE ID    CREATED    PLATFORM    SIZE    BLOB SIZE
root@k8s-node01:~# nerdctl login harbor.ik8s.cc
WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"
WARNING: Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
root@k8s-node01:~# nerdctl pull harbor.ik8s.cc/baseimages/ubuntu:22.04
WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"
harbor.ik8s.cc/baseimages/ubuntu:22.04: resolved |++++++++++++++++++++++++++++++++++++++|
index-sha256:730821bd93846fe61e18dd16cb476ef8d11489bab10a894e8acc7eb0405cc68e: done |++++++++++++++++++++++++++++++++++++++|
manifest-sha256:7a57c69fe1e9d5b97c5fe649849e79f2cfc3bf11d10bbd5218b4eb61716aebe6: done |++++++++++++++++++++++++++++++++++++++|
config-sha256:08d22c0ceb150ddeb2237c5fa3129c0183f3cc6f5eeb2e7aa4016da3ad02140a: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:2ab09b027e7f3a0c2e8bb1944ac46de38cebab7145f0bd6effebfe5492c818b6: done |++++++++++++++++++++++++++++++++++++++|
elapsed: 4.7 s total: 28.2 M (6.0 MiB/s)
root@k8s-node01:~# nerdctl images
REPOSITORY                          TAG       IMAGE ID        CREATED          PLATFORM       SIZE        BLOB SIZE
harbor.ik8s.cc/baseimages/ubuntu    22.04     730821bd9384    5 seconds ago    linux/amd64    83.4 MiB    28.2 MiB
<none>                              <none>    730821bd9384    5 seconds ago    linux/amd64    83.4 MiB    28.2 MiB
root@k8s-node01:~#
```

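Note: if nerdctl on the master and node hosts can push images to Harbor and pull them back to the local host, the containerd runtime we deployed is working correctly. Next, we can deploy the k8s master nodes.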

Deploying the k8s master nodes

```
root@deploy:/etc/kubeasz# cat roles/kube-master/tasks/main.yml
- name: Download kube_master binaries
  copy: src={{ base_dir }}/bin/{{ item }} dest={{ bin_dir }}/{{ item }} mode=0755
  with_items:
  - kube-apiserver
  - kube-controller-manager
  - kube-scheduler
  - kubectl
  tags: upgrade_k8s

- name: Distribute the controller/scheduler kubeconfig files
  copy: src={{ cluster_dir }}/{{ item }} dest=/etc/kubernetes/{{ item }}
  with_items:
  - kube-controller-manager.kubeconfig
  - kube-scheduler.kubeconfig
  tags: force_change_certs

- name: Create the kubernetes certificate signing request
  template: src=kubernetes-csr.json.j2 dest={{ cluster_dir }}/ssl/kubernetes-csr.json
  tags: change_cert, force_change_certs
  connection: local

- name: Create the kubernetes certificate and private key
  shell: "cd {{ cluster_dir }}/ssl && {{ base_dir }}/bin/cfssl gencert \
        -ca=ca.pem \
        -ca-key=ca-key.pem \
        -config=ca-config.json \
        -profile=kubernetes kubernetes-csr.json | {{ base_dir }}/bin/cfssljson -bare kubernetes"
  tags: change_cert, force_change_certs
  connection: local

# Create the aggregator proxy certificates
- name: Create the aggregator-proxy certificate signing request
  template: src=aggregator-proxy-csr.json.j2 dest={{ cluster_dir }}/ssl/aggregator-proxy-csr.json
  connection: local
  tags: force_change_certs

- name: Create the aggregator-proxy certificate and private key
  shell: "cd {{ cluster_dir }}/ssl && {{ base_dir }}/bin/cfssl gencert \
        -ca=ca.pem \
        -ca-key=ca-key.pem \
        -config=ca-config.json \
        -profile=kubernetes aggregator-proxy-csr.json | {{ base_dir }}/bin/cfssljson -bare aggregator-proxy"
  connection: local
  tags: force_change_certs

- name: Distribute the kubernetes certificates
  copy: src={{ cluster_dir }}/ssl/{{ item }} dest={{ ca_dir }}/{{ item }}
  with_items:
  - ca.pem
  - ca-key.pem
  - kubernetes.pem
  - kubernetes-key.pem
  - aggregator-proxy.pem
  - aggregator-proxy-key.pem
  tags: change_cert, force_change_certs

- name: Replace the apiserver address in the kubeconfig files
  lineinfile:
    dest: "{{ item }}"
    regexp: "^    server"
    line: "    server: https://127.0.0.1:{{ SECURE_PORT }}"
  with_items:
  - "/etc/kubernetes/kube-controller-manager.kubeconfig"
  - "/etc/kubernetes/kube-scheduler.kubeconfig"
  tags: force_change_certs

- name: Create the systemd unit files for the master services
  template: src={{ item }}.j2 dest=/etc/systemd/system/{{ item }}
  with_items:
  - kube-apiserver.service
  - kube-controller-manager.service
  - kube-scheduler.service
  tags: restart_master, upgrade_k8s

- name: Enable the master services
  shell: systemctl enable kube-apiserver kube-controller-manager kube-scheduler
  ignore_errors: true

- name: Start the master services
  shell: "systemctl daemon-reload && systemctl restart kube-apiserver && \
          systemctl restart kube-controller-manager && systemctl restart kube-scheduler"
  tags: upgrade_k8s, restart_master, force_change_certs

# Poll until kube-apiserver has started
- name: Wait for kube-apiserver to start
  shell: "systemctl is-active kube-apiserver.service"
  register: api_status
  until: '"active" in api_status.stdout'
  retries: 10
  delay: 3
  tags: upgrade_k8s, restart_master, force_change_certs

# Poll until kube-controller-manager has started
- name: Wait for kube-controller-manager to start
  shell: "systemctl is-active kube-controller-manager.service"
  register: cm_status
  until: '"active" in cm_status.stdout'
  retries: 8
  delay: 3
  tags: upgrade_k8s, restart_master, force_change_certs

# Poll until kube-scheduler has started
- name: Wait for kube-scheduler to start
  shell: "systemctl is-active kube-scheduler.service"
  register: sch_status
  until: '"active" in sch_status.stdout'
  retries: 8
  delay: 3
  tags: upgrade_k8s, restart_master, force_change_certs

- block:
    - name: Copy kubectl.kubeconfig
      shell: 'cd {{ cluster_dir }} && cp -f kubectl.kubeconfig {{ K8S_NODENAME }}-kubectl.kubeconfig'
      tags: upgrade_k8s, restart_master, force_change_certs

    - name: Replace the apiserver address in the kubeconfig
      lineinfile:
        dest: "{{ cluster_dir }}/{{ K8S_NODENAME }}-kubectl.kubeconfig"
        regexp: "^    server"
        line: "    server: https://{{ inventory_hostname }}:{{ SECURE_PORT }}"
      tags: upgrade_k8s, restart_master, force_change_certs

    - name: Wait for the master services to finish starting
      command: "{{ base_dir }}/bin/kubectl --kubeconfig={{ cluster_dir }}/{{ K8S_NODENAME }}-kubectl.kubeconfig get node"
      register: result
      until: result.rc == 0
      retries: 5
      delay: 6
      tags: upgrade_k8s, restart_master, force_change_certs

    - name: Check whether user:kubernetes is already bound to its role
      shell: "{{ base_dir }}/bin/kubectl get clusterrolebindings|grep kubernetes-crb || echo 'notfound'"
      register: crb_info
      run_once: true

    - name: Create the role binding for user:kubernetes
      command: "{{ base_dir }}/bin/kubectl create clusterrolebinding kubernetes-crb --clusterrole=system:kubelet-api-admin --user=kubernetes"
      run_once: true
      when: "'notfound' in crb_info.stdout"
  connection: local

root@deploy:/etc/kubeasz#
```
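The polling tasks at the end of this file rely on Ansible's `until`/`retries`/`delay`: re-run a command, wait `delay` seconds between failed attempts, and give up after a bounded number of tries. When debugging a master by hand, roughly the same check can be run from a shell. The sketch below is an approximation, not kubeasz code: `retry_until` and `unit_is_active` are hypothetical helper names, and Ansible's exact attempt counting is not reproduced. It also compares the output of `systemctl is-active` exactly, because a substring test such as `"active" in stdout` is also true for `inactive`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers approximating Ansible's until/retries/delay polling.

# retry_until ATTEMPTS DELAY CMD [ARGS...]
#   Runs CMD up to ATTEMPTS times, sleeping DELAY seconds after each failure
#   except the last. Returns 0 on the first success, otherwise the exit
#   status of the final attempt.
retry_until() {
  local attempts=$1 delay=$2 i rc=1
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    rc=$?
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return "$rc"
}

# unit_is_active UNIT
#   Succeeds only when `systemctl is-active UNIT` prints exactly "active",
#   so "inactive" is never mistaken for success.
unit_is_active() {
  [ "$(systemctl is-active "$1" 2>/dev/null)" = "active" ]
}
```

For example, `retry_until 10 3 unit_is_active kube-apiserver.service` mirrors the `retries: 10` / `delay: 3` values of the apiserver task, and `retry_until 5 6 kubectl get node` mirrors the final "wait for the master services" check.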

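Note: in the kubeasz project, the master-deployment tasks above mainly copy the binaries the master nodes need; distribute the kubeconfig files, certificates and keys, and systemd service files; start the services; and finally poll until each service is active and the apiserver answers `kubectl get node`. To customize the deployment, edit this task file.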
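One detail worth understanding when customizing these tasks is the `lineinfile` step with `regexp: "^    server"`, which points a kubeconfig at a different apiserver (for example `https://127.0.0.1:6443` for the local controller-manager and scheduler). A rough shell equivalent is sketched below under stated assumptions: `set_kubeconfig_server` is a hypothetical name, GNU `sed -i` is assumed, and the URL must not contain `#` or `&`. Unlike `lineinfile`, which replaces only the last matching line and appends the line when nothing matches, this `sed` rewrites every `server:` line and appends nothing.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not kubeasz code): rewrite the apiserver address in a
# kubeconfig file in place. Assumes GNU sed (BSD sed needs `-i ''`).
# set_kubeconfig_server FILE URL
set_kubeconfig_server() {
  local file=$1 url=$2
  # Keep the original indentation (\1) and replace only the value after "server:".
  sed -i -E "s#^([[:space:]]*)server:.*#\1server: ${url}#" "$file"
}
```

For example, `set_kubeconfig_server /etc/kubernetes/kube-scheduler.kubeconfig https://127.0.0.1:6443` would produce the same `server:` line that the playbook task writes, assuming `SECURE_PORT` is 6443.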

Run the master-node deployment:

```
root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster01 04
```

On the deploy node, verify that node information can be retrieved from the master nodes:

```
root@k8s-deploy:/etc/kubeasz# kubectl get nodes
NAME           STATUS                     ROLES    AGE    VERSION
192.168.0.31   Ready,SchedulingDisabled   master   2m6s   v1.26.1
192.168.0.32   Ready,SchedulingDisabled   master   2m6s   v1.26.1
root@k8s-deploy:/etc/kubeasz#
```

Note: if `kubectl` returns the node list, the master nodes were deployed successfully.
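To make this check scriptable (for example at the end of a deployment script), the output of `kubectl get nodes` can be piped through a small filter. The sketch below uses a hypothetical function, `all_nodes_ready`, and follows the same idea as kubeasz's own `awk 'NR>1{print $2}'` node check: it treats `Ready` and `Ready,SchedulingDisabled` (the status kubeasz gives cordoned masters) as healthy, prints the name of every other node, and fails if there are no node rows at all.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get nodes` output on stdin.
# Succeeds only if every node's STATUS starts with "Ready"; prints the
# names of nodes that are not ready.
all_nodes_ready() {
  awk '
    BEGIN { bad = 0; count = 0 }
    NR > 1 {
      count++
      split($2, s, ",")            # "Ready,SchedulingDisabled" -> s[1] = "Ready"
      if (s[1] != "Ready") { print $1; bad = 1 }
    }
    END {
      if (count == 0) bad = 1      # no node rows at all counts as a failure
      exit bad
    }'
}
```

Usage: `kubectl get nodes | all_nodes_ready && echo "all nodes Ready"`.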

Deploying the k8s worker nodes

```
root@deploy:/etc/kubeasz# cat roles/kube-node/tasks/main.yml
- name: Create the kube_node directories
  file: name={{ item }} state=directory
  with_items:
  - /var/lib/kubelet
  - /var/lib/kube-proxy

- name: Download the kubelet and kube-proxy binaries and the basic cni plugins
  copy: src={{ base_dir }}/bin/{{ item }} dest={{ bin_dir }}/{{ item }} mode=0755
  with_items:
  - kubectl
  - kubelet
  - kube-proxy
  - bridge
  - host-local
  - loopback
  tags: upgrade_k8s

- name: Add kubectl auto-completion
  lineinfile:
    dest: ~/.bashrc
    state: present
    regexp: 'kubectl completion'
    line: 'source <(kubectl completion bash) # generated by kubeasz'

##----------kubelet configuration--------------
# Create the kubelet certificates and kubelet.kubeconfig
- import_tasks: create-kubelet-kubeconfig.yml
  tags: force_change_certs

- name: Prepare the cni configuration file
  template: src=cni-default.conf.j2 dest=/etc/cni/net.d/10-default.conf

- name: Create the kubelet configuration file
  template: src=kubelet-config.yaml.j2 dest=/var/lib/kubelet/config.yaml
  tags: upgrade_k8s, restart_node

- name: Create the kubelet systemd unit file
  template: src=kubelet.service.j2 dest=/etc/systemd/system/kubelet.service
  tags: upgrade_k8s, restart_node

- name: Enable the kubelet service at boot
  shell: systemctl enable kubelet
  ignore_errors: true

- name: Start the kubelet service
  shell: systemctl daemon-reload && systemctl restart kubelet
  tags: upgrade_k8s, restart_node, force_change_certs

##-------kube-proxy----------------
- name: Distribute the kube-proxy.kubeconfig file
  copy: src={{ cluster_dir }}/kube-proxy.kubeconfig dest=/etc/kubernetes/kube-proxy.kubeconfig
  tags: force_change_certs

- name: Replace the apiserver address in kube-proxy.kubeconfig
  lineinfile:
    dest: /etc/kubernetes/kube-proxy.kubeconfig
    regexp: "^    server"
    line: "    server: {{ KUBE_APISERVER }}"
  tags: force_change_certs

- name: Create the kube-proxy configuration
  template: src=kube-proxy-config.yaml.j2 dest=/var/lib/kube-proxy/kube-proxy-config.yaml
  tags: reload-kube-proxy, restart_node, upgrade_k8s

- name: Create the kube-proxy service file
  template: src=kube-proxy.service.j2 dest=/etc/systemd/system/kube-proxy.service
  tags: reload-kube-proxy, restart_node, upgrade_k8s

- name: Enable the kube-proxy service at boot
  shell: systemctl enable kube-proxy
  ignore_errors: true

- name: Start the kube-proxy service
  shell: systemctl daemon-reload && systemctl restart kube-proxy
  tags: reload-kube-proxy, upgrade_k8s, restart_node, force_change_certs

# Add an /etc/hosts entry resolving k8s_nodename
- name: Add an /etc/hosts entry resolving k8s_nodename
  lineinfile:
    dest: /etc/hosts
    state: present
    regexp: "{{ K8S_NODENAME }}"
    line: "{{ inventory_hostname }} {{ K8S_NODENAME }}"
  delegate_to: "{{ item }}"
  with_items: "{{ groups.kube_master }}"
  when: "inventory_hostname != K8S_NODENAME"

# Poll until kube-proxy has started
- name: Wait for kube-proxy to start
  shell: "systemctl is-active kube-proxy.service"
  register: kubeproxy_status
  until: '"active" in kubeproxy_status.stdout'
  retries: 4
  delay: 2
  tags: reload-kube-proxy, upgrade_k8s, restart_node, force_change_certs

# Poll until kubelet has started
- name: Wait for kubelet to start
  shell: "systemctl is-active kubelet.service"
  register: kubelet_status
  until: '"active" in kubelet_status.stdout'
  retries: 4
  delay: 2
  tags: reload-kube-proxy, upgrade_k8s, restart_node, force_change_certs

- name: Wait for the node to reach the Ready state
  shell: "{{ base_dir }}/bin/kubectl get node {{ K8S_NODENAME }}|awk 'NR>1{print $2}'"
  register: node_status
  until: node_status.stdout == "Ready" or node_status.stdout == "Ready,SchedulingDisabled"
  retries: 8
  delay: 8
  tags: upgrade_k8s, restart_node, force_change_certs
  connection: local

- block:
    - name: Setting worker role name
      shell: "{{ base_dir }}/bin/kubectl label node {{ K8S_NODENAME }} kubernetes.io/role=node --overwrite"

    - name: Setting master role name
      shell: "{{ base_dir }}/bin/kubectl label node {{ K8S_NODENAME }} kubernetes.io/role=master --overwrite"
      when: "inventory_hostname in groups['kube_master']"
```

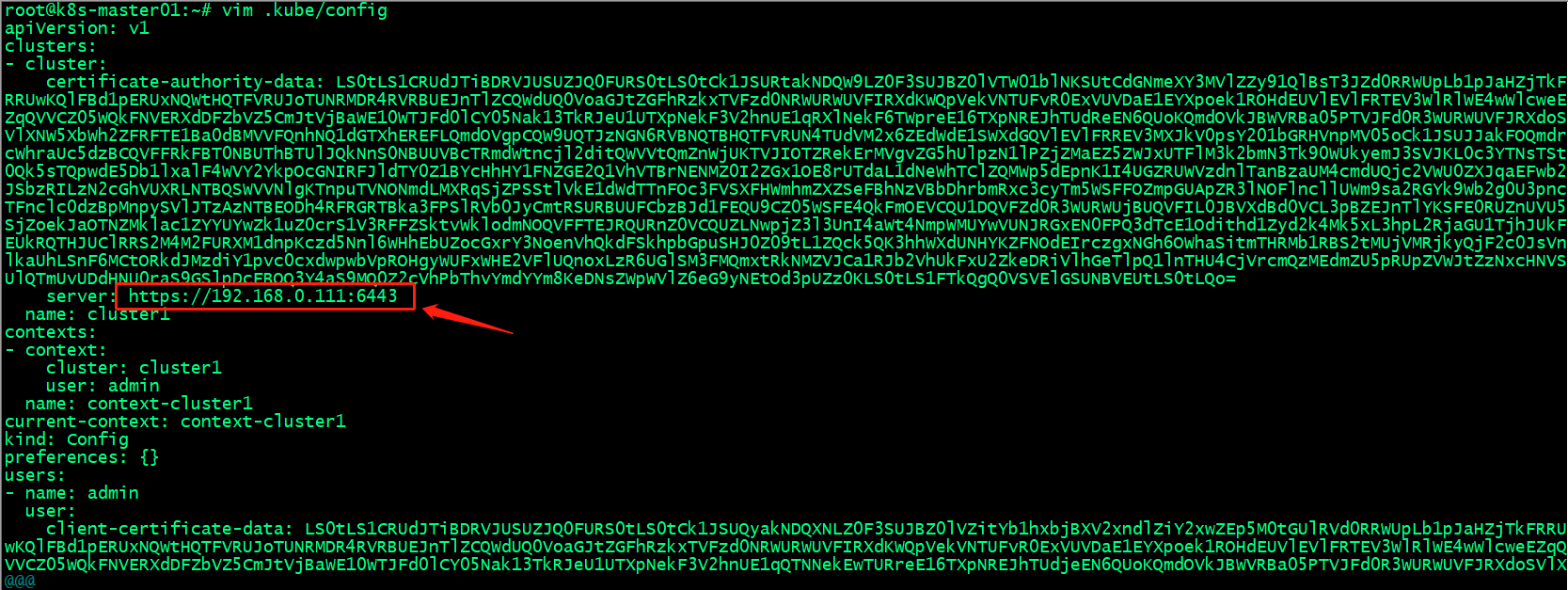

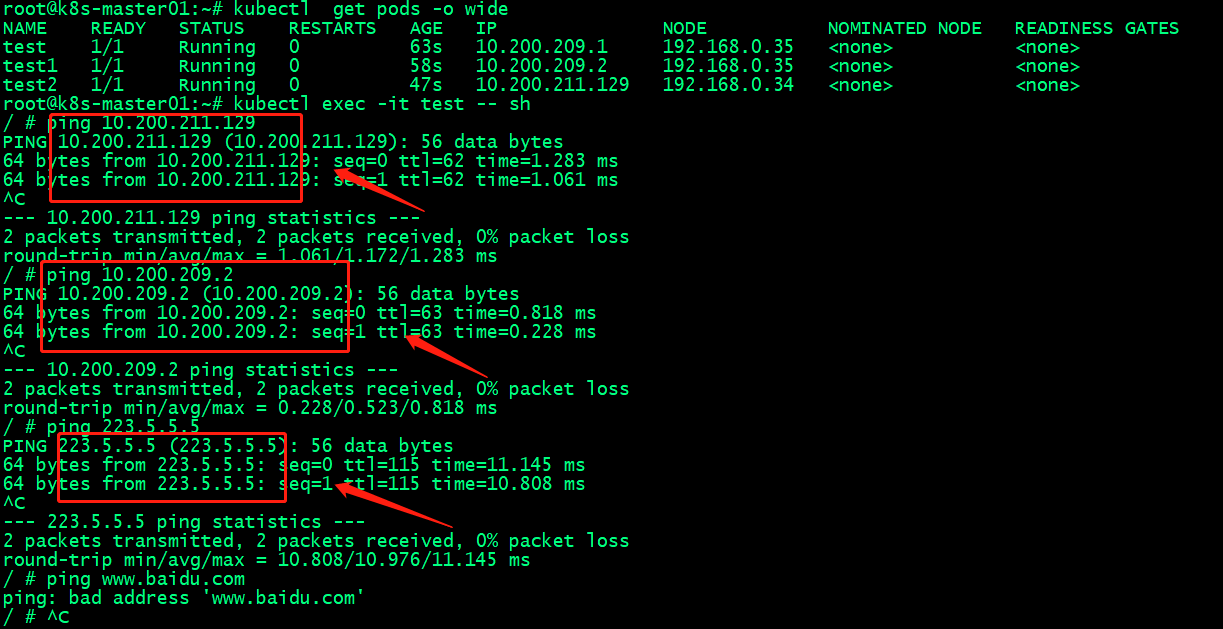

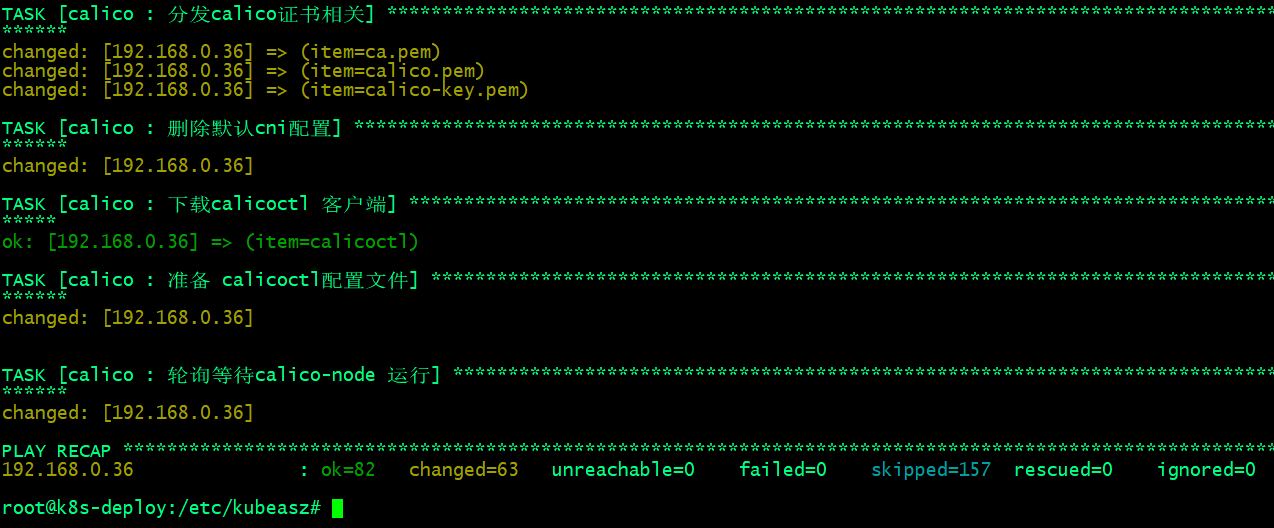

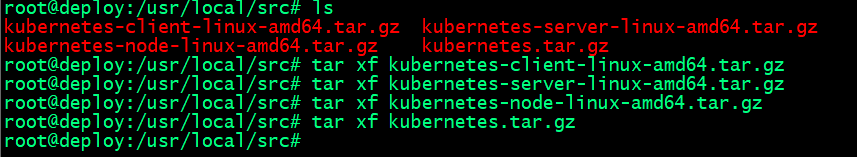

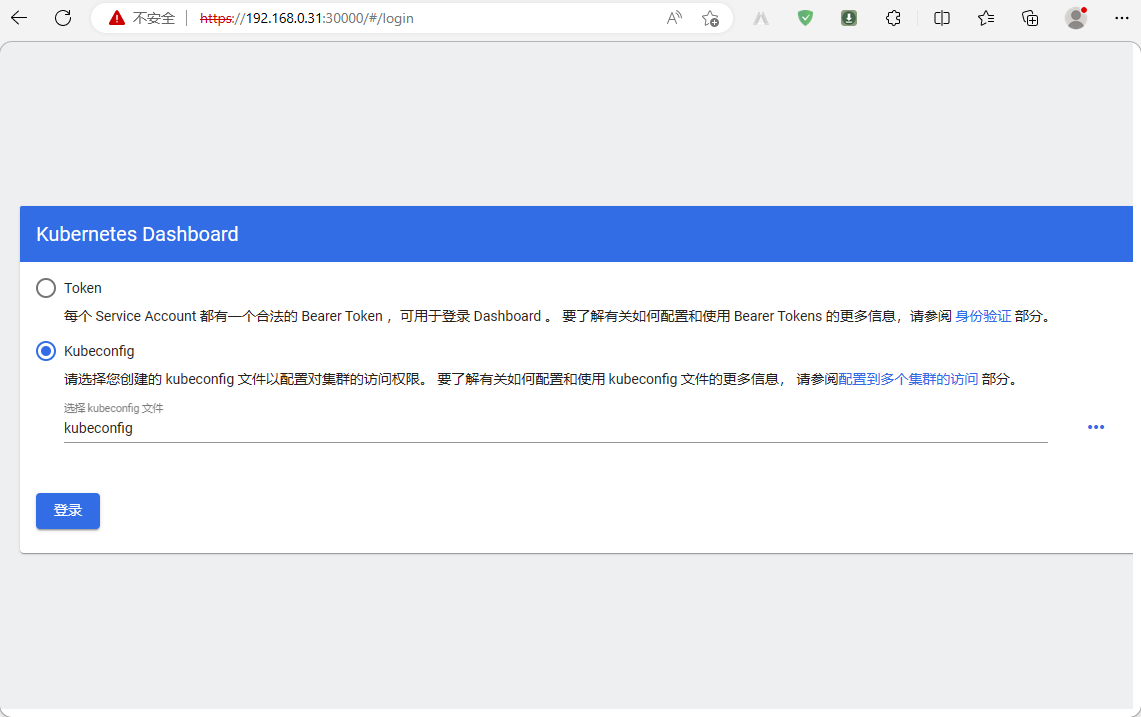

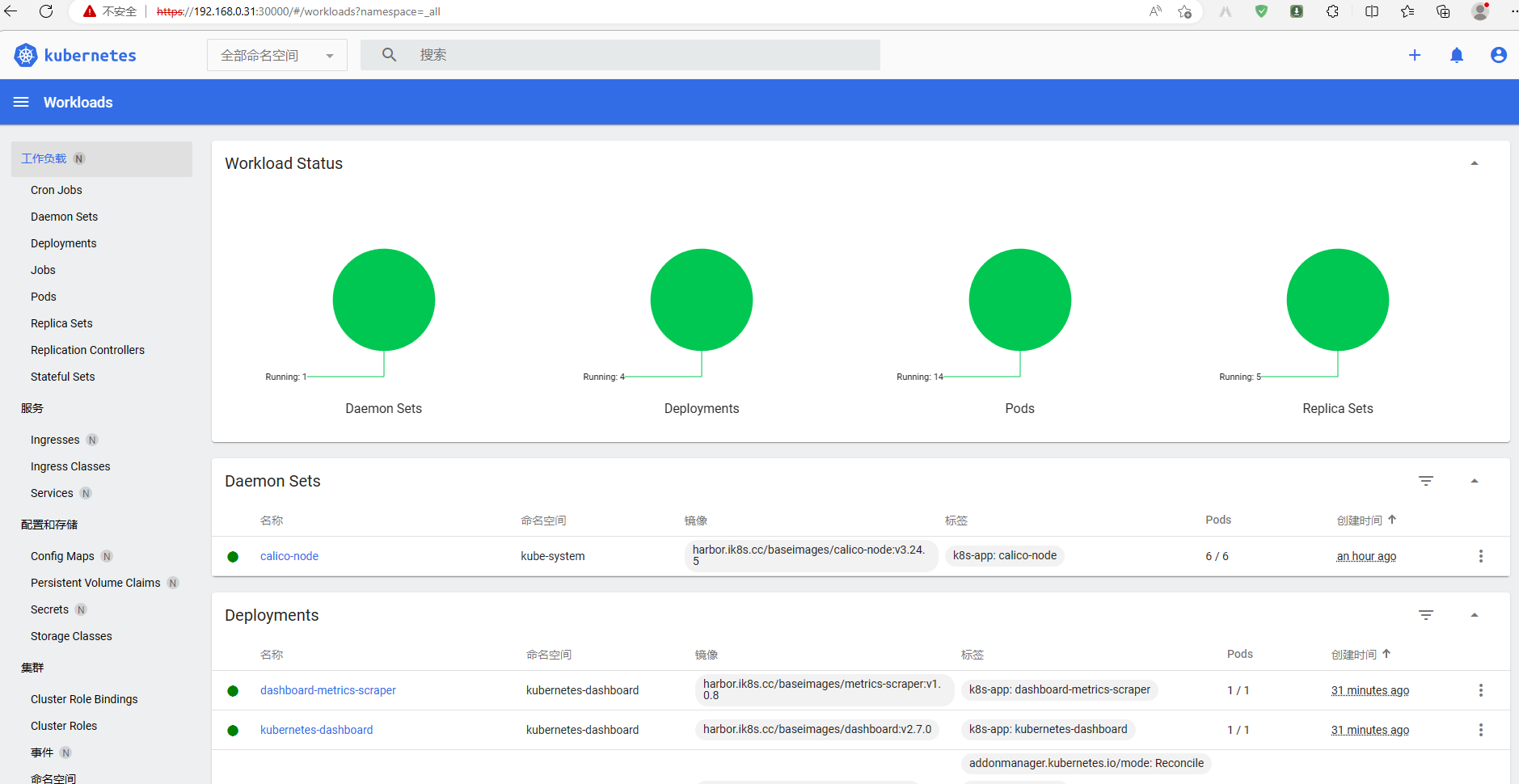

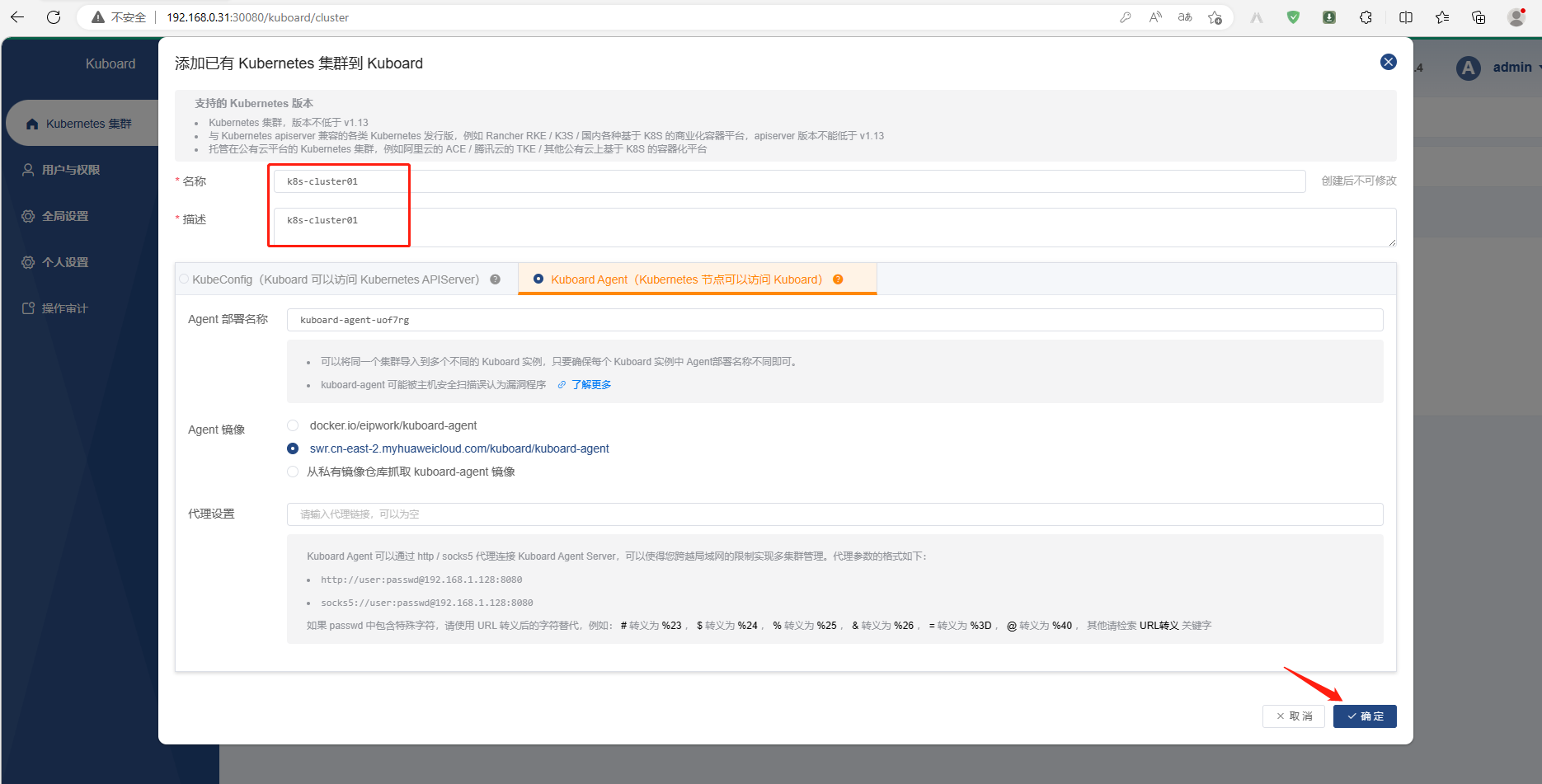

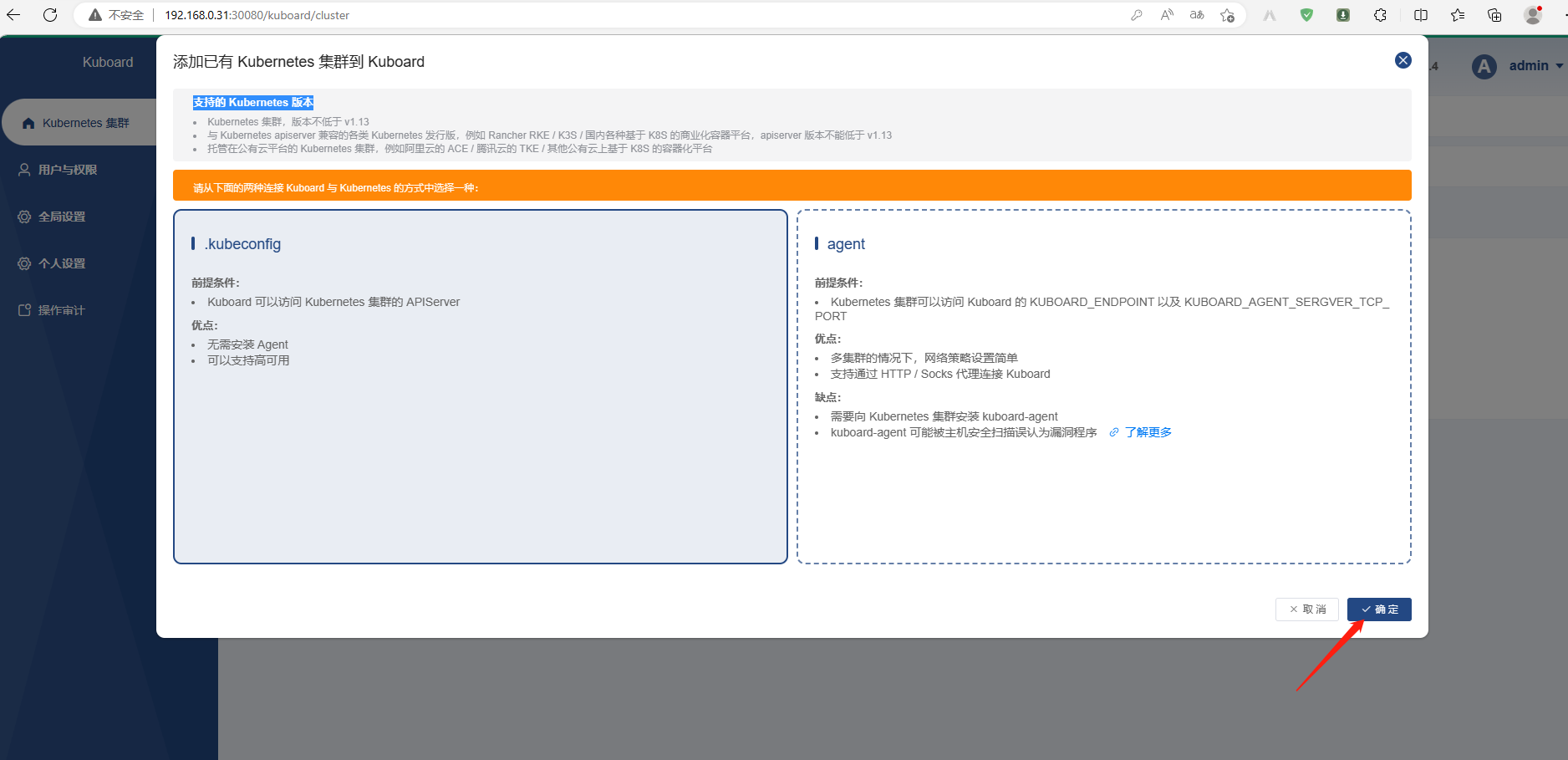

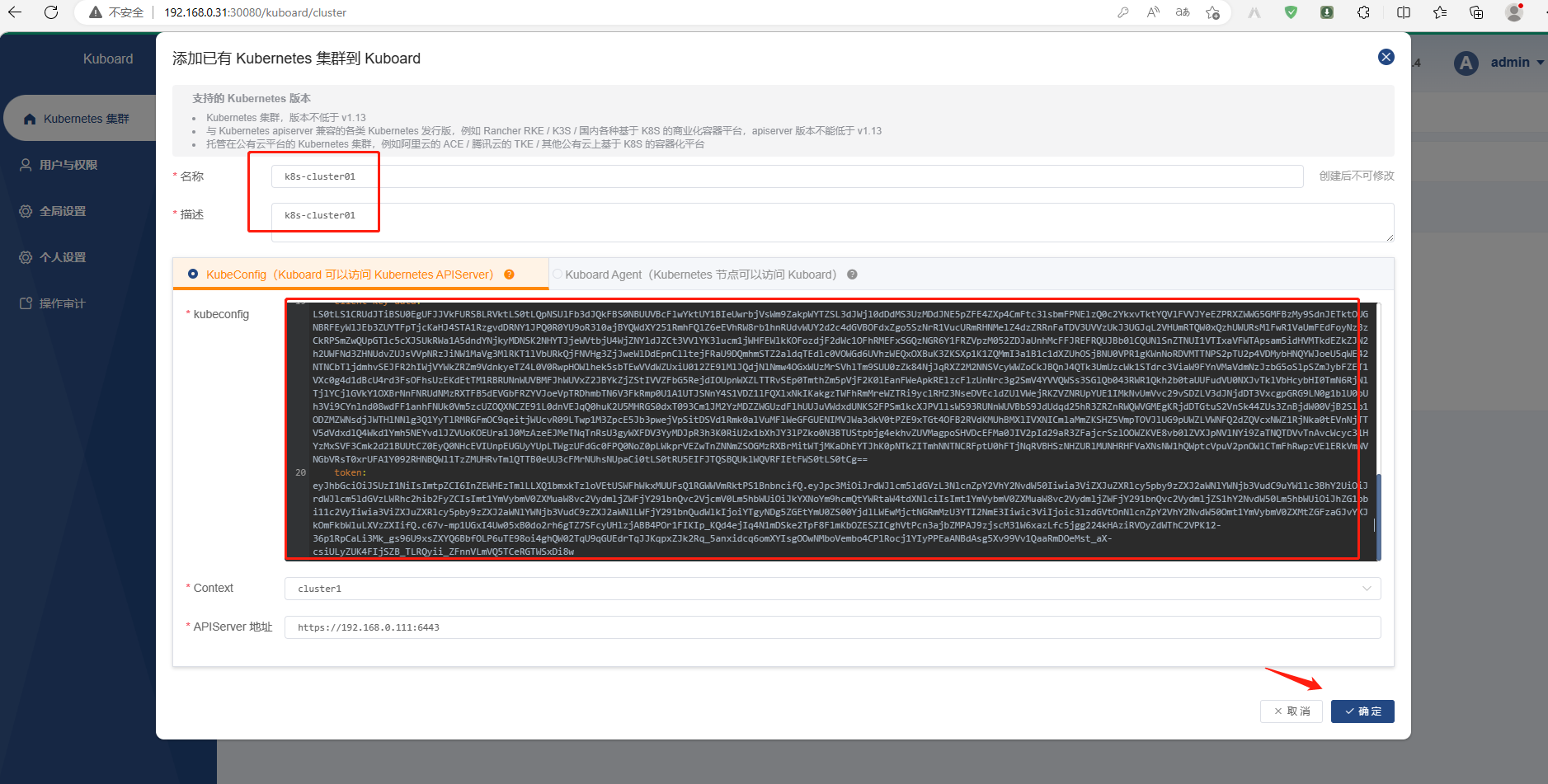

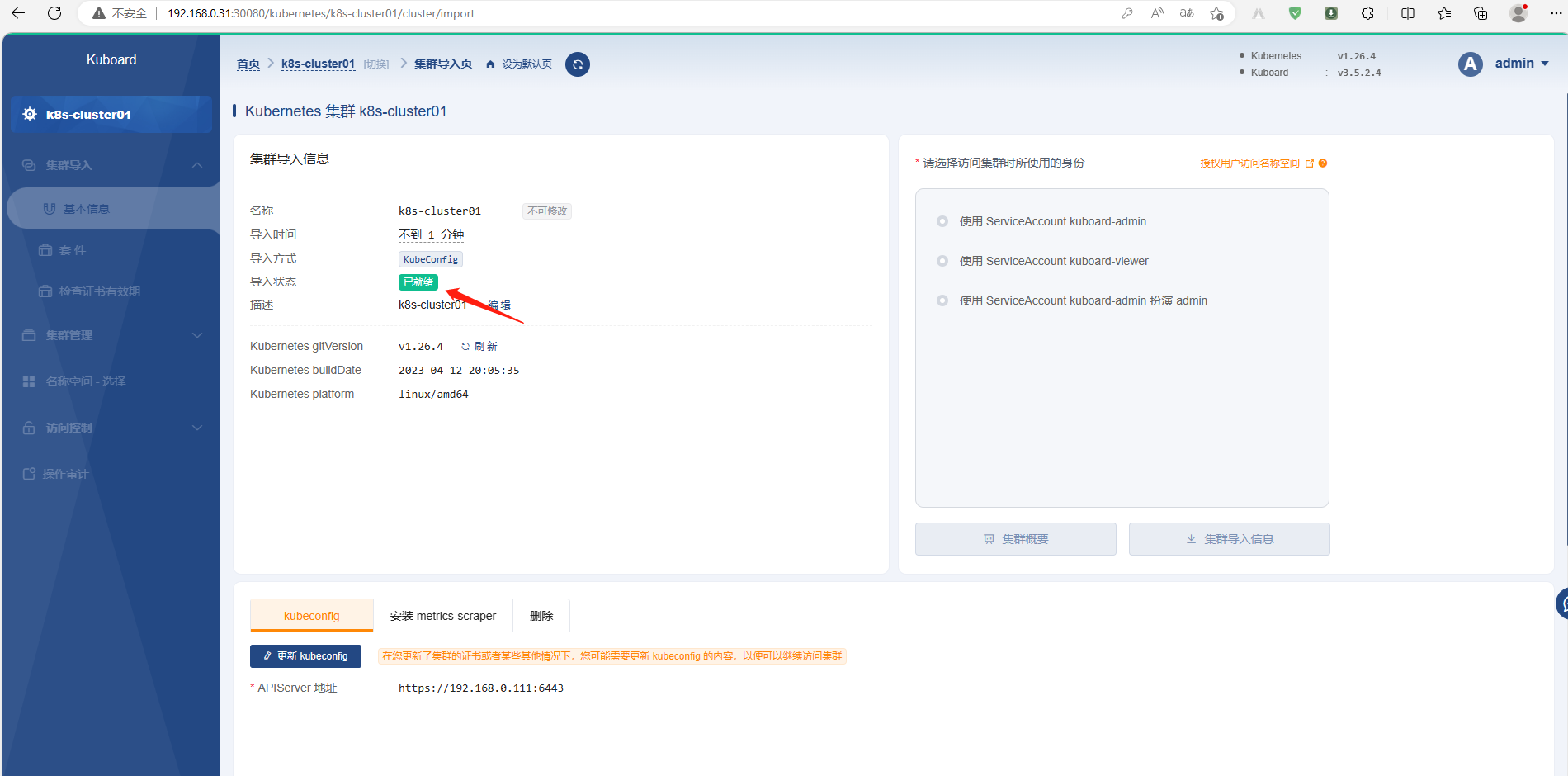

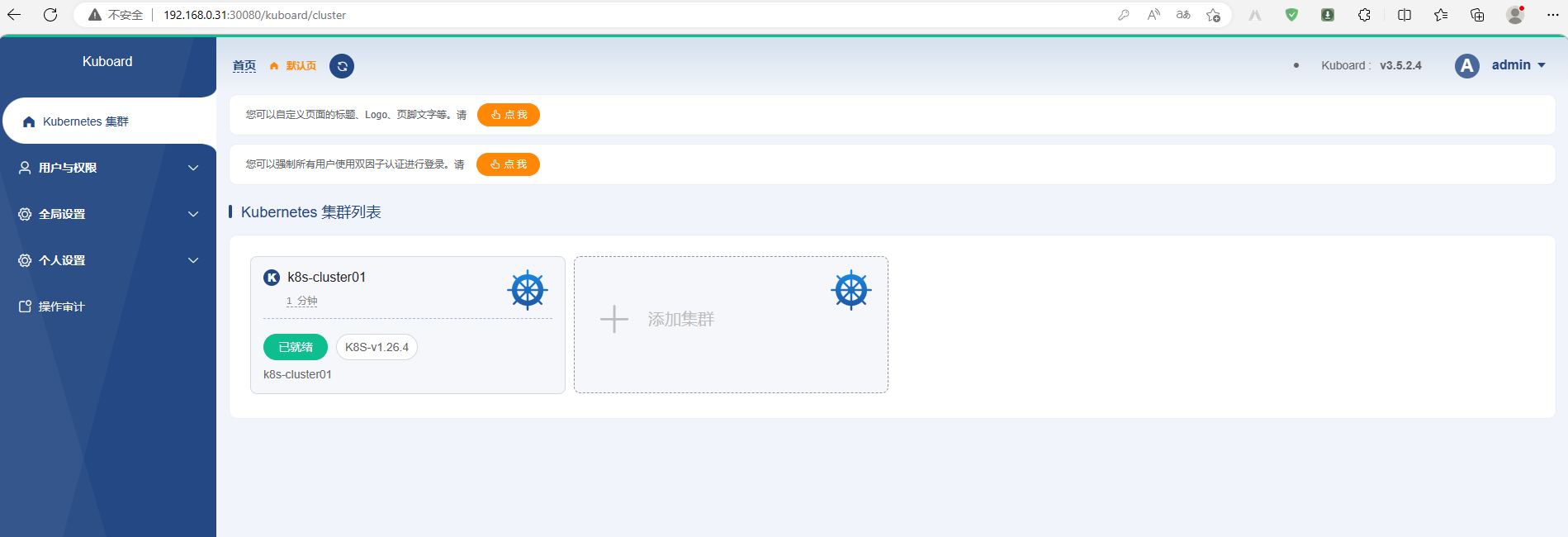

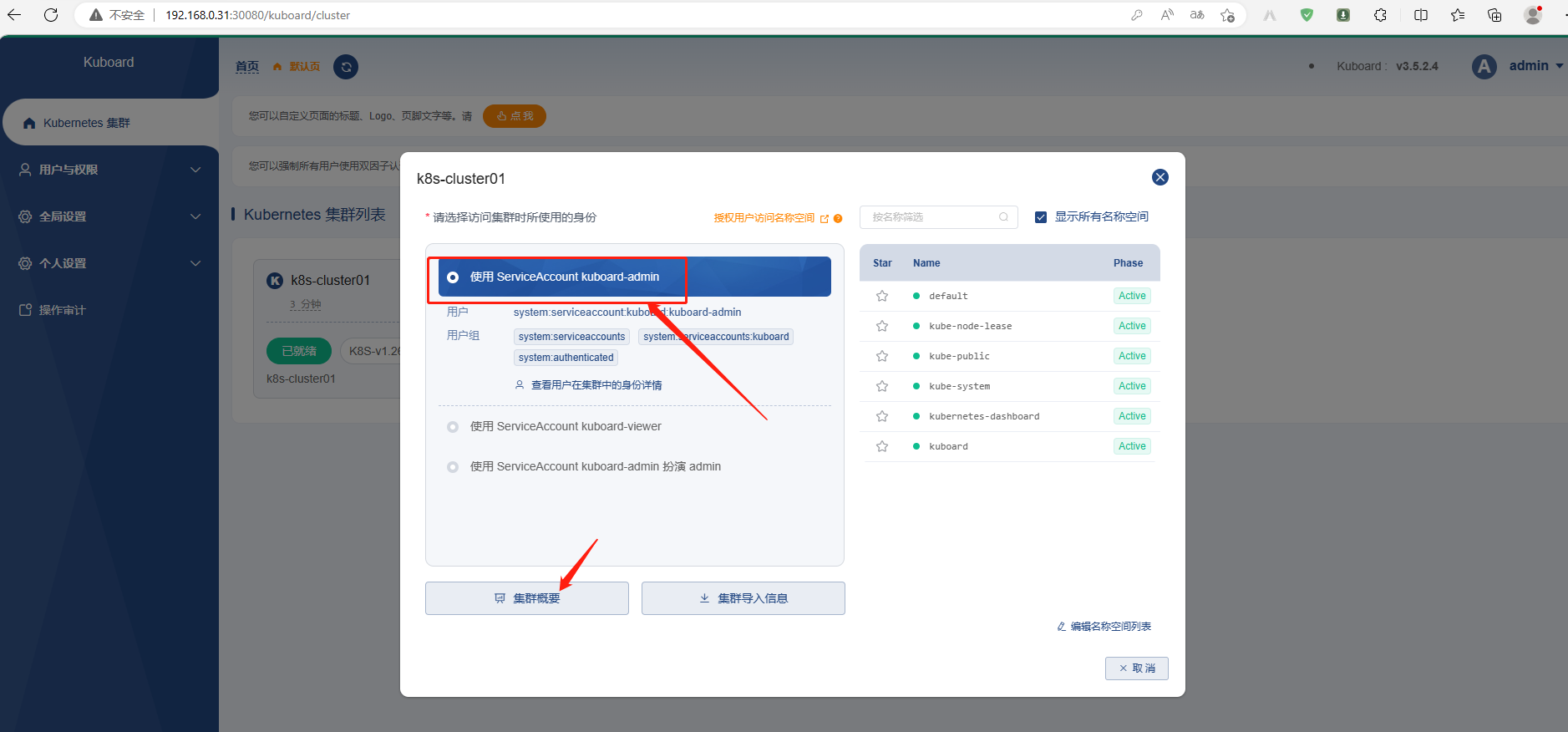

- name: Making master nodes SchedulingDisabled