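Analysis and Optimization of Upstream Service Jitter Caused by Application Deployment

Author: Zhu Yongchang, JD Logistics

Background

This article looks at upstream service jitter caused by application deployment. Using the Baichuan traffic-routing system as a case study, it walks through the analysis and the reasoning behind the fix, and presents a practical solution.

The Baichuan traffic-routing system is the dedicated gateway of the transaction order center. Externally it exposes the order center's unified standard services (order creation, modification, cancellation, status callback, and so on); internally it dispatches traffic to the applications of different business lines according to configured rules. As more and more traffic was switched onto Baichuan, deployments increasingly caused service jitter and call timeouts in upstream systems. To keep the transaction services stable and improve availability, this problem had to be addressed.

A survey found two existing warm-up approaches within the group:

(1) the warm-up mechanism officially provided by JSF;

(2) a warm-up approach that combines Xingyun deployment orchestration with traffic record-and-replay.

Neither achieved the desired result.

Regarding approach (1): first, it requires every JSF consumer to upgrade to JSF 1.7.6. Baichuan has dozens of upstream callers, and pushing all of them to upgrade is difficult. Second, the JSF platform configures warm-up rules per interface, and Baichuan exposes 46 interfaces, so configuration is cumbersome. Most importantly, a rule only sets the warm-up weight (the share of calls received) of an interface during a fixed warm-up period (for example, 1 minute). In other words, it is a low-traffic trial run, so system resources are never fully warmed up. When full traffic arrives after the period ends, resources still have to be created or initialized, and the service jitters. For an order-creation service, jitter means failed orders and a risk of stuck orders.

Regarding approach (2): it warms up by replaying recorded production traffic as a load test. This suits read interfaces, but write interfaces will affect production data unless they are specially handled. The current remedy is a load-test flag that identifies warm-up traffic, but the transaction business logic is complex and has many downstream dependencies, and the related systems do not support such a flag today. Retrofitting them separately would touch many interfaces and carry high risk.

Given this, we used the Baichuan deployment jitter as a case study, followed the visible clues, read the JSF source code in depth, and eventually found the key factors behind the jitter. We then built a more effective warm-up scheme. Verification shows a clear effect: the MAX method latency seen by callers dropped by 90%, back within the timeout, which eliminated upstream call timeouts caused by machine deployment.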

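Symptoms

During a production deployment, upstream callers of the pure-delivery order-acceptance service reported that the service jittered and that calls timed out.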

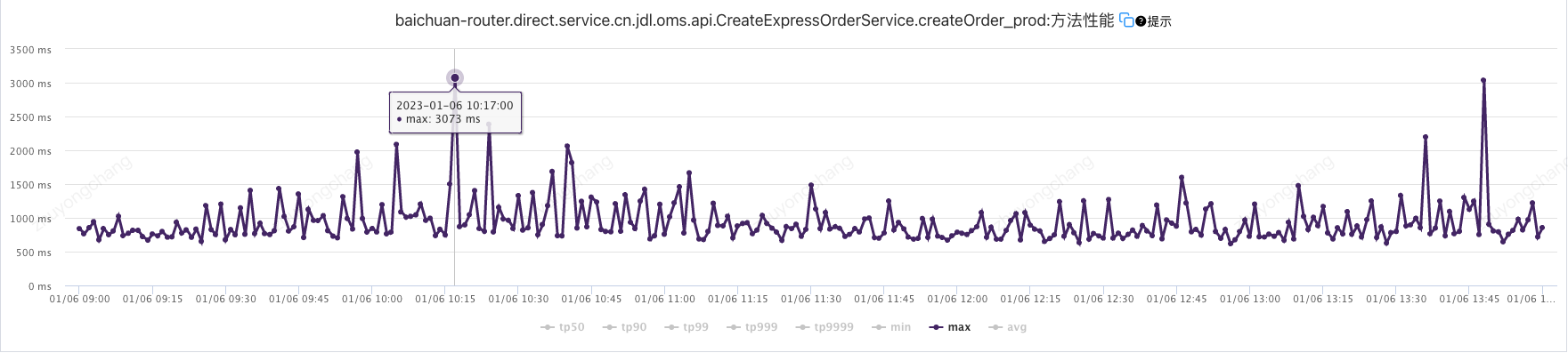

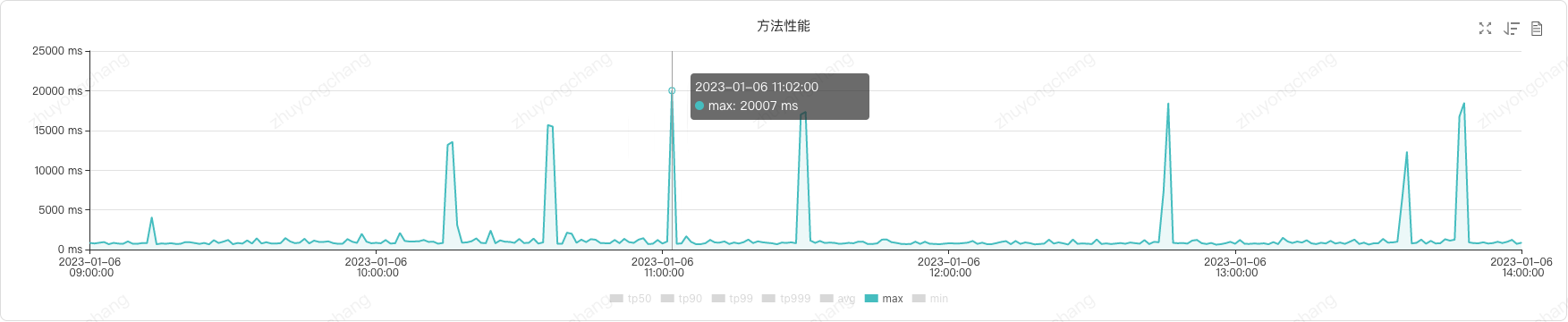

檢視此服務UMP打點,發現此服務的方法效能監控MAX值最大3073ms,未超過呼叫方設定的超時時間10000ms(如圖1所示)

圖1 服務內部監控打點

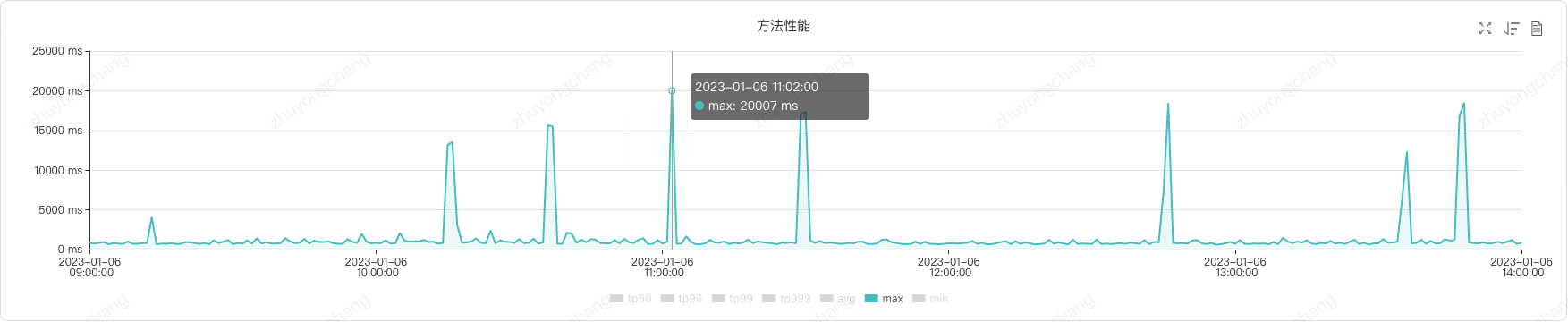

檢視此服務PFinder效能監控,發現上游呼叫方應用呼叫此服務的方法效能監控MAX值多次超過10000ms(可以直接檢視呼叫方的UMP打點,若呼叫方無法提供UMP打點時,也可藉助PFinder的應用拓撲功能進行檢視,如圖2所示)

圖2 服務外部監控打點

分析思路

從上述問題現象可以看出,在系統上線部署期間服務提供方介面效能MAX值並無明顯抖動,但服務呼叫方介面效能MAX值抖動明顯。由此,可以確定耗時不在服務提供方內部處理邏輯上,而是在進入服務提供方內部處理邏輯之前(或者之後),那麼在之前或者之後具體都經歷了什麼呢?我們不著急回答這個問題,先基於現有的一些線索逐步進行追蹤探索。

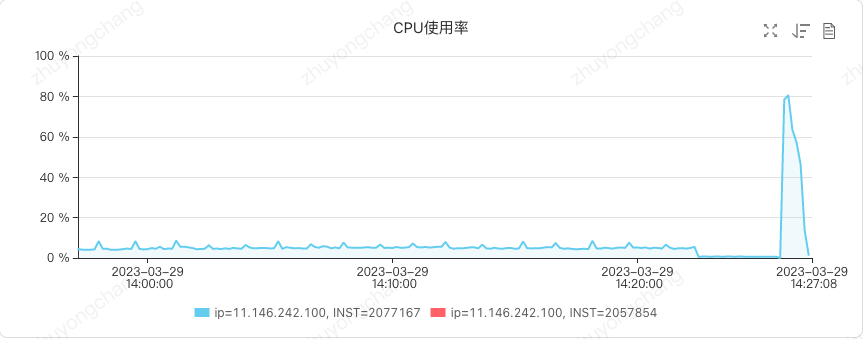

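Clue 1: machine CPU spikes briefly during deployment (Figure 3)

Any request routed to the machine during that spike is bound to be slower. We therefore considered bringing the JSF service online only after deployment had finished and CPU usage had settled, which can be done with JSF's delayed-publish parameter. The configuration is as follows: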

<!-- Delay publishing by 2 minutes (120000 ms) -->
<jsf:provider id="createExpressOrderService"
              interface="cn.jdl.oms.api.CreateExpressOrderService"
              ref="createExpressOrderServiceImpl"
              register="true"
              concurrents="400"
              alias="${provider.express.oms}"
              delay="120000">
</jsf:provider>

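In practice, the JSF service did come online 2 minutes later (Figure 4), when CPU usage had already settled. However, the moment JSF went online the CPU spiked a second time, and callers still saw call timeouts.

Figure 3: Brief CPU spike during machine deployment

Figure 4: CPU spikes both at deployment and at the moment JSF goes online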

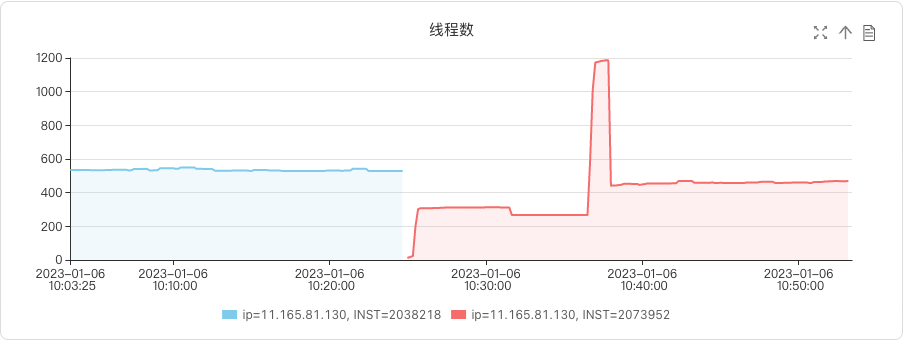

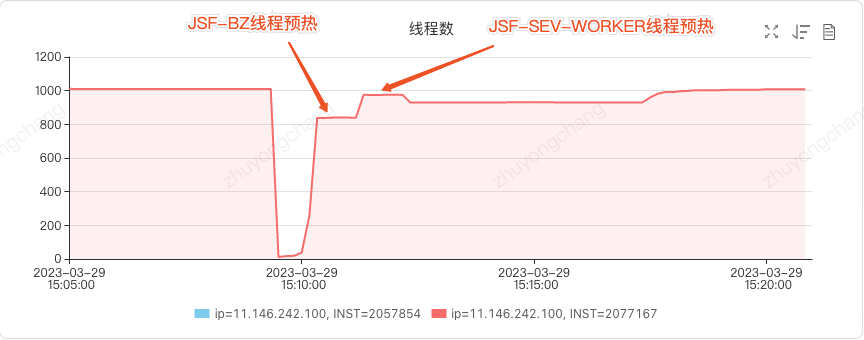

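Clue 2: the JVM thread count spikes the moment JSF goes online (Figure 5)

Figure 5: Thread count spike at the moment JSF goes online

A thread dump taken with jstack shows that the threads growing fastest in number are the JSF-BZ threads, and that all of them are parked, waiting for work: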

"JSF-BZ-22000-137-T-350" #1038 daemon prio=5 os_prio=0 tid=0x00007f02bcde9000 nid=0x6fff waiting on condition [0x00007efa10284000]

java.lang.Thread.State: WAITING (parking)

at sun.misc.Unsafe.park(Native Method)

- parking to wait for <0x0000000640b359e8> (a java.util.concurrent.SynchronousQueue$TransferStack)

at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)

at java.util.concurrent.SynchronousQueue$TransferStack.awaitFulfill(SynchronousQueue.java:458)

at java.util.concurrent.SynchronousQueue$TransferStack.transfer(SynchronousQueue.java:362)

at java.util.concurrent.SynchronousQueue.take(SynchronousQueue.java:924)

at java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1067)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1127)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Locked ownable synchronizers:

- None

"JSF-BZ-22000-137-T-349" #1037 daemon prio=5 os_prio=0 tid=0x00007f02bcde7000 nid=0x6ffe waiting on condition [0x00007efa10305000]

java.lang.Thread.State: WAITING (parking)

at sun.misc.Unsafe.park(Native Method)

- parking to wait for <0x0000000640b359e8> (a java.util.concurrent.SynchronousQueue$TransferStack)

at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)

at java.util.concurrent.SynchronousQueue$TransferStack.awaitFulfill(SynchronousQueue.java:458)

at java.util.concurrent.SynchronousQueue$TransferStack.transfer(SynchronousQueue.java:362)

at java.util.concurrent.SynchronousQueue.take(SynchronousQueue.java:924)

at java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1067)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1127)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Locked ownable synchronizers:

- None

"JSF-BZ-22000-137-T-348" #1036 daemon prio=5 os_prio=0 tid=0x00007f02bcdd8000 nid=0x6ffd waiting on condition [0x00007efa10386000]

java.lang.Thread.State: WAITING (parking)

at sun.misc.Unsafe.park(Native Method)

- parking to wait for <0x0000000640b359e8> (a java.util.concurrent.SynchronousQueue$TransferStack)

at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)

at java.util.concurrent.SynchronousQueue$TransferStack.awaitFulfill(SynchronousQueue.java:458)

at java.util.concurrent.SynchronousQueue$TransferStack.transfer(SynchronousQueue.java:362)

at java.util.concurrent.SynchronousQueue.take(SynchronousQueue.java:924)

at java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1067)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1127)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Locked ownable synchronizers:

- None

...
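Counting threads per pool by hand in a long jstack dump is tedious. The following is a minimal sketch of doing the same count from inside the JVM. It assumes the naming scheme visible in the dump above (pool name followed by `-T-<n>`); the class `ThreadPoolCensus` and its methods are hypothetical helpers, not part of JSF.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Pattern;

/** Groups thread names by pool, as a stand-in for grepping jstack output. */
final class ThreadPoolCensus {
    // Assumed worker suffix, taken from names such as "JSF-BZ-22000-137-T-350".
    private static final Pattern WORKER_SUFFIX = Pattern.compile("-T-\\d+$");

    /** Strips the per-thread suffix so that all workers of one pool share a key. */
    static String poolName(String threadName) {
        return WORKER_SUFFIX.matcher(threadName).replaceFirst("");
    }

    /** Counts how many of the given thread names belong to each pool. */
    static Map<String, Integer> countByPool(Iterable<String> threadNames) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String name : threadNames) {
            counts.merge(poolName(name), 1, Integer::sum);
        }
        return counts;
    }

    /** Counts the threads that are alive in the current JVM right now. */
    static Map<String, Integer> snapshot() {
        List<String> names = new ArrayList<>();
        for (Thread t : Thread.getAllStackTraces().keySet()) {
            names.add(t.getName());
        }
        return countByPool(names);
    }

    public static void main(String[] args) {
        snapshot().forEach((pool, count) -> System.out.println(pool + " -> " + count));
    }
}
```

Taking one snapshot before JSF goes online and one just after, then comparing the two maps, would show which pools grew.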

Searching the JSF source code for the keyword "JSF-BZ" leads to the initialization code of the JSF-BZ thread pool:

private static synchronized ThreadPoolExecutor initPool(ServerTransportConfig transportConfig) {

final int minPoolSize, aliveTime, port = transportConfig.getPort();

int maxPoolSize = transportConfig.getServerBusinessPoolSize();

String poolType = transportConfig.getServerBusinessPoolType();

    if ("fixed".equals(poolType)) {
        minPoolSize = maxPoolSize;
        aliveTime = 0;
    } else if ("cached".equals(poolType)) {
        minPoolSize = 20;
        maxPoolSize = Math.max(minPoolSize, maxPoolSize);
        aliveTime = 60000;
    } else {
        throw new IllegalConfigureException(21401, "server.threadpool", poolType);
    }

String queueType = transportConfig.getPoolQueueType();

int queueSize = transportConfig.getPoolQueueSize();

boolean isPriority = "priority".equals(queueType);

BlockingQueue<Runnable> configQueue = ThreadPoolUtils.buildQueue(queueSize, isPriority);

NamedThreadFactory threadFactory = new NamedThreadFactory("JSF-BZ-" + port, true);

RejectedExecutionHandler handler = new RejectedExecutionHandler() {

private int i = 1;

public void rejectedExecution(Runnable r, ThreadPoolExecutor executor) { if (this.i++ % 7 == 0) {

this.i = 1;

BusinessPool.LOGGER.warn("[JSF-23002]Task:{} has been reject for ThreadPool exhausted! pool:{}, active:{}, queue:{}, taskcnt: {}", new Object[] { r, Integer.valueOf(executor.getPoolSize()), Integer.valueOf(executor.getActiveCount()), Integer.valueOf(executor.getQueue().size()), Long.valueOf(executor.getTaskCount()) });

}

RejectedExecutionException err = new RejectedExecutionException("[JSF-23003]Biz thread pool of provider has bean exhausted, the server port is " + port);

ProviderErrorHook.getErrorHookInstance().onProcess(new ProviderErrorEvent(err));

throw err;

}

};

LOGGER.debug("Build " + poolType + " business pool for port " + port + " [min: " + minPoolSize + " max:" + maxPoolSize + " queueType:" + queueType + " queueSize:" + queueSize + " aliveTime:" + aliveTime + "]");

return new ThreadPoolExecutor(minPoolSize, maxPoolSize, aliveTime, TimeUnit.MILLISECONDS, configQueue, (ThreadFactory)threadFactory, handler);

}

public static BlockingQueue<Runnable> buildQueue(int size, boolean isPriority) {

BlockingQueue<Runnable> queue;

if (size == 0) {

queue = new SynchronousQueue<Runnable>();

}

else if (isPriority) {

queue = (size < 0) ? new PriorityBlockingQueue<Runnable>() : new PriorityBlockingQueue<Runnable>(size);

} else {

queue = (size < 0) ? new LinkedBlockingQueue<Runnable>() : new LinkedBlockingQueue<Runnable>(size);

}

return queue;

}

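In addition, the official JSF documentation describes the thread pool as follows:

Putting the JSF source and the official documentation together: the JSF-BZ thread pool uses a SynchronousQueue as its work queue. This is a synchronous handoff queue with no capacity, in which every put must wait for a matching take and vice versa. By default JSF-BZ is an elastic ("cached") pool with no queue and 20 initial threads. At the moment JSF goes online, a large burst of concurrent requests arrives, the initial threads are nowhere near enough, and a large number of new threads have to be created on the spot.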
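The effect can be reproduced with the JDK alone. The sketch below is not JSF code: it builds a plain `ThreadPoolExecutor` with the same shape as the cached pool in the source above (core size 20, `SynchronousQueue`) and an assumed maximum of 200, then submits a burst of tasks that all block at once. `CachedPoolBurstDemo` is a hypothetical name.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Shows that a queue-less pool must create one thread per concurrent task. */
final class CachedPoolBurstDemo {

    /** Submits `burst` tasks that all block, then reports how many threads the pool holds. */
    static int poolSizeAfterBurst(int core, int max, int burst) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                core, max, 60_000, TimeUnit.MILLISECONDS, new SynchronousQueue<>());
        CountDownLatch release = new CountDownLatch(1);
        CountDownLatch started = new CountDownLatch(burst);
        try {
            for (int i = 0; i < burst; i++) {
                pool.execute(() -> {
                    started.countDown();
                    try {
                        release.await(); // keep the worker busy, like a slow request
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            started.await(); // every task is now running on its own thread
            return pool.getPoolSize();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("threads after burst: " + poolSizeAfterBurst(20, 200, 100));
    }
}
```

Because a `SynchronousQueue` cannot hold a task, every request that finds no idle worker forces a new thread to be created. Thread creation during the burst is the cost that warm-up tries to move to startup.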

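Since the cause is that the JSF thread pool starts with too few threads, we can warm the pool up at application startup, that is, create enough threads ahead of time and keep them ready. Reading the JSF source, we found the following way to warm up the JSF thread pool: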

// Fetch the JSF ServerBeans from the Spring context; there may be more than one
Map<String, ServerBean> serverBeanMap = applicationContext.getBeansOfType(ServerBean.class);
if (CollectionUtils.isEmpty(serverBeanMap)) {
    log.error("application preheat, jsf thread pool preheat failed, serverBeanMap is empty.");
    return;
}

// Warm up each ServerBean in turn
serverBeanMap.forEach((serverBeanName, serverBean) -> {
    if (Objects.isNull(serverBean)) {
        log.error("application preheat, jsf thread pool preheat failed, serverBean is null, serverBeanName:{}", serverBeanName);
        return;
    }
    // Start the ServerBean; the Server is only available after start()
    serverBean.start();
    Server server = serverBean.getServer();
    if (Objects.isNull(server)) {
        log.error("application preheat, jsf thread pool preheat failed, JSF Server is null, serverBeanName:{}", serverBeanName);
        return;
    }
    ServerTransportConfig serverTransportConfig = server.getTransportConfig();
    if (Objects.isNull(serverTransportConfig)) {
        log.error("application preheat, jsf thread pool preheat failed, serverTransportConfig is null, serverBeanName:{}", serverBeanName);
        return;
    }
    // Get the JSF business thread pool
    ThreadPoolExecutor businessPool = BusinessPool.getBusinessPool(serverTransportConfig);
    if (Objects.isNull(businessPool)) {
        log.error("application preheat, jsf biz pool preheat failed, businessPool is null, serverBeanName:{}", serverBeanName);
        return;
    }
    int corePoolSize = businessPool.getCorePoolSize();
    int maxCorePoolSize = Math.max(corePoolSize, 500);
    if (maxCorePoolSize > corePoolSize) {
        // Raise the core thread count of the JSF server pool
        businessPool.setCorePoolSize(maxCorePoolSize);
    }
    // Start all core threads of the JSF business pool up front
    if (businessPool.getPoolSize() < maxCorePoolSize) {
        businessPool.prestartAllCoreThreads();
    }
});
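The core of this warm-up is two JDK calls, `setCorePoolSize` and `prestartAllCoreThreads`, and it can be tried without JSF. The sketch below is an illustration under assumptions, not the tool's code: `PoolPrestart` is a hypothetical helper, and it adds one precaution the snippet above does not have, namely capping the target at the pool's maximum size. On JDK 9 and later, `setCorePoolSize` throws `IllegalArgumentException` when the new core size exceeds the maximum pool size.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Raises a pool's core size and starts the core threads before traffic arrives. */
final class PoolPrestart {

    /**
     * Raises the core size toward `desired`, capped at the pool maximum,
     * starts all core threads, and returns the resulting pool size.
     */
    static int prestart(ThreadPoolExecutor pool, int desired) {
        int target = Math.min(Math.max(pool.getCorePoolSize(), desired),
                              pool.getMaximumPoolSize());
        if (target > pool.getCorePoolSize()) {
            pool.setCorePoolSize(target);
        }
        pool.prestartAllCoreThreads(); // idle threads now wait for work
        return pool.getPoolSize();
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                20, 200, 60_000, TimeUnit.MILLISECONDS, new SynchronousQueue<>());
        System.out.println("before: " + pool.getPoolSize());
        System.out.println("after:  " + prestart(pool, 500));
        pool.shutdown();
    }
}
```

One trade-off to keep in mind: core threads are not reclaimed by the keep-alive timeout unless `allowCoreThreadTimeOut(true)` is set, so a larger core size keeps those threads alive for the lifetime of the pool.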

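Clue 3: after the JSF-BZ pool is warmed up, the JVM thread count still rises the moment JSF goes online

Another jstack thread dump, compared with the earlier one, shows that the threads that grew in number this time are the JSF-SEV-WORKER threads: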

"JSF-SEV-WORKER-139-T-129" #1295 daemon prio=5 os_prio=0 tid=0x00007ef66000b800 nid=0x7289 runnable [0x00007ef627cf8000]

java.lang.Thread.State: RUNNABLE

at sun.nio.ch.EPollArrayWrapper.epollWait(Native Method)

at sun.nio.ch.EPollArrayWrapper.poll(EPollArrayWrapper.java:269)

at sun.nio.ch.EPollSelectorImpl.doSelect(EPollSelectorImpl.java:79)

at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:86)

- locked <0x0000000644f558b8> (a io.netty.channel.nio.SelectedSelectionKeySet)

- locked <0x0000000641eaaca0> (a java.util.Collections$UnmodifiableSet)

- locked <0x0000000641eaab88> (a sun.nio.ch.EPollSelectorImpl)

at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:97)

at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:101)

at io.netty.channel.nio.SelectedSelectionKeySetSelector.select(SelectedSelectionKeySetSelector.java:68)

at io.netty.channel.nio.NioEventLoop.select(NioEventLoop.java:805)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:457)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at java.lang.Thread.run(Thread.java:745)

Locked ownable synchronizers:

- None

"JSF-SEV-WORKER-139-T-128" #1293 daemon prio=5 os_prio=0 tid=0x00007ef60c002800 nid=0x7288 runnable [0x00007ef627b74000]

java.lang.Thread.State: RUNNABLE

at sun.nio.ch.EPollArrayWrapper.epollWait(Native Method)

at sun.nio.ch.EPollArrayWrapper.poll(EPollArrayWrapper.java:269)

at sun.nio.ch.EPollSelectorImpl.doSelect(EPollSelectorImpl.java:79)

at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:86)

- locked <0x0000000641ea7450> (a io.netty.channel.nio.SelectedSelectionKeySet)

- locked <0x0000000641e971e8> (a java.util.Collections$UnmodifiableSet)

- locked <0x0000000641e970d0> (a sun.nio.ch.EPollSelectorImpl)

at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:97)

at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:101)

at io.netty.channel.nio.SelectedSelectionKeySetSelector.select(SelectedSelectionKeySetSelector.java:68)

at io.netty.channel.nio.NioEventLoop.select(NioEventLoop.java:805)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:457)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at java.lang.Thread.run(Thread.java:745)

Locked ownable synchronizers:

- None

"JSF-SEV-WORKER-139-T-127" #1291 daemon prio=5 os_prio=0 tid=0x00007ef608001000 nid=0x7286 runnable [0x00007ef627df9000]

java.lang.Thread.State: RUNNABLE

at sun.nio.ch.EPollArrayWrapper.epollWait(Native Method)

at sun.nio.ch.EPollArrayWrapper.poll(EPollArrayWrapper.java:269)

at sun.nio.ch.EPollSelectorImpl.doSelect(EPollSelectorImpl.java:79)

at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:86)

- locked <0x0000000641e93998> (a io.netty.channel.nio.SelectedSelectionKeySet)

- locked <0x0000000641e83730> (a java.util.Collections$UnmodifiableSet)

- locked <0x0000000641e83618> (a sun.nio.ch.EPollSelectorImpl)

at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:97)

at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:101)

at io.netty.channel.nio.SelectedSelectionKeySetSelector.select(SelectedSelectionKeySetSelector.java:68)

at io.netty.channel.nio.NioEventLoop.select(NioEventLoop.java:805)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:457)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at java.lang.Thread.run(Thread.java:745)

Locked ownable synchronizers:

- None

So what do the JSF-SEV-WORKER threads do, and can they be warmed up too? With these questions in mind, we went back to the JSF source:

private synchronized EventLoopGroup initChildEventLoopGroup() {
    int threads = (this.childNioEventThreads > 0) ? this.childNioEventThreads : Math.max(8, Constants.DEFAULT_IO_THREADS);
    NamedThreadFactory threadName = new NamedThreadFactory("JSF-SEV-WORKER", isDaemon());
    EventLoopGroup eventLoopGroup;
    if (isUseEpoll()) {
        eventLoopGroup = new EpollEventLoopGroup(threads, (ThreadFactory) threadName);
    } else {
        eventLoopGroup = new NioEventLoopGroup(threads, (ThreadFactory) threadName);
    }
    return eventLoopGroup;
}

The source shows that JSF-SEV-WORKER threads are created by Netty, which JSF uses internally for network communication. A closer reading of the JSF source also reveals a way to warm up these threads:

// Get the NioEventLoopGroup from serverTransportConfig.
// serverTransportConfig is obtained as in the JSF-BZ warm-up code.
NioEventLoopGroup eventLoopGroup = (NioEventLoopGroup) serverTransportConfig.getChildEventLoopGroup();
int threadSize = this.jsfSevWorkerThreads;
while (threadSize-- > 0) {
    new Thread(() -> {
        // Submitting a task by hand makes Netty start a JSF-SEV-WORKER thread, which warms it up
        eventLoopGroup.submit(() -> log.info("submit thread to netty by hand, threadName:{}", Thread.currentThread().getName()));
    }).start();
}

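The effect of warming up the JSF-BZ and JSF-SEV-WORKER threads is shown in the figure below:

Figure 6: Warm-up effect for JSF-BZ / JSF-SEV-WORKER threads

Digging into the source code for clues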

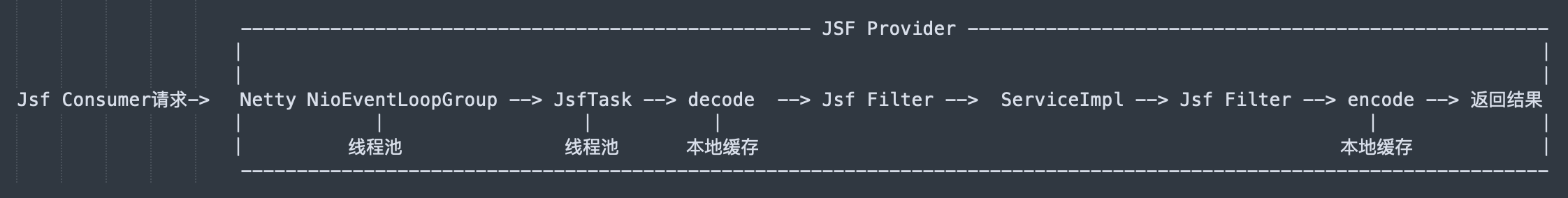

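At this point, with delayed JSF publishing and the internal JSF thread pools warmed up, the jitter and timeouts seen by callers during deployment had eased somewhat (from 10000ms-20000ms down to 5000ms-10000ms). That is an improvement, but not a satisfying one, and there should still be room to optimize. It is time to return to the question we set aside at the beginning: what exactly happens before a call enters the provider's internal logic (or after it leaves)? The most obvious answer is the network, but the network can largely be ruled out because machine network performance was normal during deployment. So what else is involved? Once again we go back to the JSF source for clues.

Figure 7: Provider-side processing flow in the JSF source

A careful reading shows that JSF runs a series of encode, decode, serialize, and deserialize operations on the request and response objects of every interface. Within these operations we made a welcome discovery: local caches. Part of the source is shown below: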

DESC_CLASS_CACHE

private static final ConcurrentMap<String, Class<?>> DESC_CLASS_CACHE = new ConcurrentHashMap<String, Class<?>>();

private static Class<?> desc2class(ClassLoader cl, String desc) throws ClassNotFoundException {

switch (desc.charAt(0)) {

case 'V':

return void.class;

case 'Z': return boolean.class;

case 'B': return byte.class;

case 'C': return char.class;

case 'D': return double.class;

case 'F': return float.class;

case 'I': return int.class;

case 'J': return long.class;

case 'S': return short.class;

case 'L':

desc = desc.substring(1, desc.length() - 1).replace('/', '.');

break;

case '[':

desc = desc.replace('/', '.');

break;

default:

throw new ClassNotFoundException("Class not found: " + desc);

}

if (cl == null)

cl = ClassLoaderUtils.getCurrentClassLoader();

Class<?> clazz = DESC_CLASS_CACHE.get(desc);

if (clazz == null) {

clazz = Class.forName(desc, true, cl);

DESC_CLASS_CACHE.put(desc, clazz);

}

return clazz;

}

NAME_CLASS_CACHE

private static final ConcurrentMap<String, Class<?>> NAME_CLASS_CACHE = new ConcurrentHashMap<String, Class<?>>();

private static Class<?> name2class(ClassLoader cl, String name) throws ClassNotFoundException {

int c = 0, index = name.indexOf('[');

if (index > 0) {

c = (name.length() - index) / 2;

name = name.substring(0, index);

}

if (c > 0) {

StringBuilder sb = new StringBuilder();

while (c-- > 0) {

sb.append("[");

}

if ("void".equals(name)) { sb.append('V'); }

else if ("boolean".equals(name)) { sb.append('Z'); }

else if ("byte".equals(name)) { sb.append('B'); }

else if ("char".equals(name)) { sb.append('C'); }

else if ("double".equals(name)) { sb.append('D'); }

else if ("float".equals(name)) { sb.append('F'); }

else if ("int".equals(name)) { sb.append('I'); }

else if ("long".equals(name)) { sb.append('J'); }

else if ("short".equals(name)) { sb.append('S'); }

else { sb.append('L').append(name).append(';'); }

name = sb.toString();

}

else {

if ("void".equals(name)) return void.class;

if ("boolean".equals(name)) return boolean.class;

if ("byte".equals(name)) return byte.class;

if ("char".equals(name)) return char.class;

if ("double".equals(name)) return double.class;

if ("float".equals(name)) return float.class;

if ("int".equals(name)) return int.class;

if ("long".equals(name)) return long.class;

if ("short".equals(name)) return short.class;

}

if (cl == null)

cl = ClassLoaderUtils.getCurrentClassLoader();

Class<?> clazz = NAME_CLASS_CACHE.get(name);

if (clazz == null) {

clazz = Class.forName(name, true, cl);

NAME_CLASS_CACHE.put(name, clazz);

}

return clazz;

}

SerializerCache

private ConcurrentHashMap _cachedSerializerMap;

public Serializer getSerializer(Class<?> cl) throws HessianProtocolException {

Serializer serializer = (Serializer)_staticSerializerMap.get(cl);

if (serializer != null) {

return serializer;

}

if (this._cachedSerializerMap != null) {

serializer = (Serializer)this._cachedSerializerMap.get(cl);

if (serializer != null) {

return serializer;

}

}

int i = 0;

for (; serializer == null && this._factories != null && i < this._factories.size();

i++) {

AbstractSerializerFactory factory = this._factories.get(i);

serializer = factory.getSerializer(cl);

}

if (serializer == null)

{

if (isZoneId(cl)) {

serializer = ZoneIdSerializer.getInstance();

} else if (isEnumSet(cl)) {

serializer = EnumSetSerializer.getInstance();

} else if (JavaSerializer.getWriteReplace(cl) != null) {

serializer = new JavaSerializer(cl, this._loader);

}

else if (HessianRemoteObject.class.isAssignableFrom(cl)) {

serializer = new RemoteSerializer();

}

else if (Map.class.isAssignableFrom(cl)) {

if (this._mapSerializer == null) {

this._mapSerializer = new MapSerializer();

}

serializer = this._mapSerializer;

} else if (Collection.class.isAssignableFrom(cl)) {

if (this._collectionSerializer == null) {

this._collectionSerializer = new CollectionSerializer();

}

serializer = this._collectionSerializer;

} else if (cl.isArray()) {

serializer = new ArraySerializer();

} else if (Throwable.class.isAssignableFrom(cl)) {

serializer = new ThrowableSerializer(cl, getClassLoader());

} else if (InputStream.class.isAssignableFrom(cl)) {

serializer = new InputStreamSerializer();

} else if (Iterator.class.isAssignableFrom(cl)) {

serializer = IteratorSerializer.create();

} else if (Enumeration.class.isAssignableFrom(cl)) {

serializer = EnumerationSerializer.create();

} else if (Calendar.class.isAssignableFrom(cl)) {

serializer = CalendarSerializer.create();

} else if (Locale.class.isAssignableFrom(cl)) {

serializer = LocaleSerializer.create();

} else if (Enum.class.isAssignableFrom(cl)) {

serializer = new EnumSerializer(cl);

}

}

if (serializer == null) {

serializer = getDefaultSerializer(cl);

}

if (this._cachedSerializerMap == null) {

this._cachedSerializerMap = new ConcurrentHashMap<Object, Object>(8);

}

this._cachedSerializerMap.put(cl, serializer);

return serializer;

}

DeserializerCache

private ConcurrentHashMap _cachedDeserializerMap;

public Deserializer getDeserializer(Class<?> cl) throws HessianProtocolException {

Deserializer deserializer = (Deserializer)_staticDeserializerMap.get(cl);

if (deserializer != null) {

return deserializer;

}

if (this._cachedDeserializerMap != null) {

deserializer = (Deserializer)this._cachedDeserializerMap.get(cl);

if (deserializer != null) {

return deserializer;

}

}

int i = 0;

for (; deserializer == null && this._factories != null && i < this._factories.size();

i++) {

AbstractSerializerFactory factory = this._factories.get(i);

deserializer = factory.getDeserializer(cl);

}

if (deserializer == null)

if (Collection.class.isAssignableFrom(cl)) {

deserializer = new CollectionDeserializer(cl);

}

else if (Map.class.isAssignableFrom(cl)) {

deserializer = new MapDeserializer(cl);

}

else if (cl.isInterface()) {

deserializer = new ObjectDeserializer(cl);

}

else if (cl.isArray()) {

deserializer = new ArrayDeserializer(cl.getComponentType());

}

else if (Enumeration.class.isAssignableFrom(cl)) {

deserializer = EnumerationDeserializer.create();

}

else if (Enum.class.isAssignableFrom(cl)) {

deserializer = new EnumDeserializer(cl);

}

else if (Class.class.equals(cl)) {

deserializer = new ClassDeserializer(this._loader);

} else {

deserializer = getDefaultDeserializer(cl);

}

if (this._cachedDeserializerMap == null) {

this._cachedDeserializerMap = new ConcurrentHashMap<Object, Object>(8);

}

this._cachedDeserializerMap.put(cl, deserializer);

return deserializer;

}

As the source above shows, we found four local caches. Unfortunately all four are private, so we cannot initialize them directly. However, the source also offers public methods through which these four caches can be populated indirectly. The code is as follows:

Warm-up code for DESC_CLASS_CACHE and NAME_CLASS_CACHE

// Warm up DESC_CLASS_CACHE

ReflectUtils.desc2classArray(ReflectUtils.getDesc(Class.forName("cn.jdl.oms.express.model.CreateExpressOrderRequest")));

// Warm up NAME_CLASS_CACHE

ReflectUtils.name2class("cn.jdl.oms.express.model.CreateExpressOrderRequest");

Warm-up code for SerializerCache and DeserializerCache

public class JsfSerializerFactoryPreheat extends HessianSerializerFactory {

    public static void doPreheat(String className) {
        try {
            Class<?> clazz = Class.forName(className);
            // Populate the serializer cache
            JsfSerializerFactoryPreheat.SERIALIZER_FACTORY.getSerializer(clazz);
            // Populate the deserializer cache
            JsfSerializerFactoryPreheat.SERIALIZER_FACTORY.getDeserializer(clazz);
        } catch (Exception e) {
            // Warm-up is best effort: log the failure and carry on with startup
            log.error("JsfSerializerFactoryPreheat failed:", e);
        }
    }
}
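With 46 interfaces, listing every request and response class by hand is error-prone. One possible way to produce the class names to pass to a warm-up method is to walk a service interface by reflection. This is a sketch under assumptions: `PreheatTargets` is a hypothetical helper that is not described in this article, and it only collects top-level parameter and return types, not the types of their fields.

```java
import java.lang.reflect.Method;
import java.util.LinkedHashSet;
import java.util.Set;

/** Collects the class names that appear in a service interface's method signatures. */
final class PreheatTargets {

    /** Returns the names of all non-primitive parameter and return types of the interface. */
    static Set<String> classNamesOf(Class<?> serviceInterface) {
        Set<String> names = new LinkedHashSet<>();
        for (Method method : serviceInterface.getMethods()) {
            add(names, method.getReturnType());
            for (Class<?> parameterType : method.getParameterTypes()) {
                add(names, parameterType);
            }
        }
        return names;
    }

    private static void add(Set<String> names, Class<?> type) {
        // Unwrap arrays so that Foo[] contributes Foo.
        while (type.isArray()) {
            type = type.getComponentType();
        }
        // Primitives (including void) need no class loading or serializer lookup.
        if (!type.isPrimitive()) {
            names.add(type.getName());
        }
    }

    public static void main(String[] args) {
        // A JDK interface stands in for a real service interface here.
        System.out.println(classNamesOf(java.util.concurrent.Callable.class));
    }
}
```

Each returned name could then be fed to a method such as `doPreheat(className)` above.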

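JSF's encoding, decoding, serialization, and deserialization of request and response objects reminded us that our own interface code also serializes them with Fastjson. Fastjson has to initialize its SerializeConfig on first use, which has some performance cost (see https://www.ktanx.com/blog/p/3181). Fastjson can be initialized ahead of time with the following code:

JSON.parseObject(JSON.toJSONString(Class.forName("cn.jdl.oms.express.model.CreateExpressOrderRequest").newInstance()), Class.forName("cn.jdl.oms.express.model.CreateExpressOrderRequest"));

So far, the startup warm-up consists of the following steps:

•Delayed JSF publishing

•JSF-BZ thread pool warm-up

•JSF-SEV-WORKER thread warm-up

•Warm-up of the JSF encode, decode, serialize, and deserialize caches

•Fastjson initialization warm-up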

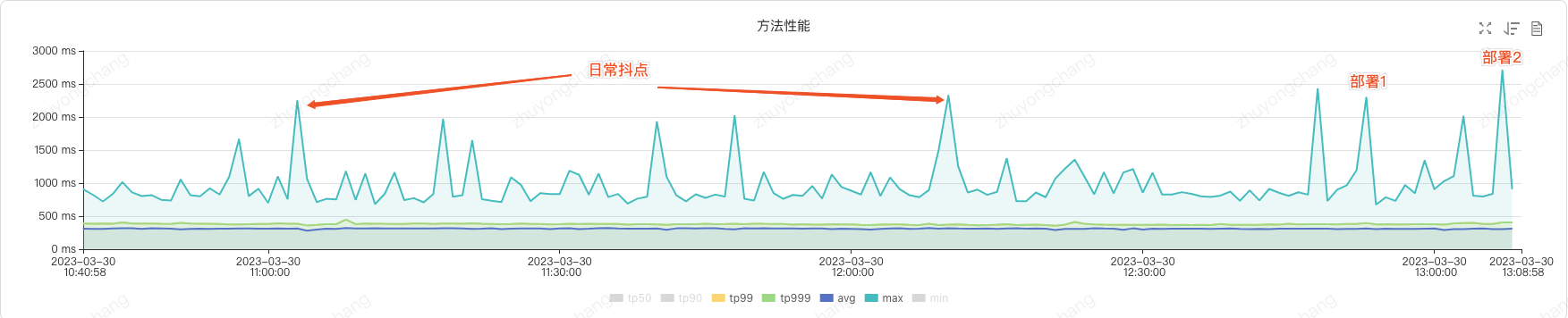

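With these warm-up steps in place, the jitter caused by deployment improved markedly, from 10000ms-20000ms before the fix to 2000ms-3000ms (slightly above the jitter seen under everyday traffic).

Solution

Based on the analysis above, we packaged the JSF thread pool warm-up, the local cache warm-up, and the Fastjson warm-up into a small, easy-to-use warm-up tool. The JAR has been uploaded to the internal private repository. If you are interested, see the usage guide: Application Startup Warm-up Tool Usage Guide.

Service jitter caused by deployment is a common problem. The options currently available are:

1. The warm-up mechanism officially provided by JSF (https://cf.jd.com/pages/viewpage.action?pageId=1132755015)

How it works: the warm-up policy of JSF 1.7.6 is pushed down dynamically, and load balancing adjusts the traffic weight of the interfaces that need warming up, so that a newly deployed instance first runs with a small share of traffic.

Pros: configured on the platform, so the cost of adoption is low.

Cons: warm-up is weight-based, so resources are not fully warmed up; callers must upgrade to JSF 1.7.6, which is hard to drive when there are many upstream callers.

2. Traffic record-and-replay warm-up

How it works: real production traffic is recorded and then replayed, as a load test, against the newly deployed machine.

Pros: integrates with Xingyun deployment orchestration (take offline, deploy, warm up, bring online); replaying as a load test warms the system up more thoroughly.

Cons: the workflow is fairly cumbersome; it is only friendly to read interfaces, and for write interfaces you must check whether production data is affected.

3. The approach in this article

How it works: the provider's JSF thread pools, local caches, and Fastjson are initialized at startup.

Pros: resources are fully warmed up; simple to use, and open to custom extension.

Cons: middleware other than JSF, such as Redis and ES, is not supported yet, but can be covered through custom extension.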
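The article does not show how the tool's custom extension works. As one way to picture it, the sketch below defines a small step interface and a runner that executes every step and isolates failures, so that a failed warm-up never blocks startup. All names here (`Preheater`, `PreheatRunner`) are hypothetical and are not the tool's actual API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** One warm-up step, for example a thread pool, a cache, or a Redis connection. */
interface Preheater {
    String name();

    void preheat() throws Exception;
}

/** Runs warm-up steps in order and reports which ones failed. */
final class PreheatRunner {

    /** Executes every step; a failing step is recorded and does not stop the others. */
    static List<String> runAll(List<Preheater> steps) {
        List<String> failed = new ArrayList<>();
        for (Preheater step : steps) {
            try {
                step.preheat();
            } catch (Exception e) {
                failed.add(step.name());
            }
        }
        return failed;
    }

    public static void main(String[] args) {
        Preheater noop = new Preheater() {
            public String name() { return "noop"; }
            public void preheat() { /* a real step would create threads or fill caches */ }
        };
        System.out.println("failed steps: " + runAll(Arrays.asList(noop)));
    }
}
```

A Redis or ES warm-up would then be one more `Preheater` implementation registered alongside the built-in steps.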

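Warm-up results

Before warm-up:

After warm-up:

With the warm-up tool described in this article, the before-and-after difference is clear. As the figures above show, the MAX method latency seen by callers dropped from 10000ms-20000ms to 2000ms-3000ms, which is close to the everyday MAX jitter.

Summary

Upstream service jitter caused by application deployment is a common problem. If upstream systems are sensitive to jitter, or if jitter has business impact, it deserves serious attention. The Baichuan traffic-routing system discussed here only exposes JSF services and uses no other middleware; its defining traits are many interfaces and a high call volume.

The problem was not obvious early in the system's life, when upstream callers barely noticed a deployment. It only became visible as call volume grew. Scaling out would also ease it, but at a large cost in wasted resources, which runs against the goal of reducing cost. Instead, starting from the available clues, we dug step by step into the JSF source and fully initialized the thread pools, local caches, and related resources at startup, which effectively reduces the service jitter at the moment JSF goes online.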