Yolov5——訓練目標檢測模型

專案的克隆

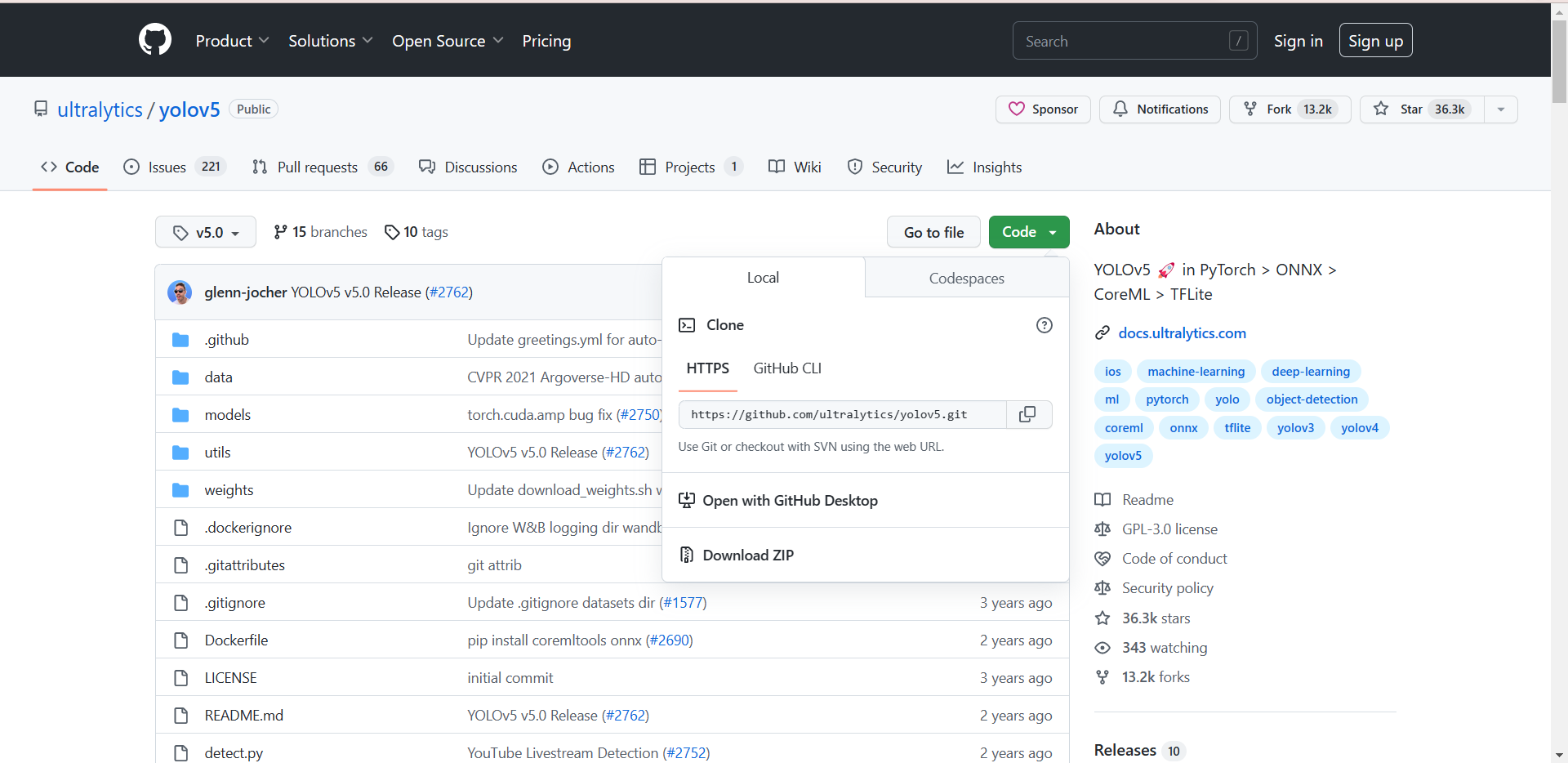

開啟yolov5官網(GitHub - ultralytics/yolov5 at v5.0),下載yolov5的專案:

環境的安裝(免額外安裝CUDA和cudnn)

開啟anaconda的終端,建立新的名為yolov5的環境(python選擇3.8版本):

conda create -n yolov5 python=3.8

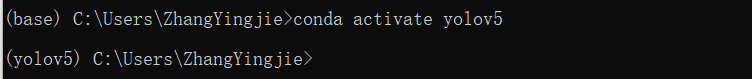

執行如下命令,啟用這個環境:

conda activate yolov5

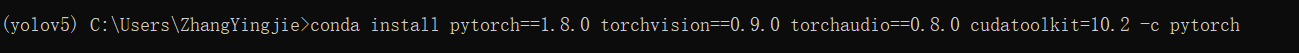

開啟pytorch的官網,選擇自己顯示卡對應的pytorch版本(我的顯示卡為GTX1650,這裡選擇1.8.0pytorch版本):

選擇CUDA版本(這裡我選擇10.2),複製命令到anaconda終端執行:

至此pytorch環境安裝完成,接下來驗證CUDA和cudnn版本,開啟Ptcharm,執行如下程式碼:

import torch print(torch.cuda.is_available()) print(torch.backends.cudnn.is_available()) print(torch.cuda_version) print(torch.backends.cudnn.version())

輸出如下結果表示安裝成功:

利用labelimg標註資料集:

labelimg的安裝:

開啟cmd命令控制檯,輸入如下的命令下載labelimg相關的依賴:

pip install labelimg -i https://pypi.tuna.tsinghua.edu.cn/simple

資料準備:

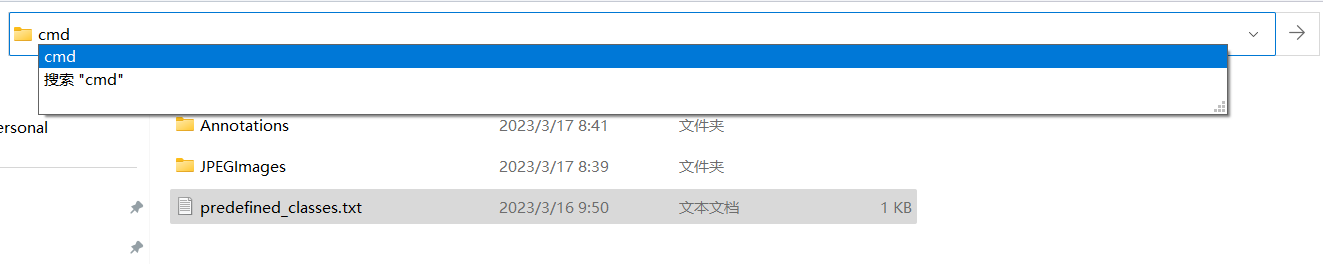

新建一個名為VOC2007的資料夾,在裡面建立一個名為JPEGImages的資料夾存放需要打標籤的圖片檔案;再建立一個名為Annotations的資料夾存放標註的標籤檔案;最後建立一個名為 predefined_classes.txt 的txt檔案來存放所要標註的類別名稱(這裡我的類別一共有6類,分別是fanbingbing,jiangwen,liangjiahui,liuyifei,zhangziyi,zhoujielun):

進入到剛剛建立的VOC2007路徑,執行cmd命令:

輸入如下的命令開啟labelimg並初始化predefined_classes.txt裡面定義的類:

labelimg JPEGImages predefined_classes.txt

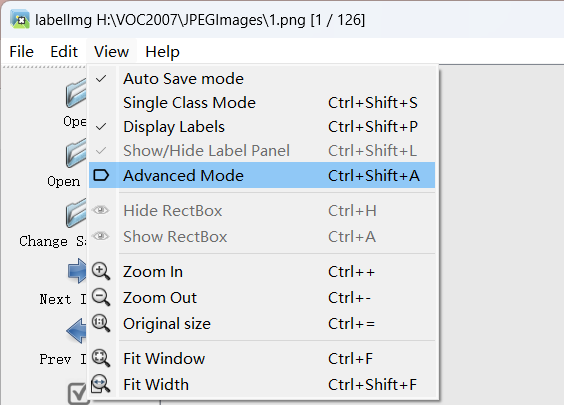

開啟view設定,勾選如下選項(建議勾選):

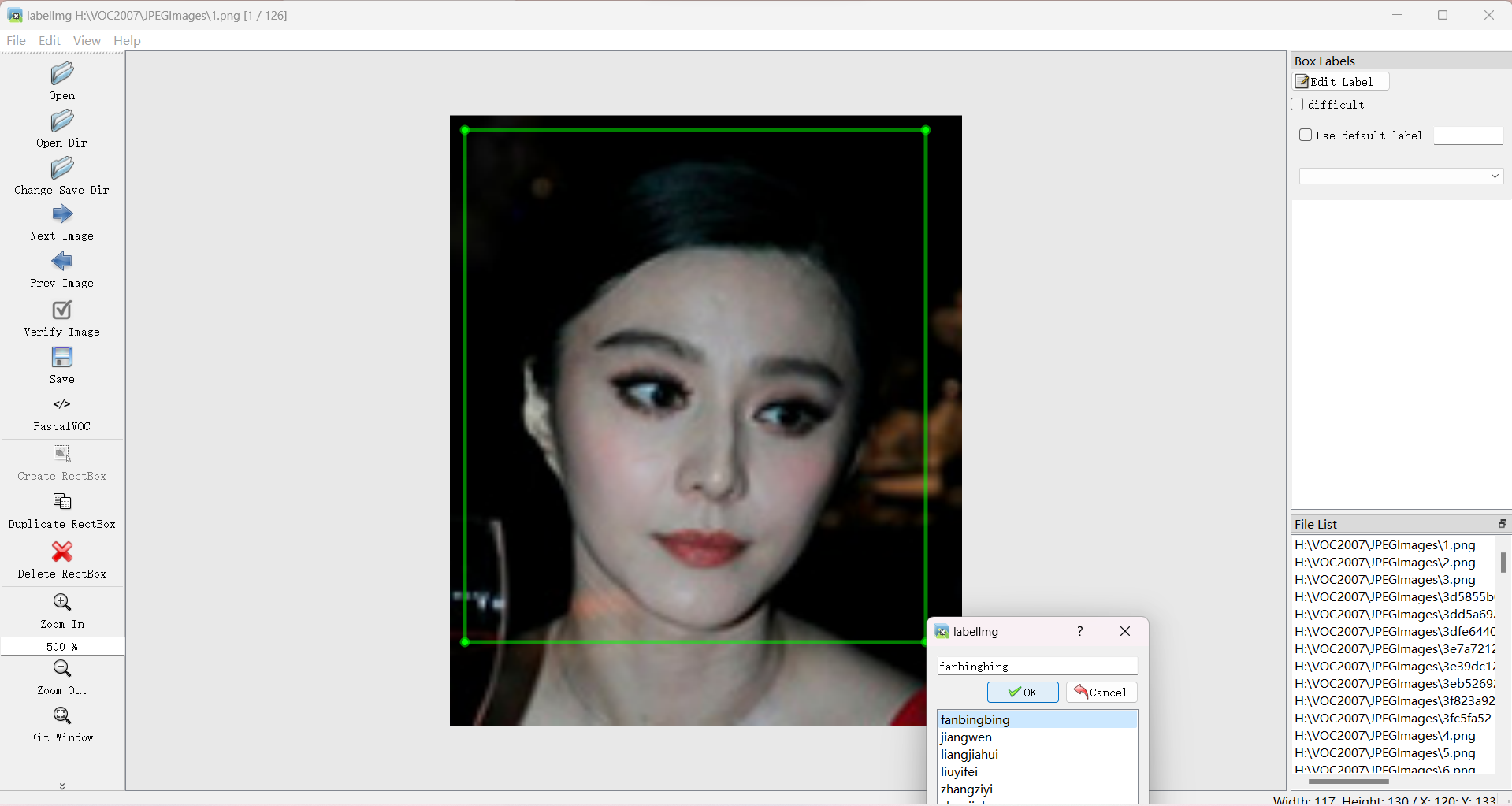

標註資料:

按下快捷鍵W調出標註十字架,選擇需要標註的物件區域,並定義自己要標註的類別:

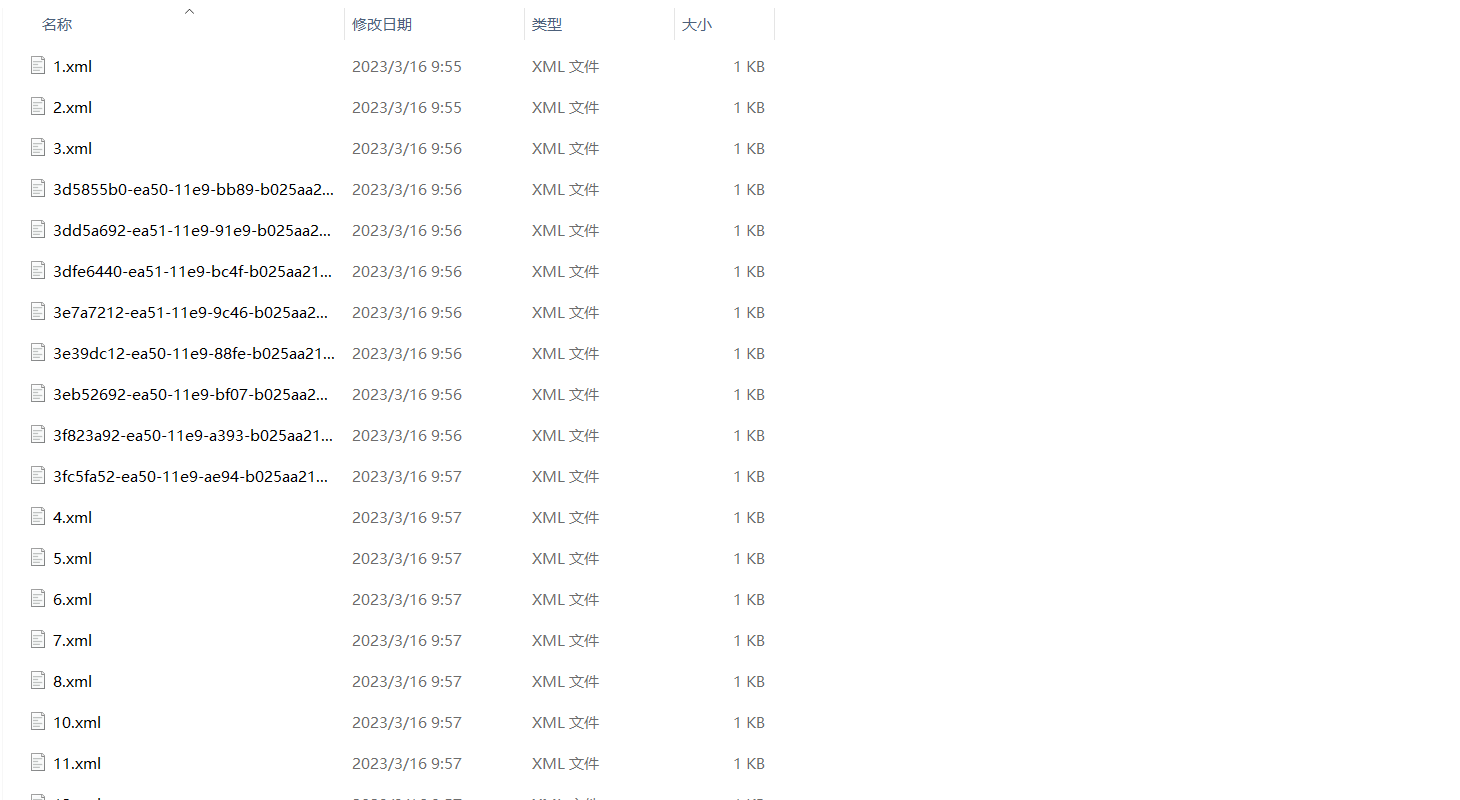

打完標籤後的圖片會在Annotations 資料夾下生成對應的xml檔案:

資料集格式轉化及訓練集和驗證集劃分

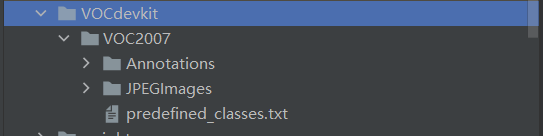

利用pycharm開啟從yolov5官網下載的yolov5專案,在該專案目錄下建立名為VOCdevkit的資料夾,並將剛才的VOC2007資料夾放入:

在VOCdevkit的同級目錄下建立新的python檔案,執行如下程式碼:

(注:classes裡面必須正確填寫xml裡面已經標註好的類這裡為classes = ["fanbingbing", "jiangwen", "liangjiahui", "liuyifei", "zhangziyi", "zhoujielun"])

import xml.etree.ElementTree as ET import pickle import os from os import listdir, getcwd from os.path import join import random from shutil import copyfile classes = ["fanbingbing", "jiangwen", "liangjiahui", "liuyifei", "zhangziyi", "zhoujielun"] # classes=["ball"] TRAIN_RATIO = 80 def clear_hidden_files(path): dir_list = os.listdir(path) for i in dir_list: abspath = os.path.join(os.path.abspath(path), i) if os.path.isfile(abspath): if i.startswith("._"): os.remove(abspath) else: clear_hidden_files(abspath) def convert(size, box): dw = 1. / size[0] dh = 1. / size[1] x = (box[0] + box[1]) / 2.0 y = (box[2] + box[3]) / 2.0 w = box[1] - box[0] h = box[3] - box[2] x = x * dw w = w * dw y = y * dh h = h * dh return (x, y, w, h) def convert_annotation(image_id): in_file = open("F:/Yolov5/yolov5_offical/yolov5-master/VOCdevkit/VOC2007/Annotations/%s.xml" % image_id) out_file = open('F:/Yolov5/yolov5_offical/yolov5-master/VOCdevkit/VOC2007/YOLOLabels/%s.txt' % image_id, 'w') tree = ET.parse(in_file) root = tree.getroot() size = root.find('size') w = int(size.find('width').text) h = int(size.find('height').text) for obj in root.iter('object'): difficult = obj.find('difficult').text cls = obj.find('name').text if cls not in classes or int(difficult) == 1: continue cls_id = classes.index(cls) xmlbox = obj.find('bndbox') b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text), float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text)) bb = convert((w, h), b) out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n') in_file.close() out_file.close() wd = os.getcwd() wd = os.getcwd() data_base_dir = os.path.join(wd, "F:/Yolov5/yolov5_offical/yolov5-master/VOCdevkit/") if not os.path.isdir(data_base_dir): os.mkdir(data_base_dir) work_sapce_dir = os.path.join(data_base_dir, "VOC2007/") if not os.path.isdir(work_sapce_dir): os.mkdir(work_sapce_dir) annotation_dir = os.path.join(work_sapce_dir, "Annotations/") if not os.path.isdir(annotation_dir): os.mkdir(annotation_dir) clear_hidden_files(annotation_dir) image_dir = os.path.join(work_sapce_dir, "JPEGImages/") if not os.path.isdir(image_dir): os.mkdir(image_dir) clear_hidden_files(image_dir) yolo_labels_dir = os.path.join(work_sapce_dir, "YOLOLabels/") if not os.path.isdir(yolo_labels_dir): os.mkdir(yolo_labels_dir) clear_hidden_files(yolo_labels_dir) yolov5_images_dir = os.path.join(data_base_dir, "images/") if not os.path.isdir(yolov5_images_dir): os.mkdir(yolov5_images_dir) clear_hidden_files(yolov5_images_dir) yolov5_labels_dir = os.path.join(data_base_dir, "labels/") if not os.path.isdir(yolov5_labels_dir): os.mkdir(yolov5_labels_dir) clear_hidden_files(yolov5_labels_dir) yolov5_images_train_dir = os.path.join(yolov5_images_dir, "train/") if not os.path.isdir(yolov5_images_train_dir): os.mkdir(yolov5_images_train_dir) clear_hidden_files(yolov5_images_train_dir) yolov5_images_test_dir = os.path.join(yolov5_images_dir, "val/") if not os.path.isdir(yolov5_images_test_dir): os.mkdir(yolov5_images_test_dir) clear_hidden_files(yolov5_images_test_dir) yolov5_labels_train_dir = os.path.join(yolov5_labels_dir, "train/") if not os.path.isdir(yolov5_labels_train_dir): os.mkdir(yolov5_labels_train_dir) clear_hidden_files(yolov5_labels_train_dir) yolov5_labels_test_dir = os.path.join(yolov5_labels_dir, "val/") if not os.path.isdir(yolov5_labels_test_dir): os.mkdir(yolov5_labels_test_dir) clear_hidden_files(yolov5_labels_test_dir) train_file = open(os.path.join(wd, "yolov5_train.txt"), 'w') test_file = open(os.path.join(wd, "yolov5_val.txt"), 'w') train_file.close() test_file.close() train_file = open(os.path.join(wd, "yolov5_train.txt"), 'a') test_file = open(os.path.join(wd, "yolov5_val.txt"), 'a') list_imgs = os.listdir(image_dir) # list image files prob = random.randint(1, 100) print("Probability: %d" % prob) for i in range(0, len(list_imgs)): path = os.path.join(image_dir, list_imgs[i]) if os.path.isfile(path): image_path = image_dir + list_imgs[i] voc_path = list_imgs[i] (nameWithoutExtention, extention) = os.path.splitext(os.path.basename(image_path)) (voc_nameWithoutExtention, voc_extention) = os.path.splitext(os.path.basename(voc_path)) annotation_name = nameWithoutExtention + '.xml' annotation_path = os.path.join(annotation_dir, annotation_name) label_name = nameWithoutExtention + '.txt' label_path = os.path.join(yolo_labels_dir, label_name) prob = random.randint(1, 100) print("Probability: %d" % prob) if (prob < TRAIN_RATIO): # train dataset if os.path.exists(annotation_path): train_file.write(image_path + '\n') convert_annotation(nameWithoutExtention) # convert label copyfile(image_path, yolov5_images_train_dir + voc_path) copyfile(label_path, yolov5_labels_train_dir + label_name) else: # test dataset if os.path.exists(annotation_path): test_file.write(image_path + '\n') convert_annotation(nameWithoutExtention) # convert label copyfile(image_path, yolov5_images_test_dir + voc_path) copyfile(label_path, yolov5_labels_test_dir + label_name) train_file.close() test_file.close()

程式碼執行完成後目錄結構如下:

下載預訓練權重:

開啟這個網址下載預訓練權重,這裡選擇yolov5s.pt。

訓練模型

修改資料組態檔:

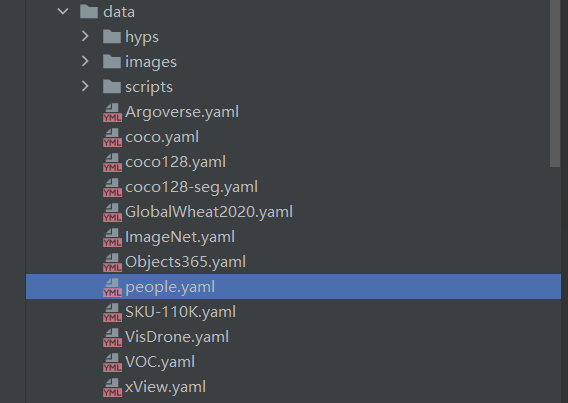

找到data目錄下的voc.yaml檔案,將該檔案複製一份,重新命名為people.yaml:

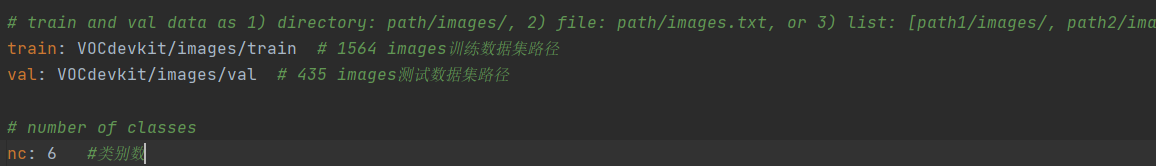

開啟people.yaml,修改相關引數(train,val,nc):

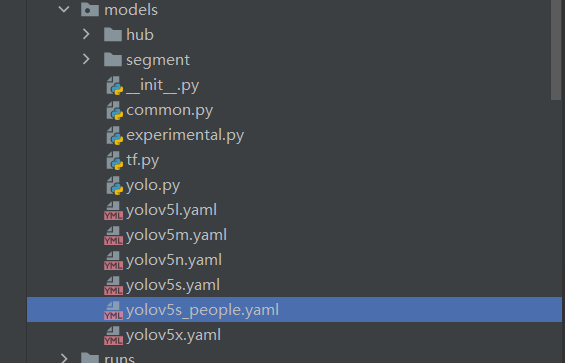

修改模型組態檔:

找到models目錄下的yolov5s.yaml檔案,將該檔案複製一份,重新命名為yolov5s_people.yaml:

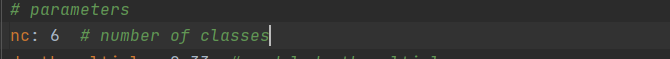

開啟yolov5_people.yaml,修改相關引數(nc):

訓練模型:

開啟train.py,修改如下引數:

weights:權重的路徑

cfg:yolov5s_people.yaml路徑

data:people.yaml路徑

epochs:訓練的輪數

batch-size:每次輸入圖片數量(根據自己電腦情況修改)

workers:最大工作核心數(根據自己電腦情況修改)

執行train.py函數訓練自己的模型。

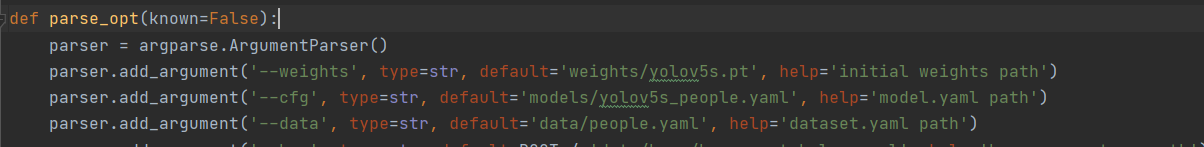

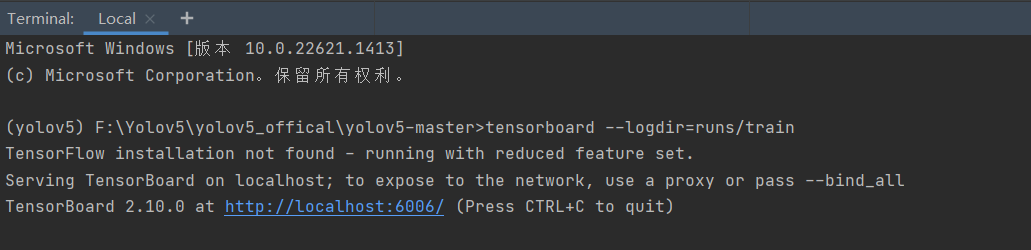

tensorbord檢視引數

開啟pycharm的命令控制終端,執行如下命令:

tensorboard --logdir=runs/train

推理測試

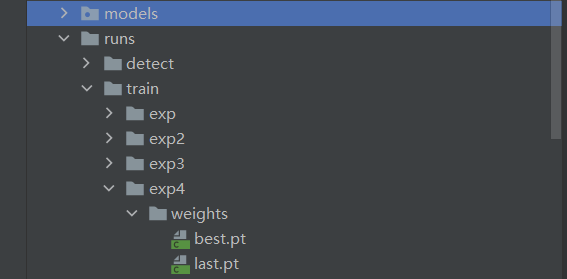

模型訓練完成後,會在主目錄下產生一個名為runs的資料夾,在runs/train/exp/weights目錄下會產生兩個權重檔案,一個是最後一輪的權重檔案,一個是最好的權重檔案。

開啟detect.py檔案,修改相關引數:

weights:權重路徑(這裡選擇best.pt)

source:測試資料路徑,可以是圖片/視訊,也可以是'0'(電腦自帶攝像頭)

行detect.py進行測試,測試結果會儲存在runs/detect/exp目錄下: