【RocketMQ】Dledger紀錄檔複製原始碼分析

訊息儲存

在 【RocketMQ】訊息的儲存一文中提到,Broker收到訊息後會呼叫CommitLog的asyncPutMessage方法寫入訊息,在DLedger模式下使用的是DLedgerCommitLog,進入asyncPutMessages方法,主要處理邏輯如下:

- 呼叫

serialize方法將訊息資料序列化; - 構建批次訊息追加請求

BatchAppendEntryRequest,並設定上一步序列化的訊息資料; - 呼叫

handleAppend方法提交訊息追加請求,進行訊息寫入;

public class DLedgerCommitLog extends CommitLog {

@Override

public CompletableFuture<PutMessageResult> asyncPutMessages(MessageExtBatch messageExtBatch) {

// ...

AppendMessageResult appendResult;

BatchAppendFuture<AppendEntryResponse> dledgerFuture;

EncodeResult encodeResult;

// 將訊息資料序列化

encodeResult = this.messageSerializer.serialize(messageExtBatch);

if (encodeResult.status != AppendMessageStatus.PUT_OK) {

return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, new AppendMessageResult(encodeResult

.status)));

}

putMessageLock.lock();

msgIdBuilder.setLength(0);

long elapsedTimeInLock;

long queueOffset;

int msgNum = 0;

try {

beginTimeInDledgerLock = this.defaultMessageStore.getSystemClock().now();

queueOffset = getQueueOffsetByKey(encodeResult.queueOffsetKey, tranType);

encodeResult.setQueueOffsetKey(queueOffset, true);

// 建立批次追加訊息請求

BatchAppendEntryRequest request = new BatchAppendEntryRequest();

request.setGroup(dLedgerConfig.getGroup()); // 設定group

request.setRemoteId(dLedgerServer.getMemberState().getSelfId());

// 從EncodeResult中獲取序列化的訊息資料

request.setBatchMsgs(encodeResult.batchData);

// 呼叫handleAppend將資料寫入

AppendFuture<AppendEntryResponse> appendFuture = (AppendFuture<AppendEntryResponse>) dLedgerServer.handleAppend(request);

if (appendFuture.getPos() == -1) {

log.warn("HandleAppend return false due to error code {}", appendFuture.get().getCode());

return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.OS_PAGECACHE_BUSY, new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR)));

}

// ...

} catch (Exception e) {

log.error("Put message error", e);

return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR)));

} finally {

beginTimeInDledgerLock = 0;

putMessageLock.unlock();

}

// ...

});

}

}

序列化

在serialize方法中,主要是將訊息資料序列化到記憶體buffer,由於訊息可能有多條,所以開啟迴圈讀取每一條資料進行序列化:

- 讀取總資料大小、魔數和CRC校驗和,這三步是為了讓buffer的讀取指標向後移動;

- 讀取FLAG,記在flag變數;

- 讀取訊息長度,記在bodyLen變數;

- 接下來是訊息內容開始位置,將開始位置記錄在bodyPos變數;

- 從訊息內容開始位置,讀取訊息內容計算CRC校驗和;

- 更改buffer讀取指標位置,將指標從bodyPos開始移動bodyLen個位置,也就是跳過訊息內容,繼續讀取下一個資料;

- 讀取訊息屬性長度,記錄訊息屬性開始位置;

- 獲取主題資訊並計算資料的長度;

- 計算訊息長度,並根據訊息長度分配記憶體;

- 校驗訊息長度是否超過限制;

- 初始化記憶體空間,將訊息的相關內容依次寫入;

- 返回序列化結果

EncodeResult;

class MessageSerializer {

public EncodeResult serialize(final MessageExtBatch messageExtBatch) {

// 設定Key:top+queueId

String key = messageExtBatch.getTopic() + "-" + messageExtBatch.getQueueId();

int totalMsgLen = 0;

// 獲取訊息資料

ByteBuffer messagesByteBuff = messageExtBatch.wrap();

List<byte[]> batchBody = new LinkedList<>();

// 獲取系統標識

int sysFlag = messageExtBatch.getSysFlag();

int bornHostLength = (sysFlag & MessageSysFlag.BORNHOST_V6_FLAG) == 0 ? 4 + 4 : 16 + 4;

int storeHostLength = (sysFlag & MessageSysFlag.STOREHOSTADDRESS_V6_FLAG) == 0 ? 4 + 4 : 16 + 4;

// 分配記憶體

ByteBuffer bornHostHolder = ByteBuffer.allocate(bornHostLength);

ByteBuffer storeHostHolder = ByteBuffer.allocate(storeHostLength);

// 是否有剩餘資料未讀取

while (messagesByteBuff.hasRemaining()) {

// 讀取總大小

messagesByteBuff.getInt();

// 讀取魔數

messagesByteBuff.getInt();

// 讀取CRC校驗和

messagesByteBuff.getInt();

// 讀取FLAG

int flag = messagesByteBuff.getInt();

// 讀取訊息長度

int bodyLen = messagesByteBuff.getInt();

// 記錄訊息內容開始位置

int bodyPos = messagesByteBuff.position();

// 從訊息內容開始位置,讀取訊息內容計算CRC校驗和

int bodyCrc = UtilAll.crc32(messagesByteBuff.array(), bodyPos, bodyLen);

// 更改位置,將指標從bodyPos開始移動bodyLen個位置,也就是跳過訊息內容,繼續讀取下一個資料

messagesByteBuff.position(bodyPos + bodyLen);

// 讀取訊息屬性長度

short propertiesLen = messagesByteBuff.getShort();

// 記錄訊息屬性位置

int propertiesPos = messagesByteBuff.position();

// 更改位置,跳過訊息屬性

messagesByteBuff.position(propertiesPos + propertiesLen);

// 獲取主題資訊

final byte[] topicData = messageExtBatch.getTopic().getBytes(MessageDecoder.CHARSET_UTF8);

// 主題位元組陣列長度

final int topicLength = topicData.length;

// 計算訊息長度

final int msgLen = calMsgLength(messageExtBatch.getSysFlag(), bodyLen, topicLength, propertiesLen);

// 根據訊息長度分配記憶體

ByteBuffer msgStoreItemMemory = ByteBuffer.allocate(msgLen);

// 如果超過了最大訊息大小

if (msgLen > this.maxMessageSize) {

CommitLog.log.warn("message size exceeded, msg total size: " + msgLen + ", msg body size: " +

bodyLen

+ ", maxMessageSize: " + this.maxMessageSize);

throw new RuntimeException("message size exceeded");

}

// 更新總長度

totalMsgLen += msgLen;

// 如果超過了最大訊息大小

if (totalMsgLen > maxMessageSize) {

throw new RuntimeException("message size exceeded");

}

// 初始化記憶體空間

this.resetByteBuffer(msgStoreItemMemory, msgLen);

// 1 寫入長度

msgStoreItemMemory.putInt(msgLen);

// 2 寫入魔數

msgStoreItemMemory.putInt(DLedgerCommitLog.MESSAGE_MAGIC_CODE);

// 3 寫入CRC校驗和

msgStoreItemMemory.putInt(bodyCrc);

// 4 寫入QUEUEID

msgStoreItemMemory.putInt(messageExtBatch.getQueueId());

// 5 寫入FLAG

msgStoreItemMemory.putInt(flag);

// 6 寫入佇列偏移量QUEUEOFFSET

msgStoreItemMemory.putLong(0L);

// 7 寫入物理偏移量

msgStoreItemMemory.putLong(0);

// 8 寫入系統標識SYSFLAG

msgStoreItemMemory.putInt(messageExtBatch.getSysFlag());

// 9 寫入訊息產生的時間戳

msgStoreItemMemory.putLong(messageExtBatch.getBornTimestamp());

// 10 BORNHOST

resetByteBuffer(bornHostHolder, bornHostLength);

msgStoreItemMemory.put(messageExtBatch.getBornHostBytes(bornHostHolder));

// 11 寫入訊息儲存時間戳

msgStoreItemMemory.putLong(messageExtBatch.getStoreTimestamp());

// 12 STOREHOSTADDRESS

resetByteBuffer(storeHostHolder, storeHostLength);

msgStoreItemMemory.put(messageExtBatch.getStoreHostBytes(storeHostHolder));

// 13 RECONSUMETIMES

msgStoreItemMemory.putInt(messageExtBatch.getReconsumeTimes());

// 14 Prepared Transaction Offset

msgStoreItemMemory.putLong(0);

// 15 寫入訊息內容長度

msgStoreItemMemory.putInt(bodyLen);

if (bodyLen > 0) {

// 寫入訊息內容

msgStoreItemMemory.put(messagesByteBuff.array(), bodyPos, bodyLen);

}

// 16 寫入主題

msgStoreItemMemory.put((byte) topicLength);

msgStoreItemMemory.put(topicData);

// 17 寫入屬性長度

msgStoreItemMemory.putShort(propertiesLen);

if (propertiesLen > 0) {

msgStoreItemMemory.put(messagesByteBuff.array(), propertiesPos, propertiesLen);

}

// 建立位元組陣列

byte[] data = new byte[msgLen];

msgStoreItemMemory.clear();

msgStoreItemMemory.get(data);

// 加入到訊息集合

batchBody.add(data);

}

// 返回結果

return new EncodeResult(AppendMessageStatus.PUT_OK, key, batchBody, totalMsgLen);

}

}

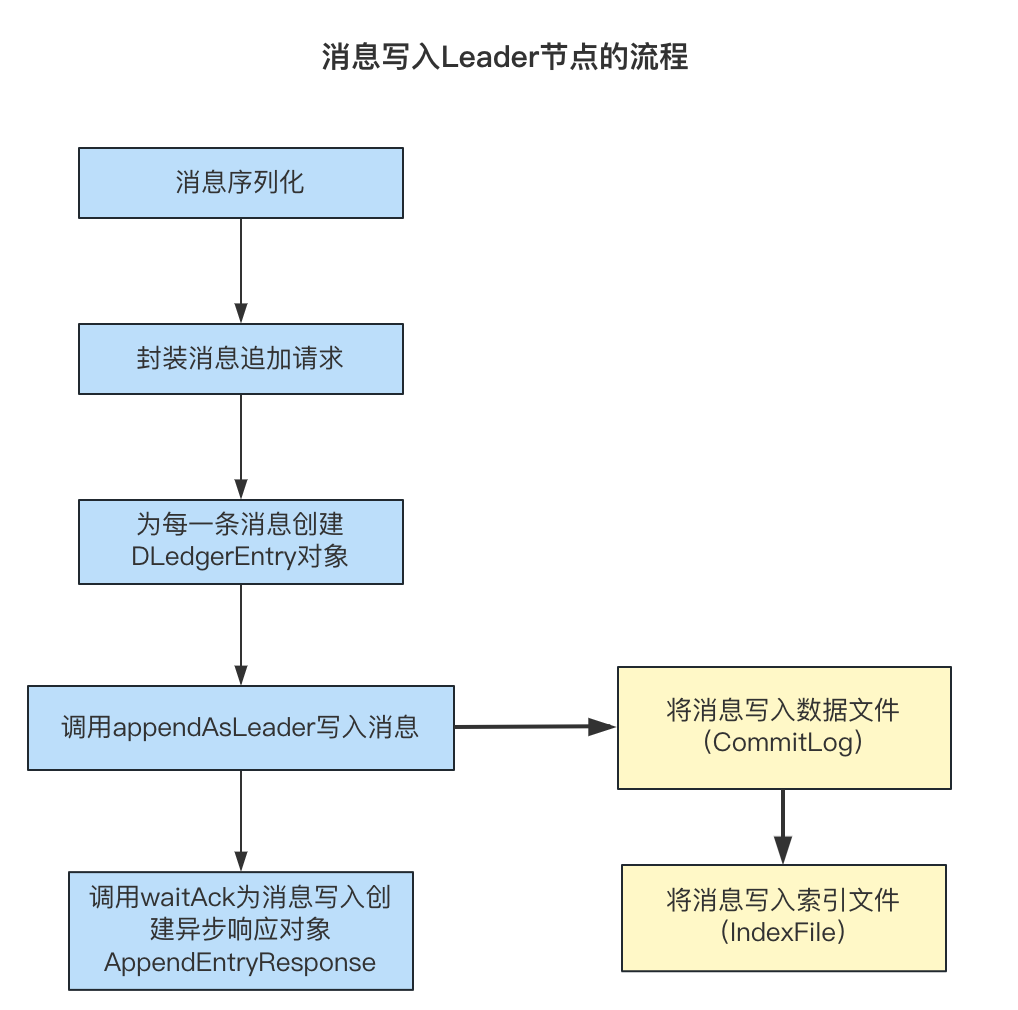

寫入訊息

將訊息資料序列化之後,封裝了訊息追加請求,呼叫handleAppend方法寫入訊息,處理邏輯如下:

- 獲取當前的Term,判斷當前Term對應的寫入請求數量是否超過了最大值,如果未超過進入下一步,如果超過,設定響應狀態為

LEADER_PENDING_FULL表示處理的訊息追加請求數量過多,拒絕處理當前請求; - 校驗是否是批次請求:

- 如果是:遍歷每一個訊息,為訊息建立

DLedgerEntry物件,呼叫appendAsLeader將訊息寫入到Leader節點, 並呼叫waitAck為最後最後一條訊息建立非同步響應物件; - 如果不是:直接為訊息建立

DLedgerEntry物件,呼叫appendAsLeader將訊息寫入到Leader節點並呼叫waitAck建立非同步響應物件;

- 如果是:遍歷每一個訊息,為訊息建立

public class DLedgerServer implements DLedgerProtocolHander {

@Override

public CompletableFuture<AppendEntryResponse> handleAppend(AppendEntryRequest request) throws IOException {

try {

PreConditions.check(memberState.getSelfId().equals(request.getRemoteId()), DLedgerResponseCode.UNKNOWN_MEMBER, "%s != %s", request.getRemoteId(), memberState.getSelfId());

PreConditions.check(memberState.getGroup().equals(request.getGroup()), DLedgerResponseCode.UNKNOWN_GROUP, "%s != %s", request.getGroup(), memberState.getGroup());

// 校驗是否是Leader節點,如果不是Leader丟擲NOT_LEADER異常

PreConditions.check(memberState.isLeader(), DLedgerResponseCode.NOT_LEADER);

PreConditions.check(memberState.getTransferee() == null, DLedgerResponseCode.LEADER_TRANSFERRING);

// 獲取當前的Term

long currTerm = memberState.currTerm();

// 判斷Pengding請求的數量

if (dLedgerEntryPusher.isPendingFull(currTerm)) {

AppendEntryResponse appendEntryResponse = new AppendEntryResponse();

appendEntryResponse.setGroup(memberState.getGroup());

// 設定響應結果LEADER_PENDING_FULL

appendEntryResponse.setCode(DLedgerResponseCode.LEADER_PENDING_FULL.getCode());

// 設定Term

appendEntryResponse.setTerm(currTerm);

appendEntryResponse.setLeaderId(memberState.getSelfId()); // 設定LeaderID

return AppendFuture.newCompletedFuture(-1, appendEntryResponse);

} else {

if (request instanceof BatchAppendEntryRequest) { // 批次

BatchAppendEntryRequest batchRequest = (BatchAppendEntryRequest) request;

if (batchRequest.getBatchMsgs() != null && batchRequest.getBatchMsgs().size() != 0) {

long[] positions = new long[batchRequest.getBatchMsgs().size()];

DLedgerEntry resEntry = null;

int index = 0;

// 遍歷每一個訊息

Iterator<byte[]> iterator = batchRequest.getBatchMsgs().iterator();

while (iterator.hasNext()) {

// 建立DLedgerEntry

DLedgerEntry dLedgerEntry = new DLedgerEntry();

// 設定訊息內容

dLedgerEntry.setBody(iterator.next());

// 寫入訊息

resEntry = dLedgerStore.appendAsLeader(dLedgerEntry);

positions[index++] = resEntry.getPos();

}

// 為最後一個dLedgerEntry建立非同步響應物件

BatchAppendFuture<AppendEntryResponse> batchAppendFuture =

(BatchAppendFuture<AppendEntryResponse>) dLedgerEntryPusher.waitAck(resEntry, true);

batchAppendFuture.setPositions(positions);

return batchAppendFuture;

}

throw new DLedgerException(DLedgerResponseCode.REQUEST_WITH_EMPTY_BODYS, "BatchAppendEntryRequest" +

" with empty bodys");

} else { // 普通訊息

DLedgerEntry dLedgerEntry = new DLedgerEntry();

// 設定訊息內容

dLedgerEntry.setBody(request.getBody());

// 寫入訊息

DLedgerEntry resEntry = dLedgerStore.appendAsLeader(dLedgerEntry);

// 等待響應,建立非同步響應物件

return dLedgerEntryPusher.waitAck(resEntry, false);

}

}

} catch (DLedgerException e) {

// ...

}

}

}

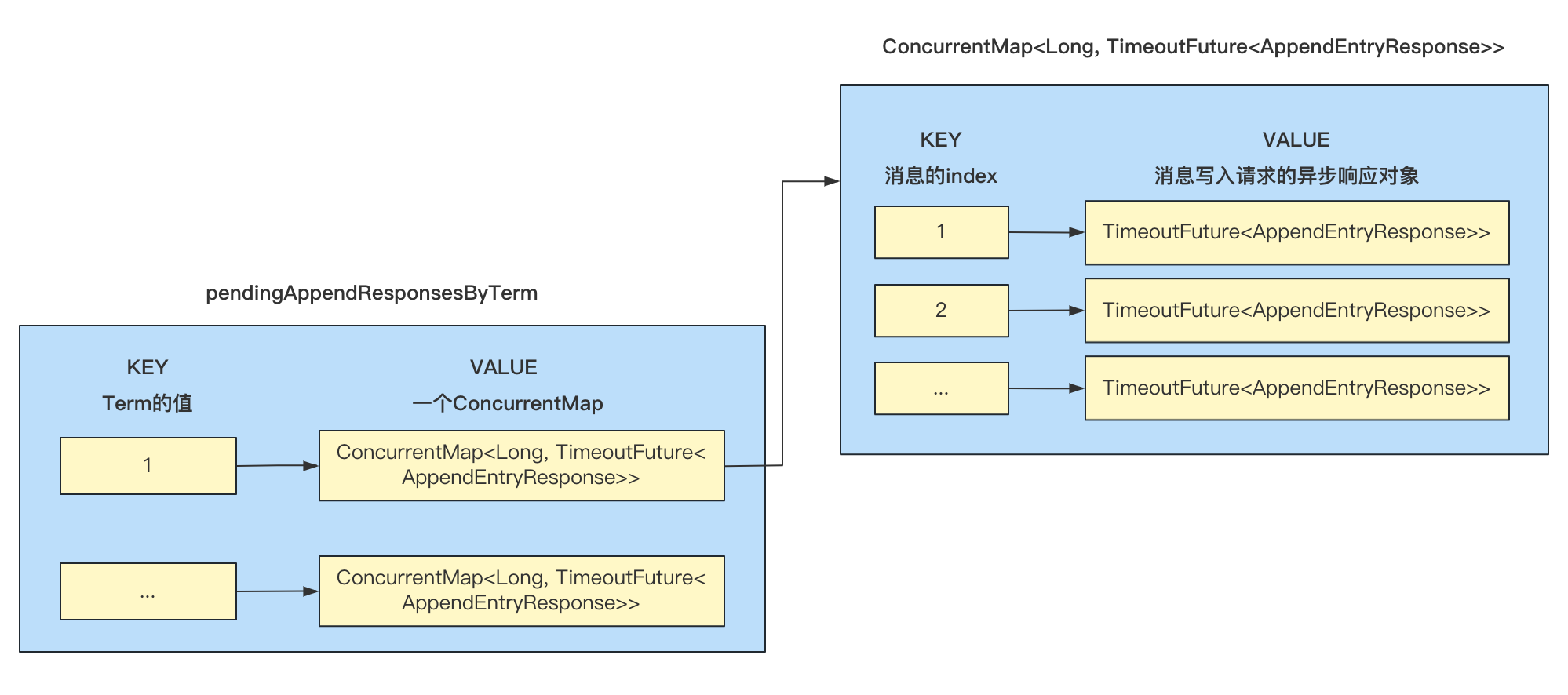

pendingAppendResponsesByTerm

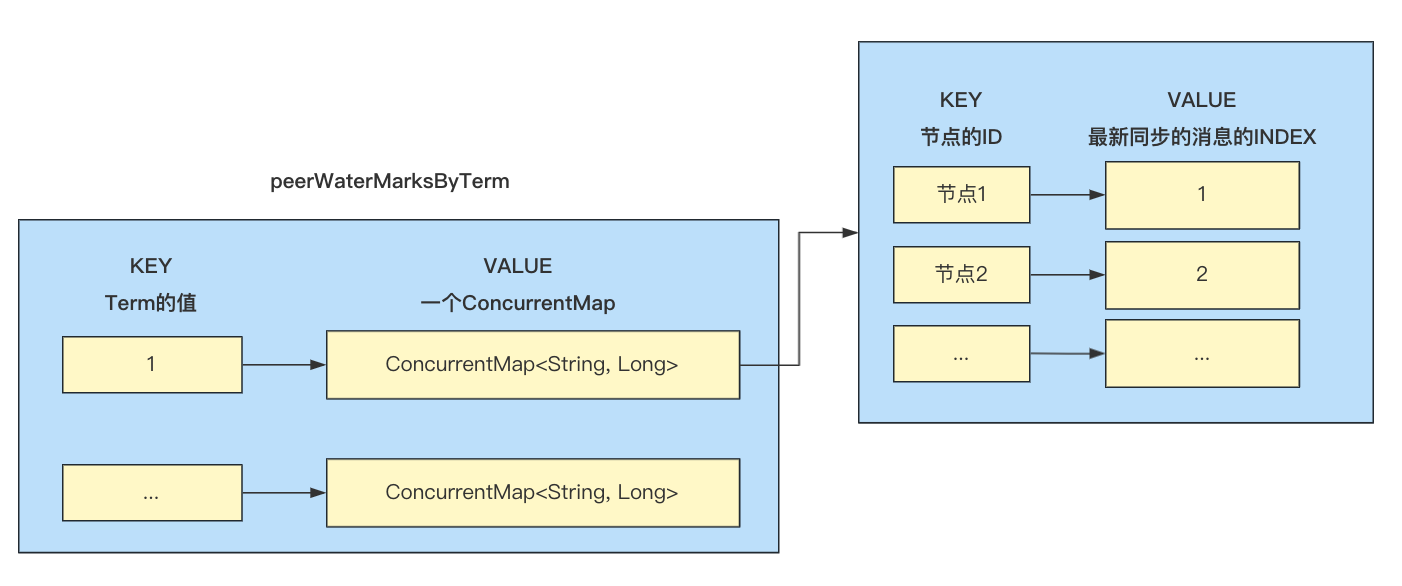

DLedgerEntryPusher中有一個pendingAppendResponsesByTerm成員變數,KEY為Term的值,VALUE是一個ConcurrentHashMap,KEY為訊息的index(每條訊息的編號,從0開始,後面會提到),ConcurrentMap的KEY為訊息的index,value為此條訊息寫入請求的非同步響應物件AppendEntryResponse:

呼叫isPendingFull方法的時候,會先校驗當前Term是否在pendingAppendResponsesByTerm中有對應的值,如果沒有,建立一個ConcurrentHashMap進行初始化,否則獲取對應的ConcurrentHashMap裡面資料的個數,與MaxPendingRequestsNum做對比,校驗是否超過了最大值:

public class DLedgerEntryPusher {

// 外層的KEY為Term的值,value是一個ConcurrentMap

// ConcurrentMap的KEY為訊息的index,value為此條訊息寫入請求的非同步響應物件AppendEntryResponse

private Map<Long, ConcurrentMap<Long, TimeoutFuture<AppendEntryResponse>>> pendingAppendResponsesByTerm = new ConcurrentHashMap<>();

public boolean isPendingFull(long currTerm) {

// 校驗currTerm是否在pendingAppendResponsesByTerm中

checkTermForPendingMap(currTerm, "isPendingFull");

// 判斷當前Term對應的寫入請求數量是否超過了最大值

return pendingAppendResponsesByTerm.get(currTerm).size() > dLedgerConfig.getMaxPendingRequestsNum();

}

private void checkTermForPendingMap(long term, String env) {

// 如果pendingAppendResponsesByTerm不包含

if (!pendingAppendResponsesByTerm.containsKey(term)) {

logger.info("Initialize the pending append map in {} for term={}", env, term);

// 建立一個ConcurrentHashMap加入到pendingAppendResponsesByTerm

pendingAppendResponsesByTerm.putIfAbsent(term, new ConcurrentHashMap<>());

}

}

}

pendingAppendResponsesByTerm的值是在什麼時候加入的?

在寫入Leader節點之後,呼叫DLedgerEntryPusher的waitAck方法(後面會講到)的時候,如果叢集中有多個節點,會為當前的請求建立AppendFuture<AppendEntryResponse>響應物件加入到pendingAppendResponsesByTerm中,所以可以通過pendingAppendResponsesByTerm中存放的響應物件數量判斷當前Term有多少個在等待的寫入請求:

// 建立響應物件

AppendFuture<AppendEntryResponse> future;

// 建立AppendFuture

if (isBatchWait) {

// 批次

future = new BatchAppendFuture<>(dLedgerConfig.getMaxWaitAckTimeMs());

} else {

future = new AppendFuture<>(dLedgerConfig.getMaxWaitAckTimeMs());

}

future.setPos(entry.getPos());

// 將建立的AppendFuture物件加入到pendingAppendResponsesByTerm中

CompletableFuture<AppendEntryResponse> old = pendingAppendResponsesByTerm.get(entry.getTerm()).put(entry.getIndex(), future);

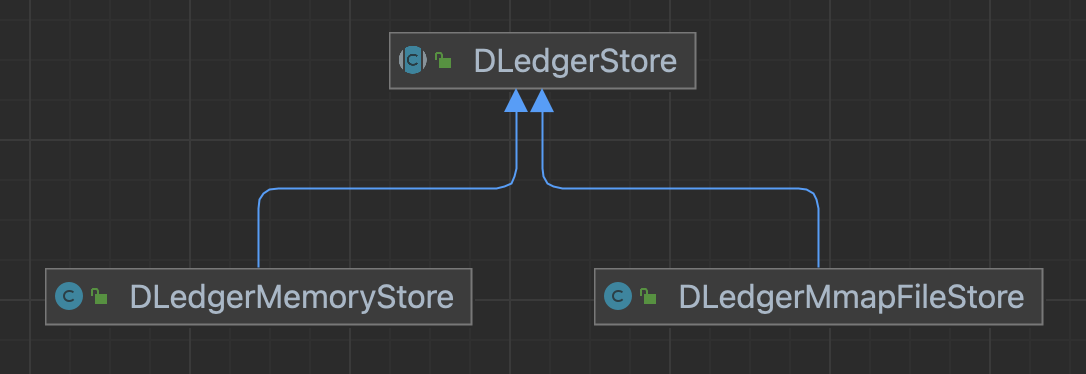

寫入Leader

DLedgerStore有兩個實現類,分別為DLedgerMemoryStore(基於記憶體儲存)和DLedgerMmapFileStore(基於Mmap檔案對映):

在createDLedgerStore方法中可以看到,是根據設定的儲存型別進行選擇的:

public class DLedgerServer implements DLedgerProtocolHander {

public DLedgerServer(DLedgerConfig dLedgerConfig) {

this.dLedgerConfig = dLedgerConfig;

this.memberState = new MemberState(dLedgerConfig);

// 根據設定中的StoreType建立DLedgerStore

this.dLedgerStore = createDLedgerStore(dLedgerConfig.getStoreType(), this.dLedgerConfig, this.memberState);

// ...

}

// 建立DLedgerStore

private DLedgerStore createDLedgerStore(String storeType, DLedgerConfig config, MemberState memberState) {

if (storeType.equals(DLedgerConfig.MEMORY)) {

return new DLedgerMemoryStore(config, memberState);

} else {

return new DLedgerMmapFileStore(config, memberState);

}

}

}

appendAsLeader

接下來以DLedgerMmapFileStore為例,看下appendAsLeader的處理邏輯:

-

進行Leader節點校驗和磁碟已滿校驗;

-

獲取紀錄檔資料buffer(dataBuffer)和索引資料buffer(indexBuffer),會先將內容寫入buffer,再將buffer內容寫入檔案;

-

將entry訊息內容寫入dataBuffer;

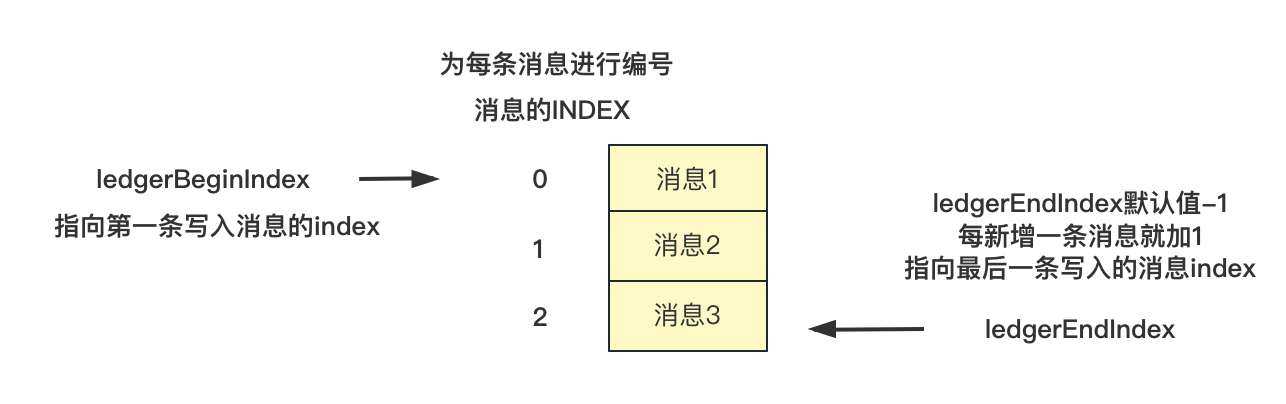

-

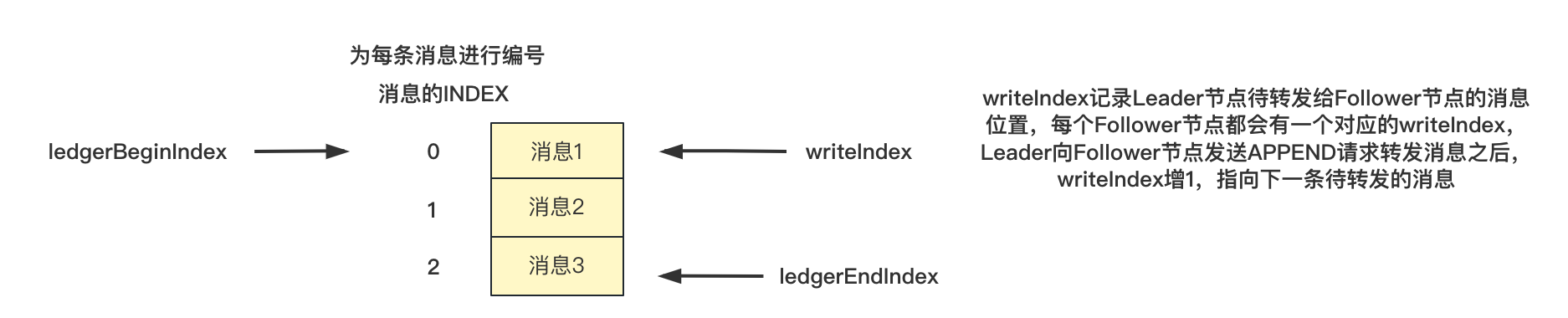

設定訊息的index(為每條訊息進行了編號),為ledgerEndIndex + 1,ledgerEndIndex初始值為-1,新增一條訊息ledgerEndIndex的值也會增1,ledgerEndIndex是隨著訊息的增加而遞增的,寫入成功之後會更新ledgerEndIndex的值,ledgerEndIndex記錄最後一條成功寫入訊息的index;

-

呼叫dataFileList的append方法將dataBuffer內容寫入紀錄檔檔案,返回資料在檔案中的偏移量;

-

將索引資訊寫入indexBuffer;

-

呼叫indexFileList的append方法將indexBuffer內容寫入索引檔案;

-

ledgerEndIndex加1;

-

設定ledgerEndTerm的值為當前Term;

-

呼叫

updateLedgerEndIndexAndTerm方法更新MemberState中記錄的LedgerEndIndex和LedgerEndTerm的值,LedgerEndIndex會在FLUSH的時候,將內容寫入到檔案進行持久化儲存。

public class DLedgerMmapFileStore extends DLedgerStore {

// 紀錄檔資料buffer

private ThreadLocal<ByteBuffer> localEntryBuffer;

// 索引資料buffer

private ThreadLocal<ByteBuffer> localIndexBuffer;

@Override

public DLedgerEntry appendAsLeader(DLedgerEntry entry) {

// Leader校驗判斷當前節點是否是Leader

PreConditions.check(memberState.isLeader(), DLedgerResponseCode.NOT_LEADER);

// 磁碟是否已滿校驗

PreConditions.check(!isDiskFull, DLedgerResponseCode.DISK_FULL);

// 獲取紀錄檔資料buffer

ByteBuffer dataBuffer = localEntryBuffer.get();

// 獲取索引資料buffer

ByteBuffer indexBuffer = localIndexBuffer.get();

// 將entry訊息內容寫入dataBuffer

DLedgerEntryCoder.encode(entry, dataBuffer);

int entrySize = dataBuffer.remaining();

synchronized (memberState) {

PreConditions.check(memberState.isLeader(), DLedgerResponseCode.NOT_LEADER, null);

PreConditions.check(memberState.getTransferee() == null, DLedgerResponseCode.LEADER_TRANSFERRING, null);

// 設定訊息的index,為ledgerEndIndex + 1

long nextIndex = ledgerEndIndex + 1;

// 設定訊息的index

entry.setIndex(nextIndex);

// 設定Term

entry.setTerm(memberState.currTerm());

// 設定魔數

entry.setMagic(CURRENT_MAGIC);

// 設定Term的Index

DLedgerEntryCoder.setIndexTerm(dataBuffer, nextIndex, memberState.currTerm(), CURRENT_MAGIC);

long prePos = dataFileList.preAppend(dataBuffer.remaining());

entry.setPos(prePos);

PreConditions.check(prePos != -1, DLedgerResponseCode.DISK_ERROR, null);

DLedgerEntryCoder.setPos(dataBuffer, prePos);

for (AppendHook writeHook : appendHooks) {

writeHook.doHook(entry, dataBuffer.slice(), DLedgerEntry.BODY_OFFSET);

}

// 將dataBuffer內容寫入紀錄檔檔案,返回資料的位置

long dataPos = dataFileList.append(dataBuffer.array(), 0, dataBuffer.remaining());

PreConditions.check(dataPos != -1, DLedgerResponseCode.DISK_ERROR, null);

PreConditions.check(dataPos == prePos, DLedgerResponseCode.DISK_ERROR, null);

// 將索引資訊寫入indexBuffer

DLedgerEntryCoder.encodeIndex(dataPos, entrySize, CURRENT_MAGIC, nextIndex, memberState.currTerm(), indexBuffer);

// 將indexBuffer內容寫入索引檔案

long indexPos = indexFileList.append(indexBuffer.array(), 0, indexBuffer.remaining(), false);

PreConditions.check(indexPos == entry.getIndex() * INDEX_UNIT_SIZE, DLedgerResponseCode.DISK_ERROR, null);

if (logger.isDebugEnabled()) {

logger.info("[{}] Append as Leader {} {}", memberState.getSelfId(), entry.getIndex(), entry.getBody().length);

}

// ledgerEndIndex自增

ledgerEndIndex++;

// 設定ledgerEndTerm的值為當前Term

ledgerEndTerm = memberState.currTerm();

if (ledgerBeginIndex == -1) {

// 更新ledgerBeginIndex

ledgerBeginIndex = ledgerEndIndex;

}

// 更新LedgerEndIndex和LedgerEndTerm

updateLedgerEndIndexAndTerm();

return entry;

}

}

}

更新LedgerEndIndex和LedgerEndTerm

在訊息寫入Leader之後,會呼叫getLedgerEndIndex和getLedgerEndTerm法獲取DLedgerMmapFileStore中記錄的LedgerEndIndex和LedgerEndTerm的值,然後更新到MemberState中:

public abstract class DLedgerStore {

protected void updateLedgerEndIndexAndTerm() {

if (getMemberState() != null) {

// 呼叫MemberState的updateLedgerIndexAndTerm進行更新

getMemberState().updateLedgerIndexAndTerm(getLedgerEndIndex(), getLedgerEndTerm());

}

}

}

public class MemberState {

private volatile long ledgerEndIndex = -1;

private volatile long ledgerEndTerm = -1;

// 更新ledgerEndIndex和ledgerEndTerm

public void updateLedgerIndexAndTerm(long index, long term) {

this.ledgerEndIndex = index;

this.ledgerEndTerm = term;

}

}

waitAck

在訊息寫入Leader節點之後,由於Leader節點需要向Follwer節點轉發紀錄檔,這個過程是非同步處理的,所以會在waitAck方法中為訊息的寫入建立非同步響應物件,主要處理邏輯如下:

- 呼叫

updatePeerWaterMark更新水位線,因為Leader節點需要將紀錄檔轉發給各個Follower,這個水位線其實是記錄每個節點訊息的複製進度,也就是複製到哪條訊息,將訊息的index記錄下來,這裡更新的是Leader節點最新寫入訊息的index,後面會看到Follower節點的更新; - 如果叢集中只有一個節點,建立

AppendEntryResponse返回響應; - 如果叢集中有多個節點,由於紀錄檔轉發是非同步進行的,所以建立非同步響應物件

AppendFuture<AppendEntryResponse>,並將建立的物件加入到pendingAppendResponsesByTerm中,pendingAppendResponsesByTerm的資料就是在這裡加入的;

這裡再區分一下pendingAppendResponsesByTerm和peerWaterMarksByTerm:

pendingAppendResponsesByTerm中記錄的是每條訊息寫入請求的非同步響應物件AppendEntryResponse,因為要等待叢集中大多數節點的響應,所以使用了非同步處理,之後獲取處理結果。

peerWaterMarksByTerm中記錄的是每個節點的訊息複製進度,儲存的是每個節點最後一條成功寫入的訊息的index。

public class DLedgerEntryPusher {

public CompletableFuture<AppendEntryResponse> waitAck(DLedgerEntry entry, boolean isBatchWait) {

// 更新當前節點最新寫入訊息的index

updatePeerWaterMark(entry.getTerm(), memberState.getSelfId(), entry.getIndex());

// 如果叢集中只有一個節點

if (memberState.getPeerMap().size() == 1) {

// 建立響應

AppendEntryResponse response = new AppendEntryResponse();

response.setGroup(memberState.getGroup());

response.setLeaderId(memberState.getSelfId());

response.setIndex(entry.getIndex());

response.setTerm(entry.getTerm());

response.setPos(entry.getPos());

if (isBatchWait) {

return BatchAppendFuture.newCompletedFuture(entry.getPos(), response);

}

return AppendFuture.newCompletedFuture(entry.getPos(), response);

} else {

// pendingAppendResponsesByTerm

checkTermForPendingMap(entry.getTerm(), "waitAck");

// 響應物件

AppendFuture<AppendEntryResponse> future;

// 建立AppendFuture

if (isBatchWait) {

// 批次

future = new BatchAppendFuture<>(dLedgerConfig.getMaxWaitAckTimeMs());

} else {

future = new AppendFuture<>(dLedgerConfig.getMaxWaitAckTimeMs());

}

future.setPos(entry.getPos());

// 將建立的AppendFuture物件加入到pendingAppendResponsesByTerm中

CompletableFuture<AppendEntryResponse> old = pendingAppendResponsesByTerm.get(entry.getTerm()).put(entry.getIndex(), future);

if (old != null) {

logger.warn("[MONITOR] get old wait at index={}", entry.getIndex());

}

return future;

}

}

}

紀錄檔複製

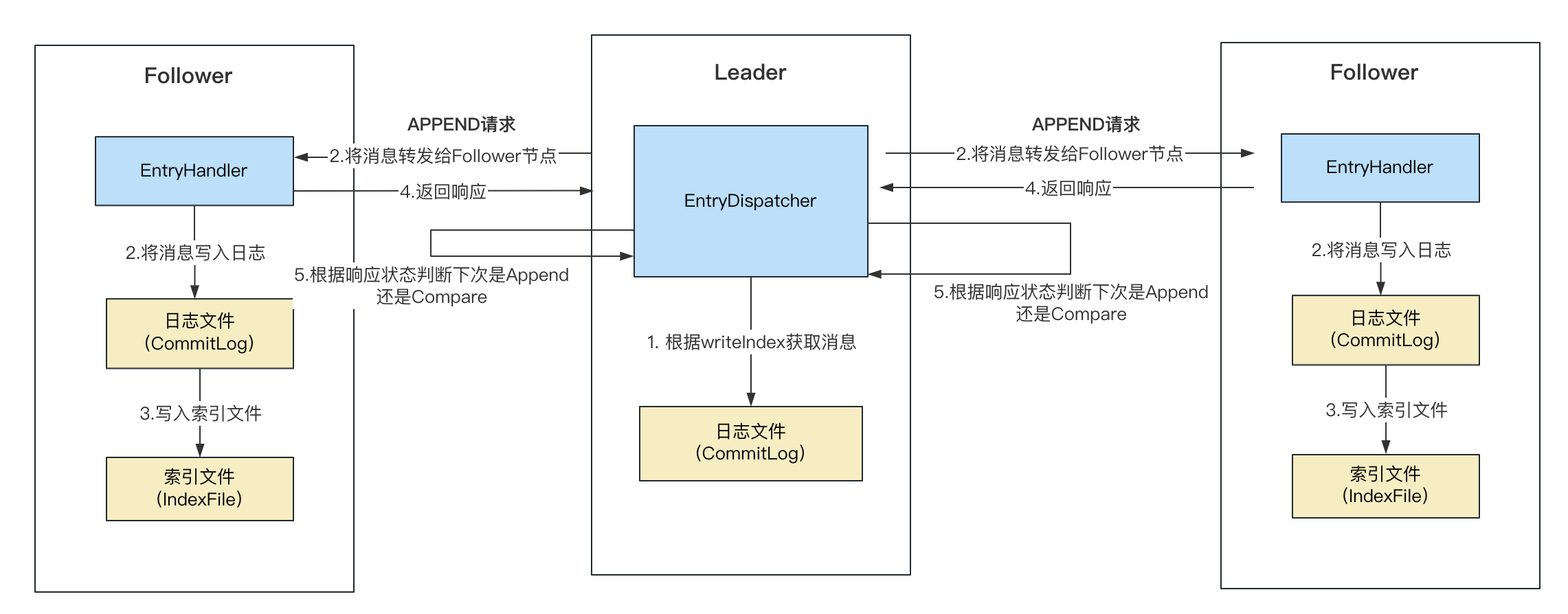

訊息寫入Leader之後,Leader節點會將訊息轉發給其他Follower節點,這個過程是非同步進行處理的,接下來看下訊息的複製過程。

在DLedgerEntryPusher的startup方法中會啟動以下執行緒:

EntryDispatcher:用於Leader節點向Follwer節點轉發紀錄檔;EntryHandler:用於Follower節點處理Leader節點傳送的紀錄檔;QuorumAckChecker:用於Leader節點等待Follower節點同步;

需要注意的是,Leader節點會為每個Follower節點建立EntryDispatcher轉發器,每一個EntryDispatcher負責一個節點的紀錄檔轉發,多個節點之間是並行處理的。

public class DLedgerEntryPusher {

public DLedgerEntryPusher(DLedgerConfig dLedgerConfig, MemberState memberState, DLedgerStore dLedgerStore,

DLedgerRpcService dLedgerRpcService) {

this.dLedgerConfig = dLedgerConfig;

this.memberState = memberState;

this.dLedgerStore = dLedgerStore;

this.dLedgerRpcService = dLedgerRpcService;

for (String peer : memberState.getPeerMap().keySet()) {

if (!peer.equals(memberState.getSelfId())) {

// 為叢集中除當前節點以外的其他節點建立EntryDispatcher

dispatcherMap.put(peer, new EntryDispatcher(peer, logger));

}

}

// 建立EntryHandler

this.entryHandler = new EntryHandler(logger);

// 建立QuorumAckChecker

this.quorumAckChecker = new QuorumAckChecker(logger);

}

public void startup() {

// 啟動EntryHandler

entryHandler.start();

// 啟動QuorumAckChecker

quorumAckChecker.start();

// 啟動EntryDispatcher

for (EntryDispatcher dispatcher : dispatcherMap.values()) {

dispatcher.start();

}

}

}

EntryDispatcher(紀錄檔轉發)

EntryDispatcher用於Leader節點向Follower轉發紀錄檔,它繼承了ShutdownAbleThread,所以會啟動執行緒處理紀錄檔轉發,入口在doWork方法中。

在doWork方法中,首先呼叫checkAndFreshState校驗節點的狀態,這一步主要是校驗當前節點是否是Leader節點以及更改訊息的推播型別,如果不是Leader節點結束處理,如果是Leader節點,對訊息的推播型別進行判斷:

APPEND:訊息追加,用於向Follower轉發訊息,批次訊息呼叫doBatchAppend,否則呼叫doAppend處理;COMPARE:訊息對比,一般出現在資料不一致的情況下,此時呼叫doCompare對比訊息;

public class DLedgerEntryPusher {

// 紀錄檔轉發執行緒

private class EntryDispatcher extends ShutdownAbleThread {

@Override

public void doWork() {

try {

// 檢查狀態

if (!checkAndFreshState()) {

waitForRunning(1);

return;

}

// 如果是APPEND型別

if (type.get() == PushEntryRequest.Type.APPEND) {

// 如果開啟了批次追加

if (dLedgerConfig.isEnableBatchPush()) {

doBatchAppend();

} else {

doAppend();

}

} else {

// 比較

doCompare();

}

Thread.yield();

} catch (Throwable t) {

DLedgerEntryPusher.logger.error("[Push-{}]Error in {} writeIndex={} compareIndex={}", peerId, getName(), writeIndex, compareIndex, t);

// 出現異常轉為COMPARE

changeState(-1, PushEntryRequest.Type.COMPARE);

DLedgerUtils.sleep(500);

}

}

}

}

狀態檢查(checkAndFreshState)

如果Term與memberState記錄的不一致或者LeaderId為空或者LeaderId與memberState的不一致,會呼叫changeState方法,將訊息的推播型別更改為COMPARE,並將compareIndex置為-1:

public class DLedgerEntryPusher {

private class EntryDispatcher extends ShutdownAbleThread {

private long term = -1;

private String leaderId = null;

private boolean checkAndFreshState() {

// 如果不是Leader節點

if (!memberState.isLeader()) {

return false;

}

// 如果Term與memberState記錄的不一致或者LeaderId為空或者LeaderId與memberState的不一致

if (term != memberState.currTerm() || leaderId == null || !leaderId.equals(memberState.getLeaderId())) {

synchronized (memberState) { // 加鎖

if (!memberState.isLeader()) {

return false;

}

PreConditions.check(memberState.getSelfId().equals(memberState.getLeaderId()), DLedgerResponseCode.UNKNOWN);

term = memberState.currTerm();

leaderId = memberState.getSelfId();

// 更改狀態為COMPARE

changeState(-1, PushEntryRequest.Type.COMPARE);

}

}

return true;

}

private synchronized void changeState(long index, PushEntryRequest.Type target) {

logger.info("[Push-{}]Change state from {} to {} at {}", peerId, type.get(), target, index);

switch (target) {

case APPEND:

compareIndex = -1;

updatePeerWaterMark(term, peerId, index);

quorumAckChecker.wakeup();

writeIndex = index + 1;

if (dLedgerConfig.isEnableBatchPush()) {

resetBatchAppendEntryRequest();

}

break;

case COMPARE:

// 如果設定COMPARE狀態成功

if (this.type.compareAndSet(PushEntryRequest.Type.APPEND, PushEntryRequest.Type.COMPARE)) {

compareIndex = -1; // compareIndex改為-1

if (dLedgerConfig.isEnableBatchPush()) {

batchPendingMap.clear();

} else {

pendingMap.clear();

}

}

break;

case TRUNCATE:

compareIndex = -1;

break;

default:

break;

}

type.set(target);

}

}

}

Leader節點訊息轉發

如果處於APPEND狀態,Leader節點會向Follower節點傳送Append請求,將訊息轉發給Follower節點,doAppend方法的處理邏輯如下:

-

呼叫

checkAndFreshState進行狀態檢查; -

判斷推播型別是否是

APPEND,如果不是終止處理; -

writeIndex為待轉發訊息的Index,預設值為-1,判斷是否大於LedgerEndIndex,如果大於呼叫doCommit向Follower節點傳送COMMIT請求更新committedIndex(後面再說);

這裡可以看出轉發紀錄檔的時候也使用了一個計數器writeIndex來記錄待轉發訊息的index,每次根據writeIndex的值從紀錄檔中取出訊息進行轉發,轉發成後更新writeIndex的值(自增)指向下一條資料。

-

如果pendingMap中的大小超過了最大限制maxPendingSize的值,或者上次檢查時間超過了1000ms(有較長的時間未進行清理),進行過期資料清理(這一步主要就是為了清理資料):

pendingMap是一個ConcurrentMap,KEY為訊息的INDEX,value為該條訊息向Follwer節點轉發的時間(doAppendInner方法中會將資料加入到pendingMap);

- 前面知道peerWaterMark的資料記錄了每個節點的訊息複製進度,這裡根據Term和節點ID獲取對應的複製進度(最新複製成功的訊息的index)記在peerWaterMark變數中;

- 遍歷pendingMap,與peerWaterMark的值對比,peerWaterMark之前的訊息表示都已成功的寫入完畢,所以小於peerWaterMark說明已過期可以被清理掉,將資料從pendingMap移除達到清理空間的目的;

- 更新檢查時間lastCheckLeakTimeMs的值為當前時間;

-

呼叫

doAppendInner方法轉發訊息; -

更新writeIndex的值,做自增操作指向下一條待轉發的訊息index;

public class DLedgerEntryPusher {

private class EntryDispatcher extends ShutdownAbleThread {

// 待轉發訊息的Index,預設值為-1

private long writeIndex = -1;

// KEY為訊息的INDEX,value為該條訊息向Follwer節點轉發的時間

private ConcurrentMap<Long, Long> pendingMap = new ConcurrentHashMap<>();

private void doAppend() throws Exception {

while (true) {

// 校驗狀態

if (!checkAndFreshState()) {

break;

}

// 如果不是APPEND狀態,終止

if (type.get() != PushEntryRequest.Type.APPEND) {

break;

}

// 判斷待轉發訊息的Index是否大於LedgerEndIndex

if (writeIndex > dLedgerStore.getLedgerEndIndex()) {

doCommit(); // 向Follower節點傳送COMMIT請求更新

doCheckAppendResponse();

break;

}

// 如果pendingMap中的大小超過了maxPendingSize,或者上次檢查時間超過了1000ms

if (pendingMap.size() >= maxPendingSize || (DLedgerUtils.elapsed(lastCheckLeakTimeMs) > 1000)) {

// 根據節點peerId獲取複製進度

long peerWaterMark = getPeerWaterMark(term, peerId);

// 遍歷pendingMap

for (Long index : pendingMap.keySet()) {

// 如果index小於peerWaterMark

if (index < peerWaterMark) {

// 移除

pendingMap.remove(index);

}

}

// 更新檢查時間

lastCheckLeakTimeMs = System.currentTimeMillis();

}

if (pendingMap.size() >= maxPendingSize) {

doCheckAppendResponse();

break;

}

// 同步訊息

doAppendInner(writeIndex);

// 更新writeIndex的值

writeIndex++;

}

}

}

}

getPeerWaterMark

peerWaterMarksByTerm

peerWaterMarksByTerm中記錄了紀錄檔轉發的進度,KEY為Term,VALUE為ConcurrentMap,ConcurrentMap中的KEY為Follower節點的ID(peerId),VALUE為該節點已經同步完畢的最新的那條訊息的index:

呼叫getPeerWaterMark方法的時候,首先會呼叫checkTermForWaterMark檢查peerWaterMarksByTerm是否存在資料,如果不存在, 建立ConcurrentMap,並遍歷叢集中的節點,加入到ConcurrentMap,其中KEY為節點的ID,value為預設值-1,當訊息成功寫入Follower節點後,會呼叫updatePeerWaterMark更同步進度:

public class DLedgerEntryPusher {

// 記錄Follower節點的同步進度,KEY為Term,VALUE為ConcurrentMap

// ConcurrentMap中的KEY為Follower節點的ID(peerId),VALUE為該節點已經同步完畢的最新的那條訊息的index

private Map<Long, ConcurrentMap<String, Long>> peerWaterMarksByTerm = new ConcurrentHashMap<>();

// 獲取節點的同步進度

public long getPeerWaterMark(long term, String peerId) {

synchronized (peerWaterMarksByTerm) {

checkTermForWaterMark(term, "getPeerWaterMark");

return peerWaterMarksByTerm.get(term).get(peerId);

}

}

private void checkTermForWaterMark(long term, String env) {

// 如果peerWaterMarksByTerm不存在

if (!peerWaterMarksByTerm.containsKey(term)) {

logger.info("Initialize the watermark in {} for term={}", env, term);

// 建立ConcurrentMap

ConcurrentMap<String, Long> waterMarks = new ConcurrentHashMap<>();

// 對叢集中的節點進行遍歷

for (String peer : memberState.getPeerMap().keySet()) {

// 初始化,KEY為節點的PEER,VALUE為-1

waterMarks.put(peer, -1L);

}

// 加入到peerWaterMarksByTerm

peerWaterMarksByTerm.putIfAbsent(term, waterMarks);

}

}

// 更新水位線

private void updatePeerWaterMark(long term, String peerId, long index) {

synchronized (peerWaterMarksByTerm) {

// 校驗

checkTermForWaterMark(term, "updatePeerWaterMark");

// 如果之前的水位線小於當前的index進行更新

if (peerWaterMarksByTerm.get(term).get(peerId) < index) {

peerWaterMarksByTerm.get(term).put(peerId, index);

}

}

}

}

轉發訊息

doAppendInner的處理邏輯如下:

- 根據訊息的index從紀錄檔獲取訊息Entry;

- 呼叫buildPushRequest方法構建紀錄檔轉發請求PushEntryRequest,在請求中設定了訊息entry、當前Term、Leader節點的commitIndex(最後一條得到叢集中大多數節點響應的訊息index)等資訊;

- 呼叫dLedgerRpcService的push方法將請求傳送給Follower節點;

- 將本條訊息對應的index加入到pendingMap中記錄訊息的傳送時間(key為訊息的index,value為當前時間);

- 等待Follower節點返回響應:

(1)如果響應狀態為SUCCESS, 表示節點寫入成功:- 從pendingMap中移除本條訊息index的資訊;

- 更新當前節點的複製進度,也就是updatePeerWaterMark中的值;

- 呼叫quorumAckChecker的wakeup,喚醒QuorumAckChecker執行緒;

(2)如果響應狀態為INCONSISTENT_STATE,表示Follower節點資料出現了不一致的情況,需要呼叫changeState更改狀態為COMPARE;

private class EntryDispatcher extends ShutdownAbleThread {

private void doAppendInner(long index) throws Exception {

// 根據index從紀錄檔獲取訊息Entry

DLedgerEntry entry = getDLedgerEntryForAppend(index);

if (null == entry) {

return;

}

checkQuotaAndWait(entry);

// 構建紀錄檔轉發請求PushEntryRequest

PushEntryRequest request = buildPushRequest(entry, PushEntryRequest.Type.APPEND);

// 新增紀錄檔轉發請求,傳送給Follower節點

CompletableFuture<PushEntryResponse> responseFuture = dLedgerRpcService.push(request);

// 加入到pendingMap中,key為訊息的index,value為當前時間

pendingMap.put(index, System.currentTimeMillis());

responseFuture.whenComplete((x, ex) -> {

try {

// 處理請求響應

PreConditions.check(ex == null, DLedgerResponseCode.UNKNOWN);

DLedgerResponseCode responseCode = DLedgerResponseCode.valueOf(x.getCode());

switch (responseCode) {

case SUCCESS: // 如果成功

// 從pendingMap中移除

pendingMap.remove(x.getIndex());

// 更新updatePeerWaterMark

updatePeerWaterMark(x.getTerm(), peerId, x.getIndex());

// 喚醒

quorumAckChecker.wakeup();

break;

case INCONSISTENT_STATE: // 如果響應狀態為INCONSISTENT_STATE

logger.info("[Push-{}]Get INCONSISTENT_STATE when push index={} term={}", peerId, x.getIndex(), x.getTerm());

changeState(-1, PushEntryRequest.Type.COMPARE); // 轉為COMPARE狀態

break;

default:

logger.warn("[Push-{}]Get error response code {} {}", peerId, responseCode, x.baseInfo());

break;

}

} catch (Throwable t) {

logger.error("", t);

}

});

lastPushCommitTimeMs = System.currentTimeMillis();

}

private PushEntryRequest buildPushRequest(DLedgerEntry entry, PushEntryRequest.Type target) {

PushEntryRequest request = new PushEntryRequest(); // 建立PushEntryRequest

request.setGroup(memberState.getGroup());

request.setRemoteId(peerId);

request.setLeaderId(leaderId);

// 設定Term

request.setTerm(term);

// 設定訊息

request.setEntry(entry);

request.setType(target);

// 設定commitIndex,最後一條得到叢集中大多數節點響應的訊息index

request.setCommitIndex(dLedgerStore.getCommittedIndex());

return request;

}

}

為了便於將Leader節點的轉發和Follower節點的處理邏輯串起來,這裡新增了Follower對APPEND請求的處理連結,Follower處理APPEND請求。

Leader節點訊息比較

處於以下兩種情況之一時,會認為資料出現了不一致的情況,將狀態更改為Compare:

(1)Leader節點在呼叫checkAndFreshState檢查的時候,發現當前Term與memberState記錄的不一致或者LeaderId為空或者LeaderId與memberState記錄的LeaderId不一致;

(2)Follower節點在處理訊息APPEND請求在進行校驗的時候(Follower節點請求校驗連結),發現資料出現了不一致,會在請求的響應中設定不一致的狀態INCONSISTENT_STATE,通知Leader節點;

在COMPARE狀態下,會呼叫doCompare方法向Follower節點傳送比較請求,處理邏輯如下:

- 呼叫

checkAndFreshState校驗狀態; - 判斷是否是

COMPARE或者TRUNCATE請求,如果不是終止處理; - 如果compareIndex為-1(changeState方法將狀態改為COMPARE時中會將compareIndex置為-1),獲取LedgerEndIndex作為compareIndex的值進行更新;

- 如果compareIndex的值大於LedgerEndIndex或者小於LedgerBeginIndex,依舊使用LedgerEndIndex作為compareIndex的值,所以單獨加一個判斷條件應該是為了列印紀錄檔,與第3步做區分;

- 根據compareIndex獲取訊息entry物件,呼叫buildPushRequest方法構建COMPARE請求;

- 向Follower節點推播建COMPARE請求進行比較,這裡可以快速跳轉到Follwer節點對COMPARE請求的處理;

狀態更改為COMPARE之後,compareIndex的值會被初始化為-1,在doCompare中,會將compareIndex的值更改為Leader節點的最後一條寫入的訊息,也就是LedgerEndIndex的值,發給Follower節點進行對比。

向Follower節點發起請求後,等待COMPARE請求返回響應,請求中會將Follower節點最後成功寫入的訊息的index設定在響應物件的EndIndex變數中,第一條寫入的訊息記錄在BeginIndex變數中:

-

請求響應成功:

- 如果compareIndex與follower返回請求中的EndIndex相等,表示沒有資料不一致的情況,將狀態更改為APPEND;

- 其他情況,將truncateIndex的值置為compareIndex;

-

如果請求中返回的EndIndex小於當前節點的LedgerBeginIndex,或者BeginIndex大於LedgerEndIndex,也就是follower與leader的index不相交時, 將truncateIndex設定為Leader的BeginIndex;

根據程式碼中的註釋來看,這種情況通常發生在Follower節點出現故障了很長一段時間,在此期間Leader節點刪除了一些過期的訊息;

-

compareIndex比follower的BeginIndex小,將truncateIndex設定為Leader的BeginIndex;

根據程式碼中的註釋來看,這種情況請通常發生在磁碟出現故障的時候。

-

其他情況,將compareIndex的值減一,從上一條訊息開始繼續對比;

-

如果truncateIndex的值不為-1,呼叫doTruncate方法進行處理;

public class DLedgerEntryPusher {

private class EntryDispatcher extends ShutdownAbleThread {

private void doCompare() throws Exception {

while (true) {

// 校驗狀態

if (!checkAndFreshState()) {

break;

}

// 如果不是COMPARE請求也不是TRUNCATE請求

if (type.get() != PushEntryRequest.Type.COMPARE

&& type.get() != PushEntryRequest.Type.TRUNCATE) {

break;

}

// 如果compareIndex為-1並且LedgerEndIndex為-1

if (compareIndex == -1 && dLedgerStore.getLedgerEndIndex() == -1) {

break;

}

// 如果compareIndex為-1

if (compareIndex == -1) {

// 獲取LedgerEndIndex作為compareIndex

compareIndex = dLedgerStore.getLedgerEndIndex();

logger.info("[Push-{}][DoCompare] compareIndex=-1 means start to compare", peerId);

} else if (compareIndex > dLedgerStore.getLedgerEndIndex() || compareIndex < dLedgerStore.getLedgerBeginIndex()) {

logger.info("[Push-{}][DoCompare] compareIndex={} out of range {}-{}", peerId, compareIndex, dLedgerStore.getLedgerBeginIndex(), dLedgerStore.getLedgerEndIndex());

// 依舊獲取LedgerEndIndex作為compareIndex,這裡應該是為了列印紀錄檔所以單獨又加了一個if條件

compareIndex = dLedgerStore.getLedgerEndIndex();

}

// 根據compareIndex獲取訊息

DLedgerEntry entry = dLedgerStore.get(compareIndex);

PreConditions.check(entry != null, DLedgerResponseCode.INTERNAL_ERROR, "compareIndex=%d", compareIndex);

// 構建COMPARE請求

PushEntryRequest request = buildPushRequest(entry, PushEntryRequest.Type.COMPARE);

// 傳送COMPARE請求

CompletableFuture<PushEntryResponse> responseFuture = dLedgerRpcService.push(request);

// 獲取響應結果

PushEntryResponse response = responseFuture.get(3, TimeUnit.SECONDS);

PreConditions.check(response != null, DLedgerResponseCode.INTERNAL_ERROR, "compareIndex=%d", compareIndex);

PreConditions.check(response.getCode() == DLedgerResponseCode.INCONSISTENT_STATE.getCode() || response.getCode() == DLedgerResponseCode.SUCCESS.getCode()

, DLedgerResponseCode.valueOf(response.getCode()), "compareIndex=%d", compareIndex);

long truncateIndex = -1;

// 如果返回成功

if (response.getCode() == DLedgerResponseCode.SUCCESS.getCode()) {

// 如果compareIndex與 follower的EndIndex相等

if (compareIndex == response.getEndIndex()) {

// 改為APPEND狀態

changeState(compareIndex, PushEntryRequest.Type.APPEND);

break;

} else {

// 將truncateIndex設定為compareIndex

truncateIndex = compareIndex;

}

} else if (response.getEndIndex() < dLedgerStore.getLedgerBeginIndex()

|| response.getBeginIndex() > dLedgerStore.getLedgerEndIndex()) {

/*

The follower's entries does not intersect with the leader.

This usually happened when the follower has crashed for a long time while the leader has deleted the expired entries.

Just truncate the follower.

*/

// 如果請求中返回的EndIndex小於當前節點的LedgerBeginIndex,或者BeginIndex大於LedgerEndIndex

// 當follower與leader的index不相交時,這種情況通常Follower節點出現故障了很長一段時間,在此期間Leader節點刪除了一些過期的訊息

// 將truncateIndex設定為Leader的BeginIndex

truncateIndex = dLedgerStore.getLedgerBeginIndex();

} else if (compareIndex < response.getBeginIndex()) {

/*

The compared index is smaller than the follower's begin index.

This happened rarely, usually means some disk damage.

Just truncate the follower.

*/

// compareIndex比follower的BeginIndex小,通常發生在磁碟出現故障的時候

// 將truncateIndex設定為Leader的BeginIndex

truncateIndex = dLedgerStore.getLedgerBeginIndex();

} else if (compareIndex > response.getEndIndex()) {

/*

The compared index is bigger than the follower's end index.

This happened frequently. For the compared index is usually starting from the end index of the leader.

*/

// compareIndex比follower的EndIndex大

// compareIndexx設定為Follower的EndIndex

compareIndex = response.getEndIndex();

} else {

/*

Compare failed and the compared index is in the range of follower's entries.

*/

// 比較失敗

compareIndex--;

}

// 如果compareIndex比當前節點的LedgerBeginIndex小

if (compareIndex < dLedgerStore.getLedgerBeginIndex()) {

truncateIndex = dLedgerStore.getLedgerBeginIndex();

}

// 如果truncateIndex的值不為-1,呼叫doTruncate開始刪除

if (truncateIndex != -1) {

changeState(truncateIndex, PushEntryRequest.Type.TRUNCATE);

doTruncate(truncateIndex);

break;

}

}

}

}

}

在doTruncate方法中,會構建TRUNCATE請求設定truncateIndex(要刪除的訊息的index),傳送給Follower節點,通知Follower節點將資料不一致的那條訊息刪除,如果響應成功,可以看到接下來呼叫了changeState將狀態改為APPEND,在changeState中,呼叫了updatePeerWaterMark更新節點的複製進度為出現資料不一致的那條訊息的index,同時也更新了writeIndex,下次從writeIndex處重新給Follower節點傳送APPEND請求進行訊息寫入:

private class EntryDispatcher extends ShutdownAbleThread {

private void doTruncate(long truncateIndex) throws Exception {

PreConditions.check(type.get() == PushEntryRequest.Type.TRUNCATE, DLedgerResponseCode.UNKNOWN);

DLedgerEntry truncateEntry = dLedgerStore.get(truncateIndex);

PreConditions.check(truncateEntry != null, DLedgerResponseCode.UNKNOWN);

logger.info("[Push-{}]Will push data to truncate truncateIndex={} pos={}", peerId, truncateIndex, truncateEntry.getPos());

// 構建TRUNCATE請求

PushEntryRequest truncateRequest = buildPushRequest(truncateEntry, PushEntryRequest.Type.TRUNCATE);

// 向Folower節點傳送TRUNCATE請求

PushEntryResponse truncateResponse = dLedgerRpcService.push(truncateRequest).get(3, TimeUnit.SECONDS);

PreConditions.check(truncateResponse != null, DLedgerResponseCode.UNKNOWN, "truncateIndex=%d", truncateIndex);

PreConditions.check(truncateResponse.getCode() == DLedgerResponseCode.SUCCESS.getCode(), DLedgerResponseCode.valueOf(truncateResponse.getCode()), "truncateIndex=%d", truncateIndex);

lastPushCommitTimeMs = System.currentTimeMillis();

// 更改回APPEND狀態

changeState(truncateIndex, PushEntryRequest.Type.APPEND);

}

private synchronized void changeState(long index, PushEntryRequest.Type target) {

logger.info("[Push-{}]Change state from {} to {} at {}", peerId, type.get(), target, index);

switch (target) {

case APPEND:

compareIndex = -1;

// 更新節點的複製進度,改為出現資料不一致的那條訊息的index

updatePeerWaterMark(term, peerId, index);

// 喚醒quorumAckChecker

quorumAckChecker.wakeup();

// 更新writeIndex

writeIndex = index + 1;

if (dLedgerConfig.isEnableBatchPush()) {

resetBatchAppendEntryRequest();

}

break;

// ...

}

type.set(target);

}

}

EntryHandler

EntryHandler用於Follower節點處理Leader傳送的訊息請求,對請求的處理在handlePush方法中,根據請求型別的不同做如下處理:

- 如果是

APPEND請求,將請求加入到writeRequestMap中; - 如果是

COMMIT請求,將請求加入到compareOrTruncateRequests; - 如果是

COMPARE或者TRUNCATE,將請求加入到compareOrTruncateRequests;

handlePush方法中,並沒有直接處理請求,而是將不同型別的請求加入到不同的請求集合中,請求的處理是另外一個執行緒在doWork方法中處理的。

public class DLedgerEntryPusher {

private class EntryHandler extends ShutdownAbleThread {

ConcurrentMap<Long, Pair<PushEntryRequest, CompletableFuture<PushEntryResponse>>> writeRequestMap = new ConcurrentHashMap<>();

BlockingQueue<Pair<PushEntryRequest, CompletableFuture<PushEntryResponse>>> compareOrTruncateRequests = new ArrayBlockingQueue<Pair<PushEntryRequest, CompletableFuture<PushEntryResponse>>>(100);

public CompletableFuture<PushEntryResponse> handlePush(PushEntryRequest request) throws Exception {

CompletableFuture<PushEntryResponse> future = new TimeoutFuture<>(1000);

switch (request.getType()) {

case APPEND: // 如果是Append

if (request.isBatch()) {

PreConditions.check(request.getBatchEntry() != null && request.getCount() > 0, DLedgerResponseCode.UNEXPECTED_ARGUMENT);

} else {

PreConditions.check(request.getEntry() != null, DLedgerResponseCode.UNEXPECTED_ARGUMENT);

}

long index = request.getFirstEntryIndex();

// 將請求加入到writeRequestMap

Pair<PushEntryRequest, CompletableFuture<PushEntryResponse>> old = writeRequestMap.putIfAbsent(index, new Pair<>(request, future));

if (old != null) {

logger.warn("[MONITOR]The index {} has already existed with {} and curr is {}", index, old.getKey().baseInfo(), request.baseInfo());

future.complete(buildResponse(request, DLedgerResponseCode.REPEATED_PUSH.getCode()));

}

break;

case COMMIT: // 如果是提交

// 加入到compareOrTruncateRequests

compareOrTruncateRequests.put(new Pair<>(request, future));

break;

case COMPARE:

case TRUNCATE:

PreConditions.check(request.getEntry() != null, DLedgerResponseCode.UNEXPECTED_ARGUMENT);

writeRequestMap.clear();

// 加入到compareOrTruncateRequests

compareOrTruncateRequests.put(new Pair<>(request, future));

break;

default:

logger.error("[BUG]Unknown type {} from {}", request.getType(), request.baseInfo());

future.complete(buildResponse(request, DLedgerResponseCode.UNEXPECTED_ARGUMENT.getCode()));

break;

}

wakeup();

return future;

}

}

}

EntryHandler同樣繼承了ShutdownAbleThread,所以會啟動執行緒執行doWork方法,在doWork方法中對請求進行了處理:

-

如果compareOrTruncateRequests不為空,對請求型別進行判斷:

- TRUNCATE:呼叫handleDoTruncate處理;

- COMPARE:呼叫handleDoCompare處理;

- COMMIT:呼叫handleDoCommit處理;

-

如果不是第1種情況,會認為是APPEND請求:

(1)LedgerEndIndex記錄了最後一條成功寫入訊息的index,對其 + 1表示下一條待寫入訊息的index;

(2)根據待寫入訊息的index從writeRequestMap獲取資料,如果獲取為空,呼叫checkAbnormalFuture進行檢查;

(3)獲取不為空,呼叫handleDoAppend方法處理訊息寫入;

這裡可以看出,Follower是從當前記錄的最後一條成功寫入的index(LedgerEndIndex),進行加1來處理下一條需要寫入的訊息的。

public class DLedgerEntryPusher {

private class EntryHandler extends ShutdownAbleThread {

@Override

public void doWork() {

try {

// 判斷是否是Follower

if (!memberState.isFollower()) {

waitForRunning(1);

return;

}

// 如果compareOrTruncateRequests不為空

if (compareOrTruncateRequests.peek() != null) {

Pair<PushEntryRequest, CompletableFuture<PushEntryResponse>> pair = compareOrTruncateRequests.poll();

PreConditions.check(pair != null, DLedgerResponseCode.UNKNOWN);

switch (pair.getKey().getType()) {

case TRUNCATE: // TRUNCATE

handleDoTruncate(pair.getKey().getEntry().getIndex(), pair.getKey(), pair.getValue());

break;

case COMPARE: // COMPARE

handleDoCompare(pair.getKey().getEntry().getIndex(), pair.getKey(), pair.getValue());

break;

case COMMIT: // COMMIT

handleDoCommit(pair.getKey().getCommitIndex(), pair.getKey(), pair.getValue());

break;

default:

break;

}

} else {

// 設定訊息Index,為最後一條成功寫入的訊息index + 1

long nextIndex = dLedgerStore.getLedgerEndIndex() + 1;

// 從writeRequestMap取出請求

Pair<PushEntryRequest, CompletableFuture<PushEntryResponse>> pair = writeRequestMap.remove(nextIndex);

// 如果獲取的請求為空,呼叫checkAbnormalFuture進行檢查

if (pair == null) {

checkAbnormalFuture(dLedgerStore.getLedgerEndIndex());

waitForRunning(1);

return;

}

PushEntryRequest request = pair.getKey();

if (request.isBatch()) {

handleDoBatchAppend(nextIndex, request, pair.getValue());

} else {

// 處理

handleDoAppend(nextIndex, request, pair.getValue());

}

}

} catch (Throwable t) {

DLedgerEntryPusher.logger.error("Error in {}", getName(), t);

DLedgerUtils.sleep(100);

}

}

}

Follower資料不一致檢查

checkAbnormalFuture

方法用於檢查資料的一致性,處理邏輯如下: 1. 如果距離上次檢查的時間未超過1000ms,直接返回; 2. 更新檢查時間lastCheckFastForwardTimeMs的值; 3. 如果writeRequestMap為空表示目前沒有寫入請求,暫不需要處理; 4. 呼叫`checkAppendFuture`方法進行檢查;public class DLedgerEntryPusher {

private class EntryHandler extends ShutdownAbleThread {

/**

* The leader does push entries to follower, and record the pushed index. But in the following conditions, the push may get stopped.

* * If the follower is abnormally shutdown, its ledger end index may be smaller than before. At this time, the leader may push fast-forward entries, and retry all the time.

* * If the last ack is missed, and no new message is coming in.The leader may retry push the last message, but the follower will ignore it.

* @param endIndex

*/

private void checkAbnormalFuture(long endIndex) {

// 如果距離上次檢查的時間未超過1000ms

if (DLedgerUtils.elapsed(lastCheckFastForwardTimeMs) < 1000) {

return;

}

// 更新檢查時間

lastCheckFastForwardTimeMs = System.currentTimeMillis();

// 如果writeRequestMap表示沒有寫入請求,暫不需要處理

if (writeRequestMap.isEmpty()) {

return;

}

// 檢查

checkAppendFuture(endIndex);

}

}

}

checkAppendFuture方法中的入參endIndex,表示當前待寫入訊息的index,也就是當前節點記錄的最後一條成功寫入的index(LedgerEndIndex)值加1,方法的處理邏輯如下:

-

將

minFastForwardIndex初始化為最大值,minFastForwardIndex用於找到最小的那個出現資料不一致的訊息index; -

遍歷

writeRequestMap,處理每一個正在進行中的寫入請求:

(1)由於訊息可能是批次的,所以獲取當前請求中的第一條訊息index,記為firstEntryIndex;

(2)獲取當前請求中的最後一條訊息index,記為lastEntryIndex;

(3)如果lastEntryIndex如果小於等於endIndex的值,進行如下處理:- 對比請求中的訊息與當前節點儲存的訊息是否一致,如果是批次訊息,遍歷請求中的每一個訊息,並根據訊息的index從當前節的紀錄檔中獲取訊息進行對比,由於endIndex之前的訊息都已成功寫入,對應的寫入請求還在writeRequestMap中表示可能由於某些原因未能從writeRequestMap中移除,所以如果資料對比一致的情況下可以將對應的請求響應設定為完成,並從writeRequestMap中移除;如果對比不一致,進入到例外處理,構建響應請求,狀態設定為INCONSISTENT_STATE,通知Leader節點出現了資料不一致的情況;

(4)如果第一條訊息firstEntryIndex與endIndex + 1相等(這裡不太明白為什麼不是與endIndex 相等而是需要加1),表示該請求是endIndex之後的訊息請求,結束本次檢查;

(5)判斷當前請求的處理時間是否超時,如果未超時,繼續處理下一個請求,如果超時進入到下一步;

(6)走到這裡,如果firstEntryIndex比minFastForwardIndex小,說明出現了資料不一致的情況,此時更新minFastForwardIndex,記錄最小的那個資料不一致訊息的index; -

如果minFastForwardIndex依舊是MAX_VALUE,表示沒有資料不一致的訊息,直接返回;

-

根據minFastForwardIndex從writeRequestMap獲取請求,如果獲取為空,直接返回,否則呼叫

buildBatchAppendResponse方法構建請求響應,表示資料出現了不一致,在響應中通知Leader節點;

private class EntryHandler extends ShutdownAbleThread {

private void checkAppendFuture(long endIndex) {

// 初始化為最大值

long minFastForwardIndex = Long.MAX_VALUE;

// 遍歷writeRequestMap的value

for (Pair<PushEntryRequest, CompletableFuture<PushEntryResponse>> pair : writeRequestMap.values()) {

// 獲取每個請求裡面的第一條訊息index

long firstEntryIndex = pair.getKey().getFirstEntryIndex();

// 獲取每個請求裡面的最後一條訊息index

long lastEntryIndex = pair.getKey().getLastEntryIndex();

// 如果小於等於endIndex

if (lastEntryIndex <= endIndex) {

try {

if (pair.getKey().isBatch()) { // 批次請求

// 遍歷所有的訊息

for (DLedgerEntry dLedgerEntry : pair.getKey().getBatchEntry()) {

// 校驗與當前節點儲存的訊息是否一致

PreConditions.check(dLedgerEntry.equals(dLedgerStore.get(dLedgerEntry.getIndex())), DLedgerResponseCode.INCONSISTENT_STATE);

}

} else {

DLedgerEntry dLedgerEntry = pair.getKey().getEntry();

// 校驗請求中的訊息與當前節點儲存的訊息是否一致

PreConditions.check(dLedgerEntry.equals(dLedgerStore.get(dLedgerEntry.getIndex())), DLedgerResponseCode.INCONSISTENT_STATE);

}

// 設定完成

pair.getValue().complete(buildBatchAppendResponse(pair.getKey(), DLedgerResponseCode.SUCCESS.getCode()));

logger.warn("[PushFallBehind]The leader pushed an batch append entry last index={} smaller than current ledgerEndIndex={}, maybe the last ack is missed", lastEntryIndex, endIndex);

} catch (Throwable t) {

logger.error("[PushFallBehind]The leader pushed an batch append entry last index={} smaller than current ledgerEndIndex={}, maybe the last ack is missed", lastEntryIndex, endIndex, t);

// 如果出現了異常,向Leader節點傳送資料不一致的請求

pair.getValue().complete(buildBatchAppendResponse(pair.getKey(), DLedgerResponseCode.INCONSISTENT_STATE.getCode()));

}

// 處理之後從writeRequestMap移除

writeRequestMap.remove(pair.getKey().getFirstEntryIndex());

continue;

}

// 如果firstEntryIndex與endIndex + 1相等,表示該請求是endIndex之後的訊息請求,結束本次檢查

if (firstEntryIndex == endIndex + 1) {

return;

}

// 判斷響應是否超時,如果未超時,繼續處理下一個

TimeoutFuture<PushEntryResponse> future = (TimeoutFuture<PushEntryResponse>) pair.getValue();

if (!future.isTimeOut()) {

continue;

}

// 如果firstEntryIndex比minFastForwardIndex小

if (firstEntryIndex < minFastForwardIndex) {

// 更新minFastForwardIndex

minFastForwardIndex = firstEntryIndex;

}

}

// 如果minFastForwardIndex依舊是MAX_VALUE,表示沒有資料不一致的訊息,直接返回

if (minFastForwardIndex == Long.MAX_VALUE) {

return;

}

// 根據minFastForwardIndex獲取請求

Pair<PushEntryRequest, CompletableFuture<PushEntryResponse>> pair = writeRequestMap.get(minFastForwardIndex);

if (pair == null) { // 如果未獲取到直接返回

return;

}

logger.warn("[PushFastForward] ledgerEndIndex={} entryIndex={}", endIndex, minFastForwardIndex);

// 向Leader返回響應,響應狀態為INCONSISTENT_STATE

pair.getValue().complete(buildBatchAppendResponse(pair.getKey(), DLedgerResponseCode.INCONSISTENT_STATE.getCode()));

}

private PushEntryResponse buildBatchAppendResponse(PushEntryRequest request, int code) {

PushEntryResponse response = new PushEntryResponse();

response.setGroup(request.getGroup());

response.setCode(code);

response.setTerm(request.getTerm());

response.setIndex(request.getLastEntryIndex());

// 設定當前節點的LedgerBeginIndex

response.setBeginIndex(dLedgerStore.getLedgerBeginIndex());

// 設定LedgerEndIndex

response.setEndIndex(dLedgerStore.getLedgerEndIndex());

return response;

}

}

Follower節點訊息寫入

handleDoAppend

handleDoAppend方法用於處理Append請求,將Leader轉發的訊息寫入到紀錄檔檔案: 1. 從請求中獲取訊息Entry,**呼叫appendAsFollower方法將訊息寫入檔案**; 2. **呼叫updateCommittedIndex方法將Leader請求中攜帶的commitIndex更新到Follower本地**,後面在講`QuorumAckChecker`時候會提到;public class DLedgerEntryPusher {

private class EntryHandler extends ShutdownAbleThread {

private void handleDoAppend(long writeIndex, PushEntryRequest request,

CompletableFuture<PushEntryResponse> future) {

try {

PreConditions.check(writeIndex == request.getEntry().getIndex(), DLedgerResponseCode.INCONSISTENT_STATE);

// 將訊息寫入紀錄檔

DLedgerEntry entry = dLedgerStore.appendAsFollower(request.getEntry(), request.getTerm(), request.getLeaderId());

PreConditions.check(entry.getIndex() == writeIndex, DLedgerResponseCode.INCONSISTENT_STATE);

future.complete(buildResponse(request, DLedgerResponseCode.SUCCESS.getCode()));

// 更新CommitIndex

dLedgerStore.updateCommittedIndex(request.getTerm(), request.getCommitIndex());

} catch (Throwable t) {

logger.error("[HandleDoWrite] writeIndex={}", writeIndex, t);

future.complete(buildResponse(request, DLedgerResponseCode.INCONSISTENT_STATE.getCode()));

}

}

}

}

寫入檔案

同樣以DLedgerMmapFileStore為例,看下appendAsFollower方法的處理過程,前面已經講過appendAsLeader的處理邏輯,他們的處理過程相似,基本就是將entry內容寫入buffer,然後再將buffer寫入資料檔案和索引檔案,這裡不再贅述:

public class DLedgerMmapFileStore extends DLedgerStore {

@Override

public DLedgerEntry appendAsFollower(DLedgerEntry entry, long leaderTerm, String leaderId) {

PreConditions.check(memberState.isFollower(), DLedgerResponseCode.NOT_FOLLOWER, "role=%s", memberState.getRole());

PreConditions.check(!isDiskFull, DLedgerResponseCode.DISK_FULL);

// 獲取資料Buffer

ByteBuffer dataBuffer = localEntryBuffer.get();

// 獲取索引Buffer

ByteBuffer indexBuffer = localIndexBuffer.get();

// encode

DLedgerEntryCoder.encode(entry, dataBuffer);

int entrySize = dataBuffer.remaining();

synchronized (memberState) {

PreConditions.check(memberState.isFollower(), DLedgerResponseCode.NOT_FOLLOWER, "role=%s", memberState.getRole());

long nextIndex = ledgerEndIndex + 1;

PreConditions.check(nextIndex == entry.getIndex(), DLedgerResponseCode.INCONSISTENT_INDEX, null);

PreConditions.check(leaderTerm == memberState.currTerm(), DLedgerResponseCode.INCONSISTENT_TERM, null);

PreConditions.check(leaderId.equals(memberState.getLeaderId()), DLedgerResponseCode.INCONSISTENT_LEADER, null);

// 寫入資料檔案

long dataPos = dataFileList.append(dataBuffer.array(), 0, dataBuffer.remaining());

PreConditions.check(dataPos == entry.getPos(), DLedgerResponseCode.DISK_ERROR, "%d != %d", dataPos, entry.getPos());

DLedgerEntryCoder.encodeIndex(dataPos, entrySize, entry.getMagic(), entry.getIndex(), entry.getTerm(), indexBuffer);

// 寫入索引檔案

long indexPos = indexFileList.append(indexBuffer.array(), 0, indexBuffer.remaining(), false);

PreConditions.check(indexPos == entry.getIndex() * INDEX_UNIT_SIZE, DLedgerResponseCode.DISK_ERROR, null);

ledgerEndTerm = entry.getTerm();

ledgerEndIndex = entry.getIndex();

if (ledgerBeginIndex == -1) {

ledgerBeginIndex = ledgerEndIndex;

}

updateLedgerEndIndexAndTerm();

return entry;

}

}

}

Compare

handleDoCompare

用於處理COMPARE請求,compareIndex為需要比較的index,處理邏輯如下:- 進行校驗,主要判斷compareIndex與請求中的Index是否一致,以及請求型別是否是COMPARE;

- 根據compareIndex獲取訊息Entry;

- 構建響應內容,在響應中設定當前節點以及同步的訊息的BeginIndex和EndIndex;

public class DLedgerEntryPusher {

private class EntryHandler extends ShutdownAbleThread {

private CompletableFuture<PushEntryResponse> handleDoCompare(long compareIndex, PushEntryRequest request,

CompletableFuture<PushEntryResponse> future) {

try {

// 校驗compareIndex與請求中的Index是否一致

PreConditions.check(compareIndex == request.getEntry().getIndex(), DLedgerResponseCode.UNKNOWN);

// 校驗請求型別是否是COMPARE

PreConditions.check(request.getType() == PushEntryRequest.Type.COMPARE, DLedgerResponseCode.UNKNOWN);

// 獲取Entry

DLedgerEntry local = dLedgerStore.get(compareIndex);

// 校驗請求中的Entry與原生的是否一致

PreConditions.check(request.getEntry().equals(local), DLedgerResponseCode.INCONSISTENT_STATE);

// 構建請求響應,這裡返回成功,說明資料沒有出現不一致

future.complete(buildResponse(request, DLedgerResponseCode.SUCCESS.getCode()));

} catch (Throwable t) {

logger.error("[HandleDoCompare] compareIndex={}", compareIndex, t);

future.complete(buildResponse(request, DLedgerResponseCode.INCONSISTENT_STATE.getCode()));

}

return future;

}

private PushEntryResponse buildResponse(PushEntryRequest request, int code) {

// 構建請求響應

PushEntryResponse response = new PushEntryResponse();

response.setGroup(request.getGroup());

// 設定響應狀態

response.setCode(code);

// 設定Term

response.setTerm(request.getTerm());

// 如果不是COMMIT

if (request.getType() != PushEntryRequest.Type.COMMIT) {

// 設定Index

response.setIndex(request.getEntry().getIndex());

}

// 設定BeginIndex

response.setBeginIndex(dLedgerStore.getLedgerBeginIndex());

// 設定EndIndex

response.setEndIndex(dLedgerStore.getLedgerEndIndex());

return response;

}

}

}

Truncate

Follower節點對Truncate的請求處理在handleDoTruncate方法中,主要是根據Leader節點傳送的truncateIndex,進行資料刪除,將truncateIndex之後的訊息從紀錄檔中刪除:

private class EntryDispatcher extends ShutdownAbleThread {

// truncateIndex為待刪除的訊息的index

private CompletableFuture<PushEntryResponse> handleDoTruncate(long truncateIndex, PushEntryRequest request,

CompletableFuture<PushEntryResponse> future) {

try {

logger.info("[HandleDoTruncate] truncateIndex={} pos={}", truncateIndex, request.getEntry().getPos());

PreConditions.check(truncateIndex == request.getEntry().getIndex(), DLedgerResponseCode.UNKNOWN);

PreConditions.check(request.getType() == PushEntryRequest.Type.TRUNCATE, DLedgerResponseCode.UNKNOWN);

// 進行刪除

long index = dLedgerStore.truncate(request.getEntry(), request.getTerm(), request.getLeaderId());

PreConditions.check(index == truncateIndex, DLedgerResponseCode.INCONSISTENT_STATE);

future.complete(buildResponse(request, DLedgerResponseCode.SUCCESS.getCode()));

// 更新committedIndex

dLedgerStore.updateCommittedIndex(request.getTerm(), request.getCommitIndex());

} catch (Throwable t) {

logger.error("[HandleDoTruncate] truncateIndex={}", truncateIndex, t);

future.complete(buildResponse(request, DLedgerResponseCode.INCONSISTENT_STATE.getCode()));

}

return future;

}

}

Commit

前面講到Leader節點會向Follower節點傳送COMMIT請求,COMMIT請求主要是更新Follower節點原生的committedIndex的值,記錄叢集中最新的那條獲取大多數響應的訊息的index,在後面QuorumAckChecker中還會看到:

private class EntryHandler extends ShutdownAbleThread {

private CompletableFuture<PushEntryResponse> handleDoCommit(long committedIndex, PushEntryRequest request,

CompletableFuture<PushEntryResponse> future) {

try {

PreConditions.check(committedIndex == request.getCommitIndex(), DLedgerResponseCode.UNKNOWN);

PreConditions.check(request.getType() == PushEntryRequest.Type.COMMIT, DLedgerResponseCode.UNKNOWN);

// 更新committedIndex

dLedgerStore.updateCommittedIndex(request.getTerm(), committedIndex);

future.complete(buildResponse(request, DLedgerResponseCode.SUCCESS.getCode()));

} catch (Throwable t) {

logger.error("[HandleDoCommit] committedIndex={}", request.getCommitIndex(), t);

future.complete(buildResponse(request, DLedgerResponseCode.UNKNOWN.getCode()));

}

return future;

}

}

QuorumAckChecker

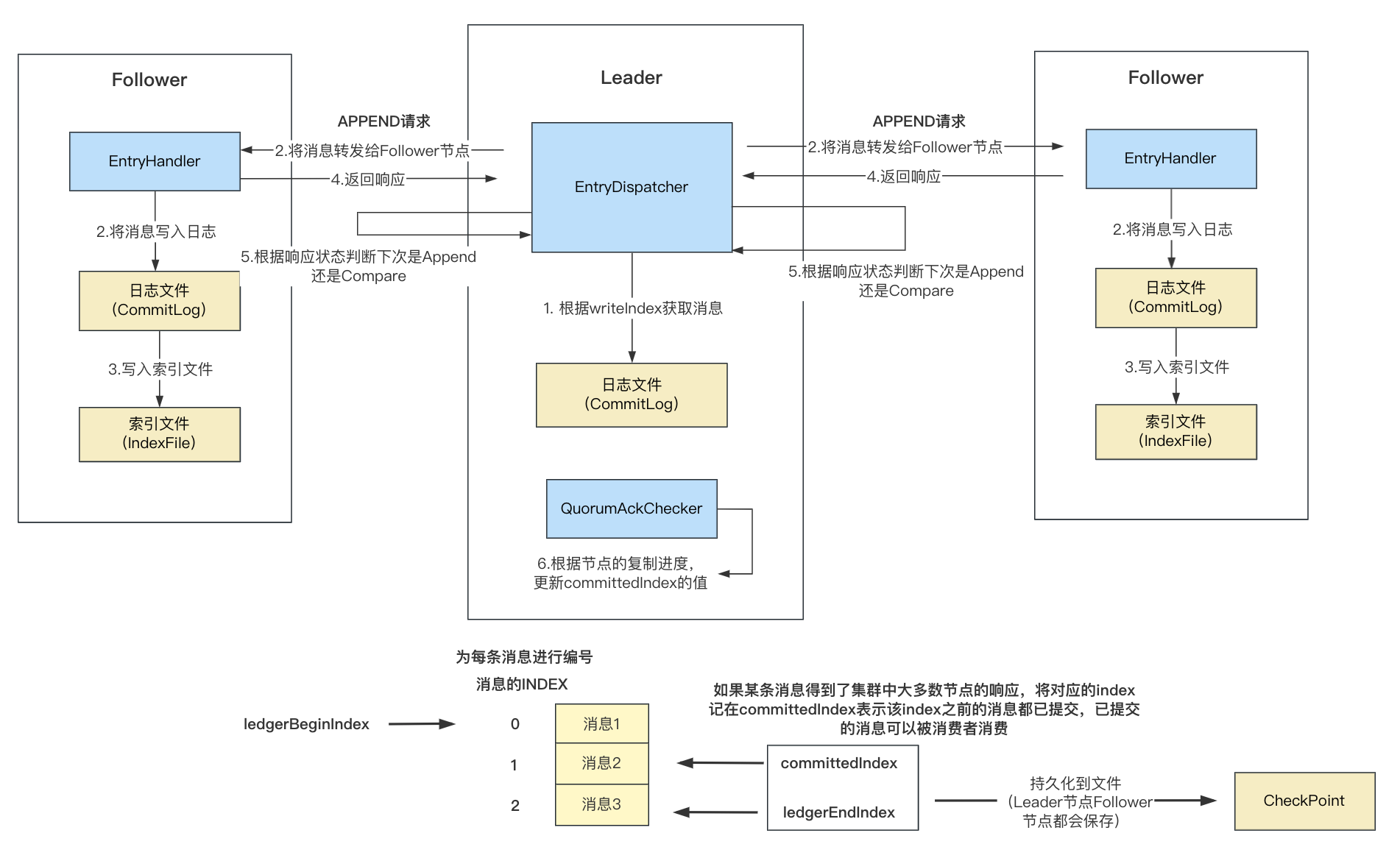

QuorumAckChecker用於Leader節點等待Follower節點複製完畢,處理邏輯如下:

-

如果pendingAppendResponsesByTerm的個數大於1,對其進行遍歷,如果KEY的值與當前Term不一致,說明資料已過期,將過期資料置為完成狀態並從pendingAppendResponsesByTerm中移除;

-

如果peerWaterMarksByTerm個數大於1,對其進行遍歷,同樣找出與當前TERM不一致的資料,進行清理;

-

獲取當前Term的peerWaterMarks,peerWaterMarks記錄了每個Follower節點的紀錄檔複製進度,對所有的複製進度進行排序,取出處於中間位置的那個進度值,也就是訊息的index值,這裡不太好理解,舉個例子,假如一個Leader有5個Follower節點,當前Term為1:

{ "1" : { // TERM的值,對應peerWaterMarks中的Key "節點1" : "1", // 節點1複製到第1條訊息 "節點2" : "1", // 節點2複製到第1條訊息 "節點3" : "2", // 節點3複製到第2條訊息 "節點4" : "3", // 節點4複製到第3條訊息 "節點5" : "3" // 節點5複製到第3條訊息 } }對所有Follower節點的複製進度倒序排序之後的list如下:

[3, 3, 2, 1, 1]取5 / 2 的整數部分為2,也就是下標為2處的值,對應節點3的複製進度(訊息index為2),記錄在quorumIndex變數中,節點4和5對應的訊息進度大於訊息2的,所以對於訊息2,叢集已經有三個節點複製成功,滿足了叢集中大多數節點複製成功的條件。

如果要判斷某條訊息是否叢集中大多數節點已經成功寫入,一種常規的處理方法,對每個節點的複製進度進行判斷,記錄已經複製成功的節點個數,這樣需要每次遍歷整個節點,效率比較低,所以這裡RocketMQ使用了一種更高效的方式來判斷某個訊息是否獲得了叢集中大多數節點的響應。

-

quorumIndex之前的訊息都以成功複製,此時就可以更新提交點,呼叫

updateCommittedIndex方法更新CommitterIndex的值; -

處理處於quorumIndex和lastQuorumIndex(上次quorumIndex的值)之間的資料,比如上次lastQuorumIndex的值為1,本次quorumIndex為2,由於quorumIndex之前的訊息已經獲得了叢集中大多數節點的響應,所以處於quorumIndex和lastQuorumIndex的資料需要清理,從pendingAppendResponsesByTerm中移除,並記錄數量ackNum;

-

如果ackNum為0,表示quorumIndex與lastQuorumIndex相等,從quorumIndex + 1處開始,判斷訊息的寫入請求是否已經超時,如果超時設定WAIT_QUORUM_ACK_TIMEOUT並返回響應;這一步主要是為了處理超時的請求;

-

如果上次校驗時間超過1000ms或者needCheck為true,更新節點的複製進度,遍歷當前term所有的請求響應,如果小於quorumIndex,將其設定成完成狀態並移除響應,表示已完成,這一步主要是處理已經寫入成功的訊息對應的響應物件AppendEntryResponse,是否由於某些原因未移除,如果是需要進行清理;

-

更新lastQuorumIndex的值;

private class QuorumAckChecker extends ShutdownAbleThread {

@Override

public void doWork() {

try {

if (DLedgerUtils.elapsed(lastPrintWatermarkTimeMs) > 3000) {

logger.info("[{}][{}] term={} ledgerBegin={} ledgerEnd={} committed={} watermarks={}",

memberState.getSelfId(), memberState.getRole(), memberState.currTerm(), dLedgerStore.getLedgerBeginIndex(), dLedgerStore.getLedgerEndIndex(), dLedgerStore.getCommittedIndex(), JSON.toJSONString(peerWaterMarksByTerm));

lastPrintWatermarkTimeMs = System.currentTimeMillis();

}

// 如果不是Leader

if (!memberState.isLeader()) {

waitForRunning(1);

return;

}

// 獲取當前的Term

long currTerm = memberState.currTerm();

checkTermForPendingMap(currTerm, "QuorumAckChecker");

checkTermForWaterMark(currTerm, "QuorumAckChecker");

// 如果pendingAppendResponsesByTerm的個數大於1

if (pendingAppendResponsesByTerm.size() > 1) {

// 遍歷,處理與當前TERM不一致的資料

for (Long term : pendingAppendResponsesByTerm.keySet()) {

// 如果與當前Term一致

if (term == currTerm) {

continue;

}

// 對VALUE進行遍歷

for (Map.Entry<Long, TimeoutFuture<AppendEntryResponse>> futureEntry : pendingAppendResponsesByTerm.get(term).entrySet()) {

// 建立AppendEntryResponse

AppendEntryResponse response = new AppendEntryResponse();

response.setGroup(memberState.getGroup());

response.setIndex(futureEntry.getKey());

response.setCode(DLedgerResponseCode.TERM_CHANGED.getCode());

response.setLeaderId(memberState.getLeaderId());

logger.info("[TermChange] Will clear the pending response index={} for term changed from {} to {}", futureEntry.getKey(), term, currTerm);

// 設定完成

futureEntry.getValue().complete(response);

}

// 移除

pendingAppendResponsesByTerm.remove(term);

}

}

// 處理與當前TERM不一致的資料

if (peerWaterMarksByTerm.size() > 1) {

for (Long term : peerWaterMarksByTerm.keySet()) {

if (term == currTerm) {

continue;

}

logger.info("[TermChange] Will clear the watermarks for term changed from {} to {}", term, currTerm);

peerWaterMarksByTerm.remove(term);

}

}

// 獲取當前Term的peerWaterMarks,也就是每個Follower節點的複製進度

Map<String, Long> peerWaterMarks = peerWaterMarksByTerm.get(currTerm);

// 對value進行排序

List<Long> sortedWaterMarks = peerWaterMarks.values()

.stream()

.sorted(Comparator.reverseOrder())

.collect(Collectors.toList());

// 取中位數

long quorumIndex = sortedWaterMarks.get(sortedWaterMarks.size() / 2);

// 中位數之前的訊息都已同步成功,此時更新CommittedIndex

dLedgerStore.updateCommittedIndex(currTerm, quorumIndex);

// 獲取當前Term的紀錄檔轉發請求響應

ConcurrentMap<Long, TimeoutFuture<AppendEntryResponse>> responses = pendingAppendResponsesByTerm.get(currTerm);

boolean needCheck = false;

int ackNum = 0;

// 從quorumIndex開始,向前遍歷,處理處於quorumIndex和lastQuorumIndex(上次quorumIndex的值)之間的資料

for (Long i = quorumIndex; i > lastQuorumIndex; i--) {

try {

// 從responses中移除

CompletableFuture<AppendEntryResponse> future = responses.remove(i);

if (future == null) { // 如果響應為空,needCheck置為true

needCheck = true;

break;

} else if (!future.isDone()) { // 如果未完成

AppendEntryResponse response = new AppendEntryResponse();

response.setGroup(memberState.getGroup());

response.setTerm(currTerm);

response.setIndex(i);

response.setLeaderId(memberState.getSelfId());

response.setPos(((AppendFuture) future).getPos());

future.complete(response);

}

// 記錄ACK節點的數量

ackNum++;

} catch (Throwable t) {

logger.error("Error in ack to index={} term={}", i, currTerm, t);

}

}

// 如果ackNum為0,表示quorumIndex與lastQuorumIndex相等

// 這一步主要是為了處理超時的請求

if (ackNum == 0) {

// 從quorumIndex + 1處開始處理

for (long i = quorumIndex + 1; i < Integer.MAX_VALUE; i++) {

TimeoutFuture<AppendEntryResponse> future = responses.get(i);

if (future == null) { // 如果為空,表示還沒有第i條訊息,結束迴圈

break;

} else if (future.isTimeOut()) { // 如果第i條訊息的請求已經超時

AppendEntryResponse response = new AppendEntryResponse();

response.setGroup(memberState.getGroup());

// 設定超時狀態WAIT_QUORUM_ACK_TIMEOUT

response.setCode(DLedgerResponseCode.WAIT_QUORUM_ACK_TIMEOUT.getCode());

response.setTerm(currTerm);

response.setIndex(i);

response.setLeaderId(memberState.getSelfId());

// 設定完成

future.complete(response);

} else {

break;

}

}

waitForRunning(1);

}

// 如果上次校驗時間超過1000ms或者needCheck為true

// 這一步主要是處理已經寫入成功的訊息對應的響應物件AppendEntryResponse,是否由於某些原因未移除,如果是需要進行清理

if (DLedgerUtils.elapsed(lastCheckLeakTimeMs) > 1000 || needCheck) {

// 更新節點的複製進度

updatePeerWaterMark(currTerm, memberState.getSelfId(), dLedgerStore.getLedgerEndIndex());

// 遍歷當前term所有的請求響應

for (Map.Entry<Long, TimeoutFuture<AppendEntryResponse>> futureEntry : responses.entrySet()) {

// 如果小於quorumIndex

if (futureEntry.getKey() < quorumIndex) {

AppendEntryResponse response = new AppendEntryResponse();

response.setGroup(memberState.getGroup());

response.setTerm(currTerm);

response.setIndex(futureEntry.getKey());

response.setLeaderId(memberState.getSelfId());

response.setPos(((AppendFuture) futureEntry.getValue()).getPos());

futureEntry.getValue().complete(response);

// 移除

responses.remove(futureEntry.getKey());

}

}

lastCheckLeakTimeMs = System.currentTimeMillis();

}

// 更新lastQuorumIndex

lastQuorumIndex = quorumIndex;

} catch (Throwable t) {

DLedgerEntryPusher.logger.error("Error in {}", getName(), t);

DLedgerUtils.sleep(100);

}

}

}

持久化

Leader節點在某個訊息的寫入得到叢集中大多數Follower節點的響應之後,會呼叫updateCommittedIndex將訊息的index記在committedIndex中,上面也提到過,Follower節點在收到Leader節點的APPEND請求的時候,也會將請求中設定的Leader節點的committedIndex更新到本地。

在持久化檢查點的persistCheckPoint方法中,會將LedgerEndIndex和committedIndex寫入到檔案(ChecktPoint)進行持久化(Broker停止或者FLUSH的時候):

ledgerEndIndex:Leader或者Follower節點最後一條成功寫入的訊息的index;

committedIndex:如果某條訊息轉發給Follower節點之後得到了叢集中大多數節點的響應成功,將對應的index記在committedIndex表示該index之前的訊息都已提交,已提交的訊息可以被消費者消費,Leader節點會將值設定在APPEND請求中傳送給Follower節點進行更新或者傳送COMMIT請求進行更新;

public class DLedgerMmapFileStore extends DLedgerStore {

public void updateCommittedIndex(long term, long newCommittedIndex) {

if (newCommittedIndex == -1

|| ledgerEndIndex == -1

|| term < memberState.currTerm()

|| newCommittedIndex == this.committedIndex) {

return;

}

if (newCommittedIndex < this.committedIndex

|| newCommittedIndex < this.ledgerBeginIndex) {

logger.warn("[MONITOR]Skip update committed index for new={} < old={} or new={} < beginIndex={}", newCommittedIndex, this.committedIndex, newCommittedIndex, this.ledgerBeginIndex);

return;

}

// 獲取ledgerEndIndex

long endIndex = ledgerEndIndex;

// 如果新的提交index大於最後一條訊息的index

if (newCommittedIndex > endIndex) {

// 更新

newCommittedIndex = endIndex;

}

Pair<Long, Integer> posAndSize = getEntryPosAndSize(newCommittedIndex);

PreConditions.check(posAndSize != null, DLedgerResponseCode.DISK_ERROR);

this.committedIndex = newCommittedIndex;

this.committedPos = posAndSize.getKey() + posAndSize.getValue();

}

// 持久化檢查點

void persistCheckPoint() {

try {

Properties properties = new Properties();

// 設定LedgerEndIndex

properties.put(END_INDEX_KEY, getLedgerEndIndex());

// 設定committedIndex

properties.put(COMMITTED_INDEX_KEY, getCommittedIndex());

String data = IOUtils.properties2String(properties);

// 將資料寫入檔案

IOUtils.string2File(data, dLedgerConfig.getDefaultPath() + File.separator + CHECK_POINT_FILE);

} catch (Throwable t) {

logger.error("Persist checkpoint failed", t);

}

}

}

參考

【中介軟體興趣圈】原始碼分析 RocketMQ DLedger(多副本) 之紀錄檔複製(傳播)

RocketMQ版本:4.9.3