Investigating why the CPU utilization shown in Grafana did not match reality

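Problem description

I recently looked at the CPU usage of a VM (the system has only one CPU core). Checking it with `mpstat -P ALL`, I found that %usr stayed at around 70% for long periods and %sys stayed at around 20%: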

```
03:56:29 AM  CPU   %usr  %nice   %sys %iowait   %irq  %soft %steal %guest %gnice  %idle
03:56:34 AM  all  67.11   0.00  24.83    0.00   0.00   8.05   0.00   0.00   0.00   0.00
03:56:34 AM    0  67.11   0.00  24.83    0.00   0.00   8.05   0.00   0.00   0.00   0.00
```

The CPU figures reported by mpstat agree with those shown by top:

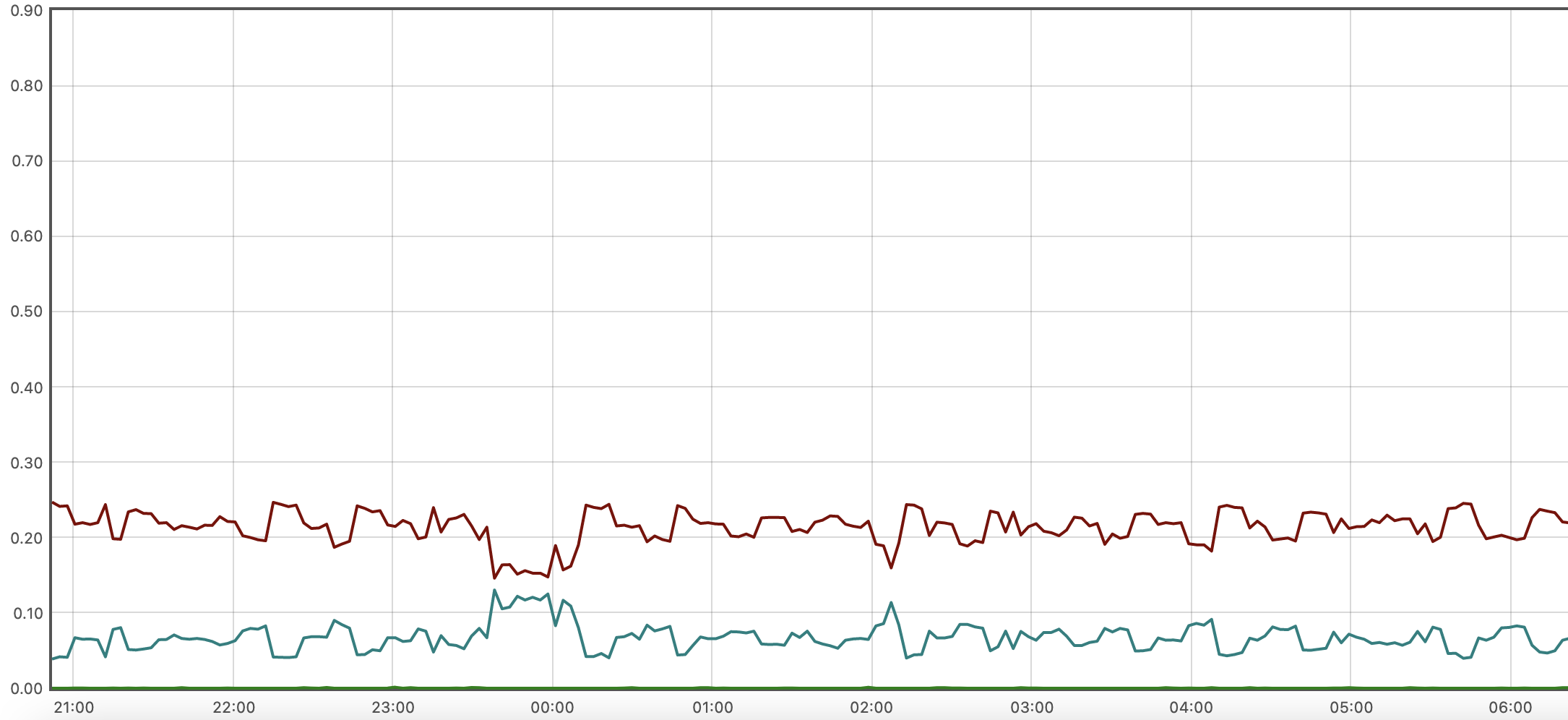

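However, in Grafana both %usr and %sys for this machine appear lower than the actual values. In the chart below, the brown curve is usr and the blue curve is sys:

The Grafana expression is:

```promql
avg by (mode, instance) (irate(node_cpu_seconds_total{instance=~"$instance", mode=~"user|system|iowait"}[$__rate_interval]))
```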
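To see what this panel actually plots, here is a minimal C sketch of what `irate()` computes for a counter, assuming its documented behaviour: the per-second rate between the last two samples in the range, with a decrease treated as a counter reset. `struct sample` and `irate2` are hypothetical names, and Prometheus's own implementation handles more edge cases. Applied to `node_cpu_seconds_total{mode="user"}`, the result is user-mode CPU-seconds per wall-clock second on that CPU.

```c
#include <assert.h>

/* One scraped sample of a counter: its value and timestamp (seconds). */
struct sample {
    double value;
    double ts;
};

/* Hypothetical helper: per-second rate between the last two samples.
 * If the counter went down (a reset), the last value alone is taken
 * as the increase, as irate() does for counters. */
static double irate2(struct sample prev, struct sample last)
{
    assert(last.ts > prev.ts);
    double delta = last.value < prev.value ? last.value
                                           : last.value - prev.value;
    return delta / (last.ts - prev.ts);
}
```

For example, a user counter going from 1000.0 s to 1003.5 s over a 5 s scrape gap gives 0.7, i.e. 70% of one core by wall-clock time.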

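Resolution

First attempts

I first suspected a node-exporter version issue, but the node-exporter release notes mention no related bug, and switching to the latest version did not fix the problem.

How node-exporter works

Most system-related Prometheus metrics are read directly from system files and converted. node-exporter's CPU metrics are read from /proc/stat, where the CPU-related content is the first two lines below (the aggregate `cpu` line and the per-core `cpu0` line). Each line has ten columns: User, Nice, System, Idle, Iowait, IRQ, SoftIRQ, Steal, Guest, GuestNice.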
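As an illustration of this layout, here is a minimal C sketch that parses one such line into its ten counters. It assumes the ten-column format shown below (older kernels print fewer columns, and this parser would reject them). `struct cpu_ticks` and `parse_cpu_line` are hypothetical names; this is not node-exporter's code, which is written in Go.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* The ten counters of a "cpu"/"cpuN" line, in kernel order (USER_HZ ticks). */
struct cpu_ticks {
    unsigned long long user, nice, system, idle, iowait,
                       irq, softirq, steal, guest, guest_nice;
};

/* Parse one /proc/stat line. Returns 0 on success, -1 if the line does not
 * start with "cpu" or does not carry all ten counters.
 * `name` receives the label ("cpu", "cpu0", ...), at most 15 characters. */
static int parse_cpu_line(const char *line, char name[16], struct cpu_ticks *t)
{
    assert(line != NULL && name != NULL && t != NULL);
    int n = sscanf(line,
                   "%15s %llu %llu %llu %llu %llu %llu %llu %llu %llu %llu",
                   name, &t->user, &t->nice, &t->system, &t->idle,
                   &t->iowait, &t->irq, &t->softirq, &t->steal,
                   &t->guest, &t->guest_nice);
    if (n != 11 || strncmp(name, "cpu", 3) != 0)
        return -1;
    return 0;
}
```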

# cat /proc/stat

cpu 18651720 282843 9512262 493780943 10294540 0 2239778 0 0 0

cpu0 18651720 282843 9512262 493780943 10294540 0 2239778 0 0 0

intr 227141952 99160476 9 0 0 2772 0 0 0 0 0 0 0 157 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

ctxt 4027171429

btime 1671775036

processes 14260129

procs_running 5

procs_blocked 0

softirq 1727699538 0 816653671 1 233469155 45823320 0 52888978 0 0 578864413

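node-exporter does not perform any real computation: it simply divides these ten columns by userHZ (100), attaches a `mode` label, and exposes the result as a Prometheus metric:

```
node_cpu_seconds_total{cpu="0", instance="redis:9100", mode="user"} 244328.77
```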
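The conversion amounts to a single division. The C sketch below assumes USER_HZ can be queried with the POSIX call `sysconf(_SC_CLK_TCK)` (falling back to 100) and prints the sample with `%.2f`; `user_hz`, `ticks_to_seconds` and `format_cpu_sample` are hypothetical helpers, the label set is simplified (the `instance` label is attached by Prometheus at scrape time), and node-exporter's actual number formatting differs.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* USER_HZ as seen from user space; fall back to 100 if sysconf fails. */
static long user_hz(void)
{
    long hz = sysconf(_SC_CLK_TCK);
    return hz > 0 ? hz : 100;
}

/* Convert a /proc/stat tick counter to seconds. */
static double ticks_to_seconds(unsigned long long ticks, long hz)
{
    assert(hz > 0);
    return (double) ticks / (double) hz;
}

/* Render one sample in a Prometheus-like text form (simplified labels). */
static int format_cpu_sample(char *buf, size_t len, int cpu, const char *mode,
                             unsigned long long ticks, long hz)
{
    assert(buf != NULL && mode != NULL && strlen(mode) > 0);
    return snprintf(buf, len,
                    "node_cpu_seconds_total{cpu=\"%d\",mode=\"%s\"} %.2f",
                    cpu, mode, ticks_to_seconds(ticks, hz));
}
```

Fed the `cpu0` user counter from the /proc/stat dump above (18651720 ticks) with a USER_HZ of 100, this prints a value of 186517.20 seconds.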

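How mpstat computes it

So how does mpstat compute the CPU utilization of each mode?

In mpstat's source code, the utilization for the User mode is computed as follows, using three parameters:

scc: the current CPU sample, i.e. the CPU line read from /proc/stat this time.

scp: the previous CPU sample, i.e. the CPU line read from /proc/stat last time.

deltot_jiffies: the number of jiffies between the two samples (jiffies are explained below).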

```c
ll_sp_value(scp->cpu_user - scp->cpu_guest,
            scc->cpu_user - scc->cpu_guest, deltot_jiffies)
```

The function ll_sp_value is defined as follows and uses the macro SP_VALUE:

```c
/*
 ***************************************************************************
 * Workaround for CPU counters read from /proc/stat: Dyn-tick kernels
 * have a race issue that can make those counters go backward.
 ***************************************************************************
 */
double ll_sp_value(unsigned long long value1, unsigned long long value2,
                   unsigned long long itv)
{
    if (value2 < value1)
        return (double) 0;
    else
        return SP_VALUE(value1, value2, itv);
}
```

SP_VALUE is defined as follows:

```c
/* With S_VALUE macro, the interval of time (@p) is given in 1/100th of a second */
#define S_VALUE(m,n,p) (((double) ((n) - (m))) / (p) * 100)

/* Define SP_VALUE() to normalize to % */
#define SP_VALUE(m,n,p) (((double) ((n) - (m))) / (p) * 100)
```

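From the definition of SP_VALUE (with m = value1 taken from the previous sample and n = value2 from the current one), the User-mode CPU utilization between two samples is: `(((double) ((scc->cpu_user - scc->cpu_guest) - (scp->cpu_user - scp->cpu_guest))) / (deltot_jiffies) * 100)`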
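A self-contained C sketch of this path, reusing the SP_VALUE macro and the ll_sp_value logic quoted above; `usr_percent` is a hypothetical wrapper that passes the previous and current (user minus guest) values in the same order as mpstat's call.

```c
#include <assert.h>

/* Same normalisation as sysstat's SP_VALUE: delta / interval * 100. */
#define SP_VALUE(m, n, p) (((double) ((n) - (m))) / (p) * 100)

/* Mirror of ll_sp_value(): a counter that went backwards yields 0. */
static double ll_sp_value(unsigned long long value1, unsigned long long value2,
                          unsigned long long itv)
{
    if (value2 < value1)
        return 0.0;
    return SP_VALUE(value1, value2, itv);
}

/* Hypothetical wrapper: %usr between two samples. Guest time is already
 * included in the user counter, so it is subtracted first. */
static double usr_percent(unsigned long long prev_user, unsigned long long prev_guest,
                          unsigned long long cur_user, unsigned long long cur_guest,
                          unsigned long long deltot_jiffies)
{
    assert(deltot_jiffies > 0);
    return ll_sp_value(prev_user - prev_guest, cur_user - cur_guest,
                       deltot_jiffies);
}
```

With 97 user ticks out of 117 accounted ticks this returns about 82.9; if the user-minus-guest value goes backwards it returns 0, as mpstat does.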

The following function computes deltot_jiffies; it also shows that jiffies are simply the unit of the CPU counters in /proc/stat:

```c
/*
 ***************************************************************************
 * Since ticks may vary slightly from CPU to CPU, we'll want
 * to recalculate itv based on this CPU's tick count, rather
 * than that reported by the "cpu" line. Otherwise we
 * occasionally end up with slightly skewed figures, with
 * the skew being greater as the time interval grows shorter.
 *
 * IN:
 * @scc Current sample statistics for current CPU.
 * @scp Previous sample statistics for current CPU.
 *
 * RETURNS:
 * Interval of time based on current CPU, expressed in jiffies.
 *
 * USED BY:
 * sar, sadf, mpstat
 ***************************************************************************
 */
unsigned long long get_per_cpu_interval(struct stats_cpu *scc,
                                        struct stats_cpu *scp)
{
    unsigned long long ishift = 0LL;

    if ((scc->cpu_user - scc->cpu_guest) < (scp->cpu_user - scp->cpu_guest)) {
        /*
         * Sometimes the nr of jiffies spent in guest mode given by the guest
         * counter in /proc/stat is slightly higher than that included in
         * the user counter. Update the interval value accordingly.
         */
        ishift += (scp->cpu_user - scp->cpu_guest) -
                  (scc->cpu_user - scc->cpu_guest);
    }
    if ((scc->cpu_nice - scc->cpu_guest_nice) < (scp->cpu_nice - scp->cpu_guest_nice)) {
        /*
         * Idem for nr of jiffies spent in guest_nice mode.
         */
        ishift += (scp->cpu_nice - scp->cpu_guest_nice) -
                  (scc->cpu_nice - scc->cpu_guest_nice);
    }

    /*
     * Workaround for CPU coming back online: With recent kernels
     * some fields (user, nice, system) restart from their previous value,
     * whereas others (idle, iowait) restart from zero.
     * For the latter we need to set their previous value to zero to
     * avoid getting an interval value < 0.
     * (I don't know how the other fields like hardirq, steal... behave).
     * Don't assume the CPU has come back from offline state if previous
     * value was greater than ULLONG_MAX - 0x7ffff (the counter probably
     * overflew).
     */
    if ((scc->cpu_iowait < scp->cpu_iowait) && (scp->cpu_iowait < (ULLONG_MAX - 0x7ffff))) {
        /*
         * The iowait value reported by the kernel can also decrement as
         * a result of inaccurate iowait tracking. Waiting on IO can be
         * first accounted as iowait but then instead as idle.
         * Therefore if the idle value during the same period did not
         * decrease then consider this is a problem with the iowait
         * reporting and correct the previous value according to the new
         * reading. Otherwise, treat this as CPU coming back online.
         */
        if ((scc->cpu_idle > scp->cpu_idle) || (scp->cpu_idle >= (ULLONG_MAX - 0x7ffff))) {
            scp->cpu_iowait = scc->cpu_iowait;
        }
        else {
            scp->cpu_iowait = 0;
        }
    }
    if ((scc->cpu_idle < scp->cpu_idle) && (scp->cpu_idle < (ULLONG_MAX - 0x7ffff))) {
        scp->cpu_idle = 0;
    }

    /*
     * Don't take cpu_guest and cpu_guest_nice into account
     * because cpu_user and cpu_nice already include them.
     */
    return ((scc->cpu_user + scc->cpu_nice +
             scc->cpu_sys + scc->cpu_iowait +
             scc->cpu_idle + scc->cpu_steal +
             scc->cpu_hardirq + scc->cpu_softirq) -
            (scp->cpu_user + scp->cpu_nice +
             scp->cpu_sys + scp->cpu_iowait +
             scp->cpu_idle + scp->cpu_steal +
             scp->cpu_hardirq + scp->cpu_softirq) +
            ishift);
}
```

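From the code above, deltot_jiffies can be regarded, approximately, as the difference between the sums of all modes (user, nice, system, iowait, idle, steal, irq and softirq) across the two samples; guest and guest_nice are left out because user and nice already include them. Take the following two samples as an example: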

```
     User      Nice    System   Idle       Iowait    IRQ  SoftIRQ  Steal  Guest  GuestNice
cpu  18424040  281581  9443941  493688502  10284789  0    2221013  0      0      0          # first sample, used as scp
cpu  18424137  281581  9443954  493688502  10284789  0    2221020  0      0      0          # second sample, used as scc
```

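deltot_jiffies is computed as the sum of User, Nice, System, Idle, Iowait, IRQ, SoftIRQ and Steal in the second sample, minus the same sum in the first sample, plus ishift (0 here):

(18424137+281581+9443954+493688502+10284789+0+2221020+0) - (18424040+281581+9443941+493688502+10284789+0+2221013+0) + 0 = 117

Only three columns changed: 97 (User) + 13 (System) + 7 (SoftIRQ) = 117.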

From these samples, the User-mode CPU utilization on this VM is therefore (((double) ((18424137 - 0) - (18424040 - 0))) / (117) * 100) = 82.9%, as expected.
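The arithmetic in this example can be checked with a simplified C version of the interval calculation. It assumes no counter moved backwards, so it omits the `ishift` correction and the CPU-hotplug and iowait workarounds of `get_per_cpu_interval()`; `struct cpu_ticks`, `total_ticks` and `deltot_jiffies` are hypothetical names.

```c
#include <assert.h>

/* Ten /proc/stat counters in kernel order (USER_HZ ticks). */
struct cpu_ticks {
    unsigned long long user, nice, system, idle, iowait,
                       irq, softirq, steal, guest, guest_nice;
};

/* Sum of the modes mpstat uses for the interval; guest and guest_nice
 * are skipped because user and nice already include them. */
static unsigned long long total_ticks(const struct cpu_ticks *t)
{
    return t->user + t->nice + t->system + t->iowait +
           t->idle + t->steal + t->irq + t->softirq;
}

/* Simplified deltot_jiffies: assumes every counter is monotonic. */
static unsigned long long deltot_jiffies(const struct cpu_ticks *prev,
                                         const struct cpu_ticks *cur)
{
    unsigned long long p = total_ticks(prev), c = total_ticks(cur);
    assert(c >= p);
    return c - p;
}
```

Filling two `struct cpu_ticks` with the scp and scc rows above gives a deltot_jiffies of 117, and 97 / 117 * 100 is about 82.9.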

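Looking back at the problematic Grafana expression, what it computes is the rate of change of the User-mode CPU counter (CPU-seconds per wall-clock second), not CPU utilization in mpstat's sense, where each mode is divided by deltot_jiffies, the ticks accounted to all modes in the interval. Following mpstat's approach, the utilization of a mode can be approximated as below; this approximation only sums user and system in the denominator and ignores the other modes:

```promql
increase(node_cpu_seconds_total{mode="user", instance="drg1-prd-dragon-redis-sentinel-data-1:9100"}[10m])
  / on (cpu, instance)
(
    increase(node_cpu_seconds_total{mode="user", instance="drg1-prd-dragon-redis-sentinel-data-1:9100"}[10m])
  + on (cpu, instance)
    increase(node_cpu_seconds_total{mode="system", instance="drg1-prd-dragon-redis-sentinel-data-1:9100"}[10m])
)
```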
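To make the difference in normalisation concrete, here is a small C comparison using hypothetical numbers, not measurements from this VM. `wallclock_ratio` mirrors a Prometheus rate (user CPU-seconds per elapsed second) and `jiffies_ratio` mirrors mpstat's division by deltot_jiffies; both names are made up. The two agree only when all modes together advance by USER_HZ ticks per wall-clock second; if fewer ticks are accounted in the interval, the wall-clock figure comes out lower. Whether that is what happened on this VM is an assumption that the measurements above do not directly confirm.

```c
#include <assert.h>

/* Share of user time as a rate over node_cpu_seconds_total reports it:
 * user CPU-seconds divided by elapsed wall-clock seconds. */
static double wallclock_ratio(unsigned long long d_user, long hz, double elapsed_s)
{
    assert(hz > 0 && elapsed_s > 0);
    return (double) d_user / (double) hz / elapsed_s;
}

/* Share of user time as mpstat reports it:
 * user ticks divided by the ticks accounted to all modes. */
static double jiffies_ratio(unsigned long long d_user, unsigned long long d_total)
{
    assert(d_total > 0);
    return (double) d_user / (double) d_total;
}
```

With USER_HZ = 100 over 10 s, 700 user ticks out of 1000 accounted gives 0.7 both ways; 350 user ticks out of only 500 accounted gives 0.35 by wall-clock time but still 0.7 by jiffies.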

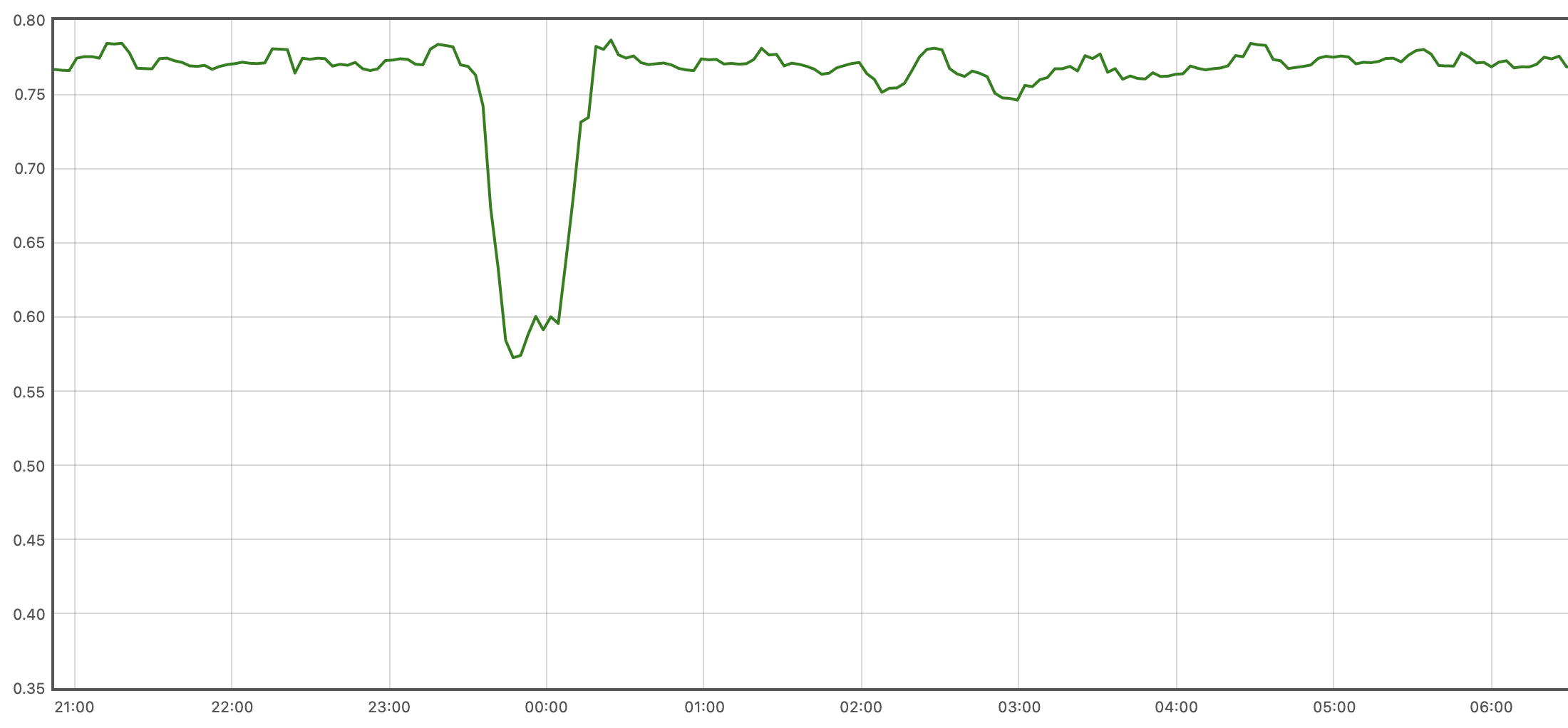

得出的mode為User的CPU佔用率曲線圖如下,與mpstat展示結果相同:

If needed, a new metric can be created to express CPU utilization accurately.

This article is from cnblogs. Author: charlieroro. When reposting, please cite the original link: https://www.cnblogs.com/charlieroro/p/17152138.html