Diffusers中基於Stable Diffusion的哪些影象操作

2023-02-24 12:02:24

基於Stable Diffusion的哪些影象操作們:

- Text-To-Image generation:

StableDiffusionPipeline - Image-to-Image text guided generation:

StableDiffusionImg2ImgPipeline - In-painting:

StableDiffusionInpaintPipeline - text-guided image super-resolution:

StableDiffusionUpscalePipeline - generate variations from an input image:

StableDiffusionImageVariationPipeline - image editing by following text instructions:

StableDiffusionInstructPix2PixPipeline - ......

輔助函數

import requests

from PIL import Image

from io import BytesIO

def show_images(imgs, rows=1, cols=3):

assert len(imgs) == rows*cols

w_ori, h_ori = imgs[0].size

for img in imgs:

w_new, h_new = img.size

if w_new != w_ori or h_new != h_ori:

w_ori = max(w_ori, w_new)

h_ori = max(h_ori, h_new)

grid = Image.new('RGB', size=(cols*w_ori, rows*h_ori))

grid_w, grid_h = grid.size

for i, img in enumerate(imgs):

grid.paste(img, box=(i%cols*w_ori, i//cols*h_ori))

return grid

def download_image(url):

response = requests.get(url)

return Image.open(BytesIO(response.content)).convert("RGB")

Text-To-Image

根據文字生成影象,在diffusers使用StableDiffusionPipeline實現,必要輸入為prompt,範例程式碼:

from diffusers import StableDiffusionPipeline

image_pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4")

device = "cuda"

image_pipe.to(device)

prompt = ["a photograph of an astronaut riding a horse"] * 3

out_images = image_pipe(prompt).images

for i, out_image in enumerate(out_images):

out_image.save("astronaut_rides_horse" + str(i) + ".png")

範例輸出:

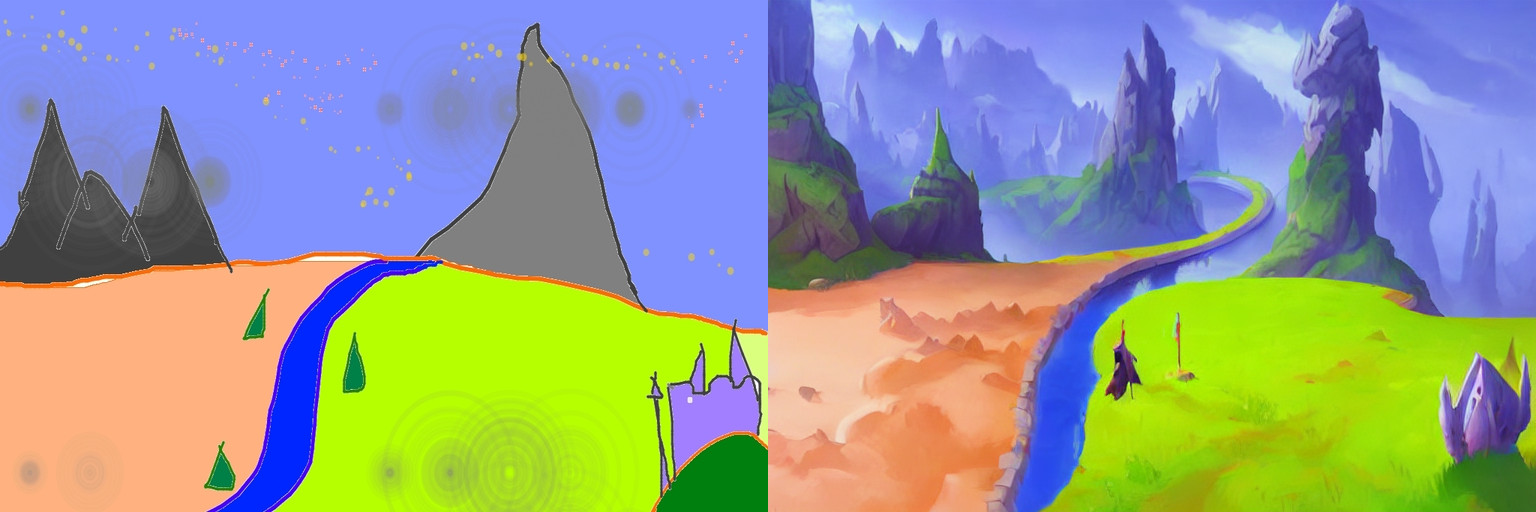

Image-To-Image

根據文字prompt和原始影象,生成新的影象。在diffusers中使用StableDiffusionImg2ImgPipeline類實現,可以看到,pipeline的必要輸入有兩個:prompt和init_image。範例程式碼:

import torch

from diffusers import StableDiffusionImg2ImgPipeline

device = "cuda"

model_id_or_path = "runwayml/stable-diffusion-v1-5"

pipe = StableDiffusionImg2ImgPipeline.from_pretrained(model_id_or_path, torch_dtype=torch.float16)

pipe = pipe.to(device)

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

init_image = download_image(url)

init_image = init_image.resize((768, 512))

prompt = "A fantasy landscape, trending on artstation"

images = pipe(prompt=prompt, image=init_image, strength=0.75, guidance_scale=7.5).images

grid_img = show_images([init_image, images[0]], 1, 2)

grid_img.save("fantasy_landscape.png")

範例輸出:

In-painting

給定一個mask影象和一句提示,可編輯給定影象的特定部分。使用StableDiffusionInpaintPipeline來實現,輸入包含三部分:原始影象,mask影象和一個prompt,

範例程式碼:

from diffusers import StableDiffusionInpaintPipeline

img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

init_image = download_image(img_url).resize((512, 512))

mask_image = download_image(mask_url).resize((512, 512))

pipe = StableDiffusionInpaintPipeline.from_pretrained("runwayml/stable-diffusion-inpainting", torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "Face of a yellow cat, high resolution, sitting on a park bench"

images = pipe(prompt=prompt, image=init_image, mask_image=mask_image).images

grid_img = show_images([init_image, mask_image, images[0]], 1, 3)

grid_img.save("overture-creations.png")

範例輸出:

Upscale

對低解析度影象進行超解析度,使用StableDiffusionUpscalePipeline來實現,必要輸入為prompt和低解析度影象(low-resolution image),範例程式碼:

from diffusers import StableDiffusionUpscalePipeline

# load model and scheduler

model_id = "stabilityai/stable-diffusion-x4-upscaler"

pipeline = StableDiffusionUpscalePipeline.from_pretrained(model_id, torch_dtype=torch.float16, cache_dir="./models/")

pipeline = pipeline.to("cuda")

# let's download an image

url = "https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main/sd2-upscale/low_res_cat.png"

low_res_img = download_image(url)

low_res_img = low_res_img.resize((128, 128))

prompt = "a white cat"

upscaled_image = pipeline(prompt=prompt, image=low_res_img).images[0]

grid_img = show_images([low_res_img, upscaled_image], 1, 2)

grid_img.save("a_white_cat.png")

print("low_res_img size: ", low_res_img.size)

print("upscaled_image size: ", upscaled_image.size)

範例輸出,預設將一個128 x 128的小貓影象超分為一個512 x 512的:

預設是將原始尺寸的長和寬均放大四倍,即:

input: 128 x 128 ==> output: 512 x 512

input: 64 x 256 ==> output: 256 x 1024

...

個人感覺,prompt沒有起什麼作用,隨便寫吧。

關於此模型的詳情,參考。

Instruct-Pix2Pix

根據輸入的指令prompt對影象進行編輯,使用StableDiffusionInstructPix2PixPipeline來實現,必要輸入包括prompt和image,範例程式碼如下:

import torch

from diffusers import StableDiffusionInstructPix2PixPipeline

model_id = "timbrooks/instruct-pix2pix"

pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, torch_dtype=torch.float16, cache_dir="./models/")

pipe = pipe.to("cuda")

url = "https://huggingface.co/datasets/diffusers/diffusers-images-docs/resolve/main/mountain.png"

image = download_image(url)

prompt = "make the mountains snowy"

images = pipe(prompt, image=image, num_inference_steps=20, image_guidance_scale=1.5, guidance_scale=7).images

grid_img = show_images([image, images[0]], 1, 2)

grid_img.save("snowy_mountains.png")

範例輸出: