[DuckDB] 多核運算元並行的原始碼解析

DuckDB 是近年來頗受關注的OLAP資料庫,號稱是OLAP領域的SQLite,以精巧簡單,效能優異而著稱。筆者前段時間在調研Doris的Pipeline的運算元並行方案,而DuckDB基於論文《Morsel-Driven Parallelism: A NUMA-Aware Query Evaluation Framework for the Many-Core Age》實現SQL運算元的高效並行化的Pipeline執行引擎,所以筆者花了一些時間進行了學習和總結,這裡結合了Mark Raasveldt進行的分享和原始程式碼來一一剖析DuckDB在執行運算元並行上的具體實現。

1. 基礎知識

問題1:並行task的數目由什麼決定 ?

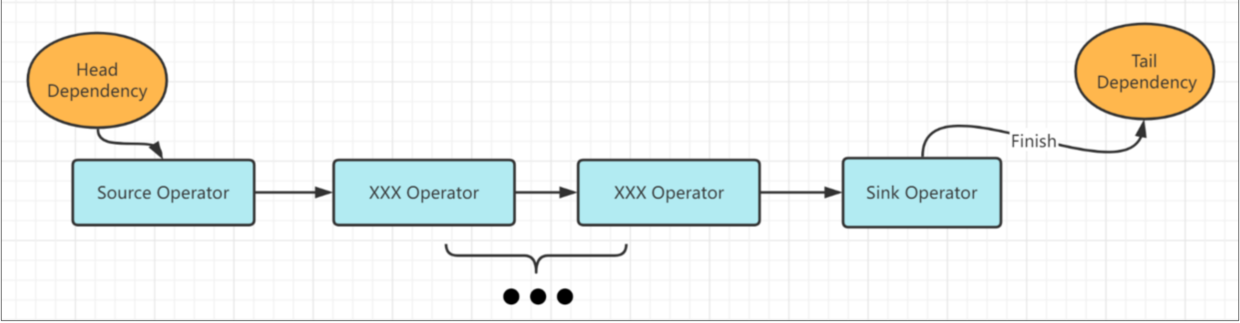

Pipeline的核心是:Morsel-Driven,資料是拆分成了小部分的資料。所以並行Task的核心是:能夠利用多執行緒來處理資料,每一個資料拆分為小部分,所以拆分並行的數目由Source決定。

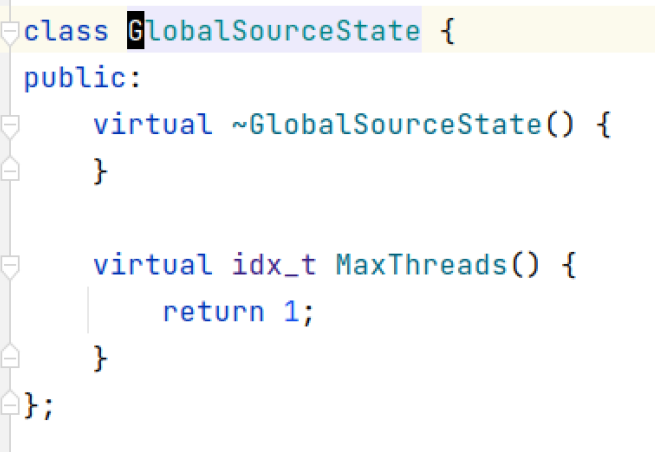

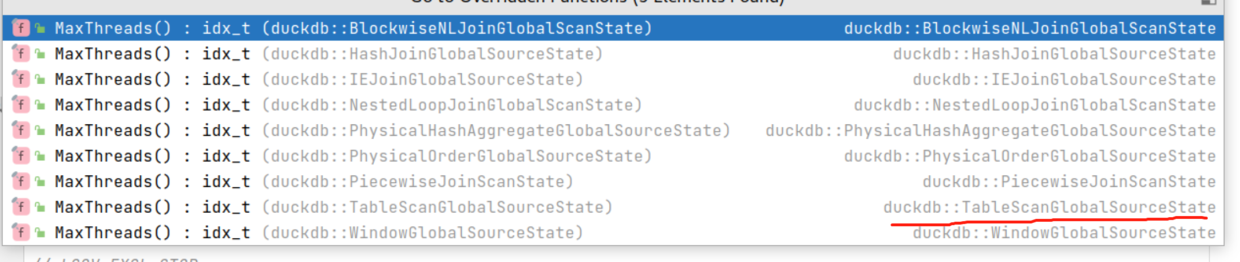

DuckDB在GlobalSource上實現了一個虛擬函式MaxThread來決定task數目:

每一個運算元的GlobalSource抽象了自己的並行度:

問題2:並行task的怎麼樣進行多執行緒同步:

- 多執行緒的競爭只會發生在SinkOperator上,也就是Pipeline的尾端。

- parallelism-aware的演演算法需要實現在Sink端

- 其他的非Sink operators (比如:Hash Join Probe, Projection, Filter等), 不需要感知多執行緒同步的問題

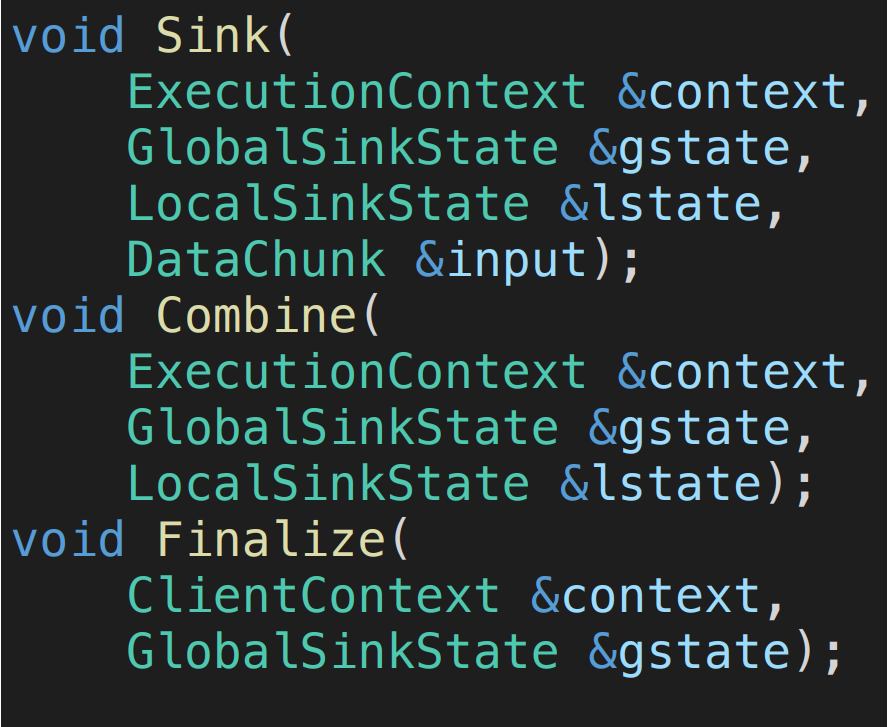

問題3:DuckDB的是如何抽象介面的:

Sink的Opeartor 定義了兩種型別:GlobalState, LocalState

- GlobalState: 每個查詢的Operator全域性只有一個

GlobalSinkState,記錄全域性部分的資訊

class PhysicalOperator {

public:

unique_ptr<GlobalSinkState> sink_state;

- LocalState: 每個查詢的PipelineExecutor都有一個

LocalSinkState,都是區域性私有

//! The Pipeline class represents an execution pipeline

class PipelineExecutor {

private:

//! The local sink state (if any)

unique_ptr<LocalSinkState> local_sink_state;

後續會詳細解析不同的sink之間的LocalState和GlobalState如何配合的,核心部分如下:

Sink :處理LocalState的資料

Combine:合併LocalState到GlobalState之中

2. 核心運算元的並行

這部分進行各個運算元的原始碼剖析,筆者在原始碼的關鍵部分加上了中文註釋,以方便大家的理解

Sort運算元

- Sink介面:這裡需要注意的是DuckDB排序是進行了列轉行的工作的,後續讀取時需要行轉列。Sink這部分相當於實現了部分資料的排序工作。

SinkResultType PhysicalOrder::Sink(ExecutionContext &context, GlobalSinkState &gstate_p, LocalSinkState &lstate_p,

DataChunk &input) const {

auto &lstate = (OrderLocalSinkState &)lstate_p;

// keys 是排序的列block,payload是輸出的排序後資料,這裡呼叫LocalState的SinkChunk,進行資料的轉行,

local_sort_state.SinkChunk(keys, payload);

// 資料達到記憶體閾值的時候進行基數排序處理,排序之後的結果存入LocalState的原生的SortedBlock中

if (local_sort_state.SizeInBytes() >= gstate.memory_per_thread) {

local_sort_state.Sort(global_sort_state, true);

}

return SinkResultType::NEED_MORE_INPUT;

}

- Combine介面: 加鎖,拷貝sorted block到Global State

void PhysicalOrder::Combine(ExecutionContext &context, GlobalSinkState &gstate_p, LocalSinkState &lstate_p) const {

auto &gstate = (OrderGlobalSinkState &)gstate_p;

auto &lstate = (OrderLocalSinkState &)lstate_p;

// 排序剩餘記憶體中不滿的資料

local_sort_state.Sort(*this, external || !local_sort_state.sorted_blocks.empty());

// Append local state sorted data to this global state

lock_guard<mutex> append_guard(lock);

for (auto &sb : local_sort_state.sorted_blocks) {

sorted_blocks.push_back(move(sb));

}

}

- MergeTask:啟動核數相同的task來進行Merge (這裡可以看出DuckDB對於多執行緒的使用是很激進的), 這裡是通過Event的機制實現的

void Schedule() override {

auto &context = pipeline->GetClientContext();

idx_t num_threads = ts.NumberOfThreads();

vector<unique_ptr<Task>> merge_tasks;

for (idx_t tnum = 0; tnum < num_threads; tnum++) {

merge_tasks.push_back(make_unique<PhysicalOrderMergeTask>(shared_from_this(), context, gstate));

}

SetTasks(move(merge_tasks));

}

class PhysicalOrderMergeTask : public ExecutorTask {

public:

TaskExecutionResult ExecuteTask(TaskExecutionMode mode) override {

// Initialize merge sorted and iterate until done

auto &global_sort_state = state.global_sort_state;

MergeSorter merge_sorter(global_sort_state, BufferManager::GetBufferManager(context));

// 加鎖,獲取兩路,不斷進行兩路歸併,最終完成全域性排序。

while (true) {

{

lock_guard<mutex> pair_guard(state.lock);

if (state.pair_idx == state.num_pairs) {

break;

}

GetNextPartition();

}

MergePartition();

}

event->FinishTask();

return TaskExecutionResult::TASK_FINISHED;

}

聚合運算元(這裡分析的是Prefetch Agg Operator運算元)

- Sink介面:和Sort運算元一樣,這裡拆分為

Group Chunk和Aggregate Input Chunk,可以理解為代表聚合時的key與value列。注意此時Sink介面上的聚合是在LocalSinkState上完成的。

SinkResultType PhysicalPerfectHashAggregate::Sink(ExecutionContext &context, GlobalSinkState &state,

LocalSinkState &lstate_p, DataChunk &input) const {

lstate.ht->AddChunk(group_chunk, aggregate_input_chunk);

}

void PerfectAggregateHashTable::AddChunk(DataChunk &groups, DataChunk &payload) {

auto address_data = FlatVector::GetData<uintptr_t>(addresses);

memset(address_data, 0, groups.size() * sizeof(uintptr_t));

D_ASSERT(groups.ColumnCount() == group_minima.size());

// 計算group key列對應的entry的位置

idx_t current_shift = total_required_bits;

for (idx_t i = 0; i < groups.ColumnCount(); i++) {

current_shift -= required_bits[i];

ComputeGroupLocation(groups.data[i], group_minima[i], address_data, current_shift, groups.size());

}

// 通過data加上面的entry位置 + tuple的偏移量,計算出對應的記憶體地址,並進行init

idx_t needs_init = 0;

for (idx_t i = 0; i < groups.size(); i++) {

D_ASSERT(address_data[i] < total_groups);

const auto group = address_data[i];

address_data[i] = uintptr_t(data) + address_data[i] * tuple_size;

}

RowOperations::InitializeStates(layout, addresses, sel, needs_init);

// after finding the group location we update the aggregates

idx_t payload_idx = 0;

auto &aggregates = layout.GetAggregates();

for (idx_t aggr_idx = 0; aggr_idx < aggregates.size(); aggr_idx++) {

auto &aggregate = aggregates[aggr_idx];

auto input_count = (idx_t)aggregate.child_count;

// 進行聚合的Update操作

RowOperations::UpdateStates(aggregate, addresses, payload, payload_idx, payload.size());

}

}

- Combine介面: 加鎖,merge

local hash table與global hash table

void PhysicalPerfectHashAggregate::Combine(ExecutionContext &context, GlobalSinkState &gstate_p,

LocalSinkState &lstate_p) const {

auto &lstate = (PerfectHashAggregateLocalState &)lstate_p;

auto &gstate = (PerfectHashAggregateGlobalState &)gstate_p;

lock_guard<mutex> l(gstate.lock);

gstate.ht->Combine(*lstate.ht);

}

// local state的地址vector

Vector source_addresses(LogicalType::POINTER);

// global state的地址vector

Vector target_addresses(LogicalType::POINTER);

auto source_addresses_ptr = FlatVector::GetData<data_ptr_t>(source_addresses);

auto target_addresses_ptr = FlatVector::GetData<data_ptr_t>(target_addresses);

// 遍歷所有hash table的表,然後進行合併對應能夠合併的key

data_ptr_t source_ptr = other.data;

data_ptr_t target_ptr = data;

idx_t combine_count = 0;

idx_t reinit_count = 0;

const auto &reinit_sel = *FlatVector::IncrementalSelectionVector();

for (idx_t i = 0; i < total_groups; i++) {

auto has_entry_source = other.group_is_set[i];

// we only have any work to do if the source has an entry for this group

if (has_entry_source) {

auto has_entry_target = group_is_set[i];

if (has_entry_target) {

// both source and target have an entry: need to combine

source_addresses_ptr[combine_count] = source_ptr;

target_addresses_ptr[combine_count] = target_ptr;

combine_count++;

if (combine_count == STANDARD_VECTOR_SIZE) {

RowOperations::CombineStates(layout, source_addresses, target_addresses, combine_count);

combine_count = 0;

}

} else {

group_is_set[i] = true;

// only source has an entry for this group: we can just memcpy it over

memcpy(target_ptr, source_ptr, tuple_size);

// we clear this entry in the other HT as we "consume" the entry here

other.group_is_set[i] = false;

}

}

source_ptr += tuple_size;

target_ptr += tuple_size;

}

// 做對應的merge操作

RowOperations::CombineStates(layout, source_addresses, target_addresses, combine_count);

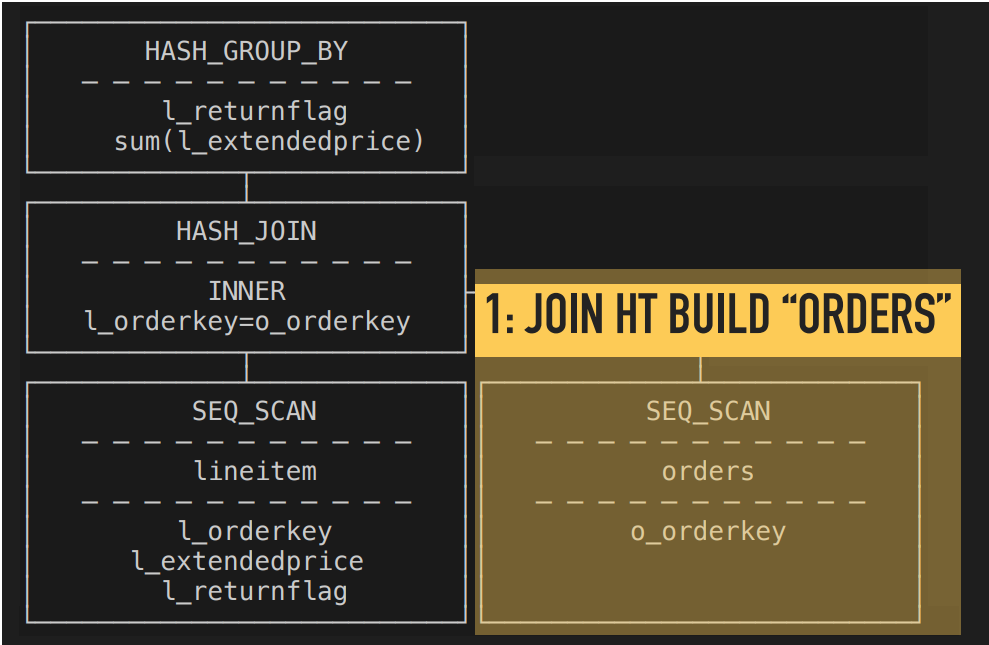

Join運算元

- Sink介面:和Sort運算元一樣,注意此時Sink介面上的hash 表是在LocalSinkState上完成的。

SinkResultType PhysicalHashJoin::Sink(ExecutionContext &context, GlobalSinkState &gstate_p, LocalSinkState &lstate_p,

DataChunk &input) const {

auto &gstate = (HashJoinGlobalSinkState &)gstate_p;

auto &lstate = (HashJoinLocalSinkState &)lstate_p;

lstate.join_keys.Reset();

lstate.build_executor.Execute(input, lstate.join_keys);

// build the HT

auto &ht = *lstate.hash_table;

if (!right_projection_map.empty()) {

// there is a projection map: fill the build chunk with the projected columns

lstate.build_chunk.Reset();

lstate.build_chunk.SetCardinality(input);

for (idx_t i = 0; i < right_projection_map.size(); i++) {

lstate.build_chunk.data[i].Reference(input.data[right_projection_map[i]]);

}

// 構建local state的hash 表

ht.Build(lstate.join_keys, lstate.build_chunk)

return SinkResultType::NEED_MORE_INPUT;

}

- Combine介面: 加鎖,拷貝local state的hash表到global state

void PhysicalHashJoin::Combine(ExecutionContext &context, GlobalSinkState &gstate_p, LocalSinkState &lstate_p) const {

auto &gstate = (HashJoinGlobalSinkState &)gstate_p;

auto &lstate = (HashJoinLocalSinkState &)lstate_p;

if (lstate.hash_table) {

lock_guard<mutex> local_ht_lock(gstate.lock);

gstate.local_hash_tables.push_back(move(lstate.hash_table));

}

}

- MergeTask:啟動核數相同的task來進行Hash table的Merge (這裡可以看出DuckDB對於多執行緒的使用是很激進的), 每個任務merge一部分Block(DuckDB之中的行資料,落盤使用)

void Schedule() override {

auto &context = pipeline->GetClientContext();

vector<unique_ptr<Task>> finalize_tasks;

auto &ht = *sink.hash_table;

const auto &block_collection = ht.GetBlockCollection();

const auto &blocks = block_collection.blocks;

const auto num_blocks = blocks.size();

if (block_collection.count < PARALLEL_CONSTRUCT_THRESHOLD && !context.config.verify_parallelism) {

// Single-threaded finalize

finalize_tasks.push_back(

make_unique<HashJoinFinalizeTask>(shared_from_this(), context, sink, 0, num_blocks, false));

} else {

// Parallel finalize

idx_t num_threads = TaskScheduler::GetScheduler(context).NumberOfThreads();

auto blocks_per_thread = MaxValue<idx_t>((num_blocks + num_threads - 1) / num_threads, 1);

idx_t block_idx = 0;

for (idx_t thread_idx = 0; thread_idx < num_threads; thread_idx++) {

auto block_idx_start = block_idx;

auto block_idx_end = MinValue<idx_t>(block_idx_start + blocks_per_thread, num_blocks);

finalize_tasks.push_back(make_unique<HashJoinFinalizeTask>(shared_from_this(), context, sink,

block_idx_start, block_idx_end, true));

block_idx = block_idx_end;

if (block_idx == num_blocks) {

break;

}

}

}

SetTasks(move(finalize_tasks));

}

template <bool PARALLEL>

static inline void InsertHashesLoop(atomic<data_ptr_t> pointers[], const hash_t indices[], const idx_t count,

const data_ptr_t key_locations[], const idx_t pointer_offset) {

for (idx_t i = 0; i < count; i++) {

auto index = indices[i];

if (PARALLEL) {

data_ptr_t head;

do {

head = pointers[index];

Store<data_ptr_t>(head, key_locations[i] + pointer_offset);

} while (!std::atomic_compare_exchange_weak(&pointers[index], &head, key_locations[i]));

} else {

// set prev in current key to the value (NOTE: this will be nullptr if there is none)

Store<data_ptr_t>(pointers[index], key_locations[i] + pointer_offset);

// set pointer to current tuple

pointers[index] = key_locations[i];

}

}

}

- 並行掃描hash表,進行outer資料的處理:

void PhysicalHashJoin::GetData(ExecutionContext &context, DataChunk &chunk, GlobalSourceState &gstate_p,

LocalSourceState &lstate_p) const {

auto &sink = (HashJoinGlobalSinkState &)*sink_state;

auto &gstate = (HashJoinGlobalSourceState &)gstate_p;

auto &lstate = (HashJoinLocalSourceState &)lstate_p;

sink.scanned_data = true;

if (!sink.external) {

if (IsRightOuterJoin(join_type)) {

{

lock_guard<mutex> guard(gstate.lock);

// 拆解掃描部分hash表的資料

lstate.ScanFullOuter(sink, gstate);

}

// 掃描hash表讀取資料

sink.hash_table->GatherFullOuter(chunk, lstate.addresses, lstate.full_outer_found_entries);

}

return;

}

}

void HashJoinLocalSourceState::ScanFullOuter(HashJoinGlobalSinkState &sink, HashJoinGlobalSourceState &gstate) {

auto &fo_ss = gstate.full_outer_scan;

idx_t scan_index_before = fo_ss.scan_index;

full_outer_found_entries = sink.hash_table->ScanFullOuter(fo_ss, addresses);

idx_t scanned = fo_ss.scan_index - scan_index_before;

full_outer_in_progress = scanned;

}

小結

- DuckDB在多執行緒同步,核心就是在Combine的時候:加鎖,並行是通過原子變數的方式實現並行寫入hash表的操作

- 通過

local/global拆分私有記憶體和公共記憶體,並行的基礎是在私有記憶體上進行運算,同步的部分主要在公有記憶體的更新

3. Spill To Disk的實現

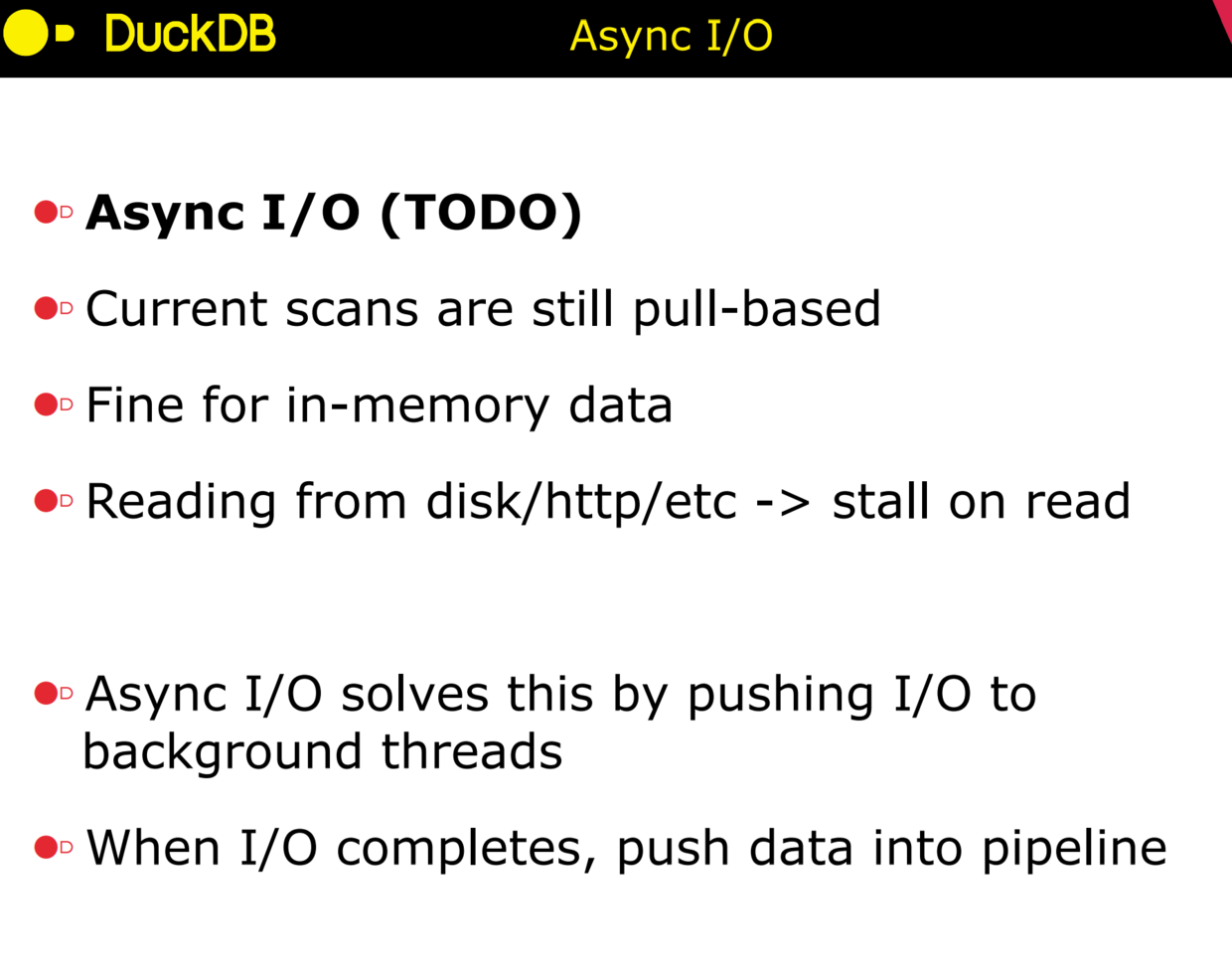

DuckDB並沒有如筆者預期的實現非同步IO, 所以任意的執行執行緒是有可能Stall在系統的I/O排程上的,我想大概率是DuckDB本身的定位對於高並行場景的支援不是那麼敏感所導致的。這裡他們也作為了後續TODO的計劃之一。

4. 參考資料

Push-Based Execution in DuckDB