Deep Learning: Residual Networks

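Data download

Link: https://pan.baidu.com/s/1mTqblxzWcYIRF7_kk8MQQA

Extraction code: 7x6w

Many thanks for the data go to He Kuan's (何寬) CSDN blog post: [Chinese] [Andrew Ng post-course programming assignment] Course 4 - Convolutional Neural Networks - Week 2 assignment.

[Python version used by the author: 3.6.8]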

For this assignment, you will use Keras.

Before getting into the problem, run the cell below to load the required packages.

    import tensorflow as tf
    import numpy as np
    import scipy.misc
    from tensorflow.keras.applications.resnet_v2 import ResNet50V2
    from tensorflow.keras.preprocessing import image
    from tensorflow.keras.applications.resnet_v2 import preprocess_input, decode_predictions
    from tensorflow.keras import layers
    from tensorflow.keras.layers import Input, Add, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D, AveragePooling2D, MaxPooling2D, GlobalMaxPooling2D
    from tensorflow.keras.models import Model, load_model
    from resnets_utils import *
    from tensorflow.keras.initializers import random_uniform, glorot_uniform, constant, identity
    from tensorflow.python.framework.ops import EagerTensor
    from matplotlib.pyplot import imshow

    from test_utils import summary, comparator
    import public_tests

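The problem of very deep neural networks

- The main benefit of a very deep network is that it can represent very complex functions. It can also learn features at many different levels of abstraction, from edges (in the shallower layers, closer to the input) to very complex features (in the deeper layers, closer to the output).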

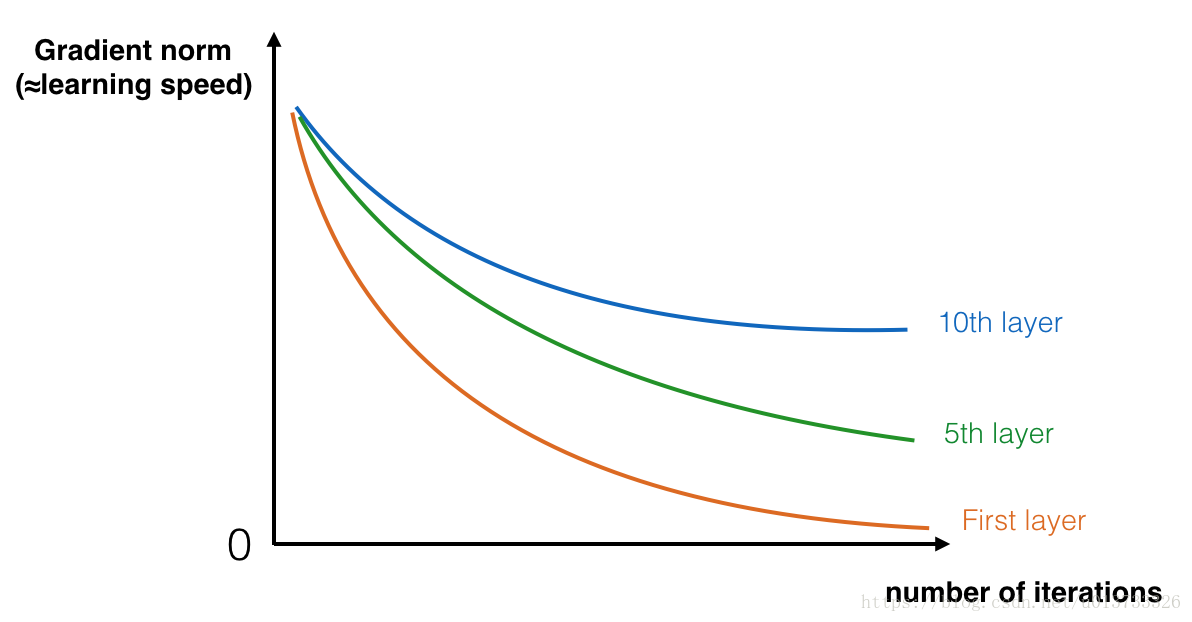

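- However, using a deeper network doesn't always help. A huge barrier to training such networks is vanishing gradients: very deep networks often have a gradient signal that goes to zero quickly, which makes gradient descent prohibitively slow.

- More specifically, during gradient descent, as you backpropagate from the final layer back to the first layer, you multiply by the weight matrix at each step, so the gradient can decrease exponentially quickly to zero (or, in rare cases, grow exponentially quickly and "explode" to very large values).

- During training, you might therefore see the magnitude (or norm) of the gradient for the shallower layers decrease to zero very rapidly as training proceeds, as shown below: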
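The exponential shrinkage described above can be reproduced in a few lines of NumPy. The sketch below is an illustration rather than part of the assignment: `backprop_norms`, the layer width of 16, and the `scale` factor are assumptions chosen for the demo, and activation derivatives are ignored, so it only models the repeated multiplication by weight matrices.

```python
import numpy as np


def backprop_norms(depth, scale, dim=16, seed=0):
    """Push a gradient back through `depth` random linear layers.

    Returns the gradient norm after each layer, ordered from the layer
    nearest the output to the layer nearest the input.
    """
    rng = np.random.default_rng(seed)
    grad = np.ones(dim)
    norms = []
    for _ in range(depth):
        # Random weights whose typical gain per layer is roughly `scale`.
        W = scale * rng.standard_normal((dim, dim)) / np.sqrt(dim)
        grad = W.T @ grad  # one backpropagation step through a linear layer
        norms.append(np.linalg.norm(grad))
    return norms


vanishing = backprop_norms(depth=50, scale=0.5)
exploding = backprop_norms(depth=50, scale=2.0)
print("scale=0.5:", vanishing[0], "->", vanishing[-1])
print("scale=2.0:", exploding[0], "->", exploding[-1])
```

With a per-layer gain below 1 the norm collapses toward zero after a few dozen layers, and with a gain above 1 it blows up, which is the behavior the bullets describe.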

Building a Residual Network

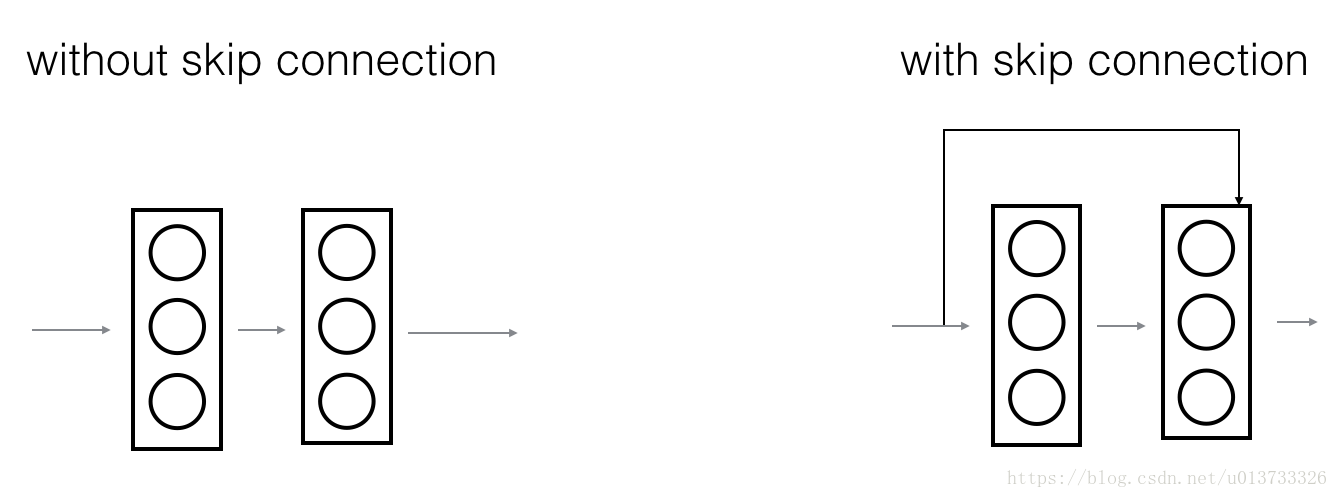

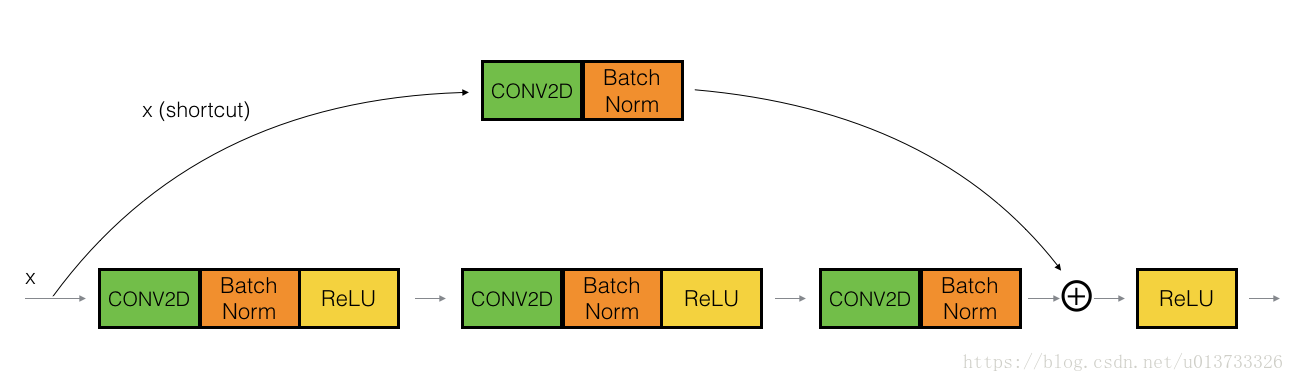

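- The image on the left shows the "main path" through the network. The image on the right adds a shortcut to the main path. By stacking these ResNet blocks on top of each other, you can form a very deep network.

- The lecture mentioned that having ResNet blocks with the shortcut also makes it very easy for one of the blocks to learn an identity function. This means that you can stack on additional ResNet blocks with little risk of harming training set performance.

- On that note, there is also some evidence that the ease of learning an identity function accounts for ResNets' remarkable performance even more than skip connections help with vanishing gradients.

- Two main types of blocks are used in a ResNet, depending mainly on whether the input/output dimensions are the same or different. You are going to implement both of them: the "identity block" and the "convolutional block".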
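To see why the identity function is easy for a residual block to learn, consider a toy fully connected block in NumPy. This is a simplified sketch, not the Keras implementation used later: `residual_block` is a hypothetical helper, and the block skips two dense layers without BatchNorm. When the weights are driven to zero, the main path contributes nothing and the block passes its (non-negative) input through unchanged.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def residual_block(a, W1, W2):
    """Toy residual block: a_out = relu(W2 @ relu(W1 @ a) + a)."""
    main_path = W2 @ relu(W1 @ a)
    return relu(main_path + a)  # the "+ a" term is the shortcut


# Activations coming out of a previous ReLU are non-negative.
a = relu(np.array([0.5, -1.0, 2.0]))
W_zero = np.zeros((3, 3))

out = residual_block(a, W_zero, W_zero)
print(out)
```

A plain (non-residual) block with zero weights would output all zeros instead, so it would have to learn a specific non-trivial weight setting to reproduce its input.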

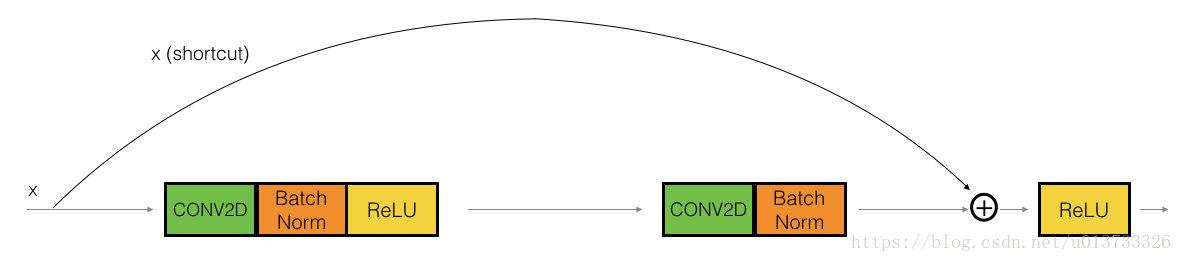

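Identity block

The identity block is the standard block used in ResNets. It corresponds to the case where the input activation (say a[l]) has the same dimension as the output activation (say a[l+2]). To flesh out the different steps of what happens in a ResNet's identity block, here is an alternative diagram showing the individual steps:

The upper path is the "shortcut path". The lower path is the "main path". In this diagram, notice the CONV2D and ReLU steps in each layer. To speed up training, a BatchNorm step has been added. Don't worry about this being complicated to implement - you'll see that BatchNorm is just one line of code in Keras!

In this exercise, you'll actually implement a slightly more powerful version of this identity block, in which the skip connection "skips over" 3 hidden layers rather than 2 layers. It looks like this: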

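First component of main path:

- The first CONV2D has F1 filters of shape (1,1) and a stride of (1,1). Its padding is "valid". Use 0 as the seed for the random uniform initialization: kernel_initializer = initializer(seed=0).

- The first BatchNorm normalizes the "channels" axis.

- Then apply the ReLU activation function. This has no hyperparameters.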
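As a rough picture of what normalizing the "channels" axis means, the NumPy sketch below computes one mean and one variance per channel, pooled across the batch, height, and width axes. It is an illustration under stated assumptions: `batchnorm_channels` is a hypothetical helper that mimics training-mode behavior with the learned scale fixed at 1 and the offset at 0, and `eps=1e-3` is assumed to match the Keras default.

```python
import numpy as np


def batchnorm_channels(x, eps=1e-3):
    """Normalize an (m, n_H, n_W, n_C) tensor per channel (axis 3)."""
    # One statistic per channel, pooled over batch, height, and width.
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


rng = np.random.default_rng(0)
x = 5.0 * rng.standard_normal((3, 4, 4, 3)) + 2.0
y = batchnorm_channels(x)
print(y.mean(axis=(0, 1, 2)))  # close to 0 for every channel
print(y.std(axis=(0, 1, 2)))   # close to 1 for every channel
```

In inference mode Keras uses moving averages instead of the current batch statistics, which is one reason the same block can return different values for `training=True` and `training=False`.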

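Second component of main path:

- The second CONV2D has F2 filters of shape (f,f) and a stride of (1,1). Its padding is "same". Use 0 as the seed for the random uniform initialization: kernel_initializer = initializer(seed=0).

- The second BatchNorm normalizes the "channels" axis.

- Then apply the ReLU activation function. This has no hyperparameters.

Third component of main path:

- The third CONV2D has F3 filters of shape (1,1) and a stride of (1,1). Its padding is "valid". Use 0 as the seed for the random uniform initialization: kernel_initializer = initializer(seed=0).

- The third BatchNorm normalizes the "channels" axis. Note that there is no ReLU activation function in this component.

Final step:

- The X_shortcut and the output of the third layer, X, are added together.

- Hint: the syntax will look something like Add()([var1,var2])

- Then apply the ReLU activation function. This has no hyperparameters.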
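The addition in the final step only works if the main path ends with the same shape as the shortcut. The helper below is a hypothetical bookkeeping sketch (it performs no convolution, and `f` appears only for documentation): it tracks the shapes implied by the three components above and checks that F3 equals the number of input channels.

```python
def identity_block_shapes(input_shape, f, filters):
    """Return the output shape of each main-path CONV2D in an identity block."""
    m, n_H, n_W, n_C = input_shape
    F1, F2, F3 = filters
    shapes = [
        (m, n_H, n_W, F1),  # 1x1 conv, stride 1, 'valid': spatial size unchanged
        (m, n_H, n_W, F2),  # fxf conv, stride 1, 'same': spatial size unchanged
        (m, n_H, n_W, F3),  # 1x1 conv, stride 1, 'valid': spatial size unchanged
    ]
    if shapes[-1] != tuple(input_shape):
        raise ValueError(
            f"cannot add shortcut {tuple(input_shape)} to main path {shapes[-1]}"
        )
    return shapes


print(identity_block_shapes((3, 4, 4, 3), f=2, filters=[4, 4, 3]))
```

Calling it with `filters=[4, 4, 6]` on the same input raises `ValueError`; that mismatch is the situation the convolutional block is designed for.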

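Next, we implement the identity block of the residual network.

We have added the initializer argument to the function. This parameter receives an initializer function like the ones included in the package tensorflow.keras.initializers, or any other custom initializer. By default it is set to random_uniform.

Remember that these functions accept a seed argument that can be any value you want, but in this notebook it must be set to 0 for grading purposes.

Here is where you actually use the power of the Functional API to create a shortcut path: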

    def identity_block(X, f, filters, training=True, initializer=random_uniform):
        """
        Implementation of the identity block as defined in Figure 4

        Arguments:
        X -- input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
        f -- integer, specifying the shape of the middle CONV's window for the main path
        filters -- python list of integers, defining the number of filters in the CONV layers of the main path
        training -- True: behave in training mode
                    False: behave in inference mode
        initializer -- sets up the initial weights of a layer. Defaults to the random uniform initializer

        Returns:
        X -- output of the identity block, tensor of shape (m, n_H, n_W, n_C)
        """

        # Retrieve Filters
        F1, F2, F3 = filters

        # Save the input value. You'll need this later to add back to the main path.
        X_shortcut = X
        cache = []

        # First component of main path
        X = Conv2D(filters = F1, kernel_size = 1, strides = (1,1), padding = 'valid', kernel_initializer = initializer(seed=0))(X)
        X = BatchNormalization(axis = 3)(X, training = training) # Default axis
        X = Activation('relu')(X)

        ### START CODE HERE
        ## Second component of main path (≈3 lines)
        X = Conv2D(filters = F2, kernel_size = f, strides = (1, 1), padding = 'same', kernel_initializer = initializer(seed=0))(X)
        X = BatchNormalization(axis = 3)(X, training = training)
        X = Activation('relu')(X)

        ## Third component of main path (≈2 lines)
        X = Conv2D(filters = F3, kernel_size = 1, strides = (1, 1), padding = 'valid', kernel_initializer = initializer(seed=0))(X)
        X = BatchNormalization(axis = 3)(X, training = training)

        ## Final step: Add shortcut value to main path, and pass it through a RELU activation (≈2 lines)
        X = Add()([X_shortcut, X])
        X = Activation('relu')(X)
        ### END CODE HERE

        return X

Let's test it:

    np.random.seed(1)
    X1 = np.ones((1, 4, 4, 3)) * -1
    X2 = np.ones((1, 4, 4, 3)) * 1
    X3 = np.ones((1, 4, 4, 3)) * 3

    # Stack the samples in the order X1, X2, X3
    X = np.concatenate((X1, X2, X3), axis = 0).astype(np.float32)

    A3 = identity_block(X, f=2, filters=[4, 4, 3],
                        initializer=lambda seed=0:constant(value=1),
                        training=False)
    print('\033[1mWith training=False\033[0m\n')
    A3np = A3.numpy()
    print(np.around(A3.numpy()[:,(0,-1),:,:].mean(axis = 3), 5))
    resume = A3np[:,(0,-1),:,:].mean(axis = 3)
    print(resume[1, 1, 0])

    print('\n\033[1mWith training=True\033[0m\n')
    np.random.seed(1)
    A4 = identity_block(X, f=2, filters=[3, 3, 3],
                        initializer=lambda seed=0:constant(value=1),
                        training=True)
    print(np.around(A4.numpy()[:,(0,-1),:,:].mean(axis = 3), 5))

    public_tests.identity_block_test(identity_block)

    With training=False

    [[[ 0. 0. 0. 0. ]
      [ 0. 0. 0. 0. ]]

     [[192.71234 192.71234 192.71234 96.85617]
      [ 96.85617 96.85617 96.85617 48.92808]]

     [[578.1371 578.1371 578.1371 290.5685 ]
      [290.5685 290.5685 290.5685 146.78426]]]
    96.85617

    With training=True

    [[[0. 0. 0. 0. ]
      [0. 0. 0. 0. ]]

     [[0.40739 0.40739 0.40739 0.40739]
      [0.40739 0.40739 0.40739 0.40739]]

     [[4.99991 4.99991 4.99991 3.25948]
      [3.25948 3.25948 3.25948 2.40739]]]
    All tests passed!

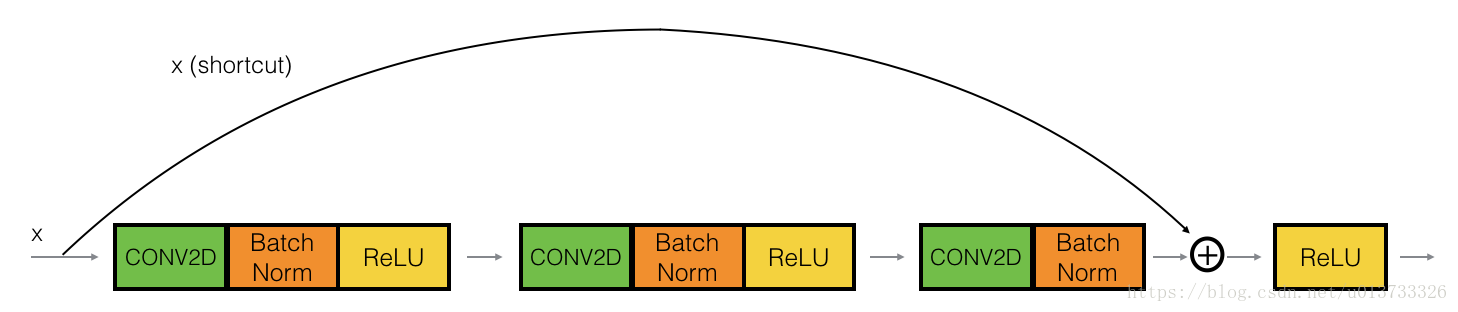

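Convolutional block

The ResNet "convolutional block" is the second block type. You can use this type of block when the input and output dimensions don't match. The difference from the identity block is that there is a CONV2D layer in the shortcut path:

- The CONV2D layer in the shortcut path is used to resize the input to a different dimension, so that the dimensions match in the final addition that adds the shortcut value back to the main path. (This plays a similar role to the matrix W_s discussed in lecture.)

- For example, to reduce the height and width of the activation dimensions by a factor of 2, you can use a 1x1 convolution with a stride of 2.

- The CONV2D layer on the shortcut path does not use any non-linear activation function. Its main role is to apply a (learned) linear function that reduces the dimension of the input, so that the dimensions match for the later addition step.

- As in the previous exercise, the additional initializer argument is required for grading purposes, and it is set to glorot_uniform by default.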
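A 1x1 convolution with stride s can be pictured as keeping every s-th pixel and applying the same linear map to each kept pixel's channel vector. The NumPy sketch below illustrates that view; `conv1x1_strided` is a hypothetical helper with no bias term, not the Keras layer itself.

```python
import numpy as np


def conv1x1_strided(x, W, s):
    """1x1 convolution, stride s, 'valid' padding, no bias.

    x -- input of shape (m, n_H, n_W, n_C_in)
    W -- weights of shape (n_C_in, n_C_out)
    """
    subsampled = x[:, ::s, ::s, :]  # keep every s-th row and column
    return subsampled @ W           # per-pixel linear map over channels


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4, 4, 3))
W = rng.standard_normal((3, 6))
out = conv1x1_strided(x, W, s=2)
print(out.shape)
```

With a (3, 4, 4, 3) input, stride 2, and 6 output channels, the result has shape (3, 2, 2, 6): the height and width are halved and the channel count is changed in a single linear step.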

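First component of main path:

- The first CONV2D has F1 filters of shape (1,1) and a stride of (s,s). Its padding is "valid". Use 0 as the glorot_uniform seed: kernel_initializer = initializer(seed=0).

- The first BatchNorm normalizes the "channels" axis.

- Then apply the ReLU activation function. This has no hyperparameters.

Second component of main path:

- The second CONV2D has F2 filters of shape (f,f) and a stride of (1,1). Its padding is "same". Use 0 as the glorot_uniform seed: kernel_initializer = initializer(seed=0).

- The second BatchNorm normalizes the "channels" axis.

- Then apply the ReLU activation function. This has no hyperparameters.

Third component of main path:

- The third CONV2D has F3 filters of shape (1,1) and a stride of (1,1). Its padding is "valid". Use 0 as the glorot_uniform seed: kernel_initializer = initializer(seed=0).

- The third BatchNorm normalizes the "channels" axis. Note that there is no ReLU activation function in this component.

Shortcut path:

- The CONV2D has F3 filters of shape (1,1) and a stride of (s,s). Its padding is "valid". Use 0 as the glorot_uniform seed: kernel_initializer = initializer(seed=0).

- The BatchNorm normalizes the "channels" axis.

Final step:

- The shortcut and the main path values are added together.

- Then apply the ReLU activation function. This has no hyperparameters.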
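For reference, Glorot (Xavier) uniform initialization draws weights from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)). The NumPy sketch below reproduces that rule for a convolution kernel. `glorot_uniform_kernel` is a hypothetical helper, and because NumPy's random generator differs from TensorFlow's, the same seed will not reproduce the values Keras generates.

```python
import numpy as np


def glorot_uniform_kernel(shape, seed=0):
    """Sample a conv kernel of shape (k_h, k_w, c_in, c_out), Glorot-uniform style."""
    k_h, k_w, c_in, c_out = shape
    fan_in = k_h * k_w * c_in     # inputs feeding each output unit
    fan_out = k_h * k_w * c_out   # outputs fed by each input unit
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    rng = np.random.default_rng(seed)
    return rng.uniform(-limit, limit, size=shape), limit


kernel, limit = glorot_uniform_kernel((1, 1, 3, 2), seed=0)
print(kernel.shape, limit)
```

Fixing the seed makes the draw repeatable, which is why the assignment pins seed=0 for every layer: the grader can then compare outputs against stored reference values.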

    def convolutional_block(X, f, filters, s = 2, training=True, initializer=glorot_uniform):
        """
        Implementation of the convolutional block as defined in Figure 4

        Arguments:
        X -- input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
        f -- integer, specifying the shape of the middle CONV's window for the main path
        filters -- python list of integers, defining the number of filters in the CONV layers of the main path
        s -- integer, specifying the stride to be used
        training -- True: behave in training mode
                    False: behave in inference mode
        initializer -- sets up the initial weights of a layer. Defaults to the Glorot uniform initializer,
                       also called the Xavier uniform initializer.

        Returns:
        X -- output of the convolutional block, tensor of shape (m, n_H, n_W, n_C)
        """

        # Retrieve Filters
        F1, F2, F3 = filters

        # Save the input value
        X_shortcut = X

        ##### MAIN PATH #####

        # First component of main path glorot_uniform(seed=0)
        X = Conv2D(filters = F1, kernel_size = 1, strides = (s, s), padding = 'valid', kernel_initializer = initializer(seed=0))(X)
        X = BatchNormalization(axis = 3)(X, training = training)
        X = Activation('relu')(X)

        ### START CODE HERE
        ## Second component of main path (≈3 lines)
        X = Conv2D(filters = F2, kernel_size = f, strides = (1, 1), padding = 'same', kernel_initializer = initializer(seed=0))(X)
        X = BatchNormalization(axis = 3)(X, training = training)
        X = Activation('relu')(X)

        ## Third component of main path (≈2 lines)
        X = Conv2D(filters = F3, kernel_size = 1, strides = (1, 1), padding = 'valid', kernel_initializer = initializer(seed=0))(X)
        X = BatchNormalization(axis = 3)(X, training = training)

        ##### SHORTCUT PATH ##### (≈2 lines)
        X_shortcut = Conv2D(filters = F3, kernel_size = 1, strides = (s, s), padding = 'valid', kernel_initializer = initializer(seed=0))(X_shortcut)
        X_shortcut = BatchNormalization(axis = 3)(X_shortcut, training = training)
        ### END CODE HERE

        # Final step: Add shortcut value to main path (Use this order [X, X_shortcut]), and pass it through a RELU activation
        X = Add()([X, X_shortcut])
        X = Activation('relu')(X)

        return X

Let's test it:

    from outputs import convolutional_block_output1, convolutional_block_output2

    np.random.seed(1)
    # X = np.random.randn(3, 4, 4, 6).astype(np.float32)
    X1 = np.ones((1, 4, 4, 3)) * -1
    X2 = np.ones((1, 4, 4, 3)) * 1
    X3 = np.ones((1, 4, 4, 3)) * 3

    X = np.concatenate((X1, X2, X3), axis = 0).astype(np.float32)

    A = convolutional_block(X, f = 2, filters = [2, 4, 6], training=False)

    assert type(A) == EagerTensor, "Use only tensorflow and keras functions"
    assert tuple(tf.shape(A).numpy()) == (3, 2, 2, 6), "Wrong shape."
    assert np.allclose(A.numpy(), convolutional_block_output1), "Wrong values when training=False."
    print(A[0])

    B = convolutional_block(X, f = 2, filters = [2, 4, 6], training=True)
    assert np.allclose(B.numpy(), convolutional_block_output2), "Wrong values when training=True."

    print('\033[92mAll tests passed!')

    tf.Tensor(
    [[[0. 0.66683817 0. 0. 0.88853896 0.5274254 ]
      [0. 0.65053666 0. 0. 0.89592844 0.49965227]]

     [[0. 0.6312079 0. 0. 0.8636247 0.47643146]
      [0. 0.5688321 0. 0. 0.85534114 0.41709304]]], shape=(2, 2, 6), dtype=float32)
    All tests passed!

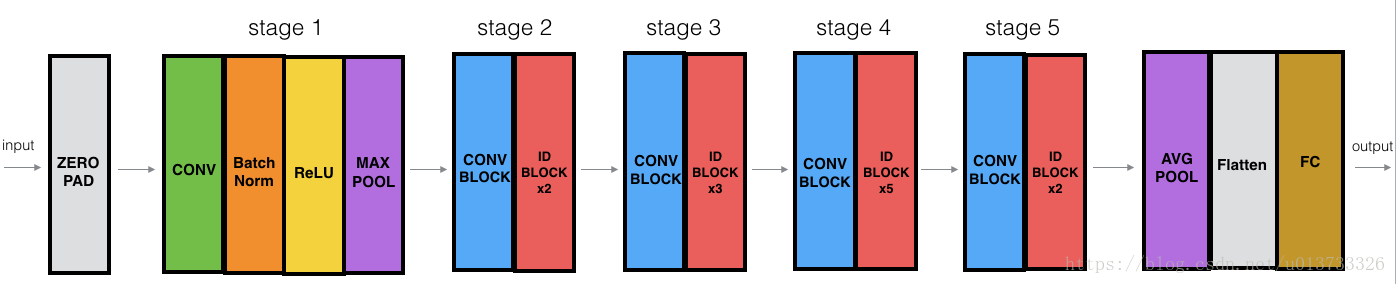

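Building your first ResNet model (50 layers)

You now have the necessary blocks to build a very deep ResNet. The following figure describes the architecture of this neural network in detail. "ID BLOCK" in the diagram stands for "identity block", and "ID BLOCK x3" means you should stack 3 identity blocks together.

Zero-padding pads the input with a pad of (3,3).

Stage 1:

- The 2D convolution has 64 filters of shape (7,7) and uses a stride of (2,2).

- BatchNorm is applied to the "channels" axis of the input.

- Max pooling uses a (3,3) window and a (2,2) stride.

Stage 2:

- The convolutional block uses three sets of filters of size [64,64,256], "f" is 3, and "s" is 1.

- The 2 identity blocks use three sets of filters of size [64,64,256], and "f" is 3.

Stage 3:

- The convolutional block uses three sets of filters of size [128,128,512], "f" is 3, and "s" is 2.

- The 3 identity blocks use three sets of filters of size [128,128,512], and "f" is 3.

Stage 4:

- The convolutional block uses three sets of filters of size [256,256,1024], "f" is 3, and "s" is 2.

- The 5 identity blocks use three sets of filters of size [256,256,1024], and "f" is 3.

Stage 5:

- The convolutional block uses three sets of filters of size [512,512,2048], "f" is 3, and "s" is 2.

- The 2 identity blocks use three sets of filters of size [512,512,2048], and "f" is 3.

The 2D average pooling uses a window of shape (2,2).

The "flatten" layer doesn't have any hyperparameters.

The fully connected (dense) layer reduces its input to the number of classes using a softmax activation.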
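The spatial sizes implied by this specification can be traced with the standard output-size formula floor((n + 2p - f) / s) + 1. The sketch below is a hand calculation in plain Python, not part of the assignment: `conv_out` is a hypothetical helper, the average-pooling stride is assumed to equal its window, and the layer count follows the usual convention of ignoring the shortcut convolutions.

```python
def conv_out(n, f, s, p=0):
    """Output size of a conv/pool window f with stride s and padding p on size n."""
    return (n + 2 * p - f) // s + 1


n = 64                          # input height/width
n = n + 2 * 3                   # zero-padding of (3,3)
trace = [("zero_pad", n)]
n = conv_out(n, f=7, s=2)       # Stage 1: 7x7 conv, stride 2
trace.append(("stage1_conv", n))
n = conv_out(n, f=3, s=2)       # Stage 1: 3x3 max pool, stride 2
trace.append(("stage1_pool", n))
for stage, s in [(2, 1), (3, 2), (4, 2), (5, 2)]:
    n = conv_out(n, f=1, s=s)   # the strided 1x1 conv of each convolutional block
    trace.append((f"stage{stage}", n))
n = conv_out(n, f=2, s=2)       # 2x2 average pool (stride assumed equal to window)
trace.append(("avg_pool", n))

blocks_per_stage = [3, 4, 6, 3]                # 1 convolutional block + 2, 3, 5, 2 identity blocks
n_layers = 1 + 3 * sum(blocks_per_stage) + 1   # first conv + main-path convs + dense layer
print(trace)
print(n_layers)
```

For a 64x64 input the trace gives 70, 32, 15, 15, 8, 4, 2 and finally 1, so the flatten layer sees 2048 values per image, and the count of weighted layers comes to 50.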

    def ResNet50(input_shape = (64, 64, 3), classes = 6):
        """
        Stage-wise implementation of the architecture of the popular ResNet50:
        CONV2D -> BATCHNORM -> RELU -> MAXPOOL -> CONVBLOCK -> IDBLOCK*2 -> CONVBLOCK -> IDBLOCK*3
        -> CONVBLOCK -> IDBLOCK*5 -> CONVBLOCK -> IDBLOCK*2 -> AVGPOOL -> FLATTEN -> DENSE

        Arguments:
        input_shape -- shape of the images of the dataset
        classes -- integer, number of classes

        Returns:
        model -- a Model() instance in Keras
        """

        # Define the input as a tensor with shape input_shape
        X_input = Input(input_shape)

        # Zero-Padding
        X = ZeroPadding2D((3, 3))(X_input)

        # Stage 1
        X = Conv2D(64, (7, 7), strides = (2, 2), kernel_initializer = glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis = 3)(X)
        X = Activation('relu')(X)
        X = MaxPooling2D((3, 3), strides=(2, 2))(X)

        # Stage 2
        X = convolutional_block(X, f = 3, filters = [64, 64, 256], s = 1)
        X = identity_block(X, 3, [64, 64, 256])
        X = identity_block(X, 3, [64, 64, 256])

        ### START CODE HERE
        ## Stage 3 (≈4 lines)
        X = convolutional_block(X, f = 3, filters = [128, 128, 512], s = 2)
        X = identity_block(X, 3, [128, 128, 512])
        X = identity_block(X, 3, [128, 128, 512])
        X = identity_block(X, 3, [128, 128, 512])

        ## Stage 4 (≈6 lines)
        X = convolutional_block(X, f = 3, filters = [256, 256, 1024], s = 2)
        X = identity_block(X, 3, [256, 256, 1024])
        X = identity_block(X, 3, [256, 256, 1024])
        X = identity_block(X, 3, [256, 256, 1024])
        X = identity_block(X, 3, [256, 256, 1024])
        X = identity_block(X, 3, [256, 256, 1024])

        ## Stage 5 (≈3 lines)
        X = convolutional_block(X, f = 3, filters = [512, 512, 2048], s = 2)
        X = identity_block(X, 3, [512, 512, 2048])
        X = identity_block(X, 3, [512, 512, 2048])

        ## AVGPOOL (≈1 line). Use "X = AveragePooling2D(...)(X)"
        X = AveragePooling2D((2, 2))(X)
        ### END CODE HERE

        # output layer
        X = Flatten()(X)
        X = Dense(classes, activation='softmax', kernel_initializer = glorot_uniform(seed=0))(X)

        # Create model
        model = Model(inputs = X_input, outputs = X)

        return model

The model is now built. Let's look at its parameters:
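The "Param #" column that `model.summary()` prints can be sanity-checked by hand. The sketch below uses two hypothetical helpers and assumes every Conv2D layer has a bias and every BatchNormalization layer stores four values per channel (scale, offset, moving mean, moving variance).

```python
def conv2d_params(f, c_in, c_out):
    """Weights plus biases of an f x f convolution from c_in to c_out channels."""
    return f * f * c_in * c_out + c_out


def batchnorm_params(channels):
    """Scale, offset, moving mean, and moving variance for each channel."""
    return 4 * channels


stem_conv = conv2d_params(7, 3, 64)      # first 7x7 conv on RGB input
first_1x1 = conv2d_params(1, 64, 64)     # 1x1 conv, 64 -> 64 channels
middle_3x3 = conv2d_params(3, 64, 64)    # 3x3 conv, 64 -> 64 channels
last_1x1 = conv2d_params(1, 64, 256)     # 1x1 conv, 64 -> 256 channels
bn_64 = batchnorm_params(64)
print(stem_conv, first_1x1, middle_3x3, last_1x1, bn_64)
```

These values (9472, 4160, 36928, 16640, and 256) agree with the first few rows of the summary output for the stem and the Stage 2 convolutional block.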

    model = ResNet50(input_shape = (64, 64, 3), classes = 6)
    print(model.summary())

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 64, 64, 3)] 0

__________________________________________________________________________________________________

zero_padding2d (ZeroPadding2D) (None, 70, 70, 3) 0 input_1[0][0]

__________________________________________________________________________________________________

conv2d_20 (Conv2D) (None, 32, 32, 64) 9472 zero_padding2d[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 32, 32, 64) 256 conv2d_20[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 32, 32, 64) 0 batch_normalization_20[0][0]

__________________________________________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 15, 15, 64) 0 activation_18[0][0]

__________________________________________________________________________________________________

conv2d_21 (Conv2D) (None, 15, 15, 64) 4160 max_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 15, 15, 64) 256 conv2d_21[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 15, 15, 64) 0 batch_normalization_21[0][0]

__________________________________________________________________________________________________

conv2d_22 (Conv2D) (None, 15, 15, 64) 36928 activation_19[0][0]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 15, 15, 64) 256 conv2d_22[0][0]

__________________________________________________________________________________________________

activation_20 (Activation) (None, 15, 15, 64) 0 batch_normalization_22[0][0]

__________________________________________________________________________________________________

conv2d_23 (Conv2D) (None, 15, 15, 256) 16640 activation_20[0][0]

__________________________________________________________________________________________________

conv2d_24 (Conv2D) (None, 15, 15, 256) 16640 max_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 15, 15, 256) 1024 conv2d_23[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 15, 15, 256) 1024 conv2d_24[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 15, 15, 256) 0 batch_normalization_23[0][0]

batch_normalization_24[0][0]

__________________________________________________________________________________________________

activation_21 (Activation) (None, 15, 15, 256) 0 add_6[0][0]

__________________________________________________________________________________________________

conv2d_25 (Conv2D) (None, 15, 15, 64) 16448 activation_21[0][0]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 15, 15, 64) 256 conv2d_25[0][0]

__________________________________________________________________________________________________

activation_22 (Activation) (None, 15, 15, 64) 0 batch_normalization_25[0][0]

__________________________________________________________________________________________________

conv2d_26 (Conv2D) (None, 15, 15, 64) 36928 activation_22[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 15, 15, 64) 256 conv2d_26[0][0]

__________________________________________________________________________________________________

activation_23 (Activation) (None, 15, 15, 64) 0 batch_normalization_26[0][0]

__________________________________________________________________________________________________

conv2d_27 (Conv2D) (None, 15, 15, 256) 16640 activation_23[0][0]

__________________________________________________________________________________________________

batch_normalization_27 (BatchNo (None, 15, 15, 256) 1024 conv2d_27[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 15, 15, 256) 0 activation_21[0][0]

batch_normalization_27[0][0]

__________________________________________________________________________________________________

activation_24 (Activation) (None, 15, 15, 256) 0 add_7[0][0]

__________________________________________________________________________________________________

conv2d_28 (Conv2D) (None, 15, 15, 64) 16448 activation_24[0][0]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 15, 15, 64) 256 conv2d_28[0][0]

__________________________________________________________________________________________________

activation_25 (Activation) (None, 15, 15, 64) 0 batch_normalization_28[0][0]

__________________________________________________________________________________________________

conv2d_29 (Conv2D) (None, 15, 15, 64) 36928 activation_25[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 15, 15, 64) 256 conv2d_29[0][0]

__________________________________________________________________________________________________

activation_26 (Activation) (None, 15, 15, 64) 0 batch_normalization_29[0][0]

__________________________________________________________________________________________________

conv2d_30 (Conv2D) (None, 15, 15, 256) 16640 activation_26[0][0]

__________________________________________________________________________________________________

batch_normalization_30 (BatchNo (None, 15, 15, 256) 1024 conv2d_30[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 15, 15, 256) 0 activation_24[0][0]

batch_normalization_30[0][0]

__________________________________________________________________________________________________

activation_27 (Activation) (None, 15, 15, 256) 0 add_8[0][0]

__________________________________________________________________________________________________

conv2d_31 (Conv2D) (None, 8, 8, 128) 32896 activation_27[0][0]

__________________________________________________________________________________________________

batch_normalization_31 (BatchNo (None, 8, 8, 128) 512 conv2d_31[0][0]

__________________________________________________________________________________________________

activation_28 (Activation) (None, 8, 8, 128) 0 batch_normalization_31[0][0]

__________________________________________________________________________________________________

conv2d_32 (Conv2D) (None, 8, 8, 128) 147584 activation_28[0][0]

__________________________________________________________________________________________________

batch_normalization_32 (BatchNo (None, 8, 8, 128) 512 conv2d_32[0][0]

__________________________________________________________________________________________________

activation_29 (Activation) (None, 8, 8, 128) 0 batch_normalization_32[0][0]

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 8, 8, 512) 66048 activation_29[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 8, 8, 512) 131584 activation_27[0][0]

__________________________________________________________________________________________________

batch_normalization_33 (BatchNo (None, 8, 8, 512) 2048 conv2d_33[0][0]

__________________________________________________________________________________________________

batch_normalization_34 (BatchNo (None, 8, 8, 512) 2048 conv2d_34[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 8, 8, 512) 0 batch_normalization_33[0][0]

batch_normalization_34[0][0]

__________________________________________________________________________________________________

activation_30 (Activation) (None, 8, 8, 512) 0 add_9[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 8, 8, 128) 65664 activation_30[0][0]

__________________________________________________________________________________________________

batch_normalization_35 (BatchNo (None, 8, 8, 128) 512 conv2d_35[0][0]

__________________________________________________________________________________________________

activation_31 (Activation) (None, 8, 8, 128) 0 batch_normalization_35[0][0]

__________________________________________________________________________________________________

conv2d_36 (Conv2D) (None, 8, 8, 128) 147584 activation_31[0][0]

__________________________________________________________________________________________________

batch_normalization_36 (BatchNo (None, 8, 8, 128) 512 conv2d_36[0][0]

__________________________________________________________________________________________________

activation_32 (Activation) (None, 8, 8, 128) 0 batch_normalization_36[0][0]

__________________________________________________________________________________________________

conv2d_37 (Conv2D) (None, 8, 8, 512) 66048 activation_32[0][0]

__________________________________________________________________________________________________

batch_normalization_37 (BatchNo (None, 8, 8, 512) 2048 conv2d_37[0][0]

__________________________________________________________________________________________________

add_10 (Add) (None, 8, 8, 512) 0 activation_30[0][0]

batch_normalization_37[0][0]

__________________________________________________________________________________________________

activation_33 (Activation) (None, 8, 8, 512) 0 add_10[0][0]

__________________________________________________________________________________________________

conv2d_38 (Conv2D) (None, 8, 8, 128) 65664 activation_33[0][0]

__________________________________________________________________________________________________

batch_normalization_38 (BatchNo (None, 8, 8, 128) 512 conv2d_38[0][0]

__________________________________________________________________________________________________

activation_34 (Activation) (None, 8, 8, 128) 0 batch_normalization_38[0][0]

__________________________________________________________________________________________________

conv2d_39 (Conv2D) (None, 8, 8, 128) 147584 activation_34[0][0]

__________________________________________________________________________________________________

batch_normalization_39 (BatchNo (None, 8, 8, 128) 512 conv2d_39[0][0]

__________________________________________________________________________________________________

activation_35 (Activation) (None, 8, 8, 128) 0 batch_normalization_39[0][0]

__________________________________________________________________________________________________

conv2d_40 (Conv2D) (None, 8, 8, 512) 66048 activation_35[0][0]

__________________________________________________________________________________________________

batch_normalization_40 (BatchNo (None, 8, 8, 512) 2048 conv2d_40[0][0]

__________________________________________________________________________________________________

add_11 (Add) (None, 8, 8, 512) 0 activation_33[0][0]

batch_normalization_40[0][0]

__________________________________________________________________________________________________

activation_36 (Activation) (None, 8, 8, 512) 0 add_11[0][0]

__________________________________________________________________________________________________

conv2d_41 (Conv2D) (None, 8, 8, 128) 65664 activation_36[0][0]

__________________________________________________________________________________________________

batch_normalization_41 (BatchNo (None, 8, 8, 128) 512 conv2d_41[0][0]

__________________________________________________________________________________________________

activation_37 (Activation) (None, 8, 8, 128) 0 batch_normalization_41[0][0]

__________________________________________________________________________________________________

conv2d_42 (Conv2D) (None, 8, 8, 128) 147584 activation_37[0][0]

__________________________________________________________________________________________________

batch_normalization_42 (BatchNo (None, 8, 8, 128) 512 conv2d_42[0][0]

__________________________________________________________________________________________________

activation_38 (Activation) (None, 8, 8, 128) 0 batch_normalization_42[0][0]

__________________________________________________________________________________________________

conv2d_43 (Conv2D) (None, 8, 8, 512) 66048 activation_38[0][0]

__________________________________________________________________________________________________

batch_normalization_43 (BatchNo (None, 8, 8, 512) 2048 conv2d_43[0][0]

__________________________________________________________________________________________________

add_12 (Add) (None, 8, 8, 512) 0 activation_36[0][0]

batch_normalization_43[0][0]

__________________________________________________________________________________________________

activation_39 (Activation) (None, 8, 8, 512) 0 add_12[0][0]

__________________________________________________________________________________________________

conv2d_44 (Conv2D) (None, 4, 4, 256) 131328 activation_39[0][0]

__________________________________________________________________________________________________

batch_normalization_44 (BatchNo (None, 4, 4, 256) 1024 conv2d_44[0][0]

__________________________________________________________________________________________________

activation_40 (Activation) (None, 4, 4, 256) 0 batch_normalization_44[0][0]

__________________________________________________________________________________________________

conv2d_45 (Conv2D) (None, 4, 4, 256) 590080 activation_40[0][0]

__________________________________________________________________________________________________

batch_normalization_45 (BatchNo (None, 4, 4, 256) 1024 conv2d_45[0][0]

__________________________________________________________________________________________________

activation_41 (Activation) (None, 4, 4, 256) 0 batch_normalization_45[0][0]

__________________________________________________________________________________________________

conv2d_46 (Conv2D) (None, 4, 4, 1024) 263168 activation_41[0][0]

__________________________________________________________________________________________________

conv2d_47 (Conv2D) (None, 4, 4, 1024) 525312 activation_39[0][0]

__________________________________________________________________________________________________

batch_normalization_46 (BatchNo (None, 4, 4, 1024) 4096 conv2d_46[0][0]

__________________________________________________________________________________________________

batch_normalization_47 (BatchNo (None, 4, 4, 1024) 4096 conv2d_47[0][0]

__________________________________________________________________________________________________

add_13 (Add) (None, 4, 4, 1024) 0 batch_normalization_46[0][0]

batch_normalization_47[0][0]

__________________________________________________________________________________________________

activation_42 (Activation) (None, 4, 4, 1024) 0 add_13[0][0]

__________________________________________________________________________________________________

conv2d_48 (Conv2D) (None, 4, 4, 256) 262400 activation_42[0][0]

__________________________________________________________________________________________________

batch_normalization_48 (BatchNo (None, 4, 4, 256) 1024 conv2d_48[0][0]

__________________________________________________________________________________________________

activation_43 (Activation) (None, 4, 4, 256) 0 batch_normalization_48[0][0]

__________________________________________________________________________________________________

conv2d_49 (Conv2D) (None, 4, 4, 256) 590080 activation_43[0][0]

__________________________________________________________________________________________________

batch_normalization_49 (BatchNo (None, 4, 4, 256) 1024 conv2d_49[0][0]

__________________________________________________________________________________________________

activation_44 (Activation) (None, 4, 4, 256) 0 batch_normalization_49[0][0]

__________________________________________________________________________________________________

conv2d_50 (Conv2D) (None, 4, 4, 1024) 263168 activation_44[0][0]

__________________________________________________________________________________________________

batch_normalization_50 (BatchNo (None, 4, 4, 1024) 4096 conv2d_50[0][0]

__________________________________________________________________________________________________

add_14 (Add) (None, 4, 4, 1024) 0 activation_42[0][0]

batch_normalization_50[0][0]

__________________________________________________________________________________________________

activation_45 (Activation) (None, 4, 4, 1024) 0 add_14[0][0]

__________________________________________________________________________________________________

conv2d_51 (Conv2D) (None, 4, 4, 256) 262400 activation_45[0][0]

__________________________________________________________________________________________________

batch_normalization_51 (BatchNo (None, 4, 4, 256) 1024 conv2d_51[0][0]

__________________________________________________________________________________________________

activation_46 (Activation) (None, 4, 4, 256) 0 batch_normalization_51[0][0]

__________________________________________________________________________________________________

conv2d_52 (Conv2D) (None, 4, 4, 256) 590080 activation_46[0][0]

__________________________________________________________________________________________________

batch_normalization_52 (BatchNo (None, 4, 4, 256) 1024 conv2d_52[0][0]

__________________________________________________________________________________________________

activation_47 (Activation) (None, 4, 4, 256) 0 batch_normalization_52[0][0]

__________________________________________________________________________________________________

conv2d_53 (Conv2D) (None, 4, 4, 1024) 263168 activation_47[0][0]

__________________________________________________________________________________________________

batch_normalization_53 (BatchNo (None, 4, 4, 1024) 4096 conv2d_53[0][0]

__________________________________________________________________________________________________

add_15 (Add) (None, 4, 4, 1024) 0 activation_45[0][0]

batch_normalization_53[0][0]

__________________________________________________________________________________________________

activation_48 (Activation) (None, 4, 4, 1024) 0 add_15[0][0]

__________________________________________________________________________________________________

conv2d_54 (Conv2D) (None, 4, 4, 256) 262400 activation_48[0][0]

__________________________________________________________________________________________________

batch_normalization_54 (BatchNo (None, 4, 4, 256) 1024 conv2d_54[0][0]

__________________________________________________________________________________________________

activation_49 (Activation) (None, 4, 4, 256) 0 batch_normalization_54[0][0]

__________________________________________________________________________________________________

conv2d_55 (Conv2D) (None, 4, 4, 256) 590080 activation_49[0][0]

__________________________________________________________________________________________________

batch_normalization_55 (BatchNo (None, 4, 4, 256) 1024 conv2d_55[0][0]

__________________________________________________________________________________________________

activation_50 (Activation) (None, 4, 4, 256) 0 batch_normalization_55[0][0]

__________________________________________________________________________________________________

conv2d_56 (Conv2D) (None, 4, 4, 1024) 263168 activation_50[0][0]

__________________________________________________________________________________________________

batch_normalization_56 (BatchNo (None, 4, 4, 1024) 4096 conv2d_56[0][0]

__________________________________________________________________________________________________

add_16 (Add) (None, 4, 4, 1024) 0 activation_48[0][0]

batch_normalization_56[0][0]

__________________________________________________________________________________________________

activation_51 (Activation) (None, 4, 4, 1024) 0 add_16[0][0]

__________________________________________________________________________________________________

conv2d_57 (Conv2D) (None, 4, 4, 256) 262400 activation_51[0][0]

__________________________________________________________________________________________________

batch_normalization_57 (BatchNo (None, 4, 4, 256) 1024 conv2d_57[0][0]

__________________________________________________________________________________________________

activation_52 (Activation) (None, 4, 4, 256) 0 batch_normalization_57[0][0]

__________________________________________________________________________________________________

conv2d_58 (Conv2D) (None, 4, 4, 256) 590080 activation_52[0][0]

__________________________________________________________________________________________________

batch_normalization_58 (BatchNo (None, 4, 4, 256) 1024 conv2d_58[0][0]

__________________________________________________________________________________________________

activation_53 (Activation) (None, 4, 4, 256) 0 batch_normalization_58[0][0]

__________________________________________________________________________________________________

conv2d_59 (Conv2D) (None, 4, 4, 1024) 263168 activation_53[0][0]

__________________________________________________________________________________________________

batch_normalization_59 (BatchNo (None, 4, 4, 1024) 4096 conv2d_59[0][0]

__________________________________________________________________________________________________

add_17 (Add) (None, 4, 4, 1024) 0 activation_51[0][0]

batch_normalization_59[0][0]

__________________________________________________________________________________________________

activation_54 (Activation) (None, 4, 4, 1024) 0 add_17[0][0]

__________________________________________________________________________________________________

conv2d_60 (Conv2D) (None, 4, 4, 256) 262400 activation_54[0][0]

__________________________________________________________________________________________________

batch_normalization_60 (BatchNo (None, 4, 4, 256) 1024 conv2d_60[0][0]

__________________________________________________________________________________________________

activation_55 (Activation) (None, 4, 4, 256) 0 batch_normalization_60[0][0]

__________________________________________________________________________________________________

conv2d_61 (Conv2D) (None, 4, 4, 256) 590080 activation_55[0][0]

__________________________________________________________________________________________________

batch_normalization_61 (BatchNo (None, 4, 4, 256) 1024 conv2d_61[0][0]

__________________________________________________________________________________________________

activation_56 (Activation) (None, 4, 4, 256) 0 batch_normalization_61[0][0]

__________________________________________________________________________________________________

conv2d_62 (Conv2D) (None, 4, 4, 1024) 263168 activation_56[0][0]

__________________________________________________________________________________________________

batch_normalization_62 (BatchNo (None, 4, 4, 1024) 4096 conv2d_62[0][0]

__________________________________________________________________________________________________

add_18 (Add) (None, 4, 4, 1024) 0 activation_54[0][0]

batch_normalization_62[0][0]

__________________________________________________________________________________________________

activation_57 (Activation) (None, 4, 4, 1024) 0 add_18[0][0]

__________________________________________________________________________________________________

conv2d_63 (Conv2D) (None, 2, 2, 512) 524800 activation_57[0][0]

__________________________________________________________________________________________________

batch_normalization_63 (BatchNo (None, 2, 2, 512) 2048 conv2d_63[0][0]

__________________________________________________________________________________________________

activation_58 (Activation) (None, 2, 2, 512) 0 batch_normalization_63[0][0]

__________________________________________________________________________________________________

conv2d_64 (Conv2D) (None, 2, 2, 512) 2359808 activation_58[0][0]

__________________________________________________________________________________________________

batch_normalization_64 (BatchNo (None, 2, 2, 512) 2048 conv2d_64[0][0]

__________________________________________________________________________________________________

activation_59 (Activation) (None, 2, 2, 512) 0 batch_normalization_64[0][0]

__________________________________________________________________________________________________

conv2d_65 (Conv2D) (None, 2, 2, 2048) 1050624 activation_59[0][0]

__________________________________________________________________________________________________

conv2d_66 (Conv2D) (None, 2, 2, 2048) 2099200 activation_57[0][0]

__________________________________________________________________________________________________

batch_normalization_65 (BatchNo (None, 2, 2, 2048) 8192 conv2d_65[0][0]

__________________________________________________________________________________________________

batch_normalization_66 (BatchNo (None, 2, 2, 2048) 8192 conv2d_66[0][0]

__________________________________________________________________________________________________

add_19 (Add) (None, 2, 2, 2048) 0 batch_normalization_65[0][0]

batch_normalization_66[0][0]

__________________________________________________________________________________________________

activation_60 (Activation) (None, 2, 2, 2048) 0 add_19[0][0]

__________________________________________________________________________________________________

conv2d_67 (Conv2D) (None, 2, 2, 512) 1049088 activation_60[0][0]

__________________________________________________________________________________________________

batch_normalization_67 (BatchNo (None, 2, 2, 512) 2048 conv2d_67[0][0]

__________________________________________________________________________________________________

activation_61 (Activation) (None, 2, 2, 512) 0 batch_normalization_67[0][0]

__________________________________________________________________________________________________

conv2d_68 (Conv2D) (None, 2, 2, 512) 2359808 activation_61[0][0]

__________________________________________________________________________________________________

batch_normalization_68 (BatchNo (None, 2, 2, 512) 2048 conv2d_68[0][0]

__________________________________________________________________________________________________

activation_62 (Activation) (None, 2, 2, 512) 0 batch_normalization_68[0][0]

__________________________________________________________________________________________________

conv2d_69 (Conv2D) (None, 2, 2, 2048) 1050624 activation_62[0][0]

__________________________________________________________________________________________________

batch_normalization_69 (BatchNo (None, 2, 2, 2048) 8192 conv2d_69[0][0]

__________________________________________________________________________________________________

add_20 (Add) (None, 2, 2, 2048) 0 activation_60[0][0]

batch_normalization_69[0][0]

__________________________________________________________________________________________________

activation_63 (Activation) (None, 2, 2, 2048) 0 add_20[0][0]

__________________________________________________________________________________________________

conv2d_70 (Conv2D) (None, 2, 2, 512) 1049088 activation_63[0][0]

__________________________________________________________________________________________________

batch_normalization_70 (BatchNo (None, 2, 2, 512) 2048 conv2d_70[0][0]

__________________________________________________________________________________________________

activation_64 (Activation) (None, 2, 2, 512) 0 batch_normalization_70[0][0]

__________________________________________________________________________________________________

conv2d_71 (Conv2D) (None, 2, 2, 512) 2359808 activation_64[0][0]

__________________________________________________________________________________________________

batch_normalization_71 (BatchNo (None, 2, 2, 512) 2048 conv2d_71[0][0]

__________________________________________________________________________________________________

activation_65 (Activation) (None, 2, 2, 512) 0 batch_normalization_71[0][0]

__________________________________________________________________________________________________

conv2d_72 (Conv2D) (None, 2, 2, 2048) 1050624 activation_65[0][0]

__________________________________________________________________________________________________

batch_normalization_72 (BatchNo (None, 2, 2, 2048) 8192 conv2d_72[0][0]

__________________________________________________________________________________________________

add_21 (Add) (None, 2, 2, 2048) 0 activation_63[0][0]

batch_normalization_72[0][0]

__________________________________________________________________________________________________

activation_66 (Activation) (None, 2, 2, 2048) 0 add_21[0][0]

__________________________________________________________________________________________________

average_pooling2d (AveragePooli (None, 1, 1, 2048) 0 activation_66[0][0]

__________________________________________________________________________________________________

flatten (Flatten) (None, 2048) 0 average_pooling2d[0][0]

__________________________________________________________________________________________________

dense (Dense) (None, 6) 12294 flatten[0][0]

==================================================================================================

Total params: 23,600,006

Trainable params: 23,546,886

Non-trainable params: 53,120

__________________________________________________________________________________________________

None
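The parameter counts in the summary above can be checked by hand. The sketch below recomputes a few rows in plain Python under the usual assumptions: a Conv2D layer with bias has `kh * kw * c_in * c_out + c_out` parameters, a BatchNormalization layer stores 4 values per channel (gamma, beta, moving mean, moving variance; the last two are non-trainable), and a Dense layer has `n_in * n_out + n_out`. The helper names are invented for illustration, and the kernel sizes (1x1, 3x3, 1x1) are assumed from the standard ResNet50 bottleneck layout.

```python
def conv2d_params(kernel_h, kernel_w, c_in, c_out):
    # Weights plus one bias per output channel.
    return kernel_h * kernel_w * c_in * c_out + c_out

def batchnorm_params(channels):
    # gamma, beta, moving mean, moving variance for every channel.
    return 4 * channels

def dense_params(n_in, n_out):
    # Weight matrix plus one bias per output unit.
    return n_in * n_out + n_out

print(conv2d_params(1, 1, 1024, 256))  # conv2d_54: 262400
print(conv2d_params(3, 3, 256, 256))   # conv2d_55: 590080
print(conv2d_params(1, 1, 256, 1024))  # conv2d_56: 263168
print(batchnorm_params(1024))          # batch_normalization_56: 4096
print(dense_params(2048, 6))           # dense: 12294

# Trainable and non-trainable counts should add up to the reported total.
print(23_546_886 + 53_120)             # 23600006
```

The same formulas also reproduce the larger rows in the final stage, for example `conv2d_params(3, 3, 512, 512)` for `conv2d_64`.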

from outputs import ResNet50_summary
model = ResNet50(input_shape = (64, 64, 3), classes = 6)

With the model instantiated, we now compile it.

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
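For intuition about the `categorical_crossentropy` loss passed to `compile`, here is a minimal NumPy sketch of the per-example formula, `-sum(y * log(p))`, averaged over the batch. It is an illustration only, not Keras's implementation; the function name, the clipping constant, and the sample values are all invented.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip to avoid log(0); eps is an assumed value chosen for this sketch.
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    # Per-example loss is -sum over classes of y * log(p); average over the batch.
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

# One example whose true class is 1, with a made-up prediction vector.
y_true = np.array([[0, 1, 0, 0, 0, 0]], dtype=float)
y_pred = np.array([[0.05, 0.80, 0.05, 0.04, 0.03, 0.03]])

loss = categorical_crossentropy(y_true, y_pred)
print(loss)  # -log(0.80), about 0.223
```

With one-hot labels, only the probability assigned to the true class contributes to the loss, which is why the labels are converted to one-hot form before training.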

Next, we load the dataset and train the model.

X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = load_dataset()

# Normalize image vectors
X_train = X_train_orig / 255.
X_test = X_test_orig / 255.

# Convert training and test labels to one hot matrices
Y_train = convert_to_one_hot(Y_train_orig, 6).T
Y_test = convert_to_one_hot(Y_test_orig, 6).T

print("number of training examples = " + str(X_train.shape[0]))
print("number of test examples = " + str(X_test.shape[0]))
print("X_train shape: " + str(X_train.shape))
print("Y_train shape: " + str(Y_train.shape))
print("X_test shape: " + str(X_test.shape))
print("Y_test shape: " + str(Y_test.shape))

number of training examples = 1080
number of test examples = 120
X_train shape: (1080, 64, 64, 3)
Y_train shape: (1080, 6)
X_test shape: (120, 64, 64, 3)
Y_test shape: (120, 6)
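`convert_to_one_hot` comes from the course's `resnets_utils` module, whose source is not shown here. A minimal sketch of an equivalent helper, assuming the labels arrive as a `(1, m)` integer array and the helper returns shape `(C, m)` (which would explain why the calling code transposes the result), might look like this. The name `convert_to_one_hot_sketch` and the sample labels are hypothetical.

```python
import numpy as np

def convert_to_one_hot_sketch(Y, C):
    # Y: integer class labels with shape (1, m); returns an array of shape (C, m).
    # Indexing the identity matrix by label picks the matching one-hot row.
    return np.eye(C)[Y.reshape(-1)].T

Y_orig = np.array([[0, 5, 2, 1]])            # four made-up labels
Y = convert_to_one_hot_sketch(Y_orig, 6).T   # transpose to (m, C), as in the code above

print(Y.shape)  # (4, 6)
print(Y[1])     # [0. 0. 0. 0. 0. 1.]
```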

model.fit(X_train, Y_train, epochs = 10, batch_size = 32)

Epoch 1/10
34/34 [==============================] - 10s 129ms/step - loss: 2.2749 - accuracy: 0.4417
Epoch 2/10
34/34 [==============================] - 3s 92ms/step - loss: 1.0135 - accuracy: 0.6889
Epoch 3/10
34/34 [==============================] - 3s 92ms/step - loss: 0.3830 - accuracy: 0.8694
Epoch 4/10
34/34 [==============================] - 3s 91ms/step - loss: 0.2390 - accuracy: 0.9241
Epoch 5/10
34/34 [==============================] - 3s 91ms/step - loss: 0.1527 - accuracy: 0.9519
Epoch 6/10
34/34 [==============================] - 3s 91ms/step - loss: 0.1050 - accuracy: 0.9648
Epoch 7/10
34/34 [==============================] - 3s 91ms/step - loss: 0.1824 - accuracy: 0.9444
Epoch 8/10
34/34 [==============================] - 3s 92ms/step - loss: 0.5906 - accuracy: 0.8074
Epoch 9/10
34/34 [==============================] - 3s 91ms/step - loss: 0.4195 - accuracy: 0.8676
Epoch 10/10
34/34 [==============================] - 3s 91ms/step - loss: 0.3377 - accuracy: 0.9194
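The `34/34` in each epoch line is the number of mini-batches per epoch: 1080 training examples split into batches of 32, with the last batch partially filled. The short sketch below reproduces that arithmetic; the helper name is invented for illustration.

```python
import math

def steps_per_epoch(num_examples, batch_size):
    # The final batch may be smaller than batch_size, so round up.
    return math.ceil(num_examples / batch_size)

print(steps_per_epoch(1080, 32))  # 34
print(steps_per_epoch(120, 32))   # 4
```

The second value matches the `4/4` that `model.evaluate` prints for the 120 test images, since `evaluate` also uses a batch size of 32 when none is given.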

We now evaluate the model on the test set.

preds = model.evaluate(X_test, Y_test)
print("Loss = " + str(preds[0]))
print("Test Accuracy = " + str(preds[1]))

4/4 [==============================] - 1s 31ms/step - loss: 0.4844 - accuracy: 0.8417
Loss = 0.4843602478504181
Test Accuracy = 0.8416666388511658

The author has already trained a ResNet50 model on the hand-sign dataset. You can load and run the author's trained model with the code below:

pre_trained_model = tf.keras.models.load_model('resnet50.h5')

Then evaluate the author's trained weights on the test set:

preds = pre_trained_model.evaluate(X_test, Y_test)
print("Loss = " + str(preds[0]))
print("Test Accuracy = " + str(preds[1]))

Testing with your own image

img_path = 'C:/Users/Style/Desktop/kun.png'

# Load the image at the 64x64 resolution the model expects
img = image.load_img(img_path, target_size=(64, 64))
x = image.img_to_array(img)
x = np.expand_dims(x, axis=0)  # add the batch dimension
x = x/255.0                    # same normalization as the training data
print('Input image shape:', x.shape)
imshow(img)

prediction = model.predict(x)
print("Class prediction vector [p(0), p(1), p(2), p(3), p(4), p(5)] = ", prediction)
print("Class:", np.argmax(prediction))

Input image shape: (1, 64, 64, 3)
Class prediction vector [p(0), p(1), p(2), p(3), p(4), p(5)] = [[0.01301673 0.8742848 0.00662233 0.05449386 0.02079306 0.03078919]]
Class: 1
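To make the last step concrete, the sketch below takes the prediction vector printed above and shows how `np.argmax` turns it into a class label. It also reads off the probability the model assigned to that class and checks that the softmax outputs sum to approximately 1. The variable names `predicted_class` and `confidence` are illustrative only.

```python
import numpy as np

# Prediction vector copied from the output above, shape (1, 6).
prediction = np.array([[0.01301673, 0.8742848, 0.00662233,
                        0.05449386, 0.02079306, 0.03078919]])

# Index of the largest probability along the class axis.
predicted_class = int(np.argmax(prediction, axis=1)[0])
confidence = float(prediction[0, predicted_class])

print("Class:", predicted_class)   # Class: 1
print("Confidence:", confidence)
print("Sum of probabilities:", prediction.sum())
```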

pre_trained_model.summary()