How to use OpenCV + FFmpeg to open a camera and push an RTMP stream while displaying the video.

2022-12-15 15:00:21

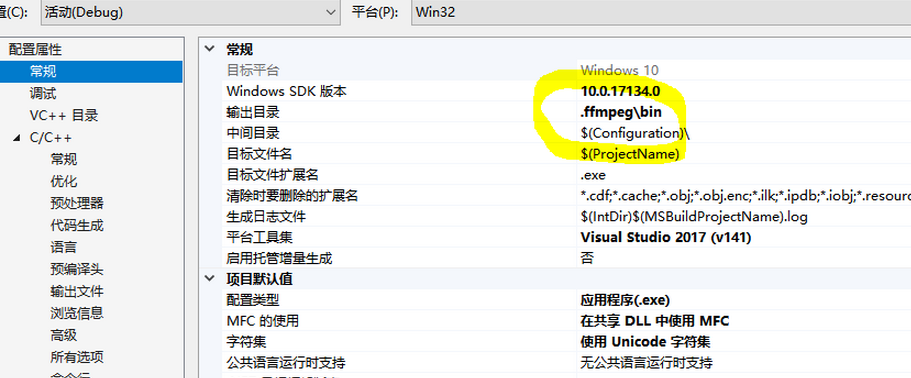

Note the following in the settings:

Code walkthrough:

1.

    const char* outUrl = "rtmp://localhost/live/livestream";

This address is the default address of AMS (Adobe Media Server).

2.

    // Register all codecs (deprecated since FFmpeg 4.0, removed in 5.0)
    avcodec_register_all();
    // Register all muxers and demuxers (deprecated since FFmpeg 4.0, removed in 5.0)
    av_register_all();
    // Initialize the network protocols
    avformat_network_init();

    // Camera and preview window
    VideoCapture cam;
    namedWindow("video");
    Mat frame;

    // Pixel format conversion context
    SwsContext* vsc = NULL;
    // Output frame (YUV)
    AVFrame* yuv = NULL;
    // Encoder context
    AVCodecContext* vc = NULL;
    // RTMP/FLV muxer context
    AVFormatContext* ic = NULL;

These variables are declared up front because OpenCV and FFmpeg need them to run properly.

3.

    try {
        /// 1. Open the camera
        // Camera 0 is opened by default; then query its parameters.
        cam.open(0);
        if (!cam.isOpened()) {
            // Note: exception(const char*) is an MSVC extension;
            // other compilers need std::runtime_error instead.
            throw exception("cam open failed");
        }
        cout << "cam open success" << endl;
        int inWidth = (int)cam.get(CAP_PROP_FRAME_WIDTH);
        int inHeight = (int)cam.get(CAP_PROP_FRAME_HEIGHT);
        int fps = (int)cam.get(CAP_PROP_FPS);
        if (fps == 0) {
            fps = 25; // fall back when the driver reports no frame rate
        }
        cout << fps << endl;

        /// 2. Initialize SwsContext (pixel format conversion context)
        vsc = sws_getCachedContext(vsc,
            inWidth, inHeight, AV_PIX_FMT_BGR24,   // source width, height, format
            inWidth, inHeight, AV_PIX_FMT_YUV420P, // target width, height, format
            SWS_BICUBIC,
            0, 0, 0);
        if (!vsc) {
            throw exception("sws_getCachedContext failed");
        }

        /// 3. Initialize the output frame
        yuv = av_frame_alloc();
        yuv->format = AV_PIX_FMT_YUV420P;
        yuv->width = inWidth;
        yuv->height = inHeight;
        yuv->pts = 0;
        // Allocate the YUV buffers
        int ret = av_frame_get_buffer(yuv, 32);
        if (ret != 0) {
            char buf[1024] = { 0 };
            av_strerror(ret, buf, sizeof(buf) - 1);
            throw exception(buf);
        }

        /// 4. Initialize the encoder context
        // a. Find the encoder (everything here is based on FFmpeg)
        const AVCodec* codec = avcodec_find_encoder(AV_CODEC_ID_H264);
        if (!codec) {
            throw exception("Can't find H.264 encoder");
        }
        // b. Create the encoder context
        vc = avcodec_alloc_context3(codec);
        if (!vc) {
            throw exception("avcodec_alloc_context3 failed");
        }
        // c. Set the encoder parameters
        vc->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
        vc->codec_id = codec->id;
        vc->thread_count = 8;
        vc->bit_rate = 50 * 1024 * 8; // compressed video size per second, in bits (50 KB)
        vc->width = inWidth;
        vc->height = inHeight;
        vc->time_base = { 1, fps };   // used to calculate pts: pts * time_base = seconds
        vc->framerate = { fps, 1 };
        vc->gop_size = 50;            // one I-frame (key frame) every 50 frames
        vc->max_b_frames = 0;         // without B-frames, decoding order equals presentation order
        vc->pix_fmt = AV_PIX_FMT_YUV420P;
        // d. Open the encoder context
        ret = avcodec_open2(vc, 0, 0);
        if (ret != 0) {
            char buf[1024] = { 0 };
            av_strerror(ret, buf, sizeof(buf) - 1);
            throw exception(buf);
        }
        cout << "avcodec_open2 succeeded!" << endl;

        /// 5. Set up the output muxer and the video stream
        // a. Create the muxer context
        ret = avformat_alloc_output_context2(&ic, 0, "flv", outUrl);
        if (ret != 0) {
            char buf[1024] = { 0 };
            av_strerror(ret, buf, sizeof(buf) - 1);
            throw exception(buf);
        }
        // b. Add the video stream
        AVStream* vs = avformat_new_stream(ic, NULL);
        if (!vs) {
            throw exception("avformat_new_stream failed");
        }
        // Copy the parameters from the encoder to the muxer,
        // then clear codec_tag (the copy would otherwise overwrite it)
        avcodec_parameters_from_context(vs->codecpar, vc);
        vs->codecpar->codec_tag = 0;
        av_dump_format(ic, 0, outUrl, 1);

        /// 6. Open the RTMP output IO
        ret = avio_open(&ic->pb, outUrl, AVIO_FLAG_WRITE);
        if (ret != 0) {
            char buf[1024] = { 0 };
            av_strerror(ret, buf, sizeof(buf) - 1);
            throw exception(buf);
        }
        // Write the muxer header.
        // After this call the stream's time_base is changed by the muxer;
        // it is no longer equal to vc->time_base.
        ret = avformat_write_header(ic, NULL);
        if (ret != 0) {
            char buf[1024] = { 0 };
            av_strerror(ret, buf, sizeof(buf) - 1);
            throw exception(buf);
        }

        AVPacket pack;
        memset(&pack, 0, sizeof(pack));
        int vpts = 0;
        // Main loop: read -> display -> convert and encode -> push
        for (;;) {
            /// Read a frame from the camera
            if (!cam.grab()) {
                continue;
            }
            if (!cam.retrieve(frame)) {
                continue;
            }
            imshow("video", frame);
            waitKey(1);

            /// Convert BGR to YUV
            // Input planes (srcSlice): packed BGR uses only plane 0
            uint8_t* indata[AV_NUM_DATA_POINTERS] = { 0 };
            indata[0] = frame.data;
            // Input strides (srcStride): number of bytes in one row
            int inlinesize[AV_NUM_DATA_POINTERS] = { 0 };
            inlinesize[0] = (int)(frame.cols * frame.elemSize());
            int h = sws_scale(vsc, indata, inlinesize, 0, frame.rows,
                yuv->data, yuv->linesize);
            if (h <= 0) {
                continue;
            }

            /// Encode the YUV frame to H.264
            yuv->pts = vpts;
            vpts++;
            ret = avcodec_send_frame(vc, yuv);
            if (ret != 0) {
                continue;
            }
            ret = avcodec_receive_packet(vc, &pack);
            if (ret != 0 || pack.size <= 0) {
                continue; // no packet available yet, e.g. AVERROR(EAGAIN)
            }
            cout << '*' << pack.size << flush;

            /// Push the stream
            pack.pts = av_rescale_q(pack.pts, vc->time_base, vs->time_base);
            pack.dts = av_rescale_q(pack.dts, vc->time_base, vs->time_base);
            ret = av_interleaved_write_frame(ic, &pack);
            if (ret == 0) {
                cout << '#' << flush;
            }
        }
    }
    catch (exception& ex) {
        if (cam.isOpened())
            cam.release();
        if (vsc) {
            sws_freeContext(vsc);
            vsc = NULL;
        }
        if (vc) {
            avcodec_free_context(&vc);
        }
        if (ic) { // ic may still be NULL if the failure happened before step 5
            avio_closep(&ic->pb);
        }
        cerr << ex.what() << endl;
    }
    getchar();
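The push step converts `pack.pts` and `pack.dts` from the encoder time base (`1/fps`) to the stream time base, which the FLV muxer typically sets to `1/1000` (milliseconds) once the header is written. The arithmetic behind that conversion can be sketched in plain C++ as below. `Rational` and `rescale_ts` are hypothetical stand-ins written for illustration; they are not FFmpeg's `AVRational` or `av_rescale_q`, and this sketch ignores the overflow handling the real function provides.

```cpp
#include <cstdint>

// Hypothetical stand-in for a time base: the fraction num/den, in seconds.
struct Rational {
    int num;
    int den;
};

// Convert a timestamp counted in units of `from` into units of `to`,
// rounding to the nearest integer (ties away from zero).
// Assumes positive time bases and values small enough not to overflow int64_t.
inline int64_t rescale_ts(int64_t ts, Rational from, Rational to) {
    const int64_t num = static_cast<int64_t>(from.num) * to.den;
    const int64_t den = static_cast<int64_t>(from.den) * to.num;
    const int64_t half = den / 2;
    return ts >= 0 ? (ts * num + half) / den
                   : -((-ts * num + half) / den);
}
```

With a 25 fps camera, frame number 1 in the `{1, 25}` time base becomes 40 in the `{1, 1000}` time base, that is, 40 ms after the first frame.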

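Solving every problem in a single block of code is probably not a good habit, so I need to make some changes.

Here the code has been encapsulated, which simplifies it to the following: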

    #include <opencv2/core.hpp>
    #include <opencv2/imgcodecs.hpp>
    #include <opencv2/highgui.hpp>
    #include <iostream>
    #include "XMediaEncode.h"
    #include "XRtmp.h"
    extern "C"
    {
    #include <libswscale/swscale.h>
    #include <libavcodec/avcodec.h>
    #include <libavformat/avformat.h>
    }
    using namespace cv;
    using namespace std;

    // Open an RTSP camera and initialize the pixel format context
    void test004()
    {
        // RTSP URL of the camera
        const char *inUrl = "rtsp://admin:@192.168.10.30:554/ch0_0.264";
        VideoCapture cam;
        namedWindow("video");
        // Pixel format conversion context
        SwsContext *vsc = NULL;
        try
        {
            /// 1 Open the RTSP camera with OpenCV
            cam.open(inUrl);
            if (!cam.isOpened())
            {
                // exception(const char*) is an MSVC extension
                throw exception("cam open failed!");
            }
            cout << inUrl << " cam open success" << endl;
            int inWidth = (int)cam.get(CAP_PROP_FRAME_WIDTH);
            int inHeight = (int)cam.get(CAP_PROP_FRAME_HEIGHT);
            int fps = (int)cam.get(CAP_PROP_FPS);

            /// 2 Initialize the format conversion context
            vsc = sws_getCachedContext(vsc,
                inWidth, inHeight, AV_PIX_FMT_BGR24,   // source width, height, pixel format
                inWidth, inHeight, AV_PIX_FMT_YUV420P, // target width, height, pixel format
                SWS_BICUBIC,                           // scaling algorithm
                0, 0, 0);
            if (!vsc)
            {
                throw exception("sws_getCachedContext failed!");
            }
            Mat frame;
            for (;;)
            {
                /// Read an RTSP video frame and decode it
                if (!cam.grab())
                {
                    continue;
                }
                /// Convert the decoded YUV frame to BGR
                if (!cam.retrieve(frame))
                {
                    continue;
                }
                imshow("video", frame);
                waitKey(1);
            }
        }
        catch (exception &ex)
        {
            if (cam.isOpened())
                cam.release();
            if (vsc)
            {
                sws_freeContext(vsc);
                vsc = NULL;
            }
            cerr << ex.what() << endl;
        }
        getchar();
    }

    // RTSP source to RTMP push (review this carefully)
    void test005()
    {
        cout << "void test005()!" << endl;
        // RTSP URL of the camera
        const char *inUrl = "rtsp://admin:@192.168.10.30:554/ch0_0.264";
        // RTMP push URL of the nginx-rtmp live server
        const char *outUrl = "rtmp://192.168.10.181/live";

        // Register all codecs (deprecated since FFmpeg 4.0, removed in 5.0)
        avcodec_register_all();
        // Register all muxers and demuxers (deprecated since FFmpeg 4.0, removed in 5.0)
        av_register_all();
        // Initialize the network protocols
        avformat_network_init();

        VideoCapture cam;
        Mat frame;
        namedWindow("video");
        // Pixel format conversion context
        SwsContext *vsc = NULL;
        // Output frame (YUV)
        AVFrame *yuv = NULL;
        // Encoder context
        AVCodecContext *vc = NULL;
        // RTMP/FLV muxer context
        AVFormatContext *ic = NULL;
        try
        {
            /// 1 Open the RTSP camera with OpenCV
            cam.open(inUrl);
            if (!cam.isOpened())
            {
                throw exception("cam open failed!");
            }
            cout << inUrl << " cam open success" << endl;
            int inWidth = (int)cam.get(CAP_PROP_FRAME_WIDTH);
            int inHeight = (int)cam.get(CAP_PROP_FRAME_HEIGHT);
            int fps = (int)cam.get(CAP_PROP_FPS);
            if (fps <= 0)
            {
                fps = 25; // avoid a zero denominator in time_base
            }

            /// 2 Initialize the format conversion context
            vsc = sws_getCachedContext(vsc,
                inWidth, inHeight, AV_PIX_FMT_BGR24,   // source width, height, pixel format
                inWidth, inHeight, AV_PIX_FMT_YUV420P, // target width, height, pixel format
                SWS_BICUBIC,                           // scaling algorithm
                0, 0, 0);
            if (!vsc)
            {
                throw exception("sws_getCachedContext failed!");
            }

            /// 3 Initialize the output frame
            yuv = av_frame_alloc();
            yuv->format = AV_PIX_FMT_YUV420P;
            yuv->width = inWidth;
            yuv->height = inHeight;
            yuv->pts = 0;
            // Allocate the YUV buffers
            int ret = av_frame_get_buffer(yuv, 32);
            if (ret != 0)
            {
                char buf[1024] = { 0 };
                av_strerror(ret, buf, sizeof(buf) - 1);
                throw exception(buf);
            }

            /// 4 Initialize the encoder context
            // a Find the encoder
            const AVCodec *codec = avcodec_find_encoder(AV_CODEC_ID_H264);
            if (!codec)
            {
                throw exception("Can`t find h264 encoder!");
            }
            // b Create the encoder context
            vc = avcodec_alloc_context3(codec);
            if (!vc)
            {
                throw exception("avcodec_alloc_context3 failed!");
            }
            // c Set the encoder parameters
            vc->flags |= AV_CODEC_FLAG_GLOBAL_HEADER; // global header
            vc->codec_id = codec->id;
            vc->thread_count = 8;
            vc->bit_rate = 50 * 1024 * 8; // compressed video size per second, in bits (50 KB)
            vc->width = inWidth;
            vc->height = inHeight;
            vc->time_base = { 1, fps };
            vc->framerate = { fps, 1 };
            // GOP size: one key frame every 50 frames
            vc->gop_size = 50;
            vc->max_b_frames = 0;
            vc->pix_fmt = AV_PIX_FMT_YUV420P;
            // d Open the encoder context
            ret = avcodec_open2(vc, 0, 0);
            if (ret != 0)
            {
                char buf[1024] = { 0 };
                av_strerror(ret, buf, sizeof(buf) - 1);
                throw exception(buf);
            }
            cout << "avcodec_open2 success!" << endl;

            /// 5 Set up the output muxer and the video stream
            // a Create the output muxer context
            ret = avformat_alloc_output_context2(&ic, 0, "flv", outUrl);
            if (ret != 0)
            {
                char buf[1024] = { 0 };
                av_strerror(ret, buf, sizeof(buf) - 1);
                throw exception(buf);
            }
            // b Add the video stream
            AVStream *vs = avformat_new_stream(ic, NULL);
            if (!vs)
            {
                throw exception("avformat_new_stream failed");
            }
            // Copy the parameters from the encoder, then clear codec_tag
            avcodec_parameters_from_context(vs->codecpar, vc);
            vs->codecpar->codec_tag = 0;
            av_dump_format(ic, 0, outUrl, 1);

            /// Open the RTMP network output IO
            ret = avio_open(&ic->pb, outUrl, AVIO_FLAG_WRITE);
            if (ret != 0)
            {
                char buf[1024] = { 0 };
                av_strerror(ret, buf, sizeof(buf) - 1);
                throw exception(buf);
            }
            // Write the muxer header
            ret = avformat_write_header(ic, NULL);
            if (ret != 0)
            {
                char buf[1024] = { 0 };
                av_strerror(ret, buf, sizeof(buf) - 1);
                throw exception(buf);
            }

            AVPacket pack;
            memset(&pack, 0, sizeof(pack));
            int vpts = 0;
            // Infinite loop
            for (;;)
            {
                /// Read an RTSP video frame and decode it
                if (!cam.grab())
                {
                    continue;
                }
                /// Convert the decoded YUV frame to BGR
                if (!cam.retrieve(frame))
                {
                    continue;
                }
                imshow("video", frame);
                waitKey(1);

                /// BGR to YUV
                // Input planes
                uint8_t *indata[AV_NUM_DATA_POINTERS] = { 0 };
                // packed: indata[0] = bgrbgrbgr
                // planar: indata[0] = bbbbb, indata[1] = ggggg, indata[2] = rrrrr
                indata[0] = frame.data;
                int insize[AV_NUM_DATA_POINTERS] = { 0 };
                // Number of bytes in one row
                insize[0] = (int)(frame.cols * frame.elemSize());
                int h = sws_scale(vsc, indata, insize, 0, frame.rows, // source data
                    yuv->data, yuv->linesize);
                if (h <= 0)
                {
                    continue;
                }
                cout << h << " " << flush;

                /// H.264 encoding
                yuv->pts = vpts;
                vpts++;
                ret = avcodec_send_frame(vc, yuv);
                if (ret != 0)
                    continue;
                ret = avcodec_receive_packet(vc, &pack);
                if (ret != 0 || pack.size <= 0)
                {
                    continue; // no packet available yet, e.g. AVERROR(EAGAIN)
                }
                cout << "*" << pack.size << flush;

                // Push the stream
                pack.pts = av_rescale_q(pack.pts, vc->time_base, vs->time_base);
                pack.dts = av_rescale_q(pack.dts, vc->time_base, vs->time_base);
                pack.duration = av_rescale_q(pack.duration, vc->time_base, vs->time_base);
                ret = av_interleaved_write_frame(ic, &pack);
                if (ret == 0)
                {
                    cout << "#" << flush;
                }
            }
        }
        catch (exception &ex)
        {
            if (cam.isOpened())
                cam.release();
            if (vsc)
            {
                sws_freeContext(vsc);
                vsc = NULL;
            }
            if (vc)
            {
                avcodec_free_context(&vc);
            }
            if (ic) // ic may still be NULL if the failure happened before step 5
            {
                avio_closep(&ic->pb);
            }
            cerr << ex.what() << endl;
        }
        getchar();
    }

    // opencv_rtsp_to_rtmp refactored into classes (review this carefully)
    void test006()
    {
        cout << "void test006()!" << endl;
        // RTSP URL of the camera
        const char *inUrl = "rtsp://admin:@192.168.10.30:554/ch0_0.264";
        // RTMP push URL of the nginx-rtmp live server
        const char *outUrl = "rtmp://192.168.10.181/live";

        // Encoder and pixel format conversion
        XMediaEncode *me = XMediaEncode::Get(0);
        // Muxing and push object
        XRtmp *xr = XRtmp::Get(0);

        VideoCapture cam;
        Mat frame;
        namedWindow("video");
        int ret = 0;
        try
        {
            /// 1 Open the RTSP camera with OpenCV
            cam.open(inUrl);
            if (!cam.isOpened())
            {
                throw exception("cam open failed!");
            }
            cout << inUrl << " cam open success" << endl;
            int inWidth = (int)cam.get(CAP_PROP_FRAME_WIDTH);
            int inHeight = (int)cam.get(CAP_PROP_FRAME_HEIGHT);
            int fps = (int)cam.get(CAP_PROP_FPS);

            /// 2 Initialize the format conversion context
            /// 3 Initialize the output frame
            me->inWidth = inWidth;
            me->inHeight = inHeight;
            me->outWidth = inWidth;
            me->outHeight = inHeight;
            me->InitScale();

            /// 4 Initialize the encoder context
            // a Find the encoder
            if (!me->InitVideoCodec())
            {
                throw exception("InitVideoCodec failed!");
            }

            /// 5 Set up the output muxer and the video stream
            xr->Init(outUrl);
            // Add the video stream
            xr->AddStream(me->vc);
            xr->SendHead();

            for (;;)
            {
                /// Read an RTSP video frame and decode it
                if (!cam.grab())
                {
                    continue;
                }
                /// Convert the decoded YUV frame to BGR
                if (!cam.retrieve(frame))
                {
                    continue;
                }
                //imshow("video", frame);
                //waitKey(1);

                /// BGR to YUV
                me->inPixSize = frame.elemSize();
                AVFrame *yuv = me->RGBToYUV((char*)frame.data);
                if (!yuv)
                    continue;

                /// H.264 encoding
                AVPacket *pack = me->EncodeVideo(yuv);
                if (!pack)
                    continue;

                xr->SendFrame(pack);
            }
        }
        catch (exception &ex)
        {
            if (cam.isOpened())
                cam.release();
            cerr << ex.what() << endl;
        }
        getchar();
    }

    int main(int argc, char *argv[])
    {
        //test000();
        //test001();
        //test002();
        //test003();
        //test004();
        //test005();
        test006();
        return 0;
    }
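Both the raw and the encapsulated versions pass one row stride for the packed BGR input (`frame.cols * frame.elemSize()`) and receive three planes for the YUV420P output. As a rough illustration of the buffer sizes involved, the sketch below computes them in plain C++. The names `bgr24_row_bytes`, `Yuv420pLayout` and `yuv420p_layout` are hypothetical helpers, not part of OpenCV or FFmpeg, and the sizes are for tightly packed data; `av_frame_get_buffer(yuv, 32)` may pad each row, so the real `yuv->linesize` values can be larger.

```cpp
#include <cstddef>

// Unpadded byte counts of the three planes of a YUV420P picture.
struct Yuv420pLayout {
    std::size_t y_bytes;
    std::size_t u_bytes;
    std::size_t v_bytes;
    std::size_t total() const { return y_bytes + u_bytes + v_bytes; }
};

// Bytes in one tightly packed BGR24 row: 3 bytes per pixel.
inline std::size_t bgr24_row_bytes(int width) {
    return static_cast<std::size_t>(width) * 3;
}

// YUV420P keeps a full-resolution Y plane and subsamples U and V by 2
// in both directions; odd dimensions are rounded up.
inline Yuv420pLayout yuv420p_layout(int width, int height) {
    const std::size_t w = static_cast<std::size_t>(width);
    const std::size_t h = static_cast<std::size_t>(height);
    const std::size_t cw = (w + 1) / 2; // chroma width
    const std::size_t ch = (h + 1) / 2; // chroma height
    return Yuv420pLayout{ w * h, cw * ch, cw * ch };
}
```

For a 640x480 frame this gives 1920 bytes per BGR row and 460800 bytes of YUV420P data, half the 921600 bytes of the BGR24 frame.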

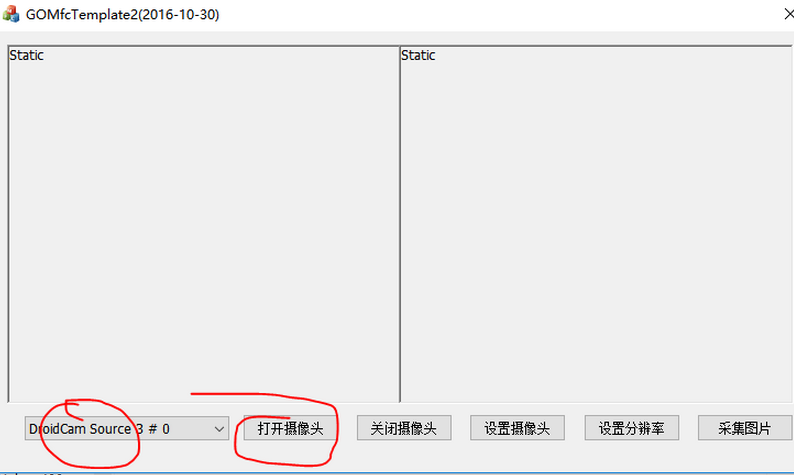

Steps:

----------

Select the camera and open it.

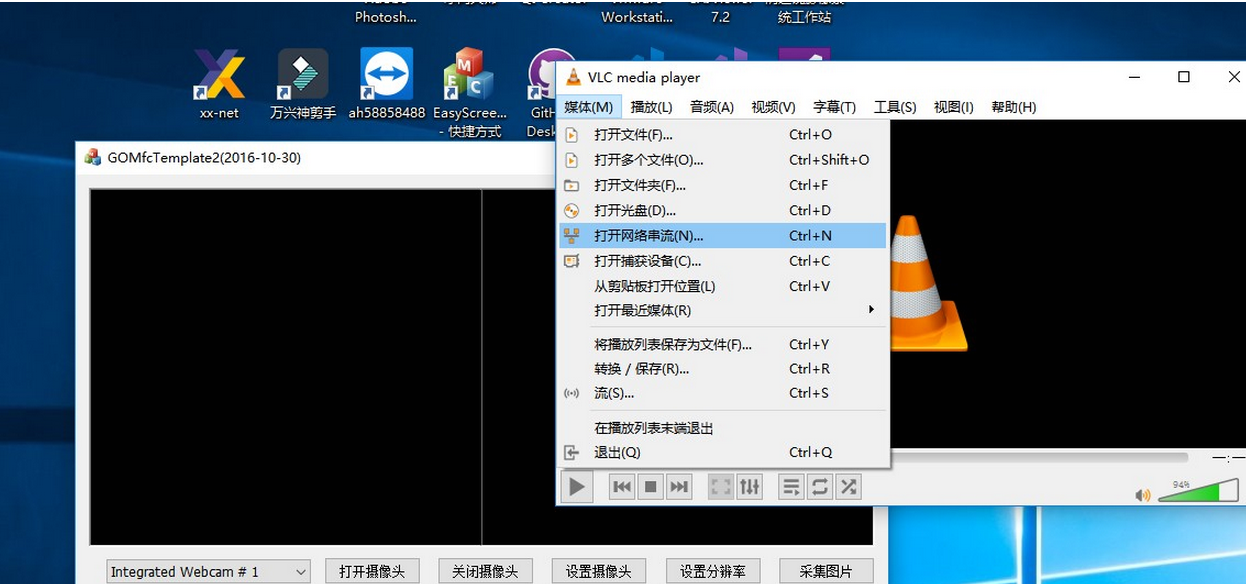

In VLC, open a network stream.

Enter:

rtmp://localhost/live/livestream
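The URL entered in VLC follows the common `rtmp://host/app/stream` layout: here `localhost` is the server, `live` is the application, and `livestream` is the stream name. The sketch below splits such a URL in plain C++ so the three parts can be checked on both the pushing and the playing side. `RtmpUrl` and `split_rtmp_url` are hypothetical helpers written for this illustration; they assume the simple layout above (a port, if present, stays in `host`) and are not how FFmpeg or VLC parse URLs.

```cpp
#include <cstddef>
#include <string>

// Parts of an RTMP URL of the form rtmp://host/app/stream.
struct RtmpUrl {
    std::string host;   // server name, including ":port" when present
    std::string app;    // application name, e.g. "live"
    std::string stream; // stream name; empty when the URL has none
    bool valid;         // true when at least host and app were found
};

inline RtmpUrl split_rtmp_url(const std::string& url) {
    RtmpUrl out{ "", "", "", false };
    const std::string scheme = "rtmp://";
    if (url.compare(0, scheme.size(), scheme) != 0) {
        return out; // not an rtmp:// URL
    }
    const std::size_t host_end = url.find('/', scheme.size());
    if (host_end == std::string::npos) {
        return out; // no application part
    }
    out.host = url.substr(scheme.size(), host_end - scheme.size());
    const std::size_t app_end = url.find('/', host_end + 1);
    if (app_end == std::string::npos) {
        out.app = url.substr(host_end + 1);
    } else {
        out.app = url.substr(host_end + 1, app_end - host_end - 1);
        out.stream = url.substr(app_end + 1);
    }
    out.valid = !out.host.empty() && !out.app.empty();
    return out;
}
```

The player has to use the same application and stream name that the pusher wrote to, so comparing the split parts of `outUrl` and the VLC URL is a quick sanity check when nothing plays.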

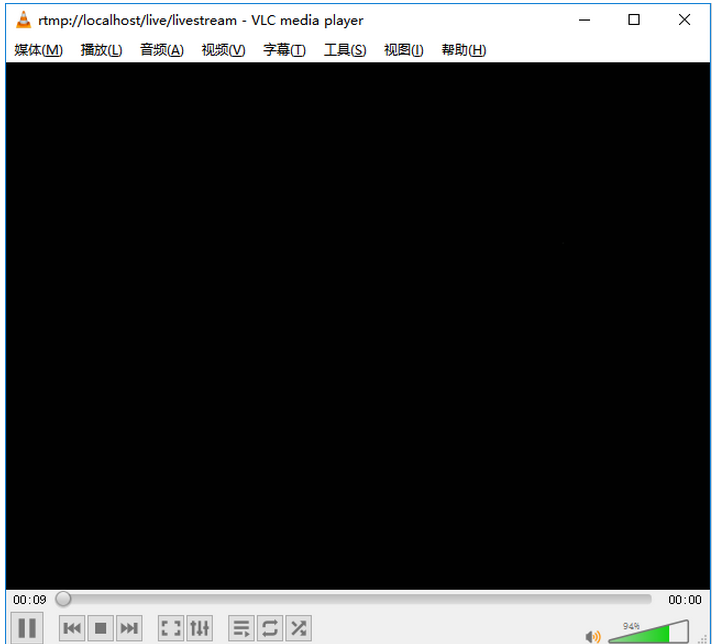

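The camera content is now streamed:

Reference: 《利用ffmpeg和opencv進行視訊的解碼播放》 (Decoding and playing video with ffmpeg and OpenCV)

Code from this article: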

https://files.cnblogs.com/files/blogs/758212/opencv_rtsp2rtmp-master.rar

https://files.cnblogs.com/files/blogs/758212/main.js