Implementing Remote Sensing Image Recognition (Annotation) Software

2022-12-14 12:01:06

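Remote sensing image recognition already has many mature models and implementations. Here we use yolov5_obb and the DOTA dataset to describe and implement one workflow: recognize first, then annotate, then train. Domain data is often closed, and annotating it from scratch is hard, so model transfer is needed. Starting from an already trained model, the software produces initial annotations; a human in the loop then corrects them to obtain accurate results, which are finally fed back into training. This yields a domain-specific model that solves the customized problem.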

I. Introduction to yolov5_obb

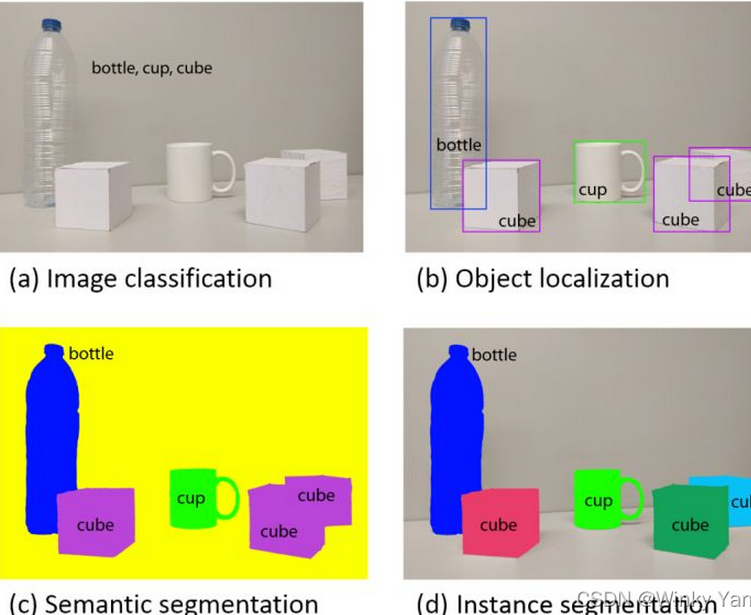

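It is generally held that image recognition in computer vision has four basic tasks:

(a) Image classification (object classification): whether an image contains a given kind of object.

(b) Object localization (object detection and recognition): determine each object's position and class.

(c) Semantic segmentation (object segmentation and classification): classify the image pixel by pixel, predicting the class of each pixel without distinguishing individual instances (all CUBEs share one color).

(d) Instance segmentation (object segmentation and recognition): locate every object in the image and label it at pixel level, distinguishing individual instances (each CUBE has a different color).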

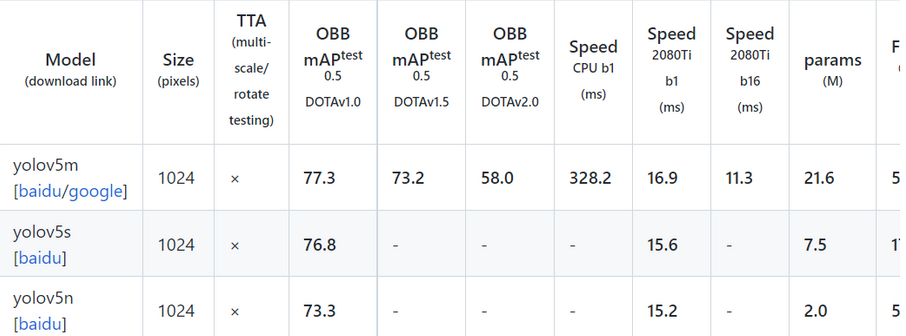

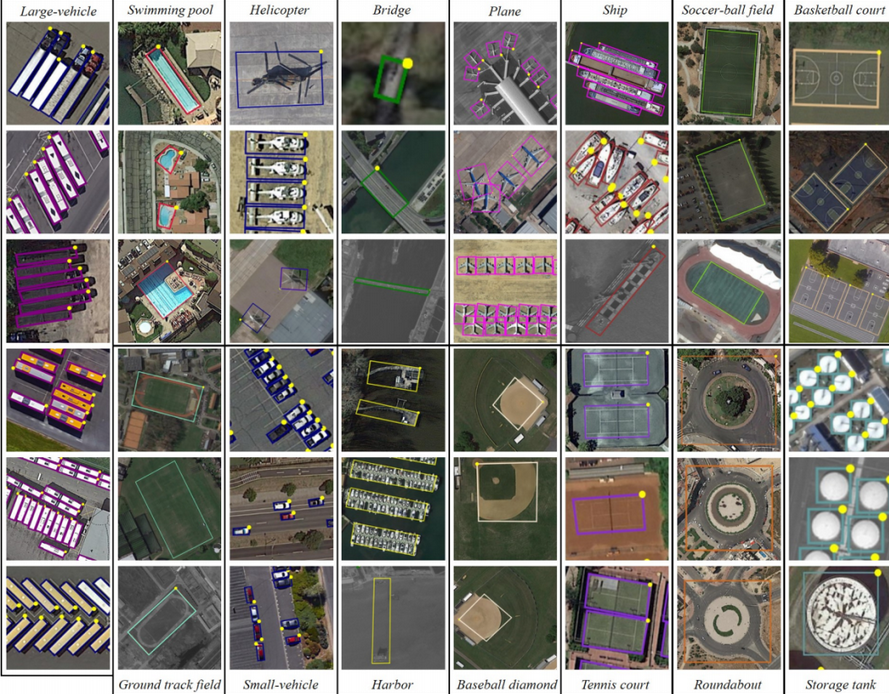

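Remote sensing image recognition falls under object localization. Methods are usually divided into two-stage and one-stage approaches, and YOLO is the typical one-stage implementation. Thanks to continued official support and extension, yolov5 is a relatively mature version. Built on it, yolov5_obb (hukaixuan19970627/yolov5_obb: yolov5 + csl_label, a YOLOv5_DOTA project for rotated object detection in UAV and remote sensing imagery) handles tilted objects in remote sensing datasets. Its author provides results for yolov5m/yolov5s/yolov5n trained for 150 epochs on the DOTA dataset, which can be used as the starting point for model transfer.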

For more on the DOTA dataset, see "DOTA dataset: 2,806 remote sensing images, nearly 190,000 annotated instances" (HyperAI blog on CSDN).

The CSL rotated annotation method deserves attention; see "Rotated Object Detection Methods Explained (CSL, ECCV2020)" on Zhihu (zhihu.com).
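To make the CSL idea concrete, here is a minimal sketch of how an angle can be turned into a circular smooth label and back. It assumes 180 one-degree bins, angles in [-90, 90), and an untruncated Gaussian window; the function names and the `sigma` value are illustrative choices, not taken from yolov5_obb or the paper.

```python
import math

def csl_encode(angle_deg, num_bins=180, sigma=4.0):
    """Return a circular smooth label for an angle in [-90, 90) degrees."""
    target = int(round(angle_deg + 90)) % num_bins  # bin index of the ground-truth angle
    label = []
    for i in range(num_bins):
        d = abs(i - target)
        d = min(d, num_bins - d)  # circular distance: bin 0 and bin 179 are neighbours
        label.append(math.exp(-(d * d) / (2.0 * sigma * sigma)))
    return label

def csl_decode(label, num_bins=180):
    """Recover the angle in degrees from the arg-max bin."""
    best = max(range(num_bins), key=lambda i: label[i])
    return best - 90

label = csl_encode(-90)
print(label[1] == label[179])       # True: the window wraps around the boundary
print(csl_decode(csl_encode(37)))   # 37
```

The point of the circular distance is that an angle just below 90 degrees and one at -90 degrees receive nearly identical labels, so the classifier is not penalized heavily at the boundary.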

II. Model Inference (C++ Implementation)

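Based on the initially trained results, the next step is to display annotation results. Implementing model inference is relatively hard, mainly because effective debugging tools are lacking during implementation. Modifying code that already runs successfully is an effective way around this; hpc203 has reworked the inference code and provides many examples. Note that yolov5_obb itself modifies the yolov5 code, so all the preceding steps must be based on yolov5_obb. The relevant material is organized below: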

1. yolov5_Dota (yolov5_Dota | Kaggle): this is the training tool. It requires a specific torch version and can be run directly on Kaggle.

2. https://gitee.com/jsxyhelu2020/yolov5_obb_static.git: this is the modified code:

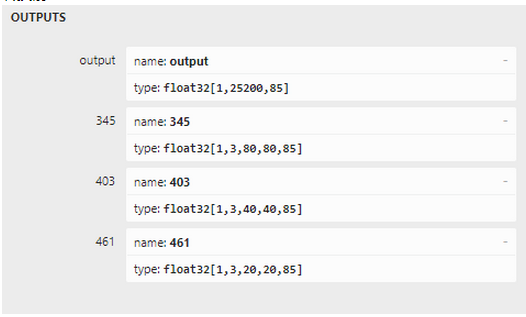

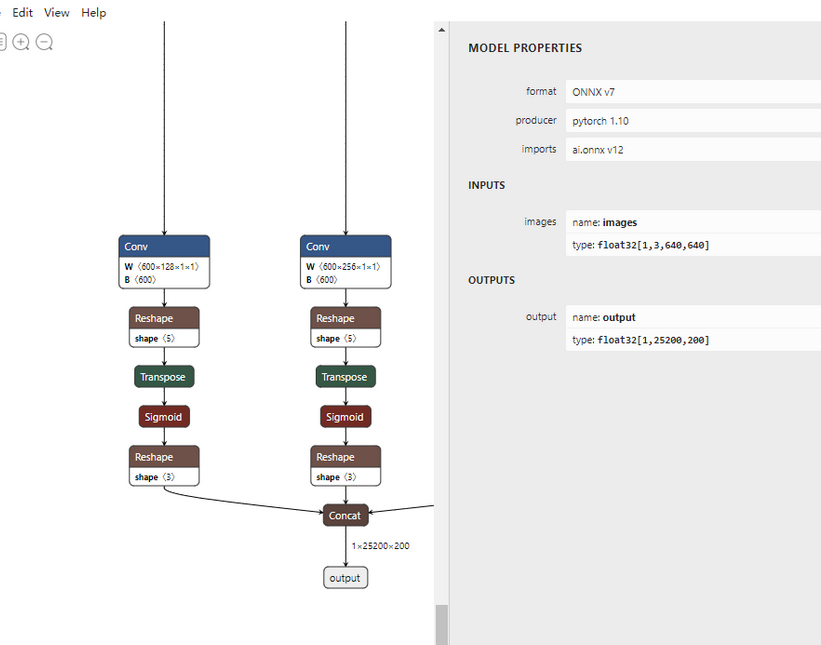

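3. Judging from hpc203's modifications, the model output is merged from four outputs into one; this can be inspected with netron.

Before conversion:

After conversion: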

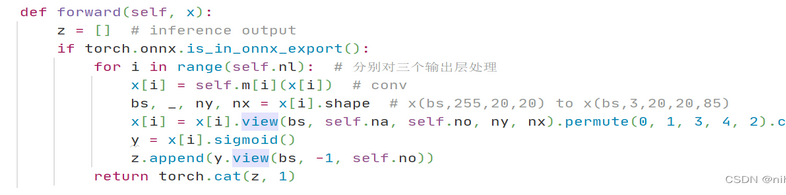

4. hpc203's change to ONNX file generation: in models/yolo.py, go to the forward function of the Detect class and insert the following code:

    if torch.onnx.is_in_onnx_export():
        for i in range(self.nl):  # process each of the three output layers
            x[i] = self.m[i](x[i])  # conv
            bs, _, ny, nx = x[i].shape  # x(bs,255,20,20) to x(bs,3,20,20,85)
            x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()
            y = x[i].sigmoid()
            z.append(y.view(bs, -1, self.no))
        return torch.cat(z, 1)

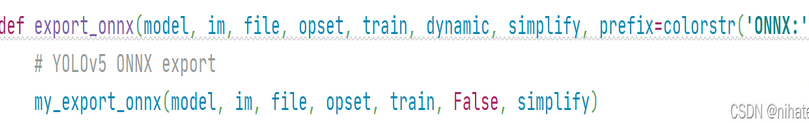
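The size of the merged output can be checked by hand. The sketch below counts the rows produced when every detection head is flattened and concatenated along dimension 1, as in the snippet above. It assumes three heads with strides 8, 16 and 32 and three anchors per grid cell, the usual yolov5s layout; `merged_output_rows` is a hypothetical helper name.

```python
def merged_output_rows(img_size=640, strides=(8, 16, 32), anchors_per_cell=3):
    """Count rows after concatenating all detection heads along dim 1."""
    rows = 0
    for s in strides:
        cells = (img_size // s) ** 2  # grid cells at this stride
        rows += anchors_per_cell * cells
    return rows

print(merged_output_rows(640))  # 3 * (80*80 + 40*40 + 20*20) = 25200
```

Seeing a different row count in netron is a quick hint that the input size or the number of heads is not what was expected.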

In export.py, a custom function for exporting the ONNX file is defined. The code fragment is as follows:

    def my_export_onnx(model, im, file, opset, train, dynamic, simplify, prefix=colorstr('ONNX:')):
        print('anchors:', model.yaml['anchors'])
        # wtxt = open('class.names', 'w')
        # for name in model.names:
        #     wtxt.write(name+'\n')
        # wtxt.close()
        # YOLOv5 ONNX export
        print(im.shape)
        if not dynamic:
            f = os.path.splitext(file)[0] + '.onnx'
            torch.onnx.export(model, im, f, verbose=False, opset_version=12,
                              input_names=['images'], output_names=['output'])
        else:
            f = os.path.splitext(file)[0] + '_dynamic.onnx'
            torch.onnx.export(model, im, f, verbose=False, opset_version=12,
                              input_names=['images'], output_names=['output'],
                              dynamic_axes={'images': {0: 'batch', 2: 'height', 3: 'width'},  # shape(1,3,640,640)
                                            'output': {0: 'batch', 1: 'anchors'}  # shape(1,25200,85)
                                            })
        try:
            # Check that OpenCV's dnn module can load the exported file
            import cv2
            net = cv2.dnn.readNet(f)
        except Exception:
            exit(f'export {f} failed')
        exit(f'export {f} success')

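Insert a call to this function inside the officially defined export_onnx function, then run the following in a terminal:

    python export.py --weights=yolov5s.pt --include=onnx --imgsz=640
    python export.py --weights=yolov5s6.pt --include=onnx --imgsz=1280

This generates the .onnx file, and OpenCV's dnn module can read it for inference. Both changes are shallow: they only touch the input and output layers, but they do what is needed.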
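Once the export yields a single output tensor, each row has to be split into its parts on the inference side. The following is a minimal sketch under stated assumptions: each row is laid out as `[cx, cy, w, h, objectness, class scores, angle bins]` with 15 DOTA classes and 180 angle bins, and the arg-max angle bin maps to radians as `(bin - 90) / 180 * pi`. This layout is inferred from yolov5_obb conventions and should be verified against the exported model; whether the box values are already in pixels depends on the export. `split_obb_row` is a hypothetical helper.

```python
import math

def split_obb_row(row, num_classes=15, num_angle_bins=180):
    """Split one output row into box, score, class id and angle (radians)."""
    assert len(row) == 5 + num_classes + num_angle_bins
    box = row[0:4]                        # cx, cy, w, h (assumed layout)
    objectness = row[4]
    class_scores = row[5:5 + num_classes]
    angle_scores = row[5 + num_classes:]
    class_id = max(range(num_classes), key=lambda i: class_scores[i])
    angle_bin = max(range(num_angle_bins), key=lambda i: angle_scores[i])
    theta = (angle_bin - 90) / 180.0 * math.pi   # bin 0 -> -pi/2, bin 90 -> 0
    score = objectness * class_scores[class_id]
    return box, score, class_id, theta

# Synthetic row: class 3 and angle bin 90 are the strongest responses.
row = [320.0, 240.0, 80.0, 20.0, 0.9] + [0.0] * 15 + [0.0] * 180
row[5 + 3] = 0.8
row[5 + 15 + 90] = 1.0
box, score, class_id, theta = split_obb_row(row)
print(box, class_id, theta)
```

In a C++ port the same slicing applies to the flat float buffer returned by the dnn module, with the row length used as the stride.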

III. Recognition (Annotation) Software Design

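Because the tool needs a lot of interaction, the interface is written in C#. It is based on GOCW and calls OpenCV through the CLR; both are fairly mature approaches. For more on GOCW, see GitHub. During implementation, the software interface was refined further.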

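The buttons correspond to the following functions:

Open: open an image file

Screenshot: capture the image from the screen

Run inference: call the recognition algorithm

Get annotations: obtain the annotation data; this is described in the next section

Save: save the result

Compare: compare the state before and after annotation

Revert: return to the state before annotation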

See the video for the detailed operation of the software.

IV. Data Annotation

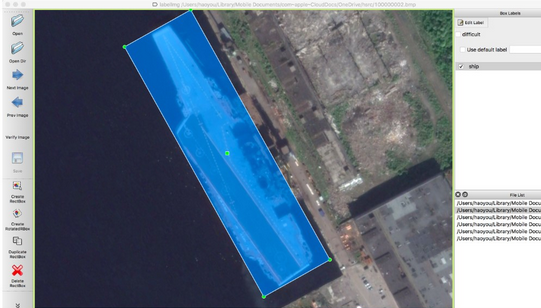

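Building on the existing pre-recognition of the data, the recognition results need further annotation and correction. I considered developing this functionality myself but quickly dropped the idea.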

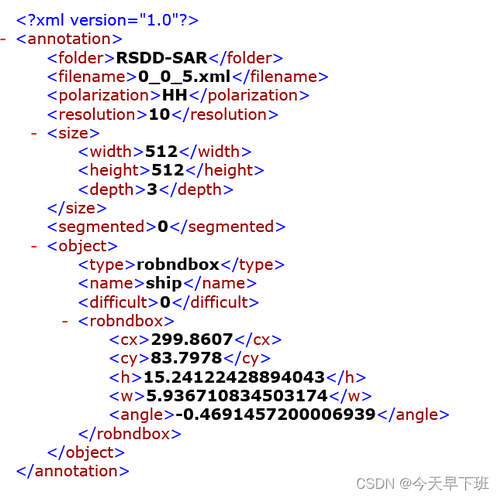

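Implementing a format conversion and relying on an existing tool is the more reasonable approach. After some research, I found that roLabelImg is a dedicated tool for rotated annotation: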

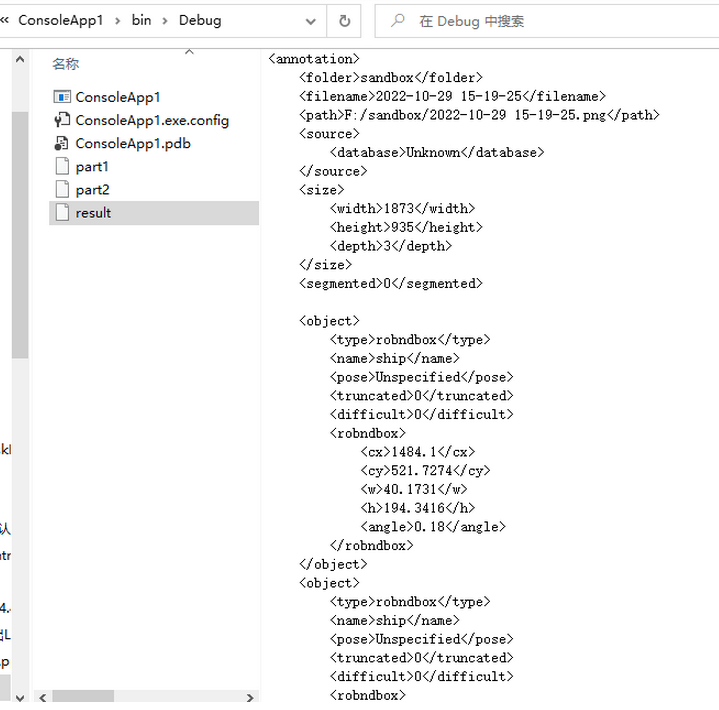

Its data format looks like this:

    <annotation verified="yes">
      <folder>hsrc</folder>
      <filename>100000001</filename>
      <path>/Users/haoyou/Library/Mobile Documents/com~apple~CloudDocs/OneDrive/hsrc/100000001.bmp</path>
      <source>
        <database>Unknown</database>
      </source>
      <size>
        <width>1166</width>
        <height>753</height>
        <depth>3</depth>
      </size>
      <segmented>0</segmented>
      <object>
        <type>bndbox</type>
        <name>ship</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <bndbox>
          <xmin>178</xmin>
          <ymin>246</ymin>
          <xmax>974</xmax>
          <ymax>504</ymax>
        </bndbox>
      </object>
      <object>
        <type>robndbox</type>
        <name>ship</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <robndbox>
          <cx>580.7887</cx>
          <cy>343.2913</cy>
          <w>775.0449</w>
          <h>170.2159</h>
          <angle>2.889813</angle>
        </robndbox>
      </object>
    </annotation>
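As a quick illustration of how this format can be consumed, the sketch below reads the rotated boxes back with Python's standard `xml.etree.ElementTree`. The embedded sample is a shortened copy of the annotation above, and `read_rotated_boxes` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

SAMPLE = """<annotation verified="yes">
  <filename>100000001</filename>
  <object>
    <type>bndbox</type>
    <name>ship</name>
    <bndbox><xmin>178</xmin><ymin>246</ymin><xmax>974</xmax><ymax>504</ymax></bndbox>
  </object>
  <object>
    <type>robndbox</type>
    <name>ship</name>
    <robndbox><cx>580.7887</cx><cy>343.2913</cy><w>775.0449</w><h>170.2159</h><angle>2.889813</angle></robndbox>
  </object>
</annotation>"""

def read_rotated_boxes(xml_text):
    """Return (name, cx, cy, w, h, angle) for every robndbox object."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        rb = obj.find("robndbox")
        if rb is None:  # skip axis-aligned bndbox entries
            continue
        values = [float(rb.find(tag).text) for tag in ("cx", "cy", "w", "h", "angle")]
        boxes.append((obj.find("name").text, *values))
    return boxes

boxes = read_rotated_boxes(SAMPLE)
print(boxes)
```

Note that the `angle` value is stored in radians (2.889813 is about 165.6 degrees), which matters when converting to formats that expect degrees.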

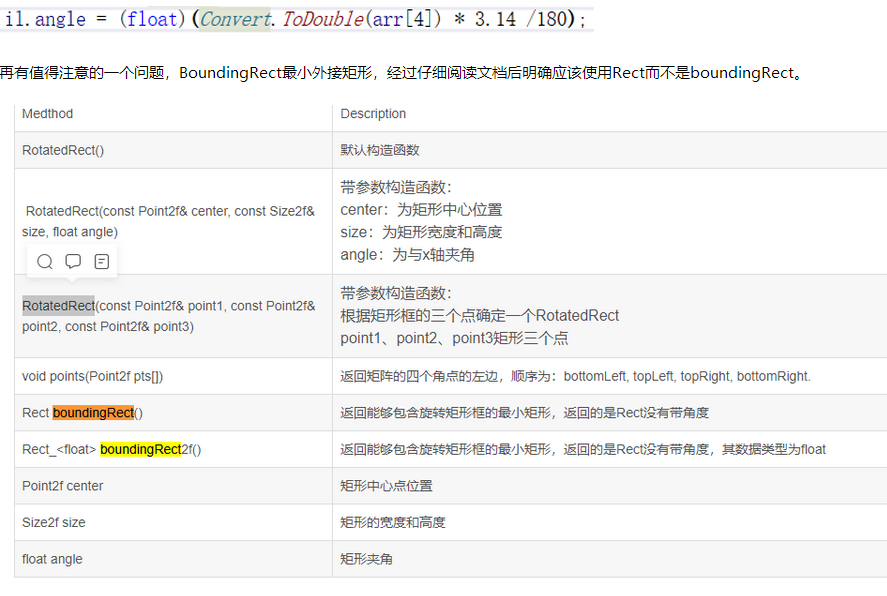

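The current C# recognition (annotation) tool therefore needs to generate data in this format. I use XmlWriter combined with text processing to merge the pieces; the result is not efficient, but it is stable and controllable.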

    using System;
    using System.Collections.Generic;
    using System.IO;
    using System.Text;
    using System.Xml;
    using System.Xml.Serialization;

    namespace ConsoleApp1
    {
        [XmlRoot("annotation")]
        public class AnnotationHead
        {
            public string folder;
            public string filename;
            public string path;
            public Source source;
            public Size size;
            public string segmented;
        }

        public class Size
        {
            public string width;
            public string height;
            public string depth;
        }

        public class Source
        {
            public string database;
        }

        [XmlRoot("object")]
        public class @object
        {
            public string type;
            public string name;
            public string pose;
            public string truncated;
            public string difficult;
            public robndClass robndbox;
        }

        public class robndClass
        {
            public float cx;
            public float cy;
            public float w;
            public float h;
            public float angle;
        }

        public class Test
        {
            public static void Main()
            {
                // Output rules
                XmlWriterSettings settings = new XmlWriterSettings();
                settings.Indent = true;
                settings.IndentChars = " ";
                settings.NewLineChars = "\r\n";
                settings.Encoding = Encoding.UTF8;
                settings.OmitXmlDeclaration = true; // do not emit the XML declaration
                XmlSerializerNamespaces namespaces = new XmlSerializerNamespaces();
                namespaces.Add(string.Empty, string.Empty);

                // Output target
                FileStream stream = new FileStream("part1.xml", FileMode.Create);

                ///////////////////////// Part1: annotation header /////////////////////////
                AnnotationHead an = new AnnotationHead();
                an.folder = "sandbox";
                an.filename = "2022-10-29 15-19-25";
                an.path = "F:/sandbox/2022-10-29 15-19-25.png";
                Source source = new Source();
                source.database = "Unknown";
                an.source = source;
                Size size = new Size();
                size.width = "1873";
                size.height = "935";
                size.depth = "3";
                an.size = size;
                an.segmented = "0";

                // Write the header
                XmlWriter xmlWriter = XmlWriter.Create(stream, settings);
                XmlSerializer serializer = new XmlSerializer(typeof(AnnotationHead));
                serializer.Serialize(xmlWriter, an, namespaces);

                // Release the writer and stream
                xmlWriter.Close();
                stream.Close();

                ///////////////////////// Part2: object list /////////////////////////
                stream = new FileStream("part2.xml", FileMode.Create);

                // Define a rotated box
                robndClass il = new robndClass();
                il.cx = (float)1484.1;
                il.cy = (float)521.7274;
                il.w = (float)40.1731;
                il.h = (float)194.3416;
                il.angle = (float)0.18;
                @object o = new @object();
                o.type = "robndbox";
                o.name = "ship";
                o.pose = "Unspecified";
                o.truncated = "0";
                o.difficult = "0";
                o.robndbox = il;
                List<@object> objectList = new List<@object>();
                objectList.Add(o);
                objectList.Add(o);
                objectList.Add(o);

                serializer = new XmlSerializer(typeof(List<@object>));
                XmlWriter xmlWriter2 = XmlWriter.Create(stream, settings);
                serializer.Serialize(xmlWriter2, objectList, namespaces);
                xmlWriter2.Close();
                stream.Close();

                ///////////////////////// Merge /////////////////////////
                StreamReader sr = new StreamReader("part1.xml");
                string strPart1 = sr.ReadToEnd();
                // Drop the trailing "</annotation>" (13 characters)
                strPart1 = strPart1.Substring(0, strPart1.Length - 13);
                sr.Close();

                sr = new StreamReader("part2.xml");
                string strPart2 = sr.ReadToEnd();
                // Drop the list's root element: 15 characters at the start, 16 at the end
                strPart2 = strPart2.Substring(15, strPart2.Length - 31);
                sr.Close();

                string strOut = strPart1 + strPart2 + "</annotation>";

                ///////////////////////// Output /////////////////////////
                using (StreamWriter sw = new StreamWriter("result.xml"))
                {
                    sw.Write(strOut);
                }
            }
        }
    }

Further refactoring and consolidation:

    // Calling code
    public class Test
    {
        public static void Main()
        {
            AnnotationHead an = new AnnotationHead();
            an.folder = "sandbox";
            an.filename = "2022-10-29 15-19-25";
            an.path = "F:/sandbox/2022-10-29 15-19-25.png";
            Source source = new Source();
            source.database = "Unknown";
            an.source = source;
            Size size = new Size();
            size.width = "18443";
            size.height = "935";
            size.depth = "3";
            an.size = size;
            an.segmented = "0";

            robndClass il = new robndClass();
            il.cx = (float)1484.1;
            il.cy = (float)521.7274;
            il.w = (float)40.1731;
            il.h = (float)194.3416;
            il.angle = (float)0.18;
            @object o = new @object();
            o.type = "robndbox";
            o.name = "ship";
            o.pose = "Unspecified";
            o.truncated = "0";
            o.difficult = "0";
            o.robndbox = il;
            List<@object> objectList = new List<@object>();
            objectList.Add(o);
            objectList.Add(o);
            objectList.Add(o);
            objectList.Add(o);
            objectList.Add(o);

            AnnotationClass ac = new AnnotationClass(an, objectList, "r2.xml");
            ac.action();
        }
    }

    // Data classes and AnnotationClass
    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;
    using System.Xml;
    using System.IO;
    using System.Xml.Serialization;

    namespace ConsoleApp1
    {
        [XmlRoot("annotation")]
        public class AnnotationHead
        {
            public string folder;
            public string filename;
            public string path;
            public Source source;
            public Size size;
            public string segmented;
        }

        public class Size
        {
            public string width;
            public string height;
            public string depth;
        }

        public class Source
        {
            public string database;
        }

        [XmlRoot("object")]
        public class @object
        {
            public string type;
            public string name;
            public string pose;
            public string truncated;
            public string difficult;
            public robndClass robndbox;
        }

        public class robndClass
        {
            public float cx;
            public float cy;
            public float w;
            public float h;
            public float angle;
        }

        class AnnotationClass
        {
            private string strOutName; // output file name
            private AnnotationHead an;
            private List<@object> objectList;

            // Constructor
            public AnnotationClass(AnnotationHead annotationHead, List<@object> olist, string strName = "result.xml")
            {
                an = annotationHead;
                strOutName = strName;
                objectList = olist;
            }

            public void action()
            {
                // Output rules
                XmlWriterSettings settings = new XmlWriterSettings();
                settings.Indent = true;
                settings.IndentChars = " ";
                settings.NewLineChars = "\r\n";
                settings.Encoding = Encoding.UTF8;
                settings.OmitXmlDeclaration = true; // do not emit the XML declaration
                XmlSerializerNamespaces namespaces = new XmlSerializerNamespaces();
                namespaces.Add(string.Empty, string.Empty);

                // Output target
                FileStream stream = new FileStream("part1.xml", FileMode.Create);

                ///////////////////////// Part1: annotation header /////////////////////////
                XmlWriter xmlWriter = XmlWriter.Create(stream, settings);
                XmlSerializer serializer = new XmlSerializer(typeof(AnnotationHead));
                serializer.Serialize(xmlWriter, an, namespaces);

                // Release the writer and stream
                xmlWriter.Close();
                stream.Close();

                ///////////////////////// Part2: object list /////////////////////////
                stream = new FileStream("part2.xml", FileMode.Create);
                serializer = new XmlSerializer(typeof(List<@object>));
                XmlWriter xmlWriter2 = XmlWriter.Create(stream, settings);
                serializer.Serialize(xmlWriter2, objectList, namespaces);
                xmlWriter2.Close();
                stream.Close();

                ///////////////////////// Merge /////////////////////////
                StreamReader sr = new StreamReader("part1.xml");
                string strPart1 = sr.ReadToEnd();
                // Drop the trailing "</annotation>" (13 characters)
                strPart1 = strPart1.Substring(0, strPart1.Length - 13);
                sr.Close();

                sr = new StreamReader("part2.xml");
                string strPart2 = sr.ReadToEnd();
                // Drop the list's root element: 15 characters at the start, 16 at the end
                strPart2 = strPart2.Substring(15, strPart2.Length - 31);
                sr.Close();

                string strOut = strPart1 + strPart2 + "</annotation>";

                ///////////////////////// Output /////////////////////////
                using (StreamWriter sw = new StreamWriter(strOutName))
                {
                    sw.Write(strOut);
                }
            }
        }
    }
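For comparison, the same document can be built in a single pass by assembling the element tree directly, which avoids the temporary files and fixed substring offsets. The sketch below uses Python's standard `xml.etree.ElementTree` purely for illustration; it is not the C# tool's code, and `build_annotation` is a hypothetical helper. The sample values are the ones used in the listing above.

```python
import xml.etree.ElementTree as ET

def build_annotation(folder, filename, path, width, height, boxes):
    """Build a roLabelImg-style tree; boxes is a list of (name, cx, cy, w, h, angle)."""
    root = ET.Element("annotation")
    ET.SubElement(root, "folder").text = folder
    ET.SubElement(root, "filename").text = filename
    ET.SubElement(root, "path").text = path
    ET.SubElement(ET.SubElement(root, "source"), "database").text = "Unknown"
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", 3)):
        ET.SubElement(size, tag).text = str(value)
    ET.SubElement(root, "segmented").text = "0"
    for name, cx, cy, w, h, angle in boxes:
        obj = ET.SubElement(root, "object")
        for tag, value in (("type", "robndbox"), ("name", name), ("pose", "Unspecified"),
                           ("truncated", "0"), ("difficult", "0")):
            ET.SubElement(obj, tag).text = value
        rb = ET.SubElement(obj, "robndbox")
        for tag, value in (("cx", cx), ("cy", cy), ("w", w), ("h", h), ("angle", angle)):
            ET.SubElement(rb, tag).text = repr(float(value))
    return root

root = build_annotation("sandbox", "2022-10-29 15-19-25",
                        "F:/sandbox/2022-10-29 15-19-25.png", 1873, 935,
                        [("ship", 1484.1, 521.7274, 40.1731, 194.3416, 0.18)])
xml_text = ET.tostring(root, encoding="unicode")
print(xml_text[:40])
```

The string-merging approach in the C# listing has the advantage of reusing XmlSerializer on existing classes; the tree-building approach trades that for not depending on the exact length of the serialized root tags.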

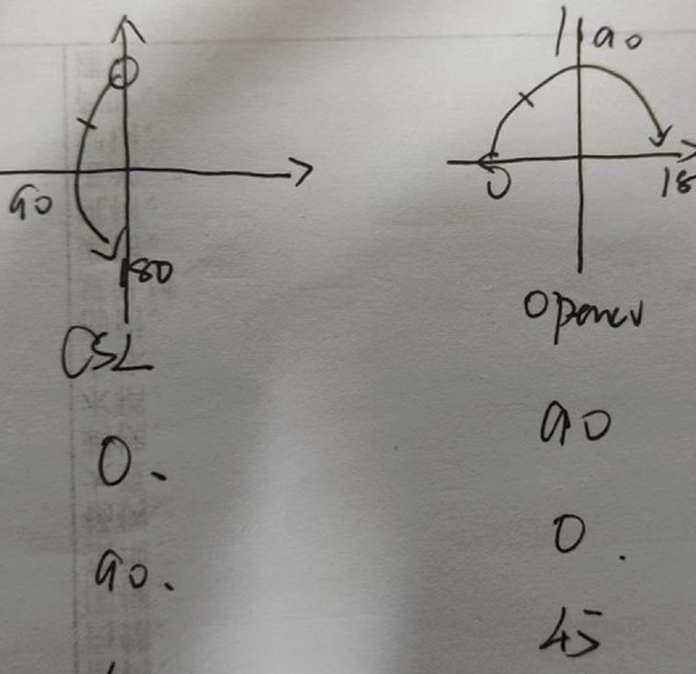

One point worth noting here is the correspondence between angles in CSL and in OpenCV, which should be:

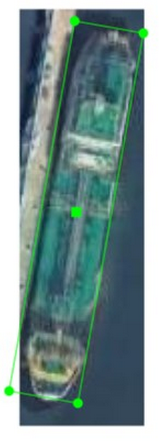

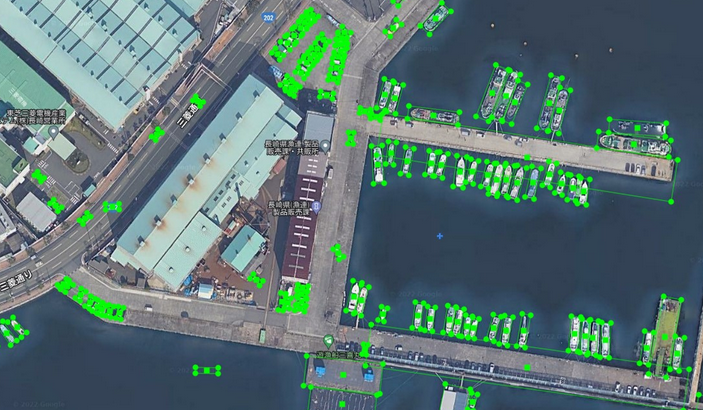
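The exact mapping is given in the figures above. As a rough illustration of the kind of normalization involved, the sketch below converts an arbitrary `(w, h, angle)` box into a long-edge form in which `w` is the longer side and the angle lies in [-90, 90) degrees. This is a commonly used convention assumed here for illustration, not necessarily the author's mapping, and the angle range returned by OpenCV's `minAreaRect` differs between OpenCV versions, so the input convention should be checked first. `to_long_edge` is a hypothetical helper.

```python
def to_long_edge(w, h, angle_deg):
    """Normalise (w, h, angle) so that w is the long side and angle is in [-90, 90)."""
    if w < h:
        w, h = h, w
        angle_deg += 90.0  # the long side is perpendicular to the old width
    angle_deg = (angle_deg + 90.0) % 180.0 - 90.0  # wrap into [-90, 90)
    return w, h, angle_deg

print(to_long_edge(10.0, 40.0, 30.0))  # (40.0, 10.0, -60.0)
```

Adding 90 to the normalized angle then gives a bin index in 0..179, which is the form an angle classification head works with.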

After this change, the displayed result is accurate as well:

Across all the images, the result looks like this:

V. Result Conversion

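Data annotated in the roLabelImg format must finally be converted to the yolov5_obb format and merged into the DOTA dataset. The actual project has not reached this step yet, so this section collects existing material. Relevant tools already exist: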

BboxToolkit is a light codebase collecting some practical functions for special-shape detection, such as oriented detection. The whole project is written in Python and can run on different platforms without compilation. We use this project to support the oriented detection benchmark OBBDetection.

After downloading and installing it, configure the split_configs/dota1_0/ss_train.json file under BboxToolkit/tools, changing the parameters as needed. Also change the dota1_0 class list in BboxToolkit/BboxToolkit/datasets/misc.py.

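Suppose there is a dataset annotated as xywhθ that needs to be converted to the DOTA annotation format.

Use the following code; the paths need to be changed for your own setup: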

    import math
    import os
    import shutil
    import xml.etree.ElementTree as et

    dataset_dir = r'D:\dataset\sar\RSDD\RSDD-SAR\JPEGImages'
    ana_dir = r'D:\dataset\sar\RSDD\RSDD-SAR\Annotations'
    save_dir = r'D:\dataset\sar\RSDD\dota'
    data_type = 'test'
    train_img_dir = r'D:\dataset\sar\RSDD\RSDD-SAR\ImageSets\test.txt'

    # Read the image names, one per line (strip() also handles a last line without a newline)
    with open(train_img_dir, 'r') as f1:
        train_img = [line.strip() for line in f1 if line.strip()]


    def rota(center_x1, center_y1, x, y, w, h, a):  # rotation centre, box centre, box w, h, rotation angle
        # a = (math.pi * a) / 180  # degrees to radians
        x1, y1 = x - w / 2, y - h / 2  # top-left before rotation
        x2, y2 = x + w / 2, y - h / 2  # top-right before rotation
        x3, y3 = x + w / 2, y + h / 2  # bottom-right before rotation
        x4, y4 = x - w / 2, y + h / 2  # bottom-left before rotation
        px1 = (x1 - center_x1) * math.cos(a) - (y1 - center_y1) * math.sin(a) + center_x1  # top-left after rotation
        py1 = (x1 - center_x1) * math.sin(a) + (y1 - center_y1) * math.cos(a) + center_y1
        px2 = (x2 - center_x1) * math.cos(a) - (y2 - center_y1) * math.sin(a) + center_x1  # top-right after rotation
        py2 = (x2 - center_x1) * math.sin(a) + (y2 - center_y1) * math.cos(a) + center_y1
        px3 = (x3 - center_x1) * math.cos(a) - (y3 - center_y1) * math.sin(a) + center_x1  # bottom-right after rotation
        py3 = (x3 - center_x1) * math.sin(a) + (y3 - center_y1) * math.cos(a) + center_y1
        px4 = (x4 - center_x1) * math.cos(a) - (y4 - center_y1) * math.sin(a) + center_x1  # bottom-left after rotation
        py4 = (x4 - center_x1) * math.sin(a) + (y4 - center_y1) * math.cos(a) + center_y1
        return px1, py1, px2, py2, px3, py3, px4, py4  # four rotated corners: top-left, top-right, bottom-right, bottom-left


    for img in train_img:
        shutil.copy(os.path.join(dataset_dir, img + '.jpg'),
                    os.path.join(save_dir, data_type, 'images', img + '.jpg'))
        with open(os.path.join(ana_dir, img + '.xml'), encoding='utf-8') as xml_file:
            root = et.parse(xml_file).getroot()
        with open(os.path.join(save_dir, data_type, 'labelTxt', img + '.txt'), 'w') as f:
            f.write('imagesource:GoogleEarth\ngsd:NaN\n')
            for obj in root.iter('object'):
                cls = obj.find('name').text
                box = obj.find('robndbox')
                x_c = box.find('cx').text
                y_c = box.find('cy').text
                h = box.find('h').text
                w = box.find('w').text
                theta = box.find('angle').text
                # The values are passed as [cx, cy, h, w, theta], so rota() receives h as its w
                # and w as its h; check this against your dataset's width/height convention.
                # float is used instead of np.float16, which cannot represent large pixel
                # coordinates exactly.
                box = list(map(float, [x_c, y_c, h, w, theta]))
                box = rota(box[0], box[1], *box)
                box = list(map(int, box))
                box = list(map(str, box))
                f.write(' '.join(box))
                f.write(' ' + cls + ' 0\n')
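The core of the conversion can be checked in isolation with a small worked example. The sketch below rotates the four corners of a box about its own centre and formats one DOTA label line (`x1 y1 x2 y2 x3 y3 x4 y4 category difficult`). It assumes the angle is in radians and rounds to the nearest pixel, whereas the script above truncates with `int`; `rbox_to_dota_line` is a hypothetical helper name.

```python
import math

def rbox_to_dota_line(cx, cy, w, h, angle, name, difficult=0):
    """Rotate the four corners about (cx, cy) by `angle` radians and format a DOTA label line."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Corner offsets before rotation: top-left, top-right, bottom-right, bottom-left
    corners = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    coords = []
    for dx, dy in corners:
        coords.append(cx + dx * cos_a - dy * sin_a)
        coords.append(cy + dx * sin_a + dy * cos_a)
    return " ".join(str(int(round(v))) for v in coords) + f" {name} {difficult}"

print(rbox_to_dota_line(100, 50, 40, 20, 0.0, "ship"))
# 80 40 120 40 120 60 80 60 ship 0
```

With an angle of zero the output is simply the axis-aligned corners, which makes it easy to confirm the corner order before running the conversion on a whole dataset.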

VI. Issues and Difficulties

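In real project use, the image volume will certainly differ from that of the DOTA dataset, so pre-recognition will be difficult and error-prone. Even so, annotating a small batch first to obtain partial recognition results and then iterating is the approach that has to be implemented. We can also consider integrating services such as easydl. Most important is finding an application scenario with a real need: only then can the whole pipeline be exercised end to end and a useful dataset be obtained.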

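To summarize the project, the key points are:

1. Writing the C++ inference for yolov5_obb;

2. Converting recognition results between the roLabelImg and yolov5_obb formats;

3. Writing the interface in C# and calling OpenCV from C++ to integrate the image processing.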