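Data Mining for Absolute Beginners: Used Car Transaction Price Prediction (Baseline)

Data Mining for Absolute Beginners - Used Car Transaction Price Prediction

Understanding the Task

Participants must build a model on the given dataset that predicts the transaction price of used cars.

The task is to predict used car transaction prices. The dataset becomes visible and downloadable after registration. It comes from the used car transaction records of a trading platform and contains more than 400,000 records with 31 columns, 15 of which are anonymous variables. To keep the competition fair, 150,000 records are sampled as the training set, 50,000 as test set A and 50,000 as test set B. Fields such as name, model, brand and regionCode are desensitized (anonymized).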

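Data Format

The training set has the following features:

- name - car ID code

- regDate - car registration date

- model - model code

- brand - brand

- bodyType - body type

- fuelType - fuel type

- gearbox - gearbox type

- power - engine power

- kilometer - kilometers driven

- notRepairedDamage - whether the car has unrepaired damage

- regionCode - code of the region where the car can be viewed

- seller - seller

- offerType - offer type

- creatDate - ad posting date

- price - car price (target column)

- v_0, v_1, v_2, v_3, v_4, v_5, v_6, v_7, v_8, v_9, v_10, v_11, v_12, v_13, v_14 - embedding vectors derived from large amounts of information such as car reviews and tags [hand-constructed anonymous features]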

Evaluation Metric

The competition uses MAE (mean absolute error) as the evaluation metric.
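For reference, MAE averages the absolute differences between the true and predicted prices: MAE = (1/n) Σ |y_i - ŷ_i|. Below is a minimal, dependency-free sketch. The function name mae is illustrative and not part of any competition kit.

```python
def mae(y_true, y_pred):
    """Mean absolute error: the average of |y - y_hat| over all samples."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Made-up prices: one prediction is off by 2000, the other two are exact
print(mae([5000, 8000, 12000], [5000, 8000, 10000]))  # 2000 / 3
```

MAE is expressed in the same unit as the price. Unlike squared error, it does not let a few huge mistakes dominate. Note that the "CV score" values reported later in this article are MAE computed on log(price + 1), not on the price itself.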

Implementation

Import the required libraries:

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
import missingno as msno
import scipy.stats as st
import warnings
warnings.filterwarnings('ignore')
# Use a font that can render CJK characters in plot labels, and keep minus signs rendering correctly
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False

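Data Analysis

First, read in the data:

train_data = pd.read_csv("used_car_train_20200313.csv", sep=" ")

Opening the file in Excel shows that each row sits in a single cell, with the values separated by spaces. sep must therefore be set to a space to read the data correctly.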

觀看一下資料:

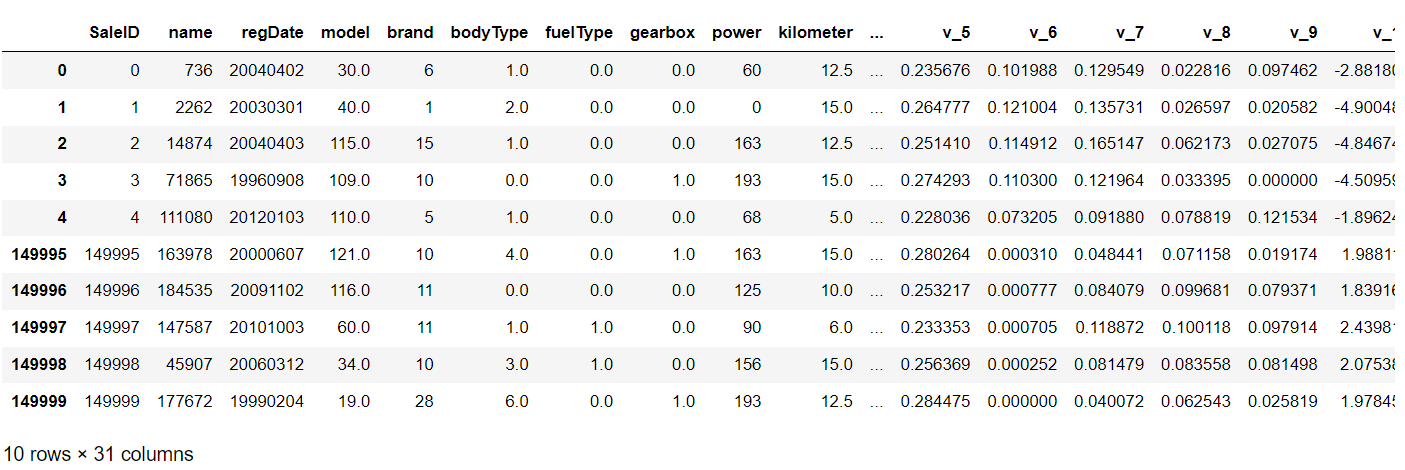

train_data.head(5).append(train_data.tail(5))

那麼下面就開始對資料進行分析。

train_data.columns.values

array(['SaleID', 'name', 'regDate', 'model', 'brand', 'bodyType',

'fuelType', 'gearbox', 'power', 'kilometer', 'notRepairedDamage',

'regionCode', 'seller', 'offerType', 'creatDate', 'price', 'v_0',

'v_1', 'v_2', 'v_3', 'v_4', 'v_5', 'v_6', 'v_7', 'v_8', 'v_9',

'v_10', 'v_11', 'v_12', 'v_13', 'v_14'], dtype=object)

These are the features in the data. Next, let's take a first look at each feature's data type and values:

train_data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 150000 entries, 0 to 149999

Data columns (total 31 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 SaleID 150000 non-null int64

1 name 150000 non-null int64

2 regDate 150000 non-null int64

3 model 149999 non-null float64

4 brand 150000 non-null int64

5 bodyType 145494 non-null float64

6 fuelType 141320 non-null float64

7 gearbox 144019 non-null float64

8 power 150000 non-null int64

9 kilometer 150000 non-null float64

10 notRepairedDamage 150000 non-null object

11 regionCode 150000 non-null int64

12 seller 150000 non-null int64

13 offerType 150000 non-null int64

14 creatDate 150000 non-null int64

15 price 150000 non-null int64

16 v_0 150000 non-null float64

17 v_1 150000 non-null float64

18 v_2 150000 non-null float64

19 v_3 150000 non-null float64

20 v_4 150000 non-null float64

21 v_5 150000 non-null float64

22 v_6 150000 non-null float64

23 v_7 150000 non-null float64

24 v_8 150000 non-null float64

25 v_9 150000 non-null float64

26 v_10 150000 non-null float64

27 v_11 150000 non-null float64

28 v_12 150000 non-null float64

29 v_13 150000 non-null float64

30 v_14 150000 non-null float64

dtypes: float64(20), int64(10), object(1)

memory usage: 35.5+ MB

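Apart from notRepairedDamage, which has dtype object, every column is int or float. Some features also contain missing values, which will be an important focus of later processing. Let's check the missing values: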

train_data.isnull().sum()

SaleID 0

name 0

regDate 0

model 1

brand 0

bodyType 4506

fuelType 8680

gearbox 5981

power 0

kilometer 0

notRepairedDamage 0

regionCode 0

seller 0

offerType 0

creatDate 0

price 0

v_0 0

v_1 0

v_2 0

v_3 0

v_4 0

v_5 0

v_6 0

v_7 0

v_8 0

v_9 0

v_10 0

v_11 0

v_12 0

v_13 0

v_14 0

dtype: int64

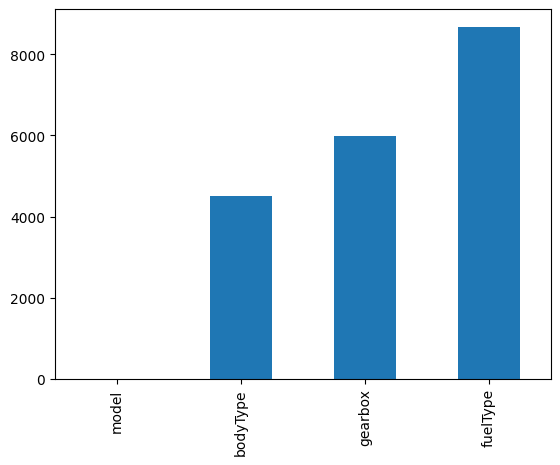

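Several features have a substantial number of missing values, so they need to be handled. Let's visualize the number of missing values:

missing = train_data.isnull().sum()
missing = missing[missing > 0]  # keep only the columns that have missing values
missing.sort_values(inplace=True)
missing.plot.bar()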

We can also inspect missing values in several other ways:

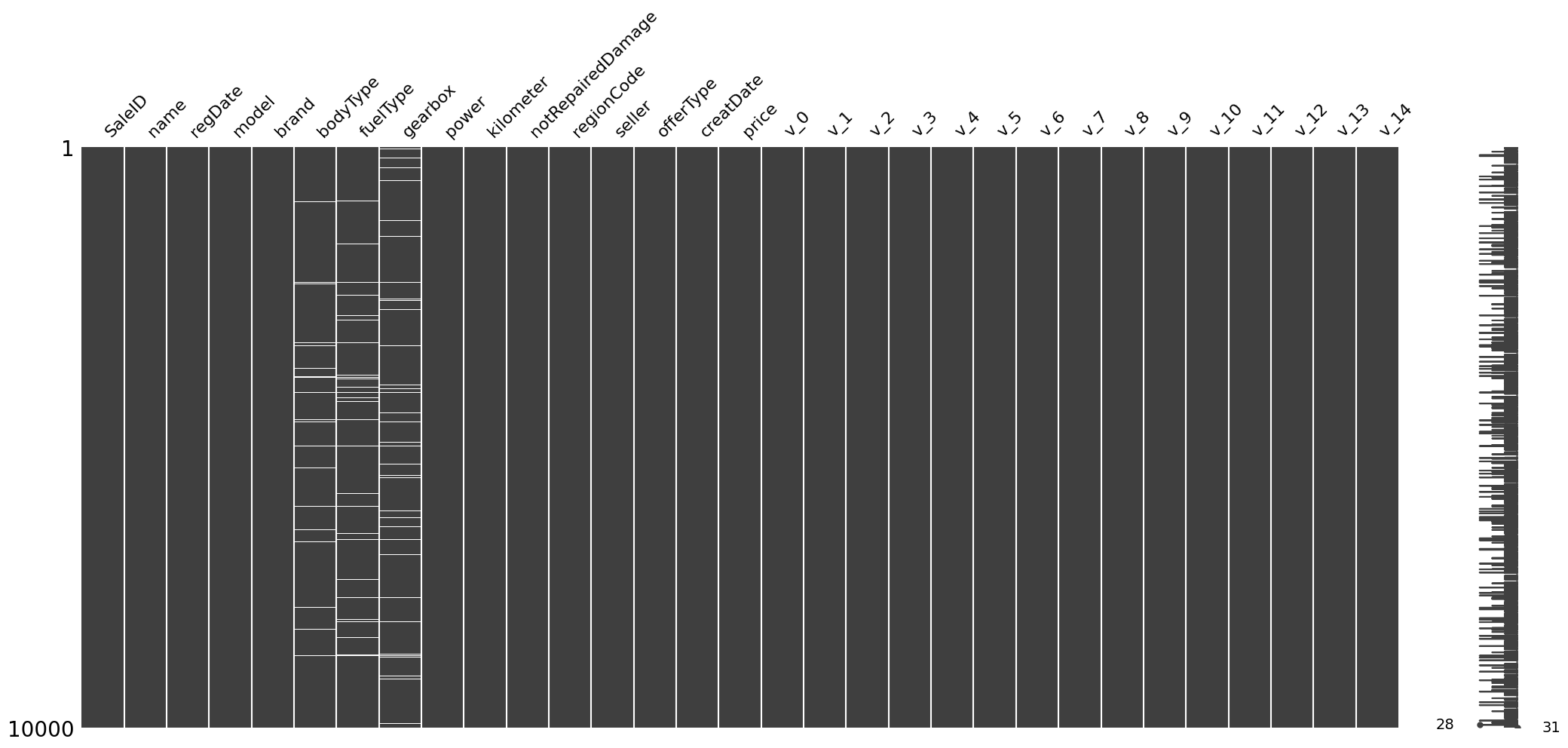

msno.matrix(train_data.sample(10000))

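In this plot, white lines mark missing values. Three adjacent features (bodyType, fuelType and gearbox) show many white lines, so even in a sample of 10,000 rows they still have many missing values.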

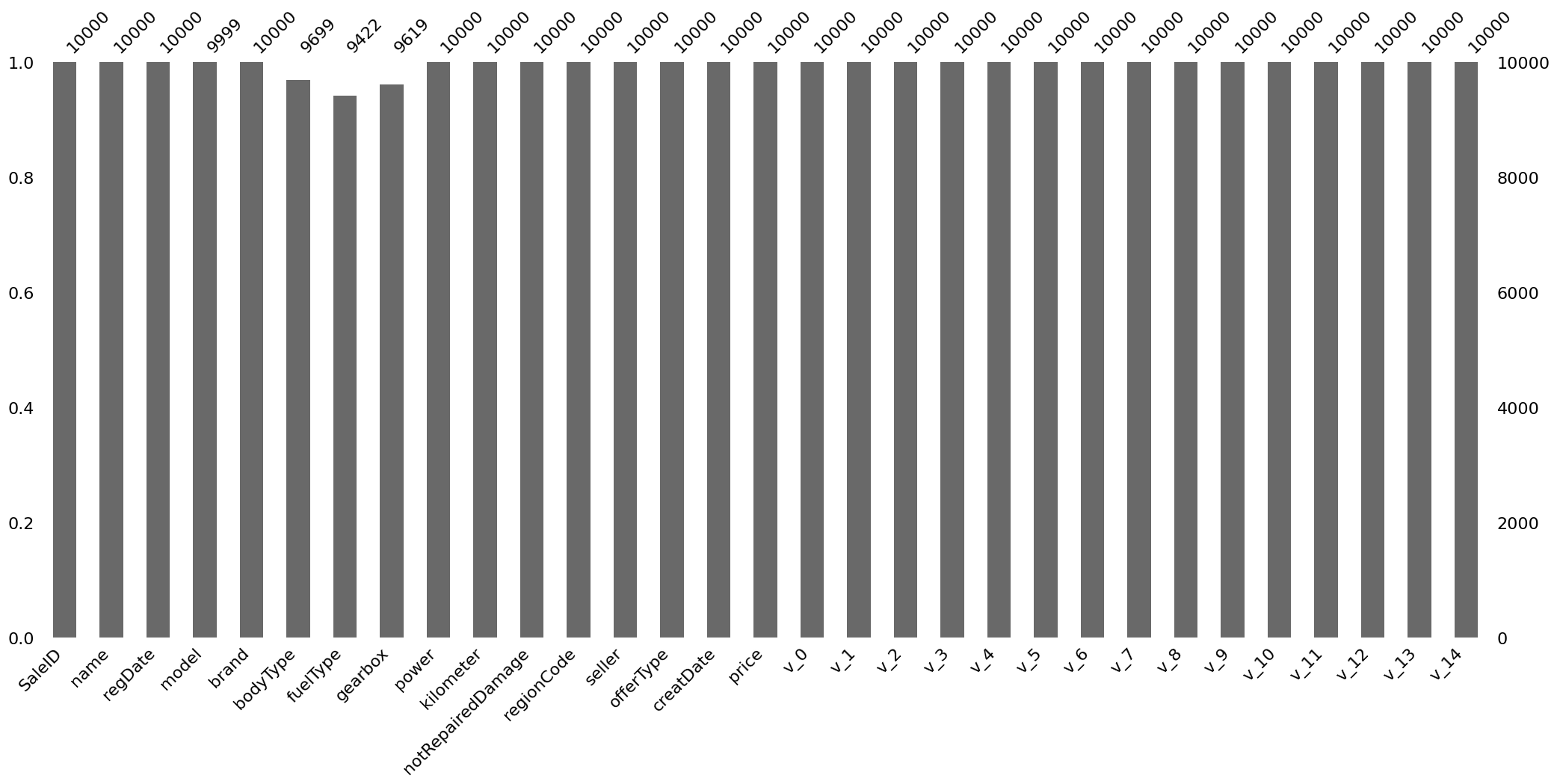

msno.bar(train_data.sample(10000))

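In the plot above, the same three features also have visibly fewer non-missing values than the others.

Going back to the data types, notRepairedDamage has dtype object, so let's see which values it takes: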

train_data['notRepairedDamage'].value_counts()

0.0 111361

- 24324

1.0 14315

Name: notRepairedDamage, dtype: int64

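It contains the value "-", which can also be treated as a missing value. We can therefore convert "-" to NaN and then handle all NaN values together.

The test set must receive the same treatment, so read it in and concatenate it with the training set:

test_data = pd.read_csv("used_car_testB_20200421.csv", sep=" ")
# Tag each row with its origin so the two sets can be separated again later
train_data["origin"] = "train"
test_data["origin"] = "test"
data = pd.concat([train_data, test_data], axis=0, ignore_index=True)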

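The combined data has 200,000 rows. We can now process its notRepairedDamage feature in one go:

# Assign the result back rather than calling replace(..., inplace=True) on a selected column,
# which may not modify data under pandas' Copy-on-Write behaviour
data['notRepairedDamage'] = data['notRepairedDamage'].replace("-", np.nan)
data['notRepairedDamage'].value_counts()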

0.0 148585

1.0 19022

Name: notRepairedDamage, dtype: int64

The "-" entries are now NaN, so they are excluded from the counts.
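The effect of this replacement on the counts can be mirrored in a few lines of plain Python. Once "-" becomes a missing marker, a count that skips missing values no longer includes it, and skipping missing values is what value_counts() does by default (dropna=True). The sketch below uses made-up values and hypothetical helper names.

```python
from collections import Counter

def clean_damage(values):
    """Map the '-' placeholder to None so it is treated as missing."""
    return [None if v == "-" else v for v in values]

def count_non_missing(values):
    """Count values while skipping missing ones, like value_counts(dropna=True)."""
    return Counter(v for v in values if v is not None)

raw = ["0.0", "-", "1.0", "0.0", "-"]   # made-up sample, not real data
cleaned = clean_damage(raw)
print(cleaned)
print(count_non_missing(cleaned))
```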

The following two features are extremely imbalanced (essentially constant), so they can be considered useless for predicting the price:

data['seller'].value_counts()

0 199999

1 1

Name: seller, dtype: int64

data["offerType"].value_counts()

0 200000

Name: offerType, dtype: int64

Both features can therefore be dropped:

del data["seller"]

del data["offerType"]

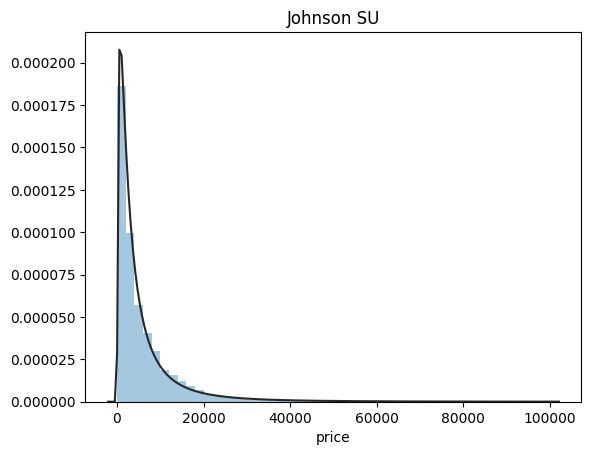

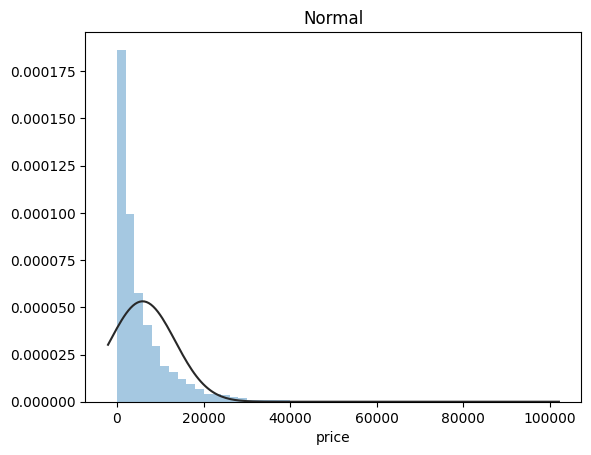

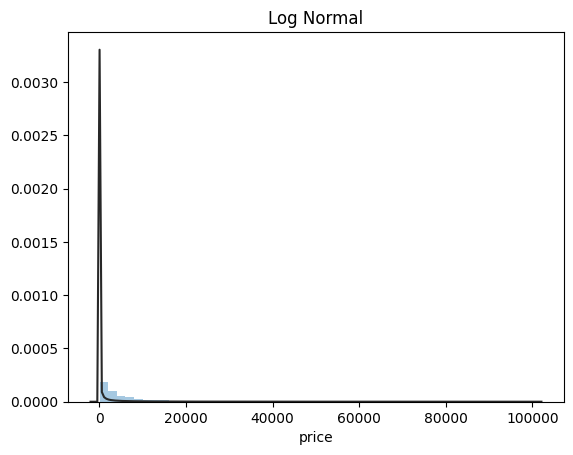

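That completes the first pass over the features. Next, let's analyze the target column, the price, starting with its distribution:

target = train_data['price']
# Fit three candidate distributions to the price and compare them.
# sns.distplot is deprecated in recent seaborn releases, but it still accepts fit=
plt.figure(1)
plt.title('Johnson SU')
sns.distplot(target, kde=False, fit=st.johnsonsu)
plt.figure(2)
plt.title('Normal')
sns.distplot(target, kde=False, fit=st.norm)
plt.figure(3)
plt.title('Log Normal')
sns.distplot(target, kde=False, fit=st.lognorm)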

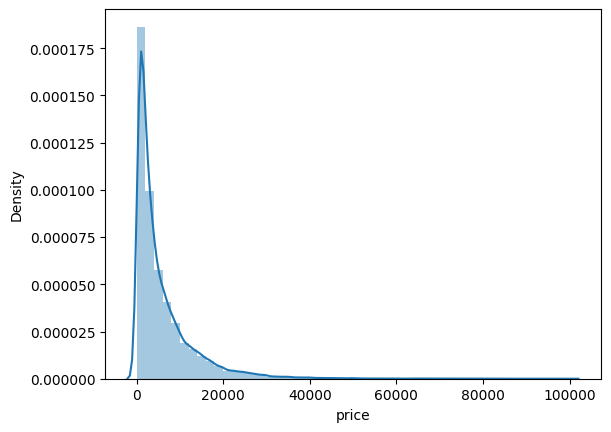

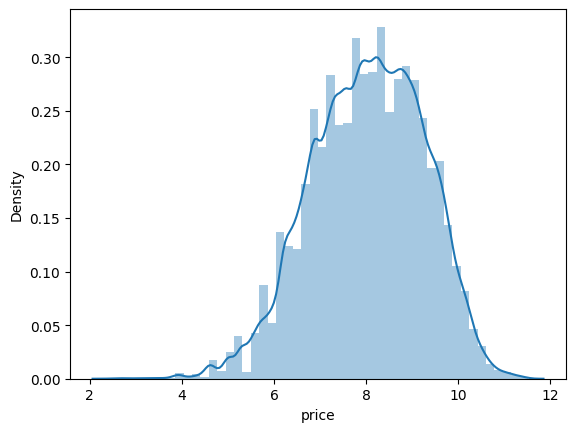

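The price distribution is highly uneven, which hurts prediction. A few extreme values can have a large influence on the model, and many models and algorithms work best when the target is roughly normally distributed. This therefore needs to be handled later. We can quantify how far the distribution is from normal with two statistics, skewness and kurtosis:

sns.distplot(target)
print("Skewness: %f" % target.skew())
print("Kurtosis: %f" % target.kurt())

Skewness: 3.346487

Kurtosis: 18.995183

For data distributed like this, a log transform is the usual way to compress the range:

# Log-transform the price to bring it closer to a normal distribution
sns.distplot(np.log(target))
print("Skewness: %f" % np.log(target).skew())
print("Kurtosis: %f" % np.log(target).kurt())

Skewness: -0.265100

Kurtosis: -0.171801

After the log transform the distribution is much better behaved and fairly close to normal. Both statistics are now near 0, which is their value for a normal distribution.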
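pandas' Series.skew() and Series.kurt() return the bias-corrected sample skewness and the bias-corrected excess kurtosis, both of which are 0 for a normal distribution. The sketch below implements those two formulas in pure Python; the helper names are hypothetical. It applies them to a made-up right-skewed price list to show how the log transform pulls the skewness toward 0.

```python
import math

def _central_moments(xs):
    """Return n and the 2nd, 3rd and 4th central moments (population form)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return n, m2, m3, m4

def sample_skew(xs):
    """Bias-corrected sample skewness (adjusted Fisher-Pearson); needs n >= 3."""
    n, m2, m3, _ = _central_moments(xs)
    g1 = m3 / m2 ** 1.5
    return math.sqrt(n * (n - 1)) / (n - 2) * g1

def sample_excess_kurt(xs):
    """Bias-corrected sample excess kurtosis (0 for a normal distribution); needs n >= 4."""
    n, m2, _, m4 = _central_moments(xs)
    g2 = m4 / m2 ** 2 - 3
    return ((n + 1) * g2 + 6) * (n - 1) / ((n - 2) * (n - 3))

# Made-up right-skewed "prices": many cheap cars, a few very expensive ones
prices = [500, 800, 1200, 1500, 2000, 2500, 3500, 5000, 9000, 30000, 90000]
log_prices = [math.log(p) for p in prices]
print(sample_skew(prices), sample_skew(log_prices))
```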

Next, let's look at the categorical and numeric features separately. The data does not label which columns belong to which type, so we have to pick them by hand:

numeric_features = ['power', 'kilometer', 'v_0', 'v_1', 'v_2', 'v_3',
                    'v_4', 'v_5', 'v_6', 'v_7', 'v_8', 'v_9', 'v_10',
                    'v_11', 'v_12', 'v_13', 'v_14']
categorical_features = ['name', 'model', 'brand', 'bodyType', 'fuelType', 'gearbox', 'notRepairedDamage', 'regionCode']

For the categorical features, let's check how many distinct values each one has, to decide whether it can be converted to one-hot vectors:

# Count the distinct values of each categorical feature to see whether one-hot encoding is feasible
for feature in categorical_features:
    print(feature, "has {} distinct values".format(train_data[feature].nunique()))
    print(train_data[feature].value_counts())

name has 99662 distinct values
387 282
708 282
55 280
1541 263
203 233
...
26403 1
28450 1
32544 1
102174 1
184730 1
Name: name, Length: 99662, dtype: int64
model has 248 distinct values
0.0 11762
19.0 9573
4.0 8445
1.0 6038
29.0 5186
...
242.0 2
209.0 2
245.0 2
240.0 2
247.0 1
Name: model, Length: 248, dtype: int64
brand has 40 distinct values
0 31480
4 16737
14 16089
10 14249
1 13794
6 10217
9 7306
5 4665
13 3817
11 2945
3 2461
7 2361
16 2223
8 2077
25 2064
27 2053
21 1547
15 1458
19 1388
20 1236
12 1109
22 1085
26 966
30 940
17 913
24 772
28 649
32 592
29 406
37 333
2 321
31 318
18 316
36 228
34 227
33 218
23 186
35 180
38 65
39 9
Name: brand, dtype: int64
bodyType has 8 distinct values
0.0 41420
1.0 35272
2.0 30324
3.0 13491
4.0 9609
5.0 7607
6.0 6482
7.0 1289
Name: bodyType, dtype: int64
fuelType has 7 distinct values
0.0 91656
1.0 46991
2.0 2212
3.0 262
4.0 118
5.0 45
6.0 36
Name: fuelType, dtype: int64
gearbox has 2 distinct values
0.0 111623
1.0 32396
Name: gearbox, dtype: int64
notRepairedDamage has 2 distinct values
0.0 111361
1.0 14315
Name: notRepairedDamage, dtype: int64
regionCode has 7905 distinct values
419 369
764 258
125 137
176 136
462 134
...
7081 1
7243 1
7319 1
7742 1
7960 1
Name: regionCode, Length: 7905, dtype: int64

name and regionCode have far too many distinct values to be one-hot encoded. The other features can be.

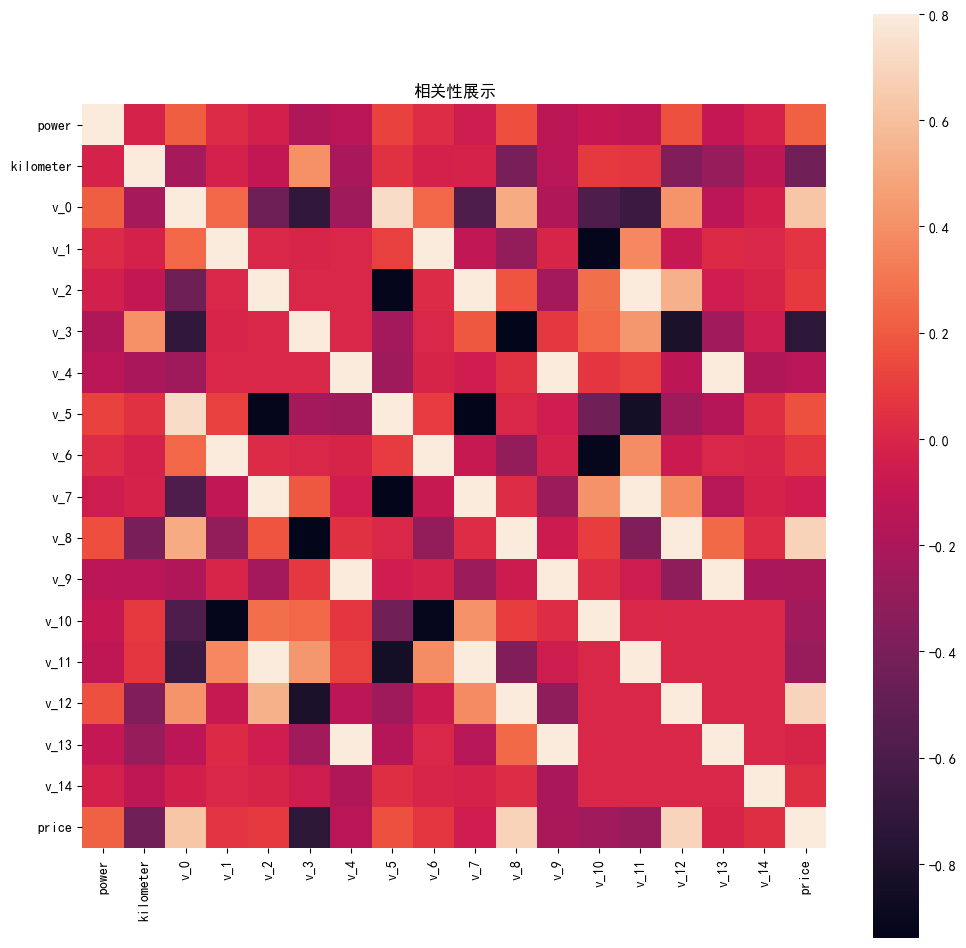

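For the numeric features, we can check how each one correlates with the price, which also helps us judge which features matter more:

numeric_features.append("price")
price_numeric = train_data[numeric_features]
# An (n_features x n_features) matrix; entry (i, j) is the correlation between features i and j
correlation_score = price_numeric.corr()
correlation_score['price'].sort_values(ascending=False)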

price 1.000000

v_12 0.692823

v_8 0.685798

v_0 0.628397

power 0.219834

v_5 0.164317

v_2 0.085322

v_6 0.068970

v_1 0.060914

v_14 0.035911

v_13 -0.013993

v_7 -0.053024

v_4 -0.147085

v_9 -0.206205

v_10 -0.246175

v_11 -0.275320

kilometer -0.440519

v_3 -0.730946

Name: price, dtype: float64
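DataFrame.corr() uses the Pearson coefficient by default. To make the numbers above concrete, here is a dependency-free sketch of Pearson's r. The helper pearson_r and the toy kilometer/price values are made up for illustration.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient, the default method of DataFrame.corr()."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Toy example: price falls as mileage rises, so r is negative
kilometer = [2, 5, 8, 12, 15]
price = [15000, 11000, 9000, 6000, 4000]
print(round(pearson_r(kilometer, price), 3))
```

Pearson's r only measures linear association. A low value, as for v_13, does not prove that a feature is useless to a tree model that can exploit non-linear patterns.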

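Features such as v_14, v_13, v_1 and v_7 have very low correlation with price. If computing resources are limited, they are candidates for removal. We can also visualize the correlations directly:

fig, ax = plt.subplots(figsize=(12, 12))
plt.title("Correlation heatmap")
sns.heatmap(correlation_score, square=True, vmax=0.8)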

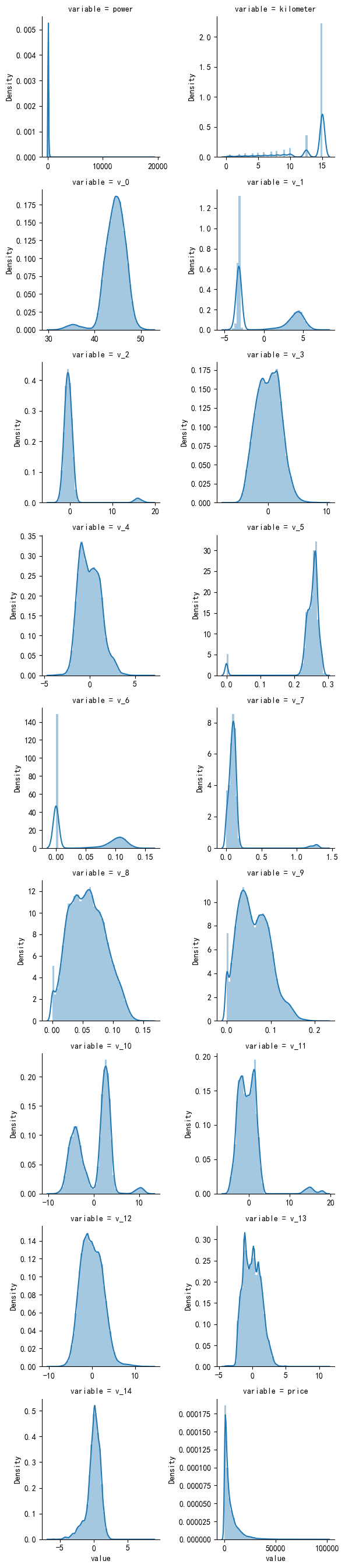

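For numeric features we also care about the distribution. Let's look at the numbers first, then explain why the distribution matters:

# Skewness and kurtosis of each numeric feature
for col in numeric_features:
    print("{:15}\t Skewness:{:05.2f}\t Kurtosis:{:06.2f}".format(col,
          train_data[col].skew(),
          train_data[col].kurt()))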

power Skewness:65.86 Kurtosis:5733.45

kilometer Skewness:-1.53 Kurtosis:001.14

v_0 Skewness:-1.32 Kurtosis:003.99

v_1 Skewness:00.36 Kurtosis:-01.75

v_2 Skewness:04.84 Kurtosis:023.86

v_3 Skewness:00.11 Kurtosis:-00.42

v_4 Skewness:00.37 Kurtosis:-00.20

v_5 Skewness:-4.74 Kurtosis:022.93

v_6 Skewness:00.37 Kurtosis:-01.74

v_7 Skewness:05.13 Kurtosis:025.85

v_8 Skewness:00.20 Kurtosis:-00.64

v_9 Skewness:00.42 Kurtosis:-00.32

v_10 Skewness:00.03 Kurtosis:-00.58

v_11 Skewness:03.03 Kurtosis:012.57

v_12 Skewness:00.37 Kurtosis:000.27

v_13 Skewness:00.27 Kurtosis:-00.44

v_14 Skewness:-1.19 Kurtosis:002.39

price Skewness:03.35 Kurtosis:019.00

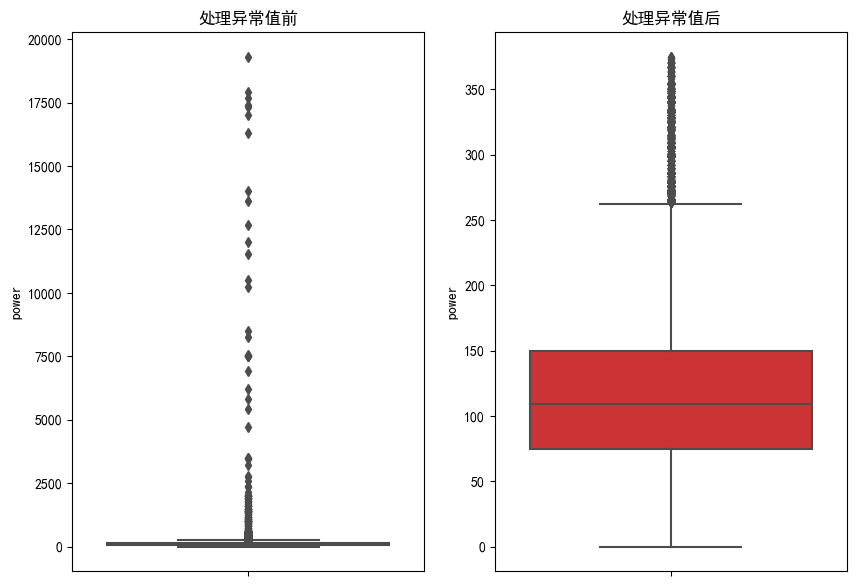

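The skewness and kurtosis of power are both extremely large, so let's plot the distributions:

f = pd.melt(train_data, value_vars=numeric_features)
# f is now a two-column long table: "variable" holds the original feature name and
# "value" holds its value, so a feature with n values occupies n stacked rows
g = sns.FacetGrid(f, col="variable", col_wrap=2, sharex=False, sharey=False)
# FacetGrid creates a grid object; plots are then drawn onto it with map():
# - the first argument is the DataFrame, which must be in long format (the melt output)
# - col is the column that defines the panels: after melt, "variable" holds the feature names
#   and the values themselves are in the "value" column
# - col_wrap is the number of panels per row
g = g.map(sns.distplot, "value")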

For how melt works, see "Reshaping a DataFrame with Pandas melt()" on Zhihu (zhihu.com), which I found very easy to follow.
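To see what the long format looks like, here is a pure-Python sketch of the wide-to-long reshape that pd.melt performs. Here, melt is a hypothetical helper and the two rows are made up. Like pd.melt, it stacks the columns one after another, pairing each with its values and any id columns.

```python
def melt(rows, value_vars, id_vars=()):
    """Wide-to-long reshape in the spirit of pd.melt: one output row per (row, column) pair."""
    long_rows = []
    for var in value_vars:              # stack column by column, as pd.melt does
        for row in rows:
            out = {k: row[k] for k in id_vars}
            out["variable"] = var
            out["value"] = row[var]
            long_rows.append(out)
    return long_rows

wide = [
    {"price": 3000, "power": 60, "kilometer": 12.5},
    {"price": 8000, "power": 150, "kilometer": 6.0},
]
for r in melt(wide, ["power", "kilometer"], id_vars=["price"]):
    print(r)
```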

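The distribution of power is very uneven. As with price, extreme power values would have a severe impact on the results and make it hard to learn a sensible weight for power, so this feature also needs processing later. The anonymous features are distributed relatively evenly, so they probably need no further processing.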

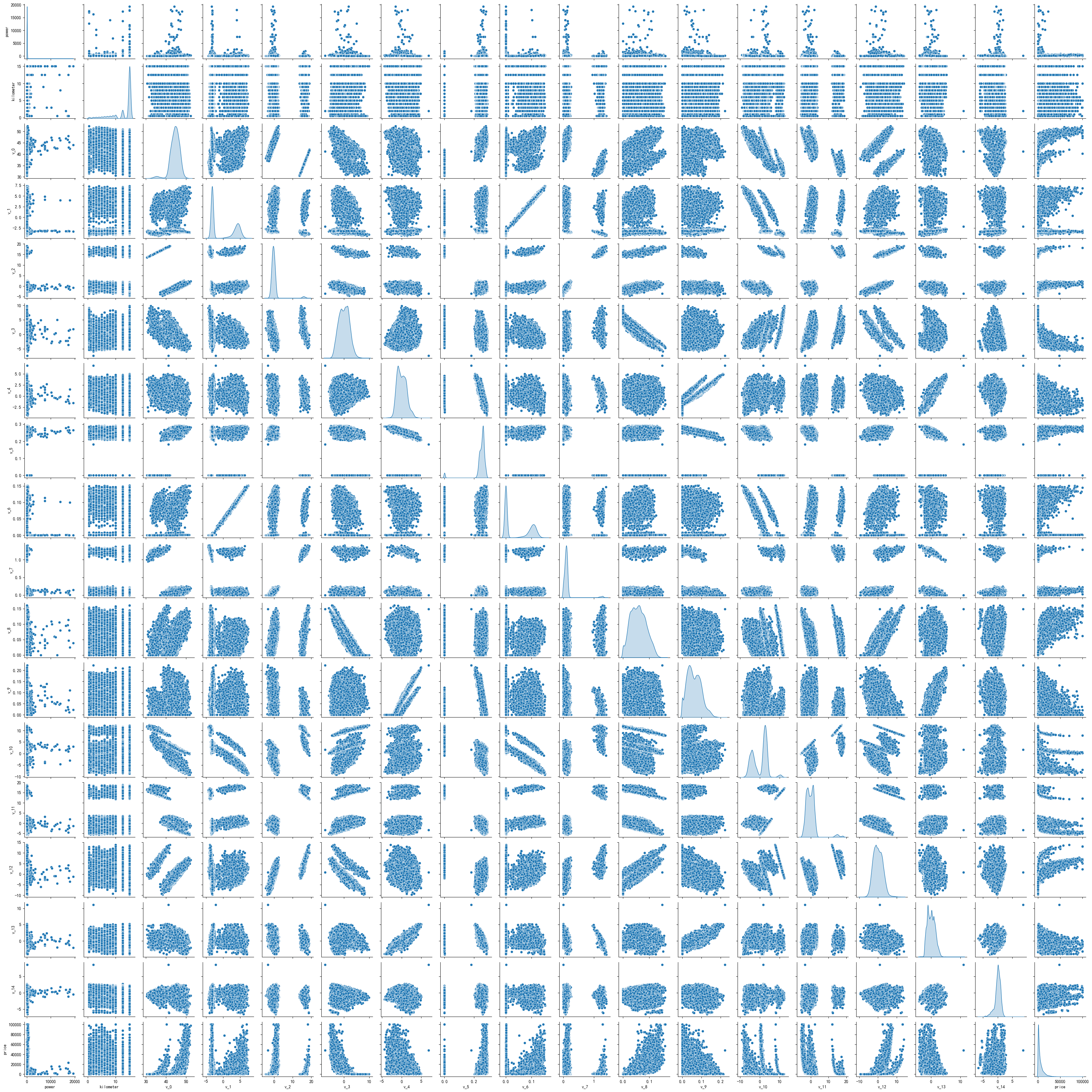

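A scatter-plot matrix also shows the rough pairwise relationships:

# 'size' was renamed to 'height' in seaborn 0.9
sns.pairplot(train_data[numeric_features], height=2, kind="scatter", diag_kind="kde")

(I'll leave the interpretation of this one to you.)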

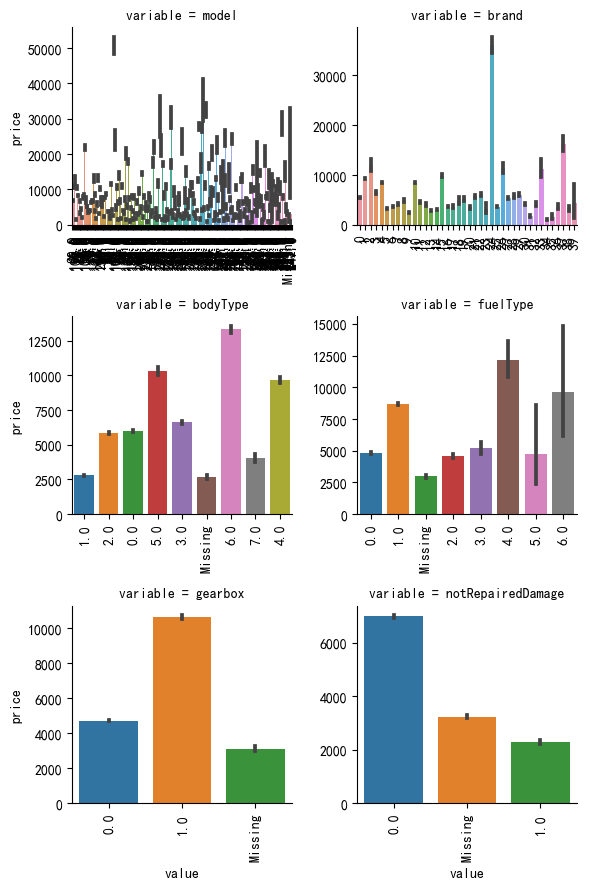

Back to the categorical features. Their NaN values make plotting awkward, so first replace them with an explicit category:

# Prepare the categorical features for plotting
categorical_features_2 = ['model', 'brand', 'bodyType', 'fuelType', 'gearbox', 'notRepairedDamage']
for c in categorical_features_2:
    # Convert to pandas' category dtype instead of keeping the original int/float type
    train_data[c] = train_data[c].astype("category")
    if train_data[c].isnull().any():
        # If the column contains NaN, add a 'Missing' category and fill NaN with it,
        # so that missing values show up as their own bar in the plots
        train_data[c] = train_data[c].cat.add_categories(['Missing'])
        train_data[c] = train_data[c].fillna('Missing')

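Next, bar plots (mean price per category, with error bars) show each value of the categorical features:

def bar_plot(x, y, **kwargs):
    # FacetGrid.map passes each panel's x and y data, plus extra keywords such as color
    sns.barplot(x=x, y=y)
    plt.xticks(rotation=90)

f = pd.melt(train_data, id_vars=['price'], value_vars=categorical_features_2)
g = sns.FacetGrid(f, col='variable', col_wrap=2, sharex=False, sharey=False)
g = g.map(bar_plot, "value", "price")

The categorical features do not show extreme behaviour either.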

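Feature Engineering

In feature processing, I think the most important part is handling anomalous data. We already saw that power is especially unevenly distributed. There are two ways to deal with it: drop the extreme values, or compress them with a log transform. Both are covered here.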

First, dropping extreme values. A box plot (the IQR rule) helps decide what counts as extreme. The function below wraps this up:

# power's distribution is very abnormal, so remove its extreme values
# Helper for handling outliers
def outliers_proc(data, col_name, scale=3):
    # data: the original DataFrame
    # col_name: name of the column whose outliers should be handled
    # scale: multiple of the IQR used to set the bounds (larger = more lenient)
    def box_plot_outliers(data_ser, box_scale):
        # quantile() returns the value at the given quantile
        iqr = box_scale * (data_ser.quantile(0.75) - data_ser.quantile(0.25))
        val_low = data_ser.quantile(0.25) - iqr  # lower bound
        val_up = data_ser.quantile(0.75) + iqr   # upper bound
        rule_low = (data_ser < val_low)  # boolean mask of values below the lower bound
        rule_up = (data_ser > val_up)    # boolean mask of values above the upper bound
        return (rule_low, rule_up), (val_low, val_up)

    data_n = data.copy()
    data_series = data_n[col_name]  # the column to inspect
    rule, values = box_plot_outliers(data_series, box_scale=scale)
    # Positions 0..n-1, filtered down to the rows flagged as outliers
    index = np.arange(data_series.shape[0])[rule[0] | rule[1]]
    print("Delete number is {}".format(len(index)))
    data_n = data_n.drop(index)  # drop entire rows (assumes a default RangeIndex)
    data_n.reset_index(drop=True, inplace=True)
    print("Now row number is: {}".format(data_n.shape[0]))
    index_low = np.arange(data_series.shape[0])[rule[0]]
    outliers = data_series.iloc[index_low]  # values below the lower bound
    print("Description of data less than the lower bound is:")
    print(pd.Series(outliers).describe())
    index_up = np.arange(data_series.shape[0])[rule[1]]
    outliers = data_series.iloc[index_up]   # values above the upper bound
    print("Description of data larger than the upper bound is:")
    print(pd.Series(outliers).describe())
    fig, axes = plt.subplots(1, 2, figsize=(10, 7))
    ax1 = sns.boxplot(y=data[col_name], data=data, palette="Set1", ax=axes[0])
    ax1.set_title("Before removing outliers")
    ax2 = sns.boxplot(y=data_n[col_name], data=data_n, palette="Set1", ax=axes[1])
    ax2.set_title("After removing outliers")
    return data_n

Let's try it on power:

train_data_delete_after = outliers_proc(train_data, "power", scale=3)

Delete number is 963
Now row number is: 149037
Description of data less than the lower bound is:
count 0.0
mean NaN
std NaN
min NaN
25% NaN
50% NaN
75% NaN
max NaN
Name: power, dtype: float64
Description of data larger than the upper bound is:
count 963.000000
mean 846.836968
std 1929.418081
min 376.000000
25% 400.000000
50% 436.000000
75% 514.000000
max 19312.000000
Name: power, dtype: float64

A total of 963 rows were deleted, all above the upper bound, and the box plot after processing looks much more normal.
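The IQR rule inside outliers_proc can be checked on a small, made-up list with the standard library alone. The names iqr_bounds and drop_outliers in this sketch are illustrative and not part of the code above. statistics.quantiles with method="inclusive" interpolates linearly, the same way pandas' quantile() does by default.

```python
import statistics

def iqr_bounds(values, scale=3):
    """Box-plot style bounds: [Q1 - scale*IQR, Q3 + scale*IQR]."""
    # method="inclusive" uses linear interpolation, like pandas' default quantile()
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = scale * (q3 - q1)
    return q1 - iqr, q3 + iqr

def drop_outliers(values, scale=3):
    """Keep only the values that fall inside the IQR bounds."""
    low, up = iqr_bounds(values, scale)
    return [v for v in values if low <= v <= up]

power = [75, 90, 101, 110, 116, 125, 140, 150, 163, 19312]  # toy data with one extreme value
print(iqr_bounds(power), drop_outliers(power))
```

With scale=3 the bounds are deliberately wide, so only truly extreme values such as 19312 are removed. The common box-plot whisker setting of 1.5 would remove more.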

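The other approach is log compression. I also want to bin power into a new "power level" feature, so I build the bins first and compress afterwards:

bin_power = [i * 10 for i in range(31)]  # bin edges 0, 10, ..., 300
data["power_bin"] = pd.cut(data["power"], bin_power, right=False, labels=False)

This splits power into the intervals [0, 10), [10, 20), ..., [290, 300) defined by bin_power. With labels=False, the new feature holds the integer code of each interval, starting at 0 for the lowest one. Values outside the bins, i.e. power of 300 or more, become NaN in power_bin, so they get a separate level, 31:

data['power_bin'] = data['power_bin'].fillna(31)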
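To double-check how pd.cut(..., right=False, labels=False) assigns levels, here is a dependency-free sketch of the same rule using bisect; power_bin is a hypothetical helper. It shows that the codes start at 0 and that level 30 never occurs. This matches the column list further below, where power_bin_29.0 is followed directly by power_bin_31.0.

```python
import bisect

def power_bin(power, width=10, n_bins=30, overflow_label=31):
    """Mimic pd.cut(power, [0, 10, ..., 300], right=False, labels=False) followed by fillna(31)."""
    edges = [i * width for i in range(n_bins + 1)]  # 0, 10, ..., 300
    if power < edges[0] or power >= edges[-1]:
        return overflow_label  # pd.cut yields NaN here, which is then filled with 31
    # right=False means intervals [a, b): bisect_right finds the bin whose left edge <= power
    return bisect.bisect_right(edges, power) - 1

print([power_bin(p) for p in (0, 9, 10, 299, 300, 19312)])
```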

Now power can be compressed with a log transform:

data['power'] = np.log(data['power'] + 1)  # +1 keeps power == 0 finite

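Next, construct new features.

The first is how long the car had been in use, computed in days as creatDate minus regDate:

data["use_time"] = (pd.to_datetime(data['creatDate'], format="%Y%m%d", errors="coerce")
                    - pd.to_datetime(data["regDate"], format="%Y%m%d", errors="coerce")).dt.days
# errors="coerce" turns dates that fail to parse into NaT instead of raising an error

Some dates are missing or invalid, so this leaves missing values. I fill them with the mean. The test set must also be filled with the training-set mean, so I do that later, when the data is split.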
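The same date arithmetic and train-mean imputation can be sketched with the standard library. The sketch assumes dates are stored as YYYYMMDD integers, as in regDate and creatDate. The example values, including the invalid month "00", are made up for illustration, and the helper names are hypothetical.

```python
from datetime import datetime

def parse_yyyymmdd(value):
    """Parse a YYYYMMDD value; return None if it is not a valid date (like errors="coerce")."""
    try:
        return datetime.strptime(str(value), "%Y%m%d")
    except ValueError:
        return None

def use_time_days(reg_date, creat_date):
    """Days between registration and ad creation, or None if either date is invalid."""
    reg, creat = parse_yyyymmdd(reg_date), parse_yyyymmdd(creat_date)
    if reg is None or creat is None:
        return None
    return (creat - reg).days

def fill_with_train_mean(train_vals, test_vals):
    """Fill None in both lists with the mean of the non-missing *training* values only."""
    known = [v for v in train_vals if v is not None]
    mean = sum(known) / len(known)

    def fill(vals):
        return [mean if v is None else v for v in vals]

    return fill(train_vals), fill(test_vals)

train_use_time = [use_time_days(20040402, 20160404), use_time_days(20080005, 20160309)]
test_use_time = [use_time_days(20100715, 20160320), use_time_days(20120001, 20160401)]
print(train_use_time, test_use_time)
print(fill_with_train_mean(train_use_time, test_use_time))
```

Using only the training mean keeps information from the test set out of the preprocessing.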

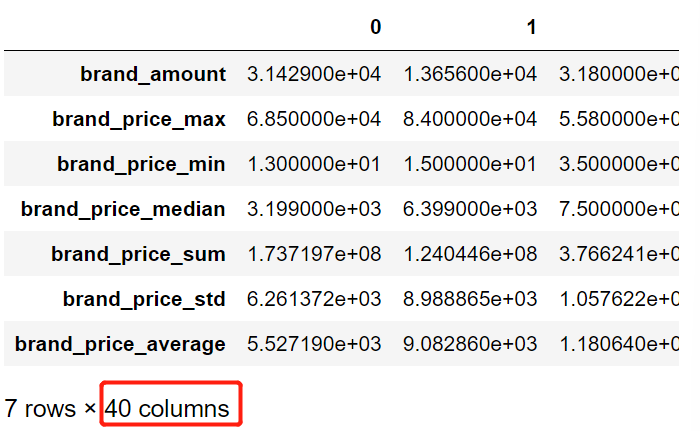

Next, create features from per-brand sales statistics. These require each brand's price mean, maximum, spread and so on. They must therefore be computed on the training set, since test prices are unknown, and then mapped onto every row by brand:

# Compute price statistics for each brand
train_gb = train_data.groupby("brand")
all_info = {}
for kind, kind_data in train_gb:
    info = {}
    kind_data = kind_data[kind_data["price"] > 0]  # drop possible anomalies with non-positive prices
    info["brand_amount"] = len(kind_data)                  # number of cars of this brand
    info["brand_price_max"] = kind_data.price.max()        # maximum price
    info["brand_price_min"] = kind_data.price.min()        # minimum price
    info["brand_price_median"] = kind_data.price.median()  # median price
    info["brand_price_sum"] = kind_data.price.sum()        # total price
    info["brand_price_std"] = kind_data.price.std()        # standard deviation
    # Smoothed mean rounded to 2 decimals; the +1 guards against division by zero
    info["brand_price_average"] = round(kind_data.price.sum() / (len(kind_data) + 1), 2)
    all_info[kind] = info
brand_feature = pd.DataFrame(all_info).T.reset_index().rename(columns={"index": "brand"})

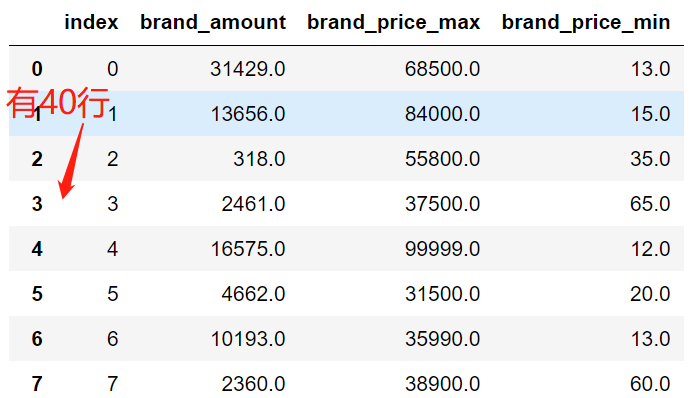

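The way brand_feature is built may look a bit convoluted, so let me go through it step by step:

brand_feature = pd.DataFrame(all_info)
brand_feature

Here the 7 statistics form the index, and there are 40 columns, one per brand.

brand_feature = pd.DataFrame(all_info).T.reset_index()
brand_feature

After transposing and resetting the index, the brand statistics become columns. A new column named index is also added, and it holds the brand values.

brand_feature = pd.DataFrame(all_info).T.reset_index().rename(columns={"index": "brand"})
brand_feature

This last step renames index to brand, so the column holds the brand values and can be merged into data:

data = data.merge(brand_feature, how='left', on='brand')

This matches each row's brand in data to the corresponding row of the statistics table and attaches that brand's statistics as new features.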
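The groupby-then-left-merge pattern can be written out in plain Python. This makes explicit what merge(how='left') does: every row of data is kept, and a brand that never appears in the training statistics simply gets missing values. The sketch below computes a subset of the seven statistics on made-up rows; brand_stats and left_merge are illustrative names.

```python
from collections import defaultdict
import statistics

def brand_stats(train_rows):
    """Per-brand price statistics from training rows with price > 0 (a subset of the seven above)."""
    prices = defaultdict(list)
    for row in train_rows:
        if row["price"] > 0:
            prices[row["brand"]].append(row["price"])
    stats = {}
    for brand, ps in prices.items():
        stats[brand] = {
            "brand_amount": len(ps),
            "brand_price_max": max(ps),
            "brand_price_median": statistics.median(ps),
            # Same smoothed mean as above: sum / (count + 1)
            "brand_price_average": round(sum(ps) / (len(ps) + 1), 2),
        }
    return stats

def left_merge(rows, stats, key="brand"):
    """Like merge(how='left'): keep every row; unseen brands get None for each statistic."""
    stat_names = next(iter(stats.values())).keys()  # assumes at least one brand was seen
    missing = dict.fromkeys(stat_names, None)
    return [{**row, **stats.get(row[key], missing)} for row in rows]

train = [{"brand": 0, "price": 1000}, {"brand": 0, "price": 3000},
         {"brand": 1, "price": 5000}, {"brand": 1, "price": 0}]  # made-up rows
stats = brand_stats(train)
merged = left_merge([{"brand": 0}, {"brand": 7}], stats)
print(merged)
```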

Next, normalize most of the numeric features:

def max_min(x):
    # Min-max scaling to the [0, 1] range
    return (x - np.min(x)) / (np.max(x) - np.min(x))

for feature in ["brand_amount", "brand_price_average", "brand_price_max",
                "brand_price_median", "brand_price_min", "brand_price_std",
                "brand_price_sum", "power", "kilometer"]:
    data[feature] = max_min(data[feature])
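Min-max scaling maps each value to (x - min) / (max - min), so the smallest value becomes 0 and the largest becomes 1. The max_min function above does not handle a constant column, where max - min = 0 and the division produces NaN. Below is a small pure-Python sketch with a guard for that case. The guard, which returns 0.0 for a constant column, is an assumption added for this sketch.

```python
def min_max(values):
    """Scale values to [0, 1]; a constant column (max == min) is mapped to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # assumption: avoid 0/0 for constant columns
    return [(v - lo) / (hi - lo) for v in values]

print(min_max([0, 5, 10]))
print(min_max([3, 3, 3]))
```

Because data here contains both the training and the test rows, the minimum and maximum used for scaling come from both sets.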

Encode the categorical features:

# One-hot encode the categorical features; dummy_na=True adds an extra indicator column for NaN
data = pd.get_dummies(data, columns=['model', 'brand', 'bodyType', 'fuelType', 'gearbox',
                                     'notRepairedDamage', 'power_bin'], dummy_na=True)
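The output of get_dummies(..., dummy_na=True) can be sketched in pure Python. It has one 0/1 column per observed level, plus a "<column>_nan" indicator that is added even when a column has no missing values. That is why columns such as brand_nan appear in the missing-value listing below, although brand has no missing values in the training data. The helper one_hot is hypothetical and the values are made up.

```python
import math

def one_hot(values, prefix):
    """One-hot encode like pd.get_dummies(..., dummy_na=True): one column per level plus '<prefix>_nan'."""
    def is_missing(v):
        return v is None or (isinstance(v, float) and math.isnan(v))

    levels = sorted({v for v in values if not is_missing(v)})
    columns = [f"{prefix}_{lvl}" for lvl in levels] + [f"{prefix}_nan"]  # NaN column always added
    encoded = []
    for v in values:
        row = dict.fromkeys(columns, 0)
        row[f"{prefix}_nan" if is_missing(v) else f"{prefix}_{v}"] = 1
        encoded.append(row)
    return columns, encoded

cols, rows = one_hot([0.0, 1.0, None, 0.0], "gearbox")
print(cols)
print(rows)
```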

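Features that are no longer useful can now be dropped:

data = data.drop(['creatDate', "regDate", "regionCode"], axis=1)

That essentially completes the feature processing. This is only a simple treatment, and deeper feature information is worth exploring (which is beyond me, haha).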

Building the Models

First, prepare the datasets:

use_feature = [x for x in data.columns if x not in ['SaleID', "name", "price", "origin"]]
target = data[data["origin"] == "train"]["price"]
target_lg = np.log(target + 1)  # train on log(price + 1)
train_x = data[data["origin"] == "train"][use_feature].copy()
test_x = data[data["origin"] == "test"][use_feature].copy()
# Fill missing use_time in both sets with the training-set mean
use_time_mean = train_x["use_time"].mean()
train_x["use_time"] = train_x["use_time"].fillna(use_time_mean)
test_x["use_time"] = test_x["use_time"].fillna(use_time_mean)
train_x.shape

(150000, 371)

Let's check whether the test set still has any missing values:

test_x.isnull().sum()

power 0

kilometer 0

v_0 0

v_1 0

v_2 0

v_3 0

v_4 0

v_5 0

v_6 0

v_7 0

v_8 0

v_9 0

v_10 0

v_11 0

v_12 0

v_13 0

v_14 0

use_time 0

brand_amount 0

brand_price_max 0

brand_price_min 0

brand_price_median 0

brand_price_sum 0

brand_price_std 0

brand_price_average 0

model_0.0 0

model_1.0 0

model_2.0 0

model_3.0 0

model_4.0 0

model_5.0 0

model_6.0 0

model_7.0 0

model_8.0 0

model_9.0 0

model_10.0 0

model_11.0 0

model_12.0 0

model_13.0 0

model_14.0 0

model_15.0 0

model_16.0 0

model_17.0 0

model_18.0 0

model_19.0 0

model_20.0 0

model_21.0 0

model_22.0 0

model_23.0 0

model_24.0 0

model_25.0 0

model_26.0 0

model_27.0 0

model_28.0 0

model_29.0 0

model_30.0 0

model_31.0 0

model_32.0 0

model_33.0 0

model_34.0 0

model_35.0 0

model_36.0 0

model_37.0 0

model_38.0 0

model_39.0 0

model_40.0 0

model_41.0 0

model_42.0 0

model_43.0 0

model_44.0 0

model_45.0 0

model_46.0 0

model_47.0 0

model_48.0 0

model_49.0 0

model_50.0 0

model_51.0 0

model_52.0 0

model_53.0 0

model_54.0 0

model_55.0 0

model_56.0 0

model_57.0 0

model_58.0 0

model_59.0 0

model_60.0 0

model_61.0 0

model_62.0 0

model_63.0 0

model_64.0 0

model_65.0 0

model_66.0 0

model_67.0 0

model_68.0 0

model_69.0 0

model_70.0 0

model_71.0 0

model_72.0 0

model_73.0 0

model_74.0 0

model_75.0 0

model_76.0 0

model_77.0 0

model_78.0 0

model_79.0 0

model_80.0 0

model_81.0 0

model_82.0 0

model_83.0 0

model_84.0 0

model_85.0 0

model_86.0 0

model_87.0 0

model_88.0 0

model_89.0 0

model_90.0 0

model_91.0 0

model_92.0 0

model_93.0 0

model_94.0 0

model_95.0 0

model_96.0 0

model_97.0 0

model_98.0 0

model_99.0 0

model_100.0 0

model_101.0 0

model_102.0 0

model_103.0 0

model_104.0 0

model_105.0 0

model_106.0 0

model_107.0 0

model_108.0 0

model_109.0 0

model_110.0 0

model_111.0 0

model_112.0 0

model_113.0 0

model_114.0 0

model_115.0 0

model_116.0 0

model_117.0 0

model_118.0 0

model_119.0 0

model_120.0 0

model_121.0 0

model_122.0 0

model_123.0 0

model_124.0 0

model_125.0 0

model_126.0 0

model_127.0 0

model_128.0 0

model_129.0 0

model_130.0 0

model_131.0 0

model_132.0 0

model_133.0 0

model_134.0 0

model_135.0 0

model_136.0 0

model_137.0 0

model_138.0 0

model_139.0 0

model_140.0 0

model_141.0 0

model_142.0 0

model_143.0 0

model_144.0 0

model_145.0 0

model_146.0 0

model_147.0 0

model_148.0 0

model_149.0 0

model_150.0 0

model_151.0 0

model_152.0 0

model_153.0 0

model_154.0 0

model_155.0 0

model_156.0 0

model_157.0 0

model_158.0 0

model_159.0 0

model_160.0 0

model_161.0 0

model_162.0 0

model_163.0 0

model_164.0 0

model_165.0 0

model_166.0 0

model_167.0 0

model_168.0 0

model_169.0 0

model_170.0 0

model_171.0 0

model_172.0 0

model_173.0 0

model_174.0 0

model_175.0 0

model_176.0 0

model_177.0 0

model_178.0 0

model_179.0 0

model_180.0 0

model_181.0 0

model_182.0 0

model_183.0 0

model_184.0 0

model_185.0 0

model_186.0 0

model_187.0 0

model_188.0 0

model_189.0 0

model_190.0 0

model_191.0 0

model_192.0 0

model_193.0 0

model_194.0 0

model_195.0 0

model_196.0 0

model_197.0 0

model_198.0 0

model_199.0 0

model_200.0 0

model_201.0 0

model_202.0 0

model_203.0 0

model_204.0 0

model_205.0 0

model_206.0 0

model_207.0 0

model_208.0 0

model_209.0 0

model_210.0 0

model_211.0 0

model_212.0 0

model_213.0 0

model_214.0 0

model_215.0 0

model_216.0 0

model_217.0 0

model_218.0 0

model_219.0 0

model_220.0 0

model_221.0 0

model_222.0 0

model_223.0 0

model_224.0 0

model_225.0 0

model_226.0 0

model_227.0 0

model_228.0 0

model_229.0 0

model_230.0 0

model_231.0 0

model_232.0 0

model_233.0 0

model_234.0 0

model_235.0 0

model_236.0 0

model_237.0 0

model_238.0 0

model_239.0 0

model_240.0 0

model_241.0 0

model_242.0 0

model_243.0 0

model_244.0 0

model_245.0 0

model_246.0 0

model_247.0 0

model_nan 0

brand_0.0 0

brand_1.0 0

brand_2.0 0

brand_3.0 0

brand_4.0 0

brand_5.0 0

brand_6.0 0

brand_7.0 0

brand_8.0 0

brand_9.0 0

brand_10.0 0

brand_11.0 0

brand_12.0 0

brand_13.0 0

brand_14.0 0

brand_15.0 0

brand_16.0 0

brand_17.0 0

brand_18.0 0

brand_19.0 0

brand_20.0 0

brand_21.0 0

brand_22.0 0

brand_23.0 0

brand_24.0 0

brand_25.0 0

brand_26.0 0

brand_27.0 0

brand_28.0 0

brand_29.0 0

brand_30.0 0

brand_31.0 0

brand_32.0 0

brand_33.0 0

brand_34.0 0

brand_35.0 0

brand_36.0 0

brand_37.0 0

brand_38.0 0

brand_39.0 0

brand_nan 0

bodyType_0.0 0

bodyType_1.0 0

bodyType_2.0 0

bodyType_3.0 0

bodyType_4.0 0

bodyType_5.0 0

bodyType_6.0 0

bodyType_7.0 0

bodyType_nan 0

fuelType_0.0 0

fuelType_1.0 0

fuelType_2.0 0

fuelType_3.0 0

fuelType_4.0 0

fuelType_5.0 0

fuelType_6.0 0

fuelType_nan 0

gearbox_0.0 0

gearbox_1.0 0

gearbox_nan 0

notRepairedDamage_0.0 0

notRepairedDamage_1.0 0

notRepairedDamage_nan 0

power_bin_0.0 0

power_bin_1.0 0

power_bin_2.0 0

power_bin_3.0 0

power_bin_4.0 0

power_bin_5.0 0

power_bin_6.0 0

power_bin_7.0 0

power_bin_8.0 0

power_bin_9.0 0

power_bin_10.0 0

power_bin_11.0 0

power_bin_12.0 0

power_bin_13.0 0

power_bin_14.0 0

power_bin_15.0 0

power_bin_16.0 0

power_bin_17.0 0

power_bin_18.0 0

power_bin_19.0 0

power_bin_20.0 0

power_bin_21.0 0

power_bin_22.0 0

power_bin_23.0 0

power_bin_24.0 0

power_bin_25.0 0

power_bin_26.0 0

power_bin_27.0 0

power_bin_28.0 0

power_bin_29.0 0

power_bin_31.0 0

power_bin_nan 0

dtype: int64

No missing values remain, so we can move on to choosing models.

For practical reasons (my computer can't run XGBoost), I chose three models: LightGBM, a random forest and gradient boosted decision trees. I then combine them with model stacking. The code is as follows:

# Models, cross-validation splitters and the evaluation metric used below
import lightgbm as lgb
from sklearn.ensemble import RandomForestRegressor as rfr
from sklearn.ensemble import GradientBoostingRegressor as gbr
from sklearn.linear_model import LinearRegression as lr
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import KFold, StratifiedKFold, RepeatedKFold, train_test_split

LightGBM:

lgb_param = {  # training parameters
    "num_leaves": 7,
    "min_data_in_leaf": 20,      # minimum number of samples in a leaf; helps against overfitting
    "objective": "regression",   # regression task
    "max_depth": -1,             # maximum tree depth; -1 means no limit
    "learning_rate": 0.003,
    "boosting": "gbdt",          # gradient boosted decision trees
    "feature_fraction": 0.50,    # each iteration builds trees on a random 50% of the features
    "bagging_freq": 1,           # perform bagging at every iteration
    "bagging_fraction": 0.55,    # each bagging round samples 55% of the rows without replacement
    "bagging_seed": 1,
    "metric": "mean_absolute_error",
    "lambda_l1": 0.5,
    "lambda_l2": 0.5,
    "verbosity": -1              # -1 suppresses most log messages
}

# Shuffled 5-way split of the data for 5-fold cross-validation
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=4)
valid_lgb = np.zeros(len(train_x))        # out-of-fold predictions (log scale)
predictions_lgb = np.zeros(len(test_x))   # test predictions averaged over the folds
for fold_, (train_idx, valid_idx) in enumerate(folds.split(train_x, target)):
    # train_idx / valid_idx are the positional indices of this fold's training and validation rows
    print("fold n°{}".format(fold_ + 1))  # current fold
    # Slice out the fold and wrap it as LightGBM datasets
    train_data_now = lgb.Dataset(train_x.iloc[train_idx], target_lg.iloc[train_idx])
    valid_data_now = lgb.Dataset(train_x.iloc[valid_idx], target_lg.iloc[valid_idx])
    num_round = 10000
    # LightGBM 4.0 removed the verbose_eval/early_stopping_rounds arguments; these callbacks also work in 3.3+
    lgb_model = lgb.train(lgb_param, train_data_now, num_round,
                          valid_sets=[train_data_now, valid_data_now],
                          callbacks=[lgb.early_stopping(800), lgb.log_evaluation(500)])
    valid_lgb[valid_idx] = lgb_model.predict(train_x.iloc[valid_idx],
                                             num_iteration=lgb_model.best_iteration)
    # Average the test-set predictions of the fold models
    predictions_lgb += lgb_model.predict(test_x, num_iteration=lgb_model.best_iteration) / folds.n_splits
print("CV score: {:<8.8f}".format(mean_absolute_error(target_lg, valid_lgb)))

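Note that I pass target to split, while the model is trained on target_lg. target holds the raw integer prices, which scikit-learn accepts as discrete class labels. It also warns that many prices occur fewer than 5 times, but that warning is hidden by warnings.filterwarnings('ignore'). Passing target_lg to split instead fails with:

Supported target types are: ('binary', 'multiclass'). Got 'continuous' instead.

This happens because np.log turns the prices into non-integer floats. scikit-learn's target-type check classifies these as continuous rather than multiclass, and StratifiedKFold can only stratify on class labels. That is why I generate the fold indices from target. Plain KFold, used for the random forest below, ignores the labels and avoids the issue.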

CV score: 0.15345674
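The out-of-fold array valid_lgb and the fold-averaged test predictions follow a general pattern that the stacking step relies on. Every training row is predicted by a model that never saw it, and the test set gets the average of the fold models' predictions. Below is a dependency-free sketch of that bookkeeping. It assumes a placeholder "model" that simply predicts the training-fold mean. The names kfold_indices and oof_and_test_predictions are illustrative, not scikit-learn or LightGBM APIs.

```python
import random

def kfold_indices(n, n_splits=5, seed=0):
    """Shuffle 0..n-1 and split into n_splits validation folds (like KFold(shuffle=True))."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # The first n % n_splits folds get one extra sample, as in scikit-learn's KFold
    sizes = [n // n_splits + (1 if i < n % n_splits else 0) for i in range(n_splits)]
    start = 0
    for size in sizes:
        valid = sorted(idx[start:start + size])
        valid_set = set(valid)
        train = [i for i in range(n) if i not in valid_set]
        yield train, valid
        start += size

def oof_and_test_predictions(y, n_test, n_splits=5):
    """Out-of-fold predictions for the training rows and fold-averaged predictions for test rows.

    The 'model' is a placeholder that predicts the mean of its training fold.
    """
    oof = [0.0] * len(y)
    test_pred = [0.0] * n_test
    for train_idx, valid_idx in kfold_indices(len(y), n_splits):
        fold_mean = sum(y[i] for i in train_idx) / len(train_idx)  # "fit"
        for i in valid_idx:
            oof[i] = fold_mean                                     # predict held-out rows only
        for j in range(n_test):
            test_pred[j] += fold_mean / n_splits                   # average over the fold models
    return oof, test_pred

oof, test_pred = oof_and_test_predictions([float(v) for v in range(10)], n_test=2)
print(oof, test_pred)
```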

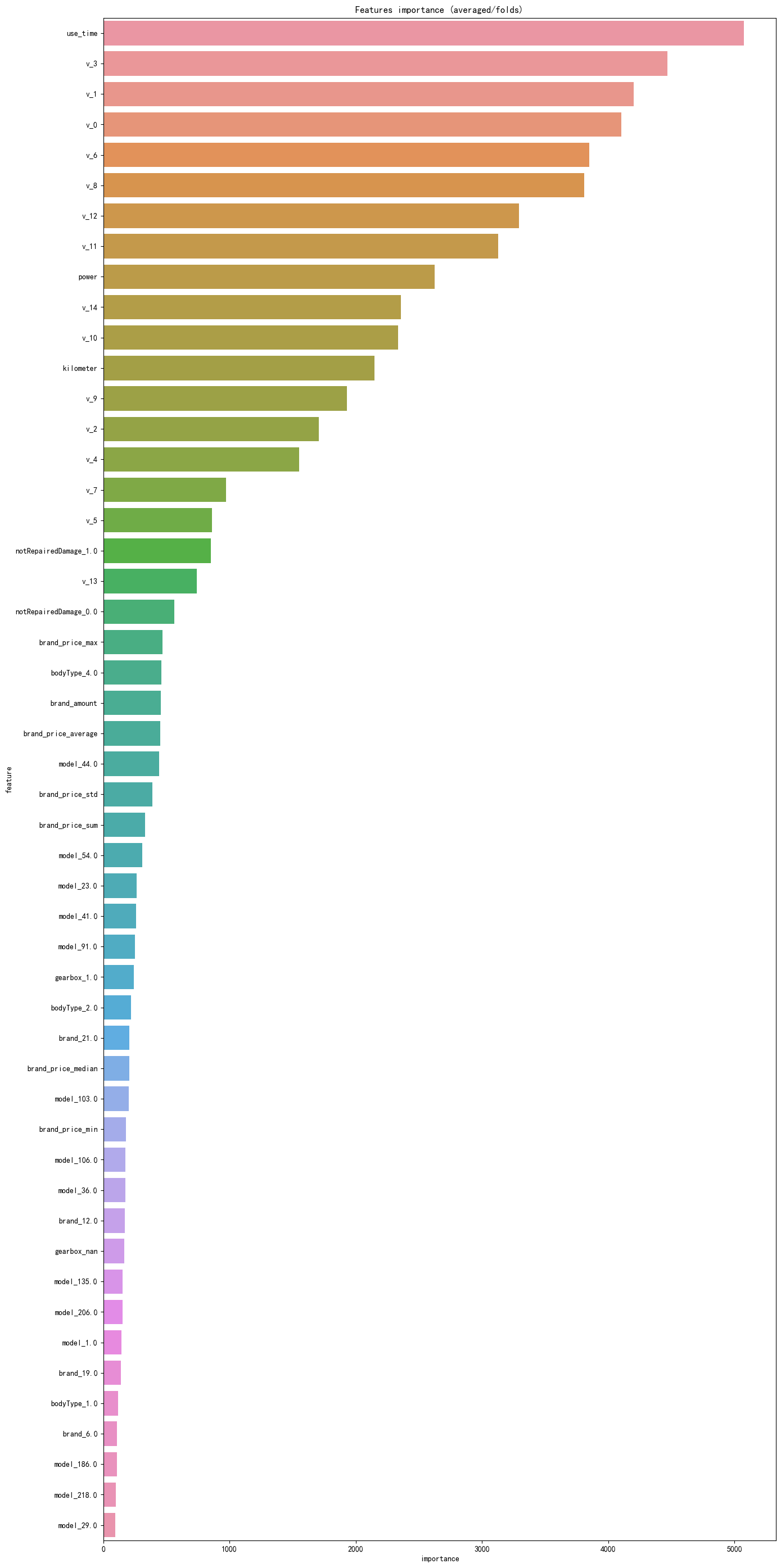

Let's also look at the feature importances:

pd.set_option("display.max_columns", None)  # None = show all columns instead of truncating with "..."
pd.set_option("display.max_rows", None)     # None = show all rows
pd.set_option("display.max_colwidth", 100)  # show up to 100 characters per cell (default 50)
# A DataFrame with one column listing the features used for training
df = pd.DataFrame(train_x.columns.tolist(), columns=['feature'])
# Split-count importances of the model from the last fold
df['importance'] = list(lgb_model.feature_importance())
df = df.sort_values(by='importance', ascending=False)  # sort in descending order
plt.figure(figsize=(14, 28))
sns.barplot(x='importance', y='feature', data=df.head(50))  # plot the top 50 features
plt.title('Feature importance (last fold model)')
plt.tight_layout()  # adjust the layout to fit

use_time is far ahead of every other feature.

Random forest:

# RandomForestRegressor
folds = KFold(n_splits=5, shuffle=True, random_state=2019)
valid_rfr = np.zeros(len(train_x))
predictions_rfr = np.zeros(len(test_x))
for fold_, (trn_idx, val_idx) in enumerate(folds.split(train_x, target)):
    print("fold n°{}".format(fold_ + 1))
    tr_x = train_x.iloc[trn_idx]
    tr_y = target_lg.iloc[trn_idx]
    rfr_model = rfr(n_estimators=1600, max_depth=9, min_samples_leaf=9,
                    min_weight_fraction_leaf=0.0, max_features=0.25,
                    verbose=1,   # 0 = silent; higher values print more progress information
                    n_jobs=-1)   # train trees in parallel on all CPU cores
    rfr_model.fit(tr_x, tr_y)
    valid_rfr[val_idx] = rfr_model.predict(train_x.iloc[val_idx])
    predictions_rfr += rfr_model.predict(test_x) / folds.n_splits
print("CV score: {:<8.8f}".format(mean_absolute_error(target_lg, valid_rfr)))

CV score: 0.17160127

Gradient boosting:

# GradientBoostingRegressor (gradient boosted decision trees)
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=2018)
valid_gbr = np.zeros(len(train_x))
predictions_gbr = np.zeros(len(test_x))
for fold_, (trn_idx, val_idx) in enumerate(folds.split(train_x, target)):
    print("fold n°{}".format(fold_ + 1))
    tr_x = train_x.iloc[trn_idx]
    tr_y = target_lg.iloc[trn_idx]
    gbr_model = gbr(n_estimators=100, learning_rate=0.1, subsample=0.65, max_depth=7,
                    min_samples_leaf=20, max_features=0.22, verbose=1)
    gbr_model.fit(tr_x, tr_y)
    valid_gbr[val_idx] = gbr_model.predict(train_x.iloc[val_idx])
    predictions_gbr += gbr_model.predict(test_x) / folds.n_splits
print("CV score: {:<8.8f}".format(mean_absolute_error(target_lg, valid_gbr)))

CV score: 0.14386158

Next, combine the three models with linear regression as the stacking meta-model:

train_stack2 = np.vstack([valid_lgb, valid_rfr, valid_gbr]).transpose()
test_stack2 = np.vstack([predictions_lgb, predictions_rfr, predictions_gbr]).transpose()
# Cross-validation: 5 folds, repeated twice (10 fits in total)
folds_stack = RepeatedKFold(n_splits=5, n_repeats=2, random_state=7)
valid_stack2 = np.zeros(train_stack2.shape[0])
predictions_lr2 = np.zeros(test_stack2.shape[0])
for fold_, (trn_idx, val_idx) in enumerate(folds_stack.split(train_stack2, target)):
    print("fold {}".format(fold_))
    trn_data, trn_y = train_stack2[trn_idx], target_lg.iloc[trn_idx].values
    val_data, val_y = train_stack2[val_idx], target_lg.iloc[val_idx].values
    # Linear regression meta-model
    lr2 = lr()
    lr2.fit(trn_data, trn_y)
    valid_stack2[val_idx] = lr2.predict(val_data)     # the second repeat overwrites the first
    predictions_lr2 += lr2.predict(test_stack2) / 10  # average over 5 folds x 2 repeats
print("CV score: {:<8.8f}".format(mean_absolute_error(target_lg.values, valid_stack2)))

CV score: 0.14343221
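The meta-model here is ordinary least squares with an intercept, fitted on the three columns of out-of-fold predictions. To show what LinearRegression is fitting, here is a small pure-Python sketch that solves the normal equations by Gauss-Jordan elimination. scikit-learn itself uses a different, numerically more robust least-squares solver. The helper names are hypothetical and the base-model predictions are made up.

```python
def fit_linear(X, y):
    """Least squares with intercept, solving the normal equations (A^T A) w = A^T y."""
    A = [[1.0] + list(row) for row in X]  # prepend an intercept column
    k = len(A[0])
    # Augmented matrix [A^T A | A^T y]
    M = [[sum(a[r] * a[c] for a in A) for c in range(k)]
         + [sum(a[r] * t for a, t in zip(A, y))] for r in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(k):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    return [M[r][k] / M[r][r] for r in range(k)]  # [intercept, w_1, ..., w_p]

def predict_linear(w, X):
    """Apply the fitted intercept and weights to each row of X."""
    return [w[0] + sum(wi * xi for wi, xi in zip(w[1:], row)) for row in X]

# Made-up out-of-fold predictions of three base models (columns: lgb, rfr, gbr)
X = [[1, 2, 0], [2, 1, 1], [3, 0, 2], [0, 1, 3], [2, 2, 2], [1, 0, 1]]
y = [0.1 + 0.5 * a + 0.3 * b + 0.2 * c for a, b, c in X]  # exact linear target
w = fit_linear(X, y)
print(w)
```

The fitted weights indicate how much each base model contributes to the blend. For real data they are not constrained to be positive or to sum to 1.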

Finally, apply exp and subtract 1 to turn the predictions back into actual prices, and submit!

prediction_test = np.exp(predictions_lr2) - 1  # invert the log(price + 1) transform
test_submission = pd.read_csv("used_car_testB_20200421.csv", sep=" ")
test_submission["price"] = prediction_test
feature_submission = ["SaleID", "price"]
sub = test_submission[feature_submission]
sub.to_csv("mysubmission.csv", index=False)
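The models were trained on log(price + 1), so the submission applies the inverse, exp(prediction) - 1. The standard library's math.log1p and math.expm1 compute exactly these two transforms, as do NumPy's np.log1p and np.expm1, and they stay accurate for small values. The short sketch below uses made-up prices. It also shows a side effect worth knowing: averaging predictions in log space and converting back gives a geometric-mean-like value, not the arithmetic mean of the prices.

```python
import math

def to_log_target(price):
    """Training target: log(price + 1)."""
    return math.log1p(price)

def from_log_target(pred):
    """Inverse transform used for the submission: exp(pred) - 1."""
    return math.expm1(pred)

prices = [11, 3500, 99999]  # made-up prices
round_trip = [from_log_target(to_log_target(p)) for p in prices]
print(round_trip)

# Average two predictions in log space, then convert back to a price
blended = from_log_target((to_log_target(1000) + to_log_target(9000)) / 2)
print(blended)  # well below the arithmetic mean of 5000
```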

The parameters above were set by hand. Next, I tune LightGBM to see whether it can achieve a better result:

# Tune LightGBM
# Build the datasets: hold out 20%, and tune with CV on the remaining 80%
train_y = target_lg
x_train, x_valid, y_train, y_valid = train_test_split(train_x, train_y,
                                                      random_state=1, test_size=0.2)
# Convert to LightGBM datasets; free_raw_data=False keeps the raw data after construction
lgb_train = lgb.Dataset(x_train, y_train, free_raw_data=False)
lgb_valid = lgb.Dataset(x_valid, y_valid, reference=lgb_train, free_raw_data=False)
# Initial parameters
params = {
    "boosting_type": "gbdt",
    "objective": "regression",
    "metric": "mae",
    "nthread": 4,
    "learning_rate": 0.1,
    "verbosity": -1
}

def cv_mae(params, stopping_rounds=10):
    """5-fold lgb.cv; returns the lowest mean validation MAE over the boosting rounds."""
    cv_results = lgb.cv(params, lgb_train, seed=1, nfold=5, metrics=["mae"],
                        stratified=False,  # the target is continuous, see the note below
                        callbacks=[lgb.early_stopping(stopping_rounds, verbose=False)])
    # The key is "l1-mean" in LightGBM 3.x and "valid l1-mean" in 4.x
    key = next(k for k in cv_results if k.endswith("l1-mean"))
    return min(cv_results[key])  # MAE is an error: lower is better

def keep_best(keys):
    """Use the best value found for each key, or fall back to LightGBM's default."""
    for k in keys:
        if k in best_params:
            params[k] = best_params[k]
        else:
            params.pop(k, None)

# Cross-validated, stage-by-stage tuning
print("Cross-validation")
min_mae = float("inf")
best_params = {}

print("Stage 1: improve accuracy")
for num_leaves in range(5, 100, 5):
    for max_depth in range(3, 10, 1):
        params["num_leaves"] = num_leaves
        params["max_depth"] = max_depth
        mean_mae = cv_mae(params, stopping_rounds=15)
        if mean_mae <= min_mae:
            min_mae = mean_mae
            best_params["num_leaves"] = num_leaves
            best_params["max_depth"] = max_depth
keep_best(["num_leaves", "max_depth"])

print("Stage 2: reduce overfitting")
for max_bin in range(5, 256, 10):
    for min_data_in_leaf in range(1, 102, 10):
        params["max_bin"] = max_bin
        params["min_data_in_leaf"] = min_data_in_leaf
        mean_mae = cv_mae(params)
        if mean_mae <= min_mae:
            min_mae = mean_mae
            best_params["max_bin"] = max_bin
            best_params["min_data_in_leaf"] = min_data_in_leaf
keep_best(["max_bin", "min_data_in_leaf"])

print("Stage 3: reduce overfitting")
for feature_fraction in [0.6, 0.7, 0.8, 0.9, 1.0]:
    for bagging_fraction in [0.6, 0.7, 0.8, 0.9, 1.0]:
        for bagging_freq in range(0, 50, 5):
            params["feature_fraction"] = feature_fraction
            params["bagging_fraction"] = bagging_fraction
            params["bagging_freq"] = bagging_freq
            mean_mae = cv_mae(params)
            if mean_mae <= min_mae:
                min_mae = mean_mae
                best_params["feature_fraction"] = feature_fraction
                best_params["bagging_fraction"] = bagging_fraction
                best_params["bagging_freq"] = bagging_freq
keep_best(["feature_fraction", "bagging_fraction", "bagging_freq"])

print("Stage 4: reduce overfitting")
for lambda_l1 in [1e-5, 1e-3, 1e-1, 0.0, 0.1, 0.3, 0.5, 0.7, 0.9, 1.0]:
    for lambda_l2 in [1e-5, 1e-3, 1e-1, 0.0, 0.1, 0.4, 0.6, 0.7, 0.9, 1.0]:
        params["lambda_l1"] = lambda_l1
        params["lambda_l2"] = lambda_l2
        mean_mae = cv_mae(params)
        if mean_mae <= min_mae:
            min_mae = mean_mae
            best_params["lambda_l1"] = lambda_l1
            best_params["lambda_l2"] = lambda_l2
keep_best(["lambda_l1", "lambda_l2"])

print("Stage 5: reduce overfitting (2)")
for min_split_gain in [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]:
    params["min_split_gain"] = min_split_gain
    mean_mae = cv_mae(params)
    if mean_mae <= min_mae:
        min_mae = mean_mae
        best_params["min_split_gain"] = min_split_gain
keep_best(["min_split_gain"])
print(best_params)

Note that lgb.cv must be called with stratified=False, for the same continuous-versus-discrete reason discussed earlier. Also, MAE is an error metric, so each stage should keep the settings with the lowest cross-validated MAE. The run reported in this article produced the following parameters (a rerun may give different values):

{'num_leaves': 95, 'max_depth': 9, 'max_bin': 215, 'min_data_in_leaf': 71, 'feature_fraction': 1.0, 'bagging_fraction': 1.0, 'bagging_freq': 45, 'lambda_l1': 0.0, 'lambda_l2': 0.0, 'min_split_gain': 1.0}
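The tuning procedure above is a staged, or coordinate-wise, grid search. Each stage searches a small grid for one group of parameters while the others stay fixed, then carries the winners forward. The sketch below captures that pattern in plain Python. toy_mae is a made-up stand-in for an lgb.cv run, and staged_search is a hypothetical helper. Because MAE is an error, the search keeps the lowest score.

```python
import itertools

def staged_search(score, stages, init):
    """Tune one group of parameters at a time, keeping the combination with the lowest score."""
    params = dict(init)
    best_score = score(params)
    for grid in stages:  # e.g. {"num_leaves": [...], "max_depth": [...]}
        names = list(grid)
        for combo in itertools.product(*(grid[n] for n in names)):
            candidate = {**params, **dict(zip(names, combo))}
            s = score(candidate)
            if s < best_score:  # minimise an error metric, never maximise it
                best_score, params = s, candidate
    return params, best_score

def toy_mae(p):
    """Made-up stand-in for a cross-validated MAE, smallest near num_leaves=31, max_depth=7, lambda_l1=0.5."""
    return (p["num_leaves"] - 31) ** 2 / 100 + (p["max_depth"] - 7) ** 2 + (p["lambda_l1"] - 0.5) ** 2

stages = [
    {"num_leaves": range(5, 100, 5), "max_depth": range(3, 10)},
    {"lambda_l1": [0.0, 0.1, 0.5, 1.0]},
]
best, score = staged_search(toy_mae, stages, {"num_leaves": 5, "max_depth": 3, "lambda_l1": 0.0})
print(best, score)
```

A staged search needs far fewer evaluations than one full grid over all parameters at once. The cost is that it may miss interactions between parameters tuned in different stages.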

Then use these parameters to make predictions:

best_params["verbosity"] = -1
# Shuffled 5-way split of the data for 5-fold cross-validation
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=4)
valid_lgb = np.zeros(len(train_x))
predictions_lgb = np.zeros(len(test_x))
for fold_, (train_idx, valid_idx) in enumerate(folds.split(train_x, target)):
    # Positional indices of this fold's training and validation rows
    print("fold n°{}".format(fold_ + 1))  # current fold
    # Slice out the fold and wrap it as LightGBM datasets
    train_data_now = lgb.Dataset(train_x.iloc[train_idx], target_lg.iloc[train_idx])
    valid_data_now = lgb.Dataset(train_x.iloc[valid_idx], target_lg.iloc[valid_idx])
    num_round = 10000
    lgb_model = lgb.train(best_params, train_data_now, num_round,
                          valid_sets=[train_data_now, valid_data_now],
                          callbacks=[lgb.early_stopping(800), lgb.log_evaluation(500)])
    valid_lgb[valid_idx] = lgb_model.predict(train_x.iloc[valid_idx],
                                             num_iteration=lgb_model.best_iteration)
    # Average the test-set predictions of the fold models
    predictions_lgb += lgb_model.predict(test_x, num_iteration=lgb_model.best_iteration) / folds.n_splits
print("CV score: {:<8.8f}".format(mean_absolute_error(target_lg, valid_lgb)))

CV score: 0.14548046

Stacking the models again with the same code gives:

CV score: 0.14071899

Done!