Classic OpenCV Case Studies in C++

Four Hands-On Case Studies

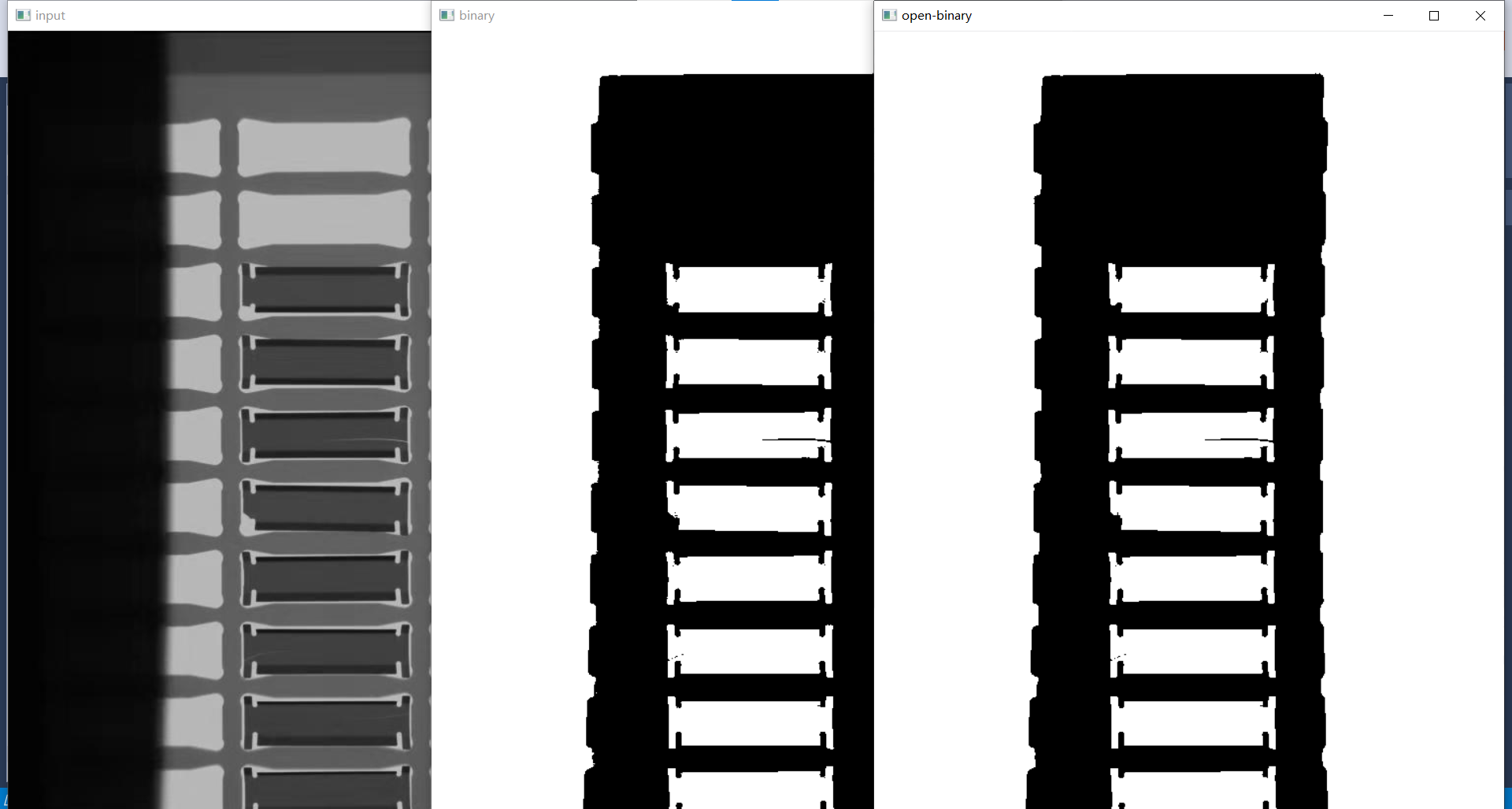

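1. Blade defect detection

2. Custom object detection

3. Real-time QR code detection

4. Image segmentation and color extraction

1. Blade Defect Detection

Problem analysis

Approach

- Try binary image analysis

- Template matching

Code implementation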

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

Mat tpl;

void sort_box(vector<Rect> &boxes);

void detect_defect(Mat &binary, vector<Rect> rects, vector<Rect> &defect);

int main(int argc, char** argv) {

Mat src = imread("D:/images/ce_01.jpg");

if (src.empty()) {

printf("could not load image file...");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

// Binarize the image

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU); // global threshold chosen by Otsu's method

imshow("binary", binary);

// Define a structuring element and apply opening to remove small noise points

Mat se = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

morphologyEx(binary, binary, MORPH_OPEN, se);

imshow("open-binary", binary);

// Find contours

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

vector<Rect> rects;

findContours(binary, contours, hierarchy, RETR_LIST, CHAIN_APPROX_SIMPLE);

int height = src.rows;

for (size_t t = 0; t < contours.size(); t++) {

Rect rect = boundingRect(contours[t]);

double area = contourArea(contours[t]);

if (rect.height > (height / 2)) {

continue;

}

if (area < 150) {

continue;

}

rects.push_back(rect); // rects grows as needed; append every rectangle that passes the filters

//rectangle(src, rect, Scalar(0, 255, 0), 2, 8, 0); // draw the rectangle

//drawContours(src, contours, t, Scalar(0, 0, 255), 2, 8); // draw the contour

}

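sort_box(rects);

if (rects.size() < 2) { // the template below needs at least two blade regions

printf("not enough blade regions found...\n");

return -1;

}

tpl = binary(rects[1]); // use the second blade region as the template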

//for (int i = 0; i < rects.size(); i++) {

// putText(src, format("%d", i), rects[i].tl(), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1, 8);

//}

vector<Rect> defects;

detect_defect(binary, rects, defects);

for (size_t i = 0; i < defects.size(); i++) { // draw every detected defect region

rectangle(src, defects[i], Scalar(0, 0, 255), 2, 8, 0);

putText(src, "bad", defects[i].tl(), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1, 8);

}

imshow("result", src);

waitKey(0);

return 0;

}

void sort_box(vector<Rect> &boxes) {

int size = boxes.size();

for (int i = 0; i < size; i++) {

for (int j = i; j < size; j++) {

int x = boxes[j].x;

int y = boxes[j].y;

if (y < boxes[i].y) {

Rect temp = boxes[i];

boxes[i] = boxes[j];

boxes[j] = temp;

}

}

}

}

void detect_defect(Mat &binary, vector<Rect> rects, vector<Rect> &defect) {

int h = tpl.rows;

int w = tpl.cols;

int size = rects.size();

for (int i = 0; i < size; i++) {

// Build the difference image

Mat roi = binary(rects[i]);

resize(roi, roi, tpl.size()); // resize the ROI to the template size

Mat mask;

subtract(tpl, roi, mask);

Mat se = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1)); // opening removes tiny differences

morphologyEx(mask, mask, MORPH_OPEN, se);

threshold(mask, mask, 0, 255, THRESH_BINARY); // binarize the resulting mask

imshow("mask", mask);

waitKey(0);

// Count the differing pixels in the diff mask

int count = 0;

for (int row = 0; row < h; row++) {

for (int col = 0; col < w; col++) {

int pv = mask.at<uchar>(row, col); // read each pixel; count it when it equals 255

if (pv == 255) {

count++;

}

}

}

// Pad the mask with a one-pixel border

int mh = mask.rows + 2;

int mw = mask.cols + 2;

Mat m1 = Mat::zeros(Size(mw, mh), mask.type());

Rect mroi; // mask is copied into the mroi region of m1, leaving a one-pixel border of zeros on every side

mroi.x = 1;

mroi.y = 1;

mroi.height = mask.rows;

mroi.width = mask.cols;

mask.copyTo(m1(mroi));

// Contour analysis: filter the differences found in each rectangle

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(m1, contours, hierarchy, RETR_LIST, CHAIN_APPROX_SIMPLE); // contours of the small difference regions in this rectangle

bool find = false;

for (size_t t = 0; t < contours.size(); t++) { // set find = true if any difference region passes the filters

Rect rect = boundingRect(contours[t]);

float ratio = (float)rect.width / ((float)rect.height); // aspect ratio of the bounding box

// Skip difference regions with aspect ratio > 4 that lie along the top or bottom edge

if (ratio > 4.0 && (rect.y < 5 || (m1.rows - (rect.height + rect.y)) < 10)) { // white strips along the edge are not defects

continue;

}

double area = contourArea(contours[t]);

if (area > 10) {

printf("ratio:%.2f,area:%.2f \n", ratio, area);

find = true;

}

}

if (count > 50 && find) { // more than 50 differing pixels and a qualifying region: record this rectangle as defective

printf("count:%d \n", count);

defect.push_back(rects[i]);

}

}

// Results are returned through the defect parameter

}

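Results:

1. Binarize the image and apply opening

2. Extract each blade region and sort the regions

3. Filter defective blades by the number of pixels that differ from the template

4. Filter defective blades by the aspect ratio, position and pixel count of the regions that differ from the template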
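The pixel-count filter in step 3 can be illustrated without OpenCV. The following is a minimal sketch, assuming binary images stored as flat 0/255 arrays; `BinaryImage`, `count_missing_pixels`, `looks_defective` and the threshold argument are hypothetical names chosen for illustration, not part of the listing above. It mimics what the saturating `subtract(tpl, roi, mask)` leaves behind: pixels that are white in the template but black in the candidate region.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// A binary image stored row-major; every pixel is either 0 or 255.
struct BinaryImage {
    int rows;
    int cols;
    std::vector<std::uint8_t> data;
};

// Counts pixels that are white in the template but black in the candidate,
// i.e. the pixels that survive a saturating "template - candidate".
int count_missing_pixels(const BinaryImage& tpl, const BinaryImage& roi) {
    assert(tpl.rows == roi.rows && tpl.cols == roi.cols);
    int count = 0;
    for (std::size_t i = 0; i < tpl.data.size(); ++i) {
        if (tpl.data[i] == 255 && roi.data[i] == 0) {
            ++count;
        }
    }
    return count;
}

// Hypothetical decision rule: flag the region once enough pixels are missing.
bool looks_defective(const BinaryImage& tpl, const BinaryImage& roi, int min_pixels) {
    return count_missing_pixels(tpl, roi) > min_pixels;
}
```

In the listing above the equivalent count is compared against 50 and combined with the contour filters before a blade is reported as defective.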

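2. Custom Object Detection

Approach

- Object detection approaches in OpenCV

- Template matching

- Feature matching

- Features + machine learning

- Choose HOG features + an SVM to build the model

- Sliding-window detection

HOG features

- Grayscale conversion

- Gradient computation

- Histograms of gradient orientation per cell

- Block descriptors

- Block descriptor normalization

- Feature data and the detection window

- Matching method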

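- Depending on the block shape, HOG features are divided into C-HOG (circular blocks) and R-HOG (rectangular blocks)

- Block descriptors are normalized with the L2 norm, which provides the normalization factor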
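As an illustration of L2 block normalization, each block vector v is commonly scaled to v / sqrt(||v||^2 + eps^2). The sketch below assumes that form; the function name `l2_normalize_block` and the default `eps` value are assumptions made for this example rather than values taken from the source.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// L2-normalizes one block descriptor in place: v <- v / sqrt(||v||^2 + eps^2).
// eps is a small constant (an assumed value here) that avoids division by zero.
void l2_normalize_block(std::vector<float>& block, float eps = 1e-3f) {
    assert(eps > 0.0f);
    float sum_sq = 0.0f;
    for (float v : block) {
        sum_sq += v * v;
    }
    const float factor = 1.0f / std::sqrt(sum_sq + eps * eps);
    for (float& v : block) {
        v *= factor;
    }
}
```

After normalization, blocks taken from brightly and dimly lit regions have comparable magnitudes, which is the point of this step.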

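A brief introduction to SVM

- Map linearly inseparable data to a space where it becomes linearly separable

- Kernel functions: linear, Gaussian, polynomial, etc.

First, when an SVM meets samples whose distribution is messy, it can lift them to a higher dimension. Turning a problem that is hard to handle in two dimensions into a three-dimensional one is often a good approach.

In addition, an SVM separates the data in a principled way: it computes the position of the decision boundary by solving an optimization problem that maximizes the margin. Once the boundary is fixed, the regions on either side follow, which makes classifying new samples much easier.

Lifting the data to a higher dimension relies on a kernel function, explained as follows:

When the samples are not linearly separable in the original space, they can be mapped to a higher-dimensional feature space in which they become linearly separable. With such a mapping, solving the dual problem does not require the mapping function itself; knowing its kernel function is enough.

Definition of the kernel function: K(x,y)=<ϕ(x),ϕ(y)>, that is, the inner product of two samples in the feature space equals the value that the kernel function K computes from them in the original sample space. On the one hand, the data becomes linearly separable in the higher-dimensional space; on the other hand, there is no need to find the explicit mapping function, only to choose a concrete kernel, which greatly reduces the difficulty of the problem.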
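A small numerical check can make the kernel identity concrete. The sketch below uses the degree-2 polynomial kernel K(x, y) = (x·y)^2 on 2-D inputs, whose explicit feature map is ϕ(x) = (x1^2, sqrt(2)·x1·x2, x2^2). This kernel and the names `phi`, `dot3` and `poly2_kernel` are chosen for illustration only and are not used in the listing that follows.

```cpp
#include <array>
#include <cmath>

using Vec2 = std::array<double, 2>;
using Vec3 = std::array<double, 3>;

// Explicit feature map phi: R^2 -> R^3 for the degree-2 polynomial kernel.
Vec3 phi(const Vec2& x) {
    return {x[0] * x[0], std::sqrt(2.0) * x[0] * x[1], x[1] * x[1]};
}

// Ordinary inner product in the 3-D feature space.
double dot3(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Kernel evaluated directly in the original 2-D space: K(x, y) = (x . y)^2.
double poly2_kernel(const Vec2& x, const Vec2& y) {
    const double d = x[0] * y[0] + x[1] * y[1];
    return d * d;
}
```

For x = (1, 2) and y = (3, 4), both `poly2_kernel(x, y)` and `dot3(phi(x), phi(y))` evaluate to 121 up to floating-point rounding, so the kernel gives the feature-space inner product without ever constructing ϕ.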

Code implementation

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace cv::ml;

using namespace std;

string positive_dir = "D:/images/elec_watchzip/elec_watch/positive";

string negative_dir = "D:/images/elec_watchzip/elec_watch/negative";

void get_hog_descriptor(Mat &image, vector<float> &desc);

void generate_dataset(Mat &trainData, Mat &labels);

void svm_train(Mat &trainData, Mat &labels);

int main(int argc, char** argv) {

//read data and generate dataset

Mat trainData = Mat::zeros(Size(3780, 26), CV_32FC1);

Mat labels = Mat::zeros(Size(1, 26), CV_32SC1);

generate_dataset(trainData, labels);

//SVM train and save model

svm_train(trainData, labels);

//load model

Ptr<SVM> svm = SVM::load("D:/images/elec_watchzip/elec_watch/hog_elec.xml"); // load the trained model

//detect custom object

Mat test = imread("D:/images/elec_watchzip/elec_watch/test/scene_01.jpg");

resize(test, test, Size(0, 0), 0.2, 0.2); // resize the image; dsize and (fx, fy) must not both be zero

imshow("input", test);

Rect winRect;

winRect.width = 64;

winRect.height = 128;

int sum_x = 0;

int sum_y = 0;

int count = 0;

// Sliding-window detection

for (int row = 64; row < test.rows - 64; row += 4) {

for (int col = 32; col < test.cols - 32; col += 4) {

winRect.x = col - 32;

winRect.y = row - 64;

vector<float> fv;

Mat img = test(winRect);

get_hog_descriptor(img, fv);

Mat one_row = Mat::zeros(Size(fv.size(), 1), CV_32FC1);

for (int i = 0; i < fv.size(); i++) {

one_row.at<float>(0, i) = fv[i];

}

float result = svm->predict(one_row);

if (result > 0) {

//rectangle(test, winRect, Scalar(0, 0, 255), 1, 8, 0);

count += 1;

sum_x += winRect.x;

sum_y += winRect.y;

}

}

}

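// Draw the averaged box

if (count > 0) { // avoid dividing by zero when no window was classified as positive

winRect.x = sum_x / count;

winRect.y = sum_y / count;

rectangle(test, winRect, Scalar(255, 0, 0), 2, 8, 0);

}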

imshow("object detection result", test);

waitKey(0);

return 0;

}

void get_hog_descriptor(Mat &image, vector<float> &desc) {

HOGDescriptor hog; // HOG descriptor with default parameters

int h = image.rows;

int w = image.cols;

float rate = 64.0 / w;

Mat img, gray;

resize(image, img, Size(64, int(rate*h))); // force the width to 64 while keeping the original aspect ratio

cvtColor(img, gray, COLOR_BGR2GRAY);

Mat result = Mat::zeros(Size(64, 128), CV_8UC1);

result = Scalar(127);

Rect roi;

roi.x = 0;

roi.width = 64;

roi.y = (128 - gray.rows) / 2;

roi.height = gray.rows;

gray.copyTo(result(roi));

hog.compute(result, desc, Size(8, 8), Size(0, 0));

printf("desc len:%zu\n", desc.size()); // size() returns size_t, so use %zu

}

void generate_dataset(Mat &trainData, Mat &labels) {

vector<String> images;

glob(positive_dir, images); // scan the directory to collect all positive samples

int pos_num = images.size();

for (int i = 0; i < images.size(); i++) {

Mat image = imread(images[i].c_str());

vector<float> fv;

get_hog_descriptor(image, fv);

for (int j = 0; j < fv.size(); j++) {

trainData.at<float>(i, j) = fv[j];

}

labels.at<int>(i, 0) = 1;

}

images.clear();

glob(negative_dir, images);

for (int i = 0; i < images.size(); i++) {

Mat image = imread(images[i].c_str());

vector<float> fv;

get_hog_descriptor(image, fv);

for (int j = 0; j < fv.size(); j++) {

trainData.at<float>(i + pos_num, j) = fv[j];

}

labels.at<int>(i + pos_num, 0) = -1;

}

}

void svm_train(Mat &trainData, Mat &labels) {

printf("\n start SVM training... \n");

Ptr<SVM> svm = SVM::create();

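svm->setC(2.67); // the larger the value, the more complex the classification model

svm->setType(SVM::C_SVC); // classifier type

svm->setKernel(SVM::LINEAR); // linear kernel, fast

svm->setGamma(5.383); // ignored by the linear kernel; needed by other kernels

svm->train(trainData, ROW_SAMPLE, labels); // each row is one sample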

clog << "....[Done]" << endl;

printf("end train...\n");

//save xml

svm->save("D:/images/elec_watchzip/elec_watch/hog_elec.xml"); // output path of the model

}

Result:

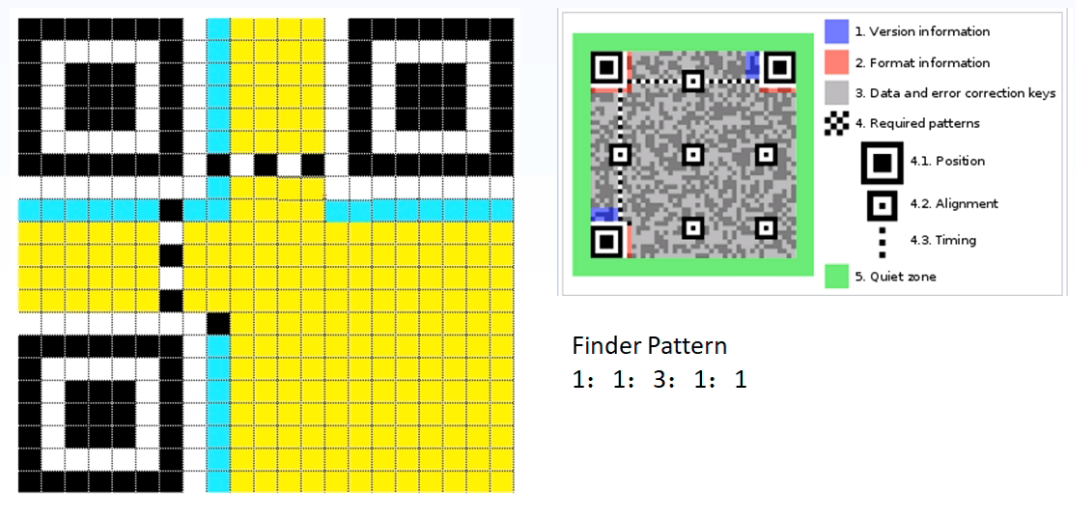
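The 3780 columns allocated for `trainData` in the listing match the length of a HOG descriptor computed with OpenCV's default `HOGDescriptor` layout (64x128 window, 16x16 blocks, 8x8 block stride, 8x8 cells, 9 bins). The sketch below reproduces that arithmetic; `HogLayout` and `hog_descriptor_length` are hypothetical helpers written for this example, not OpenCV APIs.

```cpp
#include <cassert>
#include <cstddef>

// Geometry of a HOG descriptor. The default values mirror OpenCV's default
// HOGDescriptor: 64x128 window, 16x16 blocks, 8x8 stride, 8x8 cells, 9 bins.
struct HogLayout {
    int win_w = 64;
    int win_h = 128;
    int block = 16;
    int stride = 8;
    int cell = 8;
    int bins = 9;
};

// Number of floats in the descriptor: blocks per window * cells per block * bins.
std::size_t hog_descriptor_length(const HogLayout& p) {
    assert((p.win_w - p.block) % p.stride == 0);
    assert((p.win_h - p.block) % p.stride == 0);
    const int blocks_x = (p.win_w - p.block) / p.stride + 1;
    const int blocks_y = (p.win_h - p.block) / p.stride + 1;
    const int cells_per_block = (p.block / p.cell) * (p.block / p.cell);
    return static_cast<std::size_t>(blocks_x) * blocks_y * cells_per_block * p.bins;
}
```

With the defaults this gives 7 x 15 blocks, 4 cells per block and 9 bins per cell, that is 3780 values, which is why every sample row must hold exactly 3780 floats.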

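3. QR Code Detection and Localization

Key topics for QR code localization:

- QR code features

- Image binarization

- Contour extraction

- Perspective transform

- Geometric analysis

QR code features

Image binarization and contour analysis

- Global or local threshold selection

- Global threshold segmentation

- Outermost contours versus multi-level contours

- Filtering by area and geometric shape

- Perspective transform and the homography matrix

Geometric analysis

- Find each square

- Look for the 1 : 1 : 3 : 1 : 1 structure along the X direction

- Look for the ratio structure along the Y direction

- Produce the output result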
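The 1 : 1 : 3 : 1 : 1 test can be sketched on its own. The example below assumes the five run lengths (black, white, black, white, black) along one scan line have already been measured in pixels. It rescales them to 7 modules and rounds, which is the same idea the listing below uses. `Runs` and `matches_finder_ratio` are hypothetical names, and this sketch is stricter than the listing, which also accepts a center run of 4.

```cpp
#include <array>
#include <cassert>

// Run lengths in pixels along one scan line, ordered
// black, white, black (center), white, black.
using Runs = std::array<int, 5>;

// Scales the runs so that they sum to 7 modules, rounds each one to the
// nearest integer, and checks the 1:1:3:1:1 finder-pattern ratio.
bool matches_finder_ratio(const Runs& runs) {
    int total = 0;
    for (int r : runs) {
        if (r <= 0) {
            return false; // a missing run cannot form the pattern
        }
        total += r;
    }
    const int expected[5] = {1, 1, 3, 1, 1};
    for (int i = 0; i < 5; ++i) {
        const int modules = static_cast<int>(runs[i] * 7.0 / total + 0.5);
        if (modules != expected[i]) {
            return false;
        }
    }
    return true;
}
```

Rounding to whole modules gives the check some tolerance to blur and slight perspective distortion, since runs such as 9, 11, 31, 10, 9 still map to 1, 1, 3, 1, 1.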

Algorithm design

- Discard regions whose area is too small to be recognized

Code-level topics and execution

- minAreaRect

- findHomography

- warpPerspective

Code implementation

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

void scanAndDetectQRCode(Mat & image);

bool isXCorner(Mat &image);

bool isYCorner(Mat &image);

Mat transformCorner(Mat &image, RotatedRect &rect);

int main(int argc, char** argv) {

// Mat src = imread("D:/images/qrcode.png");

Mat src = imread("D:/images/qrcode_07.png");

if (src.empty()) {

printf("could not load image file...");

return -1;

}

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

scanAndDetectQRCode(src);

waitKey(0);

return 0;

}

void scanAndDetectQRCode(Mat & image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("binary", binary);

// detect rectangle now

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(binary.clone(), contours, hierarchy, RETR_LIST, CHAIN_APPROX_SIMPLE, Point());

Mat result = Mat::zeros(image.size(), CV_8UC1);

for (size_t t = 0; t < contours.size(); t++) {

double area = contourArea(contours[t]);

if (area < 100) continue; // skip contours whose area is below 100

RotatedRect rect = minAreaRect(contours[t]);

float w = rect.size.width;

float h = rect.size.height;

float rate = min(w, h) / max(w, h);

if (rate > 0.85 && w < image.cols / 4 && h < image.rows / 4) { // keep near-square regions that are small relative to the image

Mat qr_roi = transformCorner(image, rect);

// Geometric analysis based on the structure of the rectangle

if (isXCorner(qr_roi)) {

drawContours(image, contours, static_cast<int>(t), Scalar(255, 0, 0), 2, 8);

drawContours(result, contours, static_cast<int>(t), Scalar(255), 2, 8);

}

}

}

// scan all key points

vector<Point> pts;

for (int row = 0; row < result.rows; row++) {

for (int col = 0; col < result.cols; col++) {

int pv = result.at<uchar>(row, col);

if (pv == 255) {

pts.push_back(Point(col, row)); // collect the coordinates of white pixels

}

}

}

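if (pts.empty()) { // nothing was detected; minAreaRect needs a non-empty point set

printf("no QR code corner found...\n");

return;

}

RotatedRect rrt = minAreaRect(pts); // minimum-area bounding rectangle of pts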

Point2f vertices[4];

rrt.points(vertices);

pts.clear();

for (int i = 0; i < 4; i++) { // draw the four edges of the minimum-area rectangle

line(image, vertices[i], vertices[(i + 1) % 4], Scalar(0, 255, 0), 2);

pts.push_back(vertices[i]);

}

Mat mask = Mat::zeros(result.size(), result.type()); // mask with the same size and type as result

vector<vector<Point>> cpts;

cpts.push_back(pts);

drawContours(mask, cpts, 0, Scalar(255), -1, 8); // fill the rectangle

Mat dst;

bitwise_and(image, image, dst, mask); // AND with the mask to extract the QR code region

imshow("detect result", image);

//imwrite("D:/case03.png", image);

imshow("result-mask", mask);

imshow("qrcode-roi", dst);

}

bool isXCorner(Mat &image) { // analyze a candidate region along its horizontal center line

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

int xb = 0, yb = 0;

int w1x = 0, w2x = 0;

int b1x = 0, b2x = 0;

int width = binary.cols;

int height = binary.rows;

int cy = height / 2;

int cx = width / 2;

int pv = binary.at<uchar>(cy, cx);

if (pv == 255) return false; // the center pixel must be black

// verify finder pattern

bool findleft = false, findright = false;

int start = 0, end = 0;

int offset = 0;

while (true) { // scan outward from the center pixel in both directions

offset++;

if ((cx - offset) <= width / 8 || (cx + offset) >= width - 1) {

start = -1;

end = -1;

break;

}

pv = binary.at<uchar>(cy, cx - offset);

if (pv == 255) {

start = cx - offset;

findleft = true;

}

pv = binary.at<uchar>(cy, cx + offset);

if (pv == 255) {

end = cx + offset;

findright = true;

}

if (findleft && findright) { // stop once white pixels are found on both sides; start and end hold their coordinates

break;

}

}

if (start <= 0 || end <= 0) {

return false;

}

xb = end - start;

for (int col = start; col > 0; col--) {

pv = binary.at<uchar>(cy, col);

if (pv == 0) {

w1x = start - col;

break;

}

}

for (int col = end; col < width - 1; col++) {

pv = binary.at<uchar>(cy, col);

if (pv == 0) {

w2x = col - end;

break;

}

}

for (int col = (end + w2x); col < width; col++) {

pv = binary.at<uchar>(cy, col);

if (pv == 255) {

b2x = col - end - w2x;

break;

}

else {

b2x++;

}

}

for (int col = (start - w1x); col > 0; col--) {

pv = binary.at<uchar>(cy, col);

if (pv == 255) {

b1x = start - col - w1x;

break;

}

else {

b1x++;

}

}

float sum = xb + b1x + b2x + w1x + w2x;

//printf("xb : %d, b1x = %d, b2x = %d, w1x = %d, w2x = %d\n", xb , b1x , b2x , w1x , w2x);

xb = static_cast<int>((xb / sum)*7.0 + 0.5); // +0.5 rounds to the nearest integer so small ratios are not truncated to 0

b1x = static_cast<int>((b1x / sum)*7.0 + 0.5);

b2x = static_cast<int>((b2x / sum)*7.0 + 0.5);

w1x = static_cast<int>((w1x / sum)*7.0 + 0.5);

w2x = static_cast<int>((w2x / sum)*7.0 + 0.5);

printf("xb : %d, b1x = %d, b2x = %d, w1x = %d, w2x = %d\n", xb, b1x, b2x, w1x, w2x);

if ((xb == 3 || xb == 4) && b1x == b2x && w1x == w2x && w1x == b1x && b1x == 1) { // 1:1:3:1:1

return true;

}

else {

return false;

}

}

bool isYCorner(Mat &image) { // count black and white pixels along the column on one side of the center pixel

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

int width = binary.cols;

int height = binary.rows;

int cy = height / 2;

int cx = width / 2;

int pv = binary.at<uchar>(cy, cx);

int bc = 0, wc = 0;

bool found = true;

for (int row = cy; row > 0; row--) {

pv = binary.at<uchar>(row, cx);

if (pv == 0 && found) {

bc++;

}

else if (pv == 255) {

found = false;

wc++;

}

}

bc = bc * 2;

if (bc <= wc) { // reject when the white count is at least twice the black run; in a real finder pattern 2 * bc is expected to be well above wc

return false;

}

return true;

}

Mat transformCorner(Mat &image, RotatedRect &rect) { // homography matrix and perspective transform

int width = static_cast<int>(rect.size.width);

int height = static_cast<int>(rect.size.height);

Mat result = Mat::zeros(height, width, image.type());

Point2f vertices[4];

rect.points(vertices);

vector<Point> src_corners;

vector<Point> dst_corners;

dst_corners.push_back(Point(0, 0));

dst_corners.push_back(Point(width, 0));

dst_corners.push_back(Point(width, height)); // big trick

dst_corners.push_back(Point(0, height));

for (int i = 0; i < 4; i++) {

src_corners.push_back(vertices[i]);

}

Mat h = findHomography(src_corners, dst_corners);

warpPerspective(image, result, h, result.size());

return result;

}

Process analysis

Result:

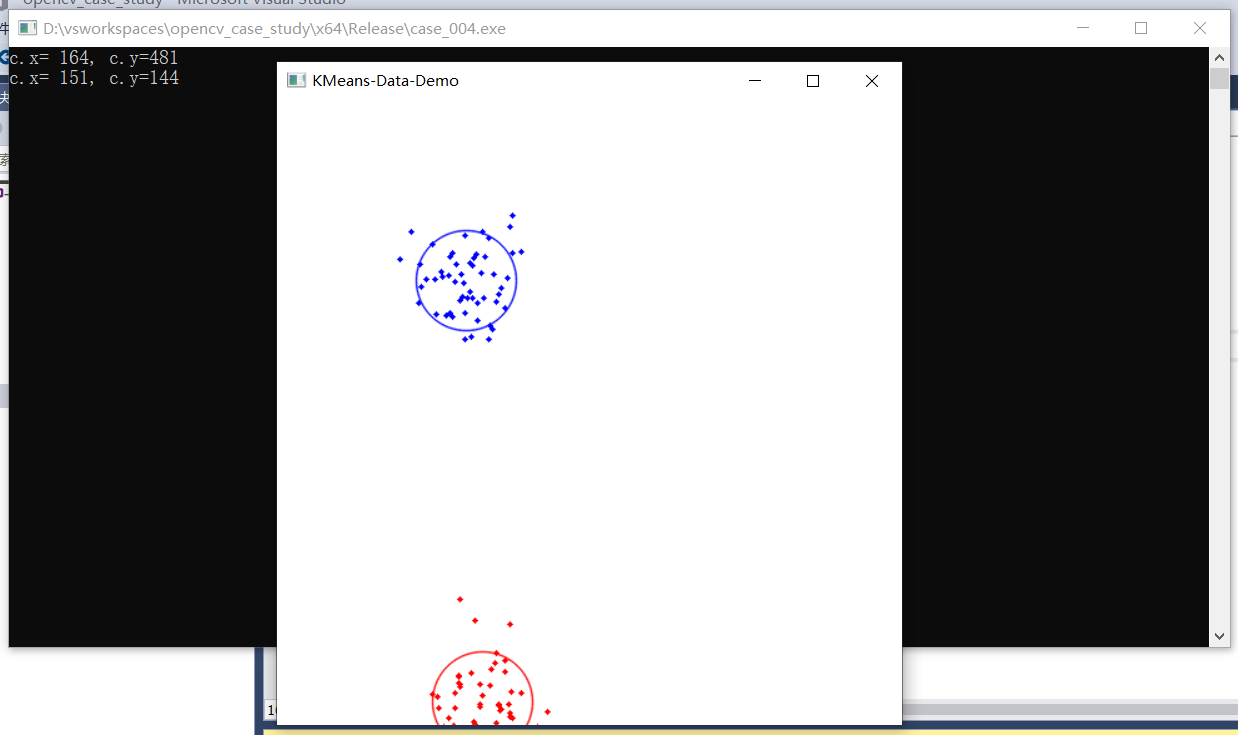

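4. KMeans Applications

- Data clustering

- Image clustering

- Background replacement

- Dominant color extraction

How the KMeans clustering algorithm works

- Cluster centers

- Classification by distance

The biggest difference between clustering and classification is that classification targets are known in advance, whereas clustering does not know the target variable beforehand. The categories are not predefined, so the grouping does not need to be told what the groups should look like, and we may not even know what we are looking for. Clustering is therefore used for knowledge discovery rather than prediction, and it is a form of unsupervised learning.

KMeans is one of the most widely used clustering algorithms. Given K and K initial cluster centers, each point (data record) is assigned to the cluster whose center is nearest. Once all points are assigned, each cluster center is recomputed as the mean of the points in that cluster. The assignment and update steps are then repeated until the centers change very little or a specified number of iterations is reached.

K-means procedure:

- Choose the center points of the k clusters

- For each sample, compute its distance to every cluster center and assign it to the cluster with the nearest center

- After the assignment, recompute the center of each cluster

- Repeat the previous two steps until the k centers stop moving or the iteration limit is reached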
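The procedure above can be sketched in a few lines on one-dimensional data. This is a minimal illustration, assuming the initial centers are supplied by the caller. `KMeansResult`, `kmeans_1d`, `max_iter` and `eps` are hypothetical names for this example. OpenCV's `kmeans` works on multi-dimensional samples and picks the initial centers itself.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct KMeansResult {
    std::vector<double> centers;
    std::vector<int> labels;
};

// Lloyd's algorithm on 1-D data; centers holds the initial guesses.
KMeansResult kmeans_1d(const std::vector<double>& data, std::vector<double> centers,
                       int max_iter = 10, double eps = 1e-6) {
    assert(!centers.empty());
    std::vector<int> labels(data.size(), 0);
    for (int iter = 0; iter < max_iter; ++iter) {
        // Assignment step: label each sample with its nearest center.
        for (std::size_t i = 0; i < data.size(); ++i) {
            std::size_t best = 0;
            for (std::size_t k = 1; k < centers.size(); ++k) {
                if (std::fabs(data[i] - centers[k]) < std::fabs(data[i] - centers[best])) {
                    best = k;
                }
            }
            labels[i] = static_cast<int>(best);
        }
        // Update step: move each center to the mean of its samples.
        std::vector<double> sum(centers.size(), 0.0);
        std::vector<int> count(centers.size(), 0);
        for (std::size_t i = 0; i < data.size(); ++i) {
            sum[labels[i]] += data[i];
            ++count[labels[i]];
        }
        double shift = 0.0;
        for (std::size_t k = 0; k < centers.size(); ++k) {
            if (count[k] == 0) continue; // an empty cluster keeps its center
            const double updated = sum[k] / count[k];
            shift = std::max(shift, std::fabs(updated - centers[k]));
            centers[k] = updated;
        }
        if (shift < eps) break; // centers stopped moving
    }
    return {centers, labels};
}
```

The two stopping conditions mirror the `TermCriteria::EPS + TermCriteria::COUNT` criteria used in the listing below: stop when the centers barely move, or when the iteration budget runs out.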

Code implementation

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

void kmeans_data_demo();

void kmeans_image_demo();

void kmeans_background_replace();

void kmeans_color_card();

int main(int argc, char** argv) {

// kmeans_data_demo();

// kmeans_image_demo();

// kmeans_background_replace();

kmeans_color_card();

return 0;

}

void kmeans_data_demo() {

Mat img(500, 500, CV_8UC3);

RNG rng(12345);

Scalar colorTab[] = {

Scalar(0, 0, 255),

Scalar(255, 0, 0),

};

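int numCluster = 2; // number of clusters

int sampleCount = rng.uniform(5, 500); // number of random data points, drawn from a uniform distribution

Mat points(sampleCount, 1, CV_32FC2); // sampleCount x 1 matrix; each point has two dimensions

// Generate random points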

for (int k = 0; k < numCluster; k++) {

Point center;

center.x = rng.uniform(0, img.cols);

center.y = rng.uniform(0, img.rows);

// each of the two iterations fills a different row range of points

Mat pointChunk = points.rowRange(k*sampleCount / numCluster,

k == numCluster - 1 ? sampleCount : (k + 1)*sampleCount / numCluster);

// fill the chunk with 2-D random numbers; the distribution can be uniform or normal (normal here)

rng.fill(pointChunk, RNG::NORMAL, Scalar(center.x, center.y), Scalar(img.cols*0.05, img.rows*0.05));

}

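randShuffle(points, 1, &rng); // shuffle the points

// Run KMeans

Mat labels;

Mat centers;

// Split the points into 2 clusters with one label per point; 3 is the number of attempts with different initial centers, and KMEANS_PP_CENTERS sets how the initial centers are chosen

kmeans(points, numCluster, labels, TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 10, 0.1), 3, KMEANS_PP_CENTERS, centers);

// Show the clusters in different colors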

img = Scalar::all(255);

for (int i = 0; i < sampleCount; i++) {

int index = labels.at<int>(i);

Point p = points.at<Point2f>(i);

circle(img, p, 2, colorTab[index], -1, 8); // fill each point with the color of its label

}

// Draw a circle at each cluster center

for (int i = 0; i < centers.rows; i++) {

int x = centers.at<float>(i, 0);

int y = centers.at<float>(i, 1);

printf("c.x= %d, c.y=%d\n", x, y);

circle(img, Point(x, y), 40, colorTab[i], 1, LINE_AA);

}

imshow("KMeans-Data-Demo", img);

waitKey(0);

}

void kmeans_image_demo() {

Mat src = imread("D:/images/toux.jpg");

if (src.empty()) {

printf("could not load image...\n");

return;

}

namedWindow("input image", WINDOW_AUTOSIZE);

imshow("input image", src);

Vec3b colorTab[] = {

Vec3b(0, 0, 255),

Vec3b(0, 255, 0),

Vec3b(255, 0, 0),

Vec3b(0, 255, 255),

Vec3b(255, 0, 255)

};

int width = src.cols;

int height = src.rows;

int dims = src.channels();

// Initialization

int sampleCount = width * height;

int clusterCount = 3;

Mat labels;

Mat centers;

// Convert the pixel data (BGR order as loaded by imread) to sample data

Mat sample_data = src.reshape(3, sampleCount); // reshape to sampleCount rows, one 3-channel pixel per row

Mat data;

sample_data.convertTo(data, CV_32F);

// Run K-Means

TermCriteria criteria = TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 10, 0.1); // stop after 10 iterations or once the accuracy reaches 0.1

kmeans(data, clusterCount, labels, criteria, clusterCount, KMEANS_PP_CENTERS, centers);

// Show the segmentation result

int index = 0;

Mat result = Mat::zeros(src.size(), src.type());

for (int row = 0; row < height; row++) {

for (int col = 0; col < width; col++) {

index = row * width + col;

int label = labels.at<int>(index, 0);

result.at<Vec3b>(row, col) = colorTab[label]; // color each pixel according to its label

}

}

imshow("KMeans-image-Demo", result);

waitKey(0);

}

void kmeans_background_replace() {

Mat src = imread("D:/images/toux.jpg");

if (src.empty()) {

printf("could not load image...\n");

return;

}

namedWindow("input image", WINDOW_AUTOSIZE);

imshow("input image", src);

int width = src.cols;

int height = src.rows;

int dims = src.channels();

// Initialization

int sampleCount = width * height;

int clusterCount = 3;

Mat labels;

Mat centers;

// Convert the pixel data (BGR order as loaded by imread) to sample data

Mat sample_data = src.reshape(3, sampleCount);

Mat data;

sample_data.convertTo(data, CV_32F);

// Run K-Means

TermCriteria criteria = TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 10, 0.1);

kmeans(data, clusterCount, labels, criteria, clusterCount, KMEANS_PP_CENTERS, centers);

// Build the mask

Mat mask = Mat::zeros(src.size(), CV_8UC1);

int index = labels.at<int>(0, 0); // label of pixel (0,0); every pixel with the same label is treated as background

labels = labels.reshape(1, height);

for (int row = 0; row < height; row++) {

for (int col = 0; col < width; col++) {

int c = labels.at<int>(row, col);

if (c == index) {

mask.at<uchar>(row, col) = 255; // mark pixels that share the label of pixel (0,0)

}

}

}

imshow("mask", mask);

Mat se = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

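dilate(mask, mask, se); // dilate the white background region

// Generate Gaussian weights

GaussianBlur(mask, mask, Size(5, 5), 0); // Gaussian blur gives a natural transition along the contour edge

imshow("mask-blur", mask);

// Blend the images using the Gaussian weights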

Mat result = Mat::zeros(src.size(), CV_8UC3);

for (int row = 0; row < height; row++) {

for (int col = 0; col < width; col++) {

float w1 = mask.at<uchar>(row, col) / 255.0;

Vec3b bgr = src.at<Vec3b>(row, col);

bgr[0] = w1 * 255.0 + bgr[0] * (1.0 - w1); // blend each of the B, G, R channels separately

bgr[1] = w1 * 0 + bgr[1] * (1.0 - w1);

bgr[2] = w1 * 255.0 + bgr[2] * (1.0 - w1);

result.at<Vec3b>(row, col) = bgr;

}

}

imshow("background-replacement-demo", result);

waitKey(0);

}

void kmeans_color_card() {

Mat src = imread("D:/images/test.png");

if (src.empty()) {

printf("could not load image...\n");

return;

}

namedWindow("input image", WINDOW_AUTOSIZE);

imshow("input image", src);

int width = src.cols;

int height = src.rows;

int dims = src.channels();

// Initialization

int sampleCount = width * height;

int clusterCount = 4;

Mat labels;

Mat centers;

// Convert the pixel data (BGR order as loaded by imread) to sample data

Mat sample_data = src.reshape(3, sampleCount);

Mat data;

sample_data.convertTo(data, CV_32F);

// Run K-Means

TermCriteria criteria = TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 10, 0.1);

kmeans(data, clusterCount, labels, criteria, clusterCount, KMEANS_PP_CENTERS, centers);

Mat card = Mat::zeros(Size(width, 50), CV_8UC3); // color card as wide as the input image and 50 pixels high

vector<float> clusters(clusterCount);

// Compute the proportion of each color

for (int i = 0; i < labels.rows; i++) { // count the samples in each cluster

clusters[labels.at<int>(i, 0)]++;

}

for (int i = 0; i < clusters.size(); i++) { // convert each count to a proportion of all samples

clusters[i] = clusters[i] / sampleCount;

}

int x_offset = 0;

// Draw the color card

for (int x = 0; x < clusterCount; x++) {

Rect rect;

rect.x = x_offset;

rect.y = 0;

rect.height = 50;

rect.width = round(clusters[x] * width);

x_offset += rect.width;

int b = centers.at<float>(x, 0);

int g = centers.at<float>(x, 1);

int r = centers.at<float>(x, 2);

rectangle(card, rect, Scalar(b, g, r), -1, 8, 0);

}

imshow("Image Color Card", card);

waitKey(0);

}

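Results:

1. KMeans clustering example

2. Image segmentation by color using KMeans

3. Smooth image background replacement

4. Color card of the four most dominant colors in the image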
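The smooth background replacement in result 3 comes down to a per-pixel weighted average, with the blurred mask acting as the weight. The sketch below shows that blend for a single pixel without OpenCV. `Bgr` and `blend_pixel` are hypothetical names, and rounding to the nearest integer is a choice made here (the listing relies on implicit conversion instead).

```cpp
#include <array>
#include <cmath>
#include <cstdint>

using Bgr = std::array<std::uint8_t, 3>;

// Blends a foreground pixel with a replacement background color.
// mask_value is the blurred mask at that pixel:
// 255 means pure background, 0 means pure foreground.
Bgr blend_pixel(const Bgr& foreground, const Bgr& background, std::uint8_t mask_value) {
    const double w = mask_value / 255.0;
    Bgr out{};
    for (int c = 0; c < 3; ++c) {
        const double v = w * background[c] + (1.0 - w) * foreground[c];
        out[c] = static_cast<std::uint8_t>(std::lround(v));
    }
    return out;
}
```

Because the mask was blurred, pixels near the object boundary get intermediate weights, so the new background fades into the foreground instead of leaving a hard edge.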