Distributed Storage with Ceph: Enabling the Dashboard and Monitoring Ceph with Prometheus

In the previous post we covered the basics of RadosGW on top of Ceph; for a recap see https://www.cnblogs.com/qiuhom-1874/p/16768998.html. Today we look at enabling the Ceph Dashboard and monitoring Ceph with Prometheus.

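What the MGR component does

In early Ceph releases (before Luminous, the "L" release) a cluster did not depend on an MGR daemon; cluster monitoring data was handled by the mon nodes. To take that load off the mons, the handling of cluster state data was split out from Luminous onward and handed to MGR. MGR's main job is to track runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics and system load.

MGR is a highly modular component; many features are turned on or off by enabling or disabling modules. The Dashboard is one such module managed by MGR, and it is not enabled by default. As the name suggests, it provides a panel that shows Ceph cluster state: cluster health, the number of OSDs, the number of storage pools, and the status of components such as mds and mgr.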

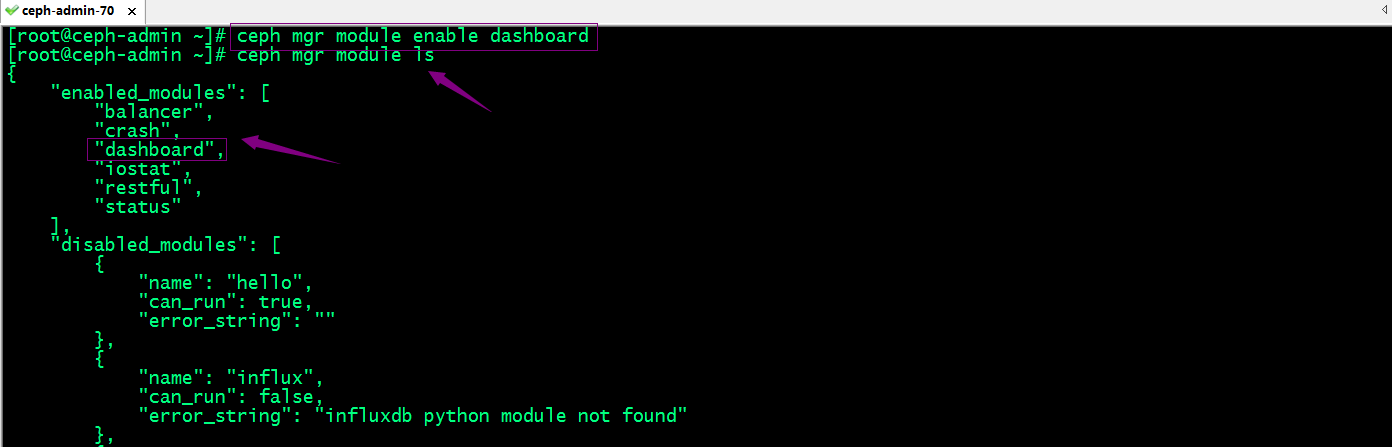

Ceph mgr module management

List all mgr modules

[root@ceph-admin ~]# ceph mgr module ls

{

"enabled_modules": [

"balancer",

"crash",

"iostat",

"restful",

"status"

],

"disabled_modules": [

{

"name": "dashboard",

"can_run": true,

"error_string": ""

},

{

"name": "hello",

"can_run": true,

"error_string": ""

},

{

"name": "influx",

"can_run": false,

"error_string": "influxdb python module not found"

},

{

"name": "localpool",

"can_run": true,

"error_string": ""

},

{

"name": "prometheus",

"can_run": true,

"error_string": ""

},

{

"name": "selftest",

"can_run": true,

"error_string": ""

},

{

"name": "smart",

"can_run": true,

"error_string": ""

},

{

"name": "telegraf",

"can_run": true,

"error_string": ""

},

{

"name": "telemetry",

"can_run": true,

"error_string": ""

},

{

"name": "zabbix",

"can_run": true,

"error_string": ""

}

]

}

[root@ceph-admin ~]#

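Hint: ceph mgr module ls shows which modules are enabled and which are disabled. For a disabled module, a can_run value of true means the module is supported but not enabled; a can_run value of false means the module is not supported, and error_string explains why.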
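If you want to check these fields from a script rather than by eye, something like the following may do. This is only a rough sketch: the function name unavailable_modules and the condensed sample input are made up for illustration, and it relies on the one-field-per-line layout of the output above, so a proper JSON tool would be more robust on a real cluster.

```shell
#!/usr/bin/env bash
# Sketch only: list mgr modules that cannot run, together with the reason.
# Assumes pretty-printed JSON with one field per line, as in the output above.

# Hypothetical helper: reads `ceph mgr module ls` output on stdin.
unavailable_modules() {
  awk -F'"' '
    /"name":/          { name = $4 }   # remember the current module name
    /"can_run": false/ { bad = 1 }     # flag the module as unsupported
    /"error_string":/  { if (bad) print name ": " $4; bad = 0 }
  '
}

# Sample input condensed from the output above; on a real cluster you
# would pipe `ceph mgr module ls` into the function instead.
sample='{
  "enabled_modules": ["balancer"],
  "disabled_modules": [
    {
      "name": "dashboard",
      "can_run": true,
      "error_string": ""
    },
    {
      "name": "influx",
      "can_run": false,
      "error_string": "influxdb python module not found"
    }
  ]
}'

printf '%s\n' "$sample" | unavailable_modules
```

With the sample above, only the influx module is reported, since it is the only one whose can_run is false.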

Enabling the dashboard module in Ceph mgr

Hint: by default the dashboard module serves Ceph cluster state over https. That means we either have to give the dashboard a certificate before the service can work, or disable the dashboard's SSL support and let it serve plain http.

Disable SSL for the dashboard

ceph config set mgr mgr/dashboard/ssl false

Hint: this approach is not recommended. To use SSL, the dashboard must be given a certificate; the steps are as follows.

Use a self-signed certificate

[root@ceph-admin ~]# ceph dashboard create-self-signed-cert

Self-signed certificate created

[root@ceph-admin ~]#

Hint: running the command above makes Ceph generate a self-signed certificate for the dashboard automatically.

Use a custom certificate

Generate the certificate

[root@ceph-admin ~]# openssl req -new -nodes -x509 -subj "/O=IT/CN=ceph-mgr-dashboard" -days 3650 -keyout dashboard.key -out dashboard.crt -extensions v3_ca

Generating a 2048 bit RSA private key

.....................................................................................................+++

.............+++

writing new private key to 'dashboard.key'

-----

[root@ceph-admin ~]# ls

CentOS-7-x86_64-Minimal-1708.iso client.abc.keyring client.libvirt-secret.xml dashboard.crt

centos7.xml client.admin.cluster.keyring client.test.keyring dashboard.key

ceph-deploy-ceph.log client.admin.keyring client.usera.keyring fsclient.key

[root@ceph-admin ~]#

Hint: a key file and a certificate file have been generated in the current directory.

Load the certificate into the dashboard

[root@ceph-admin ~]# ceph config-key set mgr mgr/dashboard/crt -i dashboard.crt

set mgr

[root@ceph-admin ~]# ceph config-key set mgr mgr/dashboard/key -i dashboard.key

set mgr

[root@ceph-admin ~]#

Set the address and port the dashboard listens on

[root@ceph-admin ~]# ceph config set mgr mgr/dashboard/server_addr 0.0.0.0

[root@ceph-admin ~]# ceph config set mgr mgr/dashboard/server_port 8443

[root@ceph-admin ~]#

Verify: is the mgr host listening on port 8443?

[root@ceph-admin ~]# ceph -s

cluster:

id: 7fd4a619-9767-4b46-9cee-78b9dfe88f34

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon01,ceph-mon02,ceph-mon03

mgr: ceph-mgr01(active), standbys: ceph-mon01, ceph-mgr02

mds: cephfs-2/2/2 up {0=ceph-mon01=up:active,1=ceph-mon02=up:active}, 1 up:standby-replay

osd: 10 osds: 10 up, 10 in

rgw: 2 daemons active

data:

pools: 12 pools, 480 pgs

objects: 515 objects, 847 MiB

usage: 13 GiB used, 887 GiB / 900 GiB avail

pgs: 480 active+clean

io:

client: 1.2 KiB/s rd, 2 op/s rd, 0 op/s wr

[root@ceph-admin ~]# ssh ceph-mgr01 'ss -tnlp|grep 8443'

LISTEN 0 5 [::]:8443 [::]:* users:(("ceph-mgr",pid=1091,fd=53))

[root@ceph-admin ~]#

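Hint: the host running the currently active mgr is listening on port 8443.

We can also make each mgr listen on its own IP address and port

ceph config set mgr mgr/dashboard/$name/server_addr $IP

ceph config set mgr mgr/dashboard/$name/server_port $PORT

Hint: replace $name in the commands above with the name of the mgr instance, and $IP and $PORT with the address and port to use.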
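As a rough illustration of the substitution, the sketch below prints the two commands for each mgr instance instead of running them. The instance names and IP addresses are the ones that appear in the outputs in this post; keeping port 8443 for every instance is just an assumption, and the function name dashboard_bind_commands is made up.

```shell
#!/usr/bin/env bash
# Sketch only: print per-instance dashboard bind settings for each mgr.
# Nothing is executed against the cluster; the commands are only printed.

# name:ip:port triples (illustrative values taken from this post)
mgr_instances="ceph-mgr01:192.168.0.74:8443
ceph-mgr02:192.168.0.75:8443
ceph-mon01:192.168.0.71:8443"

# Hypothetical helper: reads name:ip:port lines on stdin.
dashboard_bind_commands() {
  local name ip port
  while IFS=: read -r name ip port; do
    [ -n "$name" ] || continue   # skip empty lines
    echo "ceph config set mgr mgr/dashboard/${name}/server_addr ${ip}"
    echo "ceph config set mgr mgr/dashboard/${name}/server_port ${port}"
  done
}

printf '%s\n' "$mgr_instances" | dashboard_bind_commands
```

Printing first makes it easy to review the commands; once they look right they can be pasted into a shell on the admin node.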

Browse to port 8443 on that host and check whether the service is available.

Hint: port 8443 on the mgr host can now be reached over https.

We can also use ceph mgr services to confirm the URL for the dashboard

[root@ceph-admin ~]# ceph mgr services

{

"dashboard": "https://ceph-mgr01.ilinux.io:8443/"

}

[root@ceph-admin ~]#

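Hint: the URL is built from the host name and the listening port, so make sure the client machine can resolve that host name.

Restart the Dashboard

[root@ceph-admin ~]# ceph mgr module disable dashboard

[root@ceph-admin ~]# ceph mgr module enable dashboard

[root@ceph-admin ~]#

Set the administrator credentials

Command format: ceph dashboard set-login-credentials <username> <password>

[root@ceph-admin ~]# ceph dashboard set-login-credentials admin admin

Username and password updated

[root@ceph-admin ~]#

Verify: can we log in to the dashboard with user name admin and password admin?

Hint: logging in to the panel with user admin and password admin works, and that completes enabling the panel. In short, the panel presents monitoring data such as storage pools, hosts, rbd and cephfs, giving an at-a-glance view of the state of each Ceph component and of the storage itself. It is a monitoring panel and nothing more.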

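Monitoring Ceph with Prometheus

Introduction to Prometheus

Prometheus is an open-source system monitoring and alerting framework written in Go. Inspired by Google's Borgmon monitoring system, it was started in 2012 by former Google employees working at SoundCloud, developed as a community open-source project, and officially released in 2015. In 2016 Prometheus joined the CNCF (Cloud Native Computing Foundation) as its second hosted project after Kubernetes. As a new-generation monitoring framework, Prometheus has the following characteristics:

1. A powerful multi-dimensional data model:

1.1 Time series are identified by a metric name and key/value pairs;

1.2 Any metric can carry arbitrary multi-dimensional labels;

1.3 The data model is more flexible; names do not have to be dot-separated strings;

1.4 Data can be aggregated, sliced and diced;

1.5 Sample values are double-precision floats, and label values may be full Unicode;

2. A flexible and powerful query language (PromQL): within a single query, multiple metrics can be multiplied, added, joined, have quantiles computed, and so on;

3. Easy to manage: Prometheus Server is a single binary that works locally and does not depend on distributed storage;

4. Efficient: each sample takes only about 3.5 bytes on average, and a single Prometheus server can handle millions of metrics;

5. Time series are collected with a pull model, which is convenient for local testing and keeps misbehaving servers from pushing bad metrics;

6. Time series data can also be pushed by way of a push gateway, from which the Prometheus server collects it;

7. Targets can be obtained through service discovery or static configuration; several visualization front ends are available; it is easy to scale.

Note that samples can be lost during collection, so Prometheus is not suited to cases that require 100% accurate data. For recording time series data, however, Prometheus has strong query capabilities, and it fits microservice architectures well.

Prometheus architecture diagram

prometheus server: the main service; accepts external HTTP requests and collects, stores and queries data;

prometheus targets: statically configured target services to collect data from;

service discovery: dynamic discovery of services;

prometheus alerting: the alert notification component, which handles alerts;

pushgateway: a data collection proxy (similar to a zabbix proxy);

data visualization and export: data visualization and data export (the clients that access the data);

exporter: this component collects metrics from the application or host on the node where it runs. Different applications have different exporters: to collect MySQL metrics we deploy mysqld_exporter on the MySQL host, to collect Redis metrics we deploy redis_exporter on the Redis host, and to collect metrics from a Linux node we deploy node_exporter on that host. In short, whatever metrics we want to collect, we deploy the matching exporter.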

Deploying the Prometheus server from the binary release

Download Prometheus

[root@ceph-mgr02 ~]# wget https://github.com/prometheus/prometheus/releases/download/v2.37.1/prometheus-2.37.1.linux-amd64.tar.gz

Create a system user to run the Prometheus server process, with home directory /var/lib/prometheus as the data directory

[root@ceph-mgr02 ~]# useradd -r -m -d /var/lib/prometheus prometheus

[root@ceph-mgr02 ~]# ll /var/lib/prometheus

total 0

[root@ceph-mgr02 ~]# ll /var/lib/prometheus -d

drwx------ 2 prometheus prometheus 62 Oct 9 21:40 /var/lib/prometheus

[root@ceph-mgr02 ~]#

Unpack Prometheus and create a symlink

[root@ceph-mgr02 ~]# tar xf prometheus-2.37.1.linux-amd64.tar.gz -C /usr/local/

[root@ceph-mgr02 ~]# cd /usr/local/

[root@ceph-mgr02 local]# ls

bin etc games include lib lib64 libexec prometheus-2.37.1.linux-amd64 sbin share src

[root@ceph-mgr02 local]# ln -s prometheus-2.37.1.linux-amd64 prometheus

[root@ceph-mgr02 local]# ll

total 0

drwxr-xr-x. 2 root root 6 Apr 11 2018 bin

drwxr-xr-x. 2 root root 6 Apr 11 2018 etc

drwxr-xr-x. 2 root root 6 Apr 11 2018 games

drwxr-xr-x. 2 root root 6 Apr 11 2018 include

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 libexec

lrwxrwxrwx 1 root root 29 Oct 9 21:42 prometheus -> prometheus-2.37.1.linux-amd64

drwxr-xr-x 4 3434 3434 132 Sep 12 21:08 prometheus-2.37.1.linux-amd64

drwxr-xr-x. 2 root root 6 Apr 11 2018 sbin

drwxr-xr-x. 5 root root 49 Apr 11 2018 share

drwxr-xr-x. 2 root root 6 Apr 11 2018 src

[root@ceph-mgr02 local]#

Create a unit file for Prometheus

[root@ceph-mgr02 prometheus]# cat /usr/lib/systemd/system/prometheus.service

[Unit]

Description=The Prometheus 2 monitoring system and time series database.

Documentation=https://prometheus.io

After=network.target

[Service]

EnvironmentFile=-/etc/sysconfig/prometheus

User=prometheus

ExecStart=/usr/local/prometheus/prometheus \

--storage.tsdb.path=/var/lib/prometheus \

--config.file=/usr/local/prometheus/prometheus.yml \

--web.listen-address=0.0.0.0:9090 \

--web.external-url=

Restart=on-failure

StartLimitInterval=1

RestartSec=3

[Install]

WantedBy=multi-user.target

[root@ceph-mgr02 prometheus]#

Start the Prometheus server

[root@ceph-mgr02 prometheus]# systemctl daemon-reload

[root@ceph-mgr02 prometheus]# systemctl start prometheus

[root@ceph-mgr02 prometheus]# systemctl status prometheus

● prometheus.service - The Prometheus 2 monitoring system and time series database.

Loaded: loaded (/usr/lib/systemd/system/prometheus.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2022-10-09 21:46:09 CST; 4s ago

Docs: https://prometheus.io

Main PID: 2258 (prometheus)

CGroup: /system.slice/prometheus.service

└─2258 /usr/local/prometheus/prometheus --storage.tsdb.path=/var/lib/prometheus --config.file=/usr/local/prometh...

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.691Z caller=head.go:536 level=info compo…6.545µs

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.691Z caller=head.go:542 level=info compo...hile"

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.691Z caller=head.go:613 level=info compo...ent=0

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.691Z caller=head.go:619 level=info compo…6.765µs

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.692Z caller=main.go:993 level=info fs_ty...MAGIC

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.692Z caller=main.go:996 level=info msg="...rted"

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.692Z caller=main.go:1177 level=info msg=...s.yml

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.693Z caller=main.go:1214 level=info msg="Comp…µs

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.693Z caller=main.go:957 level=info msg="...sts."

Oct 09 21:46:09 ceph-mgr02.ilinux.io prometheus[2258]: ts=2022-10-09T13:46:09.693Z caller=manager.go:941 level=info co...r..."

Hint: Some lines were ellipsized, use -l to show in full.

[root@ceph-mgr02 prometheus]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 172.16.30.75:6800 *:*

LISTEN 0 128 192.168.0.75:6800 *:*

LISTEN 0 128 172.16.30.75:6801 *:*

LISTEN 0 128 192.168.0.75:6801 *:*

LISTEN 0 128 192.168.0.75:6802 *:*

LISTEN 0 128 172.16.30.75:6802 *:*

LISTEN 0 128 192.168.0.75:6803 *:*

LISTEN 0 128 172.16.30.75:6803 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 5 *:8443 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:9090 [::]:*

[root@ceph-mgr02 prometheus]#

Hint: Prometheus listens on TCP port 9090 by default. When it is started through systemd, no errors plus the port being in the listening state means the Prometheus server is up.

Deploy node_exporter to collect metrics from host ceph-mon01, to test that the Prometheus service works

[root@ceph-mon01 ~]# wget https://github.com/prometheus/node_exporter/releases/download/v1.4.0/node_exporter-1.4.0.linux-amd64.tar.gz

Create a system user to run the node_exporter process, with home directory /var/lib/prometheus as the data directory

[root@ceph-mon01 ~]# useradd -r -m -d /var/lib/prometheus prometheus

[root@ceph-mon01 ~]# ll /var/lib/prometheus/ -d

drwx------ 2 prometheus prometheus 62 Oct 9 21:51 /var/lib/prometheus/

[root@ceph-mon01 ~]#

Unpack the archive and create a symlink

[root@ceph-mon01 ~]# tar xf node_exporter-1.4.0.linux-amd64.tar.gz -C /usr/local/

[root@ceph-mon01 ~]# cd /usr/local/

[root@ceph-mon01 local]# ll

total 0

drwxr-xr-x. 2 root root 6 Apr 11 2018 bin

drwxr-xr-x. 2 root root 6 Apr 11 2018 etc

drwxr-xr-x. 2 root root 6 Apr 11 2018 games

drwxr-xr-x. 2 root root 6 Apr 11 2018 include

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 libexec

drwxr-xr-x 2 3434 3434 56 Sep 26 20:39 node_exporter-1.4.0.linux-amd64

drwxr-xr-x. 2 root root 6 Apr 11 2018 sbin

drwxr-xr-x. 5 root root 49 Apr 11 2018 share

drwxr-xr-x. 2 root root 6 Apr 11 2018 src

[root@ceph-mon01 local]# ln -s node_exporter-1.4.0.linux-amd64 node_exporter

[root@ceph-mon01 local]# ll

total 0

drwxr-xr-x. 2 root root 6 Apr 11 2018 bin

drwxr-xr-x. 2 root root 6 Apr 11 2018 etc

drwxr-xr-x. 2 root root 6 Apr 11 2018 games

drwxr-xr-x. 2 root root 6 Apr 11 2018 include

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 libexec

lrwxrwxrwx 1 root root 31 Oct 9 21:52 node_exporter -> node_exporter-1.4.0.linux-amd64

drwxr-xr-x 2 3434 3434 56 Sep 26 20:39 node_exporter-1.4.0.linux-amd64

drwxr-xr-x. 2 root root 6 Apr 11 2018 sbin

drwxr-xr-x. 5 root root 49 Apr 11 2018 share

drwxr-xr-x. 2 root root 6 Apr 11 2018 src

[root@ceph-mon01 local]#

Create the unit file

[root@ceph-mon01 local]# cat /usr/lib/systemd/system/node_exporter.service

[Unit]

Description=Prometheus exporter for machine metrics, written in Go with pluggable metric collectors.

Documentation=https://github.com/prometheus/node_exporter

After=network.target

[Service]

EnvironmentFile=-/etc/sysconfig/node_exporter

User=prometheus

ExecStart=/usr/local/node_exporter/node_exporter \

$NODE_EXPORTER_OPTS

Restart=on-failure

StartLimitInterval=1

RestartSec=3

[Install]

WantedBy=multi-user.target

[root@ceph-mon01 local]#

Start node_exporter

[root@ceph-mon01 local]# systemctl daemon-reload

[root@ceph-mon01 local]# systemctl start node_exporter

[root@ceph-mon01 local]# systemctl status node_exporter.service

● node_exporter.service - Prometheus exporter for machine metrics, written in Go with pluggable metric collectors.

Loaded: loaded (/usr/lib/systemd/system/node_exporter.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2022-10-09 21:58:12 CST; 6s ago

Docs: https://github.com/prometheus/node_exporter

Main PID: 3247 (node_exporter)

CGroup: /system.slice/node_exporter.service

└─3247 /usr/local/node_exporter/node_exporter

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:115 level...zone

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:115 level...time

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:115 level...imex

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:115 level...eues

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:115 level...name

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:115 level...stat

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:115 level...=xfs

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:115 level...=zfs

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=node_exporter.go:199 level...9100

Oct 09 21:58:12 ceph-mon01.ilinux.io node_exporter[3247]: ts=2022-10-09T13:58:12.121Z caller=tls_config.go:195 level=in...alse

Hint: Some lines were ellipsized, use -l to show in full.

[root@ceph-mon01 local]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 172.16.30.71:6803 *:*

LISTEN 0 128 192.168.0.71:6803 *:*

LISTEN 0 128 192.168.0.71:6804 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 128 *:7480 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 5 *:8443 *:*

LISTEN 0 128 192.168.0.71:6789 *:*

LISTEN 0 128 172.16.30.71:6800 *:*

LISTEN 0 128 192.168.0.71:6800 *:*

LISTEN 0 128 172.16.30.71:6801 *:*

LISTEN 0 128 192.168.0.71:6801 *:*

LISTEN 0 128 172.16.30.71:6802 *:*

LISTEN 0 128 192.168.0.71:6802 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:9100 [::]:*

[root@ceph-mon01 local]#

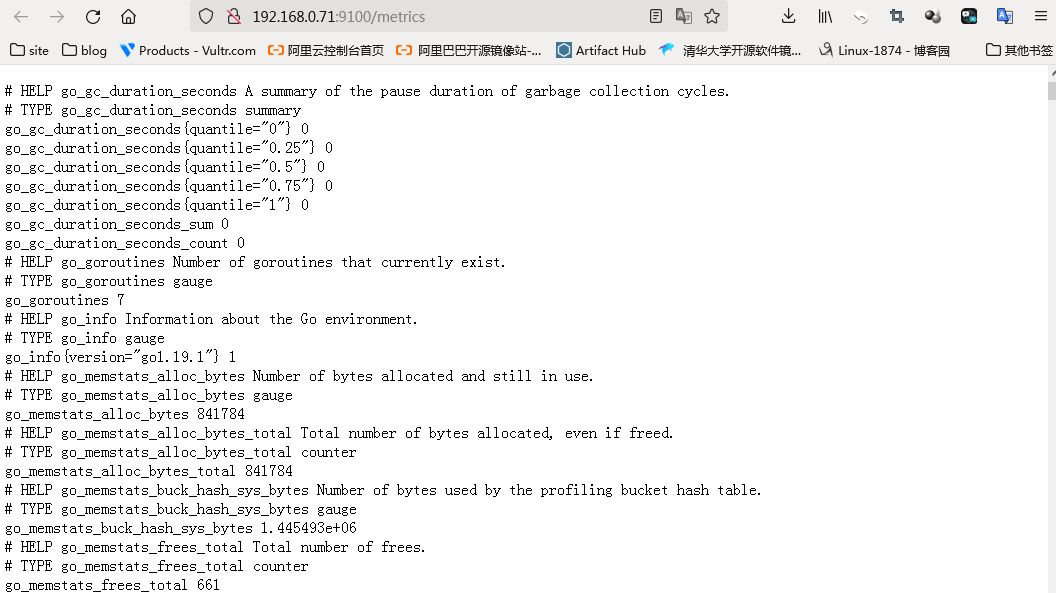

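Hint: node_exporter listens on TCP port 9100 by default; the collected metrics are served at /metrics on that port.

Verify: browse to port 9100 on ceph-mon01 and check whether metrics are being collected.

Hint: 192.168.0.71:9100/metrics returns a large number of metrics, which shows that node_exporter is deployed correctly.

Bringing the node_exporter metrics into the Prometheus server

Before doing so, let's first look at the Prometheus configuration file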

[root@ceph-mgr02 prometheus]# cat prometheus.yml

# my global config

global:

  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration

alerting:

  alertmanagers:

    - static_configs:

        - targets:

          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

  # - "first_rules.yml"

  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

  - job_name: "prometheus"

    # metrics_path defaults to '/metrics'

    # scheme defaults to 'http'.

    static_configs:

      - targets: ["localhost:9090"]

[root@ceph-mgr02 prometheus]#

Hint: the Prometheus configuration file is made up of the sections global, rule_files, scrape_configs, alerting, remote_write and remote_read.

global: the global configuration section;

scrape_configs: the set of scrape configurations, which defines the monitored targets and the parameters describing how to scrape their metrics. Typically each scrape configuration corresponds to one job, and the targets of a job can either be given directly through static configuration (static_configs) or be configured automatically through the service discovery mechanisms Prometheus supports;

alertmanager_config (the entries under alerting.alertmanagers): the set of Alertmanager instances Prometheus can use, and the parameters for interacting with them. Each Alertmanager can be given directly through static configuration (static_configs) or be configured automatically through the service discovery mechanisms Prometheus supports;

remote_write: configures the "remote write" mechanism. Define this section when Prometheus needs to store data in an external storage system (for example InfluxDB); Prometheus then sends samples over HTTP to the adapter specified by URL;

remote_read: configures the "remote read" mechanism. Prometheus hands incoming query requests to the adapter specified by URL; the adapter translates the query conditions into a query against the remote storage service and converts the response into a format Prometheus can use.

Commonly used global configuration parameters

global:

  # How frequently to scrape targets by default.

  [ scrape_interval: <duration> | default = 1m ]

  # How long until a scrape request times out.

  [ scrape_timeout: <duration> | default = 10s ]

  # How frequently to evaluate rules.

  [ evaluation_interval: <duration> | default = 1m ]

  # The labels to add to any time series or alerts when communicating with

  # external systems (federation, remote storage, Alertmanager).

  external_labels:

    [ <labelname>: <labelvalue> ... ]

# Rule files specifies a list of globs. Rules and alerts are read from

# all matching files.

rule_files:

  [ - <filepath_glob> ... ]

# A list of scrape configurations.

scrape_configs:

  [ - <scrape_config> ... ]

# Alerting specifies settings related to the Alertmanager.

alerting:

  alert_relabel_configs:

    [ - <relabel_config> ... ]

  alertmanagers:

    [ - <alertmanager_config> ... ]

# Settings related to the remote write feature.

remote_write:

  [ - <remote_write> ... ]

# Settings related to the remote read feature.

remote_read:

  [ - <remote_read> ... ]

Syntax for configuring a job with static_configs in the scrape section

# The targets specified by the static config.

targets:

  [ - '<host>' ]

# Labels assigned to all metrics scraped from the targets.

labels:

  [ <labelname>: <labelvalue> ... ]

Syntax of the target file when configuring a job with file_sd_configs

[

{

"targets": [ "<host>", ... ],

"labels": {

"<labelname>": "<labelvalue>", ...

}

},

...

]
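To make the format concrete, here is a minimal sketch that writes such a target file together with a job stanza pointing at it. The file names, the job name nodes and the role label are assumptions chosen for illustration; the target is the node_exporter address used in this post. Everything is written to a temporary directory so nothing on the host is touched.

```shell
#!/usr/bin/env bash
# Sketch only: write a file_sd target list and a job that reads it.
# File names, job name and label are illustrative assumptions.
workdir="$(mktemp -d)"

# Target file in the JSON format shown above.
cat > "$workdir/nodes.json" <<'EOF'
[
  {
    "targets": ["192.168.0.71:9100"],
    "labels": { "role": "ceph-mon" }
  }
]
EOF

# Job stanza meant to be placed under scrape_configs in prometheus.yml.
cat > "$workdir/job-file-sd.yml" <<EOF
  - job_name: "nodes"
    file_sd_configs:
      - files:
          - "$workdir/nodes.json"
        refresh_interval: 1m
EOF

cat "$workdir/job-file-sd.yml"
```

One reason to prefer this form over static_configs is that Prometheus re-reads the listed files when they change, so targets can be added or removed by editing the JSON file without restarting the server.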

Add the node_exporter metrics to the Prometheus server

Hint: node_exporter serves its metrics at /metrics by default; if that path has been changed, set metrics_path in the Prometheus configuration file to match.
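The job definition for this step is not reproduced as text in this post, so the following is a minimal sketch of what it could look like, not necessarily the exact file used on this host. The job name node_exporter is an arbitrary choice and the target is the ceph-mon01 address from the verification above; the file is written to a temporary path rather than over /usr/local/prometheus/prometheus.yml.

```shell
#!/usr/bin/env bash
# Sketch only: a prometheus.yml with an extra static job for node_exporter.
# The job name "node_exporter" is an arbitrary choice.
cfg="$(mktemp)"

cat > "$cfg" <<'EOF'
global:
  scrape_interval: 15s
  evaluation_interval: 15s

scrape_configs:
  - job_name: "prometheus"
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "node_exporter"
    # metrics_path: /metrics   # only needed if the exporter uses another path
    static_configs:
      - targets: ["192.168.0.71:9100"]
EOF

# promtool ships in the Prometheus tarball; validate only if it is on PATH.
if command -v promtool >/dev/null 2>&1; then
  promtool check config "$cfg"
fi

echo "wrote $cfg"
```

After reviewing the result, the new job can be merged into the real configuration file before restarting the server.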

Restart the Prometheus server

[root@ceph-mgr02 prometheus]# systemctl restart prometheus.service

[root@ceph-mgr02 prometheus]# systemctl status prometheus.service

● prometheus.service - The Prometheus 2 monitoring system and time series database.

Loaded: loaded (/usr/lib/systemd/system/prometheus.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2022-10-09 22:20:41 CST; 9s ago

Docs: https://prometheus.io

Main PID: 2344 (prometheus)

CGroup: /system.slice/prometheus.service

└─2344 /usr/local/prometheus/prometheus --storage.tsdb.path=/var/lib/prometheus --config.file=/usr/local/prometh...

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.163Z caller=head.go:542 level=info compo...hile"

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.179Z caller=head.go:613 level=info compo...ent=1

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.179Z caller=head.go:613 level=info compo...ent=1

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.179Z caller=head.go:619 level=info compo…19721ms

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.180Z caller=main.go:993 level=info fs_ty...MAGIC

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.180Z caller=main.go:996 level=info msg="...rted"

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.180Z caller=main.go:1177 level=info msg=...s.yml

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.181Z caller=main.go:1214 level=info msg="Comp…µs

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.181Z caller=main.go:957 level=info msg="...sts."

Oct 09 22:20:41 ceph-mgr02.ilinux.io prometheus[2344]: ts=2022-10-09T14:20:41.181Z caller=manager.go:941 level=info co...r..."

Hint: Some lines were ellipsized, use -l to show in full.

[root@ceph-mgr02 prometheus]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 172.16.30.75:6800 *:*

LISTEN 0 128 192.168.0.75:6800 *:*

LISTEN 0 128 172.16.30.75:6801 *:*

LISTEN 0 128 192.168.0.75:6801 *:*

LISTEN 0 128 192.168.0.75:6802 *:*

LISTEN 0 128 172.16.30.75:6802 *:*

LISTEN 0 128 192.168.0.75:6803 *:*

LISTEN 0 128 172.16.30.75:6803 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 5 *:8443 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:9090 [::]:*

[root@ceph-mgr02 prometheus]#

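Browse to port 9090 on the Prometheus server host

Hint: on port 9090 of the Prometheus host we can query the data collected by node_exporter, which shows that both the Prometheus server and node_exporter are deployed correctly.

Enabling the Prometheus module in Ceph mgr

Ceph Manager ships with many built-in modules, including the prometheus module, which exposes metrics directly in Prometheus format.

Hint: the Prometheus module listens on TCP port 9283 by default. Note that once the module is enabled, every node running an mgr listens on port 9283.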
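The command that switches the module on is not shown as text above; with the standard mgr module interface it would be ceph mgr module enable prometheus. The sketch below wraps it in a dry-run helper (the run helper and the DRY_RUN variable are made up for this example) so that by default it only prints what it would execute.

```shell
#!/usr/bin/env bash
# Sketch only: enable the mgr prometheus module, then list mgr services.
# DRY_RUN defaults to 1, so commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

enable_prometheus_module() {
  run ceph mgr module enable prometheus
  run ceph mgr services   # should then list a "prometheus" URL
}

enable_prometheus_module
```

Running it with DRY_RUN=0 on the admin node would execute the two commands for real.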

Verify: is the mgr host listening on port 9283, and is the port reachable?

[root@ceph-admin ~]# ceph mgr services

{

"dashboard": "https://0.0.0.0:8443/",

"prometheus": "http://ceph-mgr01.ilinux.io:9283/"

}

[root@ceph-admin ~]# ssh ceph-mgr01 'ss -tnl'

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 5 *:8443 *:*

LISTEN 0 128 172.16.30.74:6800 *:*

LISTEN 0 128 192.168.0.74:6800 *:*

LISTEN 0 128 192.168.0.74:6801 *:*

LISTEN 0 128 172.16.30.74:6801 *:*

LISTEN 0 128 172.16.30.74:6802 *:*

LISTEN 0 128 192.168.0.74:6802 *:*

LISTEN 0 128 192.168.0.74:6803 *:*

LISTEN 0 128 172.16.30.74:6803 *:*

LISTEN 0 128 192.168.0.74:6804 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 5 [::]:9283 [::]:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

[root@ceph-admin ~]# curl http://ceph-mgr01.ilinux.io:9283/

<!DOCTYPE html>

<html>

<head><title>Ceph Exporter</title></head>

<body>

<h1>Ceph Exporter</h1>

<p><a href='/metrics'>Metrics</a></p>

</body>

</html>[root@ceph-admin ~]#

Hint: port 9283 on ceph-mgr01 is in the listening state and can be reached.

Add the ceph exporter to the Prometheus server
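The scrape job for this step could look roughly like the following sketch. The job name ceph is an arbitrary choice, the function name is made up, and honor_labels: true is included because the Ceph documentation suggests it so that the labels set by the exporter are kept; treat it as an assumption to check against your version.

```shell
#!/usr/bin/env bash
# Sketch only: print a scrape job for the Ceph mgr prometheus module.
# The job name "ceph" is an arbitrary choice.

# Hypothetical helper. Arguments: one or more host:port targets.
ceph_scrape_job() {
  local target
  echo '  - job_name: "ceph"'
  echo '    honor_labels: true'
  echo '    static_configs:'
  echo '      - targets:'
  for target in "$@"; do
    echo "          - \"${target}\""
  done
}

# Paste the output under scrape_configs in prometheus.yml.
ceph_scrape_job "ceph-mgr01.ilinux.io:9283"
```

Since every mgr node listens on 9283 once the module is enabled, further mgr hosts can be passed as additional arguments.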

Restart the Prometheus server

[root@ceph-mgr02 prometheus]# systemctl restart prometheus.service

[root@ceph-mgr02 prometheus]# systemctl status prometheus.service

● prometheus.service - The Prometheus 2 monitoring system and time series database.

Loaded: loaded (/usr/lib/systemd/system/prometheus.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2022-10-09 22:37:16 CST; 5s ago

Docs: https://prometheus.io

Main PID: 2481 (prometheus)

CGroup: /system.slice/prometheus.service

└─2481 /usr/local/prometheus/prometheus --storage.tsdb.path=/var/lib/prometheus --config.file=/usr/local/prometh...

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.347Z caller=head.go:613 level=info compo...ent=2

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.387Z caller=head.go:613 level=info compo...ent=2

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.393Z caller=head.go:613 level=info compo...ent=2

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.393Z caller=head.go:619 level=info compo…77763ms

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.394Z caller=main.go:993 level=info fs_ty...MAGIC

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.394Z caller=main.go:996 level=info msg="...rted"

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.394Z caller=main.go:1177 level=info msg=...s.yml

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.395Z caller=main.go:1214 level=info msg="Comp…µs

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.395Z caller=main.go:957 level=info msg="...sts."

Oct 09 22:37:16 ceph-mgr02.ilinux.io prometheus[2481]: ts=2022-10-09T14:37:16.395Z caller=manager.go:941 level=info co...r..."

Hint: Some lines were ellipsized, use -l to show in full.

[root@ceph-mgr02 prometheus]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 172.16.30.75:6800 *:*

LISTEN 0 128 192.168.0.75:6800 *:*

LISTEN 0 128 172.16.30.75:6801 *:*

LISTEN 0 128 192.168.0.75:6801 *:*

LISTEN 0 128 192.168.0.75:6802 *:*

LISTEN 0 128 172.16.30.75:6802 *:*

LISTEN 0 128 192.168.0.75:6803 *:*

LISTEN 0 128 172.16.30.75:6803 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 5 *:8443 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:9090 [::]:*

LISTEN 0 5 [::]:9283 [::]:*

[root@ceph-mgr02 prometheus]#

Browse to port 9090 on the Prometheus server and check whether the ceph exporter metrics are there.

Hint: port 9090 on the Prometheus server now shows many metrics whose names start with ceph, which means the Prometheus server is scraping the ceph exporter.

Deploying Grafana to display the Ceph metrics

Create the repository configuration file for grafana

[root@ceph-mgr02 prometheus]# cat /etc/yum.repos.d/grafana.repo

[grafana]

name=grafana

baseurl=https://mirrors.aliyun.com/grafana/yum/rpm

repo_gpgcheck=0

enabled=1

gpgcheck=0

[root@ceph-mgr02 prometheus]#

Install grafana

[root@ceph-mgr02 prometheus]# yum install grafana -y

Start grafana

[root@ceph-mgr02 prometheus]# systemctl start grafana-server.service

[root@ceph-mgr02 prometheus]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 172.16.30.75:6800 *:*

LISTEN 0 128 192.168.0.75:6800 *:*

LISTEN 0 128 172.16.30.75:6801 *:*

LISTEN 0 128 192.168.0.75:6801 *:*

LISTEN 0 128 192.168.0.75:6802 *:*

LISTEN 0 128 172.16.30.75:6802 *:*

LISTEN 0 128 192.168.0.75:6803 *:*

LISTEN 0 128 172.16.30.75:6803 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 5 *:8443 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 128 [::]:3000 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:9090 [::]:*

LISTEN 0 5 [::]:9283 [::]:*

[root@ceph-mgr02 prometheus]#

Hint: grafana listens on TCP port 3000 by default; if it starts without errors and port 3000 is listening, grafana is up.

Browse to port 3000 on the grafana host to configure grafana

Hint: grafana's default user name is admin and the password is also admin. On first login it asks you to change the password; for this test I left it unchanged, which does not affect use.

Add the Prometheus data source

Hint: only the address of the Prometheus server needs to be filled in; if authentication is enabled on Prometheus, the credentials must be entered as well.
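The same data source can also be described in a provisioning file instead of being set up in the UI. A minimal sketch, assuming Prometheus is reachable at http://ceph-mgr02.ilinux.io:9090 (the host it was deployed on above) and that Grafana uses its default provisioning directory; the file is written to a temporary directory here.

```shell
#!/usr/bin/env bash
# Sketch only: a Grafana data source provisioning file for Prometheus.
# On the Grafana host it would normally be placed under
# /etc/grafana/provisioning/datasources/ (assumed default layout).
outdir="$(mktemp -d)"

cat > "$outdir/prometheus.yaml" <<'EOF'
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    url: http://ceph-mgr02.ilinux.io:9090
    isDefault: true
EOF

cat "$outdir/prometheus.yaml"
```

Grafana reads provisioning files at startup, so the service would need a restart after the file is copied into place.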

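Import the Prometheus template

Hint: this template is a panel dedicated to Prometheus itself; we can of course also define for ourselves which data to display.

Download the ceph template: https://grafana.com/grafana/dashboards/7056-ceph-cluster/

Import the ceph template

OK, that concludes this test of monitoring ceph with Prometheus.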