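Distributed Storage Systems: Basic CephFS Usage on a Ceph Cluster

In the previous post we covered the RBD interface on top of Ceph; for a recap see https://www.cnblogs.com/qiuhom-1874/p/16753098.html. Today we look at Ceph's other client interface, CephFS.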

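CephFS overview

The file system is still the most general and most widely used storage access interface in computing. Even the RBD block devices we discussed earlier are, in the vast majority of cases, partitioned, formatted, and mounted as a file system; raw block devices are rarely used directly. For this reason Ceph also offers a file system interface, CephFS, among its client interfaces. Unlike RBD, CephFS needs an MDS (metadata server) process running on the RADOS storage cluster to manage the file system's metadata. As we know, whatever the client type, data stored in RADOS goes through a storage pool and ends up on the corresponding OSDs. The MDS runs as a daemon and provides the file system service to its clients; every client access first contacts the MDS to look up the relevant metadata.

The MDS itself does not persist any metadata, however. The file system's metadata is stored in one RADOS pool and the file data in another. This makes the MDS a stateless service, somewhat like the apiserver in Kubernetes, which keeps no data itself and stores everything in etcd. Because the MDS is stateless, several MDS daemons can serve at the same time, so the metadata server is far less likely to become the bottleneck it is in traditional file storage systems.

Tip: CephFS relies on a dedicated MDS (MetaData Server) component to manage metadata and to present clients with an inverted tree hierarchy. Metadata is cached in the MDS's memory, metadata updates are journaled and streamed to the RADOS cluster, and each namespace in the hierarchy is instantiated as a directory and stored as a dedicated RADOS object.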

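CephFS architecture

Tip: CephFS is built on libcephfs, and libcephfs is built on librados; in other words, CephFS sits on top of librados and exposes a file system service. It can be used in two ways: mounted through the kernel module (ceph), or mounted through user-space FUSE.

Creating CephFS

As described above, CephFS first needs two storage pools, one for metadata and one for data. We covered this in the earlier post on enabling the Ceph access interfaces; for a recap see https://www.cnblogs.com/qiuhom-1874/p/16727620.html. I will not go over it again here.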
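For readers who have not seen that earlier post, the steps can be sketched roughly as follows. This is a minimal sketch and not necessarily the exact commands used there: the pool and file system names are the ones that appear in this article, while the placement-group count of 64, the `run` wrapper, and the `DRY_RUN` switch are assumptions made for illustration. With `DRY_RUN=1` (the default here) the commands are only printed.

```shell
#!/bin/sh
# Sketch: create the two pools CephFS needs, then the file system itself.
# DRY_RUN=1 (default) only prints the commands; set DRY_RUN=0 on an admin
# host to actually run them. pg_num=64 is an illustrative assumption.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_cephfs() {
    fs_name="$1"; meta_pool="$2"; data_pool="$3"; pg_num="$4"
    run ceph osd pool create "$meta_pool" "$pg_num" "$pg_num"
    run ceph osd pool create "$data_pool" "$pg_num" "$pg_num"
    # Argument order matters: metadata pool first, then data pool.
    run ceph fs new "$fs_name" "$meta_pool" "$data_pool"
}

create_cephfs cephfs cephfs-metadatapool cephfs-datapool 64
```

At least one MDS daemon must also be running before the file system becomes active; deploying it is outside this sketch.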

Checking CephFS status

[root@ceph-admin ~]# ceph fs status cephfs
cephfs - 0 clients
======
+------+--------+------------+---------------+-------+-------+
| Rank | State  |    MDS     |    Activity   |  dns  |  inos |
+------+--------+------------+---------------+-------+-------+
|  0   | active | ceph-mon02 | Reqs:    0 /s |   10  |   13  |
+------+--------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
|         Pool        |   type   |  used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata |  2286 |  280G |
|   cephfs-datapool   |   data   |    0  |  280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[root@ceph-admin ~]#

CephFS client account

On a cluster with CephX authentication enabled, a CephFS client must authenticate before it can mount and access the file system.

[root@ceph-admin ~]# ceph auth get-or-create client.fsclient mon 'allow r' mds 'allow rw' osd 'allow rw pool=cephfs-datapool'

[client.fsclient]

key = AQDx2z5jgeqiIRAAIxQFz09BF99kcAYxiFwOWg==

[root@ceph-admin ~]# ceph auth get client.fsclient

exported keyring for client.fsclient

[client.fsclient]

key = AQDx2z5jgeqiIRAAIxQFz09BF99kcAYxiFwOWg==

caps mds = "allow rw"

caps mon = "allow r"

caps osd = "allow rw pool=cephfs-datapool"

[root@ceph-admin ~]#

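Tip: note that the client of the metadata pool is the MDS; all reads and writes of metadata are carried out by the MDS. A CephFS client needs no permissions on the metadata pool, so here we only grant the user read/write access to the data pool. On the monitors the user needs read permission only, and on the MDS it needs read/write permission.

Save the user's key to a secret file, which the client uses to authenticate when mounting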

[root@ceph-admin ~]# ceph auth print-key client.fsclient
AQDx2z5jgeqiIRAAIxQFz09BF99kcAYxiFwOWg==[root@ceph-admin ~]# ceph auth print-key client.fsclient -o fsclient.key
[root@ceph-admin ~]# cat fsclient.key
AQDx2z5jgeqiIRAAIxQFz09BF99kcAYxiFwOWg==[root@ceph-admin ~]#

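Tip: only the key needs to be exported. The client has no use for the capability information; it presents the key to Ceph for authentication, and Ceph already knows which capabilities belong to it.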
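Because the secret file is a credential, it may be worth restricting who can read it. The sketch below is my own addition rather than part of the steps above: `save_secret` is a hypothetical helper (not a Ceph command) that writes a key without a trailing newline, as `ceph auth print-key` does, and limits the file to its owner.

```shell
#!/bin/sh
# Sketch: write a CephX secret to a file that only its owner can read.
# save_secret is a hypothetical helper name, not part of Ceph.
save_secret() {
    key="$1"; path="$2"
    (
        umask 077                      # a newly created file gets mode 0600
        printf '%s' "$key" > "$path"   # no trailing newline, like print-key
    )
    chmod 600 "$path"                  # also tighten a pre-existing file
}

# Typical use on a host with admin credentials (not executed here):
#   save_secret "$(ceph auth print-key client.fsclient)" /etc/ceph/fsclient.key
```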

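The key file must be stored on the client host that will mount CephFS; we can push it there with scp. Besides the key file, the client host also needs the Ceph cluster's configuration file.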

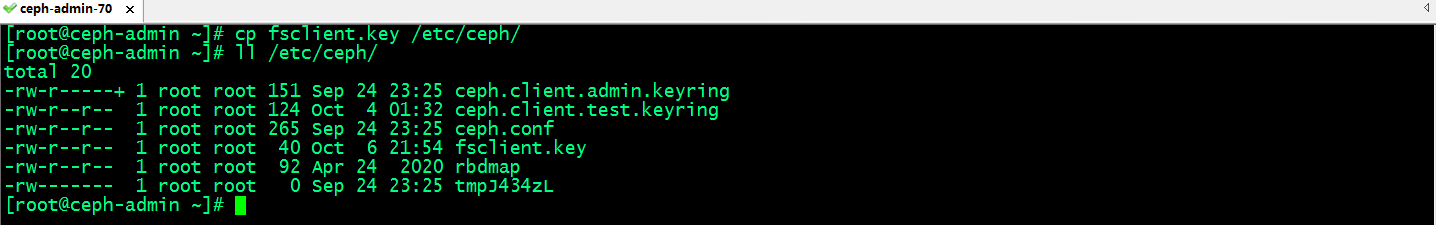

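Tip: here I use the admin host as the client and copy the key file into /etc/ceph/. When mounting with the kernel module, we simply point mount at the key file in that directory.

Tools and modules required by the kernel client

1. The ceph.ko kernel module

2. The ceph-common package

3. The ceph.conf configuration file and the key file used for authentication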
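The three prerequisites can be checked in one pass before attempting a mount. The following is a minimal sketch: `check_kernel_client` is a hypothetical helper, and it assumes that the `mount.ceph` helper is what ceph-common contributes to the mount path.

```shell
#!/bin/sh
# Sketch: report which kernel-client prerequisites are missing.
# check_kernel_client is a hypothetical helper; pass the configuration
# directory and the name of the secret file used for the mount.
check_kernel_client() {
    conf_dir="$1"; secret="$2"; missing=0

    # 1. the ceph kernel module must exist for the running kernel
    if ! modinfo ceph >/dev/null 2>&1; then
        echo "missing: ceph kernel module"; missing=1
    fi
    # 2. assumption: mount.ceph is provided by the ceph-common package
    if ! command -v mount.ceph >/dev/null 2>&1; then
        echo "missing: mount.ceph (ceph-common)"; missing=1
    fi
    # 3. cluster configuration and the client's secret file
    for f in "$conf_dir/ceph.conf" "$conf_dir/$secret"; do
        if [ ! -r "$f" ]; then
            echo "missing: $f"; missing=1
        fi
    done
    return "$missing"
}
```

For the setup in this article the call would be `check_kernel_client /etc/ceph fsclient.key`; a zero exit status means nothing was reported missing.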

[root@ceph-admin ~]# ls /lib/modules/3.10.0-1160.76.1.el7.x86_64/kernel/fs/ceph/
ceph.ko.xz
[root@ceph-admin ~]# modinfo ceph
filename:       /lib/modules/3.10.0-1160.76.1.el7.x86_64/kernel/fs/ceph/ceph.ko.xz
license:        GPL
description:    Ceph filesystem for Linux
author:         Patience Warnick <[email protected]>
author:         Yehuda Sadeh <[email protected]>
author:         Sage Weil <[email protected]>
alias:          fs-ceph
retpoline:      Y
rhelversion:    7.9
srcversion:     B1FF0EC5E9EF413CE8D9D1C
depends:        libceph
intree:         Y
vermagic:       3.10.0-1160.76.1.el7.x86_64 SMP mod_unload modversions
signer:         CentOS Linux kernel signing key
sig_key:        C6:93:65:52:C5:A1:E9:97:0B:A2:4C:98:1A:C4:51:A6:BC:11:09:B9
sig_hashalgo:   sha256
[root@ceph-admin ~]# yum info ceph-common
Loaded plugins: fastestmirror
Repository epel is listed more than once in the configuration
Repository epel-debuginfo is listed more than once in the configuration
Repository epel-source is listed more than once in the configuration
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
Installed Packages
Name        : ceph-common
Arch        : x86_64
Epoch       : 2
Version     : 13.2.10
Release     : 0.el7
Size        : 44 M
Repo        : installed
From repo   : Ceph
Summary     : Ceph Common
URL         : http://ceph.com/
License     : LGPL-2.1 and CC-BY-SA-3.0 and GPL-2.0 and BSL-1.0 and BSD-3-Clause and MIT
Description : Common utilities to mount and interact with a ceph storage cluster.
            : Comprised of files that are common to Ceph clients and servers.
[root@ceph-admin ~]# ls /etc/ceph/
ceph.client.admin.keyring  ceph.client.test.keyring  ceph.conf  fsclient.key  rbdmap  tmpJ434zL
[root@ceph-admin ~]#

Mounting CephFS with mount

[root@ceph-admin ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 899M     0  899M   0% /dev
tmpfs                    910M     0  910M   0% /dev/shm
tmpfs                    910M  9.6M  901M   2% /run
tmpfs                    910M     0  910M   0% /sys/fs/cgroup
/dev/mapper/centos-root   49G  3.6G   45G   8% /
/dev/sda1                509M  176M  334M  35% /boot
tmpfs                    182M     0  182M   0% /run/user/0
[root@ceph-admin ~]# mount -t ceph ceph-mon01:6789,ceph-mon02:6789,ceph-mon03:6789:/ /mnt -o name=fsclient,secretfile=/etc/ceph/fsclient.key
[root@ceph-admin ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 899M     0  899M   0% /dev
tmpfs                    910M     0  910M   0% /dev/shm
tmpfs                    910M  9.6M  901M   2% /run
tmpfs                    910M     0  910M   0% /sys/fs/cgroup
/dev/mapper/centos-root   49G  3.6G   45G   8% /
/dev/sda1                509M  176M  334M  35% /boot
tmpfs                    182M     0  182M   0% /run/user/0
192.168.0.71:6789,172.16.30.71:6789,192.168.0.72:6789,172.16.30.72:6789,192.168.0.73:6789,172.16.30.73:6789:/  281G     0  281G   0% /mnt
[root@ceph-admin ~]# mount |tail -1
192.168.0.71:6789,172.16.30.71:6789,192.168.0.72:6789,172.16.30.72:6789,192.168.0.73:6789,172.16.30.73:6789:/ on /mnt type ceph (rw,relatime,name=fsclient,secret=<hidden>,acl,wsize=16777216)
[root@ceph-admin ~]#

Checking the mount status

[root@ceph-admin ~]# stat -f /mnt

File: "/mnt"

ID: a0de3ae372c48f48 Namelen: 255 Type: ceph

Block size: 4194304 Fundamental block size: 4194304

Blocks: Total: 71706 Free: 71706 Available: 71706

Inodes: Total: 0 Free: -1

[root@ceph-admin ~]#
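The block counts reported by `stat -f` line up with the sizes seen elsewhere in this post: 71706 blocks of 4194304 bytes (4 MiB) come to a little over 280 GiB, which agrees with the 280G pool availability from `ceph fs status`; `df -h` shows 281G, presumably because it rounds up. A small sketch of the arithmetic, with `fs_bytes` and `fs_gib` as hypothetical helper names:

```shell
#!/bin/sh
# Sketch: turn the "stat -f" block size and block count into bytes and GiB.
# fs_bytes and fs_gib are hypothetical helper names.
fs_bytes() { echo $(( $1 * $2 )); }                        # block size * blocks
fs_gib()   { echo $(( $1 * $2 / 1024 / 1024 / 1024 )); }   # rounded down

fs_bytes 4194304 71706   # prints 300756762624
fs_gib   4194304 71706   # prints 280
```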

Store some data under /mnt and check that it is saved correctly

[root@ceph-admin ~]# find /usr/share/ -type f -name '*.jpg' -exec cp {} /mnt \;

[root@ceph-admin ~]# ll /mnt

total 3392

-rw-r--r-- 1 root root 961243 Oct 6 22:17 day.jpg

-rw-r--r-- 1 root root 961243 Oct 6 22:17 default.jpg

-rw-r--r-- 1 root root 980265 Oct 6 22:17 morning.jpg

-rw-r--r-- 1 root root 569714 Oct 6 22:17 night.jpg

[root@ceph-admin ~]#

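Tip: as you can see, files can be stored under /mnt without problems.

Writing the mount information to /etc/fstab

Tip: when a network file system is added to fstab, it is advisable to include the _netdev option, which marks the file system as requiring network access so that the system does not try to mount it before the network is up. Without it, a mount attempt that cannot succeed at boot may be retried over and over, and the system may fail to come up.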
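The exact fstab line is not reproduced in the text above. A line consistent with the earlier `mount -t ceph` command might look like the output of the sketch below; `kernel_fstab_line` is a hypothetical helper that only prints the entry, and the field values are taken from that mount command plus the `_netdev` option just discussed.

```shell
#!/bin/sh
# Sketch: build an /etc/fstab line for the kernel CephFS client.
# kernel_fstab_line is a hypothetical helper; it prints and changes nothing.
kernel_fstab_line() {
    mons="$1"; mnt="$2"; user="$3"; secretfile="$4"
    # fields: device, mount point, type, options, dump, fsck pass
    printf '%s:/ %s ceph name=%s,secretfile=%s,_netdev 0 0\n' \
        "$mons" "$mnt" "$user" "$secretfile"
}

kernel_fstab_line ceph-mon01:6789,ceph-mon02:6789,ceph-mon03:6789 \
    /mnt fsclient /etc/ceph/fsclient.key
```

After reviewing the printed line, it can be appended to /etc/fstab by hand.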

Test: unmount /mnt, then mount it again from the fstab entry and check that it works

[root@ceph-admin ~]# umount /mnt
[root@ceph-admin ~]# ls /mnt
[root@ceph-admin ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 899M     0  899M   0% /dev
tmpfs                    910M     0  910M   0% /dev/shm
tmpfs                    910M  9.6M  901M   2% /run
tmpfs                    910M     0  910M   0% /sys/fs/cgroup
/dev/mapper/centos-root   49G  3.6G   45G   8% /
/dev/sda1                509M  176M  334M  35% /boot
tmpfs                    182M     0  182M   0% /run/user/0
[root@ceph-admin ~]# mount -a
[root@ceph-admin ~]# ls /mnt
day.jpg  default.jpg  morning.jpg  night.jpg
[root@ceph-admin ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 899M     0  899M   0% /dev
tmpfs                    910M     0  910M   0% /dev/shm
tmpfs                    910M  9.6M  901M   2% /run
tmpfs                    910M     0  910M   0% /sys/fs/cgroup
/dev/mapper/centos-root   49G  3.6G   45G   8% /
/dev/sda1                509M  176M  334M  35% /boot
tmpfs                    182M     0  182M   0% /run/user/0
192.168.0.71:6789,172.16.30.71:6789,192.168.0.72:6789,172.16.30.72:6789,192.168.0.73:6789,172.16.30.73:6789:/  281G     0  281G   0% /mnt
[root@ceph-admin ~]#

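Tip: mount -a mounts CephFS on /mnt directly, which shows that the fstab entry is correct. That completes the test of mounting CephFS with the kernel module (ceph.ko). Next, let's look at mounting CephFS with user-space FUSE.

Mounting CephFS with FUSE

FUSE, short for Filesystem in Userspace, lets non-privileged users create file systems without touching kernel code. To prepare the client host, install the ceph-fuse package and obtain the client account's keyring file and the ceph.conf configuration file; ceph-common is not needed here.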

[root@ceph-admin ~]# yum install -y ceph-fuse
Loaded plugins: fastestmirror
Repository epel is listed more than once in the configuration
Repository epel-debuginfo is listed more than once in the configuration
Repository epel-source is listed more than once in the configuration
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
Ceph                                  | 1.5 kB  00:00:00
Ceph-noarch                           | 1.5 kB  00:00:00
base                                  | 3.6 kB  00:00:00
ceph-source                           | 1.5 kB  00:00:00
epel                                  | 4.7 kB  00:00:00
extras                                | 2.9 kB  00:00:00
updates                               | 2.9 kB  00:00:00
(1/4): extras/7/x86_64/primary_db     | 249 kB  00:00:00
(2/4): epel/x86_64/updateinfo         | 1.1 MB  00:00:00
(3/4): epel/x86_64/primary_db         | 7.0 MB  00:00:01
(4/4): updates/7/x86_64/primary_db    |  17 MB  00:00:02
Resolving Dependencies
--> Running transaction check
---> Package ceph-fuse.x86_64 2:13.2.10-0.el7 will be installed
--> Processing Dependency: fuse for package: 2:ceph-fuse-13.2.10-0.el7.x86_64
--> Running transaction check
---> Package fuse.x86_64 0:2.9.2-11.el7 will be installed
--> Finished Dependency Resolution
Dependencies Resolved
==================================================================================================================================
 Package          Arch        Version               Repository      Size
==================================================================================================================================
Installing:
 ceph-fuse        x86_64      2:13.2.10-0.el7       Ceph           490 k
Installing for dependencies:
 fuse             x86_64      2.9.2-11.el7          base            86 k
Transaction Summary
==================================================================================================================================
Install  1 Package (+1 Dependent package)
Total download size: 576 k
Installed size: 1.6 M
Downloading packages:
(1/2): fuse-2.9.2-11.el7.x86_64.rpm           |  86 kB  00:00:00
(2/2): ceph-fuse-13.2.10-0.el7.x86_64.rpm     | 490 kB  00:00:15
----------------------------------------------------------------------------------------------------------------------------------
Total                                 37 kB/s | 576 kB  00:00:15
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Installing : fuse-2.9.2-11.el7.x86_64              1/2
  Installing : 2:ceph-fuse-13.2.10-0.el7.x86_64      2/2
  Verifying  : 2:ceph-fuse-13.2.10-0.el7.x86_64      1/2
  Verifying  : fuse-2.9.2-11.el7.x86_64              2/2
Installed:
  ceph-fuse.x86_64 2:13.2.10-0.el7
Dependency Installed:
  fuse.x86_64 0:2.9.2-11.el7
Complete!
[root@ceph-admin ~]#

Mounting CephFS

[root@ceph-admin ~]# ceph-fuse -n client.fsclient -m ceph-mon01:6789,ceph-mon02:6789,ceph-mon03:6789 /mnt
2022-10-06 23:13:17.185 7fae97fbec00 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.fsclient.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
2022-10-06 23:13:17.185 7fae97fbec00 -1 monclient: ERROR: missing keyring, cannot use cephx for authentication
failed to fetch mon config (--no-mon-config to skip)
[root@ceph-admin ~]#

Tip: the error tells us that no keyring file for this user was found under /etc/ceph/.
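The error message lists the candidate paths in the order they were tried. The sketch below mimics that lookup so the result can be checked before running ceph-fuse; `find_keyring` is a hypothetical helper, and the `ceph.` prefix in the first two file names assumes the default cluster name.

```shell
#!/bin/sh
# Sketch: mimic the keyring lookup order shown in the error message.
# find_keyring is a hypothetical helper, not part of Ceph. The "ceph."
# prefix assumes the default cluster name "ceph".
find_keyring() {
    dir="$1"; name="$2"      # e.g. /etc/ceph and client.fsclient
    for f in "$dir/ceph.$name.keyring" "$dir/ceph.keyring" \
             "$dir/keyring" "$dir/keyring.bin"; do
        if [ -f "$f" ]; then
            echo "$f"        # first match wins
            return 0
        fi
    done
    echo "no keyring for $name in $dir" >&2
    return 1
}
```

On the client in this article, `find_keyring /etc/ceph client.fsclient` would fail at this point, because only the bare key file fsclient.key has been copied there so far.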

Export the key information of the client.fsclient user and store it under /etc/ceph as ceph.client.fsclient.keyring

[root@ceph-admin ~]# ceph auth get client.fsclient -o /etc/ceph/ceph.client.fsclient.keyring

exported keyring for client.fsclient

[root@ceph-admin ~]# cat /etc/ceph/ceph.client.fsclient.keyring

[client.fsclient]

key = AQDx2z5jgeqiIRAAIxQFz09BF99kcAYxiFwOWg==

caps mds = "allow rw"

caps mon = "allow r"

caps osd = "allow rw pool=cephfs-datapool"

[root@ceph-admin ~]#

Mount CephFS with ceph-fuse again

[root@ceph-admin ~]# ceph-fuse -n client.fsclient -m ceph-mon01:6789,ceph-mon02:6789,ceph-mon03:6789 /mnt
2022-10-06 23:16:43.066 7fd51d9c0c00 -1 init, newargv = 0x55f0016ebd40 newargc=7
ceph-fuse[8096]: starting ceph client
ceph-fuse[8096]: starting fuse
[root@ceph-admin ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 899M     0  899M   0% /dev
tmpfs                    910M     0  910M   0% /dev/shm
tmpfs                    910M  9.6M  901M   2% /run
tmpfs                    910M     0  910M   0% /sys/fs/cgroup
/dev/mapper/centos-root   49G  3.6G   45G   8% /
/dev/sda1                509M  176M  334M  35% /boot
tmpfs                    182M     0  182M   0% /run/user/0
ceph-fuse                281G  4.0M  281G   1% /mnt
[root@ceph-admin ~]# ll /mnt
total 3392
-rw-r--r-- 1 root root 961243 Oct  6 22:17 day.jpg
-rw-r--r-- 1 root root 961243 Oct  6 22:17 default.jpg
-rw-r--r-- 1 root root 980265 Oct  6 22:17 morning.jpg
-rw-r--r-- 1 root root 569714 Oct  6 22:17 night.jpg
[root@ceph-admin ~]#

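Writing the mount information to /etc/fstab

Tip: when CephFS is mounted through FUSE, the device field is none and the type is fuse.ceph. The mount options only need ceph.id (the user name authorized in Ceph, without the client. prefix) and the path to the Ceph configuration file. No key file has to be specified, because ceph-fuse automatically looks in /etc/ceph/ for the keyring file whose name matches the user given by ceph.id.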
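A FUSE fstab entry matching this description might look like the output of the sketch below. `fuse_fstab_line` is a hypothetical helper that only prints the line; adding `_netdev` and `defaults` to the options is an assumption on my part, not something the tip requires.

```shell
#!/bin/sh
# Sketch: build an /etc/fstab line for a ceph-fuse mount.
# fuse_fstab_line is a hypothetical helper; it prints and changes nothing.
fuse_fstab_line() {
    mnt="$1"
    id="${2#client.}"        # ceph.id takes the name without "client."
    conf="$3"
    # fields: device (none), mount point, type, options, dump, fsck pass
    printf 'none %s fuse.ceph ceph.id=%s,ceph.conf=%s,_netdev,defaults 0 0\n' \
        "$mnt" "$id" "$conf"
}

fuse_fstab_line /mnt client.fsclient /etc/ceph/ceph.conf
```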

Test: mount from the fstab entry and check that it works

[root@ceph-admin ~]# df
Filesystem              1K-blocks    Used Available Use% Mounted on
devtmpfs                   919632       0    919632   0% /dev
tmpfs                      931496       0    931496   0% /dev/shm
tmpfs                      931496    9744    921752   2% /run
tmpfs                      931496       0    931496   0% /sys/fs/cgroup
/dev/mapper/centos-root  50827012 3718492  47108520   8% /
/dev/sda1                  520868  179572    341296  35% /boot
tmpfs                      186300       0    186300   0% /run/user/0
[root@ceph-admin ~]# ll /mnt
total 0
[root@ceph-admin ~]# mount -a
ceph-fuse[8770]: starting ceph client
2022-10-06 23:25:57.230 7ff21e3f7c00 -1 init, newargv = 0x5614f1bad9d0 newargc=9
ceph-fuse[8770]: starting fuse
[root@ceph-admin ~]# mount |tail -2
fusectl on /sys/fs/fuse/connections type fusectl (rw,relatime)
ceph-fuse on /mnt type fuse.ceph-fuse (rw,relatime,user_id=0,group_id=0,allow_other)
[root@ceph-admin ~]# ll /mnt
total 3392
-rw-r--r-- 1 root root 961243 Oct  6 22:17 day.jpg
-rw-r--r-- 1 root root 961243 Oct  6 22:17 default.jpg
-rw-r--r-- 1 root root 980265 Oct  6 22:17 morning.jpg
-rw-r--r-- 1 root root 569714 Oct  6 22:17 night.jpg
[root@ceph-admin ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 899M     0  899M   0% /dev
tmpfs                    910M     0  910M   0% /dev/shm
tmpfs                    910M  9.6M  901M   2% /run
tmpfs                    910M     0  910M   0% /sys/fs/cgroup
/dev/mapper/centos-root   49G  3.6G   45G   8% /
/dev/sda1                509M  176M  334M  35% /boot
tmpfs                    182M     0  182M   0% /run/user/0
ceph-fuse                281G  4.0M  281G   1% /mnt
[root@ceph-admin ~]#

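Tip: mount -a reads the fstab and mounts the file system as expected, which shows that the entry is correct.

Ways to unmount the file system

The first is umount followed by the mount point.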

[root@ceph-admin ~]# mount |tail -1
ceph-fuse on /mnt type fuse.ceph-fuse (rw,relatime,user_id=0,group_id=0,allow_other)
[root@ceph-admin ~]# ll /mnt
total 3392
-rw-r--r-- 1 root root 961243 Oct  6 22:17 day.jpg
-rw-r--r-- 1 root root 961243 Oct  6 22:17 default.jpg
-rw-r--r-- 1 root root 980265 Oct  6 22:17 morning.jpg
-rw-r--r-- 1 root root 569714 Oct  6 22:17 night.jpg
[root@ceph-admin ~]# umount /mnt
[root@ceph-admin ~]# ll /mnt
total 0
[root@ceph-admin ~]#

The second is fusermount -u followed by the mount point.

[root@ceph-admin ~]# mount -a
ceph-fuse[9717]: starting ceph client
2022-10-06 23:40:55.540 7f169dbc4c00 -1 init, newargv = 0x55859177fa40 newargc=9
ceph-fuse[9717]: starting fuse
[root@ceph-admin ~]# ll /mnt
total 3392
-rw-r--r-- 1 root root 961243 Oct  6 22:17 day.jpg
-rw-r--r-- 1 root root 961243 Oct  6 22:17 default.jpg
-rw-r--r-- 1 root root 980265 Oct  6 22:17 morning.jpg
-rw-r--r-- 1 root root 569714 Oct  6 22:17 night.jpg
[root@ceph-admin ~]# fusermount -u /mnt
[root@ceph-admin ~]# ll /mnt
total 0
[root@ceph-admin ~]#
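Both commands worked on the FUSE mount here; for a kernel mount (type ceph) I would expect only umount to apply. If a script has to handle either kind of mount, it could choose the command from the file system type, roughly as in the sketch below. `mount_fstype` and `unmount_command` are hypothetical helpers that only print what they would run.

```shell
#!/bin/sh
# Sketch: pick an unmount command from the file system type of a mount point.
# Both helpers are hypothetical names; nothing is unmounted here.
mount_fstype() {
    # $1 = mount point, $2 = mounts table (defaults to /proc/mounts)
    awk -v m="$1" '$2 == m { t = $3 } END { if (t != "") print t }' \
        "${2:-/proc/mounts}"
}

unmount_command() {
    mnt="$1"; fstype="$2"
    case "$fstype" in
        "")     echo "$mnt is not mounted" >&2; return 1 ;;
        fuse.*) echo "fusermount -u $mnt" ;;   # e.g. fuse.ceph-fuse
        *)      echo "umount $mnt" ;;          # e.g. kernel type ceph
    esac
}

# Example (prints the command instead of running it):
#   unmount_command /mnt "$(mount_fstype /mnt)"
```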

OK, that completes the test of mounting CephFS through user-space FUSE.