Rumor Detection (PLAN): "Interpretable Rumor Detection in Microblogs by Attending to User Interactions"

Paper information

Paper title: Interpretable Rumor Detection in Microblogs by Attending to User Interactions

Authors: Ling Min Serena Khoo, Hai Leong Chieu, Zhong Qian, Jing Jiang

Venue: AAAI, 2020

Paper link: download

Code: download

Background

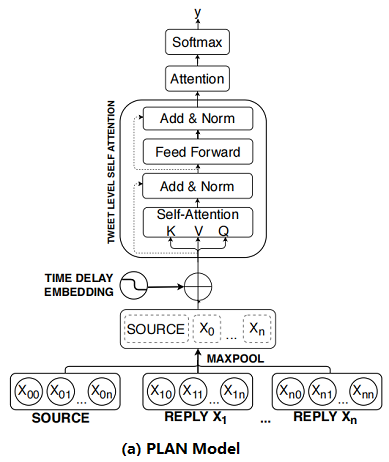

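Rumor detection based on the wisdom of the crowd: Figure 1

The paper's view: tree-structured rumor detection models tend to ignore the interactions between branches.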

1 Introduction

Motivation:a user posting a reply might be replying to the entire thread rather than to a specific user.

Method: We propose a post-level attention model (PLAN) to model long distance interactions between tweets with the multi-head attention mechanism in a transformer network.

We investigated variants of this model:

- a structure aware self-attention model (StA-PLAN) that incorporates tree structure information in the transformer network;

- a hierarchical token and post-level attention model (StA-HiTPLAN) that learns a sentence representation with token-level self-attention.

Contributions:

- We utilize the attention weights from our model to provide both token-level and post-level explanations behind the model's prediction. To the best of our knowledge, we are the first paper that has done this.

- We compare against previous works on two data sets: PHEME 5 events, and Twitter15 and Twitter16. Previous works only evaluated on one of the two data sets.

- Our proposed models could outperform current state-of-the-art models for both data sets.

Current rumor detection approaches rely on one of the following:

(i) the content of the claim.

(ii) the bias and social network of the source of the claim.

(iii) fact checking with trustworthy sources.

(iv) community response to the claims.

2 Approaches

2.1 Recursive Neural Networks

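Observation: rumor propagation trees are usually shallow. A user typically replies to the source post only once, and most of the exchange happens early in the conversation.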

| Dataset | Twitter15 | Twitter16 | PHEME |
|---|---|---|---|
| Tree-depth | 2.80 | 2.77 | 3.12 |

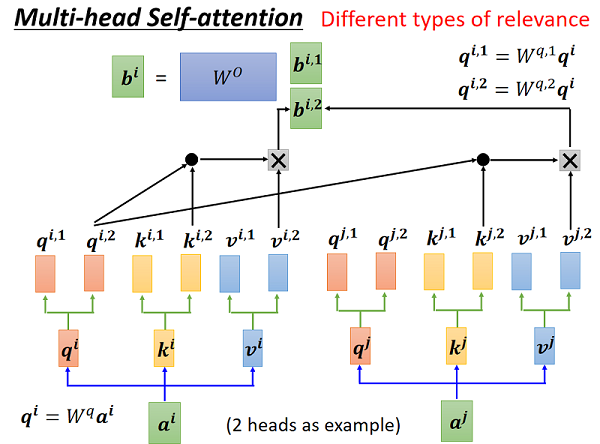

2.2 Transformer Networks

The attention mechanism in the Transformer makes it possible to model long-range dependencies effectively.

The attention mechanism in the Transformer:

$\alpha_{i j}=\operatorname{Compatibility}\left(q_{i}, k_{j}\right)=\operatorname{softmax}\left(\frac{q_{i} k_{j}^{T}}{\sqrt{d_{k}}}\right)\quad\quad\quad(1)$

$z_{i}=\sum_{j=1}^{n} \alpha_{i j} v_{j}\quad\quad\quad(2)$
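To make Eqs. (1) and (2) concrete, here is a minimal single-head sketch in plain Python. It assumes the queries, keys and values are already given as lists of vectors; the learned projection matrices and the multi-head split are left out, and the toy numbers are made up for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Return (Z, A): the outputs z_i and the attention weights alpha_ij."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Eq. (1): scaled dot-product scores, normalised over all j.
        alpha = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        # Eq. (2): z_i is the alpha-weighted sum of the value vectors.
        z = [sum(a * v[d] for a, v in zip(alpha, values))
             for d in range(len(values[0]))]
        outputs.append(z)
        weights.append(alpha)
    return outputs, weights

# Toy input: three posts with 2-dimensional vectors (made-up numbers).
posts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Z, A = self_attention(posts, posts, posts)
```

Every row of `A` sums to 1, and every post can attend to every other post regardless of where it sits in the reply tree, which is the property PLAN relies on.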

2.3 Post-Level Attention Network (PLAN)

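The framework is as follows:

First: arrange the posts in chronological order;

Second: apply max pooling to each post to obtain its sentence embedding;

Then: pass the sentence embeddings $X^{\prime}=\left(x_{1}^{\prime}, x_{2}^{\prime}, \ldots, x_{n}^{\prime}\right)$ through $s$ multi-head attention (MHA) layers to obtain $U=\left(u_{1}, u_{2}, \ldots, u_{n}\right)$;

Finally: aggregate these outputs with an attention mechanism and make the prediction with a fully connected layer: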

$\begin{array}{ll}\alpha_{k}=\operatorname{softmax}\left(\gamma^{T} u_{k}\right) &\quad\quad\quad(3)\\v=\sum\limits _{k=1}^{n} \alpha_{k} u_{k} &\quad\quad\quad(4)\\p=\operatorname{softmax}\left(W_{p}^{T} v+b_{p}\right) &\quad\quad\quad(5)\end{array}$

where $\gamma \in \mathbb{R}^{d_{\text{model}}}$, $\alpha_{k} \in \mathbb{R}$, $W_{p} \in \mathbb{R}^{d_{\text{model}} \times K}$ and $b_{p} \in \mathbb{R}^{K}$ with $K$ the number of output classes, $u_{k}$ is the output after passing through $s$ MHA layers, and $v$ and $p$ are the representation vector and prediction vector for $X$.
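The four steps above can be sketched end to end in plain Python. This is only an illustration under stated assumptions: the thread, `gamma`, `W_p` and `b_p` are made-up toy values (in the model they are learned), and the $s$ MHA layers are skipped, so `U` is simply set to the pooled embeddings.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def max_pool(token_vectors):
    """Element-wise max over a post's token embeddings."""
    return [max(column) for column in zip(*token_vectors)]

def attention_pool(U, gamma):
    """Eqs. (3)-(4): alpha_k = softmax(gamma^T u_k), v = sum_k alpha_k u_k."""
    alpha = softmax([sum(g * x for g, x in zip(gamma, u)) for u in U])
    v = [sum(a * u[d] for a, u in zip(alpha, U)) for d in range(len(gamma))]
    return v, alpha

def classify(v, W_p, b_p):
    """Eq. (5): p = softmax(W_p^T v + b_p), with W_p of shape d_model x K."""
    logits = [sum(W_p[d][c] * v[d] for d in range(len(v))) + b_p[c]
              for c in range(len(b_p))]
    return softmax(logits)

# Hypothetical thread: (timestamp, token embeddings), with d_model = 2.
thread = [
    (30, [[0.2, 0.9], [0.4, 0.1]]),
    (0, [[1.0, 0.0], [0.5, 0.5]]),   # source post, earliest timestamp
    (12, [[0.0, 0.3], [0.1, 0.8]]),
]
thread.sort(key=lambda post: post[0])            # step 1: chronological order
X = [max_pool(tokens) for _, tokens in thread]   # step 2: sentence embeddings
U = X                                            # step 3 omitted (assumption)
gamma = [0.5, -0.5]                              # made-up attention vector
W_p = [[1.0, 0.0], [0.0, 1.0]]                   # made-up weights, K = 2
b_p = [0.0, 0.0]
v, alpha = attention_pool(U, gamma)              # step 4: aggregate
p = classify(v, W_p, b_p)                        # class probabilities
```

The weights `alpha` are also what the post-level explanations later in this note read off: the post with the largest `alpha` is treated as the most important one.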


2.4 Structure Aware Post-Level Attention Network (StA-PLAN)

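Problem with the model above: organizing the tweets as a linear sequence tends to lose structural information.

To combine the advantages of an explicit tree structure with the self-attention mechanism, the paper extends the PLAN model to include structural information.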

$\begin{array}{l}\alpha_{i j}=\operatorname{softmax}\left(\frac{q_{i} k_{j}^{T}+a_{i j}^{K}}{\sqrt{d_{k}}}\right)\\z_{i}=\sum\limits _{j=1}^{n} \alpha_{i j}\left(v_{j}+a_{i j}^{V}\right)\end{array}$

where $a_{i j}^{V}$ and $a_{i j}^{K}$ are vectors representing the five structural relationships between a pair of posts (i.e., parent, child, before, after and self).
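A rough sketch of how the structural terms could enter the attention computation is given below. Several things here are assumptions rather than details from the paper: the `relation` function is one plausible way to assign the five labels, the relation vectors `a_K` and `a_V` are made-up constants (they would be learned embeddings in the model), and because $a_{i j}^{K}$ is a vector, its contribution to the score is read as the dot product $q_{i} \cdot a_{i j}^{K}$.

```python
import math

RELATIONS = ("parent", "child", "before", "after", "self")

def relation(i, j, parent):
    """Assumed convention: the label of post j as seen from post i.
    Posts are indexed chronologically; parent[k] is the index of the
    post that k replies to (None for the source post)."""
    if i == j:
        return "self"
    if parent[i] == j:
        return "parent"
    if parent[j] == i:
        return "child"
    return "before" if j < i else "after"

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def structure_aware_attention(Q, K, V, parent, a_K, a_V):
    """Self-attention with relation vectors added on the key and value side."""
    d_k, n = len(K[0]), len(Q)
    Z = []
    for i in range(n):
        rels = [relation(i, j, parent) for j in range(n)]
        # Score: (q_i . k_j + q_i . a_ij^K) / sqrt(d_k), an assumed reading.
        alpha = softmax([
            (dot(Q[i], K[j]) + dot(Q[i], a_K[rels[j]])) / math.sqrt(d_k)
            for j in range(n)
        ])
        # Output: z_i = sum_j alpha_ij (v_j + a_ij^V).
        Z.append([
            sum(alpha[j] * (V[j][d] + a_V[rels[j]][d]) for j in range(n))
            for d in range(len(V[0]))
        ])
    return Z

# Toy thread: post 0 is the source, posts 1 and 2 reply to it, 3 replies to 1.
parent = [None, 0, 0, 1]
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
a_K = {r: [0.0, 0.0] for r in RELATIONS}
a_K["parent"] = [0.5, 0.5]           # made-up relation vector
a_V = {r: [0.0, 0.0] for r in RELATIONS}
Z = structure_aware_attention(H, H, H, parent, a_K, a_V)
```

With all relation vectors set to zero this reduces to plain self-attention, so the structural information acts purely as an additive bias on top of PLAN.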

2.5 Structure Aware Hierarchical Token and Post-Level Attention Network (StA-HiTPLAN)

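The PLAN model uses max-pooling to obtain the sentence representation of each tweet. A more desirable approach is to let the model learn the importance of each word vector. The paper therefore proposes a hierarchical attention model, with attention at a token level and then at a post level. An overview of the hierarchical model is shown in Figure 2b.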

2.6 Time Delay Embedding

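When a source post has just been created, replies generally express doubt. Once the source post has been out for some time, doubtful replies indicate a higher tendency for the post to be false. The paper therefore studies the effect of time delay information on the three models above.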

To include time delay information for each tweet, we bin the tweets based on their latency from the time the source tweet was created. We set the total number of time bins to be 100 and each bin represents a 10 minutes interval. Tweets with latency of more than 1,000 minutes would fall into the last time bin. We used the positional encoding formula introduced in the transformer network to encode each time bin. The time delay embedding would be added to the sentence embedding of tweet. The time delay embedding, TDE, for each tweet is:

$\begin{array}{lcl}\mathrm{TDE}_{\text{pos}, 2 i} &=&\sin \frac{\text{pos}}{10000^{2 i / d_{\text{model}}}} \\\mathrm{TDE}_{\text{pos}, 2 i+1} &=&\cos \frac{\text{pos}}{10000^{2 i / d_{\text{model}}}}\end{array}$

where $\text{pos}$ represents the time bin each tweet falls into, with $\text{pos} \in[0,100)$, $i$ refers to the dimension and $d_{\text{model}}$ refers to the total number of dimensions of the model.
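The binning and encoding described above can be sketched as follows. The bin count and bin width come from the text; the function names are hypothetical, and the sketch assumes latencies are non-negative and measured in minutes.

```python
import math

NUM_BINS = 100      # total number of time bins (from the text)
BIN_MINUTES = 10    # width of each bin in minutes (from the text)

def time_bin(latency_minutes):
    """Map a reply's latency to its bin; late replies land in the last bin."""
    return min(int(latency_minutes // BIN_MINUTES), NUM_BINS - 1)

def time_delay_embedding(pos, d_model):
    """Sinusoidal encoding of bin `pos`: sin on even dims, cos on odd dims."""
    tde = []
    for dim in range(d_model):
        i = dim // 2
        angle = pos / (10000 ** (2 * i / d_model))
        tde.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
    return tde

def add_time_delay(sentence_embedding, latency_minutes):
    """Add the time delay embedding to a tweet's sentence embedding."""
    pos = time_bin(latency_minutes)
    tde = time_delay_embedding(pos, len(sentence_embedding))
    return [x + t for x, t in zip(sentence_embedding, tde)]

# A reply posted 25 minutes after the source tweet falls into bin 2.
example_bin = time_bin(25)
shifted = add_time_delay([1.0, 1.0], 0)
```

Because the embedding is added rather than concatenated, `d_model` stays unchanged and the rest of the network needs no modification.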

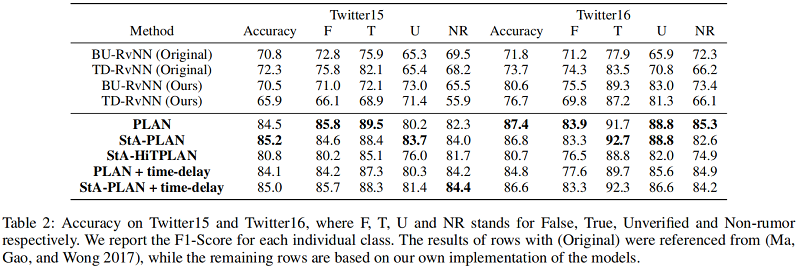

3 Experiments and Results

dataset

Result

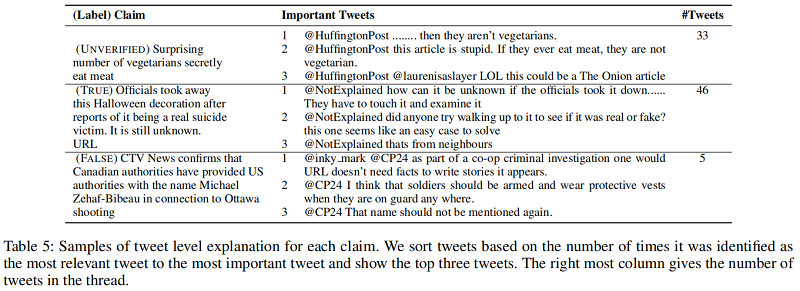

Post-Level Explanations

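First, the most important tweet $tweet_{impt}$ is obtained from the final attention layer. Then, from the $i$-th MHA layer, the tweet most relevant to $tweet_{impt}$ at that layer, $tweet_{rel,i}$, is obtained. A tweet may be identified as the most relevant tweet more than once. Finally, the tweets are sorted by how many times they were identified, and the top three are taken as the explanation for the source tweet.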
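The ranking procedure can be sketched as below. This is an illustration under assumptions: `final_alpha` stands for the weights $\alpha_{k}$ of the final attention layer, each MHA layer is assumed to be summarised by a single $n \times n$ attention matrix (for instance after averaging over heads, which the text does not specify), and all numbers are made up.

```python
from collections import Counter

def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=lambda idx: values[idx])

def post_level_explanation(final_alpha, layer_attentions, top_n=3):
    """Return (tweet_impt, ranked): the most important tweet and the
    tweets most often selected as most relevant to it across layers."""
    tweet_impt = argmax(final_alpha)
    counts = Counter()
    for attention in layer_attentions:
        # tweet_rel,i: the tweet that tweet_impt attends to most in layer i.
        counts[argmax(attention[tweet_impt])] += 1
    ranked = [tweet for tweet, _ in counts.most_common(top_n)]
    return tweet_impt, ranked

# Toy thread of three tweets and three MHA layers (made-up weights).
final_alpha = [0.1, 0.6, 0.3]
layer_attentions = [
    [[0.6, 0.2, 0.2], [0.2, 0.1, 0.7], [0.3, 0.3, 0.4]],
    [[0.5, 0.3, 0.2], [0.5, 0.3, 0.2], [0.2, 0.2, 0.6]],
    [[0.4, 0.4, 0.2], [0.1, 0.2, 0.7], [0.1, 0.1, 0.8]],
]
tweet_impt, ranked = post_level_explanation(final_alpha, layer_attentions)
```

In this toy case tweet 1 is the most important, and tweet 2 is picked by two of the three layers, so it ranks first in the explanation.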

Token-Level Explanation

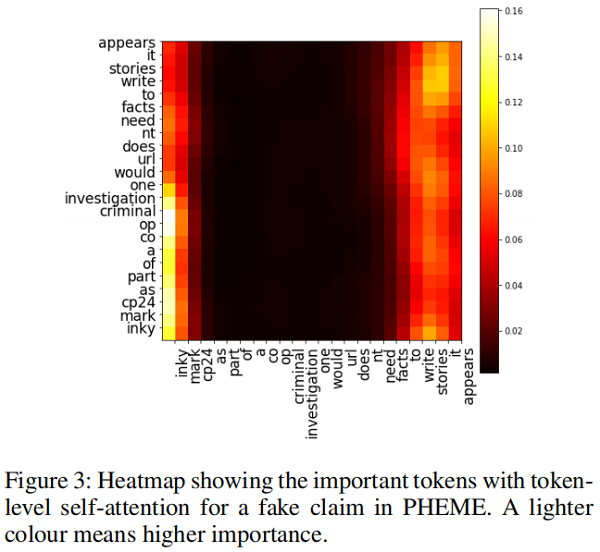

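The self-attention weights of the token-level self-attention can be used for token-level explanations. For example, in the reply "@inky mark @CP24 as part of a co-op criminal investigation one would URL doesn’t need facts to write stories it appears.", the phrase "facts to write stories it appears" expresses doubt about the source tweet. The self-attention weight map shows that a large share of the weight is concentrated on this part, which suggests that the phrase can serve as an explanation.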

When reposting, please cite the original link: https://www.cnblogs.com/BlairGrowing/p/16759157.html