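Distributed Storage Systems: Getting Ceph Cluster Status and Understanding the Ceph Configuration File

In the previous post we covered enabling Ceph's access interfaces; for a recap, see https://www.cnblogs.com/qiuhom-1874/p/16727620.html. Today we look at how to get the status of a Ceph cluster and how the Ceph configuration file works.

Common commands for getting Ceph cluster status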

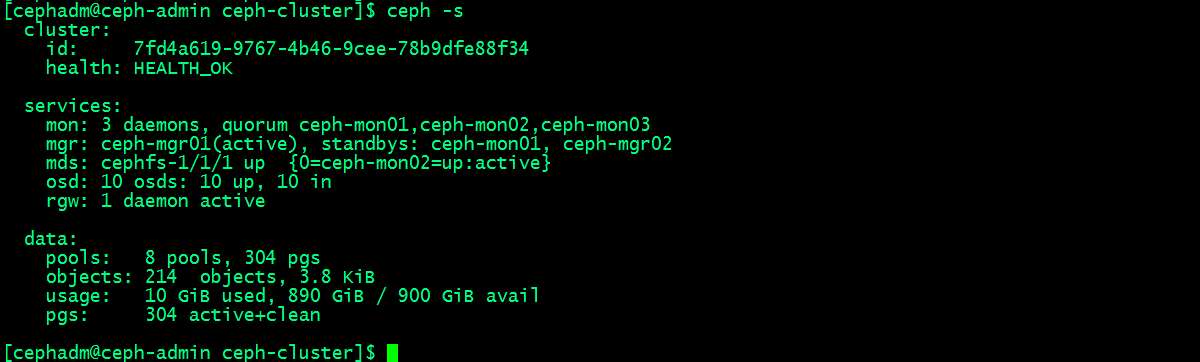

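1. ceph -s: prints the status of the Ceph cluster

Tip: ceph -s prints three kinds of information. The first is about the cluster itself, such as the cluster ID and health status. The second is about services: how many mon, mgr, mds, osd and rgw daemons the cluster runs and what state each is in. We call this the cluster's running state; it shows at a glance how the cluster is doing. The third is about data storage, such as the number of pools and PGs; usage shows the used, available and total capacity. Note that the total disk size shown is not the amount of object data the cluster can hold. Every object is stored as multiple replicas, so the real capacity for object data depends on the replica count. By default, pools are created as replicated pools with 3 replicas (one primary and two secondaries), so every object is stored three times and the space actually available for object data is only about one third of the total.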
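The replica arithmetic above can be sketched in a few lines of Python. The function name usable_capacity_gib is made up for this illustration, and the result is only a rough upper bound: the MAX AVAIL figure that Ceph itself reports is typically lower, because it also accounts for the full ratio and for how evenly data is spread across OSDs.

```python
def usable_capacity_gib(raw_gib: float, replicas: int = 3) -> float:
    """Rough upper bound on the object data a replicated pool can hold.

    raw_gib  -- total raw capacity of the cluster, in GiB
    replicas -- replica count of the pool (3 is the default described above)
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return raw_gib / replicas


# The cluster in this post reports 900 GiB of raw capacity.
print(usable_capacity_gib(900, 3))
```

With 900 GiB of raw space and 3 replicas, at most about 300 GiB of object data fits.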

Getting real-time cluster status

2. Getting PG status

[cephadm@ceph-admin ceph-cluster]$ ceph pg stat

304 pgs: 304 active+clean; 3.8 KiB data, 10 GiB used, 890 GiB / 900 GiB avail

[cephadm@ceph-admin ceph-cluster]$
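When a script needs to check this summary, the line can be split into a total and per-state counts. Below is a minimal Python sketch that assumes the plain-text layout shown above; the helper names parse_pg_stat and all_active_clean are hypothetical. On a real cluster, asking the CLI for machine-readable output (for example with --format json) is usually more robust than parsing text.

```python
import re


def parse_pg_stat(line: str) -> dict:
    """Split a `ceph pg stat` summary line into total PGs and per-state counts."""
    match = re.match(r"\s*(\d+) pgs: (.+?);", line)
    if match is None:
        raise ValueError("unrecognized pg stat line")
    states = {}
    # The state list looks like "300 active+clean, 4 active+clean+scrubbing".
    for part in match.group(2).split(","):
        count, state = part.split()
        states[state] = int(count)
    return {"total": int(match.group(1)), "states": states}


def all_active_clean(summary: dict) -> bool:
    """True when every PG is in the active+clean state."""
    return summary["states"].get("active+clean", 0) == summary["total"]


sample = "304 pgs: 304 active+clean; 3.8 KiB data, 10 GiB used, 890 GiB / 900 GiB avail"
summary = parse_pg_stat(sample)
print(summary["total"], all_active_clean(summary))
```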

3. Getting pool status

[cephadm@ceph-admin ceph-cluster]$ ceph osd pool stats

pool testpool id 1

nothing is going on

pool rbdpool id 2

nothing is going on

pool .rgw.root id 3

nothing is going on

pool default.rgw.control id 4

nothing is going on

pool default.rgw.meta id 5

nothing is going on

pool default.rgw.log id 6

nothing is going on

pool cephfs-metadatpool id 7

nothing is going on

pool cephfs-datapool id 8

nothing is going on

[cephadm@ceph-admin ceph-cluster]$

Tip: if no pool name is given after the command, the status of all pools is shown.

4. Getting pool sizes and space usage

[cephadm@ceph-admin ceph-cluster]$ ceph df

GLOBAL:

SIZE AVAIL RAW USED %RAW USED

900 GiB 890 GiB 10 GiB 1.13

POOLS:

NAME ID USED %USED MAX AVAIL OBJECTS

testpool 1 0 B 0 281 GiB 0

rbdpool 2 389 B 0 281 GiB 5

.rgw.root 3 1.1 KiB 0 281 GiB 4

default.rgw.control 4 0 B 0 281 GiB 8

default.rgw.meta 5 0 B 0 281 GiB 0

default.rgw.log 6 0 B 0 281 GiB 175

cephfs-metadatpool 7 2.2 KiB 0 281 GiB 22

cephfs-datapool 8 0 B 0 281 GiB 0

[cephadm@ceph-admin ceph-cluster]$

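Tip: the output of ceph df has two main sections. The first, GLOBAL, shows cluster-wide usage: SIZE is the total capacity, AVAIL is the remaining capacity, RAW USED is the raw storage already consumed, and %RAW USED is the percentage of the total raw capacity that has been used. The second, POOLS, shows usage per pool: MAX AVAIL is the maximum capacity the pool can use, and OBJECTS is the number of objects in the pool.

Getting detailed storage usage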

[cephadm@ceph-admin ceph-cluster]$ ceph df detail

GLOBAL:

SIZE AVAIL RAW USED %RAW USED OBJECTS

900 GiB 890 GiB 10 GiB 1.13 214

POOLS:

NAME ID QUOTA OBJECTS QUOTA BYTES USED %USED MAX AVAIL OBJECTS DIRTY READ WRITE RAW USED

testpool 1 N/A N/A 0 B 0 281 GiB 0 0 2 B 2 B 0 B

rbdpool 2 N/A N/A 389 B 0 281 GiB 5 5 75 B 19 B 1.1 KiB

.rgw.root 3 N/A N/A 1.1 KiB 0 281 GiB 4 4 66 B 4 B 3.4 KiB

default.rgw.control 4 N/A N/A 0 B 0 281 GiB 8 8 0 B 0 B 0 B

default.rgw.meta 5 N/A N/A 0 B 0 281 GiB 0 0 0 B 0 B 0 B

default.rgw.log 6 N/A N/A 0 B 0 281 GiB 175 175 7.2 KiB 4.8 KiB 0 B

cephfs-metadatpool 7 N/A N/A 2.2 KiB 0 281 GiB 22 22 0 B 45 B 6.7 KiB

cephfs-datapool 8 N/A N/A 0 B 0 281 GiB 0 0 0 B 0 B 0 B

[cephadm@ceph-admin ceph-cluster]$

5. Checking OSD and MON status

[cephadm@ceph-admin ceph-cluster]$ ceph osd stat

10 osds: 10 up, 10 in; epoch: e99

[cephadm@ceph-admin ceph-cluster]$ ceph osd dump

epoch 99

fsid 7fd4a619-9767-4b46-9cee-78b9dfe88f34

created 2022-09-24 00:36:13.639715

modified 2022-09-25 12:33:15.111283

flags sortbitwise,recovery_deletes,purged_snapdirs

crush_version 25

full_ratio 0.95

backfillfull_ratio 0.9

nearfull_ratio 0.85

require_min_compat_client jewel

min_compat_client jewel

require_osd_release mimic

pool 1 'testpool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 16 pgp_num 16 last_change 42 flags hashpspool stripe_width 0

pool 2 'rbdpool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 64 pgp_num 64 last_change 81 flags hashpspool,selfmanaged_snaps stripe_width 0 application rbd

removed_snaps [1~3]

pool 3 '.rgw.root' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 84 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw

pool 4 'default.rgw.control' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 87 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw

pool 5 'default.rgw.meta' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 89 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw

pool 6 'default.rgw.log' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 91 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw

pool 7 'cephfs-metadatpool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 64 pgp_num 64 last_change 99 flags hashpspool stripe_width 0 application cephfs

pool 8 'cephfs-datapool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 last_change 99 flags hashpspool stripe_width 0 application cephfs

max_osd 10

osd.0 up in weight 1 up_from 67 up_thru 96 down_at 66 last_clean_interval [64,65) 192.168.0.71:6802/1361 172.16.30.71:6802/1361 172.16.30.71:6803/1361 192.168.0.71:6803/1361 exists,up bf3649af-e3f4-41a2-a5ce-8f1a316d344e

osd.1 up in weight 1 up_from 68 up_thru 96 down_at 66 last_clean_interval [64,65) 192.168.0.71:6800/1346 172.16.30.71:6800/1346 172.16.30.71:6801/1346 192.168.0.71:6801/1346 exists,up 7293a12a-7b4e-4c86-82dc-0acc15c3349e

osd.2 up in weight 1 up_from 67 up_thru 96 down_at 66 last_clean_interval [60,65) 192.168.0.72:6800/1389 172.16.30.72:6800/1389 172.16.30.72:6801/1389 192.168.0.72:6801/1389 exists,up 96c437c5-8e82-4486-910f-9e98d195e4f9

osd.3 up in weight 1 up_from 67 up_thru 96 down_at 66 last_clean_interval [60,65) 192.168.0.72:6802/1406 172.16.30.72:6802/1406 172.16.30.72:6803/1406 192.168.0.72:6803/1406 exists,up 4659d2a9-09c7-49d5-bce0-4d2e65f5198c

osd.4 up in weight 1 up_from 71 up_thru 96 down_at 68 last_clean_interval [59,66) 192.168.0.73:6802/1332 172.16.30.73:6802/1332 172.16.30.73:6803/1332 192.168.0.73:6803/1332 exists,up de019aa8-3d2a-4079-a99e-ec2da2d4edb9

osd.5 up in weight 1 up_from 71 up_thru 96 down_at 68 last_clean_interval [58,66) 192.168.0.73:6800/1333 172.16.30.73:6800/1333 172.16.30.73:6801/1333 192.168.0.73:6801/1333 exists,up 119c8748-af3b-4ac4-ac74-6171c90c82cc

osd.6 up in weight 1 up_from 69 up_thru 96 down_at 68 last_clean_interval [59,66) 192.168.0.74:6800/1306 172.16.30.74:6800/1306 172.16.30.74:6801/1306 192.168.0.74:6801/1306 exists,up 08d8dd8b-cdfe-4338-83c0-b1e2b5c2a799

osd.7 up in weight 1 up_from 69 up_thru 96 down_at 68 last_clean_interval [60,65) 192.168.0.74:6802/1301 172.16.30.74:6802/1301 172.16.30.74:6803/1301 192.168.0.74:6803/1301 exists,up 9de6cbd0-bb1b-49e9-835c-3e714a867393

osd.8 up in weight 1 up_from 73 up_thru 96 down_at 66 last_clean_interval [59,65) 192.168.0.75:6800/1565 172.16.30.75:6800/1565 172.16.30.75:6801/1565 192.168.0.75:6801/1565 exists,up 63aaa0b8-4e52-4d74-82a8-fbbe7b48c837

osd.9 up in weight 1 up_from 73 up_thru 96 down_at 66 last_clean_interval [59,65) 192.168.0.75:6802/1558 172.16.30.75:6802/1558 172.16.30.75:6803/1558 192.168.0.75:6803/1558 exists,up 6bf3204a-b64c-4808-a782-434a93ac578c

[cephadm@ceph-admin ceph-cluster]$

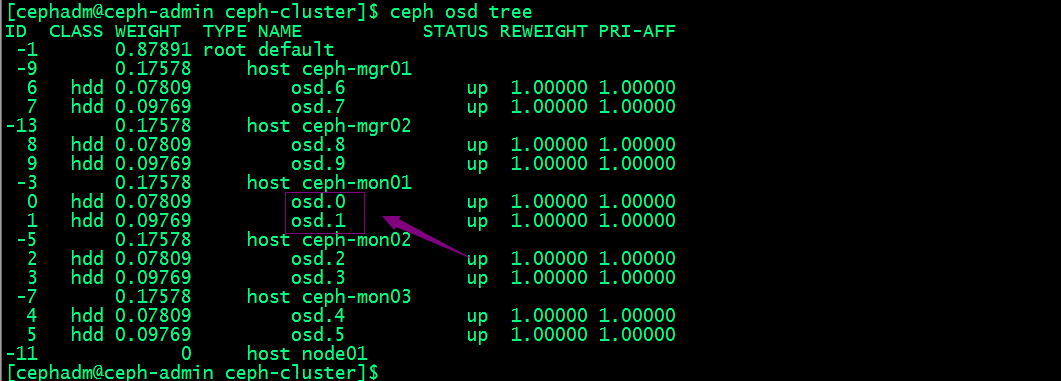

Besides the commands above, we can also view OSDs by their position in the CRUSH map

[cephadm@ceph-admin ceph-cluster]$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.87891 root default

-9 0.17578 host ceph-mgr01

6 hdd 0.07809 osd.6 up 1.00000 1.00000

7 hdd 0.09769 osd.7 up 1.00000 1.00000

-3 0.17578 host ceph-mon01

0 hdd 0.07809 osd.0 up 1.00000 1.00000

1 hdd 0.09769 osd.1 up 1.00000 1.00000

-5 0.17578 host ceph-mon02

2 hdd 0.07809 osd.2 up 1.00000 1.00000

3 hdd 0.09769 osd.3 up 1.00000 1.00000

-7 0.17578 host ceph-mon03

4 hdd 0.07809 osd.4 up 1.00000 1.00000

5 hdd 0.09769 osd.5 up 1.00000 1.00000

-11 0.17578 host node01

8 hdd 0.07809 osd.8 up 1.00000 1.00000

9 hdd 0.09769 osd.9 up 1.00000 1.00000

[cephadm@ceph-admin ceph-cluster]$

Tip: the output above shows which OSD IDs live on each host, and the weight of each OSD.

Checking mon node status

[cephadm@ceph-admin ceph-cluster]$ ceph mon stat

e3: 3 mons at {ceph-mon01=192.168.0.71:6789/0,ceph-mon02=192.168.0.72:6789/0,ceph-mon03=192.168.0.73:6789/0}, election epoch 18, leader 0 ceph-mon01, quorum 0,1,2 ceph-mon01,ceph-mon02,ceph-mon03

[cephadm@ceph-admin ceph-cluster]$ ceph mon dump

dumped monmap epoch 3

epoch 3

fsid 7fd4a619-9767-4b46-9cee-78b9dfe88f34

last_changed 2022-09-24 01:56:24.196075

created 2022-09-24 00:36:13.210155

0: 192.168.0.71:6789/0 mon.ceph-mon01

1: 192.168.0.72:6789/0 mon.ceph-mon02

2: 192.168.0.73:6789/0 mon.ceph-mon03

[cephadm@ceph-admin ceph-cluster]$

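Tip: both commands show how many mon nodes the cluster has, along with each node's IP address, listening port and rank. In addition, ceph mon stat shows the rank of the leader and the election epoch.

Checking quorum status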

[cephadm@ceph-admin ceph-cluster]$ ceph quorum_status

{"election_epoch":18,"quorum":[0,1,2],"quorum_names":["ceph-mon01","ceph-mon02","ceph-mon03"],"quorum_leader_name":"ceph-mon01","monmap":{"epoch":3,"fsid":"7fd4a619-9767-4b46-9cee-78b9dfe88f34","modified":"2022-09-24 01:56:24.196075","created":"2022-09-24 00:36:13.210155","features":{"persistent":["kraken","luminous","mimic","osdmap-prune"],"optional":[]},"mons":[{"rank":0,"name":"ceph-mon01","addr":"192.168.0.71:6789/0","public_addr":"192.168.0.71:6789/0"},{"rank":1,"name":"ceph-mon02","addr":"192.168.0.72:6789/0","public_addr":"192.168.0.72:6789/0"},{"rank":2,"name":"ceph-mon03","addr":"192.168.0.73:6789/0","public_addr":"192.168.0.73:6789/0"}]}}

[cephadm@ceph-admin ceph-cluster]$
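Because ceph quorum_status prints JSON, it is easy to consume from a script. The following Python sketch uses a trimmed copy of the output above and checks whether a majority of mons is in quorum; the function name quorum_summary and the shape of the returned dictionary are choices made for this illustration only.

```python
import json

# Trimmed copy of the quorum_status output shown above.
SAMPLE = """
{"election_epoch":18,"quorum":[0,1,2],
 "quorum_names":["ceph-mon01","ceph-mon02","ceph-mon03"],
 "quorum_leader_name":"ceph-mon01",
 "monmap":{"epoch":3,"mons":[
   {"rank":0,"name":"ceph-mon01","addr":"192.168.0.71:6789/0"},
   {"rank":1,"name":"ceph-mon02","addr":"192.168.0.72:6789/0"},
   {"rank":2,"name":"ceph-mon03","addr":"192.168.0.73:6789/0"}]}}
"""


def quorum_summary(raw_json: str) -> dict:
    """Summarize quorum membership from `ceph quorum_status` JSON."""
    status = json.loads(raw_json)
    all_mons = {mon["name"] for mon in status["monmap"]["mons"]}
    in_quorum = set(status["quorum_names"])
    return {
        "leader": status["quorum_leader_name"],
        "in_quorum": len(in_quorum),
        "total": len(all_mons),
        # A quorum needs strictly more than half of the mons.
        "has_majority": len(in_quorum) > len(all_mons) // 2,
        "missing": sorted(all_mons - in_quorum),
    }


summary = quorum_summary(SAMPLE)
print(summary)
```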

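Querying cluster status through the admin socket

Ceph's admin socket interface is commonly used to query daemons. Sockets are stored in /var/run/ceph by default. This interface cannot be used remotely; it works only on the node where the daemon runs.

Command format: ceph --admin-daemon /var/run/ceph/socket-name command. For example, to get help: ceph --admin-daemon /var/run/ceph/socket-name help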

[root@ceph-mon01 ~]# ceph --admin-daemon /var/run/ceph/ceph-osd.0.asok help

{

"calc_objectstore_db_histogram": "Generate key value histogram of kvdb(rocksdb) which used by bluestore",

"compact": "Commpact object store's omap. WARNING: Compaction probably slows your requests",

"config diff": "dump diff of current config and default config",

"config diff get": "dump diff get <field>: dump diff of current and default config setting <field>",

"config get": "config get <field>: get the config value",

"config help": "get config setting schema and descriptions",

"config set": "config set <field> <val> [<val> ...]: set a config variable",

"config show": "dump current config settings",

"config unset": "config unset <field>: unset a config variable",

"dump_blacklist": "dump blacklisted clients and times",

"dump_blocked_ops": "show the blocked ops currently in flight",

"dump_historic_ops": "show recent ops",

"dump_historic_ops_by_duration": "show slowest recent ops, sorted by duration",

"dump_historic_slow_ops": "show slowest recent ops",

"dump_mempools": "get mempool stats",

"dump_objectstore_kv_stats": "print statistics of kvdb which used by bluestore",

"dump_op_pq_state": "dump op priority queue state",

"dump_ops_in_flight": "show the ops currently in flight",

"dump_osd_network": "Dump osd heartbeat network ping times",

"dump_pgstate_history": "show recent state history",

"dump_reservations": "show recovery reservations",

"dump_scrubs": "print scheduled scrubs",

"dump_watchers": "show clients which have active watches, and on which objects",

"flush_journal": "flush the journal to permanent store",

"flush_store_cache": "Flush bluestore internal cache",

"get_command_descriptions": "list available commands",

"get_heap_property": "get malloc extension heap property",

"get_latest_osdmap": "force osd to update the latest map from the mon",

"get_mapped_pools": "dump pools whose PG(s) are mapped to this OSD.",

"getomap": "output entire object map",

"git_version": "get git sha1",

"heap": "show heap usage info (available only if compiled with tcmalloc)",

"help": "list available commands",

"injectdataerr": "inject data error to an object",

"injectfull": "Inject a full disk (optional count times)",

"injectmdataerr": "inject metadata error to an object",

"list_devices": "list OSD devices.",

"log dump": "dump recent log entries to log file",

"log flush": "flush log entries to log file",

"log reopen": "reopen log file",

"objecter_requests": "show in-progress osd requests",

"ops": "show the ops currently in flight",

"perf dump": "dump perfcounters value",

"perf histogram dump": "dump perf histogram values",

"perf histogram schema": "dump perf histogram schema",

"perf reset": "perf reset <name>: perf reset all or one perfcounter name",

"perf schema": "dump perfcounters schema",

"rmomapkey": "remove omap key",

"set_heap_property": "update malloc extension heap property",

"set_recovery_delay": "Delay osd recovery by specified seconds",

"setomapheader": "set omap header",

"setomapval": "set omap key",

"smart": "probe OSD devices for SMART data.",

"status": "high-level status of OSD",

"trigger_deep_scrub": "Trigger a scheduled deep scrub ",

"trigger_scrub": "Trigger a scheduled scrub ",

"truncobj": "truncate object to length",

"version": "get ceph version"

}

[root@ceph-mon01 ~]#

For example, get the version of mon01

[root@ceph-mon01 ~]# ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon01.asok version

{"version":"13.2.10","release":"mimic","release_type":"stable"}

[root@ceph-mon01 ~]#

Get the status of an OSD

[root@ceph-mon01 ~]# ceph --admin-daemon /var/run/ceph/ceph-osd.0.asok status

{

"cluster_fsid": "7fd4a619-9767-4b46-9cee-78b9dfe88f34",

"osd_fsid": "bf3649af-e3f4-41a2-a5ce-8f1a316d344e",

"whoami": 0,

"state": "active",

"oldest_map": 1,

"newest_map": 114,

"num_pgs": 83

}

[root@ceph-mon01 ~]#

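Runtime configuration of daemons

We can use the ceph daemon command to configure a Ceph daemon dynamically, that is, change its settings without stopping the service.

For example, get the public address of osd.0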

[root@ceph-mon01 ~]# ceph daemon osd.0 config get public_addr

{

"public_addr": "192.168.0.71:0/0"

}

[root@ceph-mon01 ~]#

Getting help. Command format: ceph daemon {daemon-type}.{id} help

[root@ceph-mon01 ~]# ceph daemon osd.1 help

{

"calc_objectstore_db_histogram": "Generate key value histogram of kvdb(rocksdb) which used by bluestore",

"compact": "Commpact object store's omap. WARNING: Compaction probably slows your requests",

"config diff": "dump diff of current config and default config",

"config diff get": "dump diff get <field>: dump diff of current and default config setting <field>",

"config get": "config get <field>: get the config value",

"config help": "get config setting schema and descriptions",

"config set": "config set <field> <val> [<val> ...]: set a config variable",

"config show": "dump current config settings",

"config unset": "config unset <field>: unset a config variable",

"dump_blacklist": "dump blacklisted clients and times",

"dump_blocked_ops": "show the blocked ops currently in flight",

"dump_historic_ops": "show recent ops",

"dump_historic_ops_by_duration": "show slowest recent ops, sorted by duration",

"dump_historic_slow_ops": "show slowest recent ops",

"dump_mempools": "get mempool stats",

"dump_objectstore_kv_stats": "print statistics of kvdb which used by bluestore",

"dump_op_pq_state": "dump op priority queue state",

"dump_ops_in_flight": "show the ops currently in flight",

"dump_osd_network": "Dump osd heartbeat network ping times",

"dump_pgstate_history": "show recent state history",

"dump_reservations": "show recovery reservations",

"dump_scrubs": "print scheduled scrubs",

"dump_watchers": "show clients which have active watches, and on which objects",

"flush_journal": "flush the journal to permanent store",

"flush_store_cache": "Flush bluestore internal cache",

"get_command_descriptions": "list available commands",

"get_heap_property": "get malloc extension heap property",

"get_latest_osdmap": "force osd to update the latest map from the mon",

"get_mapped_pools": "dump pools whose PG(s) are mapped to this OSD.",

"getomap": "output entire object map",

"git_version": "get git sha1",

"heap": "show heap usage info (available only if compiled with tcmalloc)",

"help": "list available commands",

"injectdataerr": "inject data error to an object",

"injectfull": "Inject a full disk (optional count times)",

"injectmdataerr": "inject metadata error to an object",

"list_devices": "list OSD devices.",

"log dump": "dump recent log entries to log file",

"log flush": "flush log entries to log file",

"log reopen": "reopen log file",

"objecter_requests": "show in-progress osd requests",

"ops": "show the ops currently in flight",

"perf dump": "dump perfcounters value",

"perf histogram dump": "dump perf histogram values",

"perf histogram schema": "dump perf histogram schema",

"perf reset": "perf reset <name>: perf reset all or one perfcounter name",

"perf schema": "dump perfcounters schema",

"rmomapkey": "remove omap key",

"set_heap_property": "update malloc extension heap property",

"set_recovery_delay": "Delay osd recovery by specified seconds",

"setomapheader": "set omap header",

"setomapval": "set omap key",

"smart": "probe OSD devices for SMART data.",

"status": "high-level status of OSD",

"trigger_deep_scrub": "Trigger a scheduled deep scrub ",

"trigger_scrub": "Trigger a scheduled scrub ",

"truncobj": "truncate object to length",

"version": "get ceph version"

}

[root@ceph-mon01 ~]#

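Tip: when using ceph daemon to query a daemon, run the command as root on the host where that daemon runs.

There are two ways to set daemon parameters dynamically: send the setting to the daemon through a mon, or send it to the daemon through the admin socket.

Command format for sending settings through a mon: ceph tell {daemon-type}.{daemon id or *} injectargs --{name} {value} [--{name} {value}]

[cephadm@ceph-admin ceph-cluster]$ ceph tell osd.1 injectargs '--debug-osd 0/5'

[cephadm@ceph-admin ceph-cluster]$

Tip: this method can be run from any host in the cluster.

Command format for sending settings through the admin socket: ceph daemon {daemon-type}.{id} config set {name} {value}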

[root@ceph-mon01 ~]# ceph daemon osd.0 config set debug_osd 0/5

{

"success": ""

}

[root@ceph-mon01 ~]#

Tip: this method can only be run on the host where the daemon runs.
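To compare the two forms side by side, the Python sketch below builds the argument lists for both commands without executing anything. The helper names tell_injectargs and daemon_config_set are hypothetical; the resulting lists could be passed to subprocess.run on a host where the ceph CLI and the necessary credentials are available.

```python
import shlex


def tell_injectargs(daemon: str, option: str, value: str) -> list:
    """Arguments for sending a setting through a mon (usable from any cluster host)."""
    # As in the example above, "--name value" is passed as a single argument.
    return ["ceph", "tell", daemon, "injectargs", f"--{option} {value}"]


def daemon_config_set(daemon: str, option: str, value: str) -> list:
    """Arguments for sending a setting through the admin socket (local host only)."""
    return ["ceph", "daemon", daemon, "config", "set", option, value]


# Print the commands the way they would be typed in a shell.
print(shlex.join(tell_injectargs("osd.1", "debug-osd", "0/5")))
print(shlex.join(daemon_config_set("osd.0", "debug_osd", "0/5")))
```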

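Steps for stopping or restarting a Ceph cluster

Stopping the Ceph cluster

1. Tell the Ceph cluster not to mark OSDs as out. Command: ceph osd set noout

[cephadm@ceph-admin ceph-cluster]$ ceph osd set noout

noout is set

[cephadm@ceph-admin ceph-cluster]$

2. Stop daemons and nodes in the following order: storage clients ---> gateways such as rgw ---> metadata servers (MDS) ---> Ceph OSD ---> Ceph Manager ---> Ceph Monitor; then power off the hosts.

Starting the Ceph cluster

1. Start the nodes in the reverse order of the shutdown procedure: Ceph Monitor ---> Ceph Manager ---> Ceph OSD ---> metadata servers (MDS) ---> gateways such as rgw ---> storage clients.

2. Remove the noout flag. Command: ceph osd unset noout

[cephadm@ceph-admin ceph-cluster]$ ceph osd unset noout

noout is unset

[cephadm@ceph-admin ceph-cluster]$

Tip: once the cluster is back up, the noout flag must be cleared. Otherwise, when an OSD really fails it cannot be marked out in time, and data operations may still be scheduled to that OSD and fail.

Ceph is an object storage cluster, and a careless mistake in production can have unpredictable consequences, so the stop and start order matters a great deal. The procedure above mainly reduces the chance of data loss; it does not guarantee that no data is lost.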
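The relationship between the two procedures is simply that one is the reverse of the other, with the noout flag set before shutdown and cleared after startup. A small Python sketch of that ordering follows; the component labels and function names are illustrative only, and nothing here talks to a cluster.

```python
# Shutdown order as described above, from first stopped to last stopped.
SHUTDOWN_ORDER = [
    "storage clients",
    "gateways (rgw)",
    "metadata servers (MDS)",
    "Ceph OSD",
    "Ceph Manager",
    "Ceph Monitor",
]


def startup_order(shutdown_order: list) -> list:
    """Startup is the shutdown order reversed."""
    return list(reversed(shutdown_order))


def shutdown_plan() -> list:
    """Full shutdown checklist: set noout first, then stop components in order."""
    return ["ceph osd set noout"] + [f"stop {c}" for c in SHUTDOWN_ORDER] + ["power off hosts"]


def startup_plan() -> list:
    """Full startup checklist: start components in reverse order, then clear noout."""
    return [f"start {c}" for c in startup_order(SHUTDOWN_ORDER)] + ["ceph osd unset noout"]


for step in startup_plan():
    print(step)
```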

The Ceph configuration file ceph.conf

[cephadm@ceph-admin ceph-cluster]$ cat /etc/ceph/ceph.conf

[global]

fsid = 7fd4a619-9767-4b46-9cee-78b9dfe88f34

mon_initial_members = ceph-mon01

mon_host = 192.168.0.71

public_network = 192.168.0.0/24

cluster_network = 172.16.30.0/24

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

[cephadm@ceph-admin ceph-cluster]$

Tip: ceph.conf strictly follows INI-style syntax and format; the hash sign '#' and the semicolon ';' start comments. ceph.conf mainly consists of four sections: [global], [osd], [mon] and [client]. The [global] section holds global settings shared by all components; [osd] applies to every OSD in the cluster; [mon] applies to every mon in the cluster; [client] applies to all clients, such as rbd and rgw.

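Sections specific to a single mon or OSD

The [osd] and [mon] sections above apply to all OSDs and mons. What if we want to configure just one OSD or mon? In ceph.conf, a [type.ID] section addresses a single OSD or mon. For example, to configure only osd.0, write the settings under an [osd.0] section. The same logic applies to mons; the difference is that a mon's ID is not a number. We can use ceph mon dump to look up mon IDs.

Getting OSD IDs

Tip: OSD IDs are numeric and start from 0.

Precedence of ceph.conf sections

If a setting appears both in a common section and in a more specific section, the specific section overrides the common one. In order of precedence: [global] is lower than [osd], [mon] and [client]; [osd] is lower than [osd.ID]; [mon] is lower than [mon.a]. In short, the narrower a section's scope, the higher its priority.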
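The lookup rule can be sketched with Python's configparser, which reads the same INI style. This only approximates Ceph's own parser (for instance, it does not treat spaces and underscores in option names as equivalent), the option values are made up, and effective_value is a hypothetical helper.

```python
import configparser

SAMPLE_CONF = """
# Option values below are made up for illustration.
[global]
debug_osd = 0/5

[osd]
debug_osd = 1/5

[osd.0]
debug_osd = 5/5
"""


def effective_value(conf, daemon_type, daemon_id, option):
    """Return the value from the narrowest section that defines the option."""
    # Narrowest scope first: [type.ID], then [type], then [global].
    for section in (f"{daemon_type}.{daemon_id}", daemon_type, "global"):
        if conf.has_option(section, option):
            return conf.get(section, option)
    return None


conf = configparser.ConfigParser()
conf.read_string(SAMPLE_CONF)

print(effective_value(conf, "osd", "0", "debug_osd"))  # taken from [osd.0]
print(effective_value(conf, "osd", "1", "debug_osd"))  # taken from [osd]
print(effective_value(conf, "mon", "ceph-mon01", "debug_osd"))  # taken from [global]
```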

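Precedence of Ceph configuration files

At startup, Ceph looks for its configuration file in the following order

1. $CEPH_CONF: the configuration file named by this environment variable;

2. -c path/path: the configuration file path given with the -c command-line option;

3. /etc/ceph/ceph.conf: the default configuration file path

4. ~/.ceph/config: the .ceph/config file in the current user's home directory

5. ./ceph.conf: the ceph.conf file in the current working directory

So the lookup order is $CEPH_CONF ----> -c path/path ----> /etc/ceph/ceph.conf ----> ~/.ceph/config ----> ./ceph.conf.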
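As a rough illustration of this search order, the Python sketch below lists candidate paths in the sequence given above and returns the first one that exists. It models the lookup exactly as described in this post rather than Ceph's actual implementation; the function names and the injectable exists parameter are assumptions made so the logic can be tried without touching the filesystem.

```python
import os
import posixpath


def candidate_conf_paths(env: dict, cli_path=None, home="~", cwd=".") -> list:
    """Candidate configuration file paths, in the search order listed above."""
    candidates = []
    if env.get("CEPH_CONF"):
        candidates.append(env["CEPH_CONF"])       # 1. $CEPH_CONF
    if cli_path:
        candidates.append(cli_path)               # 2. -c path/path
    candidates.append("/etc/ceph/ceph.conf")      # 3. default path
    candidates.append(posixpath.join(home, ".ceph", "config"))  # 4. ~/.ceph/config
    candidates.append(posixpath.join(cwd, "ceph.conf"))         # 5. ./ceph.conf
    return candidates


def first_existing(paths: list, exists=os.path.exists):
    """Return the first candidate for which `exists` is true, or None."""
    for path in paths:
        if exists(path):
            return path
    return None


paths = candidate_conf_paths({}, home="/home/cephadm", cwd="/home/cephadm/ceph-cluster")
print(paths)
```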

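Common metavariables in the Ceph configuration file

Ceph configuration files support metavariables that are replaced with the corresponding values. $cluster is the name of the current Ceph cluster; $type is the type of the current service, such as osd or mon; $id is the daemon's identifier, for example 0 for osd.0; $host is the hostname of the host the daemon runs on; $name is the combination of the service type and the daemon identifier, that is, $name=$type.$id.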
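To see how these substitutions behave, here is a small Python sketch built on string.Template. The expand helper is hypothetical, and the path template /var/run/ceph/$cluster-$name.asok is an assumption picked because it reproduces the admin socket file names used earlier in this post.

```python
from string import Template


def expand(template: str, cluster: str, daemon_type: str, daemon_id: str, host: str) -> str:
    """Expand $cluster, $type, $id, $host and $name in a configuration value."""
    values = {
        "cluster": cluster,
        "type": daemon_type,
        "id": daemon_id,
        "host": host,
        # $name is defined as $type.$id
        "name": f"{daemon_type}.{daemon_id}",
    }
    return Template(template).substitute(values)


SOCKET_TEMPLATE = "/var/run/ceph/$cluster-$name.asok"  # assumed template

print(expand(SOCKET_TEMPLATE, "ceph", "osd", "0", "ceph-mon01"))
print(expand(SOCKET_TEMPLATE, "ceph", "mon", "ceph-mon01", "ceph-mon01"))
```

Note how the mon's non-numeric ID ends up in the socket name, while the OSD contributes only its number.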