Distributed Storage Systems: Enabling the Ceph Cluster Access Interfaces

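In the previous post we used the ceph-deploy tool to bring up RADOS, the storage cluster underlying Ceph (for a recap, see https://www.cnblogs.com/qiuhom-1874/p/16724473.html). This post covers how to enable the access interfaces of a Ceph cluster.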

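RADOS is the storage cluster underlying Ceph. Once it is deployed, the only interface available by default is RBD (RADOS Block Device), and even that one cannot be used right away. The reason is that every object read or write in RADOS goes through a storage pool: the client uses the librados API to map the object to a placement group (PG) in the pool, the PG maps to a set of OSDs, and those OSDs store the data on their underlying disks. Until a pool has been created and enabled for an interface, that interface has nothing to read from or write to.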

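Enabling the Ceph block device interface (RBD)

With the RBD interface, a client built on librbd can use the RADOS cluster as a block device. The pool that backs RBD, however, must first have the rbd application enabled and then be initialized.

1. Create the RBD pool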

[root@ceph-admin ~]# ceph osd pool create rbdpool 64 64

pool 'rbdpool' created

[root@ceph-admin ~]# ceph osd pool ls

testpool

rbdpool

[root@ceph-admin ~]#

2. Enable the rbd application on the pool

View the help for ceph osd pool:

Monitor commands:

=================

osd pool application disable <poolname> <app> {--yes-i-really-mean-it}    disables use of an application <app> on pool <poolname>

osd pool application enable <poolname> <app> {--yes-i-really-mean-it}    enable use of an application <app> [cephfs,rbd,rgw] on pool <poolname>

Note: ceph osd pool application disable turns off the given application on the given pool, and enable turns it on. The help lists cephfs, rbd, and rgw as the values for <app>.

[root@ceph-admin ~]# ceph osd pool application enable rbdpool rbd

enabled application 'rbd' on pool 'rbdpool'

[root@ceph-admin ~]#

3. Initialize the RBD pool

[root@ceph-admin ~]# rbd pool init -p rbdpool

[root@ceph-admin ~]#

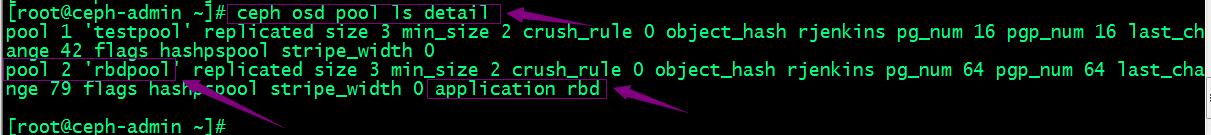

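Verify that rbdpool has been initialized and that the rbd application is enabled on it.

Note: ceph osd pool ls detail shows the details of every pool. At this point the RBD pool is initialized. The pool itself cannot be used directly as a block device, though: images must first be created in it as needed, and each image is then used as a block device.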
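The same check can be scripted rather than read off a screen. The sketch below relies on ceph osd pool application get, which prints a pool's application tags as JSON. The helper name pool_has_app is hypothetical, and the substring match is only a quick heuristic, not a proper JSON parse.

```shell
# Sketch: confirm that an application is enabled on a pool.
# pool_has_app is a hypothetical helper name, not part of Ceph.
pool_has_app() {
    # $1 = pool name, $2 = application name (cephfs, rbd, or rgw)
    # The command prints the pool's application tags as JSON, so a
    # substring match on the quoted name is enough for a quick check.
    ceph osd pool application get "$1" | grep -q "\"$2\""
}

# Only talk to the cluster when the ceph CLI is installed on this host.
if command -v ceph >/dev/null 2>&1; then
    if pool_has_app rbdpool rbd; then
        echo "rbd is enabled on rbdpool"
    else
        echo "rbd is NOT enabled on rbdpool"
    fi
fi
```

Run it on a node that has an admin keyring, such as ceph-admin in this walkthrough.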

4. Create an image in the RBD pool

[root@ceph-admin ~]# rbd create --size 5G rbdpool/rbd-img01

[root@ceph-admin ~]# rbd ls rbdpool

rbd-img01

[root@ceph-admin ~]#

View the details of the new image:

[root@ceph-admin ~]# rbd info rbdpool/rbd-img01

rbd image 'rbd-img01':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

id: d4466b8b4567

block_name_prefix: rbd_data.d4466b8b4567

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Sun Sep 25 11:25:01 2022

[root@ceph-admin ~]#
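The size and order fields in the rbd info output are tied together by simple arithmetic: order 22 means each backing object is 2^22 bytes (4 MiB), and a 5 GiB image split into 4 MiB objects gives 1280 objects. A minimal shell sketch of that calculation, with variable names chosen here purely for illustration:

```shell
# Values copied from the rbd info output for rbdpool/rbd-img01.
size_gib=5      # image size in GiB
order=22        # object size is 2^order bytes

object_bytes=$((1 << order))
image_bytes=$((size_gib * 1024 * 1024 * 1024))
objects=$((image_bytes / object_bytes))

echo "object size: $((object_bytes / 1024 / 1024)) MiB"
echo "object count: ${objects}"
```

This prints an object size of 4 MiB and an object count of 1280, matching the "5 GiB in 1280 objects" and "order 22 (4 MiB objects)" lines above.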

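Note: the image supports layering (layered clones), exclusive locking, object maps, and other features. A 5 GiB disk image now exists. Through the kernel's RBD support, a client can detect the image as a disk device and use it like any other disk.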
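As a rough sketch of that client-side step, the rbd CLI can map the image through the kernel module. The helper names below are hypothetical. The sketch assumes the client has the rbd CLI, a ceph.conf, and a keyring in place. Whether any features need to be disabled first depends on the client kernel, so treat the second helper as optional.

```shell
# Sketch: map an RBD image on a client through the kernel rbd module.
map_image() {
    # $1 = image spec in pool/image form, e.g. rbdpool/rbd-img01
    sudo rbd map "$1"      # on success, prints the new device node
    rbd showmapped         # lists the images currently mapped on this host
}

# Older kernels may refuse to map images that carry features they do not
# implement. In that case the extra features can be turned off beforehand.
disable_extra_features() {
    # $1 = image spec in pool/image form
    rbd feature disable "$1" object-map fast-diff deep-flatten
}
```

A call such as map_image rbdpool/rbd-img01 would then expose the image as a block device that can be partitioned, formatted, and mounted like a local disk.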

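Enabling the radosgw interface

RGW is not a mandatory interface; an RGW instance needs to be deployed only when an S3- or Swift-compatible RESTful interface is required. The radosgw interface is served by the radosgw daemon (shipped in the ceph-radosgw package), so enabling it means running that daemon against the RADOS cluster.

1. Deploy ceph-radosgw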

[root@ceph-admin ~]# ceph-deploy rgw --help

usage: ceph-deploy rgw [-h] {create} ...

Ceph RGW daemon management

positional arguments:

{create}

create Create an RGW instance

optional arguments:

-h, --help show this help message and exit

[root@ceph-admin ~]#

Note: ceph-deploy rgw has a single subcommand, create, which creates an RGW instance.

[root@ceph-admin ~]# su - cephadm

Last login: Sat Sep 24 23:16:00 CST 2022 on pts/0

[cephadm@ceph-admin ~]$ cd ceph-cluster/

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy rgw create ceph-mon01

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy rgw create ceph-mon01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] rgw : [('ceph-mon01', 'rgw.ceph-mon01')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fa658caff80>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function rgw at 0x7fa6592f5140>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.rgw][DEBUG ] Deploying rgw, cluster ceph hosts ceph-mon01:rgw.ceph-mon01

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph_deploy.rgw][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.rgw][DEBUG ] remote host will use systemd

[ceph_deploy.rgw][DEBUG ] deploying rgw bootstrap to ceph-mon01

[ceph-mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mon01][WARNIN] rgw keyring does not exist yet, creating one

[ceph-mon01][DEBUG ] create a keyring file

[ceph-mon01][DEBUG ] create path recursively if it doesn't exist

[ceph-mon01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-rgw --keyring /var/lib/ceph/bootstrap-rgw/ceph.keyring auth get-or-create client.rgw.ceph-mon01 osd allow rwx mon allow rw -o /var/lib/ceph/radosgw/ceph-rgw.ceph-mon01/keyring

[ceph-mon01][INFO ] Running command: sudo systemctl enable ceph-radosgw@rgw.ceph-mon01

[ceph-mon01][WARNIN] Created symlink from /etc/systemd/system/ceph-radosgw.target.wants/ceph-radosgw@rgw.ceph-mon01.service to /usr/lib/systemd/system/ceph-radosgw@.service.

[ceph-mon01][INFO ] Running command: sudo systemctl start ceph-radosgw@rgw.ceph-mon01

[ceph-mon01][INFO ] Running command: sudo systemctl enable ceph.target

[ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host ceph-mon01 and default port 7480

[cephadm@ceph-admin ceph-cluster]$

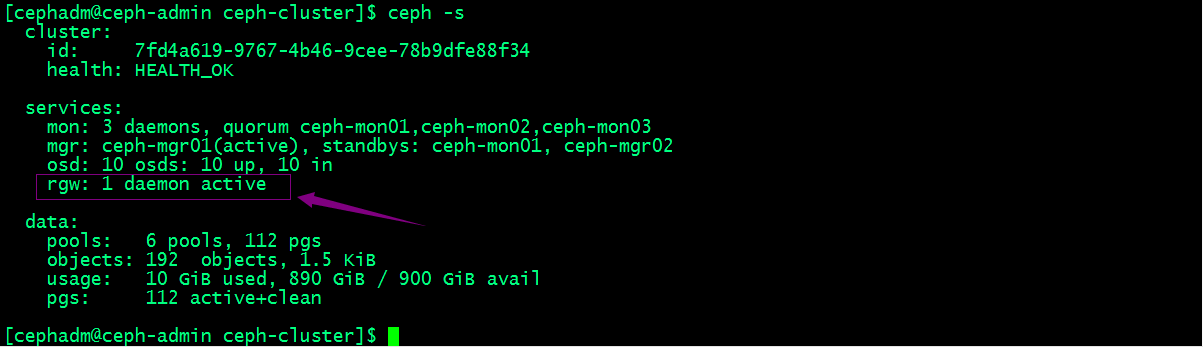

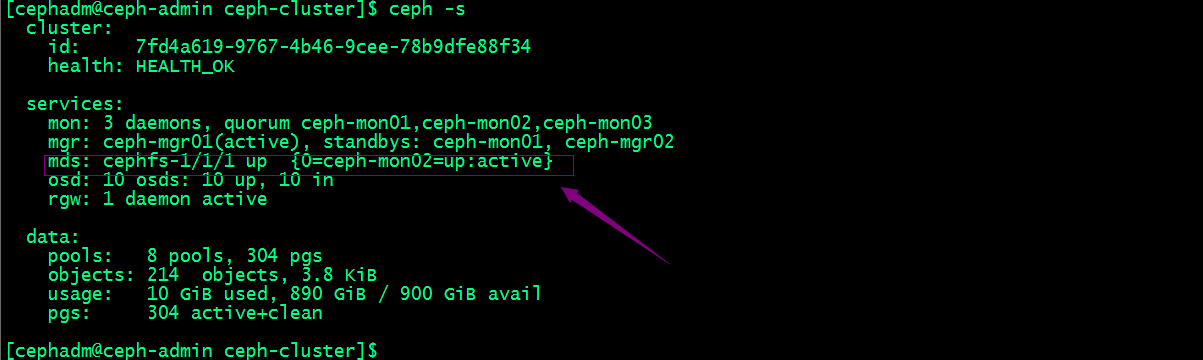

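Check the cluster status

Note: the cluster now has one rgw daemon in the active state. Note also that once radosgw is deployed, it automatically creates the pools it needs, as shown below.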

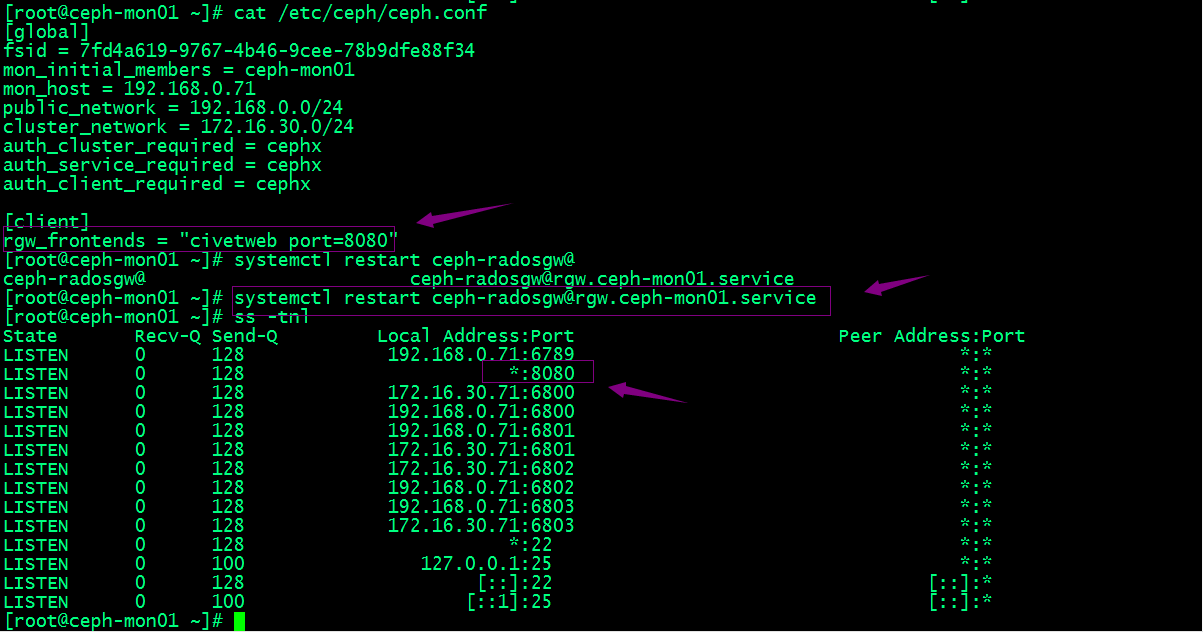

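Note: by default it creates the four pools above (.rgw.root, default.rgw.control, default.rgw.meta, and default.rgw.log). radosgw also listens on TCP port 7480 by default and serves requests as a web service. To change the listening port, set the following in the configuration file /etc/ceph/ceph.conf: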

[client]

rgw_frontends = "civetweb port=8080"

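Note: make this change on the host that runs rgw, then restart the daemon.

Restart the daemon

Note: mon01 is no longer listening on port 7480; it is listening on port 8080 instead.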
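The restart and port check could look roughly like the following sketch. It assumes the systemd unit is named ceph-radosgw@rgw.<host>, which follows the instance name rgw.ceph-mon01 that ceph-deploy reported when it created the gateway. The helper names are hypothetical.

```shell
# Sketch: restart the gateway and confirm the new listening port.
# Assumption: the unit name is ceph-radosgw@rgw.<host>.
rgw_unit() {
    # $1 = short host name, e.g. ceph-mon01
    echo "ceph-radosgw@rgw.$1"
}

restart_rgw() {
    sudo systemctl restart "$(rgw_unit "$1")"
}

# Print listening TCP sockets whose local address ends in the given port.
listening_on() {
    # $1 = port number; column 4 of "ss -tnl" is Local Address:Port
    ss -tnl | awk -v pat=":$1\$" '$4 ~ pat'
}
```

On ceph-mon01 this might be run as restart_rgw ceph-mon01 followed by listening_on 8080 and listening_on 7480; only the first check should print a socket after the change.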

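Access port 8080 in a browser

Note: the host returns an XML document on port 8080. RGW exposes a RESTful interface; clients talk to it over HTTP to create, read, update, and delete data. Seeing this XML response shows that the rgw service is deployed and running, so the radosgw interface is now enabled.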
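The same probe works from a terminal without a browser. The sketch below assumes that the host name ceph-mon01 resolves from wherever it runs and that the gateway listens on port 8080 as configured above. The helper name probe_rgw is hypothetical.

```shell
# Sketch: probe the gateway over HTTP instead of using a browser.
probe_rgw() {
    # $1 = host, $2 = port
    # -s hides the progress meter; --max-time bounds the wait in seconds.
    curl -s --max-time 5 "http://$1:$2/"
}
```

Calling probe_rgw ceph-mon01 8080 should print the same XML document the browser shows. An empty result would suggest that the daemon is not running or that the port is wrong.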

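Enabling the file system interface (CephFS)

With any file system, the first question is where metadata and data are stored. Like RGW, CephFS depends on a daemon: the MDS (Metadata Server), which maintains file metadata on its behalf. Unlike the metadata servers of some other systems, the MDS only serves and routes metadata requests; it does not store the metadata itself. File metadata lives in the RADOS cluster, so CephFS needs two pools: one for metadata and one for file data. To answer clients faster, the MDS usually caches hot metadata locally so that it can quickly tell a client where the requested data is located. Because the metadata is persisted in RADOS, the MDS is effectively stateless, and a stateless service can be made highly available through redundancy. How that works is a topic for a later post.

1. Deploy ceph-mds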

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mds create ceph-mon02

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mds create ceph-mon02

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ff12df9e758>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mds at 0x7ff12e1f7050>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] mds : [('ceph-mon02', 'ceph-mon02')]

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts ceph-mon02:ceph-mon02

[ceph-mon02][DEBUG ] connection detected need for sudo

[ceph-mon02][DEBUG ] connected to host: ceph-mon02

[ceph-mon02][DEBUG ] detect platform information from remote host

[ceph-mon02][DEBUG ] detect machine type

[ceph_deploy.mds][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mds][DEBUG ] remote host will use systemd

[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to ceph-mon02

[ceph-mon02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mon02][WARNIN] mds keyring does not exist yet, creating one

[ceph-mon02][DEBUG ] create a keyring file

[ceph-mon02][DEBUG ] create path if it doesn't exist

[ceph-mon02][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.ceph-mon02 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-ceph-mon02/keyring

[ceph-mon02][INFO ] Running command: sudo systemctl enable ceph-mds@ceph-mon02

[ceph-mon02][WARNIN] Created symlink from /etc/systemd/system/ceph-mds.target.wants/ceph-mds@ceph-mon02.service to /usr/lib/systemd/system/ceph-mds@.service.

[ceph-mon02][INFO ] Running command: sudo systemctl start ceph-mds@ceph-mon02

[ceph-mon02][INFO ] Running command: sudo systemctl enable ceph.target

[cephadm@ceph-admin ceph-cluster]$

Check the MDS status

[cephadm@ceph-admin ceph-cluster]$ ceph mds stat

, 1 up:standby

[cephadm@ceph-admin ceph-cluster]$

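Note: the cluster now has one MDS running, in standby mode. So far we have only deployed the ceph-mds daemon; no file system has been created on the RADOS cluster and bound to pools, so the MDS cannot serve requests yet.

2. Create the pools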

[cephadm@ceph-admin ceph-cluster]$ ceph osd pool create cephfs-metadatpool 64 64

pool 'cephfs-metadatpool' created

[cephadm@ceph-admin ceph-cluster]$ ceph osd pool create cephfs-datapool 128 128

pool 'cephfs-datapool' created

[cephadm@ceph-admin ceph-cluster]$ ceph osd pool ls

testpool

rbdpool

.rgw.root

default.rgw.control

default.rgw.meta

default.rgw.log

cephfs-metadatpool

cephfs-datapool

[cephadm@ceph-admin ceph-cluster]$

3. Initialize the pools

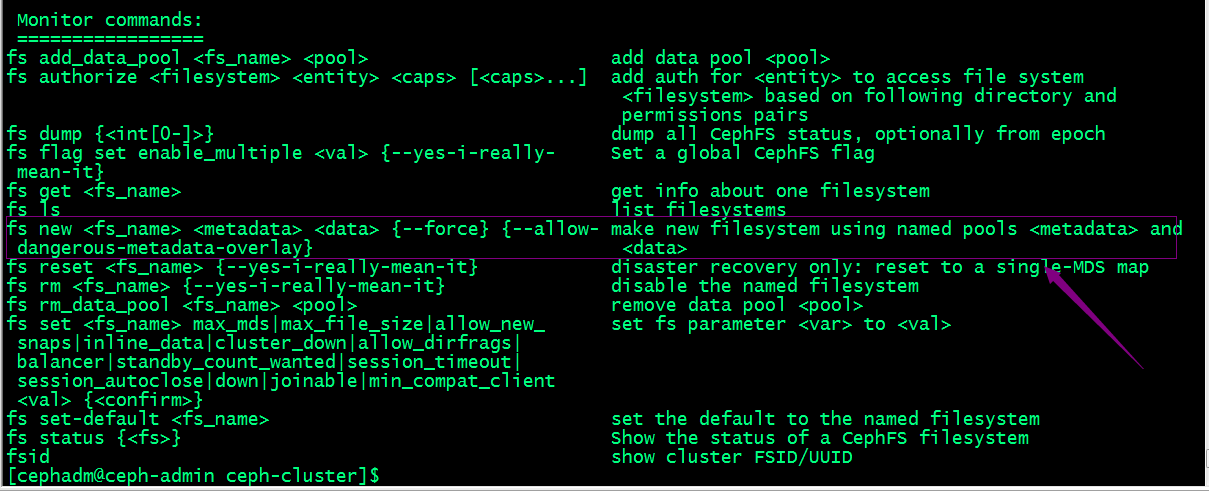

View the help for ceph fs

Note: ceph fs new takes the file system name followed by its metadata pool and its data pool.

[cephadm@ceph-admin ceph-cluster]$ ceph fs new cephfs cephfs-metadatpool cephfs-datapool

new fs with metadata pool 7 and data pool 8

[cephadm@ceph-admin ceph-cluster]$

Check the MDS status again

[cephadm@ceph-admin ceph-cluster]$ ceph mds stat

cephfs-1/1/1 up {0=ceph-mon02=up:active}

[cephadm@ceph-admin ceph-cluster]$

Check the cluster status

Note: the MDS is now running and in the active state.

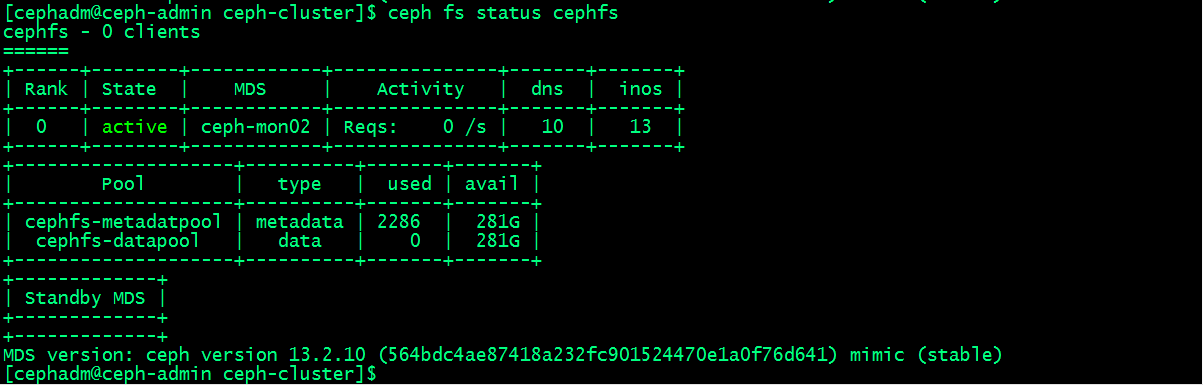

Check the Ceph file system status

Note: the file system is active. Clients can now mount CephFS through the in-kernel CephFS client, or interact with it through the FUSE interface.
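As a rough sketch of those two client paths, the kernel client mounts with mount -t ceph and the FUSE client with ceph-fuse. The monitor host, the default monitor port 6789, the admin user, the mount point, and the secret file path are all assumptions for illustration, and the helper names are hypothetical.

```shell
# Kernel client: needs the ceph kernel module and the mount.ceph helper.
mount_cephfs_kernel() {
    # $1 = monitor host, $2 = mount point, $3 = file holding the client key
    sudo mount -t ceph "$1:6789:/" "$2" -o "name=admin,secretfile=$3"
}

# FUSE client: needs the ceph-fuse package plus a ceph.conf and keyring.
mount_cephfs_fuse() {
    # $1 = monitor host, $2 = mount point
    sudo ceph-fuse -m "$1:6789" "$2"
}
```

For example, mount_cephfs_kernel ceph-mon01 /mnt/cephfs /etc/ceph/admin.secret would mount the file system root at /mnt/cephfs, provided that a monitor runs on ceph-mon01 and the secret file exists on the client.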