A walkthrough of building a Cilium BGP environment with Containerlab

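The article "Using Containerlab + Kind to quickly deploy a Cilium BGP environment" uses Containerlab and Cilium to build a Cilium BGP network in a simulated environment. It uses Containerlab to emulate the external BGP routers, and Cilium's CiliumBGPPeeringPolicy to establish BGP sessions with those routers.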

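Basic Containerlab usage

Containerlab supports many node kinds and types, so its full configuration is fairly complex. In practice, knowing how to wire up a basic topology is enough.

Installation

Network wiring

If no network mode is specified, the default bridge mode is used.

The container mode shares a network namespace with another container: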

my-node:
  kind: linux
sidecar-node:
  kind: linux
  network-mode: container:my-node # my-node is another container
  startup-delay: 10

name: srl02

topology:
  kinds:
    srl:
      type: ixrd3 # a type supported by SR Linux, used to emulate hardware
      image: ghcr.io/nokia/srlinux # container image to use
  nodes:
    srl1: # node 1
      kind: srl
    srl2: # node 2
      kind: srl
  links:
    - endpoints: ["srl1:e1-1", "srl2:e1-1"] # point-to-point link between node 1 and node 2

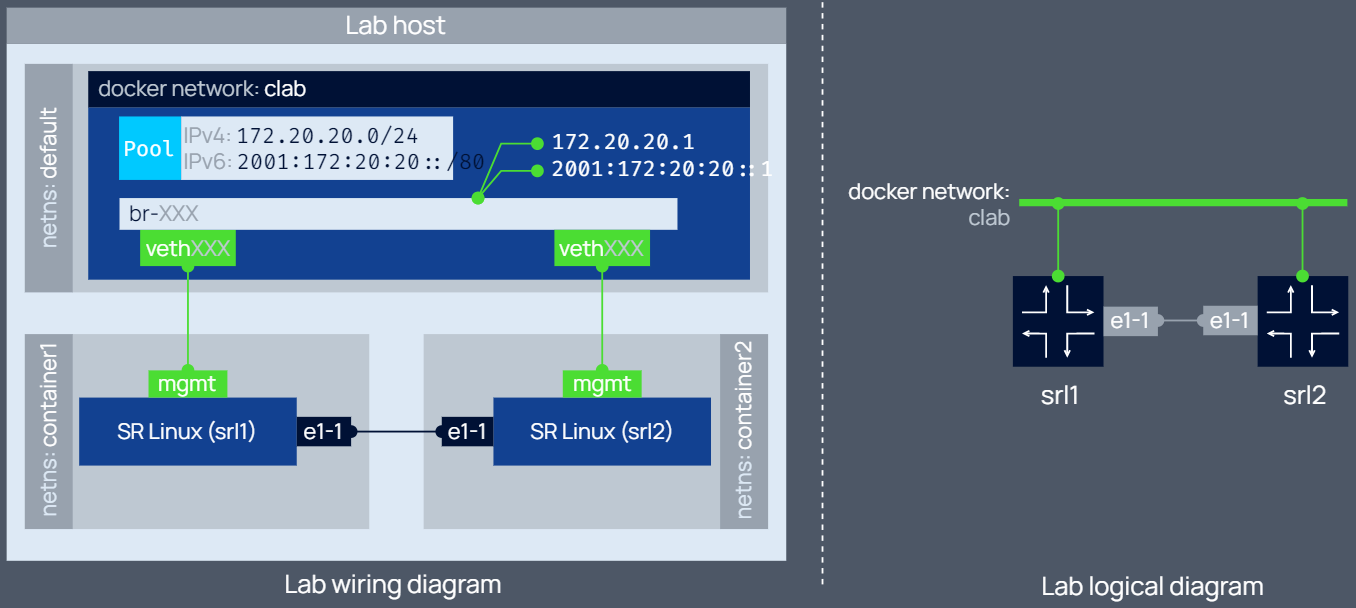

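The configuration above contains two SR Linux nodes, srl1 and srl2, which are connected in two ways:

- Both are attached through their mgmt interface to the default container bridge network clab (visible with docker network ls).
- They are connected point-to-point through interface e1-1. A point-to-point link is implemented as a veth pair. Each endpoints entry describes one veth pair, so the array must contain exactly two elements.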
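The two-element rule for endpoints can be checked before deploying. The following is a minimal sketch, not part of Containerlab: `validate_links` is a hypothetical helper, and the topology is written as a plain Python dict that mirrors the srl02 file rather than being parsed from YAML.

```python
def validate_links(topology):
    """Return a list of problems found in the links section (hypothetical helper)."""
    nodes = set(topology.get("nodes", {}))
    problems = []
    for i, link in enumerate(topology.get("links", [])):
        endpoints = link.get("endpoints", [])
        # One link is one veth pair, so it needs exactly two ends.
        if len(endpoints) != 2:
            problems.append(f"link {i}: expected 2 endpoints, got {len(endpoints)}")
            continue
        for ep in endpoints:
            node, sep, iface = ep.partition(":")
            if not sep or not iface:
                problems.append(f"link {i}: endpoint {ep!r} is not 'node:interface'")
            elif node not in nodes:
                problems.append(f"link {i}: unknown node {node!r}")
    return problems


# Dict form of the srl02 topology shown above.
srl02 = {
    "nodes": {"srl1": {"kind": "srl"}, "srl2": {"kind": "srl"}},
    "links": [{"endpoints": ["srl1:e1-1", "srl2:e1-1"]}],
}

print(validate_links(srl02))  # []
```

A link with three endpoints, or one that names a node missing from the nodes section, would be reported as a problem instead of an empty list.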

Run the following command to deploy the network:

# containerlab deploy -t srl02.clab.yml

The resulting container network is:

IPv4: subnet 172.20.20.0/24, gateway 172.20.20.1

IPv6: subnet 2001:172:20:20::/64, gateway 2001:172:20:20::1

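Configuring the management network

User-defined network

The defaults are usually fine, but if the default network conflicts with an existing network, you can specify the subnets manually: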

mgmt:
  network: custom_mgmt # management network name
  ipv4_subnet: 172.100.100.0/24 # ipv4 range
  ipv6_subnet: 2001:172:100:100::/80 # ipv6 range (optional)

topology:
# the rest of the file is omitted for brevity
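Whether a management subnet conflicts with networks already in use can be checked with Python's standard ipaddress module. This is only a sketch: the `in_use` list is a made-up example of networks on a host, and `conflicting_networks` is a hypothetical helper, not something Containerlab provides.

```python
import ipaddress


def conflicting_networks(candidate, in_use):
    """Return the entries of in_use that overlap the candidate subnet."""
    cand = ipaddress.ip_network(candidate)
    conflicts = []
    for cidr in in_use:
        net = ipaddress.ip_network(cidr)
        # Only compare networks of the same IP version.
        if net.version == cand.version and cand.overlaps(net):
            conflicts.append(cidr)
    return conflicts


# Hypothetical networks already present on the host.
in_use = ["172.20.0.0/16", "192.168.1.0/24"]

print(conflicting_networks("172.20.20.0/24", in_use))    # ['172.20.0.0/16']
print(conflicting_networks("172.100.100.0/24", in_use))  # []
```

In this made-up case the default 172.20.20.0/24 range collides with an existing network, while the custom range from the mgmt section does not.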

You can also assign a specific (static) IP to a node, but in that case every container must be given its IP manually:

mgmt:
  network: fixedips # container network name (the default is clab)
  bridge: mybridge # bridge name (the default is br-<network-id>)
  ipv4_subnet: 172.100.100.0/24
  ipv6_subnet: 2001:172:100:100::/80

topology:
  nodes:
    n1:
      kind: srl
      mgmt_ipv4: 172.100.100.11 # set ipv4 address on management network
      mgmt_ipv6: 2001:172:100:100::11 # set ipv6 address on management network

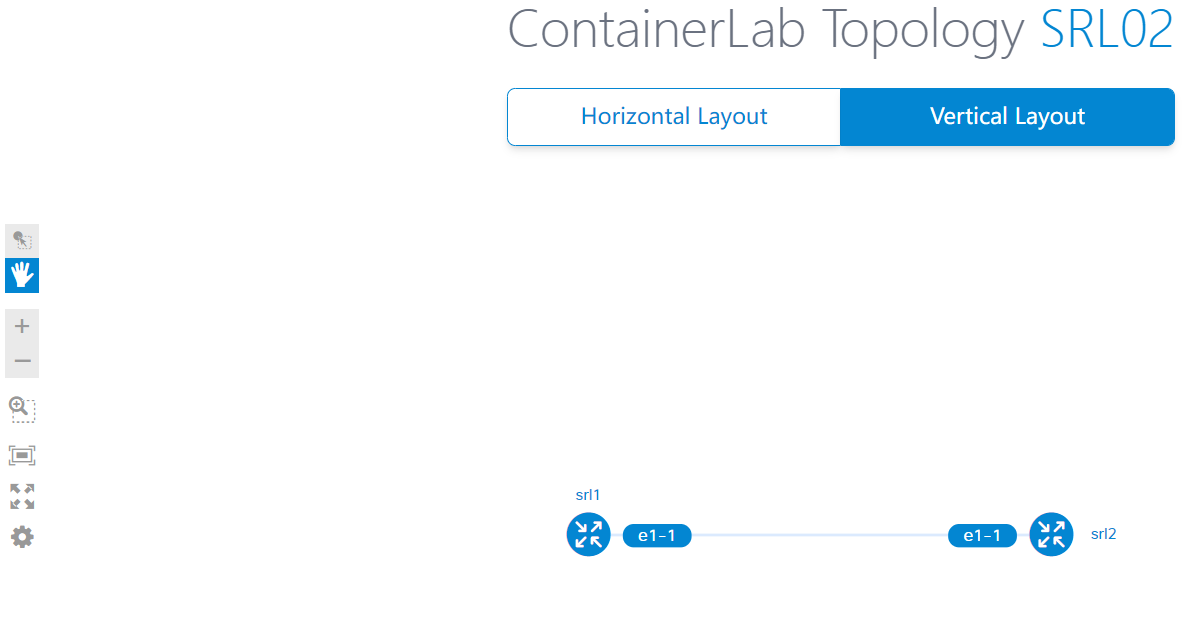

Viewing the topology graph

Run the following command to view the topology graph:

# containerlab graph -t srl02.clab.yml

Reconfiguring the network

If you have modified the configuration file, redeploy the network with:

# containerlab deploy -t srl02.clab.yml --reconfigure

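Examples

The official documentation provides many example labs. A topology generally involves two kinds of instances, VMs and routers; the latter can be emulated with FRR.

Walkthrough of the original article's configuration

Kubernetes configuration

The following uses kind to create a Kubernetes cluster with one control-plane node and three worker nodes, and assigns the node IPs and the pod subnet.

Note that the default CNI is disabled in this configuration, so after kind deploys the cluster the nodes cannot communicate with each other and will not become Ready.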

# cluster.yaml
kind: Cluster
name: clab-bgp-cplane-demo
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  disableDefaultCNI: true # disable the default CNI
  podSubnet: "10.1.0.0/16" # Pod CIDR
nodes:
- role: control-plane # node role
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.0.1.2 # node IP
        node-labels: "rack=rack0" # node label
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.0.2.2
        node-labels: "rack=rack0"
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.0.3.2
        node-labels: "rack=rack1"
- role: worker
  kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-ip: 10.0.4.2
        node-labels: "rack=rack1"

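Installing Cilium

The verification order in the original article may not be ideal. Kubernetes and Cilium should be started first and Containerlab afterwards; otherwise Kubernetes, having no CNI, cannot generate any routes either.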

# values.yaml
tunnel: disabled
ipam:
  mode: kubernetes
ipv4NativeRoutingCIDR: 10.0.0.0/8
# Enable BGP support; equivalent to passing --enable-bgp-control-plane=true on the command line
bgpControlPlane:
  enabled: true
k8s:
  requireIPv4PodCIDR: true

helm repo add cilium https://helm.cilium.io/

helm install -n kube-system cilium cilium/cilium --version v1.12.1 -f values.yaml

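Once the steps above are complete, the Kubernetes cluster is up and the nodes are Ready. Next comes the BGP configuration.

BGP configuration

The original article uses the frrouting/frr:v8.2.2 image for BGP route discovery. See the official manual for more parameters. The Containerlab topology file used in the article is: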

# topo.yaml
name: bgp-cplane-demo
topology:
  kinds:
    linux:
      cmd: bash
  nodes:
    router0:
      kind: linux
      image: frrouting/frr:v8.2.2
      labels:
        app: frr
      exec:
      - iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE
      - ip addr add 10.0.0.0/32 dev lo
      - ip route add blackhole 10.0.0.0/8
      - touch /etc/frr/vtysh.conf
      - sed -i -e 's/bgpd=no/bgpd=yes/g' /etc/frr/daemons
      - /usr/lib/frr/frrinit.sh start
      - >-
         vtysh -c 'conf t'
         -c 'router bgp 65000'
         -c ' bgp router-id 10.0.0.0'
         -c ' no bgp ebgp-requires-policy'
         -c ' neighbor ROUTERS peer-group'
         -c ' neighbor ROUTERS remote-as external'
         -c ' neighbor ROUTERS default-originate'
         -c ' neighbor net0 interface peer-group ROUTERS'
         -c ' neighbor net1 interface peer-group ROUTERS'
         -c ' address-family ipv4 unicast'
         -c ' redistribute connected'
         -c ' exit-address-family'
         -c '!'
    tor0:
      kind: linux
      image: frrouting/frr:v8.2.2
      labels:
        app: frr
      exec:
      - ip link del eth0
      - ip addr add 10.0.0.1/32 dev lo
      - ip addr add 10.0.1.1/24 dev net1
      - ip addr add 10.0.2.1/24 dev net2
      - touch /etc/frr/vtysh.conf
      - sed -i -e 's/bgpd=no/bgpd=yes/g' /etc/frr/daemons
      - /usr/lib/frr/frrinit.sh start
      - >-
         vtysh -c 'conf t'
         -c 'frr defaults datacenter'
         -c 'router bgp 65010'
         -c ' bgp router-id 10.0.0.1'
         -c ' no bgp ebgp-requires-policy'
         -c ' neighbor ROUTERS peer-group'
         -c ' neighbor ROUTERS remote-as external'
         -c ' neighbor SERVERS peer-group'
         -c ' neighbor SERVERS remote-as internal'
         -c ' neighbor net0 interface peer-group ROUTERS'
         -c ' neighbor 10.0.1.2 peer-group SERVERS'
         -c ' neighbor 10.0.2.2 peer-group SERVERS'
         -c ' address-family ipv4 unicast'
         -c ' redistribute connected'
         -c ' exit-address-family'
         -c '!'
    tor1:
      kind: linux
      image: frrouting/frr:v8.2.2
      labels:
        app: frr
      exec:
      - ip link del eth0
      - ip addr add 10.0.0.2/32 dev lo
      - ip addr add 10.0.3.1/24 dev net1
      - ip addr add 10.0.4.1/24 dev net2
      - touch /etc/frr/vtysh.conf
      - sed -i -e 's/bgpd=no/bgpd=yes/g' /etc/frr/daemons
      - /usr/lib/frr/frrinit.sh start
      - >-
         vtysh -c 'conf t'
         -c 'frr defaults datacenter'
         -c 'router bgp 65011'
         -c ' bgp router-id 10.0.0.2'
         -c ' no bgp ebgp-requires-policy'
         -c ' neighbor ROUTERS peer-group'
         -c ' neighbor ROUTERS remote-as external'
         -c ' neighbor SERVERS peer-group'
         -c ' neighbor SERVERS remote-as internal'
         -c ' neighbor net0 interface peer-group ROUTERS'
         -c ' neighbor 10.0.3.2 peer-group SERVERS'
         -c ' neighbor 10.0.4.2 peer-group SERVERS'
         -c ' address-family ipv4 unicast'
         -c ' redistribute connected'
         -c ' exit-address-family'
         -c '!'
    server0:
      kind: linux
      image: nicolaka/netshoot:latest
      network-mode: container:control-plane
      exec:
      - ip addr add 10.0.1.2/24 dev net0
      - ip route replace default via 10.0.1.1
    server1:
      kind: linux
      image: nicolaka/netshoot:latest
      network-mode: container:worker
      exec:
      - ip addr add 10.0.2.2/24 dev net0
      - ip route replace default via 10.0.2.1
    server2:
      kind: linux
      image: nicolaka/netshoot:latest
      network-mode: container:worker2
      exec:
      - ip addr add 10.0.3.2/24 dev net0
      - ip route replace default via 10.0.3.1
    server3:
      kind: linux
      image: nicolaka/netshoot:latest
      network-mode: container:worker3
      exec:
      - ip addr add 10.0.4.2/24 dev net0
      - ip route replace default via 10.0.4.1
  links:
  - endpoints: ["router0:net0", "tor0:net0"]
  - endpoints: ["router0:net1", "tor1:net0"]
  - endpoints: ["tor0:net1", "server0:net0"]
  - endpoints: ["tor0:net2", "server1:net0"]
  - endpoints: ["tor1:net1", "server2:net0"]
  - endpoints: ["tor1:net2", "server3:net0"]

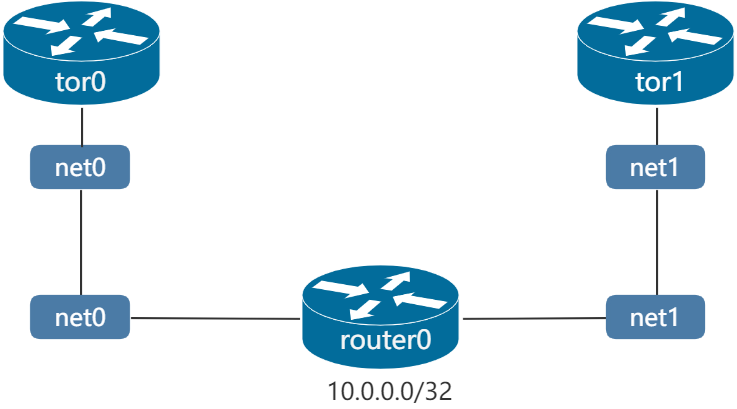

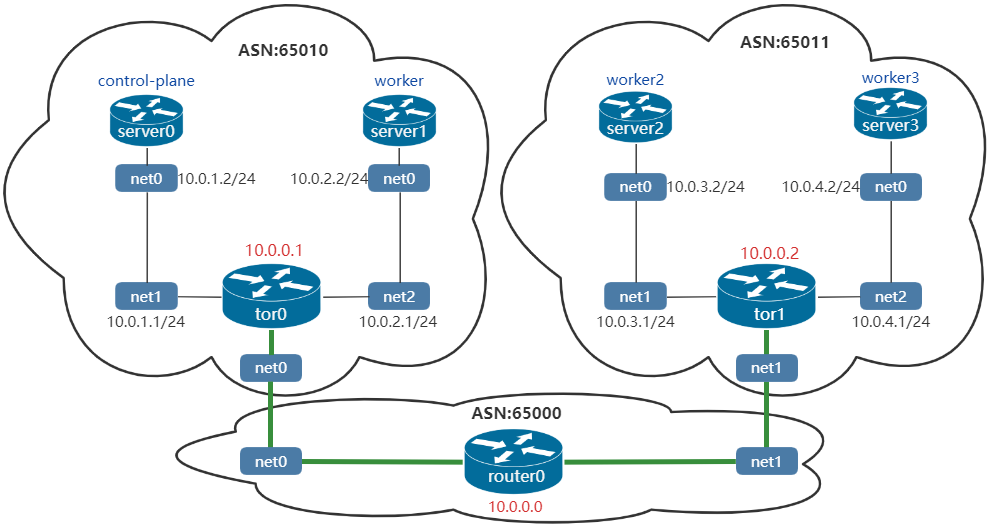

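This topology has three routers (router0, tor0 and tor1) and four ordinary nodes (server0, server1, server2 and server3). The four ordinary nodes share network namespaces with the Kubernetes nodes, which are themselves deployed as containers.

The following looks at how each node is configured.

router0 configuration

Below is router0's BGP configuration; its address is 10.0.0.0: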

vtysh -c 'conf t'

-c 'router bgp 65000'

-c ' bgp router-id 10.0.0.0'

-c ' no bgp ebgp-requires-policy'

-c ' neighbor ROUTERS peer-group'

-c ' neighbor ROUTERS remote-as external'

-c ' neighbor ROUTERS default-originate'

-c ' neighbor net0 interface peer-group ROUTERS'

-c ' neighbor net1 interface peer-group ROUTERS'

-c ' address-family ipv4 unicast'

-c ' redistribute connected'

-c ' exit-address-family'

-c '!'

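- vtysh -c 'conf t': runs commands through the vtysh shell, starting by entering configuration mode.
- 'router bgp 65000': sets the ASN (AS number) of the BGP router. BGP uses this value to decide whether a session is internal or external. After this command, BGP commands can be entered.
- 'bgp router-id 10.0.0.0': sets the router ID that identifies the router. Here an IP address is used as the identifier.
- 'no bgp ebgp-requires-policy': routes can be exchanged without configuring a policy.
- 'neighbor ROUTERS peer-group': defines a peer group for exchanging routes. One peer group can contain several peers.
- 'neighbor ROUTERS remote-as external': router0's neighbors are tor0 and tor1, each with a different ASN, so both are treated as EBGP peers. EBGP rewrites the next hop when it propagates a route. See: EBGP vs IBGP.
- 'neighbor ROUTERS default-originate': advertises the default route 0.0.0.0/0 to the neighbors.
- 'neighbor net0 interface peer-group ROUTERS' / 'neighbor net1 interface peer-group ROUTERS': binds a peer to a peer group. The peer can be given as an interface name or as a neighbor tag.
- 'address-family ipv4 unicast': enters IPv4 unicast configuration mode.
- 'redistribute connected': redistributes routes from another protocol into BGP, here the system's connected routes.
- 'exit-address-family': leaves address-family configuration.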
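In the topology file these commands sit in a folded YAML scalar, so they reach the container as one long vtysh invocation with one -c option per configuration line. A minimal sketch of that assembly follows; `vtysh_argv` is a hypothetical helper written for illustration, and only the `vtysh -c` option itself comes from the article.

```python
import shlex


def vtysh_argv(config_lines):
    """Build the argument list for one vtysh call (hypothetical helper)."""
    argv = ["vtysh", "-c", "conf t"]
    for line in config_lines:
        # Each configuration line becomes its own -c option, in order.
        argv += ["-c", line]
    return argv


# router0's BGP configuration lines, copied from the topology file.
router0 = [
    "router bgp 65000",
    " bgp router-id 10.0.0.0",
    " no bgp ebgp-requires-policy",
    " neighbor ROUTERS peer-group",
    " neighbor ROUTERS remote-as external",
    " neighbor ROUTERS default-originate",
    " neighbor net0 interface peer-group ROUTERS",
    " neighbor net1 interface peer-group ROUTERS",
    " address-family ipv4 unicast",
    " redistribute connected",
    " exit-address-family",
]

# shlex.join quotes each argument so the result is safe to paste into a shell.
print(shlex.join(vtysh_argv(router0)))
```

Keeping the lines in a list makes it easy to generate the tor0 and tor1 variants, which differ only in ASN, router ID and neighbors.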

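With the configuration above, router0 has the neighbors net0 (connected to tor0) and net1 (connected to tor1), and the IPv4 connected routes are imported into BGP.

tor0 configuration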

vtysh -c 'conf t'

-c 'frr defaults datacenter'

-c 'router bgp 65010'

-c ' bgp router-id 10.0.0.1'

-c ' no bgp ebgp-requires-policy'

-c ' neighbor ROUTERS peer-group'

-c ' neighbor ROUTERS remote-as external'

-c ' neighbor SERVERS peer-group'

-c ' neighbor SERVERS remote-as internal'

-c ' neighbor net0 interface peer-group ROUTERS'

-c ' neighbor 10.0.1.2 peer-group SERVERS'

-c ' neighbor 10.0.2.2 peer-group SERVERS'

-c ' address-family ipv4 unicast'

-c ' redistribute connected'

-c ' exit-address-family'

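This configuration is broadly the same as router0's. It also creates an EBGP peer group, ROUTERS, and adds net0 (connected to router0) as a neighbor. In addition it creates an IBGP peer group, SERVERS, and adds the addresses of server0 and server1 as neighbors.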
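Whether a session is external or internal follows from comparing the two ASNs: `remote-as external` expects the peer's ASN to differ from the local one, and `remote-as internal` expects it to match. The sketch below applies that comparison to the lab. The router ASNs come from the FRR configuration; the ASN assigned to the Kubernetes nodes is an assumption here, chosen to match their ToR so that the SERVERS sessions can come up.

```python
def session_type(local_asn, peer_asn):
    """Classify a BGP session the way remote-as internal/external does."""
    return "internal" if local_asn == peer_asn else "external"


ASN = {
    "router0": 65000,
    "tor0": 65010,
    "tor1": 65011,
    # Assumed: each Kubernetes node uses the same ASN as its ToR.
    "control-plane": 65010,
    "worker2": 65011,
}

peerings = [
    ("router0", "tor0"),
    ("router0", "tor1"),
    ("tor0", "control-plane"),
    ("tor1", "worker2"),
]

for a, b in peerings:
    print(f"{a} <-> {b}: {session_type(ASN[a], ASN[b])}")
```

The router-to-ToR sessions come out as external and the ToR-to-node sessions as internal, matching the ROUTERS and SERVERS peer groups.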

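tor1 is configured much like tor0, so it is not covered in detail. In the resulting topology, tor0 and tor1 have each established a neighbor relationship with router0. Also note that server0 through server3 in the Containerlab network each share a network namespace with the corresponding Kubernetes node.

Checking BGP neighbors on router0 shows sessions with tor0 (net0) and tor1 (net1):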

router0# show bgp summary

IPv4 Unicast Summary (VRF default):

BGP router identifier 10.0.0.0, local AS number 65000 vrf-id 0

BGP table version 8

RIB entries 15, using 2760 bytes of memory

Peers 2, using 1433 KiB of memory

Peer groups 1, using 64 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

net0 4 65010 15 15 0 0 0 00:00:20 3 9 N/A

net1 4 65011 15 15 0 0 0 00:00:20 3 9 N/A

Total number of neighbors 2

Checking the neighbors on tor0 shows that tor0 has not yet established sessions with the Kubernetes nodes, so it cannot obtain routes for the Kubernetes pods.

tor0# show bgp summary

IPv4 Unicast Summary (VRF default):

BGP router identifier 10.0.0.1, local AS number 65010 vrf-id 0

BGP table version 9

RIB entries 15, using 2760 bytes of memory

Peers 3, using 2149 KiB of memory

Peer groups 2, using 128 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

router0(net0) 4 65000 19 20 0 0 0 00:00:33 6 9 N/A

10.0.1.2 4 0 0 0 0 0 0 never Active 0 N/A

10.0.2.2 4 0 0 0 0 0 0 never Active 0 N/A

Total number of neighbors 3

Checking the routes learned through BGP on router0 shows that there is no route for the pod subnet (10.1.0.0/16):

router0# show bgp ipv4 all

For address family: IPv4 Unicast

BGP table version is 8, local router ID is 10.0.0.0, vrf id 0

Default local pref 100, local AS 65000

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.0.0.0/32 0.0.0.0 0 32768 ?

*> 10.0.0.1/32 net0 0 0 65010 ?

*> 10.0.0.2/32 net1 0 0 65011 ?

*> 10.0.1.0/24 net0 0 0 65010 ?

*> 10.0.2.0/24 net0 0 0 65010 ?

*> 10.0.3.0/24 net1 0 0 65011 ?

*> 10.0.4.0/24 net1 0 0 65011 ?

*> 172.20.20.0/24 0.0.0.0 0 32768 ?

Displayed 8 routes and 8 total paths

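Establishing BGP with Kubernetes

In the configuration above, tor0 and tor1 already treat the Kubernetes nodes as IBGP peers. Next comes the BGP configuration on the Kubernetes side. Cilium's CiliumBGPPeeringPolicy CRD is where the BGP peer information is set.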

apiVersion: "cilium.io/v2alpha1"
kind: CiliumBGPPeeringPolicy
metadata:
  name: rack0
spec:
  nodeSelector:
    matchLabels:
      rack: rack0
  virtualRouters:
  - localASN: 65010
    exportPodCIDR: true # advertise the Pod CIDR automatically
    neighbors:
    - peerAddress: "10.0.0.1/32" # IP address of tor0
      peerASN: 65010
---
apiVersion: "cilium.io/v2alpha1"
kind: CiliumBGPPeeringPolicy
metadata:
  name: rack1
spec:
  nodeSelector:
    matchLabels:
      rack: rack1
  virtualRouters:
  - localASN: 65011
    exportPodCIDR: true
    neighbors:
    - peerAddress: "10.0.0.2/32" # IP address of tor1
      peerASN: 65011

The configuration above makes the nodes labeled rack=rack0 (control-plane and worker) peer with tor0, and the nodes labeled rack=rack1 (worker2 and worker3) peer with tor1:

# k get node -l rack=rack0

NAME STATUS ROLES AGE VERSION

clab-bgp-cplane-demo-control-plane Ready control-plane 2d11h v1.24.0

clab-bgp-cplane-demo-worker Ready <none> 2d11h v1.24.0

# k get node -l rack=rack1

NAME STATUS ROLES AGE VERSION

clab-bgp-cplane-demo-worker2 Ready <none> 2d11h v1.24.0

clab-bgp-cplane-demo-worker3 Ready <none> 2d11h v1.24.0

The fields of CiliumBGPPeeringPolicy are described below:

nodeSelector: Nodes which are selected by this label selector will apply the given policy

virtualRouters: One or more peering configurations outlined below. Each peering configuration can be thought of as a BGP router instance.

virtualRouters[*].localASN: The local ASN for this peering configuration

virtualRouters[*].exportPodCIDR: Whether to export the private pod CIDR block to the listed neighbors

virtualRouters[*].neighbors: A list of neighbors to peer with

neighbors[*].peerAddress: The address of the peer neighbor

neighbors[*].peerASN: The ASN of the peer
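How nodeSelector.matchLabels picks nodes can be illustrated with a few lines of Python. This is a simplified model, not Cilium's implementation: it handles only exact matchLabels equality, and the node names and labels are taken from the kind cluster in this article.

```python
def matches(match_labels, node_labels):
    """True when every key/value pair in matchLabels is present on the node."""
    return all(node_labels.get(k) == v for k, v in match_labels.items())


nodes = {
    "clab-bgp-cplane-demo-control-plane": {"rack": "rack0"},
    "clab-bgp-cplane-demo-worker": {"rack": "rack0"},
    "clab-bgp-cplane-demo-worker2": {"rack": "rack1"},
    "clab-bgp-cplane-demo-worker3": {"rack": "rack1"},
}

# Only the fields needed for the illustration are modeled.
policies = {
    "rack0": {"matchLabels": {"rack": "rack0"}, "peerAddress": "10.0.0.1/32"},
    "rack1": {"matchLabels": {"rack": "rack1"}, "peerAddress": "10.0.0.2/32"},
}


def nodes_for(policy_name):
    """Names of the nodes a policy would apply to, in sorted order."""
    selector = policies[policy_name]["matchLabels"]
    return sorted(name for name, labels in nodes.items() if matches(selector, labels))


for name in policies:
    print(name, "->", nodes_for(name))
```

Each node ends up under exactly one policy, so each node peers only with the ToR of its own rack.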

With the configuration above applied, router0, tor0 and tor1 in Containerlab learn the Kubernetes routes:

Checking tor0's BGP neighbors shows that it has established sessions with clab-bgp-cplane-demo-control-plane (10.0.1.2) and clab-bgp-cplane-demo-worker (10.0.2.2):

tor0# show bgp summary

IPv4 Unicast Summary (VRF default):

BGP router identifier 10.0.0.1, local AS number 65010 vrf-id 0

BGP table version 13

RIB entries 23, using 4232 bytes of memory

Peers 3, using 2149 KiB of memory

Peer groups 2, using 128 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

router0(net0) 4 65000 1430 1431 0 0 0 01:10:58 8 13 N/A

clab-bgp-cplane-demo-control-plane(10.0.1.2) 4 65010 46 52 0 0 0 00:02:12 1 11 N/A

clab-bgp-cplane-demo-worker(10.0.2.2) 4 65010 47 53 0 0 0 00:02:15 1 11 N/A

Total number of neighbors 3

Checking tor1's BGP neighbors shows that it has established sessions with clab-bgp-cplane-demo-worker2 (10.0.3.2) and clab-bgp-cplane-demo-worker3 (10.0.4.2):

tor1# show bgp summary

IPv4 Unicast Summary (VRF default):

BGP router identifier 10.0.0.2, local AS number 65011 vrf-id 0

BGP table version 13

RIB entries 23, using 4232 bytes of memory

Peers 3, using 2149 KiB of memory

Peer groups 2, using 128 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

router0(net0) 4 65000 1436 1437 0 0 0 01:11:15 8 13 N/A

clab-bgp-cplane-demo-worker2(10.0.3.2) 4 65011 53 60 0 0 0 00:02:31 1 11 N/A

clab-bgp-cplane-demo-worker3(10.0.4.2) 4 65011 54 61 0 0 0 00:02:33 1 11 N/A

Total number of neighbors 3

Checking router0's BGP table shows that it has learned the Pod routes:

router0# show bgp ipv4 all

For address family: IPv4 Unicast

BGP table version is 12, local router ID is 10.0.0.0, vrf id 0

Default local pref 100, local AS 65000

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.0.0.0/32 0.0.0.0 0 32768 ?

*> 10.0.0.1/32 net0 0 0 65010 ?

*> 10.0.0.2/32 net1 0 0 65011 ?

*> 10.0.1.0/24 net0 0 0 65010 ?

*> 10.0.2.0/24 net0 0 0 65010 ?

*> 10.0.3.0/24 net1 0 0 65011 ?

*> 10.0.4.0/24 net1 0 0 65011 ?

*> 10.1.0.0/24 net0 0 65010 i

*> 10.1.1.0/24 net1 0 65011 i

*> 10.1.2.0/24 net0 0 65010 i

*> 10.1.3.0/24 net1 0 65011 i

*> 172.20.20.0/24 0.0.0.0 0 32768 ?

Displayed 12 routes and 12 total paths
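The difference between the two router0 tables can be checked mechanically by asking which prefixes fall inside the pod subnet 10.1.0.0/16. The sketch below uses Python's ipaddress module with the prefixes copied by hand from the two outputs; it does not parse vtysh output.

```python
import ipaddress

POD_CIDR = ipaddress.ip_network("10.1.0.0/16")


def pod_routes(prefixes):
    """Return the prefixes that lie inside the pod subnet."""
    return [p for p in prefixes if ipaddress.ip_network(p).subnet_of(POD_CIDR)]


# Prefixes in router0's table before the peering policies were applied.
before = [
    "10.0.0.0/32", "10.0.0.1/32", "10.0.0.2/32",
    "10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24", "10.0.4.0/24",
    "172.20.20.0/24",
]
# The same table afterwards, with the four per-node pod CIDRs added.
after = before + ["10.1.0.0/24", "10.1.1.0/24", "10.1.2.0/24", "10.1.3.0/24"]

print(pod_routes(before))  # []
print(pod_routes(after))   # ['10.1.0.0/24', '10.1.1.0/24', '10.1.2.0/24', '10.1.3.0/24']
```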

The Kubernetes Pod network

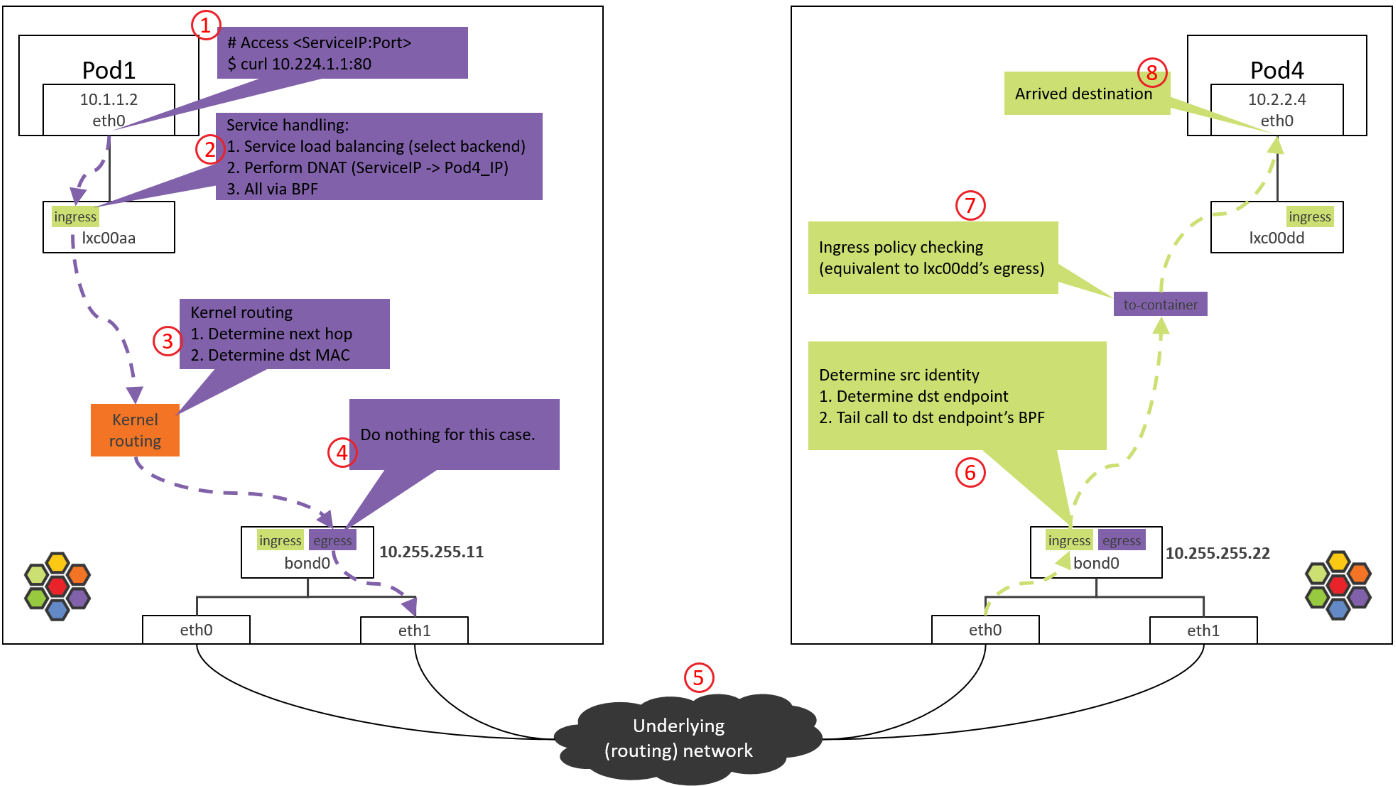

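In this environment, each Kubernetes Pod is connected through a veth pair whose two ends sit in the pod namespace and the host namespace. Once a packet passes from the pod namespace into the host namespace, it is forwarded according to the host's routing table.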

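Food for thought

What would happen if router0, tor0, tor1 and all the Kubernetes nodes were placed in a single IBGP domain (that is, every ASN is the same and every session is of type internal)?

Answer: tor0 and tor1 could not pass the routes they learn on to router0, so router0 would lack the pod routes, and the networks behind tor0 and tor1 could not reach each other.

A third technical difference between EBGP and IBGP lies in how routes are propagated. A route learned over IBGP must not be passed on to other IBGP peers. This prevents routing loops. EBGP uses AS_PATH and other attributes of the BGP protocol to filter out routes that originated from itself, but IBGP runs inside a single AS, where AS_PATH is not modified and so cannot reveal a loop. IBGP therefore simply does not forward routes learned from other IBGP peers.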
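The effect of this rule on the lab can be shown with a small simulation. It is a deliberately simplified model under stated assumptions: each speaker keeps only the first copy of a route it hears, a route learned over IBGP is never re-advertised over IBGP, and route reflectors, confederations and best-path selection are ignored. The function and variable names are made up for illustration.

```python
from collections import deque


def speakers_learning(origin, sessions):
    """Return the set of speakers that end up with the route originated by origin."""
    neighbors = {}
    for (a, b), kind in sessions.items():
        neighbors.setdefault(a, []).append((b, kind))
        neighbors.setdefault(b, []).append((a, kind))

    how_learned = {origin: "local"}
    queue = deque([origin])
    while queue:
        speaker = queue.popleft()
        for peer, kind in neighbors.get(speaker, []):
            if peer in how_learned:
                continue  # the peer already holds the route
            if how_learned[speaker] == "ibgp" and kind == "ibgp":
                continue  # IBGP-learned routes are not passed to IBGP peers
            how_learned[peer] = kind
            queue.append(peer)
    return set(how_learned)


links = [("worker", "tor0"), ("tor0", "router0"), ("router0", "tor1"), ("tor1", "worker2")]

# The article's design: IBGP between node and ToR, EBGP between ToR and router0.
mixed = dict(zip(links, ["ibgp", "ebgp", "ebgp", "ibgp"]))
# The thought experiment: one AS, every session is IBGP.
all_ibgp = {link: "ibgp" for link in links}

print(sorted(speakers_learning("worker", mixed)))
print(sorted(speakers_learning("worker", all_ibgp)))
```

In the mixed design the pod route originated by worker reaches every speaker. In the all-IBGP case it stops at tor0, which is why router0 would be missing the pod routes.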

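Because routes cannot be forwarded, every IBGP router must peer with every other one, forming a full mesh. A full mesh of n routers needs n*(n-1)/2 sessions, so the number of sessions grows quickly as routers are added, which makes configuration and management harder.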
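To make the growth concrete, the following sketch counts full-mesh sessions, counting each session between two routers once. The figure of 7 speakers corresponds to this lab (three FRR routers plus four Kubernetes nodes); the other sizes are arbitrary examples.

```python
def full_mesh_sessions(n):
    """Number of sessions when each of n routers peers with every other one."""
    return n * (n - 1) // 2


for n in (3, 7, 50):
    print(n, "routers ->", full_mesh_sessions(n), "sessions")
# 3 routers -> 3 sessions
# 7 routers -> 21 sessions
# 50 routers -> 1225 sessions
```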


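Tips:

- Basic BGP debugging: first use show bgp summary to check whether the local node has negotiated sessions with its neighbors, then use show bgp ipv4 wide to view the routes it has learned.
- You can also use show bgp neighbor to check neighbor state, show bgp peer-group to view peer group information, and show bgp nexthop to view the next-hop table.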

This article comes from cnblogs, author: charlieroro. When reposting, please cite the original link: https://www.cnblogs.com/charlieroro/p/16712641.html