Installing and Deploying a Kubernetes (k8s) Cluster on CentOS 7

1. System Environment

| Server OS version | Docker version | CPU architecture |
|---|---|---|
| CentOS Linux release 7.4.1708 (Core) | Docker version 20.10.12 | x86_64 |

2. Introduction

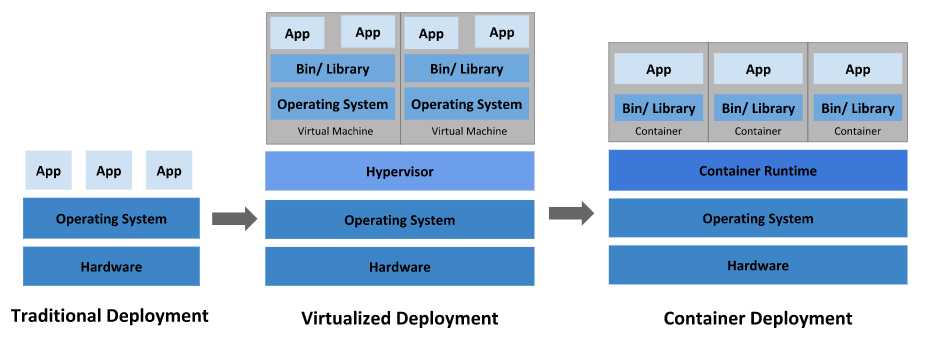

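The figure above shows how software deployment has evolved through three eras: traditional deployment, virtualized deployment, and container deployment.

Traditional deployment era:

Early on, organizations ran applications on physical servers. There was no way to limit the resources that applications on a physical server could use, and this caused resource allocation problems. For example, if several applications ran on the same physical server, one application could take up most of the resources, and the other applications would underperform as a result. One solution was to run each application on its own physical server. However, resources left unused by a lightly loaded application could not be given to other applications, and maintaining many physical servers was expensive.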

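Virtualized deployment era:

Virtualization was introduced as a solution. It lets you run multiple virtual machines (VMs) on a single physical server's CPU. Virtualization isolates applications from each other in separate VMs and provides a degree of security, because one application's information cannot be freely accessed by another application.

Virtualization makes better use of a physical server's resources. It also offers better scalability, because applications can be added or updated easily, and it lowers hardware costs, among other benefits. With virtualization, you can present a set of physical resources as a cluster of disposable virtual machines.

Each VM is a complete machine that runs all components, including its own operating system, on top of virtualized hardware.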

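Container deployment era:

Containers are similar to VMs, but their isolation is more relaxed, which lets containers share the operating system (OS). For this reason, containers are considered lighter-weight than VMs. Like a VM, each container has its own filesystem, share of CPU, memory, process space, and more. Because containers are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.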

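Containers have become popular because they offer many benefits, for example:

- Agile application creation and deployment: container images are easier and more efficient to create than VM images.

- Continuous development, integration, and deployment: reliable and frequent container image builds and deployments, with quick and easy rollbacks (thanks to image immutability).

- Separation of Dev and Ops concerns: application container images are created at build/release time rather than at deployment time, which decouples applications from infrastructure.

- Observability: surfaces not only OS-level information and metrics, but also application health and other signals.

- Environmental consistency across development, testing, and production: an application runs the same on a laptop as it does in the cloud.

- Portability across clouds and OS distributions: runs on Ubuntu, RHEL, CoreOS, on-premises, Google Kubernetes Engine, and anywhere else.

- Application-centric management: raises the level of abstraction from running an OS on virtual hardware to running an application on an OS using logical resources.

- Loosely coupled, distributed, elastic, liberated microservices: applications are broken into smaller, independent pieces that can be deployed and managed dynamically, rather than running as a monolith on one large single-purpose machine.

- Resource isolation: predictable application performance.

- Resource utilization: high efficiency and density.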

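3. Kubernetes

3.1 Overview

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services. It facilitates both declarative configuration and automation. Kubernetes has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.

The name Kubernetes comes from Greek and means "helmsman" or "pilot". The abbreviation k8s comes from the eight letters between the "k" and the "s". Google open-sourced the Kubernetes project in 2014. Kubernetes builds on more than a decade of Google's experience running production workloads at scale, combined with the best ideas and practices from the community.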

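Kubernetes provides you with the following:

- Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

- Storage orchestration: Kubernetes lets you automatically mount a storage system of your choice, such as local storage or a public cloud provider.

- Automated rollouts and rollbacks: you describe the desired state of your deployed containers, and Kubernetes changes the actual state to the desired state at a controlled rate. For example, you can automate Kubernetes to create new containers for your deployment, remove existing containers, and move all their resources to the new containers.

- Automatic bin packing: you give Kubernetes a cluster of nodes on which to run containerized tasks, and you tell it how much CPU and memory (RAM) each container needs. Kubernetes then schedules the containers onto your nodes to make the best use of your resources.

- Self-healing: Kubernetes restarts containers that fail, replaces containers, and kills containers that don't respond to your user-defined health checks. It also doesn't advertise containers to clients until they are ready to serve.

- Secret and configuration management: Kubernetes lets you store and manage sensitive information such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding container images and without exposing secrets in your stack configuration.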
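The following minimal sketch makes the "desired state" idea above concrete. The function name deployment_manifest, the nginx image, container port 80, and the resource numbers are illustrative assumptions, not part of this article's setup. The function prints a Deployment in which each field maps to one of the features listed above:

- the replicas field is the desired state;
- resources.requests is what the scheduler uses for bin packing;
- livenessProbe lets the kubelet restart an unhealthy container.

```bash
#!/usr/bin/env bash
# Hypothetical helper (illustration only): print a minimal Deployment manifest.
#   replicas           -> desired number of Pods (rollouts, rollbacks, self-healing)
#   resources.requests -> CPU/memory the scheduler uses for bin packing
#   livenessProbe      -> kubelet restarts the container if the check fails
# Assumes the image serves HTTP on port 80 (true for nginx).
deployment_manifest() {
  local name="$1" image="$2" replicas="$3"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: ${image}
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: 100m
            memory: 64Mi
        livenessProbe:
          httpGet:
            path: /
            port: 80
EOF
}
```

On a working cluster, you could apply it with `deployment_manifest web nginx:1.21 3 | kubectl apply -f -`. Re-applying it with a different replicas argument asks Kubernetes to converge on the new desired state.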

3.2 Kubernetes Components

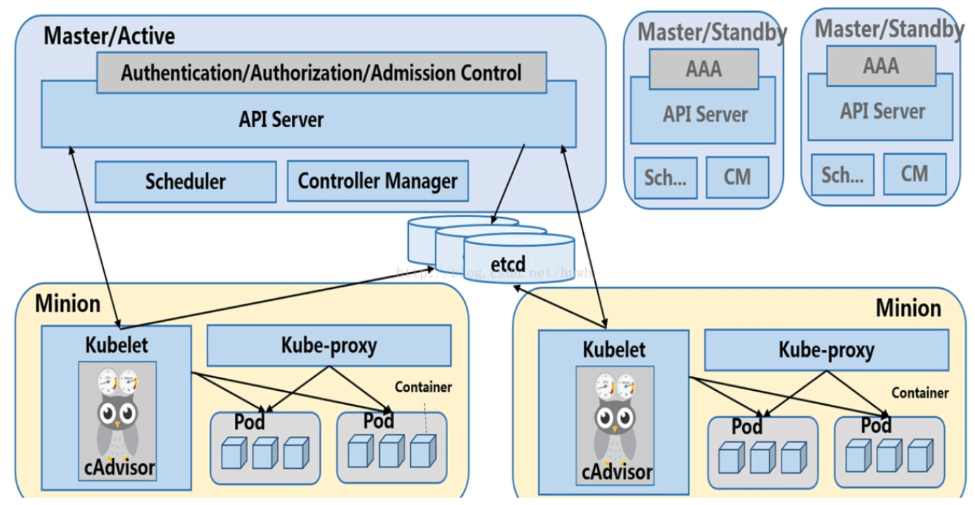

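The figure above shows the Kubernetes cluster architecture.

Kubernetes has two node types: master nodes and worker nodes. The master node is also called the control plane. The control plane consists of several components. These components make global decisions about the cluster (such as scheduling), and they detect and respond to cluster events (for example, starting a new Pod when a Deployment's replicas field is not satisfied).

Control plane components can run on any node in the cluster. For simplicity, however, setup scripts typically start all control plane components on the same machine and do not run user containers on that machine.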

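3.2.1 Control Plane Components

The control plane components are:

- kube-apiserver: the API server is the control plane component that exposes the Kubernetes API and handles incoming requests. The API server is the front end of the Kubernetes control plane.

The main implementation of a Kubernetes API server is kube-apiserver. kube-apiserver is designed to scale horizontally, that is, it scales by deploying more instances. You can run several instances of kube-apiserver and balance traffic between them.

- etcd: etcd is a consistent and highly available key-value store used as the backing store for all Kubernetes cluster data. Make sure you have a backup plan for your cluster's etcd database.

- kube-scheduler: kube-scheduler is the control plane component that watches for newly created Pods with no assigned node and selects a node for them to run on. Scheduling decisions take into account the following factors:
  - individual and collective resource requirements;
  - hardware, software, and policy constraints;
  - affinity and anti-affinity specifications;
  - data locality;
  - inter-workload interference;
  - deadlines.

- kube-controller-manager: kube-controller-manager is the control plane component that runs controller processes. Logically, each controller is a separate process. To reduce complexity, however, they are all compiled into a single binary and run in a single process.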

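These controllers include:

Node Controller: notices and responds when nodes go down.

Job Controller: watches for Job objects that represent one-off tasks, then creates Pods to run those tasks to completion.

Endpoints Controller: populates Endpoints objects (that is, joins Services and Pods).

Service Account & Token Controllers: create default accounts and API access tokens for new namespaces.

- cloud-controller-manager: a Kubernetes control plane component that embeds cloud-specific control logic. The cloud controller manager lets you link your cluster to your cloud provider's API. It also separates the components that interact with that cloud platform from the components that only interact with your cluster. cloud-controller-manager runs only controllers that are specific to your cloud provider. If you run Kubernetes on your own premises, or in a learning environment on your own PC, the cluster does not need a cloud controller manager.

Like kube-controller-manager, cloud-controller-manager combines several logically independent control loops into a single binary that you run as a single process. You can scale it horizontally (run more than one copy) to improve performance or to help tolerate failures.

The following controllers can have cloud provider dependencies:

Node Controller: after a node stops responding, checks the cloud provider to determine whether the node has been deleted.

Route Controller: sets up routes in the underlying cloud infrastructure.

Service Controller: creates, updates, and deletes cloud provider load balancers.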

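3.2.2 Node Components

Node components run on every node, maintaining running Pods and providing the Kubernetes runtime environment.

The node components are:

- kubelet: an agent that runs on each node in the cluster and makes sure that containers are running in a Pod. The kubelet takes a set of PodSpecs provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy. The kubelet doesn't manage containers that were not created by Kubernetes.

- kube-proxy: a network proxy that runs on each node in the cluster and implements part of the Kubernetes Service concept. kube-proxy maintains network rules on nodes. These rules allow network sessions inside or outside the cluster to communicate with your Pods. kube-proxy uses the operating system's packet filtering layer if one is available; otherwise, it forwards the traffic itself.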

4. Installing and Deploying the Kubernetes Cluster

4.1 Environment

Cluster layout: k8scloude1 is the master node, and k8scloude2 and k8scloude3 are the worker nodes.

| Server | OS version | CPU architecture | Running components | Role |
|---|---|---|---|---|
| k8scloude1/192.168.110.130 | CentOS Linux release 7.4.1708 (Core) | x86_64 | docker, kube-apiserver, etcd, kube-scheduler, kube-controller-manager, kubelet, kube-proxy, coredns, calico | k8s master node |
| k8scloude2/192.168.110.129 | CentOS Linux release 7.4.1708 (Core) | x86_64 | docker, kubelet, kube-proxy, calico | k8s worker node |
| k8scloude3/192.168.110.128 | CentOS Linux release 7.4.1708 (Core) | x86_64 | docker, kubelet, kube-proxy, calico | k8s worker node |

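4.2 Configuring the Base Environment on the Nodes

Start by configuring the base environment. All three nodes need the same configuration; k8scloude1 is used as the example here.

First, set the hostname: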

[root@localhost ~]# vim /etc/hostname

[root@localhost ~]# cat /etc/hostname

k8scloude1

Set the node's IP address (optional):

[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens32

[root@k8scloude1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens32

TYPE=Ethernet

BOOTPROTO=static

NAME=ens32

DEVICE=ens32

ONBOOT=yes

DNS1=114.114.114.114

IPADDR=192.168.110.130

NETMASK=255.255.255.0

GATEWAY=192.168.110.2

ZONE=trusted

Restart the network:

[root@localhost ~]# service network restart

Restarting network (via systemctl): [ 確定 ]

[root@localhost ~]# systemctl restart NetworkManager

After a reboot, the hostname becomes k8scloude1. Check that the machine can reach the internet:

[root@k8scloude1 ~]# ping www.baidu.com

PING www.a.shifen.com (14.215.177.38) 56(84) bytes of data.

64 bytes from 14.215.177.38 (14.215.177.38): icmp_seq=1 ttl=128 time=25.9 ms

64 bytes from 14.215.177.38 (14.215.177.38): icmp_seq=2 ttl=128 time=26.7 ms

64 bytes from 14.215.177.38 (14.215.177.38): icmp_seq=3 ttl=128 time=26.4 ms

^C

--- www.a.shifen.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2004ms

rtt min/avg/max/mdev = 25.960/26.393/26.724/0.320 ms

Map the IP addresses to hostnames:

[root@k8scloude1 ~]# vim /etc/hosts

[root@k8scloude1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.110.130 k8scloude1

192.168.110.129 k8scloude2

192.168.110.128 k8scloude3

#Copy /etc/hosts to the other two nodes

[root@k8scloude1 ~]# scp /etc/hosts 192.168.110.129:/etc/hosts

[root@k8scloude1 ~]# scp /etc/hosts 192.168.110.128:/etc/hosts
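The hosts entries above were typed by hand in vim. For a repeatable step, a minimal sketch like the following appends only the entries that are missing, so running it twice does no harm. The helper add_hosts_entries and its name=IP argument format are hypothetical, chosen for this example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" lines to a hosts file,
# skipping hostnames that are already listed, so re-running it is safe.
# Arguments: the hosts file, then one or more name=ip pairs.
add_hosts_entries() {
  local hosts_file="$1"; shift
  local entry name ip
  for entry in "$@"; do
    name="${entry%%=*}"   # text before the first "="
    ip="${entry#*=}"      # text after the first "="
    # -q quiet, -w match a whole word, -F fixed string (no regex)
    if ! grep -qwF -- "$name" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

For example, run `add_hosts_entries /etc/hosts k8scloude1=192.168.110.130 k8scloude2=192.168.110.129 k8scloude3=192.168.110.128`, then copy the file to the other nodes with scp as above.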

#If all three hostnames respond to ping, the mapping works

[root@k8scloude1 ~]# ping k8scloude1

PING k8scloude1 (192.168.110.130) 56(84) bytes of data.

64 bytes from k8scloude1 (192.168.110.130): icmp_seq=1 ttl=64 time=0.044 ms

64 bytes from k8scloude1 (192.168.110.130): icmp_seq=2 ttl=64 time=0.053 ms

^C

--- k8scloude1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.044/0.048/0.053/0.008 ms

[root@k8scloude1 ~]# ping k8scloude2

PING k8scloude2 (192.168.110.129) 56(84) bytes of data.

64 bytes from k8scloude2 (192.168.110.129): icmp_seq=1 ttl=64 time=0.297 ms

64 bytes from k8scloude2 (192.168.110.129): icmp_seq=2 ttl=64 time=1.05 ms

64 bytes from k8scloude2 (192.168.110.129): icmp_seq=3 ttl=64 time=0.254 ms

^C

--- k8scloude2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2001ms

rtt min/avg/max/mdev = 0.254/0.536/1.057/0.368 ms

[root@k8scloude1 ~]# ping k8scloude3

PING k8scloude3 (192.168.110.128) 56(84) bytes of data.

64 bytes from k8scloude3 (192.168.110.128): icmp_seq=1 ttl=64 time=0.285 ms

64 bytes from k8scloude3 (192.168.110.128): icmp_seq=2 ttl=64 time=0.513 ms

64 bytes from k8scloude3 (192.168.110.128): icmp_seq=3 ttl=64 time=0.390 ms

^C

--- k8scloude3 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2002ms

rtt min/avg/max/mdev = 0.285/0.396/0.513/0.093 ms

Disable console screen blanking (optional):

[root@k8scloude1 ~]# setterm -blank 0

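Replace the yum repositories with new repo files (note that the command first deletes every file in /etc/yum.repos.d/):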

[root@k8scloude1 ~]# rm -rf /etc/yum.repos.d/* ;wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/

--2022-01-07 17:07:28-- ftp://ftp.rhce.cc/k8s/*

=> 「/etc/yum.repos.d/.listing」

正在解析主機 ftp.rhce.cc (ftp.rhce.cc)... 101.37.152.41

正在連線 ftp.rhce.cc (ftp.rhce.cc)|101.37.152.41|:21... 已連線。

正在以 anonymous 登入 ... 登入成功!

==> SYST ... 完成。 ==> PWD ... 完成。

......

100%[=======================================================================================================================================================================>] 276 --.-K/s 用時 0s

2022-01-07 17:07:29 (81.9 MB/s) - 「/etc/yum.repos.d/k8s.repo」 已儲存 [276]

#The new repo files are:

[root@k8scloude1 ~]# ls /etc/yum.repos.d/

CentOS-Base.repo docker-ce.repo epel.repo k8s.repo
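The command above uses rm -rf, which permanently deletes every existing repo file. In case you want the original repo files back later, a gentler variant moves them into a timestamped backup subdirectory instead. This is a sketch; backup_repo_files is a hypothetical name. yum reads only the *.repo files directly inside /etc/yum.repos.d, so files in the backup subdirectory are no longer used.

```bash
#!/usr/bin/env bash
# Hypothetical helper: move every *.repo file in a directory into a
# timestamped backup subdirectory instead of deleting it.
# Prints the path of the backup directory.
backup_repo_files() {
  local repo_dir="$1"
  local backup_dir="${repo_dir}/backup-$(date +%Y%m%d%H%M%S)"
  local f
  mkdir -p "$backup_dir"
  # nullglob: if there are no *.repo files, the loop simply does nothing
  shopt -s nullglob
  for f in "$repo_dir"/*.repo; do
    mv -- "$f" "$backup_dir"/
  done
  shopt -u nullglob
  echo "$backup_dir"
}
```

Usage: `backup_repo_files /etc/yum.repos.d && wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/`.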

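Disable SELinux by setting SELINUX=disabled in /etc/selinux/config. This takes effect after a reboot; setenforce 0 switches a running system that is still enforcing to permissive mode: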

[root@k8scloude1 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@k8scloude1 ~]# getenforce

Disabled

[root@k8scloude1 ~]# setenforce 0

setenforce: SELinux is disabled
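The transcript shows the config file after it was already edited. One way to make that edit without opening vim is the sed sketch below. The function name and its file-path argument are assumptions, added so the edit can be tried on a copy first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Only the active "SELINUX=" line changes: the pattern requires "=" right
# after "SELINUX", so "SELINUXTYPE=" and the "# SELINUX=" comments are untouched.
disable_selinux_config() {
  local config="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config"
}
```

On a node, this would be `disable_selinux_config /etc/selinux/config` followed by `setenforce 0`. As in the transcript, setenforce only prints a message if SELinux is already disabled.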

Set the default firewalld zone to trusted so that all traffic is accepted:

[root@k8scloude1 ~]# firewall-cmd --set-default-zone=trusted

Warning: ZONE_ALREADY_SET: trusted

success

[root@k8scloude1 ~]# firewall-cmd --get-default-zone

trusted

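The Linux swapoff command disables swap areas. swapoff -a turns swap off on the running system, and deleting the swap entry from /etc/fstab keeps it off after a reboot.

Note: if swap is not disabled, kubeadm init fails with the error "[ERROR Swap]: running with swap on is not supported. Please disable swap".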

[root@k8scloude1 ~]# swapoff -a ;sed -i '/swap/d' /etc/fstab

[root@k8scloude1 ~]# cat /etc/fstab

# /etc/fstab

# Created by anaconda on Thu Oct 18 23:09:54 2018

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

UUID=9875fa5e-2eea-4fcc-a83e-5528c7d0f6a5 / xfs defaults 0 0
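sed -i '/swap/d' deletes every line containing the string swap anywhere, including comments. A slightly more conservative sketch comments out only active entries that have a whitespace-delimited field equal to swap, so the line can be restored later. comment_out_swap is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab file.
# A line is changed only if it does not already start with "#" and contains
# a whitespace-delimited field equal to "swap" (mount point or fs type).
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Usage: `swapoff -a && comment_out_swap /etc/fstab`. Afterwards, `free -m` should report 0 total swap.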

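4.3 Installing and Configuring Docker on the Nodes

Kubernetes is a container orchestration tool and needs a container runtime, so install Docker on all three nodes; again, k8scloude1 is used as the example.

Install Docker: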

[root@k8scloude1 ~]# yum -y install docker-ce

已載入外掛:fastestmirror

base | 3.6 kB 00:00:00

......

已安裝:

docker-ce.x86_64 3:20.10.12-3.el7

......

完畢!

Enable Docker at boot and start it now:

[root@k8scloude1 ~]# systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@k8scloude1 ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since 六 2022-01-08 22:10:38 CST; 18s ago

Docs: https://docs.docker.com

Main PID: 1377 (dockerd)

Memory: 30.8M

CGroup: /system.slice/docker.service

└─1377 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Check the Docker version:

[root@k8scloude1 ~]# docker --version

Docker version 20.10.12, build e91ed57

Configure a Docker registry mirror to speed up image pulls:

[root@k8scloude1 ~]# cat > /etc/docker/daemon.json <<EOF

> {

> "registry-mirrors": ["https://frz7i079.mirror.aliyuncs.com"]

> }

> EOF

[root@k8scloude1 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://frz7i079.mirror.aliyuncs.com"]

}

Restart Docker:

[root@k8scloude1 ~]# systemctl restart docker

[root@k8scloude1 ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since 六 2022-01-08 22:17:45 CST; 8s ago

Docs: https://docs.docker.com

Main PID: 1529 (dockerd)

Memory: 32.4M

CGroup: /system.slice/docker.service

└─1529 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Make bridged traffic pass through iptables/ip6tables, and enable IP forwarding:

[root@k8scloude1 ~]# cat <<EOF> /etc/sysctl.d/k8s.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

#Apply the settings

[root@k8scloude1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1
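The net.bridge.bridge-nf-call-* keys exist only while the br_netfilter kernel module is loaded. If it is not loaded, sysctl -p fails with a "No such file or directory" error for those keys.

The sketch below follows the file layout of the upstream kubeadm installation guide. It writes a module list so that systemd loads br_netfilter at boot, plus the same sysctl settings as above. The function name and the root-directory argument are assumptions, added so it can be tried outside /etc.

```bash
#!/usr/bin/env bash
# Hypothetical helper: persist the kernel settings Kubernetes networking needs.
# root defaults to /etc; pass another directory to try it out safely.
write_k8s_kernel_conf() {
  local root="${1:-/etc}"
  mkdir -p "$root/modules-load.d" "$root/sysctl.d"
  # Read by systemd-modules-load at boot, so br_netfilter is loaded automatically.
  printf 'br_netfilter\n' > "$root/modules-load.d/k8s.conf"
  # Same settings as the /etc/sysctl.d/k8s.conf shown above.
  cat > "$root/sysctl.d/k8s.conf" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a real node: `write_k8s_kernel_conf /etc && modprobe br_netfilter && sysctl --system`. `lsmod | grep br_netfilter` confirms that the module is loaded.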

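4.4 Installing kubelet, kubeadm, and kubectl

Install kubelet, kubeadm, and kubectl on all three nodes:

- kubelet is the Kubernetes node agent; it runs on every node.

- kubeadm is a tool for quickly bootstrapping a Kubernetes (k8s) cluster. It provides the kubeadm init and kubeadm join commands for creating a cluster, and it performs the actions needed to get a minimum viable cluster up and running.

- kubectl is the Kubernetes command-line tool. With kubectl you can manage the cluster itself and install and deploy containerized applications on it.

#--disableexcludes=kubernetes: ignore any exclude= list defined for the repo whose ID is kubernetes, so these packages are not filtered out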

[root@k8scloude1 ~]# yum -y install kubelet-1.21.0-0 kubeadm-1.21.0-0 kubectl-1.21.0-0 --disableexcludes=kubernetes

已載入外掛:fastestmirror

Loading mirror speeds from cached hostfile

正在解決依賴關係

--> 正在檢查事務

---> 軟體包 kubeadm.x86_64.0.1.21.0-0 將被 安裝

......

已安裝:

kubeadm.x86_64 0:1.21.0-0 kubectl.x86_64 0:1.21.0-0 kubelet.x86_64 0:1.21.0-0

......

完畢!

Enable kubelet at boot and start it now:

[root@k8scloude1 ~]# systemctl enable kubelet --now

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

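#kubelet cannot stay running yet: it restarts every few seconds until kubeadm init or kubeadm join provides its configuration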

[root@k8scloude1 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since 六 2022-01-08 22:35:33 CST; 3s ago

Docs: https://kubernetes.io/docs/

Process: 1722 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=1/FAILURE)

Main PID: 1722 (code=exited, status=1/FAILURE)

1月 08 22:35:33 k8scloude1 systemd[1]: kubelet.service: main process exited, code=exited, status=1/FAILURE

1月 08 22:35:33 k8scloude1 systemd[1]: Unit kubelet.service entered failed state.

1月 08 22:35:33 k8scloude1 systemd[1]: kubelet.service failed.

4.5 Initializing the Cluster with kubeadm

Check which kubeadm versions are available:

[root@k8scloude2 ~]# yum list --showduplicates kubeadm --disableexcludes=kubernetes

已載入外掛:fastestmirror

Loading mirror speeds from cached hostfile

已安裝的軟體包

kubeadm.x86_64 1.21.0-0 @kubernetes

可安裝的軟體包

kubeadm.x86_64 1.6.0-0 kubernetes

kubeadm.x86_64 1.6.1-0 kubernetes

kubeadm.x86_64 1.6.2-0 kubernetes

......

kubeadm.x86_64 1.23.0-0 kubernetes

kubeadm.x86_64 1.23.1-0

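kubeadm init: initialize the Kubernetes control-plane node on the master node, k8scloude1.

#Run kubeadm init

#--image-repository registry.aliyuncs.com/google_containers: use the Aliyun image registry; otherwise some images cannot be pulled

#--kubernetes-version=v1.21.0: the Kubernetes version to install

#--pod-network-cidr=10.244.0.0/16: the Pod network CIDR

#The first attempt fails as shown below: registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0 cannot be pulled. Starting with v1.21, kubeadm refers to the CoreDNS image as coredns/coredns, and the Aliyun registry does not provide that path. Pulling the coredns image manually works around this.

#CoreDNS is an open-source DNS server written in Go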

[root@k8scloude1 ~]# kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.0 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.21.0

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0: output: Error response from daemon: pull access denied for registry.aliyuncs.com/google_containers/coredns/coredns, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

Pull the coredns image manually:

[root@k8scloude1 ~]# docker pull coredns/coredns:1.8.0

1.8.0: Pulling from coredns/coredns

c6568d217a00: Pull complete

5984b6d55edf: Pull complete

Digest: sha256:cc8fb77bc2a0541949d1d9320a641b82fd392b0d3d8145469ca4709ae769980e

Status: Downloaded newer image for coredns/coredns:1.8.0

docker.io/coredns/coredns:1.8.0

Retag the coredns image with the name kubeadm expects; otherwise it is not recognized:

[root@k8scloude1 ~]# docker tag coredns/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

#Remove the coredns/coredns:1.8.0 tag (the image remains available under the new tag)

[root@k8scloude1 ~]# docker rmi coredns/coredns:1.8.0

k8scloude1 now has all 7 images; kubeadm init cannot succeed if even one of them is missing:

[root@k8scloude1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 9 months ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 9 months ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 9 months ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 9 months ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 12 months ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 14 months ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 16 months ago 253MB
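A single missing image makes kubeadm init fail, so it can help to compare the images kubeadm expects with what Docker already has before retrying. kubeadm config images list accepts the same --image-repository and --kubernetes-version flags as kubeadm init. The comparison helper missing_images below is a hypothetical sketch.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the image references listed in file $1
# (expected images) that do not appear as an exact line in file $2
# (images present locally). Prints nothing when every image is present.
missing_images() {
  local expected="$1" present="$2"
  # -v invert match, -x whole line, -F fixed strings, -f read patterns from a file
  grep -vxF -f "$present" "$expected" || true
}
```

Usage on the master node: `missing_images <(kubeadm config images list --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.0) <(docker images --format '{{.Repository}}:{{.Tag}}')`. Empty output means kubeadm init will not need to pull anything.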

Run kubeadm init again:

[root@k8scloude1 ~]# kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.0 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.21.0

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8scloude1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.110.130]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8scloude1 localhost] and IPs [192.168.110.130 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8scloude1 localhost] and IPs [192.168.110.130 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 65.002757 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8scloude1 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8scloude1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: nta3x4.3e54l2dqtmj9tlry

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.110.130:6443 --token nta3x4.3e54l2dqtmj9tlry \

--discovery-token-ca-cert-hash sha256:9add1314177ac5660d9674dab8c13aa996520028514246c4cd103cf08a211cc8

Create the directory and config file as the output instructs:

[root@k8scloude1 ~]# mkdir -p $HOME/.kube

[root@k8scloude1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8scloude1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

The master node is now listed:

[root@k8scloude1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8scloude1 NotReady control-plane,master 5m54s v1.21.0

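4.6 Adding the Worker Nodes to the Cluster

Next, join the other two worker nodes to the cluster.

kubeadm init printed the following command: kubeadm join 192.168.110.130:6443 --token nta3x4.3e54l2dqtmj9tlry --discovery-token-ca-cert-hash sha256:9add1314177ac5660d9674dab8c13aa996520028514246c4cd103cf08a211cc8. Running it on each of the other two worker nodes joins that node to the cluster.

If you no longer have the join token (bootstrap tokens expire after 24 hours by default), generate a new token and print the full join command with: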

[root@k8scloude1 ~]# kubeadm token create --print-join-command

kubeadm join 192.168.110.130:6443 --token 8e3haz.m1wrpuf357g72k1u --discovery-token-ca-cert-hash sha256:9add1314177ac5660d9674dab8c13aa996520028514246c4cd103cf08a211cc8
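The new command above carries a new token, but the --discovery-token-ca-cert-hash value is unchanged, because it depends only on the cluster CA certificate. The kubeadm join reference documentation shows how to recompute this hash with openssl. The sketch below wraps that pipeline in a hypothetical function, ca_cert_hash. It assumes an RSA CA key, which is what kubeadm generates by default.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the openssl pipeline from the kubeadm join docs:
# SHA-256 over the DER-encoded public key of the cluster CA certificate.
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  hex="$(openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')"   # keep only the hex digest after the last space
  echo "sha256:${hex}"
}
```

Run without arguments on k8scloude1, it should print the same sha256:9add1314... value that appears in the join commands above.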

Run the join command on the other two nodes:

[root@k8scloude2 ~]# kubeadm join 192.168.110.130:6443 --token 8e3haz.m1wrpuf357g72k1u --discovery-token-ca-cert-hash sha256:9add1314177ac5660d9674dab8c13aa996520028514246c4cd103cf08a211cc8

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8scloude3 ~]# kubeadm join 192.168.110.130:6443 --token 8e3haz.m1wrpuf357g72k1u --discovery-token-ca-cert-hash sha256:9add1314177ac5660d9674dab8c13aa996520028514246c4cd103cf08a211cc8

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check the node status on k8scloude1; both worker nodes have joined the cluster:

[root@k8scloude1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8scloude1 NotReady control-plane,master 8m43s v1.21.0

k8scloude2 NotReady <none> 28s v1.21.0

k8scloude3 NotReady <none> 25s v1.21.0

After joining the cluster, each worker node has two new images:

[root@k8scloude2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 9 months ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 12 months ago 683kB

[root@k8scloude3 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 9 months ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 12 months ago 683kB

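4.7 Deploying the Calico CNI Network Plugin

The cluster now has one master node and two worker nodes, but all three are NotReady because no CNI network plugin is installed yet. A CNI plugin is required for Pods on different nodes to communicate. The most common CNI plugins are Calico and Flannel. The key difference is that Flannel does not support network policies, while Calico does. Because Kubernetes NetworkPolicy will be configured later, this article uses Calico.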

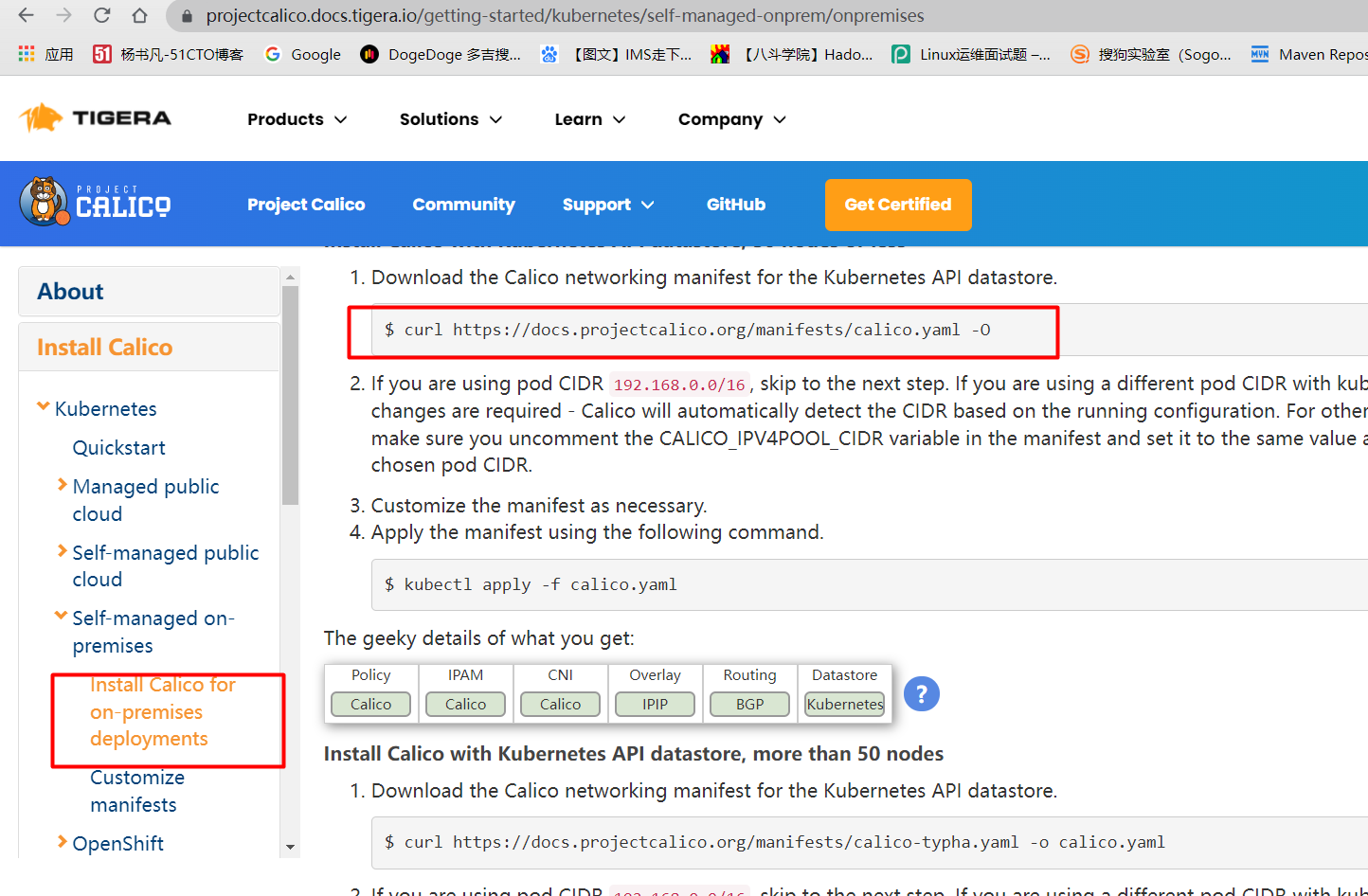

Download calico.yaml from the official site:

Official site: https://projectcalico.docs.tigera.io/about/about-calico

Search for calico.yaml in the site's search box.

Find the command that downloads calico.yaml.

Download calico.yaml:

[root@k8scloude1 ~]# curl https://docs.projectcalico.org/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 212k 100 212k 0 0 44222 0 0:00:04 0:00:04 --:--:-- 55704

[root@k8scloude1 ~]# ls

calico.yaml

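List the Calico images that the manifest needs. All four images must be pulled on every node; the cni image simply appears twice in the output. k8scloude1 is used as the example: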

[root@k8scloude1 ~]# grep image calico.yaml

image: docker.io/calico/cni:v3.21.2

image: docker.io/calico/cni:v3.21.2

image: docker.io/calico/pod2daemon-flexvol:v3.21.2

image: docker.io/calico/node:v3.21.2

image: docker.io/calico/kube-controllers:v3.21.2

[root@k8scloude1 ~]# docker pull docker.io/calico/cni:v3.21.2

v3.21.2: Pulling from calico/cni

Digest: sha256:ce618d26e7976c40958ea92d40666946d5c997cd2f084b6a794916dc9e28061b

Status: Image is up to date for calico/cni:v3.21.2

docker.io/calico/cni:v3.21.2

[root@k8scloude1 ~]# docker pull docker.io/calico/pod2daemon-flexvol:v3.21.2

v3.21.2: Pulling from calico/pod2daemon-flexvol

Digest: sha256:b034c7c886e697735a5f24e52940d6d19e5f0cb5bf7caafd92ddbc7745cfd01e

Status: Image is up to date for calico/pod2daemon-flexvol:v3.21.2

docker.io/calico/pod2daemon-flexvol:v3.21.2

[root@k8scloude1 ~]# docker pull docker.io/calico/node:v3.21.2

v3.21.2: Pulling from calico/node

Digest: sha256:6912fe45eb85f166de65e2c56937ffb58c935187a84e794fe21e06de6322a4d0

Status: Image is up to date for calico/node:v3.21.2

docker.io/calico/node:v3.21.2

[root@k8scloude1 ~]# docker pull docker.io/calico/kube-controllers:v3.21.2

v3.21.2: Pulling from calico/kube-controllers

d6a693444ed1: Pull complete

a5399680e995: Pull complete

8f0eb4c2bcba: Pull complete

52fe18e41b06: Pull complete

2f8d3f9f1a40: Pull complete

bc94a7e3e934: Pull complete

55bf7cf53020: Pull complete

Digest: sha256:1f4fcdcd9d295342775977b574c3124530a4b8adf4782f3603a46272125f01bf

Status: Downloaded newer image for calico/kube-controllers:v3.21.2

docker.io/calico/kube-controllers:v3.21.2

#The four Calico images:

[root@k8scloude1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

calico/node v3.21.2 f1bca4d4ced2 4 weeks ago 214MB

calico/pod2daemon-flexvol v3.21.2 7778dd57e506 5 weeks ago 21.3MB

calico/cni v3.21.2 4c5c32530391 5 weeks ago 239MB

calico/kube-controllers v3.21.2 b20652406028 5 weeks ago 132MB
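Instead of running docker pull by hand for each image on each node, you can extract the image list from the manifest. The sketch below uses a hypothetical helper, manifest_images, which prints each distinct image: value in a manifest and skips commented-out lines. It also removes the duplicate cni entry that grep showed twice.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every distinct image reference in a manifest.
manifest_images() {
  awk '
    /^[ \t]*#/ { next }                 # skip YAML comment lines
    {
      for (i = 1; i < NF; i++)
        if ($i == "image:") {           # matches "image: x" and "- image: x"
          ref = $(i + 1)
          gsub(/"/, "", ref)            # drop optional double quotes
          print ref
        }
    }
  ' "$1" | LC_ALL=C sort -u
}
```

On each node that has a copy of calico.yaml: `for img in $(manifest_images calico.yaml); do docker pull "$img"; done`.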

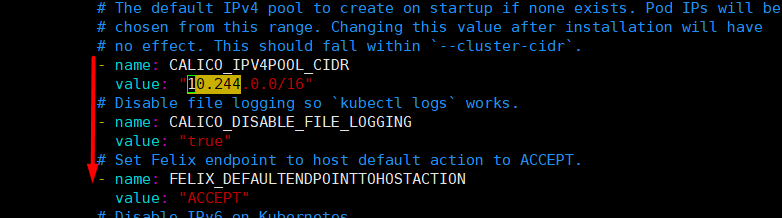

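Edit calico.yaml. The CALICO_IPV4POOL_CIDR range must match the Pod network CIDR passed to kubeadm init (10.244.0.0/16). In the downloaded manifest this entry is normally commented out, so uncomment it and align its indentation with the surrounding entries; otherwise applying the YAML fails: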

[root@k8scloude1 ~]# vim calico.yaml

[root@k8scloude1 ~]# grep -E "CALICO_IPV4POOL_CIDR|10.244" calico.yaml

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

If this is hard to follow, see the figure above, which shows the edited section of calico.yaml.
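If you prefer a non-interactive edit to vim, the sketch below uncomments the CALICO_IPV4POOL_CIDR entry and sets its value. It assumes the two lines look like the commented-out defaults in the v3.21 manifest, that is, a "# - name: ..." line followed directly by a "#   value: ..." line. set_calico_pool_cidr is a hypothetical helper, so check the result with the grep command above before applying the file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR and set its value.
# Assumes the "value:" line comes immediately after the "- name:" line.
# Removing "# " right after the indentation keeps the YAML aligned:
#   "            # - name: ..."  ->  "            - name: ..."
#   "            #   value: ..." ->  "              value: ..."
set_calico_pool_cidr() {
  local file="$1" cidr="$2"
  sed -i -E "/^[[:space:]]*#?[[:space:]]*- name: CALICO_IPV4POOL_CIDR/{
s/^([[:space:]]*)# /\\1/
n
s/^([[:space:]]*)# /\\1/
s|value: .*|value: \"${cidr}\"|
}" "$file"
}
```

Usage: `set_calico_pool_cidr calico.yaml 10.244.0.0/16`. Running it a second time leaves the file unchanged.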

Apply calico.yaml:

[root@k8scloude1 ~]# kubectl apply -f calico.yaml

configmap/calico-config unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org configured

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrole.rbac.authorization.k8s.io/calico-node unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

All three nodes are now Ready:

[root@k8scloude1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8scloude1 Ready control-plane,master 53m v1.21.0

k8scloude2 Ready <none> 45m v1.21.0

k8scloude3 Ready <none> 45m v1.21.0

4.8 Enabling Tab Completion for kubectl

Find the kubectl completion subcommand:

[root@k8scloude1 ~]# kubectl --help | grep bash

completion Output shell completion code for the specified shell (bash or zsh)

Add source <(kubectl completion bash) to /etc/profile and reload the profile:

[root@k8scloude1 ~]# cat /etc/profile | head -2

# /etc/profile

source <(kubectl completion bash)

[root@k8scloude1 ~]# source /etc/profile

kubectl commands can now be completed with the Tab key:

[root@k8scloude1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8scloude1 Ready control-plane,master 59m v1.21.0

k8scloude2 Ready <none> 51m v1.21.0

k8scloude3 Ready <none> 51m v1.21.0

#Note: the bash-completion package (bash-completion-2.1-6.el7.noarch here) must be installed; otherwise kubectl commands cannot be completed

[root@k8scloude1 ~]# rpm -qa | grep bash

bash-completion-2.1-6.el7.noarch

bash-4.2.46-30.el7.x86_64

bash-doc-4.2.46-30.el7.x86_64
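These completion steps can also be scripted. The minimal sketch below appends the line to the end of /etc/profile rather than placing it near the top as in the transcript, and adds it only once even if the script runs again. append_once is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file unless an identical line exists.
# If the file does not exist yet, grep fails quietly and the line is appended.
append_once() {
  local file="$1" line="$2"
  # -q quiet, -x whole line, -F fixed string
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

Usage: `yum -y install bash-completion`, then `append_once /etc/profile 'source <(kubectl completion bash)'`, then `source /etc/profile`.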

The Kubernetes (k8s) cluster deployment is now complete!