Go Deadlocks: When Channel Meets Mutex

2022-07-13 12:00:28

Background

When you hold a mutex around a for loop and write to a buffered channel inside that loop, watch out for deadlocks!

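Recently, while testing our WebSocket long-connection gateway, we ran into the service hanging roughly once a week. Because not every goroutine was blocked, the Go runtime's deadlock detection never fired; and because the HTTP health check listens on a separate port, Kubernetes saw nothing wrong and never restarted the service.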

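After some investigation, I confirmed that the cause was a deadlock created by mixing a buffered channel with a mutex (summarized at the end of this post). The details follow.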

Deadlock symptoms

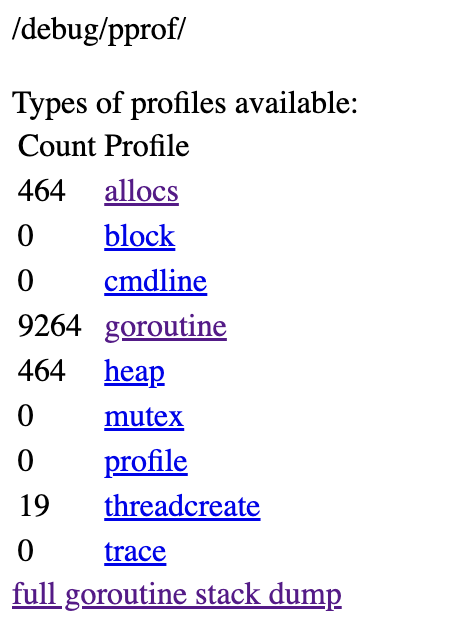

We use the gin framework with a pprof wrapper component already wired in, so outside production we can conveniently inspect Go runtime information over HTTP.

Sure enough, opening the pprof pages revealed a large number of leaked goroutines.

Opening the full goroutine stack dump showed a large number of goroutines blocked in what looked like deadlock waits. Among them:

- Blocked in semacquire: 9261/2 goroutines

- Blocked in chan send: 9

So where was the problem?

Inspiration

wavded (https://wavded.com/post/golang-deadlockish/) described a similar problem. Here is part of that post.

1) Wait your turn

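In one of the supporting services for our app, each group has its own Room, so to speak. We lock the members list before broadcasting a message to the room to avoid any data races or possible crashes, like this: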

```go
func (r *Room) Broadcast(msg string) {
	r.membersMx.RLock()
	defer r.membersMx.RUnlock()

	for _, m := range r.members {
		if err := m.Send(msg); err != nil { // ❶
			log.Printf("Broadcast: %v: %v", r.instance, err)
		}
	}
}
```

Note that at ❶ we wait until each member has received the message before moving on to the next one. This quickly became a problem.

2) Another clue

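Testers also noticed that they could enter a room right after the service restarted, and things seemed to work fine for a while. But as soon as they left and came back, the app stopped working properly. It turned out they were getting hung up on this function, which adds a new member to a room: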

```go
func (r *Room) Add(s sockjs.Session) {
	r.membersMx.Lock() // ❶
	r.members = append(r.members, s)
	r.membersMx.Unlock()
}
```

We can't acquire the lock at ❶, because our Broadcast function is still holding it while it sends messages.

Analysis

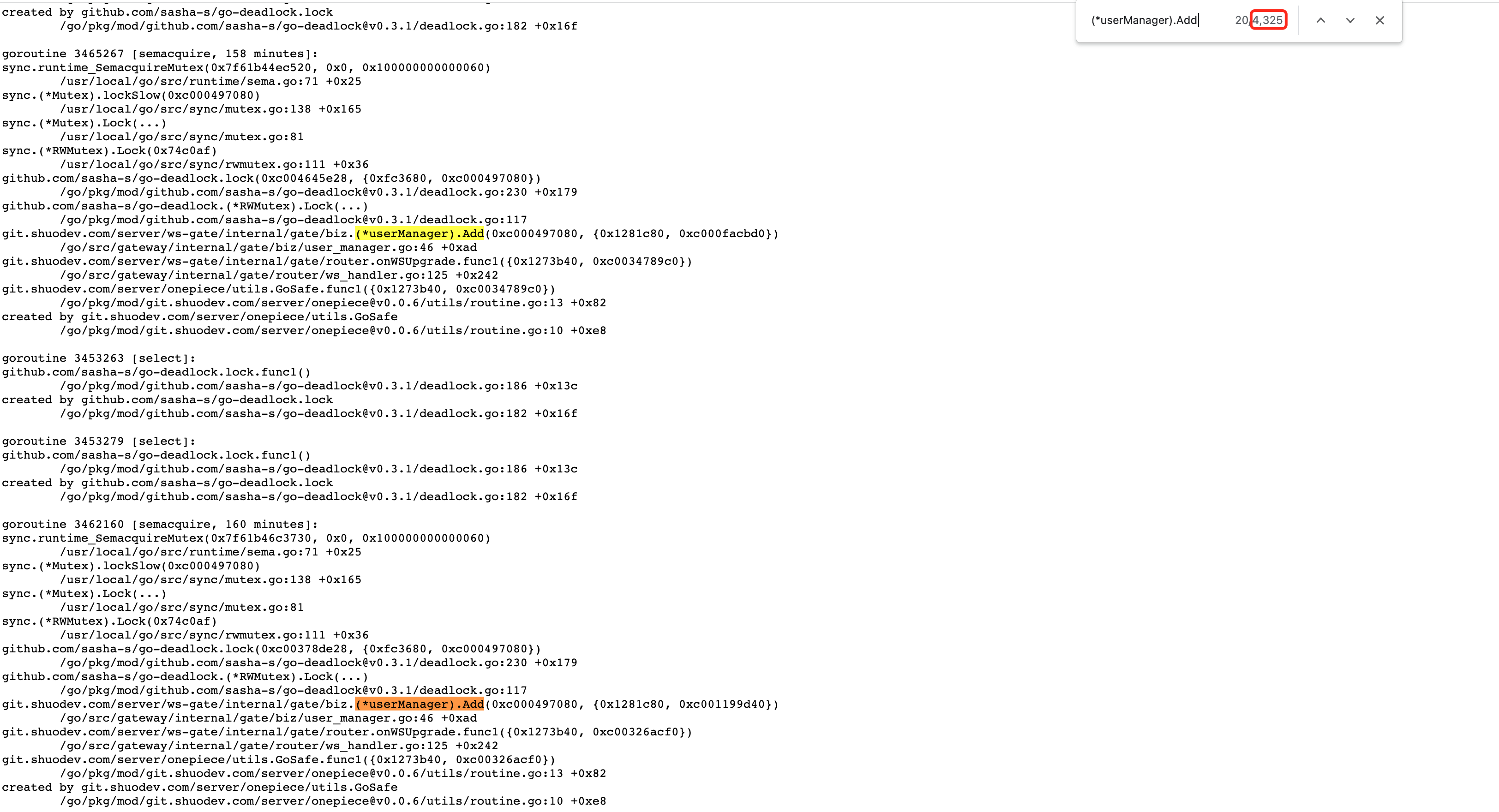

Following that line of thinking, I found that a large number of goroutines were indeed blocked at our Add.

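Unlike wavded, who calls Send() directly, we write the data into a buffered channel. We use the github.com/gorilla/websocket package, which does not allow concurrent calls to its write methods (such as WriteMessage), so we run a dedicated writer goroutine that handles all sending: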

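```go
func (ud *UserDevice) SendMsg(ctx context.Context, msg *InternalWebsocketMessage) {
	// ... (encoding of msg into data elided)

	// Note: this is our own Write wrapper, not the raw websocket write
	if err = ud.Conn.Write(data); err != nil {
		ud.L.Debug("Write error", zap.Error(err))
	}
}
```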

```go
func (c *connectionImpl) Write(data []byte) (err error) {
	wsMsgData := &MsgData{
		MessageType: websocket.BinaryMessage,
		Data:        data,
	}

	c.writer <- wsMsgData // Note: writer is buffered (currently 10 slots); once it is full, this send blocks
	return
}
```

SendMsg is then called from the business code that broadcasts a message to the users in a room (abridged):

```go
func (m *userManager) BroadcastMsgToRoom(ctx context.Context, msg *InternalWebsocketMessage, roomId []int64) {
	// Hold the read lock so the map can be iterated safely
	m.RLock()
	defer m.RUnlock()

	// m.users is a map[int64]User
	for _, user := range m.users {
		user.SendMsg(ctx, msg) // ❶
	}
}
```

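Once this channel is full, the call at ❶ blocks while the read lock is still held, so the following code blocks too (it waits forever for the read lock to be released):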

```go
func (m *userManager) Add(device UserDeviceInterface) (User, int) {
	uid := device.UID()

	m.Lock() // ❶
	defer m.Unlock()

	user, ok := m.users[uid]
	if !ok {
		user = NewUser(uid, device.GetLogger())
		m.users[uid] = user
	}

	remain := user.AddDevice(device)
	return user, remain
}
```

As a result, whenever a WebSocket connection is established, its goroutine stays stuck in Add:

```go
func onWSUpgrade(ginCtx *gin.Context) {
	// ...
	utils.GoSafe(ctx, func(ctx context.Context) {
		// ...
		userDevice.User, remain = biz.DefaultUserManager.Add(userDevice)
	}, logger)
}
```

But why would c.writer <- wsMsgData fill up? Digging further into the code, I found a timeout here:

```go
func (c *connectionImpl) ExecuteLogic(ctx context.Context, device UserDeviceInterface) {
	go func() {
		for {
			select {
			case msg, ok := <-c.writer:
				if !ok {
					return
				}

				// 5-second write timeout
				_ = c.conn.SetWriteDeadline(time.Now().Add(types.KWriteWaitTime))
				if err := c.conn.WriteMessage(msg.MessageType, msg.Data); err != nil {
					c.conn.Close()
					c.onWriteError(err, device.UserId(), device.UserId())
					return // the goroutine exits here, and nothing reads c.writer any more
				}
			}
		}
	}()
}
```

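Now it all makes sense. If a client is slow or unreachable, each WriteMessage can block for up to 5 seconds, and once a write fails the writer goroutine returns, so nothing drains c.writer any more. After 10 more messages, the send in Write blocks forever inside BroadcastMsgToRoom's loop, while the read lock is still held. Every later Add then waits for the write lock, and because sync.RWMutex blocks new RLock calls once a writer is waiting, further broadcasts pile up behind it as well.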

How did others solve it?

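Since others had run into the same problem, I guessed that some open-source projects would handle this detail. Looking at goim (https://github.com/Terry-Mao/goim), I found the following: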

```go
// Push server push message.
func (c *Channel) Push(p *protocol.Proto) (err error) {
	select {
	case c.signal <- p:
	default:
		err = errors.ErrSignalFullMsgDropped
	}
	return
}
```

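Notice the select? If c.signal's buffer is full, the send case cannot proceed, so select immediately takes the default branch and returns an error instead of blocking. A caller that invokes Push in a loop therefore never gets stuck.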

Changing our Write to the following solved the problem:

```go
func (c *connectionImpl) Write(data []byte) (err error) {
	wsMsgData := &MsgData{
		MessageType: websocket.BinaryMessage,
		Data:        data,
	}

	// If the buffer is full, return an error immediately instead of blocking
	select {
	case c.writer <- wsMsgData:
	default:
		err = ErrWriteChannelFullMsgDropped
	}
	return
}
```
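Another common technique, not the one used in this post, is to avoid sending while holding the lock at all: copy the recipients under the read lock, release it, and only then send. The sketch below combines that snapshot with the non-blocking send. manager, outbox, and snapshot are hypothetical names, and outboxes are assumed never to be closed (sending on a closed channel panics, so closing would need its own coordination).

```go
package main

import (
	"fmt"
	"sync"
)

// outbox is a hypothetical per-user send queue (the role c.writer plays in the post).
type outbox chan string

type manager struct {
	mu    sync.RWMutex
	users map[int64]outbox
}

// snapshot copies the outboxes while holding the read lock only briefly.
func (m *manager) snapshot() []outbox {
	m.mu.RLock()
	defer m.mu.RUnlock()
	list := make([]outbox, 0, len(m.users))
	for _, o := range m.users {
		list = append(list, o)
	}
	return list
}

// Broadcast sends without holding the lock and skips full outboxes,
// returning how many messages were dropped.
func (m *manager) Broadcast(msg string) (dropped int) {
	for _, o := range m.snapshot() {
		select {
		case o <- msg:
		default:
			dropped++
		}
	}
	return dropped
}

// Add only competes with the short snapshot copy, never with a blocked send.
func (m *manager) Add(uid int64, size int) {
	m.mu.Lock()
	defer m.mu.Unlock()
	if _, ok := m.users[uid]; !ok {
		m.users[uid] = make(outbox, size)
	}
}

func main() {
	m := &manager{users: map[int64]outbox{}}
	m.Add(1, 1)
	fmt.Println(m.Broadcast("a")) // 0
	fmt.Println(m.Broadcast("b")) // 1: user 1's buffer is already full
	m.Add(2, 1)
	fmt.Println(m.Broadcast("c")) // 1: user 1 is still full, user 2 receives "c"
}
```

Here Add never waits on a stuck broadcast, because the lock is held only while copying the map.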

Postscript

The runtime does have built-in deadlock detection, but it is strict: it only fires when every goroutine is blocked:

```go
package main

import "fmt"

func main() {
	w := make(chan string, 2)
	w <- "1"
	fmt.Println("write 1")
	w <- "2"
	fmt.Println("write 2")
	w <- "3" // buffer is full and nobody receives: blocks forever
}
```

The code above creates a buffered channel of size 2 and then writes three strings to it, deliberately without starting any goroutine to receive them. Running it gives:

```text
write 1
write 2
fatal error: all goroutines are asleep - deadlock!

goroutine 1 [chan send]:
main.main()
	/Users/xu/repo/github/01_struct_mutex/main.go:133 +0xdc
exit status 2
```

This program has only one goroutine, main (created by the runtime). Once it blocks, every goroutine is blocked, so the runtime reports a deadlock.

Let's improve it by using select to check the channel and skip the send when it is full:

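```go
package main

import "fmt"

func main() {
	w := make(chan string, 2)
	w <- "1"
	fmt.Println("write 1")
	w <- "2"
	fmt.Println("write 2")

	select {
	case w <- "3":
		fmt.Println("write 3")
	default:
		// the buffer is full, so the send case is not ready and default runs
		fmt.Println("msg full")
	}
}
```

This time there is no deadlock:

```text
write 1
write 2
msg full
```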

Summary

When you hold a mutex around a for loop and write to a buffered channel inside that loop, watch out for deadlocks!

Bad:

```go
func (r *Room) Broadcast(msg string) {
	r.mu.RLock()
	defer r.mu.RUnlock()

	for _, m := range r.members {
		m.writer <- msg // Bad: blocks while holding the read lock once the buffer is full
	}
}
```

Good:

func (r *Room) Broadcast(msg string) {

r.mu.RLock()

defer r.mu.RUnlock()

for _, m := range r.members {

// Good