Shift-Right Testing: Online Quality Monitoring with ELK in Practice

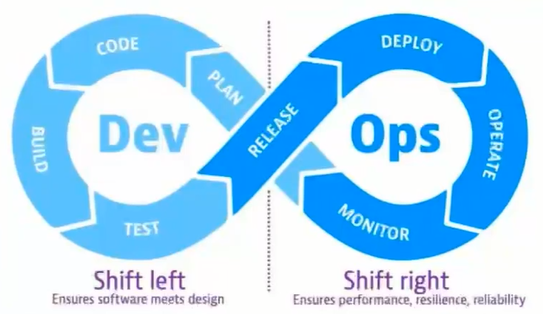

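Introduction to Shift-Right Testing

Why shift testing right?

- A test environment cannot fully reproduce the production environment.

- Production quality depends not only on the released code but also on data changes and configuration changes.

- Testing in production yields more quality data, which helps guide product updates.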

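Main shift-right testing practices:

- Application monitoring

- Synthetic monitoring / continuous quality monitoring (Continuous Quality Monitoring)

- A/B testing

- Canary releases

- TiP (Testing in Production)

- Fault injection / chaos engineering (Chaos Testing)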

Why build a monitoring system:

- Test data collection

- Test data analysis

- Test data visualization and analysis

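Quality monitoring:

- APM (application performance monitoring)

- Full-link (distributed) tracing: Zipkin, SkyWalking

- Crash-rate monitoring: Bugly

- Monitoring and analysis of online user-experience characteristics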

Test monitoring:

- Optimize test depth

- Optimize test speed

- Optimize test breadth

- Optimize test progress management

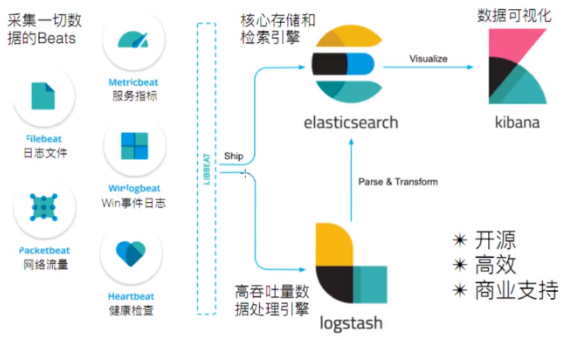

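Introduction to the ELK Stack

ELK Stack refers to Elasticsearch, Logstash, and Kibana. As the ecosystem grew, a new member, Beats, joined it.

- Elasticsearch: an open-source distributed search engine written in Java.

- Logstash: an open-source, Java-based tool for collecting, parsing, and storing logs. (Its functionality overlaps with Beats; since Beats appeared, Logstash has focused on log parsing.)

- Kibana: a Node.js-based web interface for Elasticsearch and Logstash that can aggregate, analyze, and search data.

- Beats: data shippers. Beats is an umbrella name for a set of sub-projects:

  - Packetbeat (collects network traffic data)

  - Topbeat (collects system-, process-, and filesystem-level data such as CPU and memory usage; superseded by Metricbeat in Beats 5.0)

  - Filebeat (monitors and collects server log files)

  - Winlogbeat (collects Windows event log data)

  - Metricbeat (periodically fetches monitoring metrics from external systems; can monitor and collect from services such as Apache, MySQL, Nginx, Redis, and ZooKeeper)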

Setting Up the ELK Monitoring Stack

docker pull docker.elastic.co/elasticsearch/elasticsearch:8.1.2

docker pull docker.elastic.co/kibana/kibana:8.1.2

docker pull docker.elastic.co/logstash/logstash:8.1.2

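Setting Up ES & Kibana

# Create a Docker network

docker network create elastic

# Start the elasticsearch container

docker run -d --name es01 --net elastic -p 9200:9200 docker.elastic.co/elasticsearch/elasticsearch:8.1.2

# Startup takes a while; follow the startup log with: docker logs -f es01

Record the elastic user's password and the enrollment tokens printed in the startup log (the tokens are valid for only 30 minutes):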

-> Password for the elastic user (reset with `bin/elasticsearch-reset-password -u elastic`):

DB1ngqLjym5Zg3n-doni

-> HTTP CA certificate SHA-256 fingerprint:

2cffe4439402214dcd28786c835fcd9fdce82f266a8cfd915cdd9fd52facdea3

-> Configure Kibana to use this cluster:

* Run Kibana and click the configuration link in the terminal when Kibana starts.

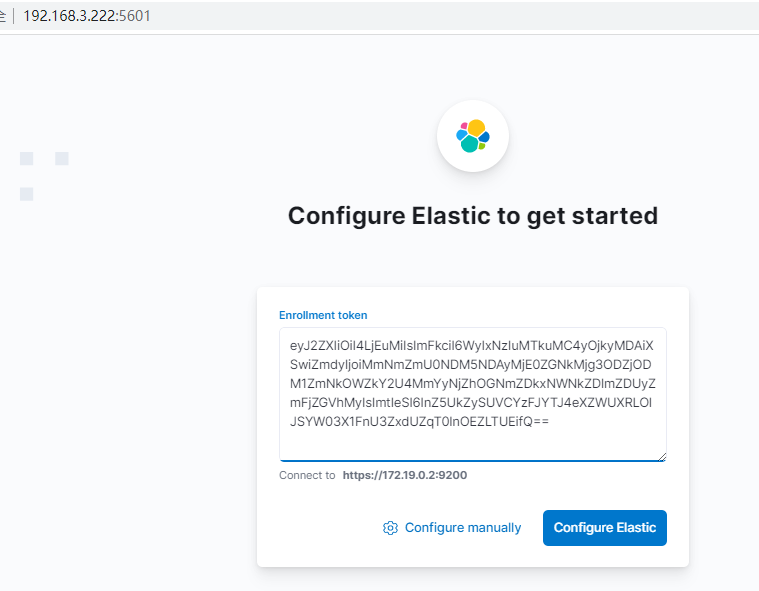

* Copy the following enrollment token and paste it into Kibana in your browser (valid for the next 30 minutes):

eyJ2ZXIiOiI4LjEuMiIsImFkciI6WyIxNzIuMTkuMC4yOjkyMDAiXSwiZmdyIjoiMmNmZmU0NDM5NDAyMjE0ZGNkMjg3ODZjODM1ZmNkOWZkY2U4MmYyNjZhOGNmZDkxNWNkZDlmZDUyZmFjZGVhMyIsImtleSI6InZ5UkZySUVCYzFJYTJ4eXZWUXRLOlJSYW03X1FnU3ZxdUZqT0lnOEZLTUEifQ==

-> Configure other nodes to join this cluster:

* Copy the following enrollment token and start new Elasticsearch nodes with `bin/elasticsearch --enrollment-token <token>` (valid for the next 30 minutes):

eyJ2ZXIiOiI4LjEuMiIsImFkciI6WyIxNzIuMTkuMC4yOjkyMDAiXSwiZmdyIjoiMmNmZmU0NDM5NDAyMjE0ZGNkMjg3ODZjODM1ZmNkOWZkY2U4MmYyNjZhOGNmZDkxNWNkZDlmZDUyZmFjZGVhMyIsImtleSI6IndTUkZySUVCYzFJYTJ4eXZWUXVsOlF0bFNtVnVCVFhXVV83UThUcVZETkEifQ==

If you're running in Docker, copy the enrollment token and run:

`docker run -e "ENROLLMENT_TOKEN=<token>" docker.elastic.co/elasticsearch/elasticsearch:8.1.2`
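An enrollment token is base64-encoded JSON, so decoding it is a quick way to see what Kibana will be told to connect to. The sketch below uses a hypothetical helper name, `decode_enrollment_token`, which is not an Elasticsearch command, and assumes a `base64` utility that accepts `-d`.

```shell
# Hypothetical helper (not part of Elasticsearch): print the JSON payload
# that is base64-encoded inside an enrollment token.
decode_enrollment_token() {
  printf '%s' "$1" | base64 -d
}

# Kibana enrollment token copied from the startup log above.
token='eyJ2ZXIiOiI4LjEuMiIsImFkciI6WyIxNzIuMTkuMC4yOjkyMDAiXSwiZmdyIjoiMmNmZmU0NDM5NDAyMjE0ZGNkMjg3ODZjODM1ZmNkOWZkY2U4MmYyNjZhOGNmZDkxNWNkZDlmZDUyZmFjZGVhMyIsImtleSI6InZ5UkZySUVCYzFJYTJ4eXZWUXRLOlJSYW03X1FnU3ZxdUZqT0lnOEZLTUEifQ=='

decode_enrollment_token "$token"
echo
```

In the decoded payload, `ver` is the Elasticsearch version, `adr` is the node address inside the Docker network (172.19.0.2:9200 here), and `fgr` equals the HTTP CA certificate fingerprint printed in the same log. Because `adr` is a container-internal address, Kibana has to run on the same `elastic` network to reach it.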

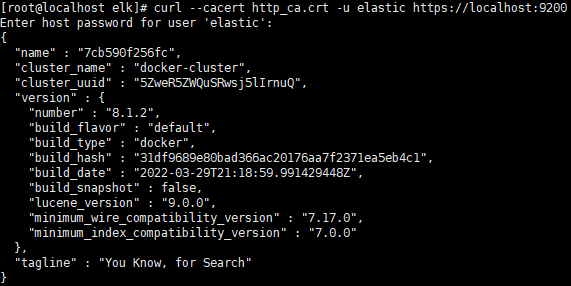

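Certificate verification:

# Copy the CA certificate to the local machine

docker cp es01:/usr/share/elasticsearch/config/certs/http_ca.crt .

# Send an authenticated request, verifying the server against the CA certificate

curl --cacert http_ca.crt -u elastic https://localhost:9200

# Enter the password recorded earlier when prompted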
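As an optional extra check, the copied certificate's SHA-256 fingerprint can be compared with the one printed in the startup log. This is a minimal sketch: `normalize_fingerprint` is a hypothetical helper, and it assumes `openssl` is installed on the host.

```shell
# Hypothetical helper: turn openssl's "...Fingerprint=2C:FF:..." output into
# the lowercase, colon-free form printed in the Elasticsearch startup log.
normalize_fingerprint() {
  sed -e 's/^.*=//' | tr -d ':' | tr 'A-F' 'a-f'
}

# Runs only when the certificate copied above is in the current directory.
if [ -f http_ca.crt ]; then
  openssl x509 -in http_ca.crt -noout -fingerprint -sha256 | normalize_fingerprint
fi
```

If the printed value differs from the "HTTP CA certificate SHA-256 fingerprint" line in the log, the certificate likely came from a different cluster.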

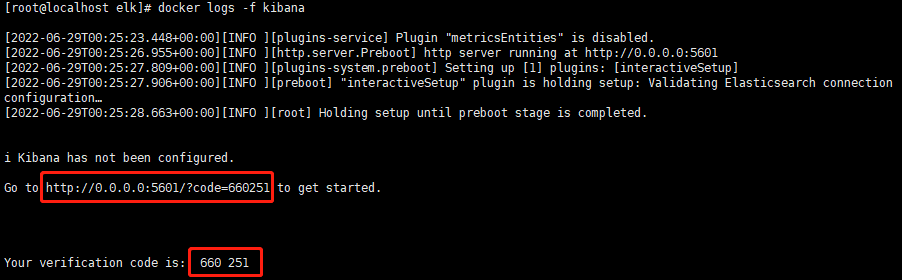

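Start Kibana:

# Kibana joins the same elastic network, so it can reach the es01 container directly

docker run -d \
  --name kibana \
  --net elastic \
  -p 5601:5601 \
  docker.elastic.co/kibana/kibana:8.1.2

Enter the enrollment token from the ES startup log and the verification code from the Kibana startup log: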

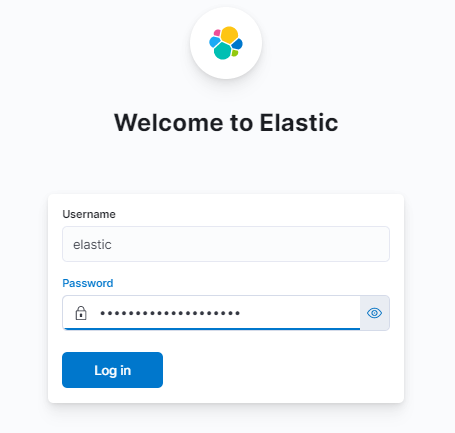

Log in with the username and password from the ES startup log:

Automatic Nginx Log Collection

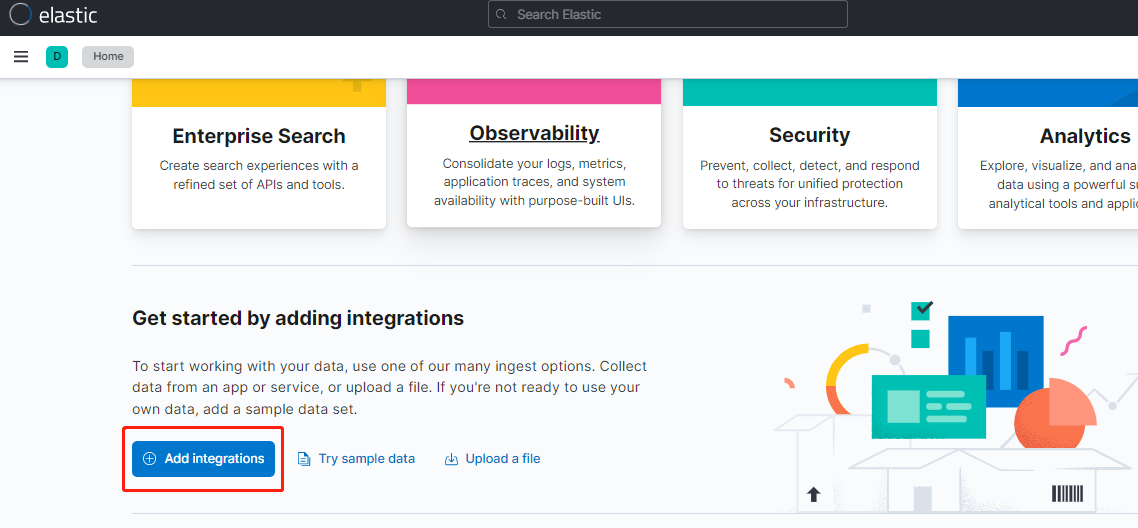

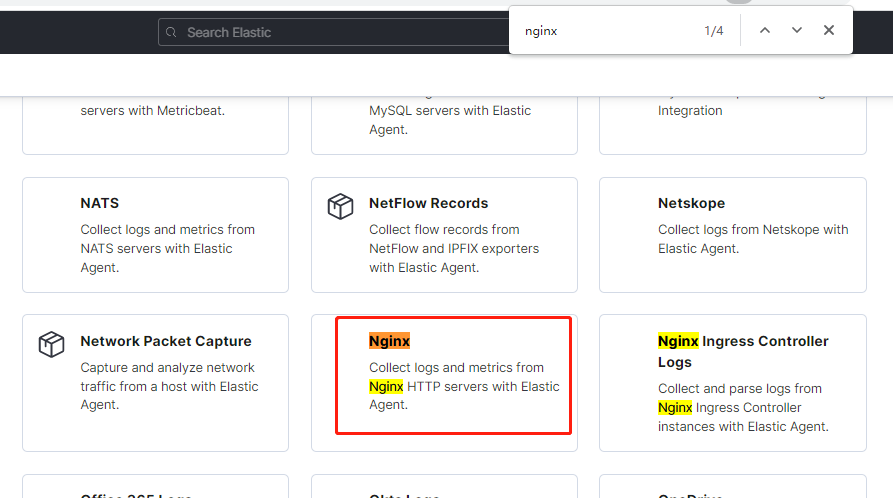

Example: use the Nginx integration provided by ES (Nginx Agent) to automatically collect Nginx log data from the server into ES.

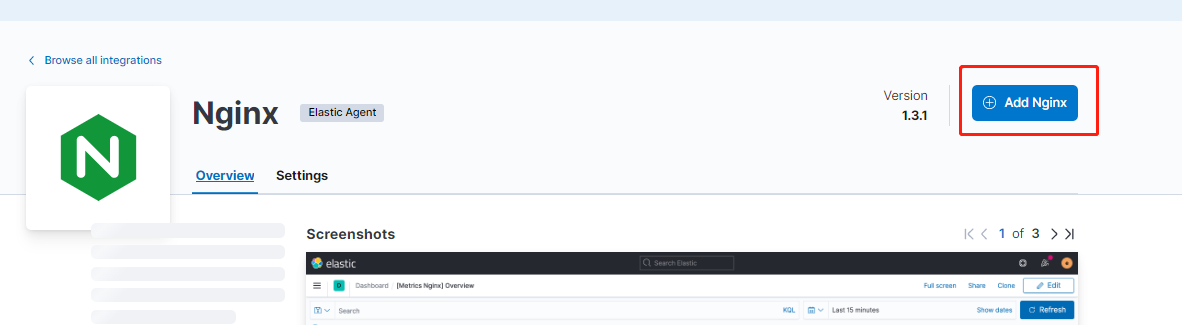

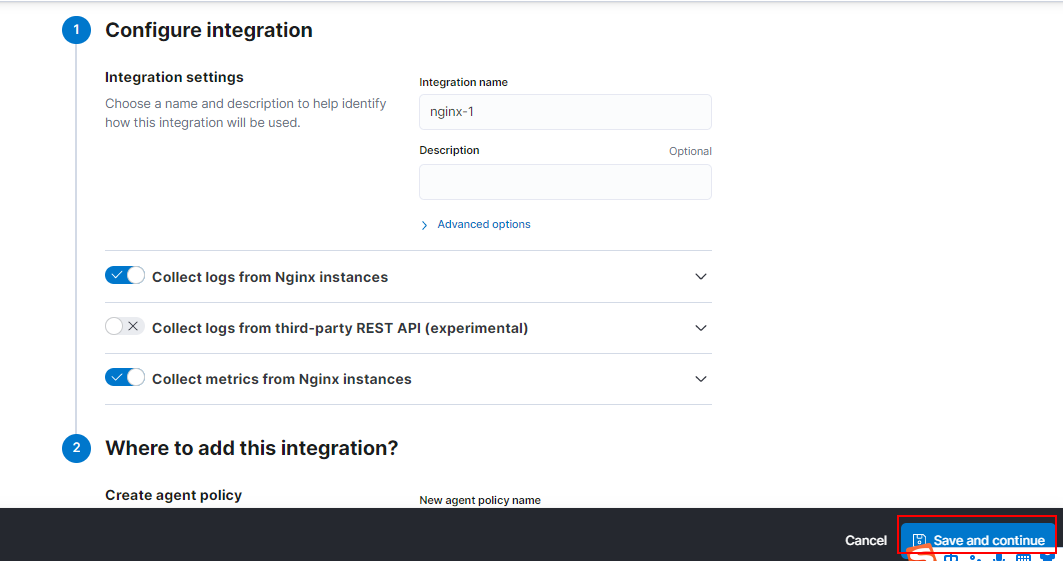

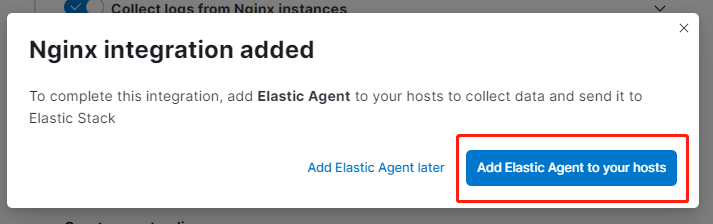

Nginx Agent

Save with the default settings:

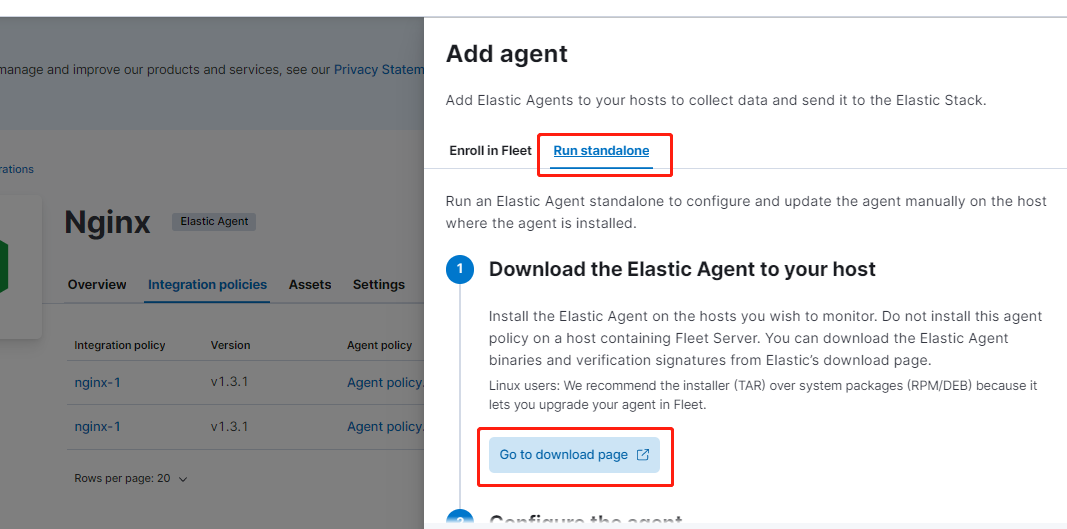

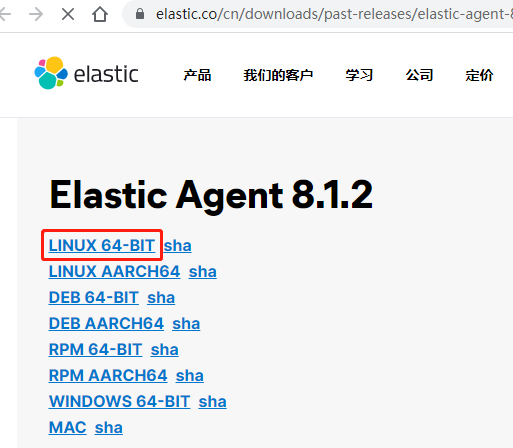

Download the log collection agent provided by ES to the Nginx server:

[root@localhost es_agent]# wget https://artifacts.elastic.co/downloads/beats/elastic-agent/elastic-agent-8.1.2-linux-x86_64.tar.gz

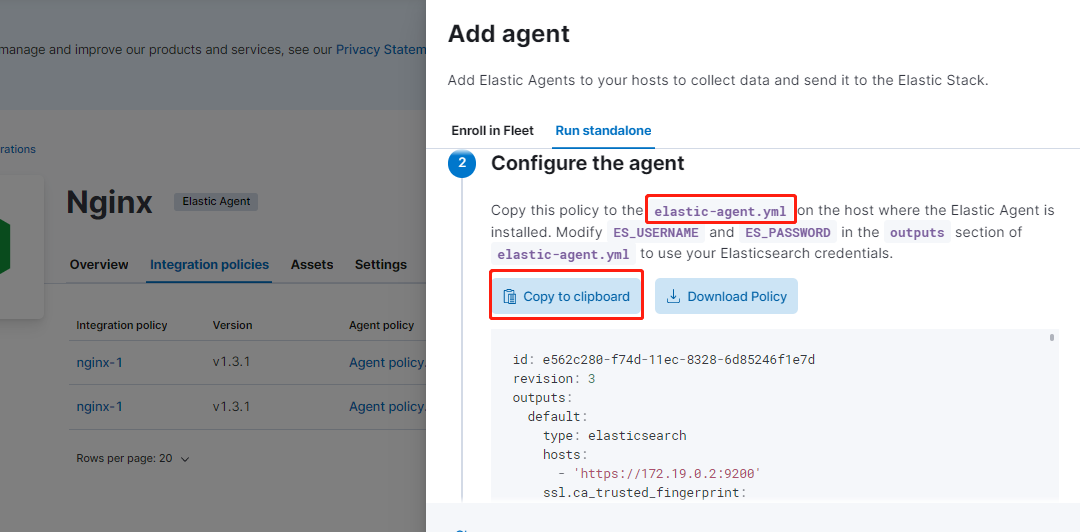

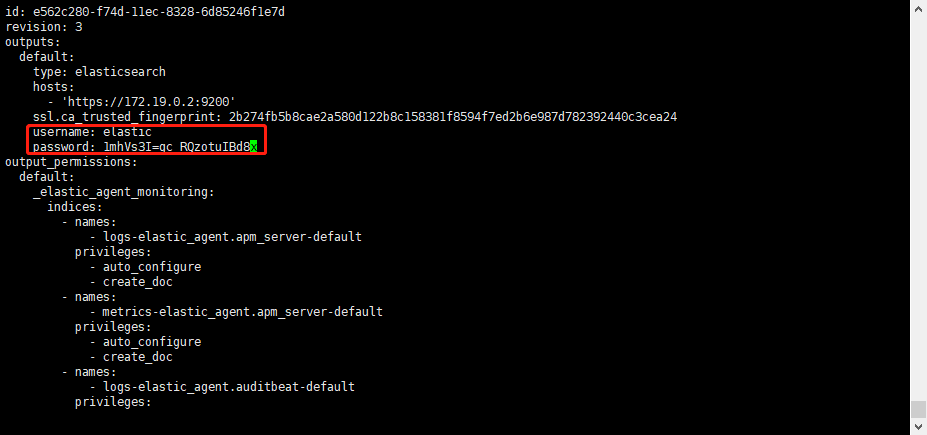

Create the elastic-agent.yml configuration file:

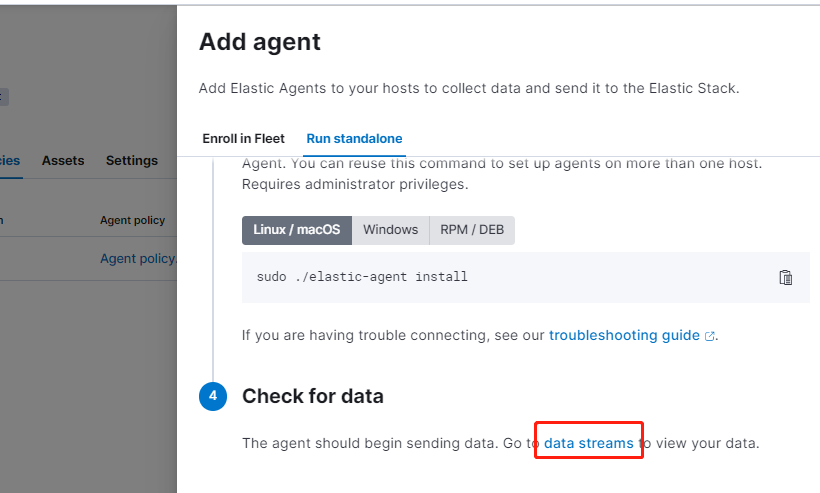

Extract the agent and run the installer:

[root@localhost es_agent]# tar -zxvf elastic-agent-8.1.2-linux-x86_64.tar.gz

...

[root@localhost es_agent]# ls

elastic-agent-8.1.2-linux-x86_64 elastic-agent-8.1.2-linux-x86_64.tar.gz elastic-agent.yml

[root@localhost es_agent]# ./elastic-agent-8.1.2-linux-x86_64/elastic-agent install

Elastic Agent will be installed at /opt/Elastic/Agent and will run as a service. Do you want to continue? [Y/n]:y

Do you want to enroll this Agent into Fleet? [Y/n]:n

Elastic Agent has been successfully installed.

# The agent is now installed in the /opt/Elastic/Agent/ directory

Replace the installed configuration file with the elastic-agent.yml prepared earlier:

[root@localhost Agent]# mv elastic-agent.yml elastic-agent.yml_bak

[root@localhost Agent]# mv ../elastic-agent.yml .

[root@localhost Agent]# vi elastic-agent.yml

# Restart the agent

[root@localhost Agent]# ./elastic-agent restart

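Installing the Nginx Server

# Install nginx

[root@localhost Agent]# yum install nginx

# Start nginx

[root@localhost Agent]# systemctl start nginx

# Check the nginx log; the agent's requests show that nginx is already being monitored

[root@localhost Agent]# less /var/log/nginx/access.log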

[root@localhost Agent]# less /var/log/nginx/access.log

127.0.0.1 - - [29/Jun/2022:12:42:23 +0800] "GET /nginx_status HTTP/1.1" 404 3971 "-" "Elastic-Metricbeat/8.1.2 (linux; amd64; 6118f25235a52a7f0c4937a0a309e380c92d8119; 2022-03-29 22:45:47 +0000 UTC)" "-"

127.0.0.1 - - [29/Jun/2022:12:42:40 +0800] "GET /nginx_status HTTP/1.1" 404 3971 "-" "Elastic-Metricbeat/8.1.2 (linux; amd64; 6118f25235a52a7f0c4937a0a309e380c92d8119; 2022-03-29 22:45:47 +0000 UTC)" "-"

...
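Before looking at the data in Kibana, a quick local tally of status codes helps confirm what the access log contains. The sketch below is an illustration only: `count_by_status` is a hypothetical helper, and the sample file holds two lines in the same format as the log above, with the user agent shortened.

```shell
# Hypothetical helper: count requests per HTTP status code.
# In nginx's default access log format the status code is the 9th
# whitespace-separated field.
count_by_status() {
  awk '{ count[$9]++ } END { for (code in count) print code, count[code] }' "$1" | sort
}

# Two sample lines in the same format as the access log above.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
127.0.0.1 - - [29/Jun/2022:12:42:23 +0800] "GET /nginx_status HTTP/1.1" 404 3971 "-" "Elastic-Metricbeat/8.1.2" "-"
127.0.0.1 - - [29/Jun/2022:12:42:40 +0800] "GET /nginx_status HTTP/1.1" 404 3971 "-" "Elastic-Metricbeat/8.1.2" "-"
EOF

count_by_status "$sample"
```

On the real server, pass /var/log/nginx/access.log instead of the sample file. The 404 responses in the log above suggest that the /nginx_status endpoint is not enabled on this server, so the agent's metrics requests fail while log collection still works.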

Data Analysis

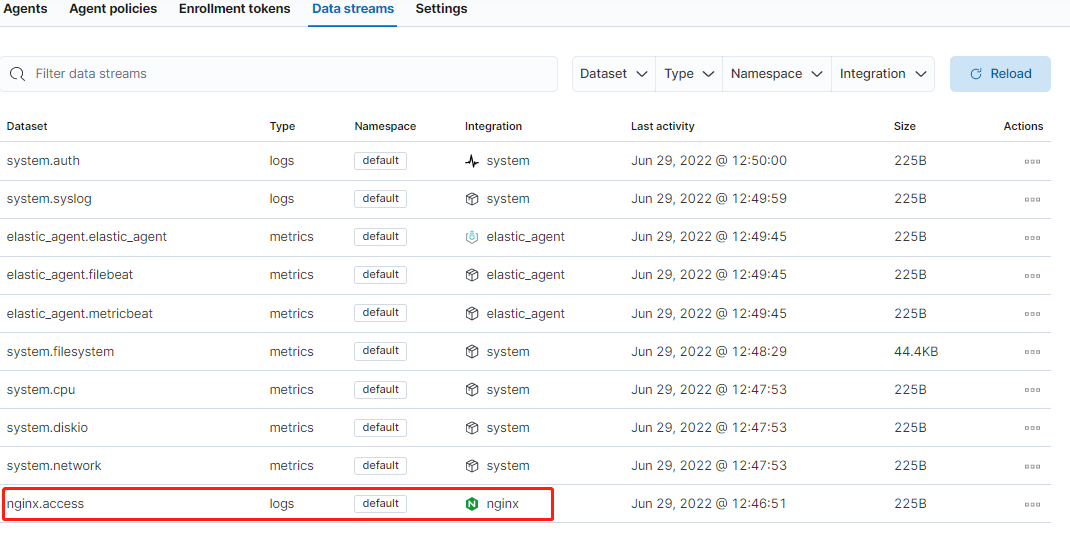

Confirm that the log data is being collected into ES:

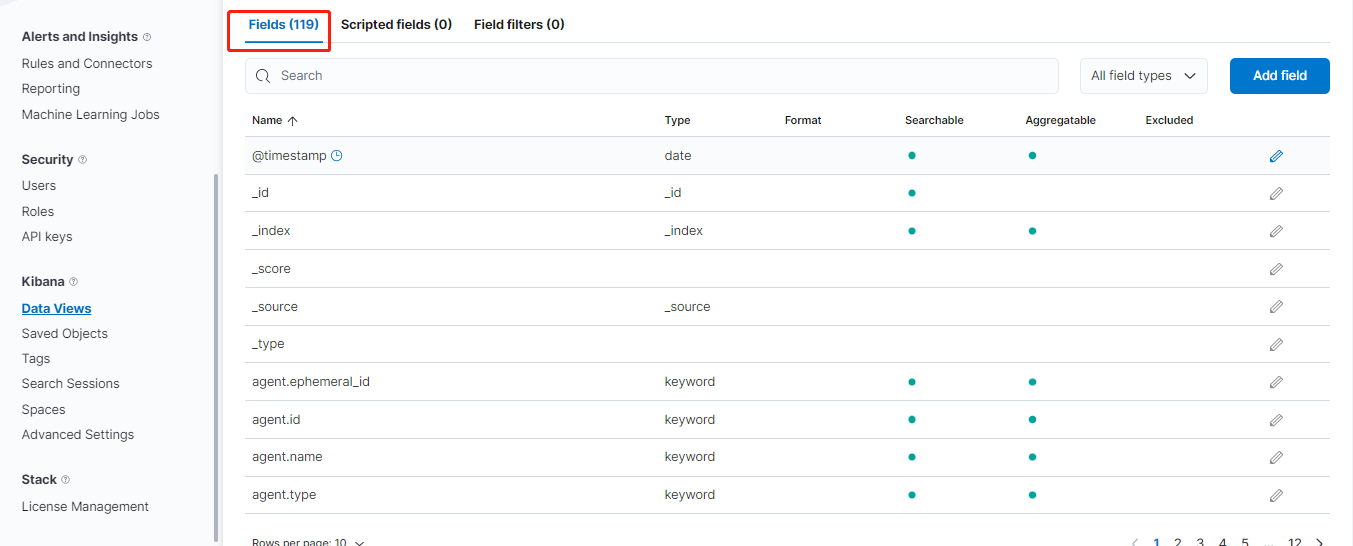

Create a custom data view (with a pattern that matches the required log data):

Once it is created, the fields are parsed automatically:

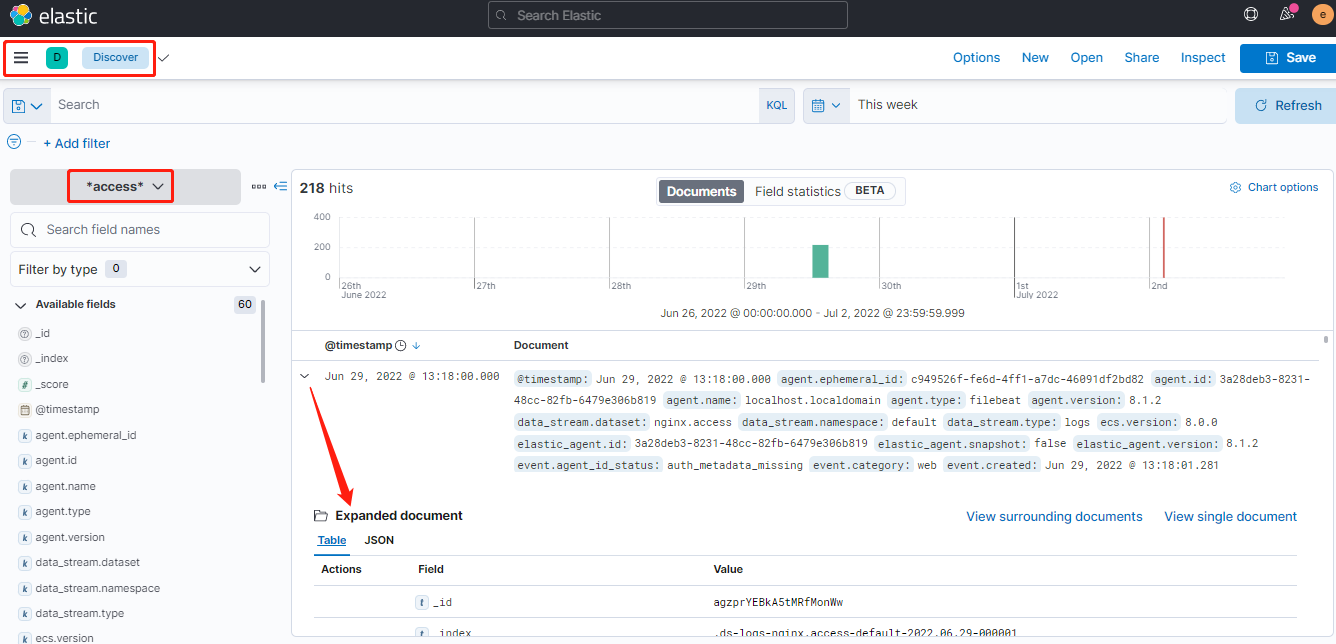

View the charts and detailed records for the custom data view:

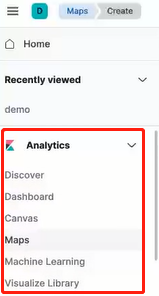

More data analysis features:

Setting Up Logstash

Configuration file: vi $PWD/pipeline/logstash.conf

# Input
input {
  file {
    path => [ "/data/*.log" ]   # .log files in the /data directory inside the Logstash container
  }
}

# Filter
filter {
  grok {
    match => { "message" => ["%{IPORHOST:[nginx][access][remote_ip]} - %{DATA:[nginx][access][user_name]} \[%{HTTPDATE:[nginx][access][time]}\] \"%{WORD:[nginx][access][method]} %{DATA:[nginx][access][url]} HTTP/%{NUMBER:[nginx][access][http_version]}\" %{NUMBER:[nginx][access][response_code]} %{NUMBER:[nginx][access][body_sent][bytes]} \"%{DATA:[nginx][access][referrer]}\" \"%{DATA:[nginx][access][agent]}\""] }
    remove_field => "message"
  }
}

# Output
output {
  stdout {}                                    # standard output
  elasticsearch {                              # send events to ES
    hosts => ["https://192.168.3.222:9200"]    # ES host
    index => "logstash-nginx-%{+YYYY.MM.dd}"   # name of the generated index
    user => "elastic"                          # ES username
    password => "-yAoRIIni3qy*RW*Q8Nc"         # ES password
    ssl_certificate_verification => false      # disable ES certificate verification
  }
}
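To get a feel for what the grok pattern extracts, the same line can be split locally with an extended regular expression. This is a simplified approximation of the grok pattern, not what Logstash runs internally, and `parse_access_line` is a hypothetical helper name.

```shell
# Hypothetical helper: extract a few of the fields captured by the grok
# pattern above, using a simplified extended regular expression.
parse_access_line() {
  printf '%s\n' "$1" | sed -E 's/^([^ ]+) - ([^ ]+) \[([^]]+)\] "([A-Z]+) ([^ ]+) HTTP\/([0-9.]+)" ([0-9]+) ([0-9]+) "([^"]*)" "([^"]*)".*$/remote_ip=\1 method=\4 url=\5 response_code=\7 bytes=\8/'
}

# Sample line in the nginx access log format (user agent shortened).
line='127.0.0.1 - - [29/Jun/2022:12:42:23 +0800] "GET /nginx_status HTTP/1.1" 404 3971 "-" "Elastic-Metricbeat/8.1.2" "-"'

parse_access_line "$line"
# prints: remote_ip=127.0.0.1 method=GET url=/nginx_status response_code=404 bytes=3971
```

The capture groups follow the order of the grok fields: remote_ip, user_name, time, method, url, http_version, response_code, body_sent.bytes, referrer, and agent.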

Start Logstash:

# Configuration file

[root@localhost logstash]# vi logstash.yml

http.host: "0.0.0.0"

xpack.monitoring.elasticsearch.hosts: ["https://192.168.3.222:9200"]

xpack.monitoring.elasticsearch.username: "elastic"

xpack.monitoring.elasticsearch.password: "-yAoRIIni3qy*RW*Q8Nc"

xpack.monitoring.enabled: true

# Start Logstash. The volumes are, in order: the logstash.yml configuration file,
# the pipeline configuration directory, the nginx access log, and the nginx error log.
# --config.reload.automatic reloads the pipeline configuration when it changes.

docker run --name logstash --rm -d --net elastic \
  -v $PWD/logstash.yml:/usr/share/logstash/config/logstash.yml \
  -v $PWD/pipeline:/usr/share/logstash/pipeline/ \
  -v /var/log/nginx/access.log:/data/access.log \
  -v /var/log/nginx/error.log:/data/error.log \
  docker.elastic.co/logstash/logstash:8.1.2 \
  --config.reload.automatic

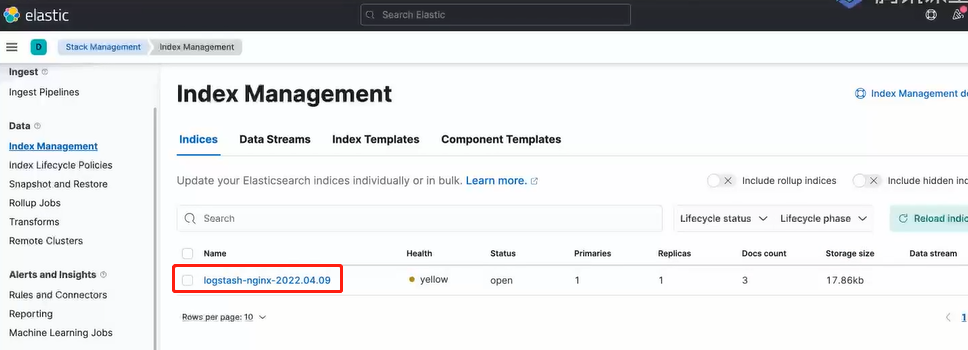

View the successfully generated index:

You can then create a custom data view and analyze the data.
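When looking for the new index, it helps to know the name to expect. Logstash expands `%{+YYYY.MM.dd}` from each event's `@timestamp`, which is in UTC; since the pipeline has no date filter, that is the time Logstash read the line. The sketch below computes today's name with a hypothetical helper, `expected_index`.

```shell
# Hypothetical helper: name of the daily index for the current UTC date,
# mirroring the "logstash-nginx-%{+YYYY.MM.dd}" setting in logstash.conf.
expected_index() {
  date -u "+logstash-nginx-%Y.%m.%d"
}

expected_index

# To list the matching indices on the cluster (assumes the host and user from
# logstash.conf; -k skips certificate verification, as the pipeline does):
# curl -k -u elastic "https://192.168.3.222:9200/_cat/indices/logstash-nginx-*?v"
```

Around midnight the UTC date can differ from the server's local date (+0800 in the log above), so the index may carry the previous day's date.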