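Brief Notes on LightGBM: Principles and Practice

Preface:

I have used LightGBM for a long time without ever writing it up. This post briefly covers three aspects: using LightGBM, how it works, and parameter tuning.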

Out of the box

quickstart

The core steps when using the official LightGBM interface:

- Define the parameters

- Build the datasets

- train

- predict

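import json

import lightgbm as lgb

# 1. Define the parameters

with open("configs/lightgbm_config.json", "r") as f:
    config = json.load(f)

# 2. Build the datasets (features and labels are assumed to be loaded already;
#    the first 90% is used for training, the last 10% for validation)

index = int(len(features) * 0.9)

train_fts, train_lbls = features[:index], labels[:index]

val_fts, val_lbls = features[index:], labels[index:]

train_data = lgb.Dataset(train_fts, label=train_lbls)

val_data = lgb.Dataset(val_fts, label=val_lbls)

# 3. train

bst = lgb.train(params=config, train_set=train_data, valid_sets=[val_data])

# 4. predict (Booster.predict takes the raw features, not a lgb.Dataset)

preds = bst.predict(val_fts)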

# lightgbm_config.json

{

"objective":"binary",

"task":"train",

"boosting":"gbdt",

"num_iterations":500,

"learning_rate":0.1,

"max_depth":-1,

"num_leaves":64,

"tree_learner":"serial",

"num_threads":0,

"device_type":"cpu",

"seed":0,

"min_data_in_leaf":100,

"min_sum_hessian_in_leaf":0.001,

"bagging_fraction":0.9,

"bagging_freq":1,

"bagging_seed":0,

"feature_fraction":0.9,

"feature_fraction_bynode":0.9,

"feature_fraction_seed":0,

"early_stopping_rounds":10,

"first_metric_only":true,

"max_delta_step":0,

"lambda_l1":0,

"lambda_l2":1,

"verbosity":2,

"is_unbalance":true,

"sigmoid":1,

"boost_from_average":true,

"metric":[

"binary_logloss",

"auc",

"binary_error"

]

}

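sklearn interface

import lightgbm as lgb

# 1. config

"""
objective parameter:
'regression' for LGBMRegressor
'binary' or 'multiclass' for LGBMClassifier
'lambdarank' for LGBMRanker.
"""

# LGBMClassifier is used here because the objective is 'binary'
lgb_clf = lgb.LGBMClassifier(
    objective="binary",
    metric="binary_logloss,auc",
    learning_rate=0.1,
    bagging_fraction=0.8,
    feature_fraction=0.9,
    bagging_freq=5,
    n_estimators=300,
    max_depth=4,
    is_unbalance=True,
)

# 2. fit (train_fts, train_lbls, val_fts and val_lbls as built in the quickstart)

lgb_clf.fit(train_fts, train_lbls, eval_set=[(val_fts, val_lbls)])

# 3. predict (probability of the positive class)

val_probs = lgb_clf.predict_proba(val_fts)[:, 1]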

Incremental learning

When the dataset is too large to load into memory at once, use incremental training.

It relies mainly on two parameters:

- init_model

- keep_training_booster

For the detailed method, see "Incremental learning/training".

Principles

Before LightGBM, XGBoost had long been one of the leaderboard-topping tools on Kaggle. But everything keeps moving forward.

Reviewing XGBoost

-

貪婪演演算法生成樹,時間複雜度\(O(ndKlogn)\),\(d\) 個特徵,每個特徵排序需要\(O(nlogn)\),樹深度為\(K\)

- pre-sorting 對特徵進行預排序並且需要儲存排序後的索引值(為了後續快速的計算分裂點),因此記憶體需要訓練資料的兩倍。

- 在遍歷每一個分割點的時候,都需要進行分裂增益的計算,

-

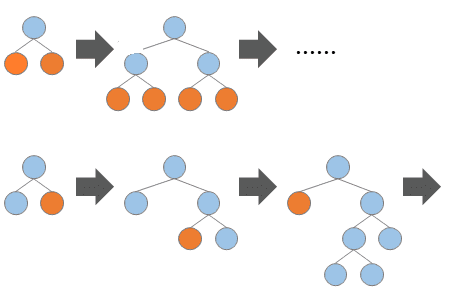

Level-wise 生長,平行計算每一層的分裂節點

- 提高了訓練速度

- 但同時也因為節點增益過小增加了很多不必要的分裂,增加了計算量

LightGBM

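- Histogram-based decision tree algorithm

- Leaf-wise leaf growth strategy with a depth limit

- Histogram subtraction for speedup

- Direct support for categorical features (Categorical Feature)

- Cache hit rate optimization

- Histogram-based sparse feature optimization

- Multi-threading optimization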

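Histogram algorithm

- Discretize the continuous floating-point feature values into \(k\) discrete values and build a histogram of width \(k\). The default \(k\) is 255.

- Traverse the training data and accumulate the statistics of each discrete value in the histogram.

- When selecting a split, only the discrete values of the histogram need to be traversed to find the best split point.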

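Memory optimization:

- Pre-sorting stores an int32 index and a float32 value for each feature value; the histogram algorithm only stores an 8-bit bin index.

- Memory consumption can drop to about \(1/8\) of the original (64 bits down to 8 bits per value).

Time optimization:

- The cost of evaluating candidate split points drops from \(O(nd)\) to \(O(kd)\).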
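The histogram steps above can be sketched in plain Python for a single feature. This is a simplified illustration, not LightGBM's implementation: it assumes equal-width bins (LightGBM chooses bin boundaries differently), and the function names and the regularization term `lam` are made up for the example.

```python
def build_histogram(values, grads, hessians, k):
    """Accumulate (sum_grad, sum_hess) per bin for one feature, using k equal-width bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k or 1.0  # avoid a zero width when all values are equal
    hist = [[0.0, 0.0] for _ in range(k)]
    for v, g, h in zip(values, grads, hessians):
        b = min(int((v - lo) / width), k - 1)  # clamp the maximum value into the last bin
        hist[b][0] += g
        hist[b][1] += h
    return hist


def best_split(hist, lam=1.0):
    """Scan the k bins once; samples in bins <= best_bin go to the left child."""
    G = sum(g for g, _ in hist)
    H = sum(h for _, h in hist)
    parent_score = G * G / (H + lam)
    best_gain, best_bin = 0.0, None
    gl = hl = 0.0
    for b in range(len(hist) - 1):
        gl += hist[b][0]
        hl += hist[b][1]
        gr, hr = G - gl, H - hl
        gain = gl * gl / (hl + lam) + gr * gr / (hr + lam) - parent_score
        if gain > best_gain:
            best_gain, best_bin = gain, b
    return best_bin, best_gain


# Squared loss with a constant prediction of 0.5: grad = pred - y, hess = 1
values = [0, 1, 2, 3, 4, 5, 6, 7]
targets = [0, 0, 0, 0, 1, 1, 1, 1]
grads = [0.5 - y for y in targets]
hessians = [1.0] * len(values)

hist = build_histogram(values, grads, hessians, k=4)
best_bin, gain = best_split(hist)
print(best_bin, round(gain, 3))  # 1 1.6
```

Building the histogram still touches every sample once, but the split search afterwards only loops over the \(k\) bins instead of all \(n\) sorted values.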

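Leaf-wise growth

The leaf-wise (grow by leaf) strategy:

- At each step, find the leaf with the largest split gain among all current leaves (usually also the one holding the most data).

- Split it, then repeat.

- Compared with level-wise growth, leaf-wise growth reduces more error for the same number of splits and so reaches better accuracy. Its drawback is that it may grow fairly deep trees and overfit. LightGBM therefore adds a maximum depth limit on top of leaf-wise growth, which prevents overfitting while keeping training efficient.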

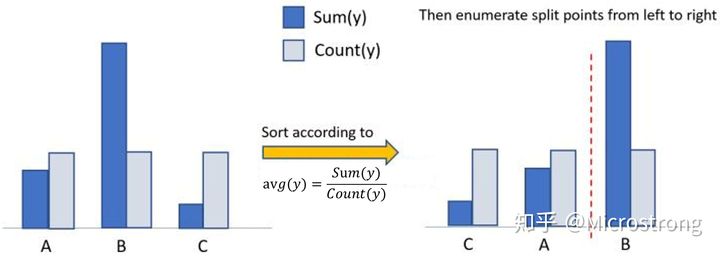
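To make the leaf-wise loop concrete, here is a toy sketch on one-dimensional regression data. It is an illustration under simplifying assumptions, not LightGBM's code: the gain is the reduction in squared error, and the names `grow_leaf_wise`, `best_split` and `sse` are hypothetical.

```python
import heapq


def sse(ys):
    """Sum of squared errors around the mean."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def best_split(samples):
    """Return (gain, left, right) for the best threshold split of one leaf."""
    samples = sorted(samples)
    ys = [y for _, y in samples]
    base = sse(ys)
    best = (0.0, None, None)
    for i in range(1, len(samples)):
        if samples[i - 1][0] == samples[i][0]:
            continue  # cannot split between identical feature values
        gain = base - sse(ys[:i]) - sse(ys[i:])
        if gain > best[0]:
            best = (gain, samples[:i], samples[i:])
    return best


def grow_leaf_wise(samples, num_leaves, max_depth):
    leaves = []  # leaves that cannot be split any further
    heap = []    # splittable leaves, ordered by largest gain first
    counter = 0  # tie breaker so the heap never compares sample lists

    def push(leaf, depth):
        nonlocal counter
        gain, left, right = best_split(leaf)
        if gain > 0 and depth < max_depth:
            heapq.heappush(heap, (-gain, counter, depth, left, right))
            counter += 1
        else:
            leaves.append(leaf)

    push(samples, 0)
    # Every heap entry is still one unsplit leaf, so the current leaf count
    # is len(leaves) + len(heap); each split adds exactly one leaf.
    while heap and len(leaves) + len(heap) < num_leaves:
        _, _, depth, left, right = heapq.heappop(heap)
        push(left, depth + 1)
        push(right, depth + 1)
    leaves.extend(left + right for _, _, _, left, right in heap)
    return leaves


data = list(zip(range(8), [0, 0, 0, 0, 5, 5, 9, 9]))
leaves = grow_leaf_wise(data, num_leaves=3, max_depth=3)
print([len(leaf) for leaf in leaves])  # [4, 2, 2]
```

The left half is already pure after the first split, so the second split goes to the right half only. A level-wise strategy would have attempted both children of the root.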

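Categorical feature support

XGBoost uses one-hot encoding, while LightGBM uses a Many vs Many split. The procedure is as follows【7】:

- Treat each category value as a bin; there are as many bins as distinct values (bins with very few samples are removed).

- Count the samples for each value of the feature, sort the values by sample count in descending order, and drop category values that account for less than 1% of the samples.

- For the remaining values (one value per bucket), compute the sum of first-order gradients and the sum of second-order gradients of the samples in each bucket, then use the regularization coefficient to get a per-bucket statistic: sum of first-order gradients / (sum of second-order gradients + regularization coefficient).

- Sort the buckets by this statistic in descending order, then search for the best split point over the sorted buckets.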
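The procedure above can be sketched in a few lines of plain Python. This is a simplified illustration only: the function name `categorical_split`, the `reg` value and the gain formula are assumptions for the example, and LightGBM's real implementation has more parameters and safeguards.

```python
from collections import defaultdict


def categorical_split(categories, grads, hessians, reg=1.0, min_share=0.01):
    """Return (sorted categories, categories sent left, gain) for one feature."""
    n = len(categories)
    stats = defaultdict(lambda: [0, 0.0, 0.0])  # count, sum_grad, sum_hess
    for c, g, h in zip(categories, grads, hessians):
        entry = stats[c]
        entry[0] += 1
        entry[1] += g
        entry[2] += h

    # Drop category values that hold less than min_share of the samples
    kept = {c: s for c, s in stats.items() if s[0] / n >= min_share}

    # Sort buckets by sum_grad / (sum_hess + reg), largest first
    order = sorted(kept, key=lambda c: kept[c][1] / (kept[c][2] + reg), reverse=True)

    G = sum(kept[c][1] for c in order)
    H = sum(kept[c][2] for c in order)
    parent_score = G * G / (H + reg)

    best_gain, best_left = 0.0, []
    gl = hl = 0.0
    for i, c in enumerate(order[:-1]):
        gl += kept[c][1]
        hl += kept[c][2]
        gr, hr = G - gl, H - hl
        gain = gl * gl / (hl + reg) + gr * gr / (hr + reg) - parent_score
        if gain > best_gain:
            best_gain, best_left = gain, order[: i + 1]
    return order, best_left, best_gain


categories = ["a", "a", "b", "b", "c", "c", "d", "d"]
grads = [0.5, 0.5, -0.5, -0.5, 0.4, 0.4, -0.6, -0.6]
hessians = [1.0] * 8

order, left, gain = categorical_split(categories, grads, hessians)
print(order)  # ['a', 'c', 'b', 'd']
print(left)   # ['a', 'c']
```

The resulting split sends {a, c} to one side and {b, d} to the other, a many-vs-many partition that a single one-hot column could not express.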

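Parallel support

- Feature parallel: different machines search for the best split point on different feature subsets, then the best split point is synchronized across machines.

- Data parallel: each machine first builds histograms locally, the histograms are then merged globally, and the best split point is found on the merged histogram.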
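The two reduction steps can be pictured with a small single-process sketch. No real communication happens here; the "workers" are just lists, and the function names and sample values are hypothetical.

```python
def merge_histograms(local_histograms):
    """Data parallel: sum the per-worker histograms bin by bin.

    Each histogram is a list of (sum_grad, sum_hess) tuples, one per bin.
    """
    n_bins = len(local_histograms[0])
    merged = []
    for b in range(n_bins):
        g = sum(hist[b][0] for hist in local_histograms)
        h = sum(hist[b][1] for hist in local_histograms)
        merged.append((g, h))
    return merged


def pick_global_split(local_best_splits):
    """Feature parallel: keep the candidate with the largest gain.

    Each candidate is a (gain, feature_name, threshold) tuple that one
    worker found on its own feature subset.
    """
    return max(local_best_splits, key=lambda split: split[0])


worker_a = [(1.0, 2.0), (0.5, 1.0)]
worker_b = [(-1.0, 2.0), (0.25, 3.0)]
global_hist = merge_histograms([worker_a, worker_b])
print(global_hist)  # [(0.0, 4.0), (0.75, 4.0)]

best = pick_global_split([(0.8, "age", 30.0), (1.6, "income", 5.2)])
print(best)  # (1.6, 'income', 5.2)
```

In data parallel mode the traffic is proportional to the histogram size rather than the number of rows, which is why the histogram representation suits distributed training.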

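Handling imbalanced data

- Binary classification

  - is_unbalance=True: the ratio of the positive-class weight to the negative-class weight equals the number of negative samples divided by the number of positive samples.

  - Or set scale_pos_weight, which is the weight of the positive class; it can be set to number of negative samples / number of positive samples.

- Multiclass classification

  - class weight

- Custom focal loss【9】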

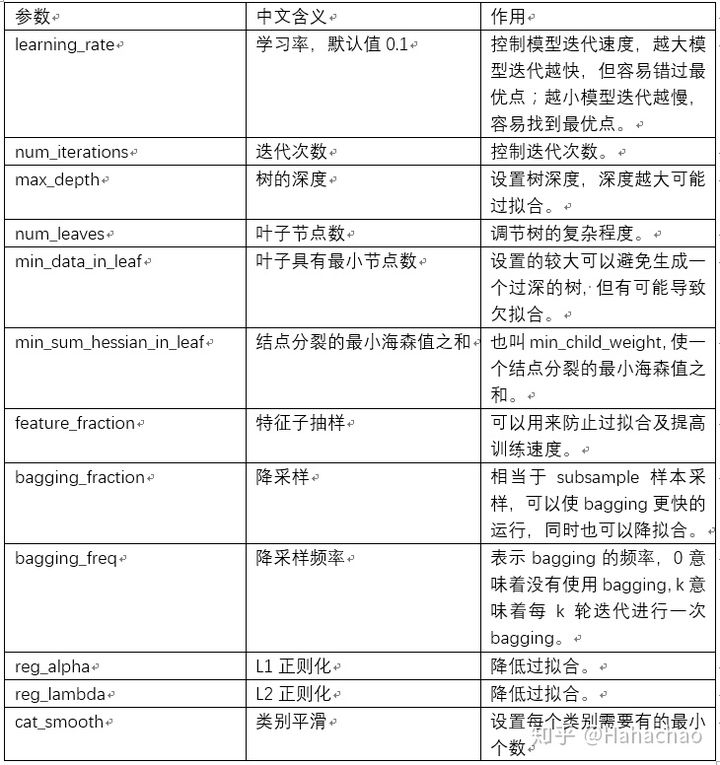
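For the binary case, the suggested ratio is easy to compute from the labels. A minimal sketch, assuming labels are encoded as 0 (negative) and 1 (positive); the helper name is made up for the example.

```python
from collections import Counter


def suggested_scale_pos_weight(labels):
    """Number of negative samples divided by number of positive samples."""
    counts = Counter(labels)
    return counts[0] / counts[1]


labels = [0] * 90 + [1] * 10
params = {
    "objective": "binary",
    "scale_pos_weight": suggested_scale_pos_weight(labels),
}
print(params["scale_pos_weight"])  # 9.0
```

is_unbalance and scale_pos_weight should not be set at the same time; pick one of the two.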

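Parameter tuning

Parameter description

Core parameters

- boosting / boost / boosting_type

  Specifies the boosting algorithm. The default is 'gbdt', which uses tree-based models. (The linear booster 'gblinear' is an XGBoost option, not a LightGBM one.)

  - 'gbdt': gradient boosted decision trees

  - 'rf': random forest

  - 'dart': not something I know well; the official description is Dropouts meet Multiple Additive Regression Trees

  - 'goss': gradient-based one-side sampling; very fast, but may underfit

- objective / application

  - 'regression': regression with L2 loss (the default)

  - 'regression_l1': regression with L1 loss

  - 'mape': mean absolute percentage error

  - 'binary': binary classification

  - 'multiclass': multiclass classification

- num_class

  The number of classes in a multiclass problem.

- Incremental training

  keep_training_booster=True # incremental training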

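Hyperparameters

Tuning

Tuning approach and directions

- Tree structure parameters

  - max_depth: 3-8

  - num_leaves: at most 2^(max_depth)

  - min_data_in_leaf

- Training speed parameters

  - learning_rate and n_estimators, used together with early stopping

  - max_bin: the number of bins per feature, 255 by default. Larger values are more accurate but overfit more easily; smaller values speed up training.

- Preventing overfitting

  - lambda_l1 and lambda_l2: L1 and L2 regularization, corresponding to XGBoost's reg_alpha and reg_lambda respectively.

  - min_gain_to_split: if the configured depth is large but no further split is possible, LightGBM prints a warning that it cannot find anything left to split, which suggests the data has been exploited to its limit. The parameter has the same meaning as XGBoost's gamma. A fairly conservative search range is (0, 20); it can serve as extra regularization in a large parameter grid.

  - bagging_fraction: the fraction of training samples used to train each tree

  - feature_fraction: the fraction of features sampled when training each tree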
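The relation between max_depth and num_leaves can be checked before training. A minimal sketch with a hypothetical helper `cap_num_leaves`; it relies on LightGBM's documented default num_leaves of 31 and on max_depth <= 0 meaning no depth limit.

```python
def cap_num_leaves(params):
    """Return a copy of params with num_leaves limited to 2 ** max_depth."""
    capped = dict(params)
    max_depth = capped.get("max_depth", -1)
    if max_depth > 0:  # max_depth <= 0 means no depth limit in LightGBM
        capped["num_leaves"] = min(capped.get("num_leaves", 31), 2 ** max_depth)
    return capped


print(cap_num_leaves({"max_depth": 4, "num_leaves": 64}))
# {'max_depth': 4, 'num_leaves': 16}
```

A tree of depth 4 cannot have more than 16 leaves, so a larger num_leaves adds nothing in that case. In practice values well below the bound are often used to limit overfitting.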

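Automatic tuning

Use Optuna and define the objective function to optimize:

- Define the dictionary of training parameters

- Build the model and train it

- Define the metric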

import lightgbm as lgbm
import numpy as np
import optuna  # pip install optuna
# Recent Optuna releases may need the separate optuna-integration package for this import
from optuna.integration import LightGBMPruningCallback
from sklearn.metrics import log_loss
from sklearn.model_selection import StratifiedKFold


def objective(trial, X, y):
    # Search space; X is assumed to be a pandas DataFrame and y a pandas Series
    param_grid = {
        "n_estimators": trial.suggest_categorical("n_estimators", [10000]),
        "learning_rate": trial.suggest_float("learning_rate", 0.01, 0.3),
        "num_leaves": trial.suggest_int("num_leaves", 20, 3000, step=20),
        "max_depth": trial.suggest_int("max_depth", 3, 12),
        "min_data_in_leaf": trial.suggest_int("min_data_in_leaf", 200, 10000, step=100),
        "max_bin": trial.suggest_int("max_bin", 200, 300),
        "lambda_l1": trial.suggest_int("lambda_l1", 0, 100, step=5),
        "lambda_l2": trial.suggest_int("lambda_l2", 0, 100, step=5),
        "min_gain_to_split": trial.suggest_float("min_gain_to_split", 0, 15),
        "bagging_fraction": trial.suggest_float(
            "bagging_fraction", 0.2, 0.95, step=0.1
        ),
        "bagging_freq": trial.suggest_categorical("bagging_freq", [1]),
        "feature_fraction": trial.suggest_float(
            "feature_fraction", 0.2, 0.95, step=0.1
        ),
    }

    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=1121218)
    cv_scores = np.empty(5)

    for idx, (train_idx, test_idx) in enumerate(cv.split(X, y)):
        X_train, X_test = X.iloc[train_idx], X.iloc[test_idx]
        y_train, y_test = y.iloc[train_idx], y.iloc[test_idx]

        model = lgbm.LGBMClassifier(objective="binary", **param_grid)
        model.fit(
            X_train,
            y_train,
            eval_set=[(X_test, y_test)],
            eval_metric="binary_logloss",
            callbacks=[
                # Early stopping as a callback: the early_stopping_rounds
                # argument of fit() was removed in LightGBM 4.0
                lgbm.early_stopping(stopping_rounds=100),
                LightGBMPruningCallback(trial, "binary_logloss"),
            ],
        )

        preds = model.predict_proba(X_test)
        # Metric to minimize: log loss
        cv_scores[idx] = log_loss(y_test, preds)

    return np.mean(cv_scores)

Tuning

study = optuna.create_study(direction="minimize", study_name="LGBM Classifier")

func = lambda trial: objective(trial, X, y)

study.optimize(func, n_trials=20)

Once the search finishes, read the best_value and best_params attributes to get the tuned parameters.

print(f"\tBest value (binary_logloss): {study.best_value:.5f}")
print("\tBest params:")
for key, value in study.best_params.items():
    print(f"\t\t{key}: {value}")

-----------------------------------------------------

Best value (binary_logloss): 0.35738

Best params:

device: gpu

lambda_l1: 7.71800699380605e-05

lambda_l2: 4.17890272377219e-06

bagging_fraction: 0.7000000000000001

feature_fraction: 0.4

bagging_freq: 5

max_depth: 5

num_leaves: 1007

min_data_in_leaf: 45

min_split_gain: 15.703519227860273

learning_rate: 0.010784015325759629

n_estimators: 10000

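With this parameter combination in hand, we can run the model, look at the results, and then fine-tune by hand, which saves a lot of time.

Feature importance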

lgb_clf.feature_importances_

references

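【1】A detailed look at LightGBM's two key techniques: Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB). https://zhuanlan.zhihu.com/p/366234433

【2】LightGBM parameters explained and how to tune them. https://cloud.tencent.com/developer/article/1696852

【3】LightGBM Chinese documentation. https://lightgbm.cn/

【4】Decision trees (part 2): XGBoost and LightGBM (very detailed). https://zhuanlan.zhihu.com/p/87885678

【5】http://www.showmeai.tech/article-detail/195

【6】https://zhuanlan.zhihu.com/p/99069186

【7】Why does LightGBM sort discrete categorical features by the sum of first-order gradients divided by the sum of second-order gradients of the samples in each category? Answer by 一直學習一直爽, Zhihu. https://www.zhihu.com/question/386888889/answer/1195897410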

【9】LightGBM with the Focal Loss for imbalanced datasets