Redis布隆防擊穿實戰

背景

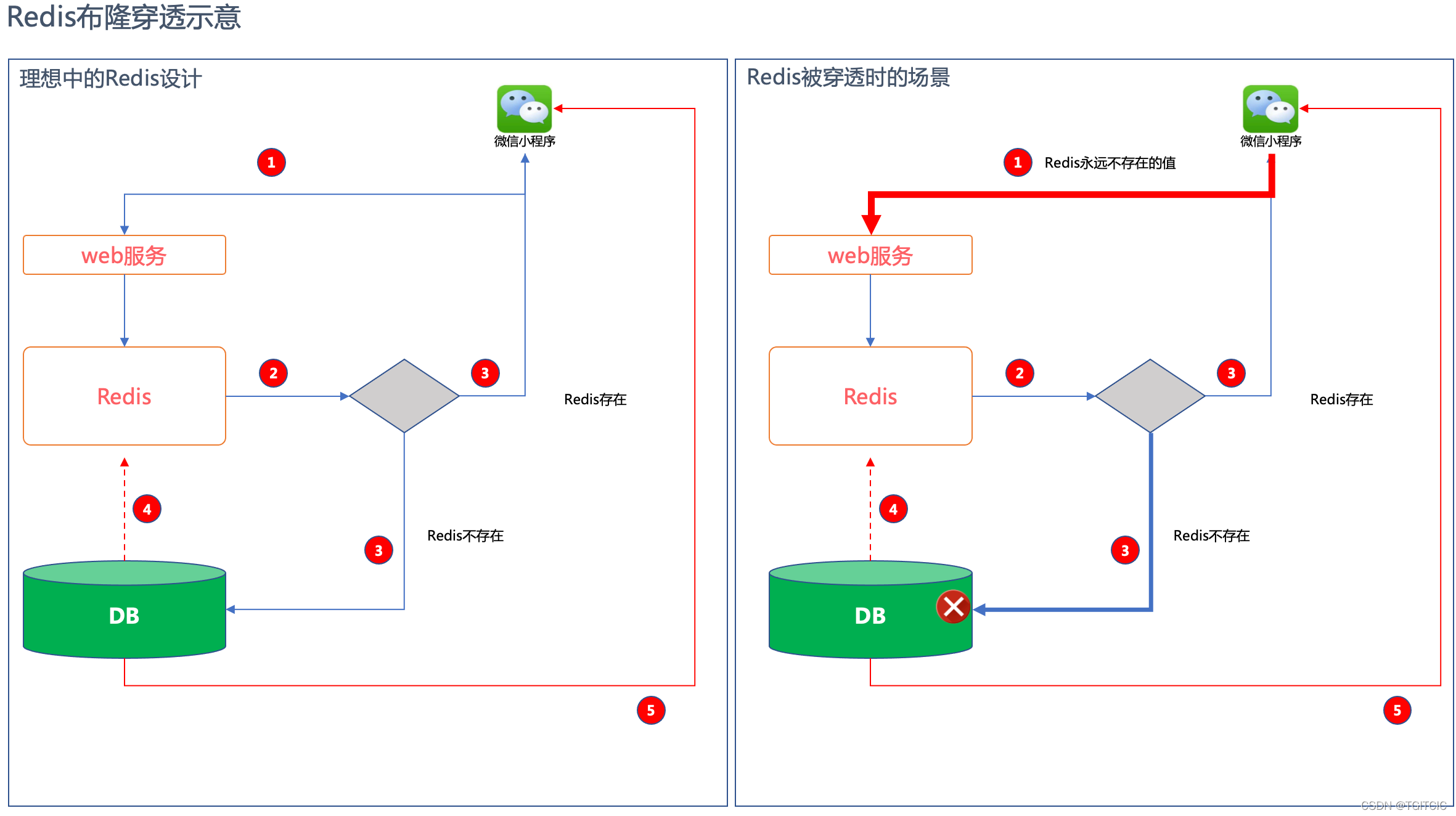

我們聖誕節在生產上碰到了每秒萬級並行,經過WAF結合相關紀錄檔分析我們發覺我們在小程式上有幾個介面被人氾濫了很利害。

而這幾個介面我們前端是使用了varnish來做快取的,理論上因該都是毫秒級返回的。不應該對生產由其首頁造成過多的壓力呀?

於是我們找了近百個使用者的實際請求,進行了「回放」,發覺這幾個請求的response time遠遠高於了我們的varnish對前端返回的速度。

於是我們進一步分析,發現問題出在了這幾個請求-都是get方法且問號(?)後面帶的引數的value竟然都不是我們站內所該有的商品、渠道、模組。而是隨機生成的且每次請求這些引數都不一樣。有些value竟然還傳進來了老K、S、皮蛋、丁勾、嘻嘻、哈哈。你有看到過sku_id傳一個哈哈或者helloworld嗎?真是古今中外之沒有過!

結合了WAF進行了進一步分析,我們發覺這些帶隨機產生系統不存在模組id、產品id的IP都只是存取一次性,但是在存取一次時這些請求的ip很多,多達5位數ip同時在某一個點一起來請求一次。這已經很明顯了,這是典型的黑產或者是爬蟲試圖繞開我們前端的varnish、並且進一步繞開了我們的redis、打中了db導致了首頁載入時DB壓力很大。

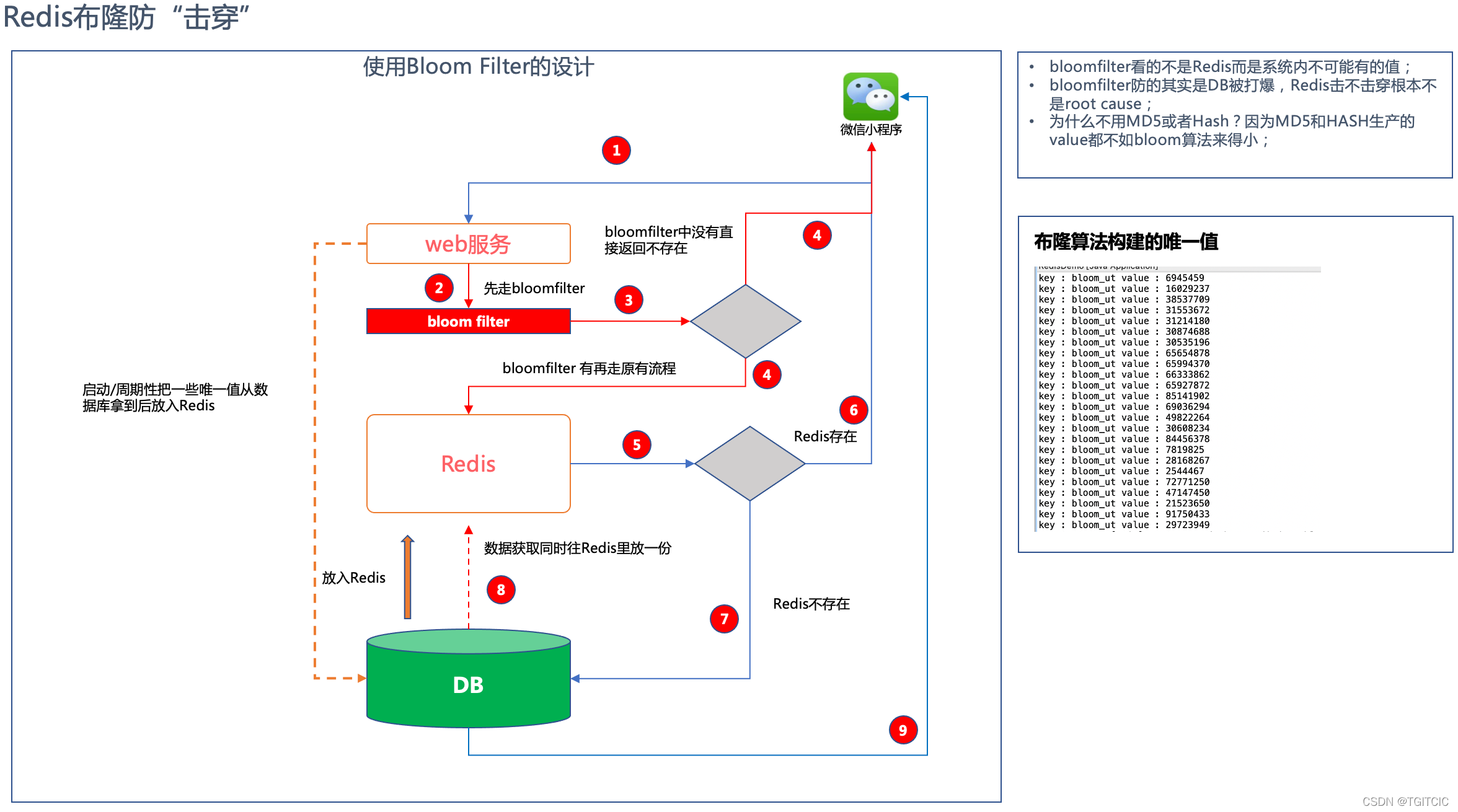

提出改進

黑產、一些資料公司、爬蟲,它們本身擁有的IP是大量的。它們根本不需要高頻來做網站資料的爬取或者是惡意攻擊,它們只要發動6位數甚至7位數的IP每隔幾秒來存取一下你的網站,你的網站就扛不住了。

因此從業務邏輯判斷,我們說這個商品資料,它有一個product_id的,算你10萬個sku不得了了吧,如果來存取時帶著的sku_id在系統中都不存在,這種存取你要它幹什麼呢?

因此我們得到了相應的防擊穿解決方案如下

我們在上一篇SpringBoot+Redis布隆過濾器防惡意流量擊穿快取的正確姿勢中給出的程式碼是依賴於redis本身要load布隆過濾器模組的。

而這次我們堅持使用雲原生,直接用google的guava的工具類使用的bloom演演算法然後把它用setBit存入redis中。因為如果單純的使用guava的話,應用在重新啟動後記憶體中的bloom filter的內容會被清空,因此我們很好的結合了guava的演演算法以及使用redis來做儲存媒介這一手法而不需要像我在上一篇中,要給redis裝bloom filter外掛。

畢竟,在生產上的redis你給他裝外掛,是件很誇張的事。同時事出緊急,給到我們的反應只有20分鐘時間,因此我們需要馬上上一套程式碼來對這樣的惡意請求做攔截,否則小程式應用的首頁是扛不住的,因此我們再次使用了這種「聰明」的手法來做實施。

下面給出工程程式碼。

生產上用的全程式碼(我的開源版比生產程式碼要用的新和更強)^_^

application_local.yml

server:

port: 9080

tomcat:

max-http-post-size: -1

max-http-header-size: 10240000

spring:

application:

name: redis-demo

servlet:

multipart:

max-file-size: 10MB

max-request-size: 10MB

redis:

password: 111111

sentinel:

nodes: localhost:27001,localhost:27002,localhost:27003

master: master1

database: 0

switchFlag: 1

lettuce:

pool:

max-active: 50

max-wait: 10000

max-idle: 10

min-idl: 5

shutdown-timeout: 2000

timeBetweenEvictionRunsMillis: 5000

timeout: 5000

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.mk.demo</groupId>

<artifactId>springboot-demo</artifactId>

<version>0.0.1</version>

</parent>

<artifactId>redis-demo</artifactId>

<name>rabbitmq-demo</name>

<packaging>jar</packaging>

<dependencies>

<dependency>

<groupId>com.auth0</groupId>

<artifactId>java-jwt</artifactId>

</dependency>

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-crypto</artifactId>

</dependency>

<!-- redis must -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- jedis must -->

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

</dependency>

<!--redisson must start -->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-boot-starter</artifactId>

<version>3.13.6</version>

<exclusions>

<exclusion>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-data-23</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

</dependency>

<!--redisson must end -->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-data-21</artifactId>

<version>3.13.1</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-thymeleaf</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-configuration-processor</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-log4j2</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.aspectj</groupId>

<artifactId>aspectjweaver</artifactId>

</dependency>

<dependency>

<groupId>com.lmax</groupId>

<artifactId>disruptor</artifactId>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

</dependency>

<dependency>

<groupId>org.aspectj</groupId>

<artifactId>aspectjweaver</artifactId>

</dependency>

<dependency>

<groupId>com.lmax</groupId>

<artifactId>disruptor</artifactId>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

</dependency>

</dependencies>

</project>給出幾個關鍵的pom.xml中使用的parent的資訊吧

不要抄網上的,大部分都是錯的。一定記得這邊的spring boot的版本、和guava的版本、redis的版本、redission的版本、jackson的版本是有嚴格講究的,log4j相關的內容根據我之前的部落格自行替換成2.7.1好了(目前apache log4j2.7.1是比較安全的版本)

<properties>

<java.version>1.8</java.version>

<jacoco.version>0.8.3</jacoco.version>

<aldi-sharding.version>0.0.1</aldi-sharding.version>

<!-- <spring-boot.version>2.4.2</spring-boot.version> -->

<spring-boot.version>2.3.1.RELEASE</spring-boot.version>

<!-- spring-boot.version>2.0.6.RELEASE</spring-boot.version> <spring-cloud-zk-discovery.version>2.1.3.RELEASE</spring-cloud-zk-discovery.version -->

<zookeeper.version>3.4.13</zookeeper.version>

<spring-cloud.version>Greenwich.SR5</spring-cloud.version>

<dubbo.version>2.7.3</dubbo.version>

<curator-framework.version>4.0.1</curator-framework.version>

<curator-recipes.version>2.8.0</curator-recipes.version>

<!-- druid.version>1.1.20</druid.version -->

<druid.version>1.2.6</druid.version>

<guava.version>27.0.1-jre</guava.version>

<fastjson.version>1.2.59</fastjson.version>

<dubbo-registry-nacos.version>2.7.3</dubbo-registry-nacos.version>

<nacos-client.version>1.1.4</nacos-client.version>

<!-- mysql-connector-java.version>8.0.13</mysql-connector-java.version -->

<mysql-connector-java.version>5.1.46</mysql-connector-java.version>

<disruptor.version>3.4.2</disruptor.version>

<aspectj.version>1.8.13</aspectj.version>

<spring.data.redis>1.8.14-RELEASE</spring.data.redis>

<seata.version>1.0.0</seata.version>

<netty.version>4.1.42.Final</netty.version>

<nacos.spring.version>0.1.4</nacos.spring.version>

<lombok.version>1.16.22</lombok.version>

<javax.servlet.version>3.1.0</javax.servlet.version>

<mybatis.version>2.1.0</mybatis.version>

<pagehelper-mybatis.version>1.2.3</pagehelper-mybatis.version>

<spring.kafka.version>1.3.10.RELEASE</spring.kafka.version>

<kafka.client.version>1.0.2</kafka.client.version>

<shardingsphere.jdbc.version>4.0.0</shardingsphere.jdbc.version>

<xmemcached.version>2.4.6</xmemcached.version>

<swagger.version>2.9.2</swagger.version>

<swagger.bootstrap.ui.version>1.9.6</swagger.bootstrap.ui.version>

<swagger.model.version>1.5.23</swagger.model.version>

<swagger-annotations.version>1.5.22</swagger-annotations.version>

<swagger-models.version>1.5.22</swagger-models.version>

<swagger-bootstrap-ui.version>1.9.5</swagger-bootstrap-ui.version>

<sky-sharding-jdbc.version>0.0.1</sky-sharding-jdbc.version>

<cxf.version>3.1.6</cxf.version>

<jackson-databind.version>2.11.1</jackson-databind.version>

<gson.version>2.8.6</gson.version>

<groovy.version>2.5.8</groovy.version>

<logback-ext-spring.version>0.1.4</logback-ext-spring.version>

<jcl-over-slf4j.version>1.7.25</jcl-over-slf4j.version>

<spock-spring.version>2.0-M2-groovy-2.5</spock-spring.version>

<xxljob.version>2.2.0</xxljob.version>

<java-jwt.version>3.10.0</java-jwt.version>

<commons-lang.version>2.6</commons-lang.version>

<hutool-crypto.version>5.0.0</hutool-crypto.version>

<maven.compiler.source>${java.version}</maven.compiler.source>

<maven.compiler.target>${java.version}</maven.compiler.target>

<compiler.plugin.version>3.8.1</compiler.plugin.version>

<war.plugin.version>3.2.3</war.plugin.version>

<jar.plugin.version>3.1.1</jar.plugin.version>

<quartz.version>2.2.3</quartz.version>

<h2.version>1.4.197</h2.version>

<zkclient.version>3.4.14</zkclient.version>

<httpcore.version>4.4.10</httpcore.version>

<httpclient.version>4.5.6</httpclient.version>

<mockito-core.version>3.0.0</mockito-core.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<oseq-aldi.version>2.0.22-RELEASE</oseq-aldi.version>

<poi.version>4.1.0</poi.version>

<poi-ooxml.version>4.1.0</poi-ooxml.version>

<poi-ooxml-schemas.version>4.1.0</poi-ooxml-schemas.version>

<dom4j.version>1.6.1</dom4j.version>

<xmlbeans.version>3.1.0</xmlbeans.version>

<java-jwt.version>3.10.0</java-jwt.version>

<commons-lang.version>2.6</commons-lang.version>

<hutool-crypto.version>5.0.0</hutool-crypto.version>

<nacos-discovery.version>2.2.5.RELEASE</nacos-discovery.version>

<spring-cloud-alibaba.version>2.2.1.RELEASE</spring-cloud-alibaba.version>

<redission.version>3.16.1</redission.version>

</properties>然後是自動裝配類

BloomFilterHelper

package org.mk.demo.redisdemo.bloomfilter;

import com.google.common.base.Preconditions;

import com.google.common.hash.Funnel;

import com.google.common.hash.Hashing;

public class BloomFilterHelper<T> {

private int numHashFunctions;

private int bitSize;

private Funnel<T> funnel;

public BloomFilterHelper(Funnel<T> funnel, int expectedInsertions, double fpp) {

Preconditions.checkArgument(funnel != null, "funnel不能為空");

this.funnel = funnel;

// 計算bit陣列長度

bitSize = optimalNumOfBits(expectedInsertions, fpp);

// 計算hash方法執行次數

numHashFunctions = optimalNumOfHashFunctions(expectedInsertions, bitSize);

}

public int[] murmurHashOffset(T value) {

int[] offset = new int[numHashFunctions];

long hash64 = Hashing.murmur3_128().hashObject(value, funnel).asLong();

int hash1 = (int) hash64;

int hash2 = (int) (hash64 >>> 32);

for (int i = 1; i <= numHashFunctions; i++) {

int nextHash = hash1 + i * hash2;

if (nextHash < 0) {

nextHash = ~nextHash;

}

offset[i - 1] = nextHash % bitSize;

}

return offset;

}

/**

* 計算bit陣列長度

*/

private int optimalNumOfBits(long n, double p) {

if (p == 0) {

// 設定最小期望長度

p = Double.MIN_VALUE;

}

int sizeOfBitArray = (int) (-n * Math.log(p) / (Math.log(2) * Math.log(2)));

return sizeOfBitArray;

}

/**

* 計算hash方法執行次數

*/

private int optimalNumOfHashFunctions(long n, long m) {

int countOfHash = Math.max(1, (int) Math.round((double) m / n * Math.log(2)));

return countOfHash;

}

}

RedisBloomFilter自動裝配類

package org.mk.demo.redisdemo.bloomfilter;

import com.google.common.base.Preconditions;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.stereotype.Service;

@Service

public class RedisBloomFilter {

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Autowired

private RedisTemplate redisTemplate;

/**

* 根據給定的布隆過濾器新增值

*/

public <T> void addByBloomFilter(BloomFilterHelper<T> bloomFilterHelper, String key, T value) {

Preconditions.checkArgument(bloomFilterHelper != null, "bloomFilterHelper不能為空");

int[] offset = bloomFilterHelper.murmurHashOffset(value);

for (int i : offset) {

logger.info(">>>>>>add into bloom filter->key : " + key + " " + "value : " + i);

redisTemplate.opsForValue().setBit(key, i, true);

}

}

/**

* 根據給定的布隆過濾器判斷值是否存在

*/

public <T> boolean includeByBloomFilter(BloomFilterHelper<T> bloomFilterHelper, String key, T value) {

Preconditions.checkArgument(bloomFilterHelper != null, "bloomFilterHelper不能為空");

int[] offset = bloomFilterHelper.murmurHashOffset(value);

for (int i : offset) {

logger.info(">>>>>>check key from bloomfilter : " + key + " " + "value : " + i);

if (!redisTemplate.opsForValue().getBit(key, i)) {

return false;

}

}

return true;

}

}

RedisSentinelConfig-Redis核心設定類

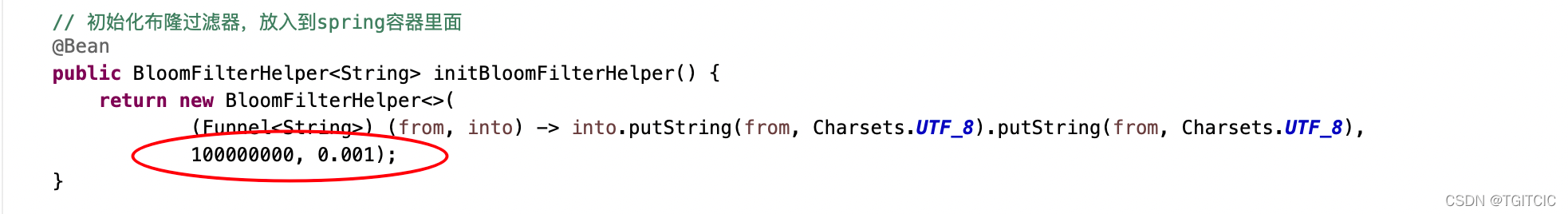

在裡面我申明瞭一個BloomFilterHelper<String>返回型別的initBloomFilterHelper方法

package org.mk.demo.redisdemo.config;

import com.fasterxml.jackson.annotation.JsonAutoDetect;

import com.fasterxml.jackson.annotation.PropertyAccessor;

import com.fasterxml.jackson.databind.ObjectMapper;

import redis.clients.jedis.HostAndPort;

import org.apache.commons.lang3.StringUtils;

import org.apache.commons.pool2.impl.GenericObjectPoolConfig;

import org.mk.demo.redisdemo.bloomfilter.BloomFilterHelper;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.autoconfigure.condition.ConditionalOnMissingBean;

import org.springframework.cache.annotation.EnableCaching;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.data.redis.connection.*;

import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;

import org.springframework.data.redis.connection.lettuce.LettucePoolingClientConfiguration;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;

import org.springframework.data.redis.serializer.StringRedisSerializer;

import org.springframework.stereotype.Component;

import java.time.Duration;

import java.util.LinkedHashSet;

import java.util.Set;

import com.google.common.base.Charsets;

import com.google.common.hash.Funnel;

@Configuration

@EnableCaching

@Component

public class RedisSentinelConfig {

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Value("${spring.redis.nodes:localhost:7001}")

private String nodes;

@Value("${spring.redis.max-redirects:3}")

private Integer maxRedirects;

@Value("${spring.redis.password}")

private String password;

@Value("${spring.redis.database:0}")

private Integer database;

@Value("${spring.redis.timeout}")

private int timeout;

@Value("${spring.redis.sentinel.nodes}")

private String sentinel;

@Value("${spring.redis.lettuce.pool.max-active:8}")

private Integer maxActive;

@Value("${spring.redis.lettuce.pool.max-idle:8}")

private Integer maxIdle;

@Value("${spring.redis.lettuce.pool.max-wait:-1}")

private Long maxWait;

@Value("${spring.redis.lettuce.pool.min-idle:0}")

private Integer minIdle;

@Value("${spring.redis.sentinel.master}")

private String master;

@Value("${spring.redis.switchFlag}")

private String switchFlag;

@Value("${spring.redis.lettuce.pool.shutdown-timeout}")

private Integer shutdown;

@Value("${spring.redis.lettuce.pool.timeBetweenEvictionRunsMillis}")

private long timeBetweenEvictionRunsMillis;

public String getSwitchFlag() {

return switchFlag;

}

/**

* 連線池設定資訊

*

* @return

*/

@Bean

public LettucePoolingClientConfiguration getPoolConfig() {

GenericObjectPoolConfig config = new GenericObjectPoolConfig();

config.setMaxTotal(maxActive);

config.setMaxWaitMillis(maxWait);

config.setMaxIdle(maxIdle);

config.setMinIdle(minIdle);

config.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

LettucePoolingClientConfiguration pool = LettucePoolingClientConfiguration.builder().poolConfig(config)

.commandTimeout(Duration.ofMillis(timeout)).shutdownTimeout(Duration.ofMillis(shutdown)).build();

return pool;

}

/**

* 設定 Redis Cluster 資訊

*/

@Bean

@ConditionalOnMissingBean

public LettuceConnectionFactory lettuceConnectionFactory() {

LettuceConnectionFactory factory = null;

String[] split = nodes.split(",");

Set<HostAndPort> nodes = new LinkedHashSet<>();

for (int i = 0; i < split.length; i++) {

try {

String[] split1 = split[i].split(":");

nodes.add(new HostAndPort(split1[0], Integer.parseInt(split1[1])));

} catch (Exception e) {

logger.error(">>>>>>出現設定錯誤!請確認: " + e.getMessage(), e);

throw new RuntimeException(String.format("出現設定錯誤!請確認node=[%s]是否正確", nodes));

}

}

// 如果是哨兵的模式

if (!StringUtils.isEmpty(sentinel)) {

logger.info(">>>>>>Redis use SentinelConfiguration");

RedisSentinelConfiguration redisSentinelConfiguration = new RedisSentinelConfiguration();

String[] sentinelArray = sentinel.split(",");

for (String s : sentinelArray) {

try {

String[] split1 = s.split(":");

redisSentinelConfiguration.addSentinel(new RedisNode(split1[0], Integer.parseInt(split1[1])));

} catch (Exception e) {

logger.error(">>>>>>出現設定錯誤!請確認: " + e.getMessage(), e);

throw new RuntimeException(String.format("出現設定錯誤!請確認node=[%s]是否正確", sentinelArray));

}

}

redisSentinelConfiguration.setMaster(master);

redisSentinelConfiguration.setPassword(password);

factory = new LettuceConnectionFactory(redisSentinelConfiguration, getPoolConfig());

}

// 如果是單個節點 用Standalone模式

else {

if (nodes.size() < 2) {

logger.info(">>>>>>Redis use RedisStandaloneConfiguration");

for (HostAndPort n : nodes) {

RedisStandaloneConfiguration redisStandaloneConfiguration = new RedisStandaloneConfiguration();

if (!StringUtils.isEmpty(password)) {

redisStandaloneConfiguration.setPassword(RedisPassword.of(password));

}

redisStandaloneConfiguration.setPort(n.getPort());

redisStandaloneConfiguration.setHostName(n.getHost());

factory = new LettuceConnectionFactory(redisStandaloneConfiguration, getPoolConfig());

}

} else {

logger.info(">>>>>>Redis use RedisClusterConfiguration");

RedisClusterConfiguration redisClusterConfiguration = new RedisClusterConfiguration();

nodes.forEach(n -> {

redisClusterConfiguration.addClusterNode(new RedisNode(n.getHost(), n.getPort()));

});

if (!StringUtils.isEmpty(password)) {

redisClusterConfiguration.setPassword(RedisPassword.of(password));

}

redisClusterConfiguration.setMaxRedirects(maxRedirects);

factory = new LettuceConnectionFactory(redisClusterConfiguration, getPoolConfig());

}

}

return factory;

}

@Bean

public RedisTemplate<String, Object> redisTemplate(LettuceConnectionFactory lettuceConnectionFactory) {

RedisTemplate<String, Object> template = new RedisTemplate<>();

template.setConnectionFactory(lettuceConnectionFactory);

Jackson2JsonRedisSerializer jacksonSerial = new Jackson2JsonRedisSerializer<>(Object.class);

ObjectMapper om = new ObjectMapper();

// 指定要序列化的域,field,get和set,以及修飾符範圍,ANY是都有包括private和public

om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);

jacksonSerial.setObjectMapper(om);

StringRedisSerializer stringSerial = new StringRedisSerializer();

template.setKeySerializer(stringSerial);

// template.setValueSerializer(stringSerial);

template.setValueSerializer(jacksonSerial);

template.setHashKeySerializer(stringSerial);

template.setHashValueSerializer(jacksonSerial);

template.afterPropertiesSet();

return template;

}

// 初始化布隆過濾器,放入到spring容器裡面

@Bean

public BloomFilterHelper<String> initBloomFilterHelper() {

return new BloomFilterHelper<>(

(Funnel<String>) (from, into) -> into.putString(from, Charsets.UTF_8).putString(from, Charsets.UTF_8),

100000000, 0.001);

}

}

程式碼的使用

布降過濾器記得只可以追加或者刪除後重新喂資料。

在生產上我們可以這麼幹,以兩個例子來告訴大家如何操作。

例子一、對於所有的在活動日期開始前的劍客級會員進行防護

設活動開始日期為2022年1月1號元旦,在此之前所有的劍客級會員需要進入防護。

於是我們寫一個JOB,一次性把上千萬會員一次load進布隆過濾器內。然後在1月1號早上9:00活動開始生效時,只要帶著系統內不存在的會員token如:ut這個值進來存取,全部在bloom filter一層就把請求給擋掉了。

例子二、對於所有的類目中的sku_id進行防護。

設小程式或者是前端app的商品類目有16類,每類有1千種sku,差不多有12萬的sku。這些sku會伴隨著每天會有那麼2-3次的上下架操作。那麼我們會做一個job,這個job在5分鐘內執行一下。從資料庫內撈出所有「上架狀態」的sku,喂入bloom filter。喂入前先把bloom filter的這個key刪了。當然,為了做到更精準,我們會使用mq非同步,上下架全完成了後點一下【生效】這個按鈕後,緊接著一條MQ通知一個Service先刪除原來的bloom filter裡的key再喂入全量的sku_id,全過程不地秒級內完成。

下面就以例子1來看業務程式碼的實現。在此,我們做了一個Service,它在應用一啟動時裝載所有的使用者的token。

UTBloomFilterInit

package org.mk.demo.redisdemo.bloomfilter;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.ApplicationArguments;

import org.springframework.boot.ApplicationRunner;

import org.springframework.core.annotation.Order;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.stereotype.Component;

@Component

@Order(1) // 如果有多個類實現了ApplicationRunner介面,可以使用此註解指定執行順序

public class UTBloomFilterInit implements ApplicationRunner {

private final static String BLOOM_UT = "bloom_ut";

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Autowired

private BloomFilterHelper bloomFilterHelper;

@Autowired

private RedisBloomFilter redisBloomFilter;

@Autowired

private RedisTemplate redisTemplate;

@Override

public void run(ApplicationArguments args) throws Exception {

try {

if (redisTemplate.hasKey(BLOOM_UT)) {

logger.info(">>>>>>bloom filter key->" + BLOOM_UT + " existed, delete it first then init");

redisTemplate.delete(BLOOM_UT);

}

for (int i = 0; i < 10000; i++) {

StringBuffer ut = new StringBuffer();

ut.append("ut_");

ut.append(i);

redisBloomFilter.addByBloomFilter(bloomFilterHelper, BLOOM_UT, ut.toString());

}

logger.info(">>>>>>init ut into redis bloom successfully");

} catch (Exception e) {

logger.info(">>>>>>init ut into redis bloom failed:" + e.getMessage(), e);

}

}

}

這個Service會在spring boot啟動時模擬載入全量的使用者進到布隆過濾器中。

RedisBloomController

package org.mk.demo.redisdemo.controller;

import org.mk.demo.redisdemo.bean.UserBean;

import org.mk.demo.redisdemo.bloomfilter.BloomFilterHelper;

import org.mk.demo.redisdemo.bloomfilter.RedisBloomFilter;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.PostMapping;

import org.springframework.web.bind.annotation.RequestBody;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.ResponseBody;

import org.springframework.web.bind.annotation.RestController;

@RestController

@RequestMapping("demo")

public class RedisBloomController {

private final static String BLOOM_UT = "bloom_ut";

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Autowired

private BloomFilterHelper bloomFilterHelper;

@Autowired

private RedisBloomFilter redisBloomFilter;

@PostMapping(value = "/redis/checkBloom", produces = "application/json")

@ResponseBody

public String check(@RequestBody UserBean user) {

try {

boolean b = redisBloomFilter.includeByBloomFilter(bloomFilterHelper, BLOOM_UT, user.getUt());

if (b) {

return "existed";

} else {

return "not existed";

}

} catch (Exception e) {

logger.error(">>>>>>init bloom error: " + e.getMessage(), e);

return "check error";

}

}

}

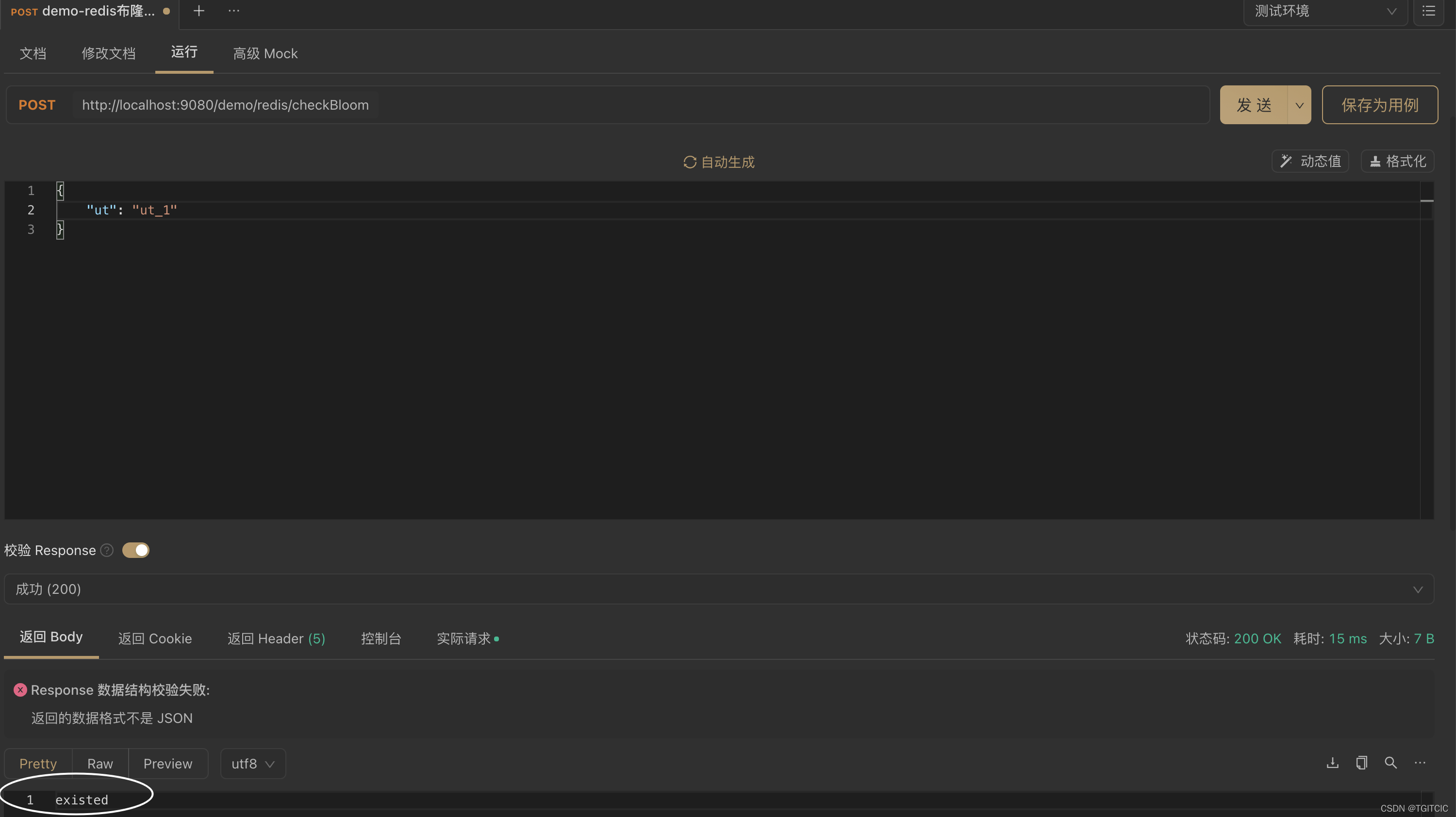

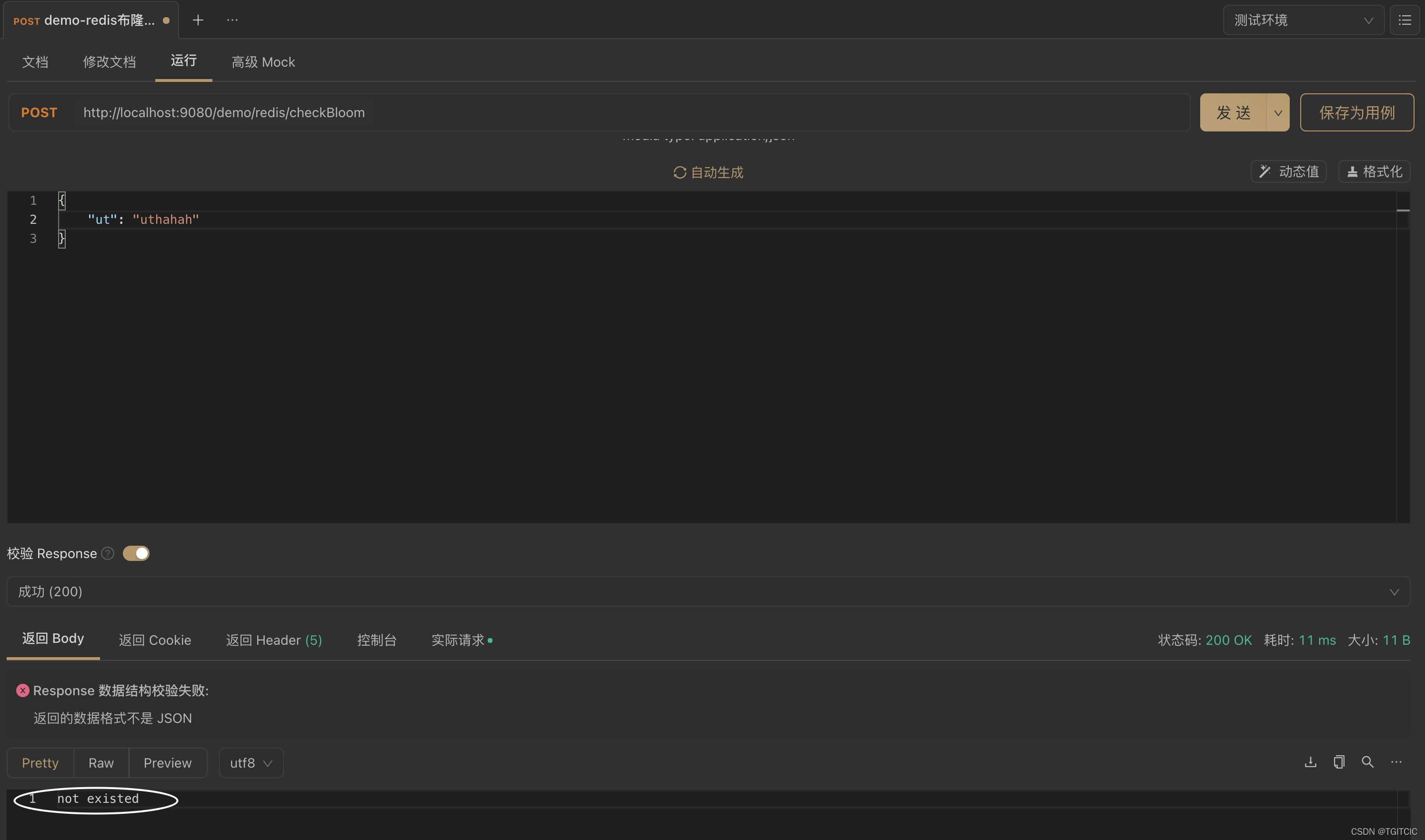

測試業務攔截

我們使用布隆過濾器中存在的ut來存取,得到如下結果

我們使用布隆過濾器中不存在的ut來存取,得到如下結果

這種判斷都是在3,000並行下毫秒級別響應

總結

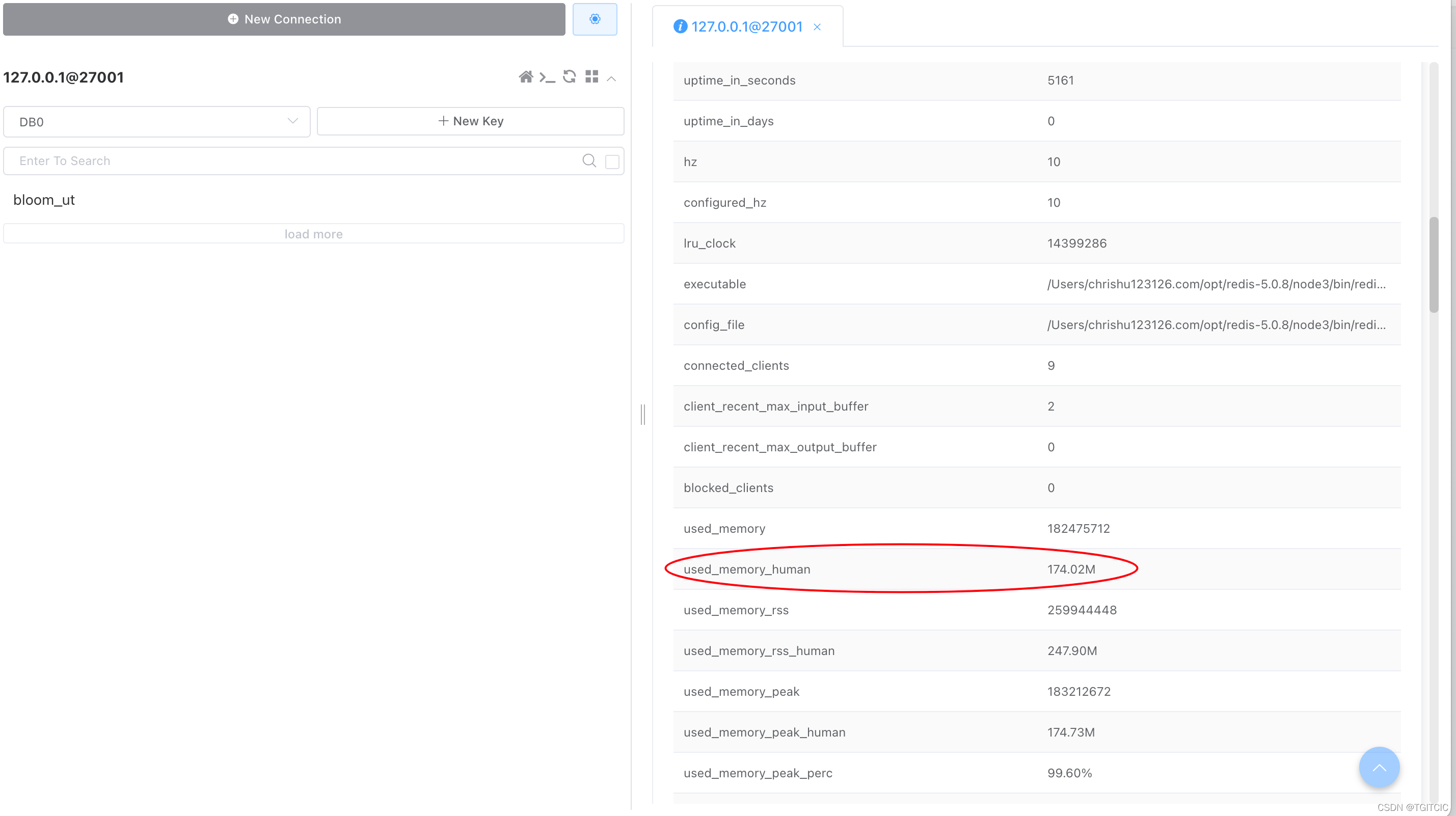

事實上生產我們可以load上億條資料進入bloom過濾器。而bloom的大小遠遠比hash或者是md5要小、且幾乎不重複。

布隆內資料的大小依賴於這兩個值來決定的:

這兩個值解讀為億條資料內,出錯(遺漏)精度在萬分之1.布隆過濾器返回false,100%可以認為不存在。精度越高redis記憶體儲的空間所需越大。它是一次性劃分掉的,並不是現在只有1萬條因此需要200k下次變成了10萬條因此需要12兆。我們看一下億條資料萬分之一精度在redis內佔用多少資源吧。

億條資料不過200兆,這對生產Redis來說太小case了。

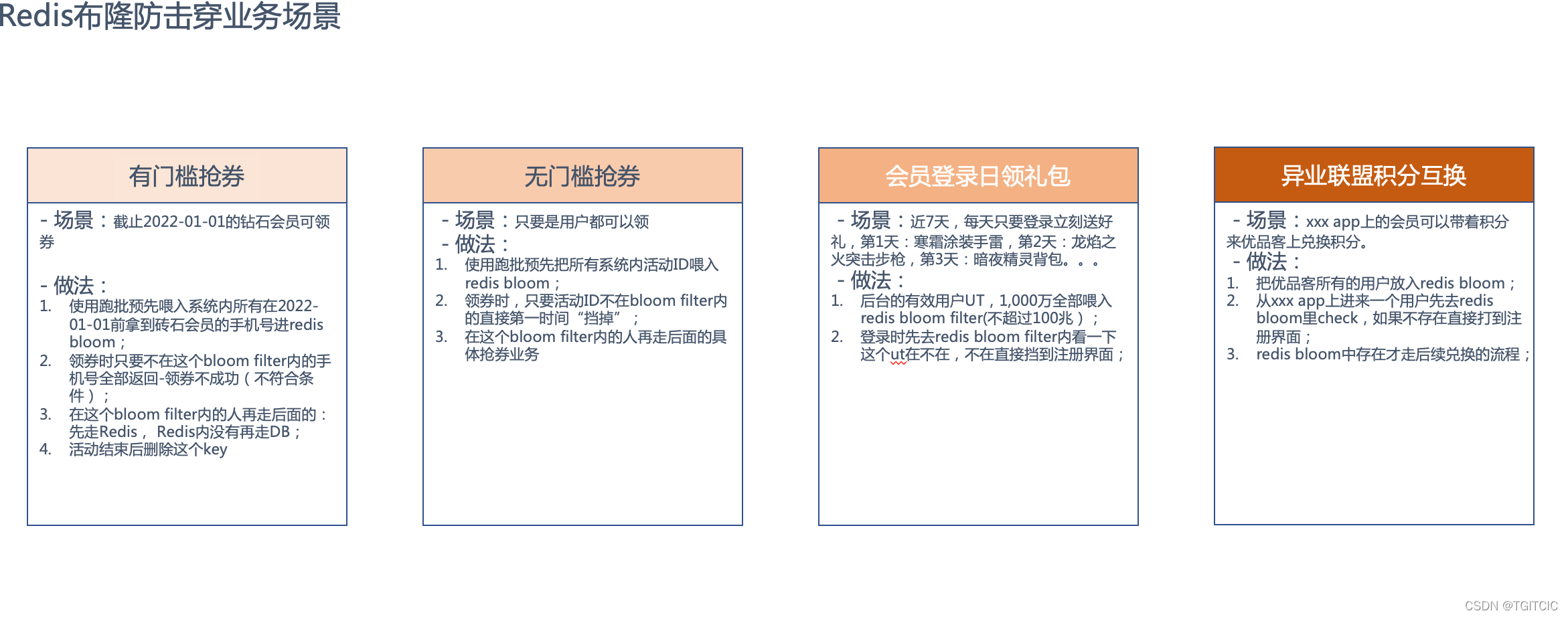

布隆過濾器的常用場景的介紹這邊也順便給大家梳理一下