[Kaggle] Digit Recognizer: Handwritten Digit Recognition (Convolutional Neural Network)

2020-10-14 13:01:02

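Related posts:

[Hands On ML] 3. Classification (MNIST handwritten digit prediction)

[Kaggle] Digit Recognizer: Handwritten Digit Recognition

[Kaggle] Digit Recognizer: Handwritten Digit Recognition (Simple Neural Network)

04. Convolutional Neural Networks W1. Convolutional Neural Networks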

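The simple neural network in the previous post flattened each 28*28 image, so the spatial relationships between pixels were ignored and the spatial information was lost.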
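To make the loss of spatial information concrete, here is a small sketch in plain Python (not from the original post) of where neighbouring pixels end up once a 28*28 image is flattened row by row. The helper name `flat_index` is hypothetical.

```python
# Row-major flattening of a 28x28 image: pixel (row, col) -> row * 28 + col.
WIDTH = 28

def flat_index(row, col, width=WIDTH):
    """Index of pixel (row, col) in the flattened 784-element vector."""
    return row * width + col

center = flat_index(10, 10)
right = flat_index(10, 11)   # horizontal neighbour
below = flat_index(11, 10)   # vertical neighbour

print(right - center)  # 1: still adjacent after flattening
print(below - center)  # 28: vertical neighbours end up 28 positions apart
```

A dense layer sees only the flat vector, so nothing tells it that positions 28 apart were touching in the image. A convolution slides a small kernel over the 2D grid and keeps that neighbourhood structure.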

1. Predicting with LeNet

LeNet neural network: reference post

1.1 Import packages

from keras import backend as K # for code that works across different backends

from keras.models import Sequential

from keras.layers.convolutional import Conv2D

from keras.layers.convolutional import MaxPooling2D

from keras.layers.core import Activation

from keras.layers.core import Dense

from keras.layers.core import Flatten

from keras.utils import np_utils

from keras.optimizers import SGD, Adam, RMSprop

import numpy as np

%matplotlib inline

import matplotlib.pyplot as plt

import pandas as pd

1.2 Build the LeNet model

# Image data format

# K.image_data_format() == 'channels_last'

# channels_last is the default; in older Keras: K.set_image_dim_ordering("tf")

# K.image_data_format() == 'channels_first' # in older Keras: K.set_image_dim_ordering("th")

class LeNet:

    @staticmethod

    def build(input_shape, classes):

        model = Sequential()

        model.add(Conv2D(20,kernel_size=5,padding='same',

                         input_shape=input_shape,activation='relu'))

        model.add(MaxPooling2D(pool_size=(2,2),strides=(2,2)))

        model.add(Conv2D(50,kernel_size=5,padding='same',activation='relu'))

        model.add(MaxPooling2D(pool_size=(2,2),strides=(2,2)))

        model.add(Flatten())

        model.add(Dense(500, activation='relu'))

        model.add(Dense(classes,activation='softmax'))

        return model

1.3 Load the data

train = pd.read_csv('train.csv')

y_train_full = train['label']

X_train_full = train.drop(['label'], axis=1)

X_test_full = pd.read_csv('test.csv')

X_train_full.shape

Output:

(42000, 784)

- Convert the data format and add a channel dimension

X_train = np.array(X_train_full).reshape(-1,28,28) / 255.0

X_test = np.array(X_test_full).reshape(-1,28,28)/255.0

y_train = np_utils.to_categorical(y_train_full, 10) # convert to one-hot encoding

X_train = X_train[:, :, :, np.newaxis]

# (m, 28, 28) --> (m, 28, 28, 1), single channel

X_test = X_test[:, :, :, np.newaxis]

1.4 Define the model

model = LeNet.build(input_shape=(28, 28, 1), classes=10)

- Define the optimizer and compile the model

opt = Adam(learning_rate=0.001, beta_1=0.9, beta_2=0.999, decay=0.01)

model.compile(loss="categorical_crossentropy",

optimizer=opt, metrics=["accuracy"])

Note: if the labels are not one-hot encoded (plain integer class indices), use loss="sparse_categorical_crossentropy" here instead.
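The two losses measure the same thing and differ only in how the target is written. The sketch below is plain Python, not the Keras implementation: it computes the per-sample loss both ways for a made-up 3-class prediction and ignores details such as clipping and batch averaging.

```python
import math

def categorical_crossentropy(one_hot, probs):
    """Cross-entropy for a one-hot target vector."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs))

def sparse_categorical_crossentropy(label, probs):
    """Same loss, but the target is an integer class index."""
    return -math.log(probs[label])

probs = [0.1, 0.7, 0.2]    # made-up softmax output for 3 classes
label = 1                   # integer label
one_hot = [0.0, 1.0, 0.0]   # the same label, one-hot encoded

loss_dense = categorical_crossentropy(one_hot, probs)
loss_sparse = sparse_categorical_crossentropy(label, probs)
print(loss_dense, loss_sparse)  # both equal -log(0.7)
```

With integer labels you can skip the `to_categorical` step entirely, which also saves memory when there are many classes.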

1.5 Training

history = model.fit(X_train, y_train, epochs=20, batch_size=128,

validation_split=0.2)

Epoch 1/20

263/263 [==============================] - 26s 98ms/step -

loss: 0.2554 - accuracy: 0.9235 -

val_loss: 0.0983 - val_accuracy: 0.9699

Epoch 2/20

263/263 [==============================] - 27s 103ms/step -

loss: 0.0806 - accuracy: 0.9761 -

val_loss: 0.0664 - val_accuracy: 0.9787

...

...

Epoch 20/20

263/263 [==============================] - 25s 97ms/step -

loss: 0.0182 - accuracy: 0.9953 -

val_loss: 0.0405 - val_accuracy: 0.9868

By the end of epoch 2 the training accuracy has already reached 97.6%, much better than the simple neural network from the previous post.
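The `263/263` in the progress bars follows from the data size, `validation_split=0.2` and `batch_size=128`. A quick arithmetic sketch (plain Python; the variable names are only for illustration):

```python
import math

n_samples = 42000         # rows in train.csv, per X_train_full.shape
validation_split = 0.2
batch_size = 128

n_val = round(n_samples * validation_split)        # samples held out for validation
n_train = n_samples - n_val                        # samples actually trained on
steps_per_epoch = math.ceil(n_train / batch_size)  # the last batch is partial

print(n_train, n_val, steps_per_epoch)  # 33600 8400 263
```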

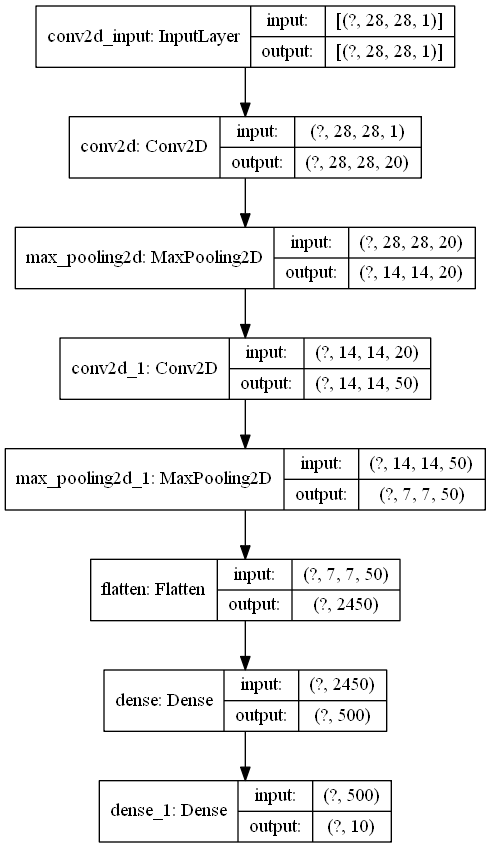

- Model summary

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 28, 28, 20) 520

_________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 14, 14, 20) 0

_________________________________________________________________

conv2d_1 (Conv2D) (None, 14, 14, 50) 25050

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 7, 7, 50) 0

_________________________________________________________________

flatten (Flatten) (None, 2450) 0

_________________________________________________________________

dense (Dense) (None, 500) 1225500

_________________________________________________________________

dense_1 (Dense) (None, 10) 5010

=================================================================

Total params: 1,256,080

Trainable params: 1,256,080

Non-trainable params: 0

_________________________________________________________________
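The parameter counts in the summary can be checked by hand. The sketch below is plain Python, with hypothetical helper names, and recomputes each layer from the kernel size, channel counts and layer widths used in `LeNet.build`.

```python
def conv2d_params(kernel, in_ch, out_ch):
    """kernel*kernel*in_ch weights per filter, plus one bias per filter."""
    return kernel * kernel * in_ch * out_ch + out_ch

def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out

conv1 = conv2d_params(5, 1, 20)     # 520
conv2 = conv2d_params(5, 20, 50)    # 25050
flat = 7 * 7 * 50                   # 2450 features after two 2x2 poolings (28 -> 14 -> 7)
dense1 = dense_params(flat, 500)    # 1225500
dense2 = dense_params(500, 10)      # 5010

total = conv1 + conv2 + dense1 + dense2
print(total)  # 1256080
```

Almost all of the parameters sit in the first dense layer. The two convolutional layers together account for about 2% of the total.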

- Plot the model architecture

from keras.utils import plot_model

plot_model(model, './model.png', show_shapes=True)

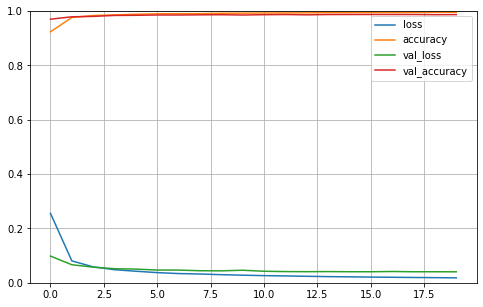

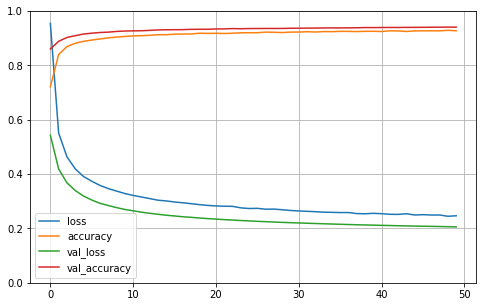

1.6 Plot the training curves

pd.DataFrame(history.history).plot(figsize=(8, 5))

plt.grid(True)

plt.gca().set_ylim(0, 1) # set the vertical range to [0-1]

plt.show()

1.7 Predict and submit

y_pred = model.predict(X_test)

pred = y_pred.argmax(axis=1).reshape(-1)

print(pred.shape)

image_id = pd.Series(range(1,len(pred)+1))

output = pd.DataFrame({'ImageId':image_id, 'Label':pred})

output.to_csv("submission_NN.csv", index=False)
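For readers unfamiliar with the `argmax` step, here is a minimal sketch of the same idea using only the standard library: take the most probable class for each row, then write an `ImageId,Label` file with ids starting at 1. The probabilities are made up and the CSV is written to an in-memory buffer rather than to disk.

```python
import csv
import io

# Made-up softmax outputs for three test images and three classes.
y_pred = [
    [0.1, 0.8, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.2, 0.6],
]

# argmax over the class axis: index of the largest probability in each row.
pred = [max(range(len(row)), key=row.__getitem__) for row in y_pred]

# ImageId starts at 1, matching range(1, len(pred) + 1) in the post's code.
buffer = io.StringIO()
writer = csv.writer(buffer, lineterminator="\n")
writer.writerow(["ImageId", "Label"])
writer.writerows(zip(range(1, len(pred) + 1), pred))

print(pred)               # [1, 0, 2]
print(buffer.getvalue())
```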

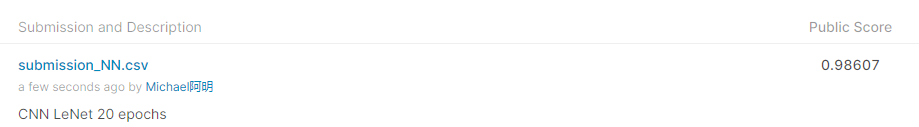

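The LeNet model scores 0.98607, which is 1.061 percentage points higher than the simple NN model from the previous post (score 0.97546).

2. Transfer learning with VGG16

VGG16 help text: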

Help on function VGG16 in module tensorflow.python.keras.applications.vgg16:

VGG16(include_top=True, weights='imagenet', input_tensor=None, input_shape=None, pooling=None, classes=1000, classifier_activation='softmax')

Instantiates the VGG16 model.

Reference paper:

- [Very Deep Convolutional Networks for Large-Scale Image Recognition](

https://arxiv.org/abs/1409.1556) (ICLR 2015)

By default, it loads weights pre-trained on ImageNet. Check 'weights' for

other options.

This model can be built both with 'channels_first' data format

(channels, height, width) or 'channels_last' data format

(height, width, channels).

The default input size for this model is 224x224.

Caution: Be sure to properly pre-process your inputs to the application.

Please see `applications.vgg16.preprocess_input` for an example.

Arguments:

include_top: whether to include the 3 fully-connected

layers at the top of the network.

weights: one of `None` (random initialization),

'imagenet' (pre-training on ImageNet),

or the path to the weights file to be loaded.

input_tensor: optional Keras tensor

(i.e. output of `layers.Input()`)

to use as image input for the model.

input_shape: optional shape tuple, only to be specified

if `include_top` is False (otherwise the input shape

has to be `(224, 224, 3)`

(with `channels_last` data format)

or `(3, 224, 224)` (with `channels_first` data format).

It should have exactly 3 input channels,

and width and height should be no smaller than 32.

E.g. `(200, 200, 3)` would be one valid value.

pooling: Optional pooling mode for feature extraction

when `include_top` is `False`.

- `None` means that the output of the model will be

the 4D tensor output of the

last convolutional block.

- `avg` means that global average pooling

will be applied to the output of the

last convolutional block, and thus

the output of the model will be a 2D tensor.

- `max` means that global max pooling will

be applied.

classes: optional number of classes to classify images

into, only to be specified if `include_top` is True, and

if no `weights` argument is specified.

classifier_activation: A `str` or callable. The activation function to use

on the "top" layer. Ignored unless `include_top=True`. Set

`classifier_activation=None` to return the logits of the "top" layer.

Returns:

A `keras.Model` instance.

Raises:

ValueError: in case of invalid argument for `weights`,

or invalid input shape.

ValueError: if `classifier_activation` is not `softmax` or `None` when

using a pretrained top layer.

2.1 Import packages

import numpy as np

%matplotlib inline

import matplotlib.pyplot as plt

import pandas as pd

import cv2

from keras.optimizers import Adam

from keras.models import Model

from keras.utils import np_utils

from keras.models import Sequential

from keras.layers import Flatten

from keras.layers import Dense

from keras.layers import Input

from keras.layers import Dropout

from keras.applications.vgg16 import VGG16

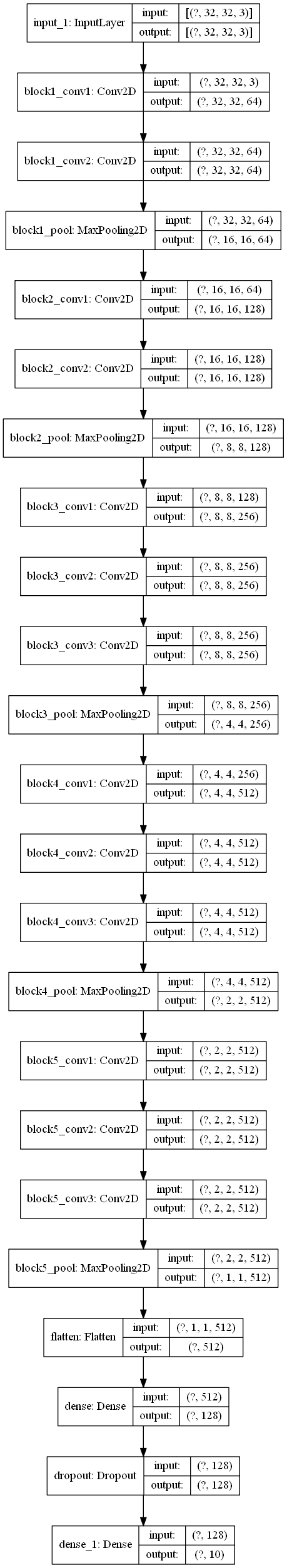

2.2 Define the model

vgg16 = VGG16(weights='imagenet',include_top=False,

              input_shape=(32, 32, 3))

# With include_top=False, VGG16 accepts a custom input size: at least 32x32, with exactly 3 channels

mylayer = vgg16.output

mylayer = Flatten()(mylayer)

mylayer = Dense(128, activation='relu')(mylayer)

mylayer = Dropout(0.3)(mylayer)

mylayer = Dense(10, activation='softmax')(mylayer)

model = Model(inputs=vgg16.inputs, outputs=mylayer)

for layer in vgg16.layers:

    layer.trainable = False # freeze every VGG16 layer; only the new head is trained
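One way to see where the 32x32 minimum comes from is to trace the spatial size through VGG16's five 2x2, stride-2 max-pool layers. The sketch below is plain Python with a hypothetical helper name. It illustrates the arithmetic only and is not how Keras validates the input shape.

```python
def vgg16_spatial_sizes(side, n_pools=5):
    """Side length after each of VGG16's five 2x2, stride-2 max-pool layers."""
    sizes = []
    for _ in range(n_pools):
        side //= 2   # the 3x3 'same' convolutions keep the size; pooling halves it
        sizes.append(side)
    return sizes

print(vgg16_spatial_sizes(32))   # [16, 8, 4, 2, 1]
print(vgg16_spatial_sizes(28))   # [14, 7, 3, 1, 0] -> nothing left after the last pool
print(vgg16_spatial_sizes(224))  # [112, 56, 28, 14, 7]
```

With a 32x32 input the last block ends at a single 1x1 position with 512 channels, which is why the features reaching the new dense head are so coarse.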

2.3 Data processing

train = pd.read_csv('train.csv')

y_train_full = train['label']

X_train_full = train.drop(['label'], axis=1)

X_test_full = pd.read_csv('test.csv')

- Copy the single-channel data into 3 channels (VGG16 requires 3 channels), then resize it to 32*32, the minimum resolution VGG16 accepts

def process(data):

    data = np.array(data).reshape(-1,28,28)

    output = np.zeros((data.shape[0], 32, 32, 3))

    for i in range(data.shape[0]):

        img = data[i]

        # copy the grayscale image into all 3 channels

        rgb_array = np.zeros((img.shape[0], img.shape[1], 3), "uint8")

        rgb_array[:, :, 0], rgb_array[:, :, 1], rgb_array[:, :, 2] = img, img, img

        # upscale 28x28 -> 32x32

        pic = cv2.resize(rgb_array, (32, 32), interpolation=cv2.INTER_LINEAR)

        output[i] = pic

    output = output.astype('float32')/255.0 # scale pixel values to [0, 1]

    return output

y_train = np_utils.to_categorical(y_train_full, 10)

X_train = process(X_train_full)

X_test = process(X_test_full)

print(X_train.shape)

print(X_test.shape)

Output:

(42000, 32, 32, 3)

(28000, 32, 32, 3)

- Look at a processed image

img = X_train[0]

plt.imshow(img)

np.set_printoptions(threshold=np.inf) # print arrays in full, without truncation

# print(X_train[0])

2.4 Compile the model and train

opt = Adam(learning_rate=0.001, beta_1=0.9, beta_2=0.999, decay=0.01)

model.compile(loss="categorical_crossentropy",

optimizer=opt, metrics=["accuracy"])

history = model.fit(X_train, y_train, epochs=50, batch_size=128,

validation_split=0.2)

Output:

Epoch 1/50

263/263 [==============================] - 101s 384ms/step -

loss: 0.9543 - accuracy: 0.7212 -

val_loss: 0.5429 - val_accuracy: 0.8601

...

Epoch 10/50

263/263 [==============================] - 110s 417ms/step -

loss: 0.3284 - accuracy: 0.9063 -

val_loss: 0.2698 - val_accuracy: 0.9263

...

Epoch 40/50

263/263 [==============================] - 114s 433ms/step -

loss: 0.2556 - accuracy: 0.9254 -

val_loss: 0.2121 - val_accuracy: 0.9389

...

Epoch 50/50

263/263 [==============================] - 110s 420ms/step -

loss: 0.2466 - accuracy: 0.9272 -

val_loss: 0.2058 - val_accuracy: 0.9406
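Both training runs in this post pass `decay=0.01` to `Adam`. The sketch below assumes the Keras version used here applies that legacy argument as inverse-time decay, updated once per batch, which is how older Keras optimizers handled it; newer Keras releases have deprecated or changed the argument. Under that assumption it shows how quickly the effective learning rate shrinks. The helper name `decayed_lr` is hypothetical.

```python
def decayed_lr(initial_lr, decay, iteration):
    """Inverse-time decay (assumed): lr / (1 + decay * iteration), one iteration per batch."""
    return initial_lr / (1.0 + decay * iteration)

steps_per_epoch = 263   # from the progress bars above

# Effective learning rate at the start of training and after 1, 10 and 50 epochs.
for epoch in (0, 1, 10, 50):
    lr = decayed_lr(0.001, 0.01, epoch * steps_per_epoch)
    print(epoch, f"{lr:.2e}")
```

If this assumption holds, the learning rate is already several times smaller than 0.001 after the first epoch. A smaller `decay` value may be worth trying if the loss plateaus early.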

model.summary()

Output:

Model: "functional_15"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_23 (InputLayer) [(None, 32, 32, 3)] 0

_________________________________________________________________

block1_conv1 (Conv2D) (None, 32, 32, 64) 1792

_________________________________________________________________

block1_conv2 (Conv2D) (None, 32, 32, 64) 36928

_________________________________________________________________

block1_pool (MaxPooling2D) (None, 16, 16, 64) 0

_________________________________________________________________

block2_conv1 (Conv2D) (None, 16, 16, 128) 73856

_________________________________________________________________

block2_conv2 (Conv2D) (None, 16, 16, 128) 147584

_________________________________________________________________

block2_pool (MaxPooling2D) (None, 8, 8, 128) 0

_________________________________________________________________

block3_conv1 (Conv2D) (None, 8, 8, 256) 295168

_________________________________________________________________

block3_conv2 (Conv2D) (None, 8, 8, 256) 590080

_________________________________________________________________

block3_conv3 (Conv2D) (None, 8, 8, 256) 590080

_________________________________________________________________

block3_pool (MaxPooling2D) (None, 4, 4, 256) 0

_________________________________________________________________

block4_conv1 (Conv2D) (None, 4, 4, 512) 1180160

_________________________________________________________________

block4_conv2 (Conv2D) (None, 4, 4, 512) 2359808

_________________________________________________________________

block4_conv3 (Conv2D) (None, 4, 4, 512) 2359808

_________________________________________________________________

block4_pool (MaxPooling2D) (None, 2, 2, 512) 0

_________________________________________________________________

block5_conv1 (Conv2D) (None, 2, 2, 512) 2359808

_________________________________________________________________

block5_conv2 (Conv2D) (None, 2, 2, 512) 2359808

_________________________________________________________________

block5_conv3 (Conv2D) (None, 2, 2, 512) 2359808

_________________________________________________________________

block5_pool (MaxPooling2D) (None, 1, 1, 512) 0

_________________________________________________________________

flatten_19 (Flatten) (None, 512) 0

_________________________________________________________________

dense_28 (Dense) (None, 128) 65664

_________________________________________________________________

dropout_9 (Dropout) (None, 128) 0

_________________________________________________________________

dense_29 (Dense) (None, 10) 1290

=================================================================

Total params: 14,781,642

Trainable params: 66,954

Non-trainable params: 14,714,688

_________________________________________________________________
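The split between trainable and non-trainable parameters in the summary can be reproduced with a little arithmetic. The sketch below is plain Python with a hypothetical helper name: only the two new dense layers are trained, while the frozen VGG16 convolutional base contributes the rest.

```python
def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out

head = dense_params(512, 128) + dense_params(128, 10)  # the two new Dense layers
frozen = 14_714_688                                     # VGG16 conv base, from the summary

print(head)            # 66954
print(head + frozen)   # 14781642
print(f"{head / (head + frozen):.2%} of the parameters are trained")
```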

- Plot the model architecture

from keras.utils import plot_model

plot_model(model, './model.png', show_shapes=True)

2.5 Predict and submit

y_pred = model.predict(X_test)

pred = y_pred.argmax(axis=1).reshape(-1)

print(pred.shape)

print(pred)

image_id = pd.Series(range(1,len(pred)+1))

output = pd.DataFrame({'ImageId':image_id, 'Label':pred})

output.to_csv("submission_NN.csv", index=False)

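Prediction score: 0.93696

One possible reason is that the VGG16 weights were trained on 224*224 images, while our source images are only 28*28 (resized to 32*32), so the pretrained VGG16 weights may not transfer well.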

My CSDN blog: https://michael.blog.csdn.net/
