[Notes] Integrating Flink 1.9.0 into CDH 5.12.1 and Monitoring Flink in Cloudera Manager 5.12.1

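Preface: I have recently been integrating Flink into CDH. For CDH 6.3 and later, official parcel and CSD files are available. We are on CDH 5.12.1, a fairly old release, so we had to build them ourselves. I collected a lot of material along the way, but none of it was a single detailed article covering the whole pipeline end to end: compiling Flink => generating the CSD and parcel files => integrating with CM. So I have put together what I collected, along with the problems I ran into during installation. I hope it helps.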

I. Preparation

Software versions I used:

Hadoop 2.6.0-cdh5.12.1

Apache Maven 3.6.3

Java version: 1.8.0_161

Cloudera Manager 5.12.1

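What we need to do:

1. Download the Flink source code and its dependency package (flink-shaded) from the official sites.

2. Compile the source code and the dependency package.

3. Package the compiled Flink build into the CSD and parcel files that Cloudera Manager needs.

4. Install and deploy Flink with one click from CM, and add the Flink service to CM's managed services.

Once these tasks are clear, it is just a matter of working through them step by step. I describe each operation in as much detail as I can.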

II. Downloading the packages

Download whichever versions you need. I chose Flink 1.9.0, whose matching dependency package (flink-shaded) is version 7.0.

- Flink source package

```bash
wget https://github.com/apache/flink/archive/release-1.9.0.zip
```

- Flink dependency package (flink-shaded)

```bash
wget https://archive.apache.org/dist/flink/flink-shaded-7.0/flink-shaded-7.0-src.tgz
```

III. Compilation

- Compiling flink-1.9.0

1. Unpack

```bash
unzip release-1.9.0.zip -d ./
```

2. Edit pom.xml in the root directory

```bash
vim flink-release-1.9.0/pom.xml
```

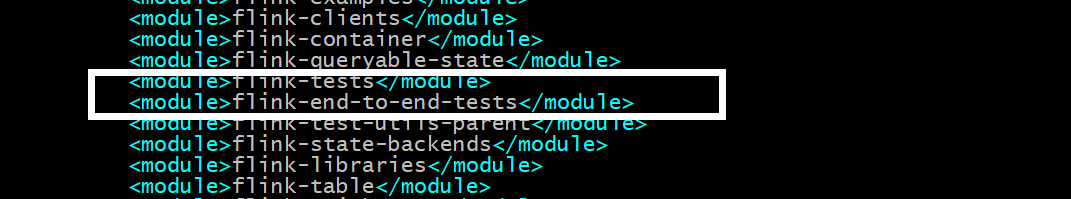

Delete the test-related modules (around lines 80-90) to cut down the build time.

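Add the download repositories:

```xml
<repositories>
  <repository>
    <id>cloudera</id>
    <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
  </repository>
  <!-- Note: mvnrepository.com is a search website rather than a Maven repository,
       so this entry most likely has no effect; Maven Central is used by default. -->
  <repository>
    <id>mvnrepository</id>
    <url>https://mvnrepository.com</url>
  </repository>
</repositories>
```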

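3. Start the build

```bash
# Skip tests, Javadoc, Checkstyle and the Apache RAT license check to shorten the build
mvn clean install -DskipTests -Pvendor-repos -Dhadoop.version=2.6.0-cdh5.12.1 -Dmaven.javadoc.skip=true -Dcheckstyle.skip=true -Drat.skip=true
```

The build succeeded: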

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for flink 1.9.0:

[INFO]

[INFO] force-shading ...................................... SUCCESS [ 1.579 s]

[INFO] flink .............................................. SUCCESS [ 1.484 s]

[INFO] flink-annotations .................................. SUCCESS [ 40.863 s]

[INFO] flink-shaded-curator ............................... SUCCESS [01:34 min]

[INFO] flink-metrics ...................................... SUCCESS [ 0.059 s]

[INFO] flink-metrics-core ................................. SUCCESS [01:58 min]

[INFO] flink-test-utils-parent ............................ SUCCESS [ 0.066 s]

[INFO] flink-test-utils-junit ............................. SUCCESS [ 0.485 s]

[INFO] flink-core ......................................... SUCCESS [02:14 min]

[INFO] flink-java ......................................... SUCCESS [ 19.734 s]

[INFO] flink-queryable-state .............................. SUCCESS [ 0.047 s]

[INFO] flink-queryable-state-client-java .................. SUCCESS [ 0.469 s]

[INFO] flink-filesystems .................................. SUCCESS [ 0.050 s]

[INFO] flink-hadoop-fs .................................... SUCCESS [04:23 min]

[INFO] flink-runtime ...................................... SUCCESS [32:45 min]

[INFO] flink-scala ........................................ SUCCESS [01:01 min]

[INFO] flink-mapr-fs ...................................... SUCCESS [ 0.397 s]

[INFO] flink-filesystems :: flink-fs-hadoop-shaded ........ SUCCESS [02:05 min]

[INFO] flink-s3-fs-base ................................... SUCCESS [01:35 min]

[INFO] flink-s3-fs-hadoop ................................. SUCCESS [ 18.102 s]

[INFO] flink-s3-fs-presto ................................. SUCCESS [08:16 min]

[INFO] flink-swift-fs-hadoop .............................. SUCCESS [03:06 min]

[INFO] flink-oss-fs-hadoop ................................ SUCCESS [02:38 min]

[INFO] flink-azure-fs-hadoop .............................. SUCCESS [12:05 min]

[INFO] flink-optimizer .................................... SUCCESS [ 1.839 s]

[INFO] flink-clients ...................................... SUCCESS [ 9.584 s]

[INFO] flink-streaming-java ............................... SUCCESS [ 12.332 s]

[INFO] flink-test-utils ................................... SUCCESS [03:42 min]

[INFO] flink-runtime-web .................................. SUCCESS [10:55 min]

[INFO] flink-examples ..................................... SUCCESS [ 0.084 s]

[INFO] flink-examples-batch ............................... SUCCESS [ 14.505 s]

[INFO] flink-connectors ................................... SUCCESS [ 0.039 s]

[INFO] flink-hadoop-compatibility ......................... SUCCESS [ 7.456 s]

[INFO] flink-state-backends ............................... SUCCESS [ 0.038 s]

[INFO] flink-statebackend-rocksdb ......................... SUCCESS [08:05 min]

[INFO] flink-tests ........................................ SUCCESS [ 41.102 s]

[INFO] flink-streaming-scala .............................. SUCCESS [ 51.065 s]

[INFO] flink-table ........................................ SUCCESS [ 0.039 s]

[INFO] flink-table-common ................................. SUCCESS [ 1.371 s]

[INFO] flink-table-api-java ............................... SUCCESS [ 0.940 s]

[INFO] flink-table-api-java-bridge ........................ SUCCESS [ 0.460 s]

[INFO] flink-table-api-scala .............................. SUCCESS [ 7.612 s]

[INFO] flink-table-api-scala-bridge ....................... SUCCESS [ 11.582 s]

[INFO] flink-sql-parser ................................... SUCCESS [07:27 min]

[INFO] flink-libraries .................................... SUCCESS [ 0.035 s]

[INFO] flink-cep .......................................... SUCCESS [ 2.308 s]

[INFO] flink-table-planner ................................ SUCCESS [04:18 min]

[INFO] flink-orc .......................................... SUCCESS [ 37.190 s]

[INFO] flink-jdbc ......................................... SUCCESS [02:48 min]

[INFO] flink-table-runtime-blink .......................... SUCCESS [ 34.623 s]

[INFO] flink-table-planner-blink .......................... SUCCESS [03:13 min]

[INFO] flink-hbase ........................................ SUCCESS [21:52 min]

[INFO] flink-hcatalog ..................................... SUCCESS [13:21 min]

[INFO] flink-metrics-jmx .................................. SUCCESS [ 0.321 s]

[INFO] flink-connector-kafka-base ......................... SUCCESS [05:50 min]

[INFO] flink-connector-kafka-0.9 .......................... SUCCESS [05:34 min]

[INFO] flink-connector-kafka-0.10 ......................... SUCCESS [ 21.502 s]

[INFO] flink-connector-kafka-0.11 ......................... SUCCESS [08:17 min]

[INFO] flink-formats ...................................... SUCCESS [ 0.036 s]

[INFO] flink-json ......................................... SUCCESS [ 0.361 s]

[INFO] flink-connector-elasticsearch-base ................. SUCCESS [15:19 min]

[INFO] flink-connector-elasticsearch2 ..................... SUCCESS [ 17.888 s]

[INFO] flink-connector-elasticsearch5 ..................... SUCCESS [17:39 min]

[INFO] flink-connector-elasticsearch6 ..................... SUCCESS [22:05 min]

[INFO] flink-csv .......................................... SUCCESS [ 0.250 s]

[INFO] flink-connector-hive ............................... SUCCESS [ 01:38 h]

[INFO] flink-connector-rabbitmq ........................... SUCCESS [01:41 min]

[INFO] flink-connector-twitter ............................ SUCCESS [06:29 min]

[INFO] flink-connector-nifi ............................... SUCCESS [14:28 min]

[INFO] flink-connector-cassandra .......................... SUCCESS [46:47 min]

[INFO] flink-avro ......................................... SUCCESS [05:38 min]

[INFO] flink-connector-filesystem ......................... SUCCESS [ 0.709 s]

[INFO] flink-connector-kafka .............................. SUCCESS [26:12 min]

[INFO] flink-connector-gcp-pubsub ......................... SUCCESS [21:06 min]

[INFO] flink-sql-connector-elasticsearch6 ................. SUCCESS [ 9.831 s]

[INFO] flink-sql-connector-kafka-0.9 ...................... SUCCESS [ 0.403 s]

[INFO] flink-sql-connector-kafka-0.10 ..................... SUCCESS [ 0.545 s]

[INFO] flink-sql-connector-kafka-0.11 ..................... SUCCESS [ 0.770 s]

[INFO] flink-sql-connector-kafka .......................... SUCCESS [ 1.288 s]

[INFO] flink-connector-kafka-0.8 .......................... SUCCESS [07:11 min]

[INFO] flink-avro-confluent-registry ...................... SUCCESS [02:30 min]

[INFO] flink-parquet ...................................... SUCCESS [28:53 min]

[INFO] flink-sequence-file ................................ SUCCESS [ 0.283 s]

[INFO] flink-examples-streaming ........................... SUCCESS [01:48 min]

[INFO] flink-examples-table ............................... SUCCESS [ 8.582 s]

[INFO] flink-examples-build-helper ........................ SUCCESS [ 0.078 s]

[INFO] flink-examples-streaming-twitter ................... SUCCESS [ 0.875 s]

[INFO] flink-examples-streaming-state-machine ............. SUCCESS [ 0.448 s]

[INFO] flink-examples-streaming-gcp-pubsub ................ SUCCESS [ 19.498 s]

[INFO] flink-container .................................... SUCCESS [ 0.341 s]

[INFO] flink-queryable-state-runtime ...................... SUCCESS [ 0.544 s]

[INFO] flink-end-to-end-tests ............................. SUCCESS [ 0.034 s]

[INFO] flink-cli-test ..................................... SUCCESS [ 0.158 s]

[INFO] flink-parent-child-classloading-test-program ....... SUCCESS [ 0.165 s]

[INFO] flink-parent-child-classloading-test-lib-package ... SUCCESS [ 0.098 s]

[INFO] flink-dataset-allround-test ........................ SUCCESS [ 0.223 s]

[INFO] flink-dataset-fine-grained-recovery-test ........... SUCCESS [ 0.157 s]

[INFO] flink-datastream-allround-test ..................... SUCCESS [ 1.400 s]

[INFO] flink-batch-sql-test ............................... SUCCESS [ 0.162 s]

[INFO] flink-stream-sql-test .............................. SUCCESS [ 0.193 s]

[INFO] flink-bucketing-sink-test .......................... SUCCESS [ 0.327 s]

[INFO] flink-distributed-cache-via-blob ................... SUCCESS [ 0.167 s]

[INFO] flink-high-parallelism-iterations-test ............. SUCCESS [ 11.756 s]

[INFO] flink-stream-stateful-job-upgrade-test ............. SUCCESS [ 1.165 s]

[INFO] flink-queryable-state-test ......................... SUCCESS [ 2.381 s]

[INFO] flink-local-recovery-and-allocation-test ........... SUCCESS [ 0.211 s]

[INFO] flink-elasticsearch2-test .......................... SUCCESS [ 6.770 s]

[INFO] flink-elasticsearch5-test .......................... SUCCESS [ 8.624 s]

[INFO] flink-elasticsearch6-test .......................... SUCCESS [ 4.310 s]

[INFO] flink-quickstart ................................... SUCCESS [ 0.927 s]

[INFO] flink-quickstart-java .............................. SUCCESS [06:36 min]

[INFO] flink-quickstart-scala ............................. SUCCESS [ 0.107 s]

[INFO] flink-quickstart-test .............................. SUCCESS [ 0.516 s]

[INFO] flink-confluent-schema-registry .................... SUCCESS [ 2.396 s]

[INFO] flink-stream-state-ttl-test ........................ SUCCESS [ 5.924 s]

[INFO] flink-sql-client-test .............................. SUCCESS [ 0.722 s]

[INFO] flink-streaming-file-sink-test ..................... SUCCESS [ 0.168 s]

[INFO] flink-state-evolution-test ......................... SUCCESS [ 1.181 s]

[INFO] flink-e2e-test-utils ............................... SUCCESS [01:34 min]

[INFO] flink-mesos ........................................ SUCCESS [03:29 min]

[INFO] flink-yarn ......................................... SUCCESS [ 0.966 s]

[INFO] flink-gelly ........................................ SUCCESS [ 2.158 s]

[INFO] flink-gelly-scala .................................. SUCCESS [ 19.871 s]

[INFO] flink-gelly-examples ............................... SUCCESS [ 19.212 s]

[INFO] flink-metrics-dropwizard ........................... SUCCESS [ 13.693 s]

[INFO] flink-metrics-graphite ............................. SUCCESS [ 5.615 s]

[INFO] flink-metrics-influxdb ............................. SUCCESS [03:16 min]

[INFO] flink-metrics-prometheus ........................... SUCCESS [01:00 min]

[INFO] flink-metrics-statsd ............................... SUCCESS [ 0.190 s]

[INFO] flink-metrics-datadog .............................. SUCCESS [ 0.327 s]

[INFO] flink-metrics-slf4j ................................ SUCCESS [ 0.184 s]

[INFO] flink-cep-scala .................................... SUCCESS [ 13.547 s]

[INFO] flink-table-uber ................................... SUCCESS [ 3.382 s]

[INFO] flink-table-uber-blink ............................. SUCCESS [ 3.937 s]

[INFO] flink-sql-client ................................... SUCCESS [03:47 min]

[INFO] flink-state-processor-api .......................... SUCCESS [ 0.659 s]

[INFO] flink-python ....................................... SUCCESS [02:14 min]

[INFO] flink-scala-shell .................................. SUCCESS [ 13.666 s]

[INFO] flink-dist ......................................... SUCCESS [01:21 min]

[INFO] flink-end-to-end-tests-common ...................... SUCCESS [ 0.334 s]

[INFO] flink-metrics-availability-test .................... SUCCESS [ 0.167 s]

[INFO] flink-metrics-reporter-prometheus-test ............. SUCCESS [ 0.140 s]

[INFO] flink-heavy-deployment-stress-test ................. SUCCESS [ 11.352 s]

[INFO] flink-connector-gcp-pubsub-emulator-tests .......... SUCCESS [04:15 min]

[INFO] flink-streaming-kafka-test-base .................... SUCCESS [ 0.285 s]

[INFO] flink-streaming-kafka-test ......................... SUCCESS [ 11.284 s]

[INFO] flink-streaming-kafka011-test ...................... SUCCESS [ 10.451 s]

[INFO] flink-streaming-kafka010-test ...................... SUCCESS [ 9.843 s]

[INFO] flink-plugins-test ................................. SUCCESS [ 0.079 s]

[INFO] flink-tpch-test .................................... SUCCESS [ 23.368 s]

[INFO] flink-contrib ...................................... SUCCESS [ 0.034 s]

[INFO] flink-connector-wikiedits .......................... SUCCESS [ 9.878 s]

[INFO] flink-yarn-tests ................................... SUCCESS [ 13.034 s]

[INFO] flink-fs-tests ..................................... SUCCESS [ 0.403 s]

[INFO] flink-docs ......................................... SUCCESS [ 30.525 s]

[INFO] flink-ml-parent .................................... SUCCESS [ 0.033 s]

[INFO] flink-ml-api ....................................... SUCCESS [ 0.243 s]

[INFO] flink-ml-lib ....................................... SUCCESS [ 0.176 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------
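After Maven prints BUILD SUCCESS, a quick sanity check of the assembled distribution can save time before packaging. The sketch below assumes the default Flink 1.9 flink-dist layout (`flink-dist/target/flink-<version>-bin/flink-<version>/lib/flink-dist_<scala>-<version>.jar`) and Scala 2.11. `check_flink_dist` is a hypothetical helper, not part of Flink.

```bash
# Hypothetical helper: verify that the Flink distribution directory was assembled.
# Assumes the default flink-dist layout; Scala version defaults to 2.11.
check_flink_dist() {
  local src_root="$1" version="$2" scala="${3:-2.11}"
  local dist="$src_root/flink-dist/target/flink-${version}-bin/flink-${version}"
  local jar="$dist/lib/flink-dist_${scala}-${version}.jar"
  if [ -f "$jar" ]; then
    echo "OK: $dist"
  else
    echo "missing: $jar" >&2
    return 1
  fi
}
```

For example, run `check_flink_dist flink-release-1.9.0 1.9.0` from the directory that contains the source tree.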

P.S. The build takes a long time, and errors may come up along the way. Below is a summary of the errors I ran into myself and those I found while researching.

Problem 1:

```text
[ERROR] Failed to execute goal org.apache.rat:apache-rat-plugin:0.12:check (default) on project flink-parent: Too many files with unapproved license: 1 See RAT report in: /home/dwhuangzhou/flink-release-1.9.0/target/rat.txt -> [Help 1]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoFailureException
[ERROR]
[ERROR] After correcting the problems, you can resume the build with the command
[ERROR] mvn <args> -rf :flink-parent
```

Solution: add -Drat.skip=true to the build command to skip the license check. The command above already includes it.

- Compiling flink-shaded-7.0

1. Unpack

```bash
tar -zxvf flink-shaded-7.0-src.tgz -C ./
```

2. Edit pom.xml in the root directory

```bash
vim flink-shaded-7.0/pom.xml
```

Add the download repositories:

```xml
<repositories>
  <repository>
    <id>vdc</id>
    <url>http://nexus.saas.hand-china.com/content/repositories</url>
  </repository>
  <repository>
    <id>horton-works-releases</id>
    <url>http://repo.hortonworks.com/content/groups/public/</url>
  </repository>
  <repository>
    <id>mvn repository</id>
    <url>https://mvnrepository.com/artifact/</url>
  </repository>
  <repository>
    <id>CDH</id>
    <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
  </repository>
</repositories>
```

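3. Edit the pom.xml of the flink-shaded-hadoop-2-uber module (under the root directory)

This step is important. If you skip it, Flink will fail with an error at runtime. The typical symptom is a NoSuchMethodError for org.apache.commons.cli.Option.builder. This happens because the commons-cli 1.2 bundled with Hadoop 2.6 predates that method, which was added in commons-cli 1.3.

```bash
vim flink-shaded-7.0/flink-shaded-hadoop-2-uber/pom.xml
```

Add the following dependency:

```xml
<!-- inside the module's <dependencies> section -->
<dependency>
  <groupId>commons-cli</groupId>
  <artifactId>commons-cli</artifactId>
  <version>1.3.1</version>
</dependency>
```

4. Go back to the root directory and start the build

```bash
mvn clean install -DskipTests -Pvendor-repos -Dhadoop.version=2.6.0-cdh5.12.1 -Dmaven.javadoc.skip=true -Dcheckstyle.skip=true -Drat.skip=true
```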

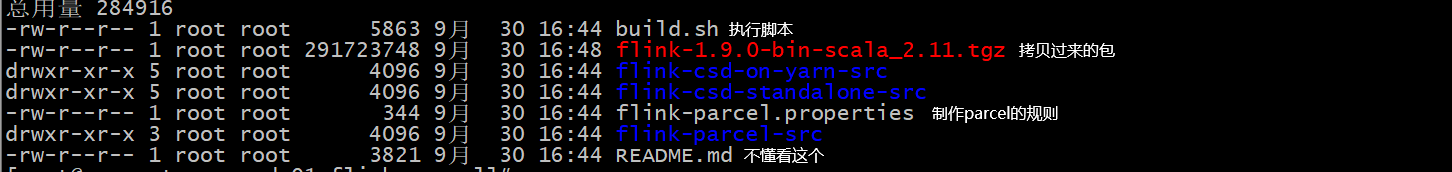

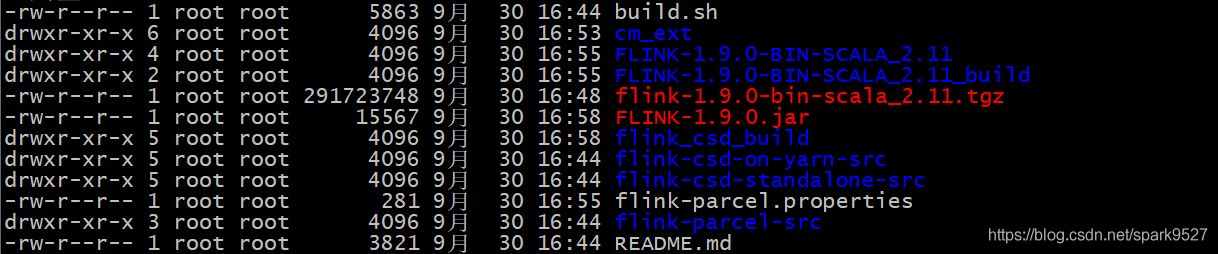

IV. Building the parcel

1. Create a working directory for the parcel build and copy the compiled Flink package and the shaded Hadoop jar into it

```bash
mkdir /home/makeflink
cp flink-release-1.9.0/flink-dist/target/flink-1.9.0-bin/flink-1.9.0/flink-1.9.0.tgz /home/makeflink/
cp flink-shaded-7.0/flink-shaded-hadoop-2-uber/target/flink-shaded-hadoop-2-uber-2.6.0-cdh5.12.1-7.0.jar /home/makeflink/
```
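As far as I know, `mvn install` assembles flink-dist only as an unpacked directory (`flink-dist/target/flink-1.9.0-bin/flink-1.9.0/`). If `flink-1.9.0.tgz` does not exist at the path used above, you can create it yourself. The following is a minimal sketch. It assumes the parcel template expects a tarball whose top-level directory is `flink-1.9.0`. `pack_flink_dist` is a hypothetical name.

```bash
# Hypothetical helper: pack an unpacked Flink distribution into a .tgz whose
# top-level directory is flink-<version> (assumed to be what the parcel template expects).
pack_flink_dist() {
  local bin_dir="$1" version="$2" out="$3"
  # -C switches into the *-bin directory so archive entries start at flink-<version>/
  tar -czf "$out" -C "$bin_dir" "flink-${version}"
}
```

Usage: `pack_flink_dist flink-release-1.9.0/flink-dist/target/flink-1.9.0-bin 1.9.0 /home/makeflink/flink-1.9.0.tgz`. Using `-C` keeps the absolute build path out of the archive entries.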

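2. Download the parcel-building template

```bash
cd /home/makeflink
git clone https://github.com/gaozhangmin/flink-parcel
```

3. Move the copied Flink package into flink-parcel and rename it

```bash
mv flink-1.9.0.tgz flink-parcel/flink-1.9.0-bin-scala_2.11.tgz
```

4. Edit the parcel build settings

```bash
cd flink-parcel
vim flink-parcel.properties
```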

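```properties
# Flink download location (here: the local tarball)
FLINK_URL=./flink-1.9.0-bin-scala_2.11.tgz
# Flink version
FLINK_VERSION=1.9.0
# Extension version
EXTENS_VERSION=BIN-SCALA_2.11
# Operating system version (CentOS as the example)
OS_VERSION=6
# Minimum and maximum CDH version (major.minor)
CDH_MIN_FULL=5.2
CDH_MAX_FULL=6.2
# Minimum and maximum CDH major version
CDH_MIN=5
CDH_MAX=6
```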

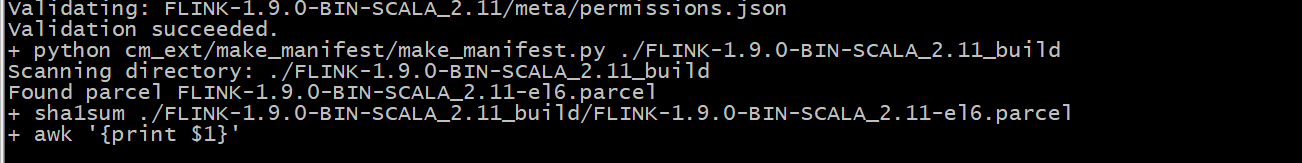
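This properties file contains only `KEY=value` lines and `#` comments, so bash can source it. That makes it easy to preview the parcel file name before building. Cloudera parcel files are named `<NAME>-<VERSION>-<distro>.parcel`, and `el6` is the distro suffix for CentOS/RHEL 6. The sketch assumes flink-parcel builds the name as `FLINK-<FLINK_VERSION>-<EXTENS_VERSION>-el<OS_VERSION>.parcel`, so compare the result with what build.sh actually produces. `expected_parcel_name` is hypothetical.

```bash
# Hypothetical helper: preview the parcel file name from flink-parcel.properties.
# Assumed pattern: FLINK-<FLINK_VERSION>-<EXTENS_VERSION>-el<OS_VERSION>.parcel
# Pass a path containing a slash (e.g. ./flink-parcel.properties) so "." does not search PATH.
expected_parcel_name() {
  (
    # Source in a subshell so the variables do not leak into the caller's shell
    . "$1"
    echo "FLINK-${FLINK_VERSION}-${EXTENS_VERSION}-el${OS_VERSION}.parcel"
  )
}
```

Usage: `expected_parcel_name ./flink-parcel.properties`.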

5. Start the build

```bash
bash build.sh parcel
```

6. The parcel is ready

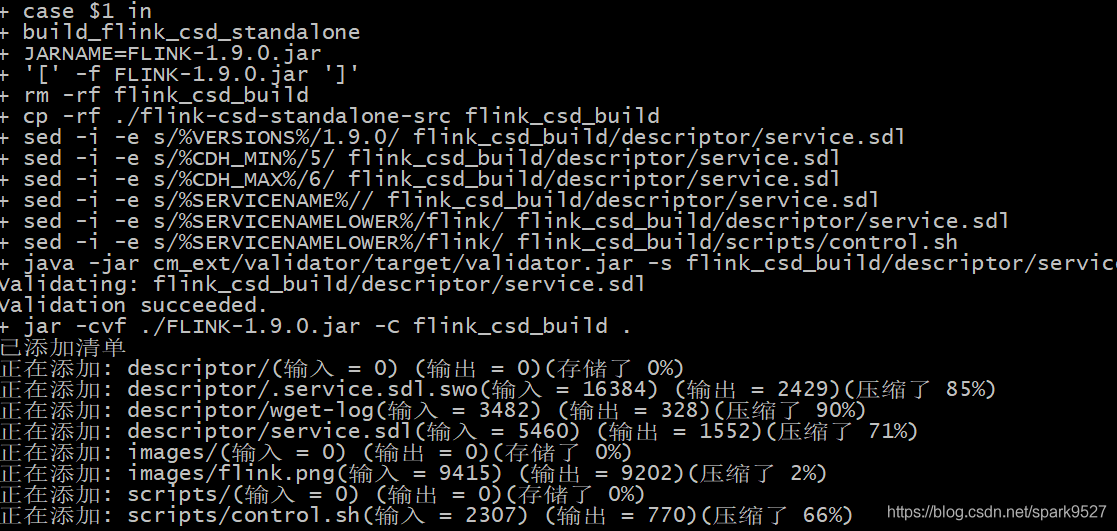

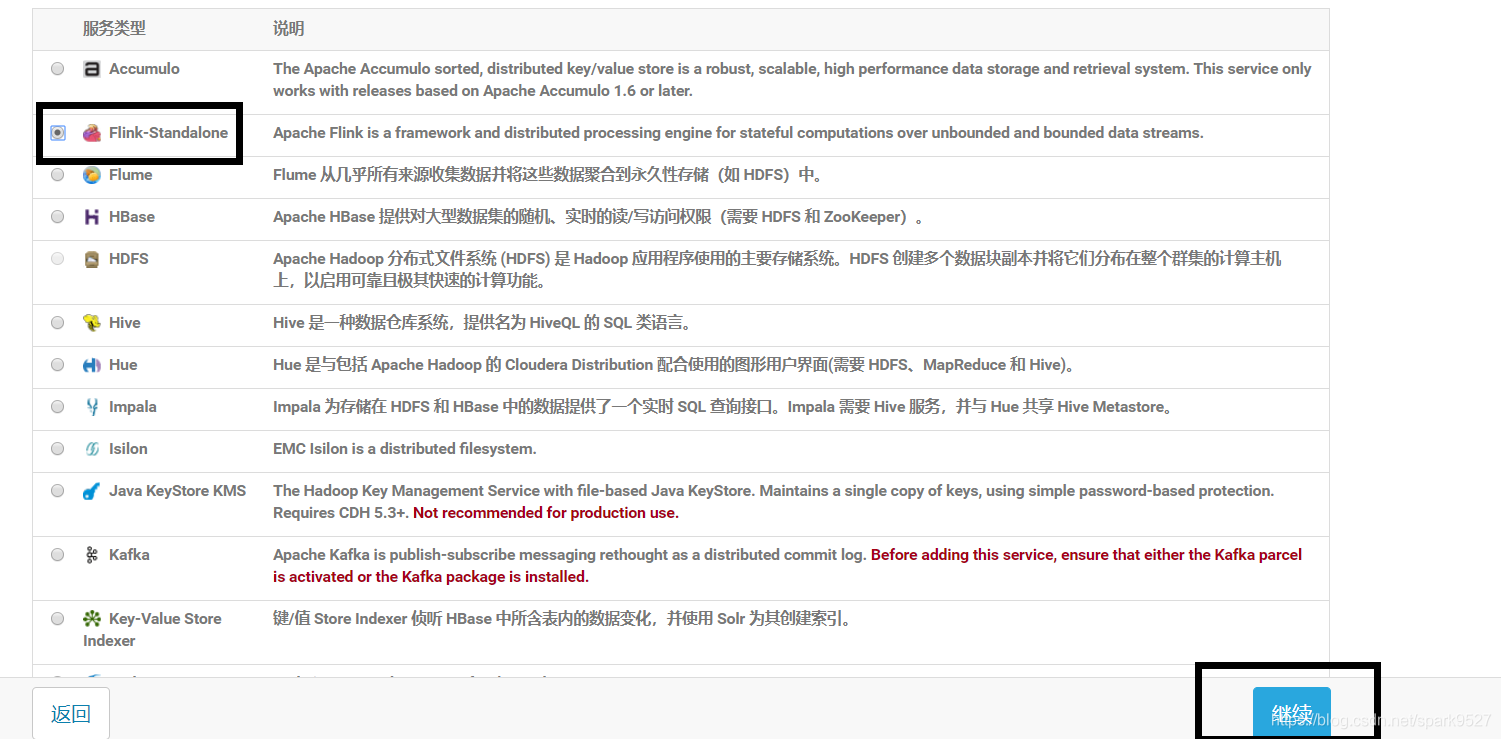

7. Build Flink's CSD (service descriptor)

Since I only need the standalone version, I ran:

```bash
bash build.sh csd_standalone
```

P.S. If you need Flink on YARN instead, run:

```bash
bash build.sh csd_on_yarn
```

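8. Put the generated files where CM can find them

- Put the generated CSD file into Cloudera's CSD directory

```bash
cp FLINK-1.9.0.jar /opt/cloudera/csd/
```

Note that the CSD directory must be owned by cloudera-scm.

- Put the generated parcel into Cloudera's parcel-repo

```bash
cp FLINK-1.9.0-BIN-SCALA_2.11_build/* /opt/cloudera/parcel-repo/
```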
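A small idempotent helper can apply the ownership requirement above. This is a sketch. It assumes GNU `stat`, the default `cloudera-scm` user and group, and root privileges for `chown`. `ensure_owner` is a hypothetical name. Also note that Cloudera Manager loads CSDs when the server starts. After adding a new CSD, you generally have to restart the CM server before Flink appears in the Add Service list (on CentOS 6: `service cloudera-scm-server restart`).

```bash
# Hypothetical helper: make sure a path is owned by the given user (default cloudera-scm).
# Uses GNU stat; chown runs only when the owner differs, needs root, and assumes
# a group with the same name as the user.
ensure_owner() {
  local path="$1" user="${2:-cloudera-scm}"
  if [ "$(stat -c '%U' "$path")" != "$user" ]; then
    chown "$user:$user" "$path"
  fi
}
```

Usage: `ensure_owner /opt/cloudera/csd && ensure_owner /opt/cloudera/csd/FLINK-1.9.0.jar`.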

At this point, the Flink parcel files are ready.

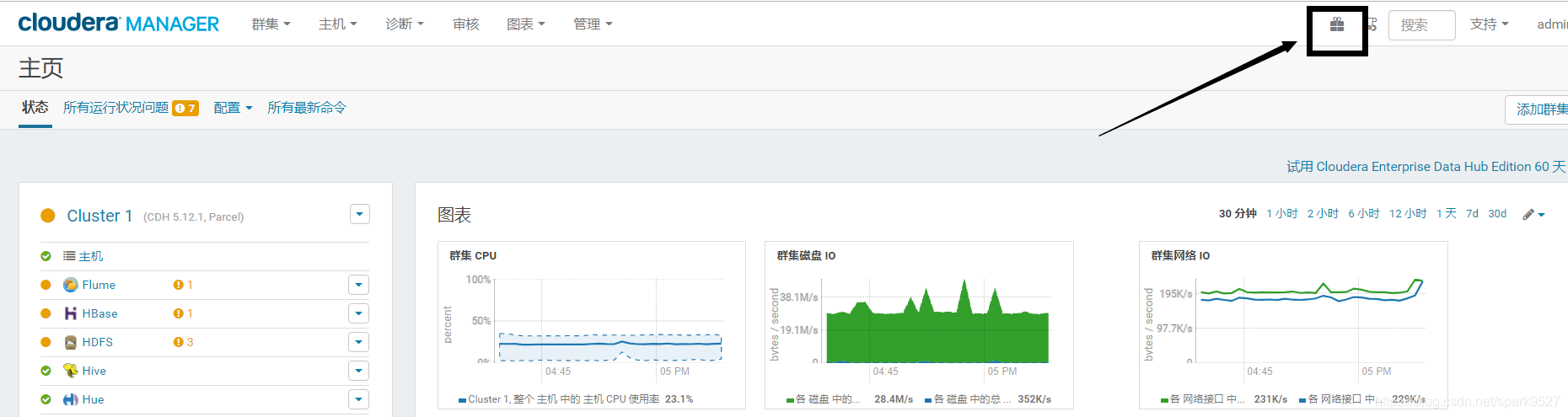

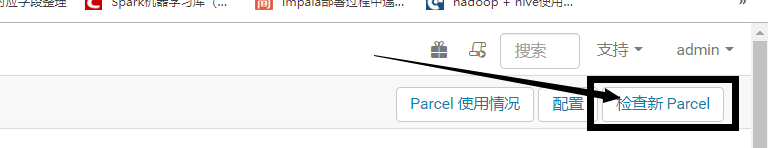

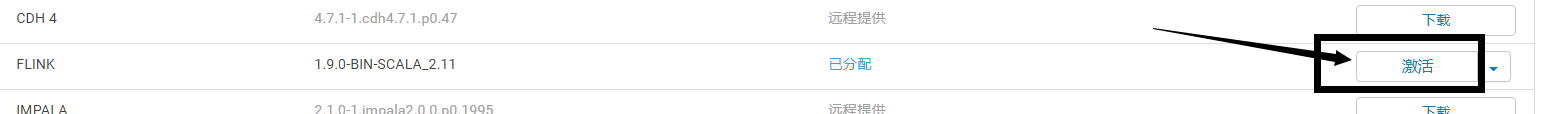
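Before switching to the CM UI, it is worth checking the parcel-repo. Cloudera Manager expects each parcel in the local repository to have a `<parcel>.sha` file next to it containing the parcel's SHA-1 hash. The sketch below assumes the build output includes such a file. `verify_parcel` and the parcel name in the usage line are assumptions.

```bash
# Hypothetical helper: check that <parcel> and <parcel>.sha exist in the repo
# directory and that the recorded SHA-1 matches the parcel contents.
verify_parcel() {
  local repo="$1" parcel="$2"
  local file="$repo/$parcel" sha_file="$repo/$parcel.sha"
  [ -f "$file" ] || { echo "missing $file" >&2; return 1; }
  [ -f "$sha_file" ] || { echo "missing $sha_file" >&2; return 1; }
  local expected actual
  # The .sha file is expected to start with the 40-character hex digest
  expected=$(awk '{print $1; exit}' "$sha_file")
  actual=$(sha1sum "$file" | awk '{print $1}')
  if [ "$expected" = "$actual" ]; then
    echo "OK: $parcel"
  else
    echo "hash mismatch for $parcel" >&2
    return 1
  fi
}
```

Usage (file name assumed): `verify_parcel /opt/cloudera/parcel-repo FLINK-1.9.0-BIN-SCALA_2.11-el6.parcel`.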

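V. Monitoring and one-click deployment through CM

1. Open the CM web UI

http://namenode01:7180/cmf/home

2. Find the FLINK parcel on the Parcels page (click Check for New Parcels if it is not listed yet)

3. Install and deploy Flink (distribute, then activate the parcel)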

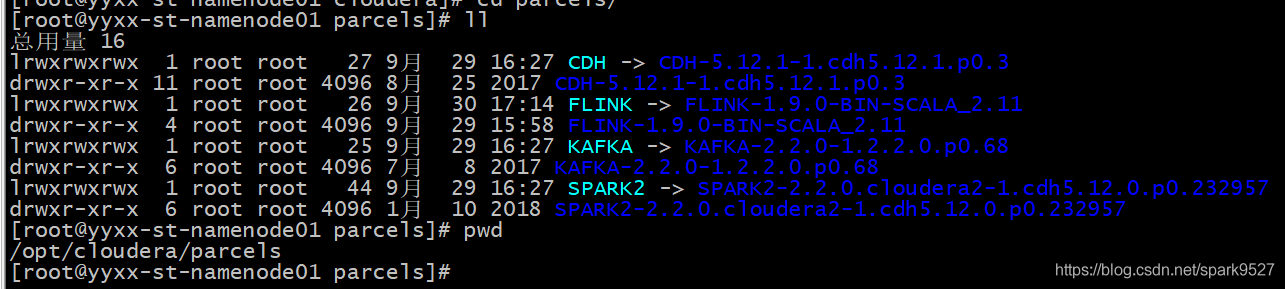

At this point, /opt/cloudera/parcels should already contain FLINK.

Next, copy the dependency jar we built into FLINK/lib/flink/lib/.

Note: do not skip these steps, or Flink will not start!

```bash
cp /home/makeflink/flink-shaded-hadoop-2-uber-2.6.0-cdh5.12.1-7.0.jar /opt/cloudera/parcels/FLINK/lib/flink/lib/
```

Then send it to the other machines as well:

```bash
cd /opt/cloudera/parcels/FLINK/lib/flink/lib/
scp flink-shaded-hadoop-2-uber-2.6.0-cdh5.12.1-7.0.jar node01:$PWD
scp flink-shaded-hadoop-2-uber-2.6.0-cdh5.12.1-7.0.jar node02:$PWD
```

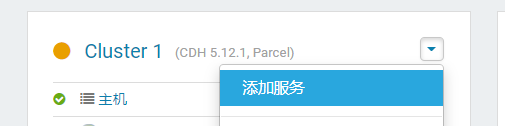
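With more than two worker nodes, you can script the same copy as a loop. This sketch assumes passwordless SSH and that the FLINK parcel is activated under the same path on every host. `distribute_jar` is a hypothetical helper. Setting `DRY_RUN=1` makes it print the `scp` commands instead of running them.

```bash
# Hypothetical helper: copy a jar into the same directory on several hosts.
# Assumes passwordless SSH and identical parcel paths on every host.
# With DRY_RUN set to a non-empty value, the scp commands are only printed.
distribute_jar() {
  local jar="$1" dest_dir="$2"
  shift 2
  local host
  for host in "$@"; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "scp $jar $host:$dest_dir/"
    else
      scp "$jar" "$host:$dest_dir/" || return 1
    fi
  done
}
```

Usage: `distribute_jar /home/makeflink/flink-shaded-hadoop-2-uber-2.6.0-cdh5.12.1-7.0.jar /opt/cloudera/parcels/FLINK/lib/flink/lib node01 node02`.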

4. Add the Flink service in the CM UI

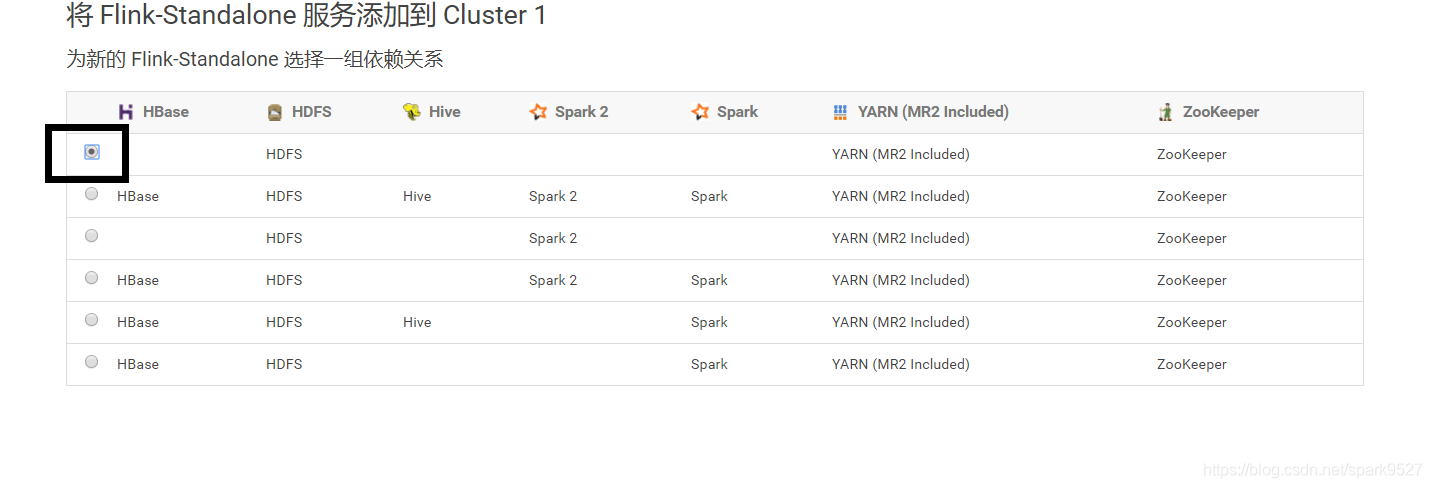

Select the service dependencies you need.

Role assignment:

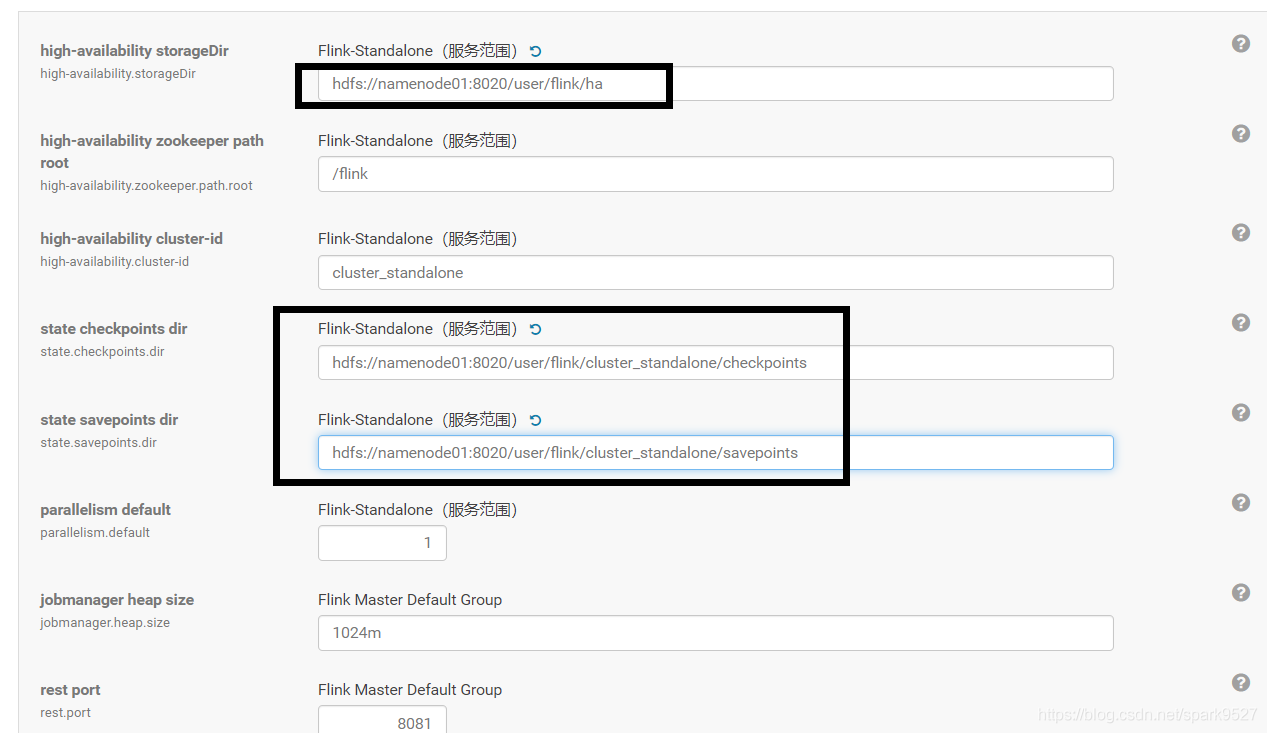

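Configure Flink. Note: enter full (absolute) paths in the fields I highlighted!

That completes all the steps. If you carry out the operations above carefully, you should generally not run into problems. If anything is unclear, feel free to message me. I will later put together a list of common errors for reference.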