LLAPNDK_DOUBLE: Analysis of the Sound-Wave Transmission Code

Overview

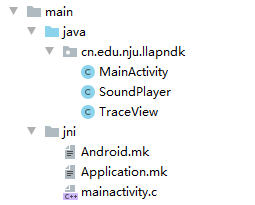

The project's main code lives in the files shown above. The code that controls sound-wave transmission is mainly in MainActivity.java and SoundPlayer.java.

SoundPlayer.java

```java
void genTone() {
    // fill out the array
    for (int i = 0; i < numSamples; ++i) {
        sample[i] = 0;
        for (int j = 0; j < numfreq; j++) {
            // i-th sample point; its time is t = i / sampleRate
            sample[i] = sample[i] + Math.cos(2 * Math.PI * i / (sampleRate / (freqOfTone[j])));
        }
        // average over the frequencies so the value stays within [-1, 1]
        sample[i] = sample[i] / numfreq;
    }

    // convert to 16 bit pcm sound array
    // assumes the sample buffer is normalised.
    int idx = 0;
    for (final double dVal : sample) {
        // scale to maximum amplitude
        final short val = (short) ((dVal * 30000)); // apply the amplitude, i.e. set the volume
        // in 16 bit wav PCM, first byte is the low order byte
        generatedSound[idx++] = (byte) (val & 0x00ff);         // low 8 bits
        generatedSound[idx++] = (byte) ((val & 0xff00) >>> 8); // high 8 bits
    }
}
```

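This function generates the sound wave. It first fills in the value of every sample point in the sample array. The waveform is the sum of cosines at numfreq frequencies, divided by the number of frequencies. The paper states that several frequencies are used to reduce the impact of multipath effects. Each cosine lies in [-1, 1], so their average does too.

The function then scales each sample by 30000, which is close to the 16-bit maximum of 32767. It encodes the result as 16-bit little-endian PCM in the generatedSound array:
- The low 8 bits of the first converted sample go into generatedSound[0], and its high 8 bits go into generatedSound[1].
- The low and high bytes of the second sample go into generatedSound[2] and generatedSound[3], and so on.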
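The two steps of genTone() can be reproduced outside Android to check the arithmetic. In formula form, the first step is s[i] = (1/N) · Σ_j cos(2π · f_j · i / sampleRate). The sketch below is a hypothetical standalone version; the class and method names are illustrative and do not come from the project. It writes the phase as 2π·f·i/sampleRate, which is mathematically the same as the original 2π·i/(sampleRate/f). It keeps the same amplitude of 30000 and the same low-byte-first layout.

```java
// Hypothetical standalone sketch of genTone(); names are illustrative, not from the project.
final class ToneSketch {
    static final double AMPLITUDE = 30000.0; // same scale factor as the original code

    /** Average of cosines at each frequency; result stays within [-1, 1]. */
    static double[] mixCosines(int sampleRate, double[] freqs, int numSamples) {
        double[] sample = new double[numSamples];
        for (int i = 0; i < numSamples; i++) {
            double sum = 0.0;
            for (double f : freqs) {
                // t = i / sampleRate, so the phase is 2*pi*f*t
                sum += Math.cos(2 * Math.PI * f * i / sampleRate);
            }
            sample[i] = sum / freqs.length;
        }
        return sample;
    }

    /** 16-bit little-endian PCM: low byte first, then high byte. */
    static byte[] toPcm16LE(double[] sample) {
        byte[] out = new byte[sample.length * 2];
        int idx = 0;
        for (double d : sample) {
            short val = (short) (d * AMPLITUDE);
            out[idx++] = (byte) (val & 0xff);        // low 8 bits
            out[idx++] = (byte) ((val >> 8) & 0xff); // high 8 bits (sign handled by the short)
        }
        return out;
    }
}
```

At i = 0 every cosine equals 1, so the first sample is 30000 = 0x7530. It is stored as the bytes 0x30 and then 0x75. Reading two bytes back as (short) ((hi << 8) | (lo & 0xff)) recovers the original 16-bit value.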

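```java
public void PrepareSound() {
    genTone();
    // write() copies the PCM data into audioTrack and returns the number of bytes written
    Log.d("llap", "" + audioTrack.write(generatedSound, 0, generatedSound.length));
    // loop from frame 0 to the end of the buffer; a loop count of -1 loops forever
    audioTrack.setLoopPoints(0, generatedSound.length / 2, -1);
}
```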

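PrepareSound() first calls genTone(). When genTone() returns, the signal to transmit is stored as PCM data in the generatedSound array. The function then does three things:
- Log.d writes a debug message to the log. Here the message is the return value of audioTrack.write.
- audioTrack.write copies the PCM data in generatedSound into the AudioTrack.
- audioTrack.setLoopPoints sets the loop start and end, measured in frames, and the loop count. generatedSound.length / 2 is the number of frames, because each 16-bit mono frame occupies 2 bytes. A loop count of -1 means the clip loops indefinitely.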
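Two pieces of arithmetic are behind the setLoopPoints call. First, loop points are given in frames rather than bytes. Second, a looped clip plays back without a phase jump only if every tone completes a whole number of cycles within the buffer.

The helpers below are a hypothetical sketch for checking both conditions. The example numbers (48000 Hz, 1920 samples) are illustrative assumptions, not values taken from the project.

```java
// Hypothetical helpers for reasoning about setLoopPoints() arguments; not part of the project.
final class LoopMath {
    /** Loop points are in frames; one frame = channels * bytesPerSample bytes. */
    static int bytesToFrames(int byteCount, int channels, int bytesPerSample) {
        return byteCount / (channels * bytesPerSample);
    }

    /** Number of cycles a tone completes within the buffer. */
    static double cyclesInBuffer(double freqHz, int numSamples, int sampleRate) {
        return freqHz * numSamples / sampleRate;
    }

    /** True if the tone ends exactly where it started, so looping adds no phase jump. */
    static boolean loopsSeamlessly(double freqHz, int numSamples, int sampleRate) {
        double cycles = cyclesInBuffer(freqHz, numSamples, sampleRate);
        return Math.abs(cycles - Math.rint(cycles)) < 1e-9;
    }
}
```

With these assumed numbers, a 15050 Hz tone completes exactly 602 cycles in 1920 samples, so it loops seamlessly. A 15010 Hz tone completes 600.4 cycles and would click at every loop boundary.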

```java
SoundPlayer(int setsamplerate, int setnumfreq, double setfreqs[]) {
    sampleRate = setsamplerate;
    numfreq = setnumfreq;
    for (int i = 0; i < numfreq; i++) {
        freqOfTone[i] = setfreqs[i];
    }
    // stream type options: STREAM_MUSIC or STREAM_VOICE_CALL
    // initialize: MODE_STATIC, buffer size = size of the whole generated clip
    audioTrack = new AudioTrack(AudioManager.STREAM_MUSIC, sampleRate, AudioFormat.CHANNEL_OUT_MONO,
            AudioFormat.ENCODING_PCM_16BIT, generatedSound.length, AudioTrack.MODE_STATIC);
    PrepareSound();
}
```

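This is the SoundPlayer constructor. It does the following:
- stores the sample rate and the number of frequencies;
- copies the frequencies into the freqOfTone array;
- creates an AudioTrack in MODE_STATIC whose buffer size equals generatedSound.length;
- calls PrepareSound() to generate the waveform and hand its PCM data to the AudioTrack.

Because generatedSound.length is read here, generatedSound must already be allocated before the constructor runs. The same applies to freqOfTone. Their declarations are not shown in this excerpt. This stream-type-based AudioTrack constructor has been deprecated since API level 26 in favor of AudioTrack.Builder.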
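The constructor silently relies on two preconditions. First, freqOfTone must hold at least numfreq entries. Second, generatedSound must already be sized to the whole clip, because MODE_STATIC takes its buffer size from it.

The sketch below is a hypothetical plain-Java analogue with no Android classes. It makes both preconditions explicit. The class name SoundPlayerConfig and the numSamples parameter are illustrative assumptions.

```java
import java.util.Arrays;

// Hypothetical plain-Java analogue of the state SoundPlayer's constructor depends on.
final class SoundPlayerConfig {
    final int sampleRate;
    final double[] freqOfTone;
    final byte[] generatedSound;

    SoundPlayerConfig(int sampleRate, int numfreq, double[] setfreqs, int numSamples) {
        if (numfreq < 1 || numfreq > setfreqs.length) {
            throw new IllegalArgumentException("numfreq must be in [1, setfreqs.length]");
        }
        this.sampleRate = sampleRate;
        // Copy exactly numfreq entries into an array sized to fit, so no index can overflow.
        this.freqOfTone = Arrays.copyOf(setfreqs, numfreq);
        // MODE_STATIC needs the whole clip up front: 2 bytes per 16-bit mono sample.
        this.generatedSound = new byte[numSamples * 2];
    }
}
```

Arrays.copyOf also decouples the player from the caller's array. Later changes to wavefreqs would not alter a player that has already been built.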

MainActivity.java

```java
// initialize the wavefreqs and wavelength arrays
for (int i = 0; i < numfreq; i++) {
    // linearly spaced carriers: startfreq = 15050, freqinter = 350
    wavefreqs[i] = startfreq + i * freqinter;
    wavelength[i] = soundspeed / wavefreqs[i] * 1000;
}
```

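This loop initializes two arrays:
- wavefreqs holds carrier frequencies that start at startfreq and are spaced freqinter apart.
- wavelength holds soundspeed divided by each frequency, multiplied by 1000.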
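The loop can be run on its own to see the values it produces. The sketch below is hypothetical and reuses startfreq = 15050 and freqinter = 350 from the code comment. It assumes soundspeed = 343 m/s; that value is not shown in the excerpt. Under that assumption, the factor of 1000 turns meters into millimeters.

```java
// Hypothetical reproduction of MainActivity's initialization loop.
final class CarrierGrid {
    /** Evenly spaced carrier frequencies: startfreq, startfreq + freqinter, ... */
    static double[] frequencies(double startfreq, double freqinter, int numfreq) {
        double[] f = new double[numfreq];
        for (int i = 0; i < numfreq; i++) {
            f[i] = startfreq + i * freqinter;
        }
        return f;
    }

    /** wavelength = soundspeed / frequency * 1000 (mm, if soundspeed is in m/s). */
    static double[] wavelengthsMm(double soundspeed, double[] freqs) {
        double[] w = new double[freqs.length];
        for (int i = 0; i < freqs.length; i++) {
            w[i] = soundspeed / freqs[i] * 1000;
        }
        return w;
    }
}
```

With these assumptions, the first three carriers are 15050, 15400 and 15750 Hz. Their wavelengths come out at roughly 22.8 mm and shrink as the frequency rises.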

```java
btnPlayRecord.setOnClickListener(new OnClickListener() {
    @Override
    public void onClick(View v) {
        // disable Start, enable Stop
        btnPlayRecord.setEnabled(false);
        btnStopRecord.setEnabled(true);
        // smallest buffers the platform accepts for this configuration
        // (encodingBitrate is passed as the audio format / encoding argument)
        recBufSize = AudioRecord.getMinBufferSize(sampleRateInHz,
                channelConfig, encodingBitrate);
        mylog("recbuffersize:" + recBufSize);
        playBufSize = AudioTrack.getMinBufferSize(sampleRateInHz,
                channelConfig, encodingBitrate);
        audioRecord = new AudioRecord(MediaRecorder.AudioSource.MIC,
                sampleRateInHz, channelConfig, encodingBitrate, recBufSize);
        mylog("channels:" + audioRecord.getChannelConfiguration());
        // start the transmission, recording and socket threads
        new ThreadInstantPlay().start();
        new ThreadInstantRecord().start();
        new ThreadSocket().start();
    }
});
```

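This click listener performs the following steps:
1. Disables the start button and enables the stop button.
2. Queries the minimum buffer sizes for recording and playback.
3. Creates audioRecord with the microphone as its source.
4. Starts three threads: ThreadInstantPlay, which transmits the sound wave, plus ThreadInstantRecord and ThreadSocket, whose names suggest recording and socket communication.

Note that playBufSize is not used by the SoundPlayer code shown above. SoundPlayer sizes its MODE_STATIC buffer from generatedSound.length instead.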
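The value logged as recbuffersize is a byte count. The hypothetical helper below converts it into milliseconds of audio so it is easier to interpret. It assumes 16-bit PCM (2 bytes per sample per channel). The example figures (3840 bytes, 48000 Hz) are illustrative and were not measured on a device.

```java
// Hypothetical helper: how many milliseconds of audio a PCM buffer of a given size holds.
final class BufferMath {
    static double bufferMillis(int bufferBytes, int sampleRate, int channels, int bytesPerSample) {
        int bytesPerFrame = channels * bytesPerSample; // one frame = one sample per channel
        return 1000.0 * bufferBytes / ((double) sampleRate * bytesPerFrame);
    }
}
```

For example, 3840 bytes at 48000 Hz holds 20 ms of 16-bit stereo audio, or 40 ms of mono.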

```java
class ThreadInstantPlay extends Thread {
    @Override
    public void run() {
        SoundPlayer Player = new SoundPlayer(sampleRateInHz, numfreq, wavefreqs);
        blnPlayRecord = true;
        Player.play();
        // busy-wait until another thread sets blnPlayRecord to false, then stop playback
        while (blnPlayRecord == true) {}
        Player.stop();
    }
}
```

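This is the sound-transmission thread. It creates a SoundPlayer, whose constructor generates the waveform and loads it into the AudioTrack, and then calls play() to start transmitting. Next it spins until blnPlayRecord is cleared, and finally calls stop().

The empty while loop has two drawbacks:
- It keeps one CPU core busy the whole time.
- Unless blnPlayRecord is declared volatile (its declaration is not shown), the Java memory model does not guarantee that this thread ever sees the update made by another thread.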
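One way to avoid the spin loop is to block on a java.util.concurrent.CountDownLatch until a stop is requested. Unlike a plain boolean flag, this also gives a proper cross-thread happens-before guarantee.

The sketch below is hypothetical and not the project's code:
- The Player interface stands in for SoundPlayer's play()/stop() methods.
- requestStop() is an illustrative name for what the stop button's handler would call.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical sketch: a playback thread that blocks instead of busy-waiting.
final class PlaybackThreadSketch {
    /** Stand-in for SoundPlayer's play()/stop(); assumed, not the project's API. */
    interface Player {
        void play();
        void stop();
    }

    static final class PlayThread extends Thread {
        private final Player player;
        private final CountDownLatch stopSignal = new CountDownLatch(1);

        PlayThread(Player player) {
            this.player = player;
        }

        @Override
        public void run() {
            player.play();
            try {
                stopSignal.await(); // sleeps until requestStop() is called; no CPU spinning
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt status
            } finally {
                player.stop(); // always stop playback, even if interrupted
            }
        }

        /** Called from another thread (e.g. a stop-button handler) to end playback. */
        void requestStop() {
            stopSignal.countDown();
        }
    }
}
```

If requestStop() runs before the thread reaches await(), await() simply returns at once, so a stop request cannot be lost.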